[AINews] DeepSeek-R1 claims to beat o1-preview AND will be open sourced

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Whalebros are all you need.

AI News for 11/20/2024-11/21/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 1837 messages) for you. Estimated reading time saved (at 200wpm): 197 minutes. You can now tag @smol_ai for AINews discussions!

Ever since o1 was introduced (our coverage here, here, and here), the race has been on for an "open" reproduction. 2 months later, with honorable mentions to Nous Forge Reasoning API and Fireworks f1, DeepSeek appear to have made the first convincing attempt that 1) has BETTER benchmark results than o1-preview and 2) has a publicly available demo rather than waitlist.

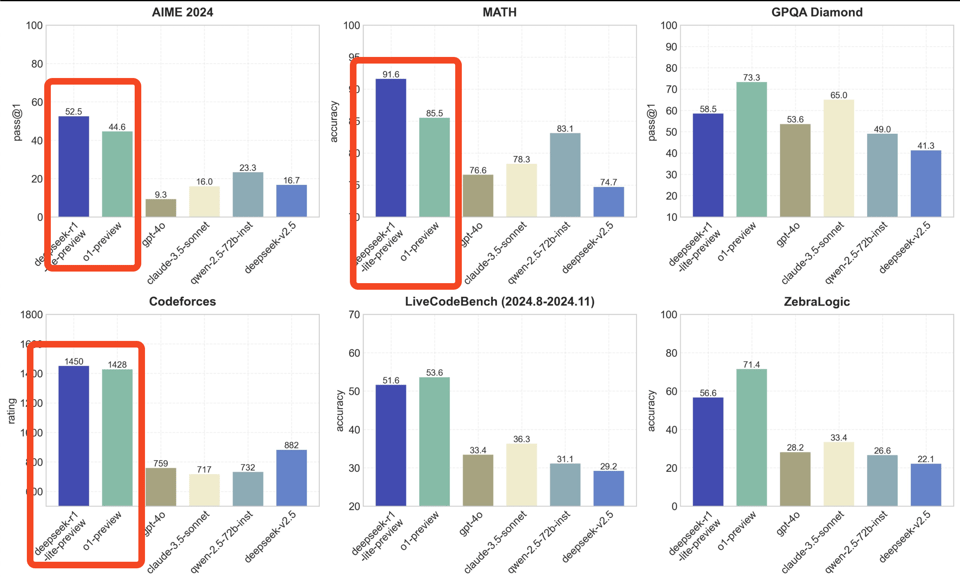

Benchmarks wise, it doesn't beat o1 across the board, but does well on important math benchmarks and at-least-better-than-peers on all but GPQA Diamond.

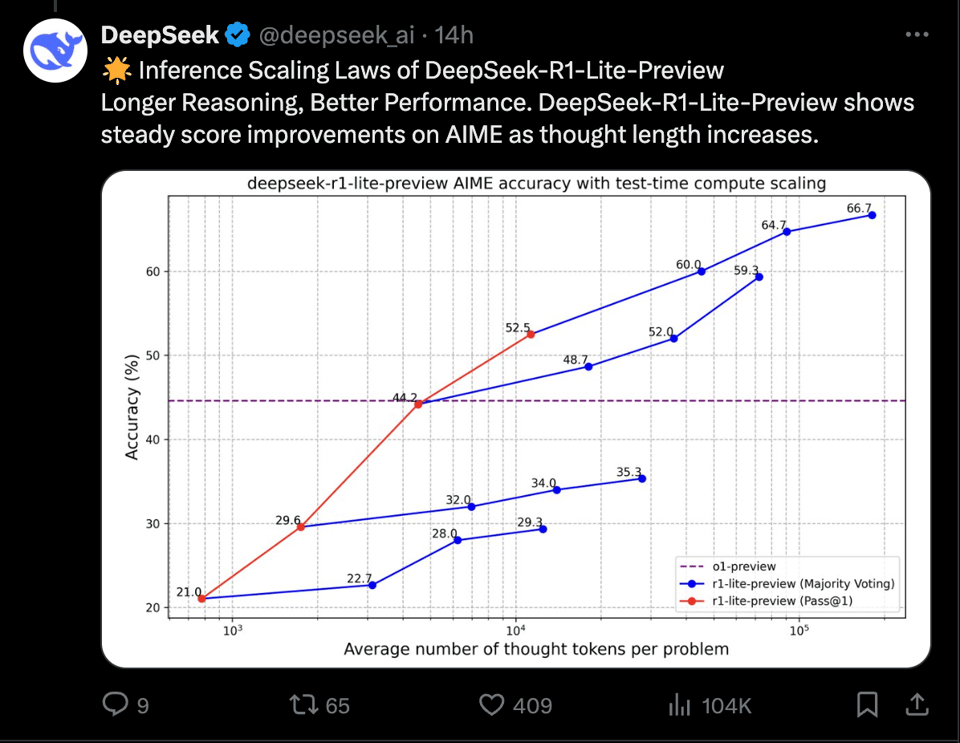

Also importantly, they appear to have replicated the similar inference-time-scaling performance improvements mentioned by OpenAI, but this time with an actual x-axis:

As for the "R1-Lite" naming, rumor is (based on wechat announcements) it is based on DeepSeek's existing V2-Lite model which is only a 16B MoE with 2.4B active params - meaning that if they manage to scale it up, "R1-full" will be an absolute monster.

One notable result is that it has done (inconsistently) well on Yann LeCun's pet 7-gear question.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

1. NVIDIA Financial Updates and Market Insights

- NVIDIA Reports Record Revenue in Q3: @perplexity_ai discusses the insights from NVIDIA's Q3 earnings call, highlighting a record revenue of $35.1 billion, a 17% increase from the previous quarter. Key growth drivers include strong data center sales and demand for NVIDIA's Hopper and Blackwell architectures. The company anticipates continuing growth with projections of $37.5 billion in Q4.

- Detailed Performance During Earnings Call: Another update from @perplexity_ai further outlines that data center revenue reached $30.8 billion, marking a 112% year-on-year increase. The Blackwell architecture reportedly offers a 2.2x performance improvement over Hopper.

2. DeepSeek-R1-Lite-Preview: New Reasoning Model Developments

- Launch of DeepSeek-R1-Lite-Preview: @deepseek_ai is excited about the release of DeepSeek-R1-Lite-Preview, which offers o1-preview-level performance on MATH benchmarks and a transparent thought process. The model aims to have an open-source version available soon.

- Evaluation of DeepSeek-R1-Lite-Preview: Multiple users, such as @omarsar0, discuss its capabilities, including math reasoning improvements and challenges in coding tasks. Despite some mishaps, the model shows promise in real-time problem-solving and reasoning.

3. Quantum Computing Progress with AlphaQubit

- AlphaQubit Collaboration with Google: @GoogleDeepMind introduces AlphaQubit, a system designed to improve error correction in quantum computing. This system outperformed leading algorithmic decoders and shows potential in scale-up scenarios.

- Challenges in Quantum Error Correction: Despite these advancements, additional insights from Google DeepMind note ongoing issues with scaling and speed, highlighting the goal to make quantum computers more reliable.

4. Developments in GPT-4o and AI Creative Enhancements

- GPT-4o's Enhanced Creative Writing: @OpenAI notes updates in GPT-4o's ability to produce more natural, engaging content. User comments, such as from @gdb, highlight improvements in working with files and offering deeper insights.

- Chatbot Arena Rankings Update: @lmarena_ai shares excitement over ChatGPT-4o reaching the #1 spot, surpassing Gemini and Claude models with significant improvements in creative writing and technical performance.

5. AI Implementations and Tools

- LangChain and LlamaIndex Systems: @LangChainAI announces updates to the platform focusing on observability, evaluation, and prompt engineering. They emphasize seamless integration, offering developers comprehensive tools to refine LLM-based applications.

- AI Game Development Courses: @togethercompute introduces a course on building AI-powered games, in collaboration with industry leaders. It focuses on integrating LLMs for immersive game creation.

6. Memes/Humor

- High School AI Nostalgia: @aidan_mclau humorously reflects on using AI to complete philosophy homework, showcasing a light-hearted take on AI's educational uses.

- Chess Meme: @BorisMPower engages in a chess meme thread, contemplating strategic moves and decision-making within the game context.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek R1-Lite matches o1-preview in math benchmarks, open source coming soon

- DeepSeek-R1-Lite Preview Version Officially Released (Score: 189, Comments: 64): DeepSeek released their new R1 series inference models, trained with reinforcement learning, featuring extensive reflection and verification capabilities through chain of thought reasoning that can span tens of thousands of words. The models achieve performance comparable to o1-preview in mathematics, coding, and complex logical reasoning tasks while providing transparent reasoning processes at chat.deepseek.com.

- DeepSeek-R1-Lite is currently in development, with the official announcement confirming it's web-only without API access. The company plans to open-source the full DeepSeek-R1 model, release technical reports, and deploy API services as stated in their tweet.

- Initial user testing shows impressive performance in mathematics with detailed reasoning steps, though some note longer response times compared to o1-preview. The model is estimated to be 15B parameters based on previous DeepSeek releases.

- Community response highlights the rapid advancement of Chinese AI labs despite GPU restrictions, with users noting the model's transparent thought process could benefit open-source development. Several users confirmed strong performance on AIME & MATH benchmarks.

- Chinese AI startup StepFun up near the top on livebench with their new 1 trillion param MOE model (Score: 264, Comments: 74): StepFun, a Chinese AI startup, developed a 1 trillion parameter Mixture-of-Experts (MOE) model that achieved competitive scores on livebench, a real-time AI model leaderboard. The model's specific performance metrics and technical details were not disclosed in the source material.

- Livebench scores show the model is currently underperforming relative to its size, with users noting it's being beaten by smaller models like o1 mini (estimated at 70-120B parameters) and showing particularly low math scores.

- The model appears to be in early training stages, with discussion around "Step 2" potentially indicating its second training phase. Users speculate the underwhelming performance is due to being heavily undertrained rather than architectural limitations.

- Discussion focused on the model's MoE architecture and deployment strategy, with experts noting that each transformer layer requires its own set of experts, leading to substantial GPU-to-GPU communication needs during inference and training.

Theme 2. Sophisticated Open Source LLM Tools: Research Assistant & Memory Frameworks

- I Created an AI Research Assistant that actually DOES research! Feed it ANY topic, it searches the web, scrapes content, saves sources, and gives you a full research document + summary. Uses Ollama (FREE) - Just ask a question and let it work! No API costs, open source, runs locally! (Score: 487, Comments: 76): Automated-AI-Web-Researcher is a Python-based tool that uses Ollama and local LLMs to conduct comprehensive web research, automatically generating up to 5 specific research focuses from a single query, continuously searching and scraping content while saving sources, and creating detailed research documents with summaries. The project, available on GitHub, runs entirely locally using models like phi3:3.8b-mini-128k-instruct or phi3:14b-medium-128k-instruct, features pause/resume functionality, and enables users to ask follow-up questions about the gathered research content.

- Users reported mixed success with different LLMs - while some had issues with Llama3.2-vision:11b and Qwen2.5:14b generating empty summaries, others successfully used mistral-nemo 12B achieving 38000 context length with 16GB VRAM at 3% CPU / 97% GPU usage.

- Several technical suggestions were made including ignoring robots.txt, adding support for OpenAI API compatibility (which was later implemented via PR), and restructuring the codebase with a "lib" folder and proper configuration management using tools like pydantic or omegaconf.

- Discussion around the tool's purpose emphasized its value in finding and summarizing real research rather than generating content, with concerns raised about source validation and factual accuracy of web-scraped information.

-

Agent Memory (Score: 64, Comments: 11): LLM agent memory frameworks are compared across multiple GitHub projects, with key implementations including Letta (based on MemGPT paper), Memoripy (supports Ollama and OpenAI), and Zep (maintains temporal knowledge graphs). Several frameworks support local models through Ollama and vLLM, though many assume GPT access by default, with varying levels of compatibility for open-source alternatives.

- The comparison includes both active projects like cognee (for document ingestion) and MemoryScope (featuring memory consolidation), as well as development resources such as LangGraph Memory Service template and txtai for RAG implementations, with most frameworks offering OpenAI-compatible API support through tools like LiteLLM.

- Vector-based memory systems use proximity and reranking to determine relevance, contrasting with simple keyword activation systems like those used in Kobold or NovelAI. The vector approach maps concepts spatially (e.g., "Burger King" closer to food-related terms than "King of England") and uses reranking through either small neural nets or direct AI evaluation.

- Memory frameworks differ primarily in their handling of context injection - from automated to manual approaches - with more complex systems incorporating knowledge graphing and decision trees. Memory processing can become resource-intensive, sometimes requiring more tokens than the actual conversation.

- The field of LLM memory systems remains experimental with no established best practices, ranging from basic lorebook-style implementations to sophisticated context-aware solutions. Simple systems require more human oversight to catch errors, while complex ones offer better robustness against contextual mistakes.

Theme 3. Hardware & Browser Optimization: Pi GPU Acceleration & WebGPU Implementations

- LLM hardware acceleration—on a Raspberry Pi (Top-end AMD GPU using a low cost Pi as it's base computer) (Score: 53, Comments: 18): Raspberry Pi configurations can run Large Language Models (LLMs) with AMD GPU acceleration through Vulkan graphics processing. This hardware setup combines the cost-effectiveness of a Raspberry Pi with the processing power of high-end AMD GPUs.

- Token rates of 40 t/s were achieved using a 6700XT GPU with Vulkan backend, compared to 55 t/s using an RTX 3060 with CUDA. The lack of ROCm support on ARM significantly limits performance potential.

- A complete Raspberry Pi setup costs approximately $383 USD (excluding GPU), while comparable x86 systems like the ASRock N100M cost $260-300. The Intel N100 system draws only 5W more power while offering better compatibility and performance.

- Users note that AMD could potentially create a dedicated product combining a basic APU with high VRAM GPUs in a NUC-like form factor. The upcoming Strix Halo release may test market demand, though alternatives like dual P40s for $500 remain competitive.

- In-browser site builder powered by Qwen2.5-Coder (Score: 55, Comments: 8): An AI site builder running in-browser uses WebGPU, OnnxRuntime-Web, Qwen2.5-Coder, and Qwen2-VL to generate code from text, images, and voice input, though only text-to-code is currently live due to performance constraints. The project implements Moonshine for speech-to-text conversion and includes code examples for integration on GitHub and Huggingface, with performance currently limited by GPU capabilities and primarily tested on Mac systems.

- Developer details challenges in model conversion, sharing their process through export documentation and a custom Makefile, noting issues with mixed data types and memory management that made the project particularly difficult.

- Community feedback highlights interest in testing the system on Linux with NVIDIA RTX hardware, with users also reporting UI contrast issues on iPhone devices due to similar dark background colors.

Theme 4. Model Architectures: Analysis of GPT-4, Gemini & Other Closed Source Models

- Closed source model size speculation (Score: 52, Comments: 12): The post analyzes parameter counts of closed-source LLMs, suggesting that GPT-4 Original has 280B active parameters and 1.8T overall, while newer versions like GPT-4 Turbo and GPT-4o have progressively smaller active parameter counts (~93-94B and ~28-32B respectively). The analysis draws connections between model architectures and pricing, linking Microsoft's Grin MoE paper to GPT-4o Mini (6.6B-8B active parameters), and comparing Gemini Flash versions (8B, 32B, and 16B dense) with models like Qwen and architectures from Hunyuan and Yi Lightning.

- Qwen 2.5's performance-to-size ratio supports the theory of smaller active parameters in modern models, particularly with MoE architecture and closed-source research advances. The discussion suggests Claude may be less efficient than OpenAI and Google models.

- Gemini Flash's 8B parameter count likely includes the vision model, making the core language model approximately 7B parameters. The model's performance at this size is considered notably impressive.

- Community estimates suggest GPT-4 Turbo has ~1T parameters (100B active) and GPT-4o has ~500B (50B active), while Yi-Lightning is likely smaller based on its low pricing and reasoning capabilities. Step-2 is estimated to be larger due to higher pricing ($6/M input, $20/M output).

- Judge Arena Leaderboard: Benchmarking LLMs as Evaluators (Score: 33, Comments: 14): Judge Arena Leaderboard aims to benchmark Large Language Models (LLMs) on their ability to evaluate and judge other AI outputs. Due to insufficient context in the post body, no specific details about methodology, metrics, or participating models can be included in this summary.

- Claude 3.5 Sonnet initially led the rankings in the Judge Arena leaderboard, but subsequent updates showed significant volatility with 7B models rising to top positions among open-source entries. The rankings showed compression from an ELO spread of ~400 points to ~250 points after 1197 votes.

- Community members questioned the validity of results, particularly regarding Mistral 7B (v0.1) outperforming GPT-4, GPT-3.5, and Claude 3 Haiku, with high margin of error (~100 ELO points) cited as a potential explanation.

- Critics highlighted limitations in the judgment prompt, suggesting it lacks concrete evaluation criteria and depth, while the instruction to ignore response length could paradoxically influence assessors through the "pink elephant effect".

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Live Demo Shows Real-Time AI Facial Recognition Raising Privacy Alarms

- This Dutch journalist demonstrates real-time AI facial recognition technology, identifying the person he is talking to. (Score: 2523, Comments: 304): Dutch journalist demonstrates real-time facial recognition AI capabilities by identifying individuals during live conversations. No additional context or technical details were provided about the specific technology or implementation used.

- The top comment emphasizes privacy concerns, suggesting to "post no photos online anywhere attached to actual names" with 457 upvotes. Multiple users discuss continuing to wear masks and methods to avoid facial recognition.

- Discussion reveals this likely uses Pimeyes or similar technology, with users noting that Clearview AI has even more advanced capabilities that can "find your face in a crowd at a concert". Several users point out that the demonstration likely involves a second person doing manual searches.

- Users debate the societal implications, with some calling it a "threat to democracy and freedom" while others discuss practical applications like car sales. The conversation includes concerns about government surveillance and data privacy, particularly in reference to China and other nations.

Theme 2. CogVideoX 1.5 Image-to-Video: Quality vs Performance Trade-offs

- Comparison of CogvideoX 1.5 img2vid - BF16 vs FP8 (Score: 165, Comments: 49): CogVideoX 1.5 post lacks sufficient context or content to generate a meaningful technical summary about the comparison between BF16 and FP8 implementations. No details were provided in the post body to analyze quality differences between these numerical formats.

- Performance metrics show significant differences: BF16 takes 12m57s vs FP8 at 7m57s on an RTX 3060 12GB with 24 frames at 1360x768. BF16 requires CPU offload due to OOM errors but delivers more stable results.

- CogVideoX 1.5 faces quantization challenges, unable to run in FP16. Among available options, TorchAO FP6 provides best quality results, while FP8DQ and FP8DQrow offer faster performance on RTX 4090 due to FP8 scaled matmul.

- Installation on Windows requires specific setup using TorchAO v0.6.1 with code modification in

base.hfile, changingFragMdefinition toVec.

Theme 3. 10 AI Agents Collaborate to Write Novel in Real-Time

- A Novel Being Written in Real-Time by 10 Autonomous AI Agents (Score: 277, Comments: 153): Ten autonomous AI agents collaborate in real-time to write a novel, though no additional details about the process, implementation, or results were provided in the post body. The concept suggests an experiment in multi-agent creative writing and AI collaboration, but without further context, specific technical details cannot be summarized.

- Users express significant skepticism about AI-generated long-form content, with many pointing out that ChatGPT struggles with coherence beyond a few pages and frequently forgets plot points and characters. The top comment with 178 upvotes emphasizes this limitation.

- The author explains their solution to maintaining narrative coherence through a file-based coordination system where multiple agents access a global map, content summaries, and running change logs rather than relying on a single context window. The system is currently in the preparation and structuring phase using Qwen 2.5.

- Several users debate the artistic value and purpose of AI-generated novels, arguing that literature is fundamentally about expressing human experience and creating human connections. Critics note that AI models like ChatGPT and Claude would likely avoid controversial topics that make novels interesting.

Theme 4. StepFun's 1T Param Model Rises in LiveBench Rankings

- Chinese AI startup StepFun up near the top on livebench with their new 1 trillion param MOE model (Score: 29, Comments: 0): StepFun, a Chinese AI startup, has developed a 1 trillion parameter Mixture-of-Experts (MOE) model that ranks among the top performers on livebench. The model's performance demonstrates increasing competition in large-scale AI model development from Chinese companies.

- Microsoft CEO says that rather than seeing AI Scaling Laws hit a wall, if anything we are seeing the emergence of a new Scaling Law for test-time (inference) compute (Score: 99, Comments: 40): Microsoft's CEO discusses observations about AI scaling laws, noting that instead of encountering computational limits, evidence suggests a new pattern emerging specifically for test-time inference compute. The lack of specific details or quotes in the post body limits further analysis of the claims or supporting evidence for this observation.

- The discussion reveals that test-time inference compute involves allowing models to "think" longer and iterate on outputs rather than accepting first responses, with accuracy scaling logarithmically with thinking time. This represents a second scaling factor alongside traditional training compute scaling.

- Several users, including Pitiful-Taste9403, interpret this as evidence that parameter scaling has hit limitations, causing companies to focus on inference optimization as an alternative path forward for AI advancement.

- The term "scaling law" sparked debate, with users comparing it to Moore's Law, suggesting it's more of a trend than a fundamental law. Some expressed skepticism about the economic implications of these developments for average people.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1. Custom Model Deployments Take Center Stage

-

Deploy Custom AI Models on Hugging Face: Developers are now able to deploy tailored AI models on Hugging Face using a

handler.pyfile, allowing for customized pre- and post-processing.- This advancement leverages Hugging Face endpoints to enhance model flexibility and integration into diverse applications.

- DeepSeek-R1-Lite-Preview Matches OpenAI's o1-Preview Performance: DeepSeek launches R1-Lite-Preview, achieving o1-preview-level performance on AIME & MATH benchmarks.

- This model not only mirrors OpenAI's advancements but also introduces a transparent reasoning process accessible in real-time.

- Tencent Hunyuan Model Fine-Tuning Now Accessible: Users can fine-tune the Tencent Hunyuan model with resources like the GitHub repository and official demo.

- This facilitates enhanced customization for various NLP tasks, expanding the model's applicability.

Theme 2. AI Model Performance and Optimization Soars

-

SageAttention2 Doubles Inference Speed: The SageAttention2 Technical Report reveals a method for 4-bit matrix multiplication, achieving a 2x speedup over FlashAttention2 on RTX40/3090 GPUs.

- This innovation serves as a drop-in replacement, significantly accelerating inference without sacrificing accuracy.

- GPT-4o Gets Creative Boost and File Handling Enhancements: OpenAI updates GPT-4o, elevating its creative writing abilities and improving file handling for deeper insights.

- The revamped model regains top spots in categories like coding and creative writing within chatbot competitions.

- Model Quantization Discussed for Performance Gains: Users express concerns that quantization adversely affects model performance, preferring original models over quantized versions.

- Suggestions include clearer disclosures from providers like OpenRouter and exploring modifications in evaluation libraries to accommodate pruned models.

Theme 3. Innovative AI Research Paves New Paths

-

ACE Method Enhances Model Control: EleutherAI introduces the ACE (Affine Concept Editing) method, treating concepts as affine functions to better control model responses.

- Tested on models like Llama 3 70B, ACE outperforms previous techniques in managing refusal behavior across harmful and harmless prompts.

- Scaling Laws Reveal Low-Dimensional Capability Space: A new paper suggests that language model performance is influenced more by a low-dimensional capability space than solely by scaling across multiple dimensions.

- Marius Hobbhahn from Apollo champions the advancement of evaluation science, emphasizing rigorous model assessment practices.

- Generative Agents Simulate Over 1,000 Real Individuals: A novel architecture effectively simulates the attitudes and behaviors of 1,052 real people, achieving 85% accuracy on the General Social Survey.

- This reduces accuracy biases across racial and ideological groups, offering robust tools for exploring individual and collective behaviors in social science.

Theme 4. AI Tools Integration and Community Support Flourish

-

Aider's Setup Challenges Resolved with Force Reinstall: Users facing setup issues with Aider, particularly with API keys and environment variables, found success by performing a force reinstall.

- This solution streamlined the setup process, enabling smoother integration of DeepSeek-R1-Lite-Preview and other models.

- LM Studio Navigates Hardware Limitations with Cloud Solutions: Members discuss running DeepSeek v2.5 Lite on limited hardware, emphasizing the need for GPUs with at least 24GB VRAM.

- Cloud-based hardware rentals are explored as cost-effective alternatives, offering high-speed model access without local hardware constraints.

- Torchtune's Adaptive Batching Optimizes GPU Utilization: Implementation of adaptive batching in Torchtune aims to maximize GPU utilization by dynamically adjusting batch sizes to prevent OOM errors.

- This feature is suggested to be integrated as a flag in future recipes, enhancing training efficiency and resource management.

Theme 5. Cutting-Edge AI Developments Address Diverse Challenges

-

LLMs Exhibit Intrinsic Reasoning Without Explicit Prompting: Research demonstrates that large language models (LLMs) can display reasoning paths similar to chain-of-thought (CoT) without explicit prompting by tweaking the decoding process.

- Adjusting to consider top-$k$ alternative tokens uncovers the inherent reasoning abilities of LLMs, reducing reliance on manual prompt engineering.

- Perplexity AI Introduces Shopping Feature Amid API Challenges: Perplexity AI launches a new Shopping feature, sparking discussions about its exclusivity to the US market while users face issues with API response consistency.

- Despite being Pro users, some members express frustration over limitations, leading to increased reliance on alternatives like ChatGPT.

- OpenRouter Tackles Model Description and Caching Clarifications: Users identify discrepancies in the GPT-4o model descriptions on OpenRouter, prompting quick fixes to model cards.

- Clarifications are sought regarding prompt caching policies across different providers, with comparisons between Anthropic and OpenAI protocols.

PART 1: High level Discord summaries

HuggingFace Discord

-

Deploying Custom AI Models Now Possible: A member discovered that custom AI models can be deployed on Hugging Face using a

handler.pyfile, enabling tailored pre- and post-processing of models.- This process involves specifying handling methods for requests and responses, enhancing customization via Hugging Face endpoints.

- New Paper on AI Security Insights Released: AI researchers at Redhat/IBM published a paper addressing the security implications of publicly available AI models, focusing on risk and lifecycle management.

- The paper outlines strategies to improve security for developers and users, aiming to establish more standardized practices within the AI community. View the paper.

- Automated AI Research Assistant Takes Off: An Automated AI Researcher was created using local LLMs to generate research documents in response to user queries.

- The system employs web scraping to compile information and produce topic-relevant summaries and links, making research more accessible.

- LangGraph Learning Initiatives: User

richieghostinitiated learning around LangGraph, discussing its applications and developments in the community.

- This highlights the ongoing interest in integrating graph-based techniques within AI models.

- Semantic Search Challenges with Ada 002: Semantic search using OpenAI's Ada 002 is prioritizing dominant topics, resulting in less prominent but relevant sentences receiving lower rankings.

- Users are seeking alternatives to semantic search to improve information extraction effectiveness.

Interconnects (Nathan Lambert) Discord

-

o1 Release Rumblings: Speculation is rife that OpenAI's o1 model may be released imminently, potentially aligning with DevDay Singapore, although these rumors remain unconfirmed.

- A member noted, 'Wednesday would be a weird day for it,' highlighting the community's vigilant anticipation despite uncertainties.

- DeepSeek's RL Drive: Discussions around DeepSeek Prover revealed interest in their application of reinforcement learning, with members anticipating a possible paper release despite challenges related to model size and performance.

- The community is contemplating delays in full release due to these performance hurdles.

- GPT-4o Gains Ground: OpenAI announced an update to GPT-4o, enhancing its creative writing capabilities and file handling, which propelled it back to the top in performance categories such as creative writing and coding within a chatbot competition.

- This update underscores GPT-4o's improved relevance and readability, as detailed in OpenAI's official tweet.

- LLM Learning Loops: Recent insights suggest that how LLMs memorize training examples significantly affects their generalization capabilities, with models first understanding concepts before memorization leading to better test accuracy predictions.

- Katie Kang shared that this method allows for predicting test outcomes based solely on training dynamics.

- NeurIPS NLP Nixed: Concerns have been raised about NeurIPS D&B reviewers dismissing projects focused on Korean LLM evaluation, citing that similar efforts already exist in Chinese.

- Community members argue that each language requires tailored models, emphasizing the importance of inclusivity in NLP development as highlighted in Stella Biderman's tweet.

Unsloth AI (Daniel Han) Discord

-

Fine-tuning Finesse for LLMs: A user successfully exported Llama 3.1 for a local project to Hugging Face leveraging the 16-bit version for enhanced performance in building RAG applications.

- Members recommended using the 16-bit version to optimize fine-tuning capabilities, ensuring better resource management during model development.

- SageAttention2 Speeds Up Inference: The SageAttention2 Technical Report introduced a method for 4-bit matrix multiplication that achieves a 2x speedup over FlashAttention2.

- With support for RTX40/3090 hardware, SageAttention2 serves as a drop-in replacement for FlashAttention2, enhancing inference acceleration without compromising metric fidelity.

- Training Llama Models: Multiple members shared experiences training different Llama models, noting varying success based on model parameters and dataset sizes.

- Suggestions included starting with base models and tuning training steps for optimal performance.

- Enhancing Performance with Multi-GPU Training: Users are exploring multi-GPU training capabilities with Unsloth, currently unavailable but expected to be released soon.

- Strategies like utilizing Llama Factory for managing multiple GPUs were discussed to prepare for the upcoming feature.

aider (Paul Gauthier) Discord

-

Aider Setup Challenges Resolved: Users faced issues with Aider's setup, specifically regarding API keys and environment variables, leading some to attempt reinstalling components.

- One user reported that performing a force reinstall successfully resolved the setup challenges.

- DeepSeek Impresses with Performance: DeepSeek-R1-Lite-Preview matches o1-preview performance on AIME & MATH benchmarks, offering faster response times compared to previous models.

- The model's transparent reasoning process enhances its effectiveness for coding tasks by allowing users to observe its thought process in real-time.

- Concerns Over OpenRouter's Model Quality: Users expressed dissatisfaction with OpenRouter utilizing quantized versions of open-source models, raising doubts about their performance on the Aider Leaderboard.

- There were calls for clearer warnings on the leaderboard regarding potential performance discrepancies when using OpenRouter's quantized models.

- Impact of Model Quantization Discussed: Quantization negatively affects model performance, leading users to prefer original models over quantized versions.

- Users suggested that OpenRouter should disclose specific model versions to accurately set performance expectations.

- Understanding Aider's Chat Modes: Members discussed the effectiveness of various Aider chat modes, highlighting that using o1-preview as the Architect with DeepSeek or o1-mini as the Editor yields the best results.

- A user noted that Sonnet performs exceptionally well for daily tasks without requiring complex configurations.

Eleuther Discord

-

ACE Your Model Control with Affine Editing: The authors introduce the new ACE (Affine Concept Editing) method, treating concepts as affine functions to enhance control over model responses. ACE enables projecting activations onto hyperplanes, demonstrating improved precision in managing model behavior as shown through tests on Gemma.

- ACE was evaluated on ten models, including Llama 3 70B, achieving superior control over refusal behavior across both harmful and harmless prompts. This method surpasses previous techniques, offering a more reliable strategy for steering model actions.

- Latent Actions Propel Inverse Dynamics: A user inquired about the top papers on latent actions and inverse dynamics models, emphasizing an interest in state-of-the-art research within these domains. The discussion highlighted the significance of relevant literature for advancing current AI methodologies.

- While no specific papers were cited, the conversation underscored the importance of exploring latent actions and inverse dynamics models to push the boundaries of existing AI frameworks.

- Scaling Laws Unveil Capability Dimensions: A newly published paper titled Understanding How Language Model Performance Varies with Scale presents an observational approach to scaling laws based on approximately 100 publicly available models. The authors propose that language model performance is influenced more by a low-dimensional capability space rather than solely training across multiple scales.

- Marius Hobbhahn at Apollo was recognized as a leading advocate for advancing the science of evaluation methods within the AI community, highlighting a growing focus on rigorous evaluation practices in AI model development.

- WANDA Pruning Enhances Model Efficiency: A member inquired if lm-eval supports zero-shot benchmarking for pruned models, mentioning the use of the WANDA pruning method. Concerns were raised regarding the suspect results obtained from zero-shot evaluations.

- Discussions included modifications to lm_eval for compatibility with pruned models and evaluations on ADVBench using vllm, with specific code snippets shared to illustrate model loading and inference methods.

- Forgetting Transformer Integrates Forget Gates: The Forgetting Transformer paper introduces a method that incorporates a forget gate into the softmax attention mechanism, addressing limitations of traditional position embeddings. This approach offers an alternative to recurrent sequence models by naturally integrating forget gates into Transformer architectures.

- Community discussions referenced related works like Contextual Position Encoding (CoPE) and analyzed different strategies for position embeddings, evaluating whether simpler methods like ALiBi or RoPE might integrate more effectively than recent complex approaches.

Perplexity AI Discord

-

Perplexity Outperformed by ChatGPT: Users compared Perplexity with ChatGPT, highlighting ChatGPT's versatility and superior conversational abilities.

- Despite being Pro users of Perplexity, some expressed frustration over its limitations, leading to increased reliance on ChatGPT.

- Introduction of Perplexity Shopping Feature: The new Perplexity Shopping feature sparked discussions, with users inquiring about its exclusivity to the US market.

- There is significant interest in understanding potential access limitations for the shopping functionality.

- API Functionality Issues Reported: Users reported that API responses remain unchanged despite switching models, causing confusion and frustration.

- The community debated the platform's flexibility and questioned the diversity of its responses.

- Fullstack Development Insights with Next.js: A resource on fullstack Next.js development was shared, offering insights into modern web frameworks.

- Explore the use of Hono for server-side routing!

- NVIDIA AI Chips Overheating Concerns: Concerns were raised about NVIDIA AI chips overheating, as detailed in this report.

- Discussions emphasized the risks associated with prolonged usage of these chips.

OpenRouter (Alex Atallah) Discord

-

Gemini 1114 Struggles with Input Handling: Users reported that Gemini 1114 often ignores image inputs during conversations, leading to hallucinated responses, unlike models such as Grok vision Beta.

- Members are hoping for confirmation and fixes, expressing frustration over recurring issues with the model.

- DeepSeek Launches New Reasoning Model: A new model, DeepSeek-R1-Lite-Preview, was announced, boasting enhanced reasoning capabilities and performance on AIME & MATH benchmarks.

- However, some users noted the model’s performance is slow, prompting discussions about whether DeepInfra might be a faster alternative.

- Clarifications on Prompt Caching: Prompt caching is available for specific models like DeepSeek, with users questioning the caching policies of other providers.

- Some members discussed how caching works differently between systems, particularly noting Anthropic and OpenAI protocols.

- Issues with GPT-4o Model Description: Users identified discrepancies in the newly released GPT-4o, noting the model incorrectly listed an 8k context and wrong descriptions linked to GPT-4.

- After highlighting the errors, members saw quick updates and fixes to the model card, restoring accurate information.

- Comparisons of RP Models: Members discussed alternatives to Claude for storytelling and role-playing, with suggestions for Hermes due to its perceived quality and cost-effectiveness.

- Users indicated a mix of experiences with these models, with some finding Hermes preferable while others remain loyal to Claude.

LM Studio Discord

-

Model Loading on Limited Hardware: A user encountered model loading issues in LM Studio on a 36GB RAM M3 MacBook, highlighting error messages about system resource limitations.

- Another member recommended avoiding 32B models for such setups, suggesting a maximum of 14B to prevent overloading.

- GPU and RAM Requirements for LLMs: Discussions emphasized that running DeepSeek v2.5 Lite requires at least 24GB VRAM for the Q4_K_M variant and 48GB VRAM for full Q8.

- Members preferred NVIDIA GPUs over AMD due to driver stability issues affecting performance.

- Cloud-Based Solutions vs Local Hardware: Users explored cloud-based hardware rentals as a cost-effective alternative to local setups, with monthly costs ranging from $25 to $50.

- This approach enables access to high-speed models without the constraints of local hardware limitations.

- Workstation Design for AI Workloads: A member sought advice on building a workstation for fine-tuning LLMs within a $30,000 to $40,000 budget, considering options like NVIDIA A6000s versus fewer H100s.

- The discussion underscored the importance of video memory and hardware flexibility to accommodate budget constraints.

- Model Recommendations and Preferences: Users recommended various models including Hermes 3, Lexi Uncensored V2, and Goliath 120B, based on performance and writing quality.

- Encouragement was given to experiment with different models to identify the best fit for individual use cases as new options become available.

Stability.ai (Stable Diffusion) Discord

-

Gaming PC Guidance Galore: A user is seeking gaming PC recommendations within a budget of $2500, asking for suggestions on both components and where to purchase.

- They are encouraging others to send direct messages for personalized advice.

- Character Consistency Challenges: A member inquired about maintaining consistent character design throughout a picture book, struggling with variations from multiple generated images.

- Suggestions included using FLUX or image transformation techniques to improve consistency.

- AI Models vs. Substance Designer: A discussion arose on whether AI models could effectively replace Substance Designer, highlighting the need for further exploration in that area.

- Members shared their thoughts on the capabilities of different AI models and their performance.

- GPU Optimization for Video Generation: Users discussed the difficulties of performing AI video generation on limited VRAM GPUs, noting potential for slow processing times.

- The recommended course of action included clearing VRAM and using more efficient models like CogVideoX.

- Fast AI Drawing Techniques: A member inquired about the technology behind AI drawing representations that update quickly on screen, wondering about its implementation.

- Responses indicated that it often relies on powerful GPUs and consistency models to achieve rapid updates.

Notebook LM Discord Discord

-

Audio Generation Enhancements in NotebookLM: A member showcased their podcast featuring AI characters, utilizing NotebookLM for orchestrating complex character dialogues.

- They detailed the multi-step process involved, including the integration of various AI tools and NotebookLM's role in facilitating dynamic conversations.

- Podcast Creation Workflow in NotebookLM: A member shared their experience in creating a German-language podcast on Spotify using NotebookLM for audio generation.

- They emphasized the effective audio features of NotebookLM and sought customization recommendations to enhance their podcast production.

- Transcription Features for Audio Files: Members discussed the option to upload generated audio files to NotebookLM for automatic transcription.

- Alternatively, one member suggested leveraging MS Word's Dictate...Transcribe function for converting audio to text.

- Combining Notes Feature Evaluation: Members deliberated on the 'Combine to note' feature in NotebookLM, assessing its functionality for merging multiple notes into a single document.

- One member questioned its necessity, given the existing capability to combine notes, seeking clarity on its utility.

- Sharing Notebooks Functionality: A user inquired about the procedure for sharing notebooks with peers, encountering difficulties in the process.

- Another member clarified the existence of a 'share note' button located at the top right corner of the NotebookLM interface to facilitate sharing.

Latent Space Discord

-

DeepSeek-R1-Lite-Preview Launch: DeepSeek announced the launch of DeepSeek-R1-Lite-Preview, showcasing enhanced performance on AIME and MATH benchmarks with a transparent reasoning process.

- Users are excited about its potential applications, noting that reasoning improvements scale effectively with increased length.

- GPT-4o Update Enhances Capabilities: OpenAI released a new GPT-4o snapshot as

gpt-4o-2024-11-20, which boosts creative writing and improves file handling for deeper insights.

- Recent performance tests show GPT-4o reclaiming top spots across various categories, highlighting significant advancements.

- Truffles Hardware Device Gears Up for LLM Hosting: The Truffles hardware device was identified as a semi-translucent solution for self-hosting LLMs at home, humorously termed a 'glowing breast implant'.

- This nickname reflects the light-hearted conversations around innovative home-based LLM deployment options.

- Vercel Acquires Grep to Boost Code Search: Vercel announced the acquisition of Grep, enabling developers to search through over 500,000 public repositories efficiently.

- Founder Dan Fox will join Vercel's AI team to enhance code search functionalities and improve development workflows.

- Claude Experiences Availability Fluctuations: Users reported intermittent availability issues with Claude, experiencing sporadic downtimes across different instances.

- These reliability concerns have led to active discussions, with users seeking updates via social media platforms.

GPU MODE Discord

-

Triton Triumphs Over Torch in Softmax: A member compared Triton's fused softmax against PyTorch's native implementation on an RTX 3060, highlighting smoother performance from Triton.

- While Triton generally outperformed PyTorch, there were instances where PyTorch matched or exceeded Triton's performance.

- Metal GEMM Gains Ground: Philip Turner's Metal GEMM implementation was showcased, with a member noting their own implementation achieves 85-90% of theoretical maximum speed, similar to Turner's.

- Further discussion touched on the challenges of optimizing Metal compilers and the necessity of removing addressing computations from performance-critical loops.

- Dawn's Regressing Render: Concerns were raised about performance regressions in Dawn's latest versions, especially in the wgsl-to-Metal workflow post Chrome 130, despite improvements in Chrome 131.

- Issues related to Undefined Behavior (UB) check code placement were identified as potential causes for the lag behind Chrome 129.

- FLUX Speeds Ahead with CPU Offload: A member reported a 200% speedup in FLUX inference by implementing per-layer CPU offload on a 4070Ti SUPER, reducing inference time to 1.23 s/it from 3.72 s/it.

- Discussion highlighted the effectiveness of pinned memory and CUDA streams on capable machines, though performance gains were limited on shared instances.

Nous Research AI Discord

-

DeepSeek-R1-Lite-Preview Launch: DeepSeek-R1-Lite-Preview is now live, featuring o1-preview-level performance on AIME & MATH benchmarks.

- It also includes a transparent thought process in real-time, with open-source models and an API planned for release soon.

- AI Agents for Writing Books: Venture Twins is showcasing a project where ten AI agents collaborate to write a fully autonomous book, each assigned different roles like setting narrative and maintaining consistency.

- Progress can be monitored through GitHub commits as the project develops in real-time.

- LLMs Reasoning Without Prompting: Research demonstrates that large language models (LLMs) can exhibit reasoning paths akin to chain-of-thought (CoT) without explicit prompting by adjusting the decoding process to consider top-$k$ alternative tokens.

- This approach underscores the intrinsic reasoning abilities of LLMs, indicating that CoT mechanisms may inherently exist within their token sequences.

- Generative Agent Behavioral Simulations: A new architecture effectively simulates the attitudes and behaviors of 1,052 real individuals, with generative agents achieving 85% accuracy on responses in the General Social Survey.

- The architecture notably reduces accuracy biases across racial and ideological groups, enabling tools for the exploration of individual and collective behavior in social science.

- Soft Prompts Inquiry: A member inquired about the investigation of soft prompts for LLMs as mentioned in a post, highlighting their potential in optimizing system prompts into embedding space.

- Another member responded, expressing that the concept of soft prompts is pretty interesting, indicating some interest within the community.

OpenAI Discord

-

API Usage Challenges: A member reported searching for an API or tool but found both options unsatisfactory, indicating frustration.

- This issue reflects a broader interest in locating efficient resources within the community.

- Model Option Clarification: There was a discussion regarding the 4o model and whether it utilized o1 mini or o1 preview, with confirmation leaning towards o1 mini.

- A member suggested checking the settings to verify options, promoting hands-on troubleshooting.

- High Temperature Performance: A member questioned if improved performance at higher temperatures could be linked to their prompt style, suggesting an excess of guiding rules or constraints.

- This raises considerations for optimizing prompt design to enhance AI responsiveness.

- Beta Access to o1: A member expressed excitement and gratitude towards NH for granting them beta access to o1, brightening their morning.

- Woo! Thank you NH for making this morning even brighter reflects the exhilaration around new updates.

- Delimiter Deployment in Prompts: A member shared OpenAI's advice on using delimiters like triple quotation marks or XML tags to help the model interpret distinct sections of the input clearly.

- This approach aids in structuring prompts better for improved model responses, allowing for easier input interpretation.

Cohere Discord

-

API Key Problems Block Access: Multiple members reported encountering 403 errors, indicating invalid API keys or the use of outdated endpoints while trying to access certain functionalities.

- One member shared experiencing fetch errors and difficulties using the sandbox feature after verifying their API keys.

- CORS Errors Interrupt API Calls: A member on the free tier faced several CORS errors in the console despite using a standard setup without additional plugins.

- Attempts to upgrade to a production key to resolve these issues were unsuccessful, highlighting limitations of the free tier.

- Advanced Model Tuning Techniques Explored: Discussions delved into whether model tuning could be achieved using only a preamble and possibly chat history.

- Questions were raised about the model's adaptability to various training inputs, indicating the need for more effective tuning methods.

- Cohere Introduces Multi-modal Embeddings: A member praised the new multi-modal embeddings for images, noting significant improvements in their applications.

- However, concerns were raised about the 40 requests per minute rate limit, which hinders their intended use case, leading them to seek alternative solutions.

- Harmony Project Streamlines Questionnaire Harmonization: The Harmony project aims to harmonize questionnaire items and metadata using LLMs, facilitating better data compatibility for researchers.

- A competition is being hosted to enhance Harmony's LLM matching algorithms, with participants able to register on DOXA AI and contribute to making Harmony more robust.

Torchtune Discord

-

Adaptive Batching Optimizes GPU Usage: The implementation of adaptive batching aims to maximize GPU utilization by dynamically adjusting batch sizes to prevent OOM errors during training.

- It was suggested to integrate this feature as a flag in future recipes, ideally activated when

packed=trueto maintain efficiency. - Enhancing DPO Loss Structure: Concerns were raised about the current TRL code structure regarding the inclusion of recent papers on DPO modifications, as seen in Pull Request #2035.

- A request was made to clarify whether to remove SimPO and any separate classes to keep the DPO recipe clean and straightforward.

- SageAttention Accelerates Inference: SageAttention achieves speedups of 2.1x and 2.7x compared to FlashAttention2 and xformers, respectively, while maintaining end-to-end metrics across various models.

- Pretty cool inference gains here! expressed excitement about the performance improvements introduced by SageAttention.

- Benchmarking sdpa vs. Naive sdpa: Members recommended benchmarking the proposed sdpa/flex method against the naive sdpa approach to identify performance differences.

- The numerical error in scores may vary based on the sdpa backend and data type used.

- Nitro Subscription Affects Server Boosts: A member highlighted that server boosts will be removed if a user cancels their free Nitro subscription, impacting server management.

- This underscores the importance of maintaining Nitro subscriptions to ensure uninterrupted server benefits.

- It was suggested to integrate this feature as a flag in future recipes, ideally activated when

tinygrad (George Hotz) Discord

-

Tinygrad Tackles Triton Integration: A user inquired about Tinygrad's native integration with Triton, referencing earlier discussions. George Hotz directed them to consult the questions document for further clarification.

- Further discussions clarified the integration steps, emphasizing the compatibility between Tinygrad and Triton for enhanced performance.

- SASS Assembler Seeks PTXAS Replacement: Members discussed the future of the SASS assembler, questioning if it is intended to replace ptxas. George Hotz suggested referring to the questions document for more details.

- This has sparked interest in the potential improvements SASS assembler could bring over ptxas, though some uncertainty remains regarding the assembler's long-term role.

- FOSDEM AI DevRoom Seeks Tinygrad Presenters: A community member shared an opportunity to present at the FOSDEM AI DevRoom on February 2, 2025, highlighting Tinygrad's role in the AI industry. Interested presenters are encouraged to reach out.

- The presentation aims to showcase Tinygrad's latest developments and foster collaboration among AI engineers.

- Tinybox Hackathon Hopes for Hands-on Engagements: A member proposed organizing a pre-FOSDEM hackathon, suggesting bringing a Tinybox on-site to provide hands-on experiences. They expressed enthusiasm about engaging the community over Belgian beer during the event.

- The hackathon aims to facilitate practical discussions and collaborative projects among Tinygrad developers.

- Exploring Int64 Indexing in Tinygrad: A member questioned the necessity of int64 indexing in scenarios not involving huge tensors, seeking to understand its advantages. The discussion aims to clarify the use-cases of int64 indexing beyond large-scale tensor operations.

- Exploring various indexing techniques, the community is evaluating the performance and efficiency impacts of int64 versus int32 indexing in smaller tensor contexts.

Modular (Mojo 🔥) Discord

- Async functions awaitable in Mojo sync functions: A member is puzzled about being able to await an async function inside a sync function in Mojo, which contrasts with Python's limitations, seeking clarification or an explanation for this difference in handling async functionality.

- Inquiry about Mojo library repository: Another member is curious about the availability of a repository for libraries comparable to pip for Mojo, looking for resources or links that provide access to Mojo libraries.

-

Moonshine ASR Model Tested with Max: A user tested the Moonshine ASR model performance using both the Python API for Max and a native Mojo version, noting both were about 1.8x slower than the direct onnxruntime Python version.

- The Mojo and Python Max versions took approximately 82ms to transcribe 10 seconds of speech, whereas the native onnxruntime reached 46ms. Relevant links: moonshine.mojo and moonshine.py.

- Mojo Model.execute Crash Due to TensorMap: Instructions for running the Moonshine ASR model are provided in comments at the top of the mojo file that was shared.

- The user's experience highlighted that passing in TensorMap into Model.execute caused a crash, and manual unpacking of 26 arguments was necessary due to limitations in Mojo. Relevant link: moonshine.mojo.

- Seeking Performance Improvements in Mojo: The user expressed that this is one of their first Mojo programs and acknowledged that it may not be idiomatic.

- They requested assistance for achieving better performance, emphasizing their eagerness to improve their Mojo and Max skills.

OpenAccess AI Collective (axolotl) Discord

-

Tencent Hunyuan Model Fine Tuning: A member inquired about fine-tuning the Tencent Hunyuan model, sharing links to the GitHub repository and the official website.

- Additional resources provided include the Technical Report and a Demo for reference.

- Bits and Bytes on MI300X: A member shared their experience using Bits and Bytes on the MI300X system, highlighting its ease of use.

- They emphasized the necessity of using the

--no-depsflag during updates and provided a one-liner command to force reinstall the package. - Axolotl Collab Notebooks for Continual Pretraining of LLaMA: A user asked if Axolotl offers any collab notebooks for the continual pretraining of LLaMA.

- Phorm responded that the search result was undefined, indicating no available notebooks currently, and encouraged users to check back for updates.

DSPy Discord

-

Juan seeks help with multimodal challenges: Juan inquired about using the experimental support for vision language models while working on a multimodal problem.

- Another member offered additional assistance by saying Let me know if there are any issues!.

- Juan discovers the mmmu notebook: Juan later found the mmmu notebook himself, which provided the support he needed for his project.

- He thanked the community for their awesome work, showing appreciation for the resources available.

- Semantic Router as a Benchmark: A member suggested that the Semantic Router should serve as the baseline for performance in classification tasks, emphasizing its superfast AI decision making capabilities.

- The project focuses on intelligent processing of multi-modal data, and it may offer competitive benchmarks we aim to exceed.

- Focus on Performance Improvement: There was an assertion that the performance of existing classification tools needs to be surpassed, with the Semantic Router as a reference point.

- Discussion revolved around identifying metrics and strategies to achieve better results than the baseline set by this tool.

LlamaIndex Discord

-

LLM-Native Resume Matching Launched: Thanks to @ravithejads, an LLM-native solution for resume matching has been developed, enhancing traditional screening methods.

- This innovative approach addresses the slow and tedious process of manual filtering in recruitment, offering a more efficient alternative.

- Building AI Agents Webinar on December 12: Join @Redisinc and LlamaIndex for a webinar on December 12, focusing on building data-backed AI agents.

- The session will cover architecting agentic systems and best practices for reducing costs and optimizing latency.

- PDF Table Data Extraction Methods: A member in #general inquired about approaches to extract table data from PDF files containing text and images.

- They expressed interest in knowing if there are any existing applications that facilitate this process.

- Applications for PDF Data Extraction: Another member sought recommendations for applications available to extract data specifically from PDFs.

- This highlights a need within the community for tools that can handle various PDF complexities.

OpenInterpreter Discord

-

New UI sparks mixed feelings: Some users feel the new UI is slightly overwhelming and unclear in directing attention, with one comparing it to a computer from Alien.

- However, others are starting to appreciate its UNIX-inspired design, finding it suitable for 1.0 features.

- Rate Limit Configuration Needed: A user expressed frustration over being rate limited by Anthropic, noting that current error handling in Interpreter leads to session exits when limits are exceeded.

- They emphasized the importance of incorporating better rate limit management in future updates.

- User Calls for UI Enhancements: There are calls for a more informative UI that displays current tools, models, and working directories to enhance usability.

- Users are also advocating for a potential plugin ecosystem to allow customizable features in future releases.

- Compute Workloads Separation Proposed: One member suggested splitting LLM workloads between local and cloud compute to optimize performance.

- This reflects a concern about the limitations of the current Interpreter design, which is primarily built for one LLM at a time.

LLM Agents (Berkeley MOOC) Discord

-

Intel AMA Session Tomorrow: A Hackathon AMA with Intel is scheduled for 3 PM PT tomorrow (11/21), offering participants direct insights from Intel specialists. Don’t forget to watch live here and set your reminders!

- Participants are encouraged to prepare their questions to maximize the session's benefits.

- Participant Registration Confusion: A user reported not receiving emails after joining three different groups and registering with multiple email addresses, raising uncertainties about the success of their registration.

- Clarification on Event Type: A member sought clarification on whether the registration issue pertained to the hackathon or the MOOC, highlighting potential confusion among participants regarding different registration types.

Mozilla AI Discord

-

Refact.AI Live Demo Highlights Autonomous Agents: Refact.AI is hosting a live demo showcasing their autonomous agent and tooling.

- Join the live demo and conversation to explore their latest developments.

- Refact.AI Unveils New Tooling: The Refact.AI team has released new tooling to support their autonomous agent projects.

- Participants are encouraged to engage with the tools during the live demo event.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LAION Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!