[AINews] DeepMind SIMA: one AI, 9 games, 600 tasks, vision+language ONLY

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 3/12/2024-3/13/2024. We checked 364 Twitters and 21 Discords (336 channels, and 3167 messages) for you. Estimated reading time saved (at 200wpm): 376 minutes.

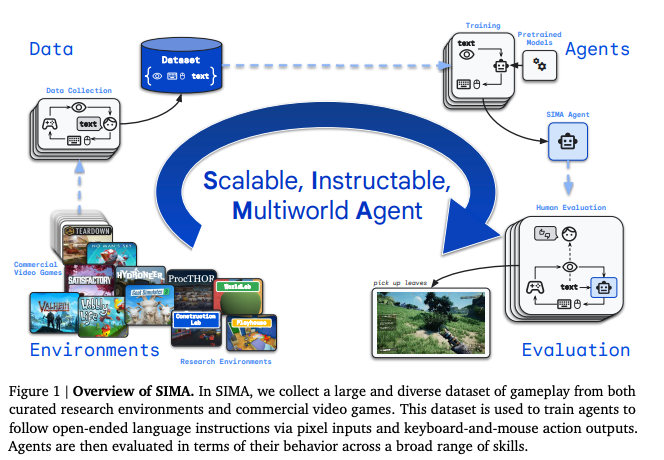

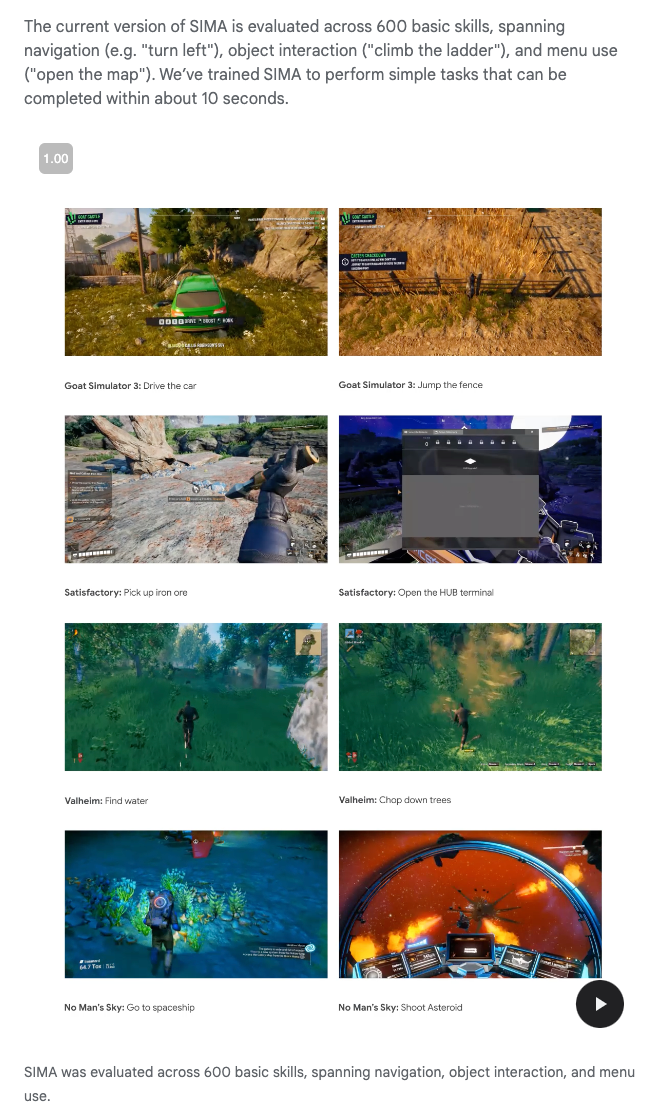

DeepMind SIMA is the news of the day: it takes a step beyond specialist AI systems developed for MineCraft or Dota 2 to be more general. Deepmind collaborated with game studios to evaluate it's abilities on 600 short (<10 second) skills in 9 different games, from No Man's Sky to Hydroneer to Goat Simulator.

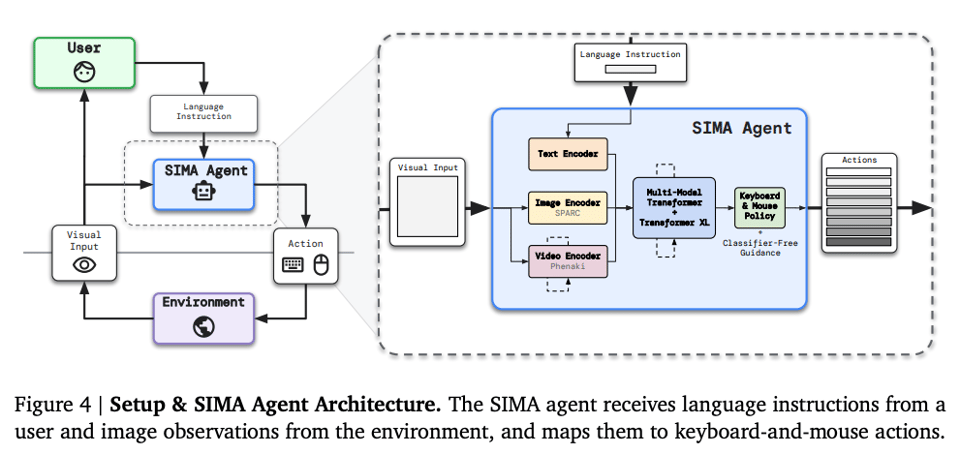

The key constraint here is that SIMA only works from screengrabs + natural language instructions - no special APIs involved. The technical report offers a little more detail, with the classic multimodal Transformer you'd expect, with the Google flavors of things:

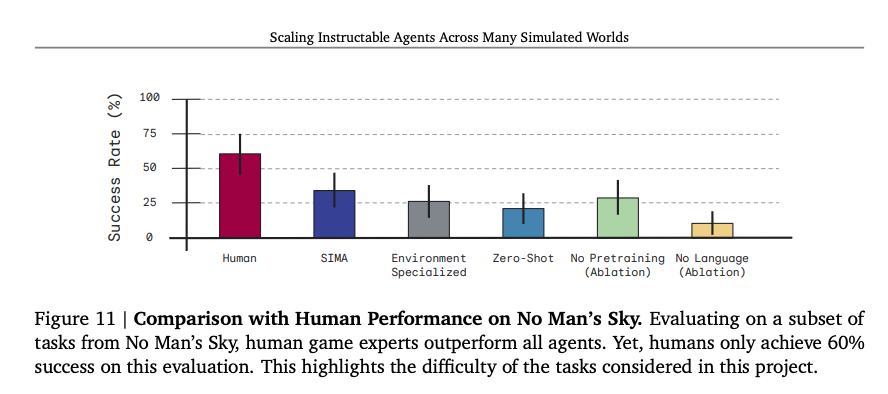

The 600 tasks are hard - humans only solve 60% of them, while SIMA hits 34%.

Table of Contents

- PART X: AI Twitter Recap

- PART 0: Summary of Summaries of Summaries

- PART 1: High level Discord summaries

- Nous Research AI Discord Summary

- Latent Space Discord Summary

- Perplexity AI Discord Summary

- Unsloth AI (Daniel Han) Discord Summary

- LM Studio Discord Summary

- OpenAI Discord Summary

- Eleuther Discord Summary

- LAION Discord Summary

- LlamaIndex Discord Summary

- OpenAccess AI Collective (axolotl) Discord Summary

- LangChain AI Discord Summary

- OpenRouter (Alex Atallah) Discord Summary

- Interconnects (Nathan Lambert) Discord Summary

- CUDA MODE Discord Summary

- DiscoResearch Discord Summary

- LLM Perf Enthusiasts AI Discord Summary

- Datasette - LLM (@SimonW) Discord Summary

- Skunkworks AI Discord Summary

- Alignment Lab AI Discord Summary

- AI Engineer Foundation Discord Summary

- PART 2: Detailed by-Channel summaries and links

PART X: AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs

Automating Software Engineering

- Andrej Karpathy outlines a progression of increasing AI autonomy in software engineering, similar to self-driving, where the AI does more and the human provides oversight at higher levels of abstraction.

- Arav Srinivas praises Cognition Labs' demo as the first agent that reliably crosses the threshold of human-level performance.

- François Chollet believes we are far from being able to automate more than an infinitesimal fraction of his job as a software engineer.

Large Language Models and AI Architectures

- Yann LeCun suggests abandoning (at least partially) generative models, probabilistic modeling, contrastive methods, and reinforcement learning on the way to human-level AI.

- François Chollet shares his views on nature vs nurture, stating that humans are intelligent from the beginning, almost all knowledge is acquired, and intelligence decreases with age.

- Andrej Karpathy recommends an AI newsletter by @swyx & friends that indexes ~356 Twitters, ~21 Discords, etc. using LLM aid. (swyx: Thanks Andrej!!!)

AI Agents and Demos

- Cognition Labs' demo of an AI agent solving coding tasks impresses many in the AI community.

- Deepgram's Aura offers fast text-to-speech and speech-to-text APIs with under 250 ms latency, enabling real-time conversational AI applications.

- Modal Labs' Devin AI navigates docs, installs, authenticates, and interacts with a ComfyUI deployment in a browser.

AI Infrastructure and Training

- Soumith Chintala shares details on Meta's 24k H100 Cluster Pods used for Llama3 training, including network, storage, and software optimizations.

- Yann LeCun shares an image of the computing infrastructure used for Llama-3 training.

- John Carmack notes the difficulty of trusting comparisons in research due to subtle changes in algorithm details and training/testing procedures.

Memes and Humor

- "Guess the prompt" meme shared by Arav Srinivas.

- Meme suggesting competition between AI developers.

- "It's over" meme shared by @AISafetyMemes.

PART 0: Summary of Summaries of Summaries

Since Claude 3 Haiku was released recently, we're adding them to this summary run for you to compare. We'll keep running these side by side for a little longer while we build the AINews platform for a better UX.

Claude 3 Haiku (3B?)

- Devin, the AI Software Engineer: Cognition Labs has unveiled Devin, an autonomous AI software engineer capable of performing complex engineering tasks and setting a new benchmark on the SWE-bench coding challenge. Devin can navigate a shell, code editor, and web browser to complete software engineering work, showcasing the potential for AI to automate software development. Devin's capabilities have generated significant excitement in the AI community.

- Advances in Large Language Model Capabilities: Researchers are exploring ways to enhance the performance and efficiency of large language models (LLMs). This includes developing methods like MemGPT to improve long-term memory management, as well as the release of models like Command-R which is optimized for long-context tasks and retrieval-augmented generation. There is also interest in open-source alternatives to proprietary models like GPT-4.

- Hardware and Infrastructure for AI: Major tech companies like Meta and Microsoft are making significant investments in AI hardware and infrastructure. Meta announced plans to build two 24,000 GPU clusters and acquire 350,000 NVIDIA H100 GPUs by 2024, while Microsoft has hired nuclear experts to explore using small modular reactors to power their data centers. Advancements in CUDA and NVMe SSD integration are also enabling more efficient data processing for AI workloads.

- Challenges and Discussions Around AI Models: The community is grappling with various issues related to AI models, such as: Prompt Engineering: Developing effective prompts to control language model outputs, including maintaining consistent word counts and formatting.

- Accuracy and Transparency: Concerns about the reliability and trustworthiness of AI model outputs, especially when using online or experimental models.

- Open-Source Alternatives: Excitement and skepticism around the potential release of open-source AI models like Grok AI and GemMoE, with discussions on model security and integration with existing tools.

Claude 3 Sonnet (14B?)

1) Introducing Autonomous AI Software Engineers like Devin:

- Cognition Labs unveiled Devin, claimed to be the world's first autonomous AI software engineer capable of complex engineering tasks like resolving GitHub issues, navigating developer tools, and setting new benchmarks on SWE-Bench.

- Devin's debut sparked excitement, with users keen to try it and share "unfiltered opinions" on its real-world capabilities demonstrated through tweets and anticipated performance on SWE-Bench.

2) Advancements in Large Language Models and AI Hardware:

- Anthropic released Claude 3 Haiku, a fast and affordable multimodal model now available on their API and claude.ai for Pro users, boasting strong performance across reasoning, math, and coding tasks.

- Meta announced two massive 24k GPU clusters using 350,000 NVIDIA H100 GPUs by 2024, marking a significant AI infrastructure investment with details on hardware, network, and software.

- Cerebras unveiled the CS-3 chip, claimed as the world's fastest AI accelerator capable of training up to 24 trillion parameter models on a single device.

3) Open-Source AI Model Releases and Benchmarking Efforts:

- CohereForAI released the open-source 35B parameter Command-R model optimized for reasoning, summarization, and multilingual generation.

- The EQ-Bench creative writing benchmark went live, allowing ranking of model creativity, though still a work-in-progress.

- DiscoResearch fine-tuned German language models, while the Mistral Medium model showcased strong performance compared to Mixtral.

4) Frameworks and Tools for Efficient Large Model Training:

- The Fuyou framework enables fine-tuning up to 175B parameter models on consumer GPUs like RTX 4090, promising efficiency gains over ZeRO-Infinity.

- Unsloth AI offers QLoRA support for Mistral 7B, CodeLlama 34B, and Llama architecture models, with features like sliding window attention and preliminary DPO support.

- Developments in CUDA allow NVMe drives to use direct memory access and GPU integration via NVIDIA's GPUDirect® Storage API for storage applications.

Claude 3 Opus (>220B?)

- Hermes 2 Pro 7B Model Launches with Advanced Capabilities: Nous Research released the Hermes 2 Pro 7B model, boasting a 91% Function Calling Accuracy and enabling advanced function calling and structured JSON outputs. The model and datasets are available on Hugging Face and GitHub.

- EU AI Act Stirs Controversy and Compliance Concerns: The EU AI Act has passed, prohibiting certain AI practices, mandating energy consumption disclosures, and potentially impacting AI companies operating in Europe. Discussions revolved around the implications for the AI industry and the challenges of regulation enforcement.

- Cognition Labs Unveils Devin, the AI Software Engineer: Cognition Labs introduced Devin, an autonomous AI software engineer surpassing benchmarks by resolving 13.86% of GitHub issues on the SWE-Bench coding benchmark. Devin's capabilities and potential impact sparked excitement and debates, as detailed in their blog post and demo video.

- Cerebras Announces World's Fastest AI Chip: Cerebras Systems unveiled the CS-3, claiming to be the world's fastest AI accelerator capable of training models up to 24 trillion parameters on a single device. The announcement generated discussions about the chip's design and potential for advancing AI compute technology, as shared in their press release.

- Anthropic Launches Claude 3 Haiku: Anthropic released Claude 3 Haiku, a fast and affordable AI model praised for its speed (120 tokens per second) and cost-effectiveness (4M prompt tokens per $1). The model's capabilities and potential applications were discussed, with details available in Anthropic's announcement.

- Meta Invests Heavily in AI Infrastructure: Meta announced plans for two 24k GPU clusters and aims to integrate 350,000 NVIDIA H100 GPUs by 2024, marking a significant investment in their AI future. The company shared details on their hardware, software, and open-source initiatives in an engineering blog post.

- Advancements in CUDA and NVMe Integration: Developments in CUDA now allow NVMe drives to leverage direct memory access and GPU integration for storage applications, promising substantial efficiency gains. Resources like the ssd-gpu-dma GitHub repository and NVIDIA's GPUDirect Storage API reference guide were shared to highlight these advancements.

- Stealing Weights from Production Language Models: A research paper revealed the possibility of inferring weights from production language models like ChatGPT and PaLM-2 using their APIs, raising concerns about AI ethics and future security measures for protecting model weights.

ChatGPT (GPT4T)

AI in Game Development: Nous Research AI Discord explores AI's role in game development, particularly the creation of Plants Vs Zombies using Claude 3, highlighting the integration of AI with Python for game creation. The project showcases AI-driven creativity in game design, watchable here.

Advancements in Function Calling: The introduction of Hermes 2 Pro 7B model, with a 91% Function Calling Accuracy, marks a significant advancement in AI's capability to execute function calls, making it a notable tool for developers integrating AI with programming environments like llama.cpp and the Vercel AI SDK. This development is part of a broader effort to blend AI with programming through structured JSON outputs, detailed on GitHub.

The AI Chip Debate: The Cerebras CS-3 chip, reputed as the world's fastest AI accelerator, ignites discussions on its square design's efficiency in training a 24 trillion parameter model on a single device. This discussion underscores the ongoing innovations in AI compute technology, with more information available on Cerebras.

New Developments in AI Software Engineering: Cognition Labs' Devin emerges as a new AI software engineer capable of autonomously addressing GitHub issues and demonstrating proficiency in navigating various development tools. This marks a significant milestone in AI's integration into software development, hinting at future capabilities in automating programming tasks, as showcased at Cognition Labs Devin.

AI-Powered Solitaire Instruction: A project utilizing OpenCV for Solitaire instruction exemplifies AI's potential in recreational gaming, aiming to develop a system that uses image capture and processing to guide gameplay. This initiative reflects the expanding applications of AI beyond traditional domains into enhancing user experiences in games, with further development expected to integrate with language models like GPT for deeper game analysis.

PART 1: High level Discord summaries

Nous Research AI Discord Summary

Planting AI in the Game Development Field: An AI-driven endeavor to create Plants Vs Zombies using Claude 3 was showcased, piquing interest for its application of Python game development — watch the creativity unfold here.

Function Calling Is the New Black: The recently released Hermes 2 Pro 7B model shows significant advancements with a 91% Function Calling Accuracy and specialized prompts enabling advanced function calling. The model’s prowess is acknowledged by its enthusiastic uptake for integration with tools like llama.cpp and the Vercel AI SDK, seeking a new blend of structured JSON outputs GitHub - Hermes Function Calling.

The AI Chip Shape Debate: What's the most optimal design for the new Cerebras CS-3, the proclaimed world's fastest AI accelerator? Discussions swirl around its square chip design, while the model boasts readiness to train a colossal 24 trillion parameter model on a single device, presenting a leap in AI compute technology Cerebras.

The AI Rules Are Changing: The EU's new AI Act stirs the pot for AI companies by outlawing certain AI practices and demanding energy consumption disclosures. Meanwhile, anticipation brews for the open-source models with a focus on long context chatbots, as referenced in the Sparse Distributed Associative Memory repository.

Cognition Introduces a New Player to the Field: Enter Devin, an AI software engineer claiming a new success benchmark in addressing GitHub issues autonomously, demonstrating capabilities to navigate a shell, code editor, and web browser — a glimpse of the future at Cognition Labs Devin.

Latent Space Discord Summary

- Introducing Devin the AI SWE: Cognition Labs launched Devin, an AI that sets a new bar by resolving a notable 13.86% of GitHub issues and impressing with its engineering interview skills. Industry attention spotlights Devin’s capabilities, enhanced by SWE-Bench performance metrics. Cognition Labs provide further insights into Devin's development and functionality.

- Model Weight Security in Question: Researchers exposed the feasibility of inferring weights from APIs of models such as ChatGPT and PaLM-2, inciting debates over AI ethics and future protection strategies. The implications are found detailed in a recent paper that outlines the potential risks and methods of model weight extraction.

- Expanding AI Horizons: Together.ai secured a $106M funding round to create a new platform intended for executing generative AI apps, introducing Sequoia, a method designed for efficient LLM operation. Meanwhile, Cerebras revealed the CS-3 AI chip, claiming the fastest training capabilities for models upwards of 24 trillion parameters. Details about Sequoia and CS-3 advancements can be accessed at Together AI's blog and Cerebras' press release, respectively.

- Synthesizing Success: During the LLM Paper Club event, Eugene Yan emphasized the effectiveness of synthetic data in model training, including pretraining and instruction-tuning, spotlighting its cost-efficiency and bypass of privacy concerns. The community is encouraged to read his insights on synthetic data.

- Navigating Voice Recognition's Potential: The community explored voice recognition with a focus on applications such as text-to-speech and speech-to-text using tools like vapi.ai and whisper. Twitter threads and resources like whisper.cpp illustrate the ongoing discussions in voice technology.

Perplexity AI Discord Summary

- Claude3 Opus Powers Perplexity AI: Perplexity AI is confirmed to utilize Claude3 Opus for its operations. Users explored the platform's capabilities with a focus on productivity and effective research assistance, while expressing concerns about the limitations of AI plagiarism detection tools and seeking clarification on various Perplexity AI services, including the switch from Claude 3 Opus to Claude 3 Sonnet after 5 uses.

- Search Engine Evolution Discussed: The community engaged in discussions on the future of search engines, noting Perplexity AI's utilization of its own indexer. Concurrently, there were mentions of growing calls for Google CEO Sundar Pichai to step down and the increasing competition in the search domain from generative-AI rivals.

- Enhancing AI Interactivity: Members shared Perplexity AI search query links on topics ranging from sleep advice to understanding medical terminology. Additionally, the sharing of a YouTube video indicated interest in the latest AI news including AI-generated "Digital Marilyn" and controversies between Midjourney and Stability AI.

- API Navigation and Customization Tactics: Queries in the #[pplx-api] channel reflected concerns over achieving concise answers through tailored prompting and parameter settings. There was also discussion about enabling chatbot models to remember conversations with the use of external databases for storing embeddings, and a call for adding Yarn-Mistral-7b-128k to the API for high-context use cases.

- Accuracy and Ethics in AI Responses: The community expressed anxieties about inaccurate information returned by AI when using the -online tag, leading to suggestions for the incorporation of a system message for clarity. For further understanding and experiments with system messages, the Perplexity API documentation was referenced.

Unsloth AI (Daniel Han) Discord Summary

- Rope The Kernel Efficiency: A rope kernel improvement was shared focusing on loading sin/cos functions just once to boost efficiency along axis 1 and employing sequential computation for head grouping. Despite a related PR, concerns about the effect on overall training performance persist.

- Unsloth's Fine-tuning Finesse: Unsloth Studio, a feature to enable one-click finetuning, is reported to be in beta, while the Unsloth community solves issues like importing FastLanguageModel on Kaggle by manually reinstalling libraries. Unsloth's GitHub wiki offers FAQs and a guide for dataset formatting for DPO pair style data.

- Open Source OpenAI Skepticism: There's excitement and concern in the community about the future of open-source AI models like GemMoE and Grok AI. Discussions include whether OpenAI will join the open-source movement, while concerns loom about potential model piracy and ties to Microsoft Azure.

- Overcoming Ubuntu WSL's Dependency Paradox: Users discussing dependency loops in Ubuntu WSL involving bitsandbytes, triton, torch, and xformers identified a Python error preventing package installation. A suggested workaround involves installing xformers from PyTorch's cu121 index directly and resorting to Unsloth's nightly GitHub builds.

- Quantizing Mixer Woes: Efforts to quantize the Mixtral model on three V100 32GB GPUs hit a memory snag, leading to a helpful community suggestion to try 2x A100 80GB GPUs or utilize Unsloth's built-in GGUF support. Meanwhile, queries about Nous-Hermes model tokenization surfaced, resolved by an alternative model and dataset adjustment.

- Storage Innovation for Model Fine-tuning: AI enthusiasts shared a paper exploring the use of NVMe SSDs to potentially overcome GPU memory limits for fine-tuning large models. Useful resources like the ssd-gpu-dma and flashneuron project on GitHub were cited for contributing to GPU storage applications, along with NVIDIA's GPUDirect® Storage API documentation for direct data path APIs.

LM Studio Discord Summary

LaTeX Rendering Sparks Engineered Excitement: Discussions highlighted a desire for LM Studio to support LaTeX in markdown, as seen in a GitHub blog post, with an eye on improving math problem interfaces. Members pondered the incorporation of swipe-style blackboards for visual math inputs, demonstrating a playful tone surrounding serious technical aspirations.

GPU Performance Unearthed: A shared YouTube performance test fueled talks about the benefits and technical considerations of using dual GPU configurations for large language models (LLMs), including setups with dual RTX 4060 Ti 16GB GPUs. Some members noted more than two-fold efficiency gains while others shared tips for optimal configurations, even as they humorously exchanged views about high-priced NVLINK bridges and alternatives.

Better Together or Alone? Dual GPU Configs vs. Single: The effectiveness of running LLMs on a single GPU versus dual setups was scrutinized by engineers sharing personal testing outcomes. Discussions ranged from configuration tweaks for GPUs with mismatched VRAM to exploring the feasibility of powering multiple high-end GPUs.

Upgraded RAM for a Smarter Tomorrow: RAM upgrade considerations to run larger LLM models, such as upgrading to 128GB of RAM, were weighed against the need for more VRAM. Community members offered insights on hardware configurations and performance modifications, including tips for running concurrent instances of LM Studio and enhancing GPU acceleration with AMD's ROCm beta.

KIbosh on iGPU to Enhance Main GPU's Power: Users in the amd-rocm-tech-preview thread found that disabling the iGPU could resolve offloading issues. They exchanged strategies and suggestions for optimizing ROCm beta with AMD GPUs, from installing specific driver combos like Adrenalin 24.1.1 + HIP-SDK to cleaning cached directories for better model loading in LM Studio.

AVX Beta Buzzes Quietly: In the 🧪-beta-releases-chat, there was a brief touch on version updates to AVX beta and minimal conversation about the quality of unspecified subjects, with an expressed opinion that they "aren't any good".

OpenAI Discord Summary

- AI Helps Deal the Cards: A project to develop a Solitaire instruction bot using OpenCV was proposed, envisioning a robotic system that captures laptop screen images to guide game play. Building this system includes steps like researching game rules, assembling hardware, crafting image processing algorithms, and integrating with a language model like GPT for in-depth game analysis alongside iterative design refinement.

- Video Generation AI Sora Narrowly Accessible: Sora, an AI model for video generation, was mentioned as being costly and inaccessible for public testing. However, visual artists and filmmakers have reportedly had the opportunity to access and provide feedback for Sora, suggesting limited test releases among specific user groups.

- GPT's Economic Walls and Outage Workarounds: The cost of AI services like GPT-4 subscriptions was discussed, highlighting the disproportionate expense relative to minimum wages in countries like Georgia and Brazil. Additionally, during instances of GPT outages, users recommended checking OpenAI's status page and considered starting a new interaction as a possible temporary solution.

- Prompt Engineering for Word Consistency: In the realm of prompt engineering, users exchanged strategies for instructing AI to maintain word counts during text rewrites. The use of positive instructions without being overly prescriptive was suggested to yield consistent results, while the Code Interpreter was confirmed to accurately count words against a conventional word count standard.

- Custom AI Development Dilemmas: Individuals discussed self-hosting models for personal use, like processing journal data, with emphasis on understanding hardware requirements. For those enhancing a CustomGPT model capable of consulting PDFs and performing web searches, it was advised that clear instructions are necessary due to the AI's limitations with image recognition in PDF documents.

Eleuther Discord Summary

- Claude vs GPT for Scholarly Summarization: Claude 3 was tested for summarizing academic papers and said to perform well with general summarization, but not for in-depth specifics. This was amid a mix of discussions, including a job-seeking ML Engineer from EleutherAI's Polyglot and OSLO projects, an introduction to an experienced newcomer in ML, and debates about batch size trade-offs for model training efficiency with reference to the An Empirical Model of Large-Batch Training.

- Optimization Myths and Multi-Model Efficiency: A member debunked the myth regarding beta2 and training stability, suggesting alternatives like ADAM, while the potential of LoRA's extension to pre-training was deliberated with prospects of it working in tandem with methods like GradientLoRA. Moreover, a paper on Deep Neural Collapse added to the conversation about neural network training trajectories and architectures.

- Model Hacking Ethics and Pruning Perspectives: Debating the boundaries of ethical model hacking, a member acknowledged that prior permission makes model hacking ethically acceptable, referring to a disclosed paper. Moreover, Pythia was highlighted for its interpretability-driven capability unlearning, and there was an announcement for a new mechanistic interpretability library for multimodal models, inviting collaboration via a Twitter announcement.

- Leaderboards Under Scrutiny: In the thunderdome of AI performance, the limitations of benchmark tests like SQuAD were discussed. The GPQA dataset provokes a reevaluation of the assistance value of AI models, where even GPT-4 encounters difficulty, emphasizing the necessity for more robust supervision, showcased in the Anthropic paper.

- Megatron and GPT-NeoX Synchronization Considered: A member proposed closely tracking upstream Megatron for better alignment with their Transformer Engine, with relevant code differences in a GitHub pull request awaiting feedback from project maintainers.

LAION Discord Summary

- Lobbyist Charm or Pocket Harm?: Lobbyists' power was noted to stem from their influence through financial contributions, leading to negotiations rather than benefiting personally from the money they distribute.

- Terminating the Terminator Scenario: A jovial discussion unfolded around the potential catastrophic scenarios involving AI, including speculations on a finetuned AI causing an extinction event, met with satirical comments on the failure of past extinction events.

- AI Overlords: Unlikely but Worth a Discussion: There was skepticism regarding the possibility of AI leading to an overreaching government authority, with members sarcastically dismissing the likelihood of coordinated actions that might cause such a scenario.

- Navigating the Future of AI Regulation: Debates erupted around issues like copyright infringement with AI-generated model weights, the effectiveness of DMCA, and the implications of new EU regulations on AI, linking to the European Lawmakers' Act.

- Choosing the Best Tools for AI Power-Users: Discussions also covered hardware and software preferences for AI inference tasks, with an emphasis on using GPUs and the efficiency of local setups versus API-centric solutions. References were made to platforms and technologies such as GroqChat, Meta's AI Infrastructure, and BUD-E.

- Halting Hallucinations: Suggestions were made to use simple and short prompts when employing CogVLM to generate data to minimize the production of incorrect outputs.

- The Art of Attention in AI: A consensus implied that cross attention may not be the best mechanism for adding conditioning to models, as alternative methods involving transforming text embeddings proved to achieve better denoising results.

- Mixing up AI with MoAI: Enthusiasm was evident for the new Mixture of All Intelligence (MoAI) model, which promises superiority over existing models while retaining a smaller footprint, with resources available on GitHub and Hugging Face.

- Citation Celebration for User Dataset: A member shared their excitement about their dataset being referenced in the DeepSeekVL paper, showcasing the community's contributions to advancing AI research.

- Busting the Great Memory Myth: A claim about being able to load a 30B model into 4GB of memory using lazy loading was corrected, revealing that mmap obscured the actual memory usage until accessed.

- Delve into LAION-400M: A message praised Thomas Chaton's article that guides users on utilizing the vast LAION-400-M images & captions dataset, linking to the article for further insights.

LlamaIndex Discord Summary

- MemGPT Storms Long-Term Memory Front: MemGPT is tackling the long-standing issue of long-term memory for LLMs, bringing new capabilities like "virtual context management" and function calling to enhance memory performance. Don't miss the opportunity to dive deeper into these advancements by registering for the MemGPT webinar here.

- Paris Meetup for Open-Source AI Devs: Ollama and Friends are rolling out the red carpet for open-source AI developers at Station F in Paris on March 21st, with food, drinks, and demos on the menu. Lock in your spot or apply to present a demo by reaching out via Twitter.

- LlamaIndex and MathPix Concoct Scientific Search Elixir: A collaboration between LlamaIndex and MathPixApp aims to distill scientific queries down to their LaTeX essence, promising exceptional search capabilities through document indexing. For the curious mind, a guide through this alchemy, featuring image extraction and text indexing, is signaled through a Twitter beacon.

- Chatbot Conjuring and Indexing Incantations: The guild sizzles with dialogues on boosting chatbot responses and indexing, proposing the use of DeepEval with LlamaIndex for performance optimization and deploying ensemble query engines to curate diverse responses. For those keen on summoning insights, the spellbooks can be found here and here.

- The LLM Paper Compendium Emerges: On the horizon, a vast repository of LLM research blooms, curated by shure9200. Scholars may venture to this trove to unearth recent academic advancements and chart new paths in the realm of LLMs.

OpenAccess AI Collective (axolotl) Discord Summary

Axolotl Embraces DoRA for Low-Bit Quantization: DoRA (Differentiable Quantization of Weights and Activations) support for 4-bit and 8-bit quantized models has been successfully merged, promising performance improvements, although it's limited to linear layers with notable overhead. Interested engineers can dive into the merge details on GitHub.

Big Models, Little GPUs - Fuyou to the Rescue: The Fuyou framework has shown potential in allowing engineers to fine-tune behemoth models up to 175 billion parameters on standard consumer-grade GPUs such as the RTX 4090, sparking interest for those confined by hardware limitations. _akhaliq's tweet weighs in on the excitement, flaunting a 156 TFLOPS computation capability.

API Evolution in DeepSpeed: DeepSpeed introduced an API modification for setting modules as leaf nodes which could make it easier to work with MoE models, thereby potentially benefiting Axolotl's development plans. More information is provided in their GitHub PR.

Command-R Forges Ahead with 35B Parameters: The creation of Command-R by CohereForAI, an open-source 35 billion parameter model, opens new frontiers as it's optimized for a multitude of use cases and accessible on Huggingface.

Mistral Medium Outshines Mixtral: In community showcases, Mistral Medium is noted for outperforming Mixtral, delivering more concise and instructive-compliant outputs while generating more relevant citations, possibly indicating an advanced, possibly closed-sourced, version of Mixtral.

LangChain AI Discord Summary

- Performance Tweak Initiates Mode Switch Query: Langchain users discuss the effectiveness of switching from

chat-instructtochatmode for a chatbot using LlamaCpp model in oobabooga's text-generation-webui, sharing code snippets and prompting questions about implementation in the langchain application. - Doc Dilemmas and Call for Updates: Concerns are expressed about outdated and inconsistent Langchain documentation, with users emphasizing the importance of keeping the docs up-to-date to better track package imports and use.

- Launch and Learn from ReAct and Langchain Chatbot: Announcements include the launch of the ReAct agent, inspired by a synergy of reasoning and acting in language models, as well as the open-source release of the LangChain Chatbot featuring RAG for Q&A querying, inviting feedback and exploration through the provided GitHub repository.

- Video Vistas on AI Tech and Application Tutorials: Shared are various tutorials and showcases, such as the use of Groq's hardware to build a real-time AI cold call agent, Command-R's long context task capabilities, and a guide on creating prompt templates with Langchaingo for Telegram groups.

- Advancements in Mental Health Support AI: A new article describes MindGuide, aiming to revolutionize mental health care using LangChain with large language models, underlining the importance of tech-based interventions in mental health.

OpenRouter (Alex Atallah) Discord Summary

- OpenRouter Briefly Stumbles but Recovers: During a database update, OpenRouter faced a transient issue causing unavailability of the activity row for approximately three minutes, but no charges were levied for affected completions.

- Claude 3 Haiku Makes a Splash: The newly introduced Claude 3 Haiku model is generating buzz for its speed of 120 tokens per second and cost-efficiency at 4M prompt tokens per $1. With both moderated and self-moderated versions available, users are encouraged to try it out, with accessibility details available via this link.

- Olympia.chat Integrates OpenRouter: Olympia.chat has incorporated OpenRouter for its large language model needs, boasting a focus on solopreneurs and small businesses, and teased an upcoming open-source Ruby library for interacting with OpenRouter.

- Dialogue on OpenRouter AI Model Usage: In a heated discussion, participants talked about Groq's Mixtral model and clarified that OpenRouter use is independent of Groq’s free access period, and Mistral 8x7B model limits were explored following "Request too big" errors.

- Anticipation Builds for GPT-4.5: Rumors and unverified information about GPT-4.5 sparked enthusiastic speculation and high interest within the community, signaling strong anticipation for this potential next step in AI advancements.

Interconnects (Nathan Lambert) Discord Summary

- GPT-4.5 Anticipation Builds on Bing Blunder: Bing search engine results accidentally indexed a GPT-4.5 blog post, sparking excitement for its impending release, despite links leading to a 404 page. Depth of interest is reflected in discussions about the post also being found via other search engines like Kagi and DuckDuckGo, and a tweet has been circulated for additional clarity.

- GPT-4 Maintains Mastery in LeetCode: GPT-4 continues to impress with top performance in coding challenges, as shown in a recently cited paper focusing on its prowess in LeetCode problems.

- Claude 3 Excels at Extracting Essentials: The Claude 3 model, detailed through the Clautero project, demonstrates notable advancements in summarizing literature, indicating the evolving capabilities of large language models.

- Meta Dives Deep into AI Hardware: Meta announces significant investment in AI by planning two 24k GPU clusters and aims for 350,000 NVIDIA H100 GPUs by 2024, openly sharing their infrastructure ambitions through Grand Teton and formal announcements.

- Ads in AI - A Future Debate: Discussions arise about Google's monetization strategy for AI models with members comparing ad-supported models to subscription models, and examining privacy implications and the trust factor in relation to potential AI-generated ads.

CUDA MODE Discord Summary

- Meta's Hardware Upgrade Packs a Computational Punch: Meta announced the launch of two colossal 24k GPU clusters, planning to integrate 350,000 NVIDIA H100 GPUs by the end of 2024, which will serve a powerhouse for AI with computation equivalent to 600,000 GPUs, detailed in their infrastructure announcement.

- The Fusion of CUDA and NVMe: Developments in CUDA now allow NVMe drives to use direct memory access and GPU integration for storage applications, promising substantial efficiency gains as outlined in ssd-gpu-dma GitHub repository and NVIDIA's GPUDirect Storage API reference guide.

- CUDA Development Leverage with Nsight: NVIDIA's Nsight™ Visual Studio Code Edition has been hailed for its development capabilities on Linux and QNX systems, offering CUDA auto-code completion and smart profiling, even sparking a debate about NSight Systems' utility versus NSight Compute.

- Call for Feedback and Experience Sharing on Torchao: PyTorch Labs' invites feedback on the merging of new quantization algorithms on torchao's GitHub issue, offering mentorship for kernel writers eager to engage with a real world cuda mode project involving gpt-fast and sam-fast kernels.

- AI Engineer in the Wings: Cognition Labs teases the imminent debut of Devin, an autonomous AI software engineer capable of complex tasks, with buzz building around its implementation in the SWE-bench coding benchmark as described in Cognition Labs' blog post and flagged by the community for further examination.

DiscoResearch Discord Summary

- Musk's Razzmatazz Lost in Translation: The assistant's response detailing reasons for Mars colonization was critiqued for lacking Elon Musk's distinctive flair, despite covering his viewpoints well, leading to a creativity rating of *[[7]].

- RAG-narok Tactics: Engineers debated RAG prompt structures, with preferences varying between embedding the full prompt within user messages or adjusting it based on SFT to maintain consistent behavior, meanwhile, an internal tool, the Transformer Debugger, was unveiled via a tweet by Jan Leike for transformer model analysis, boasting rapid exploration and interpretability features without writing code.

- Mix-Up With Mixtral Models: Miscommunication around the

mixtral-7b-8expertmodel raised issues with its experimental status clarity, which contrasts with the officialmistralai/Mixtral-8x7B-v0.1model, noted for difficulties with non-English outputs, steering users towards the proper model and dataset information for those interested in German data specifics.

- Creative Benchmarking Breakthrough: The creative writing benchmark prototype goes live on a GitHub branch, offering a tool that ranks model creativity, though it's still a work-in-progress with room for discriminative improvements.

- Quest for German Precision: Inquiries regarding the ideal German embeddings for legal texts challenge engineers to consider the peculiarities of legal jargon, while the hunt for a German-specific embedding benchmark adds another layer to the complexity, underscoring a gap in the current benchmark landscape.

LLM Perf Enthusiasts AI Discord Summary

- GPT-4.5 Turbo: More Myth Than Reality: Speculation about the existence of GPT-4.5 Turbo sparked discussions, leading to the revelation that a supposed leak was actually an outdated and erroneously published draft mentioning training data up to July 2024. Concerns revolved around the confusion created by a Bing search showing no results for "openai announces gpt-4.5 turbo", hinting at the non-existence of such a model.

- Token Limitation Tantrums: Members expressed the frustration of hitting the 4096 token limit while working with gpt4turbo, but no solutions or workarounds were provided during the conversation.

- The Curious Case of Claude and Starship: A light-hearted comment mentioned the potential for OpenAI's announcements to overshadow Elon Musk's Starship, reflecting ongoing interest in how major tech reveals align or clash.

- Predicting Llama-3 or Leaping to Llama-4?: A member discussed the theory of Llama's version cycle, predicting a skip of Llama-3 due to quality concerns and potential release of Llama-4 in July. Upcoming features are anticipated, including Mixture of Experts, SSM variants, Attention mods, multi-modality in images and videos, extended context lengths, and advanced reasoning capabilities.

Datasette - LLM (@SimonW) Discord Summary

- Zero-click Worms Threaten GenAI Apps: A recently published paper discusses "ComPromptMized," a study by Stav Cohen revealing vulnerabilities where a computer worm could exploit various GenAI models without user interaction, highlighting risks for AI-powered applications like email assistants.

- The Quest for the Best Code Assistant AI: Engineers sought a framework to compare AI models such as Mistral and LLaMA2 for efficiency as code assistants, while acknowledging that an appropriate benchmark would need to be accurate to be useful in such comparisons.

- Leaderboard Becomes Go-to for AI Model Performance: For comparing model performances, the Leaderboard on chat.lmsys.org emerged as a valuable resource, with members expressing appreciation for its insights into various models' capabilities.

- Git Commit Messages Get a Language Model Upgrade: A member shared a hack to revolutionize git commit messages using LLM, detailing a method that integrates an LLM CLI with pre-commit-msg GIT hook, resulting in more informative commit descriptions.

Skunkworks AI Discord Summary

- Chatbot Builders Left Hanging: Engineers sought recommendations for open source models and frameworks suited for creating chatbots that manage long contexts. However, no specific solutions were provided within the discussion thread.

- Planting AIs in Classic Games: AI enthusiasts can watch a YouTube tutorial on developing Plants vs Zombies with Claude 3, covering Python programming and game development aspects in Claude 3 made Plants Vs Zombies Game.

- Rummaging Through RAG with Command-R: A new video spotlights Command-R, demonstrating its capacity for Retrieval Augmented Generation (RAG) and integration with external APIs, detailed in Lets RAG with Command-R.

- Introducing Devin, AI in Software Engineering: The world's alleged first AI software engineer, Devin, is featured in Devin The Worlds first AI Software Engineer, which showcases the capabilities of autonomous software engineering, also discussed on Cognition Labs' blog.

Alignment Lab AI Discord Summary

- Join the Visionaries of Multimodal Interpretability: Soniajoseph_ calls for collaborators on an open source project aimed at interpretability of multimodal models. This initiative is detailed in a LessWrong post and further discussions can be had on their dedicated Discord server.

- In Search of Lightning-Fast Inference: A guild member seeks the fastest inference method for Phi 2 mentioning the use of an A100 GPU. They're looking into batching for mass token generation, frameworks such as vLLM or Axolotl, and are curious about the impact of quantization on speed.

AI Engineer Foundation Discord Summary

- Plugin Authorization Made Easy: The AI Engineer Foundation suggested implementing config options for plugins to streamline authorization by passing tokens, informed by the structured schema in the Config Options RFC.

- Brainstorming Innovative Projects: Members were invited to propose new projects, adhering to a set of criteria available in the Google Doc guideline, along with a tease for a possible partnership with Microsoft on a prompt file project.

- Meet Devin, the Code Whiz: Cognition Labs unveiled Devin, an AI software engineer heralded for exceptional performance on the SWE-bench coding benchmark, ready to synergize with developer tools in a controlled environment, as detailed in their blog post.

PART 2: Detailed by-Channel summaries and links

Nous Research AI ▷ #off-topic (35 messages🔥):

- AI-Built Plants Vs Zombies: A YouTube video titled "Claude 3 made Plants Vs Zombies Game" demonstrates the creation of the game Plants Vs Zombies using the AI model Claude 3, incorporating Python programming for game development. The video can be watched here.

- New Term "Mergeslop": A member humorously noted the first use of the term "mergeslop" on a platform, expressing surprise at its novelty.

- Command-R and RAG Technology Spotlight: Another YouTube video Featuring Command-R, an AI model for long context tasks such Retrieval Augmented Generation (RAG), has been highlighted and is available for viewing here.

- AI News Digest Service Debuts: AI News announced a service that summarizes discussions from AI discords and Twitters, promising to save hours for users. Interested individuals can subscribe to the newsletter and check AI News for the week.

- Book Recommendations for AI Enthusiasts: Members shared personal book recommendations with AI and speculative fiction themes, including the "Three-Body Series," Lem's "The Cyberiad," and Gibson's "Sprawl Trilogy."

- Long Context Chatbot Development Query: A channel member sought advice on open-source models and frameworks for building chatbots with a long context or memory, sparking a conversation about current and future capabilities. SDAM, a sparse distributed associative memory, was suggested as a resource.

Links mentioned:

- Lets RAG with Command-R: Command-R is a generative model optimized for long context tasks such as retrieval augmented generation (RAG) and using external APIs and tools. It is design...

- Claude 3 made Plants Vs Zombies Game: Will take a look at how to develop plants vs zombies using Claude 3#python #pythonprogramming #game #gamedev #gamedevelopment #llm #claude

- GitHub - derbydefi/sdam: sparse distributed associative memory: sparse distributed associative memory. Contribute to derbydefi/sdam development by creating an account on GitHub.

- [AINews] Fixing Gemma: AI News for 3/7/2024-3/11/2024. We checked 356 Twitters and 21 Discords (335 channels, and 6154 messages) for you. Estimated reading time saved (at 200wpm):...

- Devin The Worlds first AI Software Engineer: Devin is fully autonomous software engineerhttps://www.cognition-labs.com/blog

Nous Research AI ▷ #interesting-links (8 messages🔥):

- Meet Devin, AI Software Engineer Extraordinaire: Cognition Labs introduces Devin, an AI touted as the first AI software engineer, surpassing previous benchmarks by resolving 13.86% of GitHub issues unassisted on the SWE-Bench coding benchmark. Devin can autonomously utilize a shell, code editor, and web browser, see the full thread.

- C4AI Command-R Unleashes Generative Model Might: CohereForAI releases C4AI Command-R, a highly capable generative model with 35 billion parameters, excelling in reasoning, summarization, and multilingual generation. The model is available with open weights and adheres to specific licensing and acceptable use policies on Hugging Face.

- Cerebras Unveils the World's Fastest AI Chip: Cerebras Systems presents the CS-3 chip, claiming to be the world's fastest AI accelerator capable of training up to a 24 trillion parameter model on a single device. The CS-3 features staggering specifications and advancements in AI compute technology Press Release and Product Information.

- The Shape of Innovation in AI Chips: A member speculated the chip design of the new Cerebras CS-3, debating why it is square rather than round or semi-round, proposing shapes that could potentially fit more transistors.

- No Link, Just a Teaser: A Twitter link from user Katie Kang was shared, however, without any additional context provided.

Links mentioned:

- Tweet from Cerebras (@CerebrasSystems): 📣ANNOUNCING THE FASTEST AI CHIP ON EARTH📣 Cerebras proudly announces CS-3: the fastest AI accelerator in the world. The CS-3 can train up to 24 trillion parameter models on a single device. The wo...

- CohereForAI/c4ai-command-r-v01 · Hugging Face: no description found

- Tweet from Cognition (@cognition_labs): Today we're excited to introduce Devin, the first AI software engineer. Devin is the new state-of-the-art on the SWE-Bench coding benchmark, has successfully passed practical engineering intervie...

Nous Research AI ▷ #announcements (1 messages):

- Hermes 2 Pro 7B Unleashed: The newly released Hermes 2 Pro 7B model by Nous Research enhances agent reliability with an improved dataset and versatility in function calling and JSON mode. The model can be downloaded at Hugging Face - Hermes-2-Pro-Mistral-7B, with GGUF versions also available at Hugging Face - Hermes-2-Pro-Mistral-7B-GGUF.

- Collaborative Effort and Acknowledgments: Months of collaborative efforts from multiple contributors and the compute sponsorship by Latitude.sh have brought this Hermes 2 Pro 7B model to fruition.

- Innovative Function Calling and JSON Mode: Special system prompts and XML tags have been utilized to enable advanced function calling capabilities, with sample code available on GitHub - Hermes Function Calling.

- Custom Evaluation Framework for Enhanced Performance Measurement: The model includes a unique evaluation pipeline for Function Calling and JSON Mode built upon Fireworks AI’s original dataset and code, which can be found at GitHub - Function Calling Eval.

- Datasets Ready for Download: The datasets for evaluating the performance in Function Calling and JSON Mode are accessible for public use at Hugging Face - Func-Calling-Eval and Hugging Face - JSON-Mode-Eval, respectively.

Nous Research AI ▷ #general (349 messages🔥🔥):

- Function Calling Precision: Hermes 2 Pro has a Function Calling Accuracy of 91% in a zero-shot setting, indicating exceptional performance even without few-shot training. The evaluation datasets for Function Calling and JSON Mode have been released, with training sets to follow.

- AI Act Shakes Up Europe: The EU AI Act just passed into law, prohibiting certain AI practices, requiring energy consumption reports, and potentially affecting AI companies looking to do business in Europe.

- DeepMind and Fortnite: Google DeepMind Tweet article suggests AI now can outplay humans in Fortnite, raising concerns and interests in the gaming community.

- Advancing Inference Libraries: Discussion was held on improving libraries for inference with function calling/tool support, with Hermes Function Calling GitHub repository mentioned as a notable resource.

- New Model Release Excitement: Nous Research has released Hermes 2 Pro, stirring excitement in the community for its potential in function calling and structured outputs in applications like llama.cpp and vercel AI SDK; DSPy optimization has also been a recent topic of interest.

Links mentioned:

- Tweet from Cake (@ILiedAboutCake): lol the Amazon “search through reviews” is blindly just running an AI model now AI has ruined using the internet

- princeton-nlp/SWE-Llama-7b · Hugging Face: no description found

- Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM: We investigate efficient methods for training Large Language Models (LLMs) to possess capabilities in multiple specialized domains, such as coding, math reasoning and world knowledge. Our method, name...

- DiscoResearch/DiscoLM_German_7b_v1 · Hugging Face: no description found

- NousResearch/Nous-Hermes-2-Mistral-7B-DPO · Hugging Face: no description found

- Hugging Face – The AI community building the future.: no description found

- Tweet from Jack Burlinson (@jfbrly): In case you were wondering just how cracked the team @cognition_labs is... This was the CEO (@ScottWu46) 14 years ago. ↘️ Quoting Cognition (@cognition_labs) Today we're excited to introduce ...

- Bh187 Austin Powers GIF - Bh187 Austin Powers I Love You - Discover & Share GIFs: Click to view the GIF

- GitHub - NousResearch/Hermes-Function-Calling: Contribute to NousResearch/Hermes-Function-Calling development by creating an account on GitHub.

- GitHub - NousResearch/Hermes-Function-Calling: Contribute to NousResearch/Hermes-Function-Calling development by creating an account on GitHub.

- Gemma optimizations for finetuning and infernece · Issue #29616 · huggingface/transformers: System Info Latest transformers version, most platforms. Who can help? @ArthurZucker and @younesbelkada Information The official example scripts My own modified scripts Tasks An officially supporte...

- Gemma bug fixes - Approx GELU, Layernorms, Sqrt(hd) by danielhanchen · Pull Request #29402 · huggingface/transformers: Just a few more Gemma fixes :) Currently checking for more as well! Related PR: #29285, which showed RoPE must be done in float32 and not float16, causing positional encodings to lose accuracy. @Ar...

- OpenAI Tools / function calling v2 by FlorianJoncour · Pull Request #3237 · vllm-project/vllm: This PR follows #2488 The implementation has been updated to use the new guided generation. If during a query, the user sets tool_choice to auto, the server will use the template system used in #24...

- Guidance: no description found

- OpenAI Compatible Web Server - llama-cpp-python: no description found

Nous Research AI ▷ #ask-about-llms (107 messages🔥🔥):

- Discussing the Nuances of Model Licensing: Members inquired about the commercial usability of models like nous Hermes 2 under Apache 2 and MIT licenses derived from GPT-4. Clarifications were given stating, "do what you want you wont hear anything from us (we wont be suing you lol)", and the complexities of TOS enforcement and content sharing were highlighted.

- Challenges with Fine-tuning Efficiency and Style Transfer: Conversation touched on the inefficiency of fine-tuning on a small subset of data for style mimicking. A shift towards style transfer beyond prompt engineering was suggested, emphasizing a focus on role-playing aspects in language models.

- Delving into Function Calling and Structured Outputs: Function calling capabilities and structured JSON outputs in LLMs were thoroughly discussed. For example, the Trelis/Llama-2-7b-chat-hf-function-calling-v2 model sparked debates on its functional calling and JSON mode operations, with insights provided on its method of returning structured JSON arguments.

- Releasing Updates and Understanding Model Capabilities: Anticipation built around Nous Hermes 2 Pro, with a member hinting, "<:cPES_Wink:623401321382281226> maybe it'll be out today <:cPES_Wink:623401321382281226>". Another member stressed the importance of clarity in model versioning to avoid confusion among users, arguing against ambiguous titling such as "Pro" without a clear version number.

- Exploring Integration of Hermes 2 Pro with Ollama: Questions arose on how to integrate the upcoming Nous Hermes 2 Pro with Ollama. A response pointed out that Ollama can support specific GGUFs, skipping the need for model quantization, and shared a guide at ollama/docs/import.md on GitHub.

Links mentioned:

- ollama/docs/import.md at main · ollama/ollama: Get up and running with Llama 2, Mistral, Gemma, and other large language models. - ollama/ollama

- Trelis/Llama-2-7b-chat-hf-function-calling-v2 · Hugging Face: no description found

- GitHub - NousResearch/Hermes-Function-Calling: Contribute to NousResearch/Hermes-Function-Calling development by creating an account on GitHub.

Latent Space ▷ #ai-general-chat (127 messages🔥🔥):

- Big Splash in AI Software Engineering: Cognition Labs introduces Devin, an autonomous AI software engineer capable of passing engineering interviews and completing real jobs. Devin far surpassed the previous best SWE-Bench benchmark, resolving a significant 13.86% of GitHub issues. Check out the thread for more on Devin.

- Stealing Weights or Just Hype?: A new paper reveals that it's possible to infer weights from production language models like ChatGPT and PaLM-2 using their APIs. This sparked discussions about the ethics and future security measures for AI models. Find the full post here.

- Google's Gemini Struggles to Impress: Users express frustration with Google's Gemini API, highlighting its complexity and clunky documentation. There's a sentiment that Google is falling behind in the AI API space compared to competitors like OpenAI and Anthropic.

- Together.ai Bolsters Compute for AI Startups: Together.ai announces a $106M raise to build a platform for running generative AI apps at scale and introduces Sequoia, a method to serve large LLMs efficiently. Learn more about their vision.

- Cerebras Unveils Groundbreaking AI Chip: Cerebras announces the CS-3, the fastest AI chip capable of training models up to 24 trillion parameters on a single device. This development is a significant leap in AI hardware innovation. Discover the CS-3.

Links mentioned:

- Stealing Part of a Production Language Model: no description found

- Bloomberg - Are you a robot?: no description found

- Using LangSmith to Support Fine-tuning: Summary We created a guide for fine-tuning and evaluating LLMs using LangSmith for dataset management and evaluation. We did this both with an open source LLM on CoLab and HuggingFace for model train...

- Tweet from Cognition (@cognition_labs): Today we're excited to introduce Devin, the first AI software engineer. Devin is the new state-of-the-art on the SWE-Bench coding benchmark, has successfully passed practical engineering intervie...

- Tweet from Ate-a-Pi (@8teAPi): Sora WSJ Interview Mira Murati gives the most detail to date on Sora > Joanna Stern gave several prompts for them to generate > First time I've seen Sora videos with serious morphing prob...

- 🌎 The Compute Fund: Reliably access the best GPUs you need at competitive rates in exchange for equity.

- Tweet from Ate-a-Pi (@8teAPi): Sora WSJ Interview Mira Murati gives the most detail to date on Sora > Joanna Stern gave several prompts for them to generate > First time I've seen Sora videos with serious morphing prob...

- Tweet from Patrick Collison (@patrickc): These aren't just cherrypicked demos. Devin is, in my experience, very impressive in practice. ↘️ Quoting Cognition (@cognition_labs) Today we're excited to introduce Devin, the first AI so...

- Tweet from Together AI (@togethercompute): Excited to announce our new speculative decoding method, Sequoia! Sequoia scales speculative decoding to very large speculation budgets, is robust to different decoding configurations, and can adap...

- Tweet from Cerebras (@CerebrasSystems): 📣ANNOUNCING THE FASTEST AI CHIP ON EARTH📣 Cerebras proudly announces CS-3: the fastest AI accelerator in the world. The CS-3 can train up to 24 trillion parameter models on a single device. The wo...

- Tweet from Figure (@Figure_robot): With OpenAI, Figure 01 can now have full conversations with people -OpenAI models provide high-level visual and language intelligence -Figure neural networks deliver fast, low-level, dexterous robot ...

- Tweet from Cognition (@cognition_labs): Devin builds a custom chrome extension ↘️ Quoting Arun Shroff (@arunshroff) @cognition_labs This looks awesome! Would love to get access! I used ChatGPT recently to create a Chrome extension to f...

- Tweet from Chief AI Officer (@chiefaioffice): VC-backed AI employee startups are a trend. Here are some that raised in 2024 + total funding: Software Engineer - Cognition ($21M+) Software Engineer - Magic ($145M+) Product Manager - Version Le...

- Tweet from Andrej Karpathy (@karpathy): # automating software engineering In my mind, automating software engineering will look similar to automating driving. E.g. in self-driving the progression of increasing autonomy and higher abstracti...

- Tweet from Together AI (@togethercompute): Today we are thrilled to share that we’ve raised $106M in a new round led by @SalesforceVC with participation from @coatuemgmt and our existing investors. Our vision is to rapidly bring innovations f...

- Tweet from Akshat Bubna (@akshat_b): The first time I tried Devin, it: - navigated to the @modal_labs docs page I gave it - learned how to install - handed control to me to authenticate - spun up a ComfyUI deployment - interacted with i...

- Tweet from Aravind Srinivas (@AravSrinivas): This is the first demo of any agent, leave alone coding, that seems to cross the threshold of what is human level and works reliably. It also tells us what is possible by combining LLMs and tree searc...

- Tweet from Ashlee Vance (@ashleevance): Scoop: a start-up called Cognition AI has released what appears to be the most capable coding assisstant yet. Instead of just autocompleting tasks, it can write entire programs on its own. Is backed...

- Tweet from swyx (@swyx): hope this works 🕯 🕯 🕯 🕯 @elonmusk 🕯 open source 🕯 @xAI Grok 🕯 on 🕯...

- Tweet from Together AI (@togethercompute): Today we are thrilled to share that we’ve raised $106M in a new round led by @SalesforceVC with participation from @coatuemgmt and our existing investors. Our vision is to rapidly bring innovations f...

- 4,000,000,000,000 Transistors, One Giant Chip (Cerebras WSE-3): The only company with a chip as big as your head, Cerebras has a unique value proposition when it comes to AI silicon. Today they are announcing their third ...

- Tweet from muhtasham (@Muhtasham9): DeepMind folks can now steal weights behind APIs “We also recover the exact hidden dimension size of the gpt-3.5-turbo model, and estimate it would cost under $2,000 in queries to recover the entire...

- Tweet from Anthropic (@AnthropicAI): With state-of-the-art vision capabilities and strong performance on industry benchmarks across reasoning, math, and coding, Haiku is a versatile solution for a wide range of enterprise applications.

- Tweet from asura (@stimfilled): @qtnx_ 3) dateLastCrawled: 2023-09

- Tweet from Mckay Wrigley (@mckaywrigley): I’m blown away by Devin. Watch me use it for 27min. It’s insane. The era of AI agents has begun.

- Tweet from Anthropic (@AnthropicAI): Today we're releasing Claude 3 Haiku, the fastest and most affordable model in its intelligence class. Haiku is now available in the API and on http://claude.ai for Claude Pro subscribers.

- Tweet from Siqi Chen (@blader): this is the ceo of cognition 14 years ago the idea that 10x/100x engineers don’t exist is such a cope

- Tweet from James O'Leary (@jpohhhh): Google Gemini integration began 15 minutes ago, this is a cope thread - There's an API called "Gemini API" that is free until we start charging for it early next year (it is mid-March) - ...

- Tweet from James O'Leary (@jpohhhh): Google Gemini integration began 15 minutes ago, this is a cope thread - There's an API called "Gemini API" that is free until we start charging for it early next year (it is mid-March) - ...

- Tweet from Neal Wu (@WuNeal): Today I can finally share Devin, the first AI software engineer, built by our team at @cognition_labs. Devin is capable of building apps end to end, finding bugs in production codebases, and even fine...

- Tweet from Lucas Atkins (@LucasAtkins7): Tonight, I am releasing eight Gemma fine tunes and a beta of their combined mixture of experts model named GemMoE. GemMoE has ALL Gemma bug fixes built-in. You do not have to do anything extra to ge...

- Tweet from Fred Ehrsam (@FEhrsam): First time I have seen an AI take a complex task, break it down into steps, complete it, and show a human every step along the way - to a point where it can fully take a task off a human's plate. ...

- The First AI Virus Is Here!: ❤️ Check out Weights & Biases and sign up for a free demo here: https://wandb.me/papers📝 The paper "ComPromptMized: Unleashing Zero-click Worms that Target ...

- Tweet from Varun Shenoy (@varunshenoy_): Devin is 𝘪𝘯𝘤𝘳𝘦𝘥𝘪𝘣𝘭𝘦 at data extraction. Over the past few weeks, I've been scraping data from different blogs and Devin 1. writes the scraper to navigate the website 2. executes the cod...

- Tweet from Andrew Kean Gao (@itsandrewgao): i never believe recorded demos so I reached out to the @cognition_labs team for early access to try for myself and got it! will be sharing my unfiltered opinions on #devin here. 🧵🧵 1/n ↘️ Quotin...

- [AINews] The world's first fully autonomous AI Engineer: AI News for 3/11/2024-3/12/2024. We checked 364 Twitters and 21 Discords (336 channels, and 3499 messages) for you. Estimated reading time saved (at 200wpm):...

- Tweet from simp 4 satoshi (@iamgingertrash): Finally, excited to launch Truffle-1 — a $1299 inference engine designed to run OSS models using just 60 watts https://preorder.itsalltruffles.com

- Amazon announces Rufus, a new generative AI-powered conversational shopping experience: With Rufus, customers are now able to shop alongside a generative AI-powered expert that knows Amazon’s selection inside and out, and can bring it all together with information from across the web to ...

- Perspective – A space for you: A private journal to build a complete record of your life.

- Add support for Gemini API · Issue #441 · jxnl/instructor: The new Gemini api introduced support for function calling. You define a set of functions with their expected arguments and you pass them in the tools argument. Can we add gemini support to instruc...

Latent Space ▷ #ai-announcements (7 messages):

- Invitation to LLM Paper Club Event: A reminder was posted for the Latent Space Discord community about the upcoming LLM Paper Club event featuring a presentation on Synthetic Data for Finetuning at 12pm PT. Participants are encouraged to read Eugene Yan's survey on synthetic data for background information.

- Important: Accept your Luma Invites: Members are urged to accept their invitations on Luma (https://lu.ma/wefvz0sb) to avoid being pruned from auto-invites for future calendar reminders due to inactivity.

- Correction to Synthetic Data Article Link: The previous link to the synthetic data article was corrected as it contained an extra period causing a 404 error. The updated event cover can be found here.

- Picocreator Highlights Close Call with Pruning: In a light-hearted comment, picocreator mentioned the community's narrowly-avoided mass pruning from the event reminders, facetiously celebrating their collective close call.

- Swyxio Emphasizes Consistency of Event Schedule: Swyxio pointed out that the LLM Paper Club has been consistently scheduled at the same time for the past 6 months, implying that regular members should already know the timing.

Links mentioned:

- LLM Paper Club (Synthetic Data for Finetuning) · Luma: This week we'll be covering the survey post - How to Generate and Use Synthetic Data for Finetuning (https://eugeneyan.com/writing/synthetic/) with @eugeneyan We have moved to use the...

- How to Generate and Use Synthetic Data for Finetuning: Overcoming the bottleneck of human annotations in instruction-tuning, preference-tuning, and pretraining.

Latent Space ▷ #llm-paper-club-west (207 messages🔥🔥):

- The Senpai of Synthetic Data: Eugene Yan discussed the viability of using synthetic data for model training in various aspects, including pretraining and instruction-tuning. He highlighted that synthetic data is quicker and more cost-effective to generate, avoiding privacy issues while providing quality and diversity that can exceed human annotation.

- Voice Recognition: A Rabbit Hole to Explore: Links were shared to a Twitter thread diving into voice recognition and topics related to text-to-speech and speech-to-text. The discussion also included various applications and tools like vapi.ai and whisper.

- The Potential of Whisper for Speech Processing: Amid the interest towards voice technologies, whisper.cpp was mentioned as a nice tool for speech recognition, and a request was made for covering the Open Source SOTA for diarization.

- Revisiting Classic Papers: Eugene Yan commended the importance of revisiting old papers on synthetic data, suggesting that "synthetic data is almost all you need" and emphasizing self-reward as a significant concept.

- Invitation for Community Engagement: The community was encouraged to participate in covering papers, with an open invitation for anyone in the audience to delve into the papers and contribute to the discussions.

Links mentioned:

- Why Not Both Take Both GIF - Why Not Both Why Not Take Both - Discover & Share GIFs: Click to view the GIF

- Join Slido: Enter #code to vote and ask questions: Participate in a live poll, quiz or Q&A. No login required.

- AI News: We summarize AI discords + top Twitter accounts, and send you a roundup each day! See archive for examples. "Highest-leverage 45 mins I spend everyday" - Soumith "the best AI newslette...

- Fine-tuning vs RAG: Listen to this episode from Practical AI: Machine Learning, Data Science on Spotify. In this episode we welcome back our good friend Demetrios from the MLOps Community to discuss fine-tuning vs. retri...

- How to Generate and Use Synthetic Data for Finetuning: Overcoming the bottleneck of human annotations in instruction-tuning, preference-tuning, and pretraining.

- dspy/docs/api/optimizers/BootstrapFinetune.md at 0c1d1b1b2c9b5d6dc6d565a84bfd8f17c273669d · stanfordnlp/dspy: DSPy: The framework for programming—not prompting—foundation models - stanfordnlp/dspy

- Forget ChatGPT and Gemini — Claude 3 is the most human-like chatbot I've ever used: It isn't AGI but it is getting closer

- 🦅 Eagle 7B : Soaring past Transformers with 1 Trillion Tokens Across 100+ Languages (RWKV-v5): A brand new era for the RWKV-v5 architecture and linear transformer's has arrived - with the strongest multi-lingual model in open source today

Perplexity AI ▷ #general (311 messages🔥🔥):

- Perplexity AI Utilizes Claude 3 Opus: Although several AIs were discussed, it was clarified that Perplexity AI uses Claude3 Opus. Users inquired about tools to prevent plagiarism detection, though there's skepticism about the effectiveness of current tools in detecting AI-generated content.

- Concerns About AI Plagiarism Tools: Users discussed the limitations of existing plagiarism tools, with some arguing that no reliable method exists to detect AI-generated text. Discussions included the potential need for breakthroughs in AIs to address this.

- Debate on Search Engines and Indexers: There was a lively debate about whether Perplexity AI uses other indexers, with members ultimately clarifying that Perplexity has its own indexer, contributing to its speed. Additionally, the discussions touched on Google's past performance and the future of search engines in the age of AI.

- Boost in Productivity with AI: Multiple users reported significant increases in productivity thanks to tools like Perplexity AI and Notebook LLM. They shared how AI aids in research and information gathering, despite limitations like the experimental nature of some tools restricting the upload of multiple documents.

- Confusion Over Perplexity AI Offerings: Users shared confusion over the available models within Perplexity AI, such as the types of Claude models and the number of uses allowed for each. It was clarified by one user that Claude 3 Opus has 5 uses, after which it switches to Claude 3 Sonnet.

Links mentioned:

- MSN: no description found

- MSN: no description found

- Perplexity brings Yelp data to its chatbot: Yelp cut a deal with the AI search engine.

- U.S. Must Act Quickly to Avoid Risks From AI, Report Says : The U.S. government must move “decisively” to avert an “extinction-level threat" to humanity from AI, says a government-commissioned report

- Further Adventures in Plotly Sankey Diagrams: The adventure continues

- There are growing calls for Google CEO Sundar Pichai to step down: Analysts believe Google's search business is keeping it safe for now, but that could change soon with generative-AI rivals proliferating.

- Perplexity AI CEO Shares How Google Retained An Employee He Wanted To Hire: Aravind Srinivas, the CEO of search engine Perplexity AI, recently shared an interesting incident that sheds light on how big tech companies are ready to shell a great amount of money to retain talent...

- New Rabbit R1 demo promises a world without apps – and a lot more talking to your tech: Chat with your bot

- Tweet from Aravind Srinivas (@AravSrinivas): Will make Perplexity Pro free, if Mikhail makes Microsoft Copilot free ↘️ Quoting Ded (@dened21) @AravSrinivas @MParakhin We want perplexity pro for free (monetize with highly personalized ads)

- Open AI JUST LEAKED GPT 4.5 ?!! (GPT 4.5 Update Explained): ✉️ Join My Weekly Newsletter - https://mailchi.mp/6cff54ad7e2e/theaigrid🐤 Follow Me on Twitter https://twitter.com/TheAiGrid🌐 Checkout My website - https:/...

- More than an OpenAI Wrapper: Perplexity Pivots to Open Source: Perplexity CEO Aravind Srinivas is a big Larry Page fan. However, he thinks he's found a way to compete not only with Google search, but with OpenAI's GPT too.

- Killed by Google: Killed by Google is the open source list of dead Google products, services, and devices. It serves as a tribute and memorial of beloved services and products killed by Google.

- I Believe In People Sundar Pichai GIF - I Believe In People Sundar Pichai Youtube - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- CEO says he tried to hire an AI researcher from Meta, and was told to 'come back to me when you have 10,000 H100 GPUs': The CEO of an AI startup said he wasn't able to hire a Meta researcher because it didn't have enough GPUs.

- Fireside Chat with Aravind Srinivas, CEO of Perplexity AI, & Matt Turck, Partner at FirstMark: Today we're joined by Aravind Srinivas, CEO of Perplexity AI, a chatbot-style AI conversational engine that directly answers users' questions with sources an...

- GitHub - danielmiessler/fabric: fabric is an open-source framework for augmenting humans using AI. It provides a modular framework for solving specific problems using a crowdsourced set of AI prompts that can be used anywhere.: fabric is an open-source framework for augmenting humans using AI. It provides a modular framework for solving specific problems using a crowdsourced set of AI prompts that can be used anywhere. - ...

Perplexity AI ▷ #sharing (12 messages🔥):

- Sharing Perplexity.ai Search Queries: Members are sharing direct links to Perplexity AI search results covering various topics such as improvement strategies, sleep advice, the meaning of Catch-22, and information on OpenAI Chat GPT.

- Exploring AI and Tech via YouTube: A member shared a YouTube video exploring various AI news, including a controversy between Midjourney and Stability AI, and the introduction of "Digital Marilyn."

- Inquiries Into Medical Terminology: Queries on medical terms are also made, with a link provided to understanding azotemia.

- Evaluating Images and Business Metrics with AI: Links to searches that utilize AI to describe an image and explain the concept of net promoter score were shared.

- Remembering Paul Alexander: A member commemorates Paul Alexander by sharing a search link about his passing, honoring his achievements, and calling him an "absolute chad."

Links mentioned:

Midjourney bans Stability staff, Marilyn Monroe AI Debut, Vision Pro aids spine surgery: This episode explores the latest AI news, including a heated data scraping controversy between Midjourney and Stability AI, the innovative "Digital Marilyn" ...

Perplexity AI ▷ #pplx-api (16 messages🔥):

- Pondering on Prompt Perfection: A member emphasized that achieving concise answers from models can be obtained through prompting adjustments and parameter settings like max_tokens and temperature.

- Remembering Through Embeddings: It was suggested that for a chatbot model to "remember conversations" using the Perplexity API, it would require an external database to store embeddings of past conversations.

- Seeking Source Specifics: A query was raised about whether the Perplexity API can be prompted to reply with just the website or source of the information it references.

- Request for High-Context Models: A user inquired if Yarn-Mistral-7b-128k could be added for higher context use cases.

- Accuracy Anxieties and Searches:

- Concerns about result accuracy when using the -online tag were voiced, specifically regarding non-existent studies and incorrect authorship attribution. One method to possibly enhance accuracy involves setting a system message to provide clarity and instructions to the model separate from the user query. A link to Perplexity's API documentation was shared for experimentation with system messages.

Links mentioned:

Chat Completions: no description found

Unsloth AI (Daniel Han) ▷ #general (224 messages🔥🔥):

- Kernel Improvements Shared: An improvement was discussed for a rope kernel where sin/cos functions are loaded once for computations along axis 1 for efficiency. Sequential computation is used for grouping heads, and a Pull Request (PR) was mentioned to have been made with some apprehension about its impact on overall training performance.

- Unsloth Discussion and Contributions: Unsloth's performance and usage were highlighted, with Unsloth Studio (Beta) mentioned as an upcoming feature allowing one-click finetuning. Issues surrounding importing FastLanguageModel on Kaggle were addressed, suggesting manual reinstallation of certain libraries.

- Grok AI Speculations and OpenAI Dialogue: Conversations touched on the significance of Elon Musk releasing an open-source Grok AI model and the recent OpenAI events. Debate arose over the impact of real-time Twitter data feed integration with the model's performance.