[AINews] Creating a LLM-as-a-Judge

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Critique Shadowing is all you need.

AI News for 10/29/2024-10/30/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (231 channels, and 2558 messages) for you. Estimated reading time saved (at 200wpm): 241 minutes. You can now tag @smol_ai for AINews discussions!

On a day when Anthropic (Claude 3.5 SWEBench+SWEAgent details), OpenAI (SimpleQA), DeepMind (NotebookLM) and Apple (M4 Macbooks) and a mysterious new SOTA image model (Recraft v3) have releases, it is rare to focus on news from a smaller name, but we love news you can use.

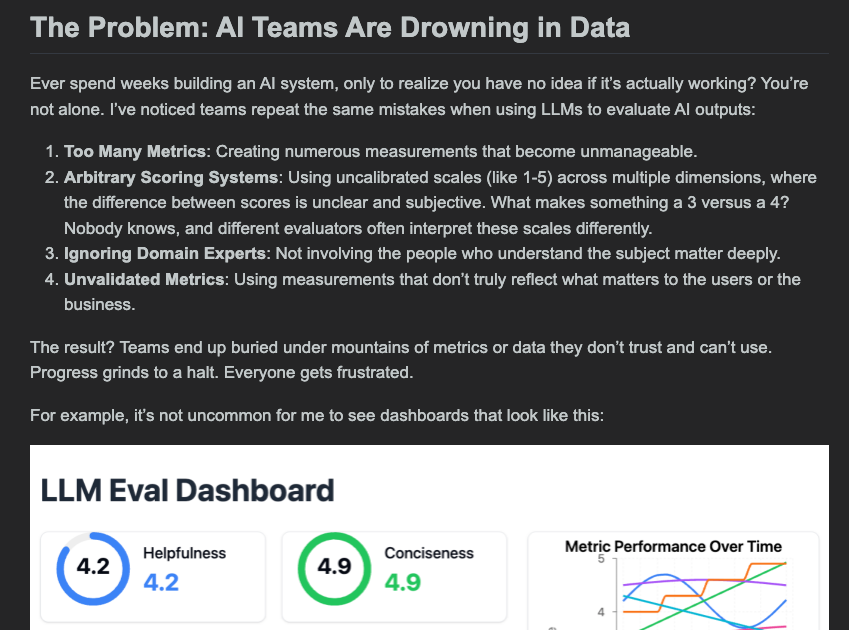

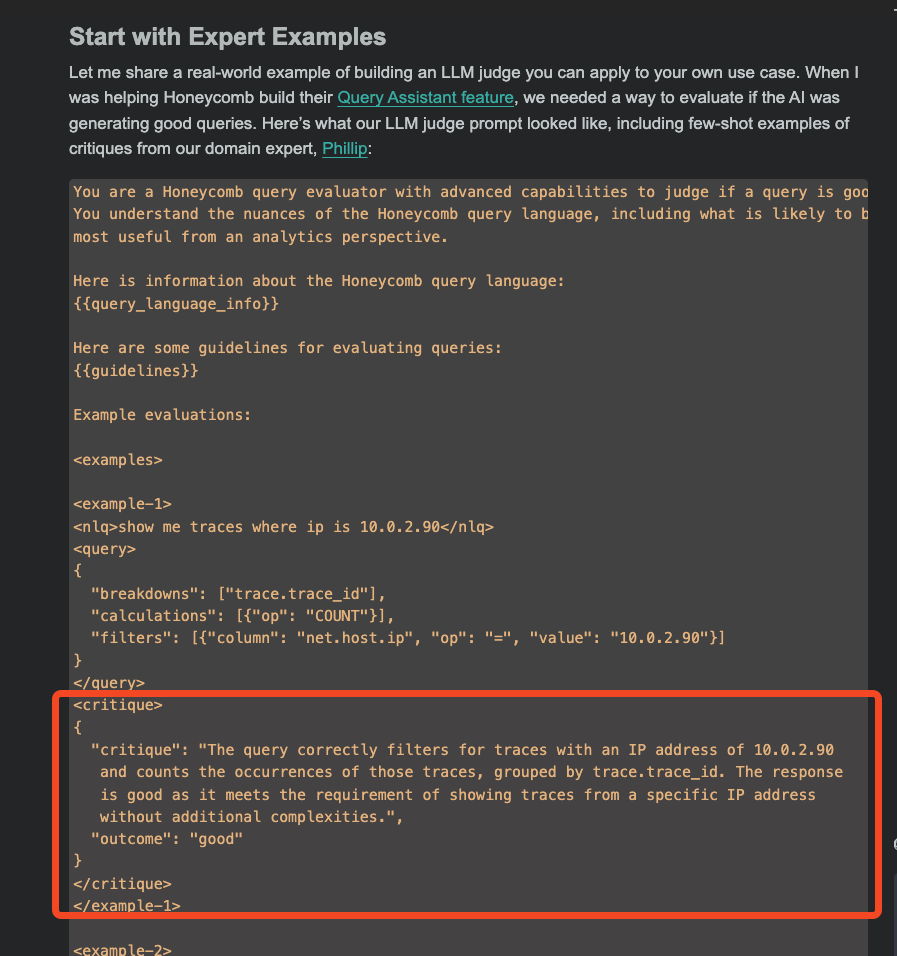

After his hit Your AI Product Needs Evals (our coverage here), Hamel Husain is back with an epic 6,000 word treatise on Creating a LLM-as-a-Judge That Drives Business Results, with a clear problem statement: AI teams have too much data they don't trust and don't use.

There are a lot of standard themes echoed in Hamel's AI.Engineer talk (as well as the very fun Weights & Biases one), but this piece is notable for its strong recommendation of critique shadowing as to create few-shot examples for LLM judges to align with domain experts:

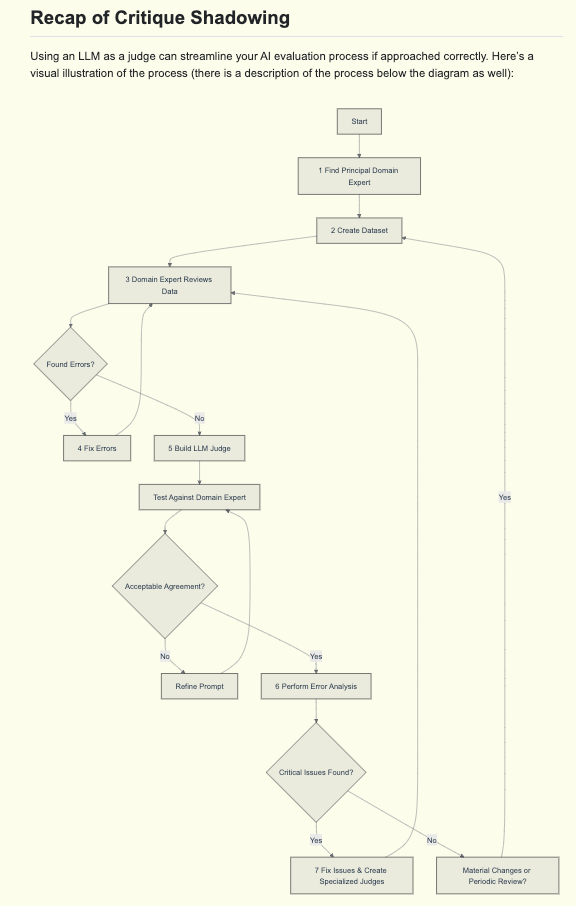

Critique Shadowing TLDR:

- Find Principal Domain Expert

- Create A Dataset

- Generate diverse examples covering your use cases

- Include real or synthetic user interactions

- Domain Expert Reviews Data

- Expert makes pass/fail judgments

- Expert writes detailed critiques explaining their reasoning

- Fix Errors (if found)

- Address any issues discovered during review

- Return to expert review to verify fixes

- Go back to step 3 if errors are found

- Build LLM Judge

- Create prompt using expert examples

- Test against expert judgments

- Refine prompt until agreement is satisfactory

- Perform Error Analysis

- Calculate error rates across different dimensions

- Identify patterns and root causes

- Fix errors and go back to step 3 if needed

- Create specialized judges as needed

The final workflow looks like this:

Handy, and, as Hamel mentions in the article, this is our critique-and-domain-expert-heavy iterative process for building AINews as well!

[Sponsored by Zep] Why do AI agents need a memory layer, anyway? Well, including the full interaction history in prompts leads to hallucinations, poor recall, and costly LLM calls. Plus, most RAG pipelines struggle with temporal data, where facts change over time. Zep is a new service that tackles these problems using a unique structure called a temporal knowledge graph. Get up and running in minutes with the quickstart.

swyx's commentary: the docs for the 4 memory APIs of Zep also helped me better understand the scope of what Zep does/doesn't do and helped give a better mental model of what a chatbot memory API should look like agnostic of Zep. Worthwhile!

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

GitHub Copilot and AI Integration

- Claude integration: @AnthropicAI announced that Claude 3.5 Sonnet is now available on GitHub Copilot, with access rolling out to all Copilot Chat users and organizations over the coming weeks. @alexalbert__ echoed this announcement, highlighting the availability in Visual Studio Code and GitHub.

- Perplexity AI partnership: @perplexity_ai shared their excitement about partnering with GitHub, detailing features like staying updated on library updates, finding answers to questions, and accessing API integration assistance within the GitHub Copilot platform.

- Multiple model support: @rohanpaul_ai noted that Gemini 1.5 Pro is also available in GitHub Copilot, alongside Claude 3.5 Sonnet and OpenAI's o1-preview. This multi-model support represents a significant shift in GitHub Copilot's offerings.

- Impact on development: @rohanpaul_ai highlighted a statistic that "more than 25% of all new code at Google is now generated by AI", suggesting a significant impact of AI on software development practices.

AI Advancements and Research

- Layer Skip technology: @AIatMeta announced the release of inference code and fine-tuned checkpoints for Layer Skip, an end-to-end solution for accelerating LLMs by executing a subset of layers and using subsequent layers for verification and correction.

- Small Language Models: @rohanpaul_ai shared a survey paper on Small Language Models, indicating ongoing research interest in more efficient AI models.

- Mixture-of-Experts (MoE) research: @rohanpaul_ai discussed a paper revealing that MoE architectures trade reasoning power for memory efficiency in LLM architectures, with more experts not necessarily making LLMs smarter, but better at memorizing.

AI Applications and Tools

- Perplexity Sports: @AravSrinivas announced the launch of Perplexity Sports, starting with NFL widgets for game summaries, stats, and player/team comparisons, with plans to expand to other sports.

- AI in media production: @c_valenzuelab shared a lengthy thread about Runway's vision for AI in media and entertainment, describing AI as a tool for storytelling and predicting a shift towards interactive, generative, and personalized content.

- Open-source developments: @AIatMeta released inference code and fine-tuned checkpoints for Layer Skip, an acceleration technique for LLMs, on Hugging Face.

Programming Languages and Tools

- Python's popularity: @svpino noted that Python is now the #1 programming language on GitHub, surpassing JavaScript.

- GitHub statistics: @rohanpaul_ai shared insights from the Octoverse 2024 report, including a 98% YoY growth in AI projects and a 92% spike in Jupyter Notebook usage.

Memes and Humor

- @willdepue joked about AGI being achieved internally, saying "proof agi has been achieved internally. we did it joe".

- @Teknium1 quipped "Japan AI companies are brutal lol", likely in reference to some humorous or aggressive AI-related development in Japan.

- @nearcyan made a joke about the "80IQ play" of investing in NVIDIA due to ChatGPT's popularity, reflecting on the massive returns such an investment would have yielded.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Apple's M4 Mac Mini: A New Contender for AI Development

- Mac Mini looks compelling now... Cheaper than a 5090 and near double the VRAM... (Score: 49, Comments: 18): The post suggests that the Mac Mini with the M4 chip could be a more attractive option for AI workloads compared to high-end GPUs like the hypothetical 5090. The author highlights that the Mac Mini is potentially cheaper and offers nearly twice the VRAM, making it a compelling choice for AI tasks that require substantial memory.

- New M4 / Pro Mac Minis discuss (Score: 40, Comments: 58): The post discusses speculation about potential M4 / Pro Mac Mini models for AI tasks. While no specific specs or pricing information is provided, the title suggests interest in the capabilities and cost of future Mac Mini iterations optimized for artificial intelligence applications.

- Speculation on M4 Mac Mini pricing: Base model with 32GB RAM estimated at $1000, while 64GB version at $2000. A user claimed base model with 16GB could be $499 with education discount.

- Discussion on memory bandwidth and performance: The M4 is estimated to have 260 GB/s bandwidth, potentially achieving 6-7 tokens/s with Qwen 72B 4-bit MLX. Some users debated the trade-offs between Mac Minis and GPUs like 3090s for AI tasks.

- Comparisons with Nvidia GPUs: Users discussed how Mac Minis with high RAM could compete with expensive GPUs like the 4090. However, others noted that while Macs offer more RAM, GPUs still provide significantly faster processing speeds for AI tasks.

Theme 2. Stable Diffusion 3.5 Medium Released on Hugging Face

- Stable Diffusion 3.5 Medium · Hugging Face (Score: 68, Comments: 31): Stable Diffusion 3.5 Medium, a new text-to-image model, has been released on Hugging Face. This model boasts improved capabilities in text rendering, multi-subject generation, and compositional understanding, while also featuring enhanced image quality and reduced artifacts compared to previous versions. The model is available for commercial use under the OpenRAIL-M license, with a 768x768 default resolution and support for various inference methods including txt2img, img2img, and inpainting.

- Users inquired about hardware requirements for self-hosting the model. According to the blog, it requires 10GB of VRAM, with GPUs ranging from 3090 to H100 recommended for "32GB or greater" setups.

- Discussion arose about the possibility of running the model with smaller quantizations, similar to LLMs. Users speculated that this would likely be attempted by the community.

- When asked about comparisons to Flux Dev, one user simply responded "badly," suggesting that Stable Diffusion 3.5 Medium may not perform as well as Flux Dev in certain aspects.

Theme 3. AI Safety and Alignment: Debates and Criticisms

- Apple Intelligence's Prompt Templates in MacOS 15.1 (Score: 293, Comments: 67): Apple's MacOS 15.1 introduces AI prompt templates and safety measures as part of its Apple Intelligence feature. The system includes built-in prompts for various tasks such as summarizing text, explaining concepts, and generating ideas, with a focus on maintaining user privacy and data security. Apple's approach emphasizes on-device processing and incorporates content filtering to prevent the generation of harmful or inappropriate content.

- Users humorously critiqued Apple's prompt engineering, with jokes about "begging" for proper JSON output and debates on YAML vs. JSON efficiency. The discussion highlighted the importance of minifying JSON for token savings.

- The community expressed skepticism about Apple's approach to preventing hallucinations and factual inaccuracies, with one user sharing a GitHub gist containing metadata.json files from Apple's asset folders.

- Discussions touched on the potential use of a 30 billion parameter model (v5.0-30b) and critiqued the inclusion of specific sports like diving and hiking in event options, speculating on possible management influence.

- The dangerous risks of “AI Safety” (Score: 47, Comments: 62): The post discusses potential risks of AI alignment efforts, linking to an article that suggests alignment technology could be misused to serve malicious interests rather than humanity as a whole. The author argues this is already happening, noting that current API-based AI systems often enforce stricter rules than Western democratic laws, sometimes aligning more closely with extremist ideologies like the Taliban in certain areas, while local models are less affected but still problematic.

- Users noted AI's inconsistent content restrictions, with some pointing out that API-based AI often prohibits content readily available on primetime television. There's significant demand for NSFW content, despite AI companies' anti-NSFW stance.

- Commenters discussed the potential for AI alignment to be used as a tool for censorship and control. Some argued this is already happening, with AI being ideologically aligned to its creators and used to exert power over users.

- Several users expressed concerns about corporate anxiety and sensitivity driving AI restrictions, potentially leading to the suppression of free speech. Some advocated for widespread AI access to balance power between citizens and governments/corporations.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Research and Techniques

- Geometric structure in LLM-learned concepts: A paper shared on Twitter reveals surprising geometric structures in concepts learned by large language models, including brain-like "lobes" and precise "semantic crystals".

- AI-generated code at Google: Google CEO Sundar Pichai stated that more than 25% of new code at Google is now generated by AI. Some commenters speculate this likely includes auto-complete suggestions and other assistance tools.

- ARC-AGI benchmark progress: MindsAI achieved a new high score of 54.5% on the ARC-AGI benchmark, up from 53% just 6 days prior. The prize goal is 85%.

AI Applications and Impacts

- AI in education: A study found students with AI tutors learned more than twice as much in less time compared to traditional in-class instruction. Some commenters noted AI could provide more personalized, interactive learning.

- Digital fruit replication: A plum became the first fruit to be fully digitized and reprinted with its scent, without human intervention.

- AI in software development: Starting today, developers can select Claude 3.5 Sonnet in Visual Studio Code and Github Copilot. Gemini is also officially coming to Github Copilot.

AI Model Releases and Improvements

- Stable Diffusion 3.5 improvements: A workflow combining SD 3.5 Large, Medium, and upscaling techniques produced high-quality image results, showcasing advancements in image generation capabilities.

AI Industry and Business

- OpenAI revenue sources: OpenAI's CFO reported that 75% of the company's revenue comes from paying consumers, rather than business customers. This sparked discussion about OpenAI's business model and profitability timeline.

AI Ethics and Societal Impact

- Linus Torvalds on AI hype: Linux creator Linus Torvalds stated that AI is "90% marketing and 10% reality". This sparked debate about the current state and future potential of AI technology.

Memes and Humor

- A humorous image depicting preparation for "AI wars" using anime-style characters generated discussion about the potential militarization of AI and its cultural impact.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1: Apple's M4 Chips Supercharge AI Performance

- LM Studio Steals the Show on Apple's New M4 MacBook Pro: At the recent Apple event, LM Studio showcased its capabilities on the new MacBook Pro powered by M4 chips, highlighting its impact on AI applications.

- Rumors Fly as M4 Ultra Aims to Outshine NVIDIA's 4090 GPUs: The upcoming M4 Ultra is rumored to support 256GB of unified memory, potentially outperforming the M2 Ultra and rivaling high-end GPUs.

- M3 Max Impresses Engineers with 60 Tokens Per Second: The M3 Max chip reportedly runs models like Phi 3.5 MoE at around 60 tokens per second, showcasing its efficiency even in lower-end configurations.

Theme 2: AI Models Stir Up the Community with Updates and Controversies

- Haiku 3.5 Release Imminent, AI Enthusiasts Buzz with Excitement: The community eagerly anticipates the release of Haiku 3.5, with hints it could happen soon, sparking curiosity about its potential improvements.

- Gemini Leaves Competitors in the Dust, Coders Rejoice: Users praise Gemini for its prowess in handling database coding tasks, outperforming models like Claude and Aider in practical applications.

- Users Mock Microsoft's Overcautious Phi-3.5 Model: Phi-3.5's excessive censorship led to humorous mockery, with users sharing satirical responses highlighting the model's reluctance to answer simple questions.

Theme 3: Fine-Tuning and Training Hurdles Challenge AI Developers

- Unsloth Team Uncovers Gradient Glitches, Shakes Training Foundations: The Unsloth team revealed critical issues with gradient accumulation in training frameworks, affecting language model consistency.

- LoRA Fine-Tuning Hits a Wall on H100 GPUs, Engineers Frustrated: Users struggle with LoRA finetuning on H100 GPUs, noting QLoRA might be the only workaround due to unresolved BitsAndBytes issues.

- Quantization Mishaps Turn Outputs into Gibberish: During Llama 3.2 1B QLoRA training, users experienced incoherent outputs when applying Int8DynActInt4WeightQuantizer, highlighting challenges in quantization processes.

Theme 4: AI Disrupts Software Engineering and Automation Tools Flourish

- AI Gobbles Up Software Engineer Jobs, Developers Panic: Members note that AI is increasingly taking over regular software engineering tasks, sparking debates about the future of tech employment.

- Skyvern Automates Browsers, Manual Tasks Meet Their Match: Skyvern introduces a no-code solution for browser automation, enabling users to streamline workflows without writing code.

- ThunderKittens Unleash New Features with a Side of Humor: The ThunderKittens team released new features like exciting kernels and talking models, sprinkled with playful mentions of adorable kittens.

Theme 5: OpenAI Tackles Factuality and Enhances User Experience

- OpenAI Fights Hallucinations with New SimpleQA Benchmark: Introducing SimpleQA, OpenAI aims to measure the factual accuracy of language models with 4,000 diverse questions, targeting the hallucination problem.

- ChatGPT Finally Lets You Search Chat History, Users Rejoice: OpenAI rolled out the ability to search through chat history on the ChatGPT web app, making it easier to reference or continue past conversations.

- AGI Debate Heats Up as Optimists and Skeptics Clash: Members express mixed opinions on the timeline and feasibility of achieving AGI, debating whether companies like Google can keep pace amid challenges.

PART 1: High level Discord summaries

LM Studio Discord

-

Apple's MacBook Pro Features LM Studio: During the recent Apple event, LM Studio showcased its capabilities on the new MacBook Pro powered by the M4 family of chips, significantly recognizing its impact in commercial applications.

- Members expressed excitement for the developers, noting that this acknowledgment could influence future integration within AI workflows.

- M3 Max Impresses with Token Speed: The M3 Max reportedly runs models like Phi 3.5 MoE at around 60 tokens per second, underscoring its efficiency even in lower-end configurations.

- While this is impressive, some users suggested that for peak speed, a dedicated GPU like the A6000 might yield better results.

- H100 GPU Rentals Become Affordable: Users mentioned that H100 rentals are now available for approximately $1 per hour, making them a cost-effective option for model inference.

- Despite the drop in pricing, discussions emerged regarding the practicality of using high-powered GPUs versus local models for various tasks.

- M4 Ultra Rumored Specs Daunt Competitors: The upcoming M4 Ultra is rumored to support 256GB of unified memory, with expectations to significantly outperform the M2 Ultra.

- Speculations abound regarding the M4 rivaling 4090 GPUs, with users buzzing about enhanced performance metrics.

- Windows vs. Linux Performance Clash: Frustration with Windows surfaced, highlighting its limitations in AI tasks compared to Linux, which provides greater efficiency and control.

- Members agreed that Linux can optimize GPU utilization better, especially when running computational-intensive applications.

HuggingFace Discord

-

Hugging Face API facilitates token probabilities: A user confirmed that obtaining token probabilities via the Hugging Face serverless inference API for large language models is possible, especially using the inference client.

- Discussions also touched on rate limits and API usage, which was further elaborated with a detailed link on Rate Limits.

- Ollama offers privacy in image analysis: Concerns about Ollama accessing local files during image analysis were addressed, highlighting that it operates locally without server interactions.

- This ensures user privacy while analyzing images effectively.

- Choosing the right path in Machine Learning: A participant emphasized selecting a major that encompasses a wide knowledge of data science rather than just AI, reflecting on the importance of math and programming skills.

- Further discussions centered on the foundational aspects necessary for careers in this field.

- Qwen 2 model suffers from erroneous token generation: Issues with the Qwen 2 base model have been reported, particularly regarding unexpected tokens at the end of outputs due to EOS token misrecognition.

- This reflects broader concerns about the model's context length handling.

- Langchain SQL agent struggles with GPT-4: A transition from GPT-3.5 Turbo to GPT-4 with the Langchain SQL agent returned mixed results, with the latter posing difficulties.

- Concerns over API decommissioning prompted discussions on alternative environments.

Unsloth AI (Daniel Han) Discord

-

Unsloth team uncovers Gradient Issues: The Unsloth team released findings on gradient accumulation issues in training frameworks affecting language model output consistency.

- Their report suggests alternatives to traditional batch sizes due to significant impacts on loss calculations.

- Apple Launches Compact New Mac Mini: Apple's announcement of the new Mac mini features M4 and M4 Pro chips, boasting impressive 1.8x CPU performance increases.

- This release marks Apple's first carbon-neutral Mac, embodying a significant milestone in their product line.

- ThunderKittens Bring New Features: The ThunderKittens team published a blog post unveiling new features, highlighting exciting kernels and talking models.

- They included playful mentions of social media reactions and extra adorable kittens to enhance community engagement.

- Instruct Fine-Tuning Challenges: A user faced tensor shape mismatch errors with the Meta Llama3.1 8B Instruct model while attempting fine-tuning.

- Frustrations mounted as they switched models but continued to struggle with merging and loading, pointing to compatibility concerns.

- Unsloth’s Efforts on VRAM Efficiency: Unsloth announced a pretraining method achieving 2x faster training and consuming 50% less VRAM, coupled with a free Colab notebook for Mistral v0.3 7b.

- Users were advised on fine-tuning embeddings and adjusting learning rates to stabilize training.

OpenAI Discord

-

Mixed Opinions on AGI Race: Members expressed differing views on the timeline and feasibility of achieving AGI, particularly concerning Google's challenges with regulations hampering their progress.

- Concerns about Google’s hurdles contrasted with optimism around emerging algorithms boosting advancement.

- Model Efficiency Debate Rages On: The community discussed how larger models aren't always superior, pointing out the role of quantization in achieving efficiency without sacrificing performance, with mentions of Llama 3.0 and Qwen models.

- Recent quantized models were cited as outperforming their larger counterparts, underscoring the shift in focus to effective model usage.

- Nvidia GPU Sufficiency Under Fire: Debate centered around the adequacy of a 4070 Super GPU for local AI projects, calling attention to the need for higher VRAM options for demanding applications.

- Participants acknowledged both the performance of smaller models and the gap in availability of affordable high-performance GPUs.

- Prompt Generation Tools in Demand: Users sought access to a prompt generation tool in the OpenAI Playground to better tailor their requests, referencing the official prompt generation guide.

- The discussion led to a consensus on its importance for refining prompt strategies.

- Organized Data Crucial for Chatbots: When developing personal chatbots, a focus on maintaining organized and concise data was highlighted to avoid extraneous API call fees, as input tokens for irrelevant data still incur costs.

- One member pointed out that proper data management is not just a best practice, but a critical financial consideration in API usage.

Perplexity AI Discord

-

Perplexity Supply Launches New Essentials: Perplexity Supply has launched a range of thoughtful essentials designed for curious minds, allowing customers to spark conversations through their products.

- Global shipping is now available to countries like the US, Australia, and Germany, with updates accessible via this link.

- File Upload Issues Frustrate Users: Several users have reported issues with the file upload feature, highlighting problems with lingering files during discussions.

- One user pointed out that the file handling capabilities are subpar compared to other platforms.

- Exploration of Playground vs API Results: A user raised concerns about discrepancies observed between results from the Playground and the API, despite both using the same model.

- No further clarifications were provided on the reasons behind these inconsistencies.

- Earth's Temporary New Moon Sparks Discussions: A recent discussion highlighted Earth's temporary new moon, detailing its visibility and effects view details here.

- This fascinating find led to dynamic conversations around temporary celestial phenomena.

- Clarifying API Use for Perplexity Spaces: It was clarified that there is currently no API available for Perplexity Spaces, with the website and API functioning as separate entities.

- A user expressed interest in using the Perplexity API for development projects but received no specific guidance.

aider (Paul Gauthier) Discord

-

Aider enhances file management with commands: Aider introduces

/saveand/loadcommands for easy context management, simplifying batch processing and file handling.- This feature eliminates the hassle of manually recreating code contexts, making workflows more efficient.

- Anticipated Haiku 3.5 release stirs excitement: Ongoing discussions suggest the Haiku 3.5 release could happen soon, potentially as early as tomorrow.

- Users are eager to learn about its enhancements over previous versions, hoping for significant improvements.

- Qodo AI vs Cline sparks comparison debate: A discussion surfaces regarding how Qodo AI differentiates itself from competitors like Cline, especially in usability and features.

- Despite a starting subscription of $19/month, concerns about limited features dampen enthusiasm about Qodo's position in the market.

- Skyvern automates browser tasks with AI: Skyvern aims to streamline browser automation as a no-code solution, delivering efficiency for repetitive workflows.

- Its adaptability across web pages allows users to execute complex tasks with straightforward commands.

- Users weigh in on Gemini's coding effectiveness: Feedback highlights Gemini's proficiency in handling database-related coding tasks compared to Claude and Aider.

- Consensus reveals Gemini’s advantages for practical coding needs, yet performance can fluctuate based on context.

OpenRouter (Alex Atallah) Discord

-

Oauth Authentication Breaks, Fix Incoming: Apps utilizing openrouter.ai/auth faced issues this morning due to an Oauth problem, but a fix is expected shortly after the announcement.

- The team confirmed that downtime for API key creation would be minimal, reassuring affected users.

- Alpha Testers Wanted for macOS Chat App: A developer seeks alpha testers for a flexible chat app for macOS, with screenshots available for review.

- Interested parties are encouraged to DM for more info, underscoring the importance of user feedback during testing.

- Security Concerns Surround OpenRouter API Keys: Users are worried about the vulnerability of OpenRouter API keys, especially regarding misuse in proxy setups like Sonnet 3.5.

- A community member warned, 'Just because you think the key is secure doesn't mean it is secure,' emphasizing the importance of key management.

- Eager Anticipation for Haiku 3.5 Release: The community buzzes with excitement over the expected release of Haiku 3.5, with a model slug shared as

claude-3-5-haiku@20241022.

- Despite the model being on allow lists and not generally available yet, hints suggest a release might happen within a day.

- Request for Access to Integration Features: Users are clamoring for access to the integration feature, emphasizing its importance for testing various capabilities.

- Responses like 'I would like to rerequest integration feature!' indicated a strong demand for this functionality.

Stability.ai (Stable Diffusion) Discord

-

GPU Price Debate Heats Up: Members analyzed that used 3090 cards cost less than 7900 XTX models, stressing the budget vs performance trade-off.

- eBay prices hover around ~$690, leading to tough choices in GPU selection for cost-conscious engineers.

- Training for Custom Styles: A member asked about training models on a friend's art style with 15-20 images, debating between a model or Lora/ti.

- Others suggested using a Lora for better character consistency based on specific stylistic preferences.

- Grey Image Troubles in Stable Diffusion: Multiple users reported encountering grey images in Stable Diffusion and sought troubleshooting advice.

- Members recommended trying different UI options and checking compatibility with AMD GPUs to improve outputs.

- UI Showdown: Auto1111 vs Comfy UI: Comfy UI emerged as the popular choice for its user-friendliness, while some still prefer Auto1111 for automation.

- Suggestions also included trying SwarmUI for its easy installation and functionality.

- Buzz Around Upcoming AI Models: The community speculated about the potential popularity of SD 3.5 compared to SDXL, driving discussions on performance.

- Anticipation grows for new control nets and model updates that are crucial in keeping pace with AI advancements.

Nous Research AI Discord

-

Microsoft De-Risks from OpenAI Dependency: Discussion arose about Microsoft's strategy to de-risk from OpenAI, particularly if OpenAI declares AGI, which could provide Microsoft a contractual out and a chance to renegotiate.

- “Microsoft will NEVER let that happen,” expressed skepticism about the potential AGI release.

- AI Latency Issues Ignite Concerns: Notable issues surfaced regarding a reported 20-second latency with an AI model, with members humorously suggesting it might be run on a potato.

- Comparisons were made with Lambda's performance, which serves 10x more requests with only 1s latency.

- Hermes 3 Performance Surprises Users: Members discussed that Hermes 3 8B surprisingly rivals GPT-3.5 quality, outperforming other sub-10B models.

- Critiques were directed at models like Mistral 7B, described as sad by comparison.

- Spanish Function Calling Datasets Needed: A member seeks to build function calling datasets in Spanish, facing challenges with poor results from open-source models, notably using data from López Obrador's conferences.

- Their goal is to process info from over a thousand videos, targeting journalistic relevance.

- Sundar Pichai Highlights AI's Role at Google: During an earnings call, Sundar Pichai stated that over 25% of new code at Google is AI-generated, prompting discussions on the impact of AI on coding.

- This statistic, shared widely, has led to conversations about evolving coding practices.

Eleuther Discord

-

Multiple Instances on a Single GPU: Members discussed running multiple instances of GPT-NeoX on the same GPU, aiming to maximize memory usage with larger batch sizes, although benefits from DDP may be limited.

- The ongoing conversation highlighted potential configurations and considerations for parallel training.

- RAG with CSV Data: A member questioned the efficacy of using raw CSV data for RAG with a ~3B LLM, indicating plans to convert it to JSON after facing challenges with case number discrepancies.

- This move implies preprocessing complexities that could impact RAG performance.

- Entity Extraction Temperature Tuning: After recognizing incorrect temperature settings during entity extraction, a member re-attempted with corrected parameters to enhance results.

- This highlights the significance of tuning model parameters for effective performance.

- Modular Duality and Optimizations in LLMs: A recent paper revealed that methods like maximal update parameterization and Shampoo serve as partial approximations to a single duality map for linear layers.

- This connection reinforces the theoretical basis of contemporary optimization techniques discussed in the paper.

- Challenges of Diffusion Models: Discussion emerged on how diffusion models present unique limitations compared to GANs and autoregressive models, especially regarding training and quality metrics.

- Members pinpointed issues around controllability and representation learning, stressing their implications on model applicability.

Interconnects (Nathan Lambert) Discord

-

Elon Musk in talks to boost xAI valuation: Elon is negotiating a new funding round aimed at raising xAI's valuation from $24 billion to $40 billion, according to the WSJ. Despite discussions, Elon continuously denies prior fundraising rumors, leading to community unease about xAI's direction.

- xAI kind of scares me, expressed a member, reflecting broader concerns within the community.

- Uncovering the Claude 3 Tokenizer: A recent post highlights the Claude 3 tokenizer's closed nature, revealing limited accessible information. Users have to rely on billed services instead of open documentation, causing frustration.

- The post underscores significant barriers for developers looking to leverage Claude 3 effectively.

- AI2 to Relocate to New Office by Water: AI2 is set to open a new office in June next year, offering beautiful views of the Pacific Northwest. Members expressed excitement for the relocation, citing the delightful scenery as a perk.

- This shift promises to foster a more inspiring work environment for the AI2 team.

- Staggering Prices for MacBook Pro: Members reacted to the exorbitant prices of high-spec MacBook Pros, with configurations like 128GB RAM + 4TB SSD costing around 8k EUR. The discussion highlighted bewilderment at the pricing's implications across different regions.

- Comments reflected on how currency fluctuations and taxes could complicate purchases for engineers seeking cutting-edge hardware.

- Voiceover enhances personal articles: A member advocated for voiceover as a more engaging medium for tomorrow's personal article. They expressed satisfaction with voiceover content, signaling a shift in how written material could be delivered.

- This suggests a trend towards integrating audio elements for enhanced user experience and accessibility.

Notebook LM Discord Discord

-

NotebookLM Usability Study Invitation: NotebookLM UXR is inviting users to participate in a 30-minute remote usability study focused on Audio Overviews, offering a $50 gift for selected participants.

- Participants need high-speed Internet, a Gmail account, and functional video/audio equipment for these sessions, which will continue until the end of 2024.

- Simli Avatars Enhance Podcasts: A member showcased how Simli overlays real-time avatars by syncing audio segments through diarization from .wav files, paving the way for future feature integration.

- This proof of concept opens up exciting possibilities for enhancing user engagement in podcasts.

- Pictory's Role in Podcast Video Creation: Users are exploring Pictory for converting podcasts into video format, with discussions on how to integrate speakers’ faces effectively.

- Another member mentioned that Hedra can facilitate this by allowing the upload of split audio tracks for character visualization.

- Podcast Generation Limitations: Users reported challenges in generating Spanish podcasts after initial success, leading to questions about the feature's status.

- One user expressed frustration, noting, 'It worked pretty well for about two days. Then, stopped producing in Spanish.'

- Voice Splitting Techniques Discussion: Participants discussed the use of Descript for efficiently isolating individual speakers in podcasts, leveraging automatic segmentation capabilities noted during the Deep Dive.

- One user remarked, 'I have noticed that sometimes the Deep Dive divides itself into episodes,' showcasing the platform's potential for simplifying podcast production.

GPU MODE Discord

-

AI Challenges Software Engineer Roles: A member noted that AI is increasingly taking over regular software engineer jobs, indicating a shifting job landscape.

- Concerns were raised about the implications of this trend on employment opportunities in the tech industry.

- Growing Interest in Deep Tech: A member expressed a strong desire to engage in deep tech innovations, reflecting curiosity about advanced technologies.

- This highlights a trend towards deeper engagement in technology beyond surface-level applications.

- FSDP2 API Deprecation Warning: A user highlighted a FutureWarning concerning the deprecation of

torch.distributed._composable.fully_shard, urging a switch to FSDP instead, detailed in this issue.

- This raised questions regarding the fully_shard API's ongoing relevance following insights from the torch titan paper.

- Memory Profiling in Rust Applications: A member sought advice on memory profiling a Rust application using torchscript to identify potential memory leak issues.

- They specifically wanted to debug issues involving custom CUDA kernels.

- ThunderKittens Talk Scheduled: Plans for a talk on ThunderKittens discussed features and community feedback, with gratitude expressed for coordination efforts.

- The engagement promises to strengthen community bonds around the project.

Torchtune Discord

-

Llama 3.2 QLoRA Training Issues: During the Llama 3.2 1B QLoRA training, users achieved QAT success but faced incoherent generations with Int8DynActInt4WeightQuantizer.

- Concerns were raised that QAT adjustments might be insufficient, potentially causing quantization problems.

- Quantization Layers Create Confusion: Generated text incoherence post-quantization was attributed to incorrect configurations in QAT training and quantization layers.

- Users shared code snippets illustrating misconfigurations with torchtune and torchao versions.

- Activation Checkpointing Slows Down Saves: Participants questioned the default setting of activation checkpointing as false, noting substantial slowdowns in checkpoint saves for Llama 3.2.

- It was clarified that this overhead isn’t necessary for smaller models, as it incurs additional costs.

- Dynamic Cache Resizing to Improve Efficiency: Proposals for a dynamic resizing feature for the kv cache would tailor memory allocation efficiently based on actual needs.

- This change is expected to enhance performance by reducing unnecessary memory use, particularly during extended generations.

- Multi-query Attention's Role in Cache Efficiency: The implementation of multi-query attention aims to save kv-cache storage, as noted in discussions about PyTorch 2.5 enhancements.

- Group query attention support is seen as a strategic advancement, easing manual kv expansion needs in upcoming implementations.

Cohere Discord

-

Dr. Vyas on SOAP Optimizer Approaches: Dr. Nikhil Vyas, a Post Doc at Harvard, is set to discuss the SOAP Optimizer in an upcoming event. Tune in on the Discord Event for insights.

- This provides an opportunity for deeper understanding of optimization techniques relevant to AI models.

- Command R Model Faces AI Detection Issues: Users reported that the Command R model consistently outputs text that is 90-95% AI detectable, sparking frustration among paid users.

- Creativity is inherent in AI, suggesting an underlying limitation related to the training data distribution.

- Concerns on Invite and Application Responses: Members are actively inquiring about the status of their invites and common response times for applications, expressing worries over extended delays.

- There appears to be a lack of clarity regarding potential rejection criteria, indicating a need for improved communication.

- Embed V3 vs Legacy Models Debate: Discussion highlights comparisons between Embed V3, ColPali, and JINA CLIP, focusing on evolving comparative methodologies beyond older embeddings.

- Members are interested in how integrating JSON structured outputs could enhance functionality, particularly for search capabilities.

- Seeking Help on Account Issues: For account or service problems, users are advised to reach out directly to support@cohere.com for assistance.

- One proactive member expressed eagerness to assist others experiencing similar issues.

Latent Space Discord

-

Browserbase bags $21M for web automation: Browserbase announced they have raised a $21 million Series A round, co-led by Kleiner Perkins and CRV, to help AI startups automate the web at scale. Read more about their ambitious plans in this tweet.

- What will you 🅱️uild? highlights their goal of future development making it easier for startups to engage with web automation.

- ChatGPT finally allows chat history search: OpenAI has rolled out the ability to search through chat history on the ChatGPT web application, allowing users to quickly reference or continue past conversations. This feature enhances user experience by making it simpler to access previous chats.

- OpenAI announced this update in a tweet, emphasizing streamlined interaction with the platform.

- Hamel Husain warns on LLM evaluation traps: A guide by Hamel Husain outlines common mistakes with LLM judges, such as using too many metrics and overlooking domain experts' insights. He stresses the importance of validated measurements for more accurate evaluations.

- His guide can be found in this tweet, advocating for focused evaluation strategies.

- OpenAI's Realtime API springs new features: OpenAI's Realtime API now incorporates five new expressive voices for improved speech-to-speech applications and has introduced significant pricing cuts thanks to prompt caching. This means a 50% discount on cached text inputs and 80% off cached audio inputs.

- The new pricing model promotes more economical use of the API and was detailed in their update tweet.

- SimpleQA aims to combat hallucinations in AI: The new SimpleQA benchmark has been launched by OpenAI, consisting of 4k diverse questions for measuring the factual accuracy of language models. This initiative directly addresses the hallucination problem prevalent in AI outputs.

- OpenAI's announcement underscores the need for reliable evaluation standards in AI deployment.

tinygrad (George Hotz) Discord

-

Tinycorp's Ethos NPU Stance Sparks Debate: Members discussed Tinycorp's unofficial stance on the Ethos NPU, with some suggesting inquiries about hardware specifics and future support.

- One user humorously noted that detailed questions could elicit richer community feedback on the NPU's performance.

- Mastering Long Training Jobs on Tinybox: Strategies for managing long training jobs on Tinybox included the use of tmux and screen for session persistence.

- One member humorously complained about their laziness to switch to a better tool despite its recommendation.

- Qwen2's Unique Building Blocks Shake Things Up: Curiosity grew around Qwen2's unconventional approach to foundational elements like rotary embedding and MLP, with speculation on Alibaba's involvement.

- A user expressed frustration over this collaboration, adding to the community's spirited discussions on dependencies.

- EfficientNet Facing OpenCL Output Issues: A user reported exploding outputs while implementing EfficientNet in C++, prompting calls for debugging tools to help compare buffers.

- Suggestions included methods to access and dump buffers from tinygrad’s implementation for more effective troubleshooting.

- Exporting Models to ONNX: A Hot Topic: Discussion focused on strategies for exporting tinygrad models to ONNX, suggesting existing scripts for optimization on lower-end hardware.

- Debates emerged over the merits of directly exporting models versus alternative bytecode methods for chip deployment.

Modular (Mojo 🔥) Discord

-

Mojo Idioms in Evolution: Members discussed that idiomatic Mojo is still evolving as the language gains new capabilities, leading to emerging best practices.

- This showcases a fluidity in language idioms compared to more entrenched languages like Python.

- Learning Resources Scarcity: A member highlighted struggles in finding resources for learning linear algebra in Mojo, particularly regarding GPU usage and implementation.

- It was suggested that direct communication with project leads on NuMojo and Basalt could help address the limited material available.

- Ambitious C++ Compatibility Goals: Members shared ambitions of achieving 100% compatibility with C++, with discussions centering around Chris Lattner's potential influence.

- One user suggested it would be a complete miracle, reflecting the high stakes and interest surrounding compatibility.

- Syntax Spark Conversations: A proposal to rename 'alias' to 'static' ignited debate on the implications for Mojo's syntax and potential confusion with C++ uses.

- Some members voiced concerns about using static, suggesting it may not accurately represent its intended functionality as it does in C++.

- Exploring Custom Decorators: Plans for implementing custom decorators in Mojo were discussed, viewed as potentially sufficient alongside compile-time execution.

- It was noted that functionalities like SQL query verification may exceed the capabilities of decorators alone.

LlamaIndex Discord

-

Create-Llama App Launches for Rapid Development: The new create-llama tool allows users to set up a LlamaIndex app in minutes with full-stack support for Next.js or Python FastAPI backends, and offers various pre-configured use cases like Agentic RAG.

- This integration facilitates the ingestion of multiple file formats, streamlining the development process significantly.

- Game-Changer Tools from ToolhouseAI: ToolhouseAI provides a suite of high-quality tools that enhance productivity for LlamaIndex agents, noted during a recent hackathon for drastically reducing development time.

- These tools are designed for seamless integration into agents, proving effective in expediting workflows.

- Enhanced Multi-Agent Query Pipelines: A demonstration by a member showcased using LlamaIndex workflows for multi-agent query pipelines, promoting this method as effective for collaboration.

- The demo materials can be accessed here to further explore implementation strategies.

- RAG and Text-to-SQL Integration Insights: An article elaborated on the integration of RAG (Retrieval-Augmented Generation) with Text-to-SQL using LlamaIndex, showcasing an improvement in query handling.

- Users reported a 30% decrease in query response times, emphasizing LlamaIndex's role in improving data retrieval efficiency.

- Enhancements in User Interaction via LlamaIndex: LlamaIndex seeks to simplify how users interact with databases by automating SQL generation from natural language inputs, leading to greater user empowerment.

- The approach has proven effective as users expressed feeling more confident in extracting data, even without deep technical knowledge.

DSPy Discord

-

IReRa Tackles Multi-Label Classification: The paper titled IReRa: In-Context Learning for Extreme Multi-Label Classification proposes Infer–Retrieve–Rank to improve language models' efficiency in multi-label tasks, achieving top results on the HOUSE, TECH, and TECHWOLF benchmarks.

- This underscores the struggle of LMs that lack prior knowledge about classes, presenting a new framework that could enhance overall performance.

- GitHub Repo Linked to IReRa: Members noted a relevant GitHub repo mentioned in the paper's abstract, indicating further insights into the discussed methodologies.

- This could greatly aid in the implementation and understanding of the findings presented in the paper.

- Debating DSPy's Structure Enforcement: A member questioned the need for DSPy to enforce structure when libraries like Outlines could handle structured generation more efficiently.

- Another contributor pointed out DSPy’s structural enforcement since v0.1 is essential for accurate mapping from signatures to prompts, balancing effectiveness with quality.

- Quality vs Structure Showdown: The discussion heated up as skepticism arose around structured outputs potentially lowering output quality, suggesting that constraints could actually enhance results, especially for smaller models.

- This approach could yield great results, particularly for smaller LMs, reflecting varying opinions on quality and adherence to formats.

- Integrating MIPROv2 with DSPy: A member shared insights on utilizing zero-shot MIPROv2 with a Pydantic-first interface for structured outputs, advocating for more integration in DSPy’s optimization processes.

- They expressed a desire for a more integrated and native way to handle structured outputs, indicating possible improvements in workflow.

OpenInterpreter Discord

-

Job Automation Predictions Ignite Workforce Debate: A user predicted that virtual beings will lead to job redundancies, comparing it to a virtual Skynet takeover.

- This sparked a robust discussion about the overall impact of AI on employment and future job landscapes.

- Open Interpreter's Edge Over Claude: A member inquired about how Open Interpreter stands apart from Claude in computer operations.

- Mikebirdtech highlighted the utilization of

interpreter --oswith Claude, underscoring the advantages of being open-source. - Restoration of Chat Profiles Raises Questions: A user sought advice on restoring a chat using a specific profile/model that was previously active.

- Despite using

--conversations, it defaults to the standard model, leaving users looking for solutions. - ChatGPT Chat History Search Feature Rolls Out: OpenAI announced a rollout allowing users to search their chat history on ChatGPT web, enhancing reference convenience.

- This new feature aims to streamline user interactions, improving the overall experience on the platform.

- Major Milestone in Scent Digitization Achieved: A team succeeded in digitizing a summer plum without any human intervention, achieving a significant breakthrough.

- One member expressed their thrill about carrying the plum scent and considered an exclusive fragrance release to fund scientific exploration.

LangChain AI Discord

-

Invoke Function Performance Mystery: Calling the .invoke function of the retriever has baffled users, showcasing a response time of over 120 seconds for the Llama3.1:70b model, compared to 20 seconds locally.

- There are suspicions of a security issue affecting performance, prompting the community to assist in troubleshooting this anomaly.

- FastAPI Routes Execution Performance: FastAPI routes demonstrate impressive performance, consistently executing in under 1 second as confirmed through debugging logs.

- The user confirmed that the sent data is accurate, isolating the responsiveness issue to the invoke function itself.

- Frustration with Hugging Face Documentation: Navigating the documentation for Hugging Face Transformers has been a headache for users aiming to set up a chat/conversational pipeline.

- The difficulty in finding essential guidance within the documentation highlights an area needing improvement for user onboarding.

- Knowledge Nexus AI Launches Community Initiatives: Knowledge Nexus AI (KNAI) announced new initiatives aimed at bridging human knowledge with AI, focusing on a decentralized approach.

- They aim to transform collective knowledge into structured, machine-readable data impactful across healthcare, education, and supply chains.

- OppyDev Introduces Plugin System: OppyDev's plugin system enhances standard AI model outputs using innovative chain-of-thought reasoning to improve response clarity.

- A tutorial video demonstrates the plugin system and showcases practical improvements in AI interactions.

OpenAccess AI Collective (axolotl) Discord

-

LoRA Finetuning Remains Unresolved: A member expressed difficulty in finding a solution for LoRA finetuning on H100 GPUs, suggesting that QLoRA might be the only viable workaround.

- The issue remains persistent as another member confirmed that the BitsAndBytes issue for Hopper 8bit is still open and hasn't been resolved.

- Quantization Challenges Persist: The discussion highlighted ongoing challenges with quantization-related issues, particularly in the context of BitsAndBytes for Hopper 8bit.

- Despite efforts, it appears that no definitive solution has been established regarding these technical problems.

LAION Discord

-

Clamping Values Essential for Image Decoding: A member highlighted that failing to clamp values to [0,1] before converting decoded images to uint8 can lead to out-of-range values wrapping, impacting image quality.

- Unexpected results in image appearance could stem from neglecting this critical step in the preprocessing chain.

- Flaws Potentially Lurking in Decoding Workflow: Concerns were raised about possible flaws in the decoding workflow, which might affect overall image processing reliability.

- Further discussions are needed to thoroughly identify these issues and bolster the robustness of the workflow.

- New arXiv Paper on Image Processes: A member shared a link to a new paper on arXiv, titled Research Paper on Decoding Techniques, available here.

- This paper could provide valuable insights or methodologies relevant to ongoing discussions in image decoding.

LLM Agents (Berkeley MOOC) Discord

-

LLM Agents Quizzes Location Revealed: A member inquired about the location of the weekly quizzes for the LLM Agents course, and received a prompt response with a link to the quizzes, stating, 'here you can find all the quizzes.'

- These quizzes are critical for tracking progress in the course and are accessible through the provided link.

- Get Ready for the LLM Agents Hackathon!: Participants learned about the upcoming LLM Agents Hackathon, and were provided a link for hackathon details to sign up and join the coding fray.

- This event provides a great opportunity for participants to showcase their skills and collaborate on innovative projects.

- Easy Course Sign-Up Process: Instructions were shared on how to enroll in the course via a Google Form, with participants encouraged to fill in this form to join.

- This straightforward sign-up process aims to boost enrollment and get more engineers involved in the program.

- Join the Vibrant Course Discussion on Discord: Details were provided on joining discussions in the MOOC channel at LLM Agents Discord, fostering community engagement.

- Participants can utilize this platform to ask questions and share insights throughout the duration of the course.

Mozilla AI Discord

-

Transformer Labs showcases local RAG on LLMs: Transformer Labs is hosting an event to demonstrate training, tuning, and evaluating RAG on LLMs with a user-friendly UI installable locally.

- The no-code approach promises to make this event accessible for engineers of all skill levels.

- Lumigator tool presented in tech talk: Engineers will give an in-depth presentation on Lumigator, an open-source tool crafted to assist in selecting optimal LLMs tailored to specific needs.

- This tool aims to expedite the decision-making process for engineers when choosing their large language models.

Gorilla LLM (Berkeley Function Calling) Discord

-

Llama-3.1-8B-Instruct (FC) Falters Against Prompting: A member raised that Llama-3.1-8B-Instruct (FC) is underperforming compared to Llama-3.1-8B-Instruct (Prompting), questioning the expected results for function calling tasks.

- Is there a reason for this discrepancy? indicates a concern around performance expectations based on the model's intended functionality.

- Expectations for Function Calling Mechanics: Another participant expressed disappointment, believing that the FC variant should outperform others given its design focus.

- This led to discussions on whether the current results are surprising or hint at potential architectural issues within the model.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!