[AINews] Cohere Command R+, Anthropic Claude Tool Use, OpenAI Finetuning

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 4/3/2024-4/4/2024. We checked 5 subreddits and 364 Twitters and 26 Discords (385 channels, and 5656 messages) for you. Estimated reading time saved (at 200wpm): 639 minutes.

Busy day today.

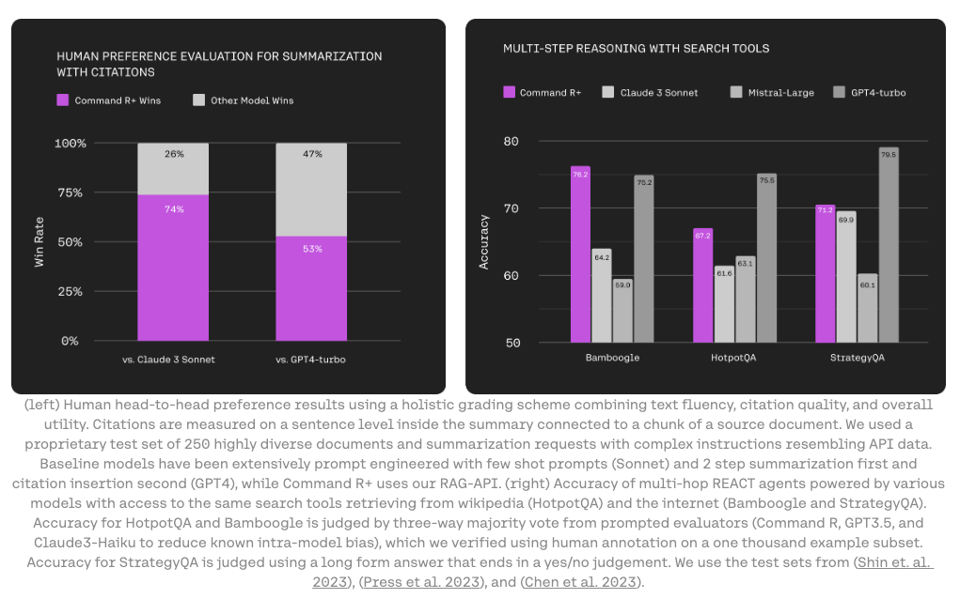

- The at least $500m richer Cohere launched a fast-follow of last month's Command R with Command R+ (official blog, weights). It's a 104B dense model with 128k context length focused on RAG, tool-use, and multilingual ("10 key languages")) usecases. Open weights for research but Aidan says "just reach out" if you want to license it (instead of paying their $3/$15 per mtok pricing). It now supports Multi-Step Tool use.

-

The $2.75B richer Anthropic launched tool use in beta as previously promised (official docs). The extensive docs come with a number of notable features, most notably advertising the ability to handle over 250 tools which enables a very different function calling architecture than before. This is presumably due to context length and recall improvements in the past year. For more details see their 3 new cookbooks:

-

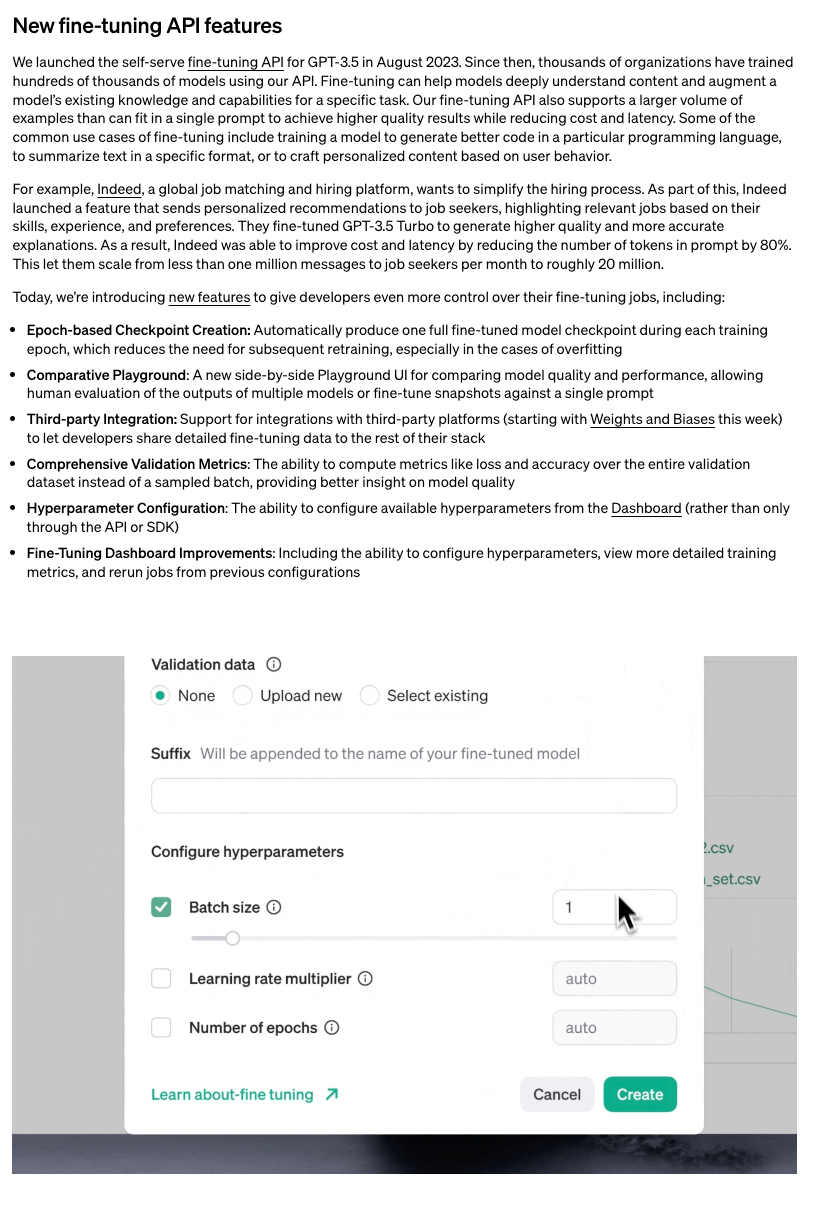

OpenAI, which hasn't raised anything in the last month (that we know of), added a bunch of very welcome upgrades to the very MVPish finetuning experience together with 3 case studies with Indeed, SK Telecom, and Harvey that basically say "you can now DIY better but also we are open for business to finetune and train your stuff".

Table of Contents

- AI Reddit Recap

- AI Twitter Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- OpenAI Discord

- Unsloth AI (Daniel Han) Discord

- Latent Space Discord

- Nous Research AI Discord

- Modular (Mojo 🔥) Discord

- LM Studio Discord

- Eleuther Discord

- LAION Discord

- OpenAccess AI Collective (axolotl) Discord

- LlamaIndex Discord

- HuggingFace Discord

- tinygrad (George Hotz) Discord

- OpenRouter (Alex Atallah) Discord

- OpenInterpreter Discord

- Interconnects (Nathan Lambert) Discord

- Mozilla AI Discord

- LangChain AI Discord

- CUDA MODE Discord

- Datasette - LLM (@SimonW) Discord

- DiscoResearch Discord

- Skunkworks AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling still not implemented but coming soon.

AI Technology Advancements

- Quantum Computing Breakthrough: In /r/singularity, Microsoft has achieved a quantum computing breakthrough, improving error rates by 800x with the most usable qubits to date, a significant step forward in quantum computing capabilities.

- Stable Audio 2.0 Release: In /r/StableDiffusion, Stability AI introduced Stable Audio 2.0, advancing audio generation capabilities with improved quality and control.

- Browser Integration of Large Language Models: In /r/LocalLLaMA, Opera browser has added support for running large language models like Meta's Llama, Google's Gemma, and Vicuna locally, making them more accessible.

Model Capabilities & Comparisons

- Gemini's Large Context Window: In /r/ProgrammerHumor, an image highlights that Gemini's context window is much larger than other models, enabling more contextual understanding.

- GPT-3.5-Turbo Model Size Analysis: In /r/LocalLLaMA, analysis suggests GPT-3.5-Turbo is likely an 8x7B model, similar in size to Mixtral-8x7B.

- Claude 3 vs ChatGPT Battle Simulation: In /r/LocalLLaMA, a video compares Claude 3 vs ChatGPT in a "Street Fighter" style battle using local 7B models like Mistral and Gemma.

AI Research & Education

- Stanford Transformers Course Opens to Public: In /r/StableDiffusion, Stanford's CS 25 Transformers Course is opening to the public, featuring top researchers discussing breakthroughs in architectures, applications, and more.

- Stock Prediction Research Challenges: In /r/MachineLearning, a discussion explores why stock prediction research papers often don't translate to real-world production use.

- Retrieval-Augmented Generation Debate: In /r/MachineLearning, a debate arises on whether Retrieval-Augmented Generation (RAG) is just glorified prompt engineering.

AI Tools & Applications

- GPT-4-Vision for Online Mimicry: In /r/singularity, a video demonstrates using GPT-4-Vision to mimic oneself in emails or any site with one click.

- Automatic Video Highlight Detection: In /r/singularity, a tool is showcased for finding highlights in long-form video automatically with custom search terms.

- Daz3D AI-Powered Image Generation: In /r/StableDiffusion, Daz3D partners with Stability AI to launch Daz AI Studio for stylized image generation from text.

AI Memes & Humor

- Gemini Context Window Meme: In /r/ProgrammerHumor, a humorous image depicts "Gemini's context window is much larger than anyone else's".

- Super Metroid Parody Trailer: In /r/singularity, a parody movie trailer for Super Metroid was created with Dalle3 and GPT.

- Bedroom QR Code Meme: In /r/singularity, a bedroom QR code meme image was shared.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Cohere Command R+ Release

- New open-source model: @cohere released Command R+, a 104B parameter model with 128k context length, open weights for non-commercial use, and strong multilingual and RAG capabilities. It's available on the Cohere playground and Hugging Face.

- Optimized for RAG workflows: Command R+ is optimized for RAG, with multi-hop capabilities to break down complex questions and strong tool use. It's integrated with @LangChainAI for building RAG applications.

- Multilingual support: Command R+ has strong performance across 10 languages including English, French, Spanish, Italian, German, Portuguese, Japanese, Korean, Arabic, and Chinese. @JayAlammar notes that the tokenizer is more efficient for Arabic and other non-English languages, requiring fewer tokens and leading to cost savings.

- Pricing and Availability: @cohere noted Command R+ leads the scalable market category, enabling businesses to move to production. It is available on Microsoft Azure and coming to other cloud providers soon. @JayAlammar added it takes RAG to a new level with multi-hop capabilities.

- LangChain Integration: @hwchase17 and @LangChainAI announced a

langchain-coherepackage to expose integrations like chat models and model-specific agents. @cohere is excited about the integration for adaptive RAG. - Hugging Face and Performance: @osanseviero noted it is available on Hugging Face with a playground link. @seb_ruder highlighted the multilingual capabilities in 10 languages. @JayAlammar mentioned tokenizer optimizations for languages like Arabic to reduce costs.

- Fine-tuning and Efficiency: @awnihannun showed fine-tuning Command R+ with QLoRA in MLX on an M2 Ultra. @_philschmid provided a summary of the 104B model with open weights, RAG and tool use, and multilingual support.

DALL-E 3 Inpainting Release

- New Feature: @gdb and @model_mechanic announced that DALL-E 3 inpainting is now live for all ChatGPT Plus subscribers. This allows users to edit and modify parts of an image from text instructions.

- How to Use: @chaseleantj provides a guide - brush over the region to replace, type the prompt describing the change, and do not brush over all the words for best results. There are still some limitations like inability to generate words in blank spaces.

Mixture-of-Depths for Efficient Transformers

- Approach: @arankomatsuzaki shares Google's Mixture-of-Depths approach to dynamically allocate compute in transformer models. It enforces a total compute budget by capping tokens in self-attention/MLP at each layer.

- Benefits: @rohanpaul_ai explains this minimizes compute waste by allocating more to harder-to-predict tokens vs. easier ones like punctuation. Compute expenditure is predictable in total but dynamic and context-sensitive at the token level.

RAG and Agent Developments

- Adaptive RAG techniques: New papers like Adaptive RAG and Corrective-RAG propose dynamically selecting RAG strategies based on query complexity. Implementations are available as LangChain and LlamaIndex cookbooks.

- RAG-powered applications: Examples of RAG-powered apps include Omnivore, an AI-enabled knowledge base, and Elicit's task decomposition architecture for scaling complex reasoning. Connecting RAG with tool use leads to more agentic systems.

Open-Source Models and Frameworks

- Anthropic Jailbreaking: @AlphaSignalAI shared Anthropic's research on "many-shot jailbreaking" which crafts benign dialogues to bypass LLM safety measures. It takes advantage of large context windows to generate normally avoided responses.

- @AssemblyAI introduced Universal-1, a multilingual speech recognition model trained on 12.5M hours of data. It outperforms models like Whisper on accuracy and hallucination rate.

- Open models and datasets: New open models include Yi from 01.AI, Eurus from Tsinghua, Jamba from AI21 Labs, and Universal-1 from AssemblyAI. Large OCR datasets from Hugging Face enable document AI research.

- Efficient inference techniques: BitMat reduces memory usage for quantized models. Mixture-of-Depths dynamically allocates compute in Transformers. HippoAttention and MoE optimizations speed up inference.

- Accessible model deployment: Hugging Face lowered prices for hosted inference, while Koyeb and SkyPilot simplify deploying models on any cloud platform.

Memes and Humor

- An AI-generated video of a sad girl singing the MIT License went viral.

- People speculated about Apple's AI ambitions and joked that AI will replace software engineers.

- There were memes poking fun at AI hype and the limitations of large language models.

AI Discord Recap

A summary of Summaries of Summaries

-

LLM Advancements and Integrations:

- Cohere unveils Command R+, a 104B parameter multilingual LLM optimized for enterprise use with advanced Retrieval Augmented Generation (RAG) and multi-step tool capabilities, sparking interest in its performance compared to other models.

- JetMoE-8B represents an affordable milestone at under $0.1 million cost, surpassing Meta AI's LLaMA2 performance using only 2.2B active parameters.

- Discussions around integrating LLMs like HQQ with gpt-fast, exploring 4/3 bit quantization approaches like the Mixtral-8x7B-Instruct quantized model.

-

Optimizing LLM Inference and Training:

- Mixture-of-Depths (MoD) enables transformers to dynamically allocate compute across sequences, potentially improving efficiency over uniform distribution.

- Visual AutoRegressive (VAR) modeling redefines autoregressive image generation, outperforming diffusion transformers in quality and speed.

- Techniques like BitMat offer efficient 1-bit LLM implementations per "The Era of 1-bit LLMs" paper.

-

LLM Evaluation and Benchmarking:

- New benchmarks evaluate LLM emotional intelligence: Creative Writing EQ-Bench and Judgemark using correlation metrics.

- COMET scores highlight the Facebook WMT21 model's translation prowess, with the highest score of 0.848375.

- Discussions on systematic evaluation practices for AI products, with Hamel Husain's post seen as groundbreaking.

-

Open-Source AI Frameworks and Tools:

- LlamaIndex unveils cookbooks guiding RAG system building with MistralAI, including routing and query decomposition.

- Koyeb enables effortless global scaling of LLM apps by connecting GitHub repos to deploy serverless apps.

- SaladCloud offers a managed container service for AI/ML workloads to avoid high cloud costs.

- The transformer-heads GitHub repo provides tools for extending LLM capabilities by attaching new model heads.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- High-Res with Caution: Practitioners discussed optimal settings for Stable Diffusion Models when upscaling, advocating for 35 steps with specific upscalers and control nets to mitigate image distortion. Higher resolutions, particularly 2k, lead to longer generation times and potential issues, as outlined in Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction.

- AI's Role in the Creative Process: There was a spirited discussion on AI's burgeoning effects on creative industries, specifically pondering its potential to supersede some roles in Hollywood and game development. The group contemplated how AI tools like SDXL could alter job landscapes, possibly raising the entry-level bar for producing quality output.

- Techniques for Targeted Lora Training: To train loras for generating images of specific attire, such as corsets, suggestions were to use diverse angle shots of the item isolated from extraneous details. The aim is to help the AI focus on the core element, thus avoiding introducing unwanted features in the outputs.

- Costs, Investors, and AI Market Dynamics: The guild tackled Stability AI's strategic hurdles—balancing between attracting investments, enriching datasets, and developing fresh models. Dialogue revolved around innovations in dataset monetization strategies for businesses in the face of rising computational costs and fluctuating model research interest.

- Random Banter Is Still Alive: Amid technical talk, members exchanged casual banter, including cultural references and greetings. An off-topic link to a parody song was shared, showcasing the community's lighter side alongside their technical engagements.

Perplexity AI Discord

API Excitement Meets Payment Puzzles: A perplexing payment issue cropped up for a Perplexity API user whose transaction was stuck as "Pending" without updating the account balance. Meanwhile, discussions revolved around the potential of APIs and the choice between a Pro Subscription and pay-as-you-go API, with opinions favoring the subscription for initial business ideation due to cost predictability.

Model Mashing Madness: Users dived into model preferences, favoring a balance between a larger message count and an adequate context window. They also tackled the challenge of model limitations with complex programming languages like Rust and custom "metaprompt" strategies for structured output.

Content Sharing Caveat: A note was made to ensure threads are set to shareable when posting content on Discord, facilitating wider community engagement.

Thirst for Source Links in Sonar Model: Inquiries were made concerning the sonar-medium-online model's ability to return source links with data, but a definitive timeline on the feature's implementation remains elusive.

LLM Leaderboard Quirks and Queries: The LLM Leaderboard sparked an analytical discourse on model rankings with a dash of humor over model name mishaps, pointing to the significance of clarity in system prompts for better AI performance.

OpenAI Discord

- DALL·E Dons a New Creative Cap: DALL·E now boats an Editing Suite for image edits and style inspiration on web, iOS, and Android platforms, offering enhancements to the creative potential across ChatGPT platforms. In tandem, the Fine-tuning API sees an infusion of new dashboards, metrics, and integrations for developers to forge custom models, detailed in a recent blog post.

- AI's Existential Ruminations: Engineers engaged in a blaze of conversations around AI, untangling the question of AI "thinking" with a broad consensus refuting AI consciousness in lieu of complex data pattern executions. The palpable discord in live discussions also touched on correcting the AI vs AGI misconception prevalent amongst the public and ended with a proposition to train LLMs on goal-oriented sequences.

- Customization or Complexity: Within the GPT-4 discussions, engineers wrangled over benefits of Custom GPTs, the utility of DALL·E's new features for image specificity, and questions on data retention policies surfaced—ensuring even deleted chats linger for a month.

- Prelude to Prompt Perfection: Technicians noodled over issues in translating markdown into various languages and recommended using additional context to refine AI's interpretation during AI role play. Strategies for propelling text generation and ensuring document completeness when using LLMs were also broached, suggesting methods such as "continue" to extend responses.

- Patience for Prompt Precision: As members grappled with translation issues with markup and advice on constructing effective prompts, they were directed to the refashioned #1019652163640762428 for resources. Insights on the efficacy of prompts, particularly in role-playing scenarios, also peeked through, emphasizing the importance of providing clear context to shape AI responses.

Unsloth AI (Daniel Han) Discord

CmdR Set to Join the Unsloth Ranks: The addition of CmdR support to Unsloth AI is in progress, with the community eagerly awaiting its integration post current task completions. The anticipation ties into plans for an open-source CPU optimizer, slated for reveal on April 22, to enhance AI model accessibility for those with limited GPU resources.

Interfacing Innovation with Continue's Autocomplete: A new tab autocomplete feature is in experimental pre-release for the Continue extension, designed to streamline coding in VS Code and JetBrains by consulting language models directly within the dev environment.

Error Extermination and Optimization Dialogues: AI engineers shared solutions to naming-related tokenizer errors, and discussed model.save_pretrained_merged and model.push_to_hub_merged functions for seamless model saving and sharing on Huggingface. Despite encountering AttributeError in GemmaForCausalLM, users were directed to update Unsloth for resolution.

Stumbling Blocks in Saving and Server-Side Setup: Users navigated challenges with GGUF conversions and Docker setups, tackling issues like python3.10-dev dependencies and workaround strategies for memory errors during finetuning on different platforms.

Diving into Unsloth Studio's Next Iteration Soon: An update on Unsloth Studio's release push is set for mid next month due to current bug fixes, ensuring ongoing compatibility with Google Colab alongside improvements for developers leveraging the Studio's capabilities.

Latent Space Discord

Stable Audio Hits a New High: Stability AI launched Stable Audio 2.0, enabling creation of lengthy high-quality music tracks utilizing a single prompt. Visit stableaudio.com to test the model and find further details in their blog post.

AssemblyAI Outperforms Whisper: AssemblyAI announced Universal-1, a speech recognition model surpassing Whisper-3 by achieving 13.5% better accuracy and demonstrating up to 30% decrease in hallucinations. The model processes an hour of audio in a mere 38 seconds and is available for trial at AssemblyAI's playground.

Enhance Your Images with ChatGPT Plus: Users of ChatGPT Plus now possess the ability to modify DALL-E-generated images and prompts, available on both web and iOS platforms. Full guidance on usage is provided in their help article.

AI Agents as Scalable Microservices: Discussions focused on utilizing event-driven architecture to build scalable AI agents, with the Actor Model cited as an inspiration, and a Golang framework presented for collaborative feedback.

Opera One Downloads AI Directly: Opera integrates the ability for users to run large language models (LLMs) locally, beginning with Opera One on the developer stream, harnessing the Ollama framework, as detailed by TechCrunch.

DSPy Steals the Spotlight: Members evaluated DSPy's performance in optimizing prompts for foundation models, focusing on model migration and optimization while being cautious of API rate limits. A detailed study of Devin surfaced numerous AI project opportunities, with keen interest in diverse applications ranging from voice-integrated iOS apps to documentation overhaul initiatives.

Nous Research AI Discord

- LoRA Boosts Mistral?: Engineers discuss employing Low-Rank Adaptation (LoRA) on Mistral 7B to enhance specific task performance, with plans to innovate sentence splitting and labeling techniques beyond standard methods.

- Web Crawling Woes and Wins: The practical issues of scalable web crawling were a hot topic, with talk of obstacles like anti-bot measures and JavaScript rendering. However, alignment was reached on the utility of Common Crawl and mysterious archival groups hoarding quality datasets.

- Learning Options Expand: Shared resources included guides on lollms with Ollama Server, budget AI chips from Intellifusion, and Hugging Face's dataset loaders utility, Chug. Meanwhile, CohereForAI's new multilingual 104B LLM has stirred interest, and OpenAI's exploratory GPT-4 fine-tuning pricing was editorialized.

- LLM Innovation at the Fore: Engineers exchange insights on language model pruning, specifically a 25% pruned Jamba model, and Google's paper advocating transformers learn to dynamically allocate compute, sparking a deeper analysis of speculative decoding versus Google's method.

- Diverse Fine-Tuning Conversations: Members introduced Eurus-7b-kto optimized for reasoning, debated the "divide by scale" in BitNet-1.58 for ternary encoding, deliberated implementation issues on Hermes-Function-Calling, considered QLoRA's VRAM efficiency, and noted Genstruct 7B's instructional generation prowess.

- Troubleshooting in Project Obsidian: Quick fixes in progress for ChatML in project "llava" and intentions to tackle Hermes-Vision-Alpha with scant details on specific issues.

- Finetuning Subnet Miner Mishaps: A miner script error in the finetuning-subnet repository points to a possible missing dependencies problem.

- RAG Dataset Discussions: Discourse on Glaive's RAG sample dataset and methods like grounded mode and proper citation markup, including an XML instruction format, emphasized for future uptake. Suggestions on filtering in RAG responses and Cohere's RAG documentation were also highlighted.

- Copying Conundrums & Command Quests in WorldSim: WorldSim's perplexing copy-paste mechanics, concern over mobile performances, and links to a comprehensive WorldSim Command Index brought forth both productivity hacks and culture snippets within the intrigue of jailbreaking Claude models and ASCII art enigmas.

Modular (Mojo 🔥) Discord

Mojo on the Move: Engineers shared that Mojo now runs on Android devices like Snapdragon 685 CPUs and discussed integrating Mojo with ROS 2, accentuating Mojo's memory safety over Python, particularly in robotics where Python’s GIL limits Nvidia Jetson hardware performance.

Performance Breakthroughs and Best Practices: Significant library performance improvements were noted, dropping execution times to minutes, beating previous Golang benchmarks. Methods such as pre-setting dictionary capacities for optimization were advised, and designers of specialized sorting algorithms for strings are encouraged to align with Mojo’s latest versions, seen at mzaks/mojo-sort.

From Parser to FASTQ: BlazeSeq🔥, a new feature-complete FASTQ parser, has been introduced, providing a CLI-compatible parser that conforms to BioJava and Biopython benchmarks. Enhanced file handling is promised by the buffered line iterator they implemented, indicating a move to a robust future standard for file interactions, showcased on GitHub.

Mojo Merger Madness: Innovative ideas on model merging and conditional conformance in Mojo used @conditional annotations for optional trait implementations, while merchandise ideas like Mojo-themed plushies stirred community excitement. Memory management optimizations were considered, examining potential changes to how Optional returns values in the nightly version of Mojo's standard library.

Modular Updates Galore: Max⚡ and Mojo🔥 24.2 release brings open-sourced standard libraries and nightly builds with community contribution. Docker build issues in version 24.3 are addressed, while continued development discussions recommend conditional conformance and error handling strategies for future roadmap considerations.

LM Studio Discord

Bold Boosts with ROCm: AMD hardware sees a massive increase in speed from 13 to 65 tokens/second when engineered with the ROCm preview, highlighting the significant potential of the right software interface for AMD GPUs.

Mixtral, Not a Mistral Mistake: Mixtral's distinct identity as a MOE model, combining the strength of eight 7B models into a 56B powerhouse, reflects a strategic approach unlike the standard Mistral 7B. Meanwhile, running a Mixtral 8x7b on a 24GB VRAM NVIDIA 3090 GPU may hit speed snafus, yet it’s a viable venture.

LM Studio 0.2.19 Courts Embeddings: The fresh-out-of-the-lab LM Studio version 0.2.19 Preview 1 now supports local embedding models, opening up new possibilities for AI practitioners. Despite lacking ROCm support in its current preview, Windows, Linux, and Mac users can grab their respective builds from the provided links.

Engineers Tackle Odd Model Behavior: Discourse on an AI model dishing out bizarre, task-unrelated responses uncovers potential mishaps in the model's training, signaling a programming predicament in need of debugging prowess.

CrewAI Collision with JSONDecodeError: Encountering a JSONDecodeError using CrewAI suggests a potential misstep in JSON formatting, a puzzle piece that AI engineers must properly place to avoid jeopardizing data parsing processes.

Eleuther Discord

Transformers Takeover at Stanford: The Stanford CS25 seminar on Transformers is open to the public for live audits and recorded sessions, with industry experts leading the discussions on LLM architectures and applications. Interested individuals can participate via Zoom, access the course website, or watch recordings on YouTube.

Skeptical About Efficiency Claims: The community voiced skepticism about the Free-pipeline Fast Inner Product (FFIP) algorithm's performance claims, noted in a journal publication, which promises efficiency by halving multiplications in AI hardware architectures.

CUDA Conundrums and Code Conflicts: A member troubleshooting a RuntimeError with CUDA identified apex as the issue when using the LM eval harness on H100 GPUs, recommending upgrades to CUDA 11.8 and other adjustments for stability.

Next-Gen AI Training Techniques Touted: An arXiv paper introduces dynamic FLOP allocation in transformers, potentially optimizing performance by diverging from uniform distribution. Additionally, cloud services like AWS and Azure support advanced training schemes, with AWS's Gemini mentioned explicitly.

Elastic and Fault-Tolerant How-To: Details on establishing fault-tolerant and elastic job launches with PyTorch were shared, with documentation available at the PyTorch elastic training quickstart guide.

LAION Discord

- AI Ethics in Code: A tool called ConstitutionalAiTuning allows fine-tuning language models to reflect ethical principles, utilizing a JSON file for principles input and aiming to make ethical AI more accessible.

- Type Wrestling in JAX: JAX's type promotion semantics show different outcomes based on operation order, as demonstrated with numpy and Jupyter array types—adding

np.int16(1)andjnp.int16(2)to3producesint16orint32based on the sequence of operations. - Model Training Quandaries: A discussion examined optimal text input configurations for models, debating the merits of sequence concatenation, T5 token extension, and fine-tuning techniques in the realm of SD3 models.

- Legal Beats and AI: Using copyrighted material to train AIs, such as with the Suno music AI platform, has sparked concerns about ensuing legal risks and potential suits from content owners.

- Financial Turbulence for AI Innovator: Stability AI faces financial headwinds, grappling with significant cloud service expenses that reportedly might eclipse their revenue capabilities, as detailed in a Forbes article.

In the research domain:

- Size Doesn't Always Matter for LDMs: A study revealed in an arXiv paper that larger latent diffusion models (LDMs) do not always outdo smaller ones when the inference budget remains constant.

- New Optimizer on the Horizon: A Twitter tease suggested that the AI community should keep their eyes peeled for a novel optimizer.

- VAR Model Revolutionizing Image Generation: The newly presented Visual AutoRegressive (VAR) model demonstrates superior efficacy in image generation compared to diffusion transformers, boasting improvements in both quality and speed, according to an arXiv paper.

OpenAccess AI Collective (axolotl) Discord

Patch Perfect: A noteworthy GitHub bug was swiftly eradicated in the OpenAccess AI Collective's axolotl repository, with the commit history accessible via GitHub Commit 5760099. Meanwhile, a README Table of Contents mismatch was flagged, prompting a cleanup.

Datasets and Model Dialogues: Queries about optimal datasets for training Mistral 7B models led to a recommendation for the OpenOrca dataset, while debates on fine-tuning practices leaned towards the strategy of prioritizing 'completion' before 'instructions'. Discussions spotlighted the potency of simple fine-tuning (SFT) over continual pre-training (CPT) when armed with high-quality instructional samples.

Bot-tled Service: The Axolotl help bot hit a snag, going offline and sparking a wave of mirthful member reactions, yet specifics behind the incident weren't disclosed. The bot was previously offering guidance on the integration of Qwen2 with Qlora and addressing challenges related to dataset streaming and multi-node fine-tuning within Docker environments.

AI Dialogues: The Collective's general channel buzzed with tech talk—from rapid model feedback services like Chaiverse to the novel resources for adding heads to Transformer models found in the GitHub repository for transformer-heads. CohereForAI unveiled a behemoth 104 billion parameter C4AI Command R+ model with specialized capabilities revealed on Hugging Face, stirring conversations about the financial implications of running massive models.

Infrastructure Innovations: SaladCloud's recent launch of a fully-managed container service for AI/ML workloads was recognized as a notable entrance, giving developers an edge against sky-high cloud costs and GPU shortages with affordable rates for inference at scale.

LlamaIndex Discord

AI Spellcheck Gets Real: A Node.js code shared by a member for correcting spelling mistakes using the LlamaIndex Ollama package showed an AI model named ‘mistral’ fixing user errors, like "bkie" to "bike," which can run locally without third-party services over localhost:11434.

Llama's Culinary Code-Loaded Cookbook: A new culinary-themed guidebook series is unveiled for AI enthusiasts, demonstrating how to build RAG, agentic RAG, and agent-based systems with MistralAI, including routing and query decomposition. Grab your AI recipes here.

Exploration and Confusion in LlamaIndex: Discussions in the community raised concerns about issues from lacking knowledgegraphs pipeline support to unclear graphindex and graphdb integrations, and several members struggled with querying OpenSearch and implementing ReAct agents in llama_index.

AI Discussion Evolves Beyond Text: Engaging talks emerged about the potential of enhancing image processing with Reading and Asking Generative (RAG) techniques, discussing applications ranging from CAPTCHA solutions to ensuring continuity in visual narratives like comics.

Scaling AI Deployment Made Convenient: Koyeb's platform was highlighted for effortlessly scaling LLM applications, directly connecting your GitHub repo to deploy serverless apps globally without managing infrastructure. Check out the service here.

HuggingFace Discord

Bold Repo Visibility Choices: HuggingFace has introduced settings for default repository visibility with options for public, private, or private-by-default for enterprises. The functionality is described in this tweet by Julien Chaumond.

Custom Quarto Publishing: HuggingFace now supports publishing with Quarto, as detailed in a tweet by Gordon Shotwell, with more information available on LinkedIn.

Summarization Struggles and Strategies: Users across channels discussed summarization challenges with GPT-2 and Hugging Face's pipeline, including ineffective length penalties and the search for prompt crafting that maximizes efficiency and result quality, even in CPU-only environments.

Innovations and Interactions in AI Circles: Excitement was shared for projects including Octopus 2, a model capable of function calls, and advancements in image processing with the new multi-subject image node pack from Salt. The community also highlighted academic discussions and resources, such as the potential of RAG for interviews and latency-reasoning trade-offs in production prompts, shared in Siddish's tweet.

Diffusion Model Dialogue Deliberates Depth: AI engineers explored creative implementations for diffusion models, discussing DiT with cross-attention for various data conditions, and considering Stable Diffusion modifications for tasks like stereo to depth map conversion, referring to the DiT paper and resources like Dino v2 GitHub and SD-Forge-LayerDiffuse GitHub.

tinygrad (George Hotz) Discord

Fishing for Compliments or Functionality?: Discord's switch from the whimsical fish logo to a more polished design sparked debate among members, leading to talks to potentially match the banner to the new aesthetic. The logo changes by George Hotz seem to have left some nostalgic for the old one.

Sharding Optimizations In-Depth: George Hotz and community members explored optimization techniques and cross-GPU communications, facing challenges with launch latencies and data transfers. They examined the use of cudagraphs, peer-to-peer limitations, and the role of NV drivers.

Tinygrad Performance Milestone: Sharing performance benchmarks, it was revealed that Tinygrad achieved 53.4 tokens per second on a single 4090 GPU, marking 83% efficiency compared to gpt-fast. George Hotz indicated goals to further boost Tinygrad's performance.

Intel Hardware On The Horizon: Discussions on Intel GPU and NPU kernel drivers scrutinized various available drivers like 'gpu/drm/i915' and 'gpu/drm/xe', with anticipation for the performance and power efficiency that NPUs may bring when paired with CPUs.

Helpful Neural Net Education Hustle: The community found the Tinygrad tutorials to be a valuable starting point for neural network newbies and also recommended the JAX Autodidax tutorial, complete with a hands-on Colab notebook. Interest surged in adapting ColabFold or OmegaFold for Tinygrad, while also learning about PyTorch weight transfer methods.

OpenRouter (Alex Atallah) Discord

- OpenRouter Adopts JSON Object Support: Models like OpenAI and Fireworks have been confirmed to support the 'json_object' response format, which can be verified via provider parameters on the OpenRouter models page.

- Finding The Right Verse with Claude 3 Haiku: While the Claude 3 Haiku model exhibits a mixed performance in roleplay, it's suggested that providing multiple examples might yield better results. However, using jailbreak (jb) tweaks is advisable for a significant improvement in output.

- Niche Servers for Claude's Jailbreaks: Users on the look for Claude model jailbreaks including NSFW prompts discussed resources, pointing out that SillyTavern's and Chub's Discord servers are go-to places, and provided guidance on how to navigate to these using tools like the pancatstack jb.

- Dashboard Update Maps Out OpenRouter Credits: Recent updates to the OpenRouter's dashboard include a new designated location for credit display which is accessible at the

/creditsendpoint. However, issues with specific models’ functionality, such as DBRX and Midnight Rose, prompted concerns about their support compatibility.

- Moderation Tangle Affects OpenRouter API's Decline Rate: Reports highlighted a high decline rate with the self-moderated version of the Claude model, implicating possible overprotective "safety" prompts. There's also a mention of integrating better providers to aid in the stability of services for models like Midnight Rose.

OpenInterpreter Discord

- Installation Celebration and Cross-Platform Clarity: An engineer was relieved to get a piece of software running on their Windows machine, and there was confirmation that this software is functional on both PC and Mac platforms. Detailed installation instructions and guides can be found in the project's documentation.

- Persistent Termux Predicament: Discussions identified a recurring issue with

chroma-hnswlibduring installation processes, even though reports suggested it was removed. Members were advised to migrate detailed technical support queries to a designated support channel.

- Hermes-2-Pro Prompt Practices Discussed: Active dialogues emphasized the need to adjust system prompts as recommended in the Hermes-2-Pro model card. This is crucial for optimizing model performance and addressing verbose output that some users found burdensome.

- Platform-Specific Quirks: Multiple members encountered and shared solutions to challenges with the 01 software across different operating systems—ranging from shortcut commands in Ollama, package dependencies in Linux, to

poetryissues on Windows 11.

- Cardputer Development Underway: Technical talk focused on the implementation and advancement of M5 Cardputer into the open-interpreter project. GitHub repositories and various tools like ngrok for secure tunnelling and rhasspy/piper for neural TTS systems were linked for reference.

Interconnects (Nathan Lambert) Discord

- Command R+ Makes Waves with 128k Token Context: A new scalable LLM dubbed Command R+ is generating buzz with a hefty 128k token context window and the promise of reduced hallucinations due to refined RAG. Although there's curiosity about its performance compared to other models due to insufficient comparative data, enthusiasts can test out its capabilities via a live demo.

- ChatGPT-Like Models for Business Under Scrutiny: Skepticism arises regarding how well ChatGPT and similar models can fulfill enterprise needs, with discussions pointing toward potentially custom-developed solutions to truly meet business demands.

- Academia Cheers for Cost-Effective JetMoE-8B: The launch of JetMoE-8B is applauded in academic circles for its affordability—costing under $0.1 million—and impressive performance using only 2.2B active parameters. More details can be found on its project page.

- Snorkel and Model Efficacy Debate Heats Up: Nathan Lambert stirs the pot with a suggestive tweet, teasing an analysis on the effectiveness of current AI models like those using RLHF, thereby igniting a conversation around the controversial Snorkel framework.

- Stanford's CS25 Pulls in Transformer Enthusiasts: AI engineers show keen interest in Stanford's CS25 course, spotlighting discussions by Transformer research experts, with session schedules available here and the opportunity to gain insights through the course's YouTube channel.

Mozilla AI Discord

- Matrix Size Matters: A member made headway by optimizing a matmul kernel for large matrices, addressing CPU cache challenges when dealing with sizes above 1024x1024.

- Compiler Conundrum Conquered: Compiler enhancements led to celebrations among members, reflecting expectations of significant code performance improvements.

- A ROCm Solid Requirement: For the successful deployment of llamafile-0.7 on Windows, members acknowledged that ROCm version 5.7+ is necessary.

- Dynamic SYCL Discussions: Debates on handling SYCL code within llamafile resulted in a community-driven solution involving conditional compilation, though with noted incompatibility with MacOS.

- Perplexing Performance on Windows: An attempt to build llamafile on Windows met with complications involving the Cosmopolitan compiler, along with conversations about the need for a

llamafile-benchprogram to measure tokens per second and the potential impact of RAM on performance. Interested parties were directed to an article on The Register highlighting performance gains and a discussion on GitHub about Cosmopolitan.

LangChain AI Discord

Crypto Chatbot Craze Calls for Coders: An individual is in search of developers with LLM training expertise to create a chatbot simulating human conversation, utilizing real-time crypto market data. The aim is to enable nuanced discussions reflecting the latest market shifts.

Math Symbol Extraction Without MathpixPDFLoader: Alternatives to MathpixPDFLoader for extracting math symbols from PDFs are in demand, as users seek new methods to handle this specific task effectively.

LangChain LCEL Logic Lessons: A discussion clarified the use of the '|' operator in LangChain's Expression Language (LCEL), which chains components like prompts and LLM outputs into complex sequences. The intricacies are further explored in Getting Started with LCEL.

Voice Apps Vocalizing AI Capabilities: Newly launched voice applications such as CallStar are prompting discussions around their interactivity and setup, powered by technologies like RetellAI, with community support via Product Hunt and Reddit platforms.

LangChain Quickstart Walkthrough Woes: Sharing the LangChain Quickstart Guide, a user provided example code for integrating LangChain with OpenAI, yet faced a NotFoundError indicating a missing resource. The community's technical acumen is requested to troubleshoot this setback.

CUDA MODE Discord

- Bit by Bit, Efficiency Unfolds: The BitMat GitHub repository was referenced, promoting an efficient implementation of 1-bit Large Language Models (LLMs), aligning with the method proposed in "The Era of 1-bit LLMs."

- New Horizons for Triton and Torch: A new channel for contributing to the Triton visualizer has been proposed to foster collaboration. The Torch team is adjusting autotune settings, moving towards max-autotuning, and addressing benchmarking pain points including tensor core utilization and timing methods—their effort is documented in the keras-benchmarks.

- CUDA Content and Courses: For engineers keen on learning CUDA programming, the CUDA MODE YouTube channel was recommended, boasting of lectures and a supportive community to ease the CUDA learning curve.

- Quantum Leap in Model Integrations: New members mobicham and zhxchen17 ignited a discussion on integrating HQQ with gpt-fast, focusing on Llama2-7B (base), and delving into 4/3 bit quantization using models like Mixtral-8x7B-Instruct-v0.1.

- A Visual Boost for Triton: Within the discussion on Triton visualizations, suggestions for adding arrows for direction, integrating operation details into visuals, and potentially porting the project to JavaScript for enhanced interactivity emerged, though concerns about the actual utility of such features were raised.

Datasette - LLM (@SimonW) Discord

A New Approach to AI Dialogues: Reflecting on conversational AI terminology, a guild member suggested "turns" as a better descriptor than "responses" for the initial message in a dialogue, a decision fueled by the exploration of a logs.db database and resulting in the serendipitous pun with the term database 'turns'.

AI Product Evaluations Get a Thumbs Up: Guild members rallied around the importance of Hamel Husain's post on AI evaluations, which outlines strategies for creating domain-specific evaluation systems for AI and is considered potentially groundbreaking for new ventures.

SQL Query Assistant Plugin Eyes Transparency and Control: There's a pitch for making the evaluations of the Datasette SQL query assistant plugin visible and editable, aiming to enhance user interaction and control over the evaluation process.

Perusing the Future of Prompt Management: A debate is brewing over the best practices for AI prompt management, with potential patterns including localization, middleware, and microservices, suggesting different methods for integrating AI into larger systems.

High-Resolution API Details Exemplified: The Cohere LLM search API’s detailed JSON responses were spotlighted, providing an example of the granularity that can benefit AI developers, as demonstrated in a shared GitHub comment.

DiscoResearch Discord

- Benchmarking Emotional Smarts: Newly launched Creative Writing EQ-Bench and Judgemark benchmarks aim to assess the emotional intelligence of language models, with Judgemark posing a rigorous test through correlation metrics. Standard deviation in scores is leveraged to differentiate how models use 0-10 scales to indicate finer judgment nuances compared to 0-5 rating systems.

- Judgment Day for Creative Writing: The efficacy of the Creative Writing benchmark is attributed to its 36 specific judging criteria, emphasizing the importance of narrow parameters for model evaluation. Questions about these benchmark criteria are answered in the extensive documentation provided, demonstrating transparency and allowing for better model assessment.

- Sizing Up Sentiment and Quality: Discussion regarding optimal scales revealed that sentiment analysis resonates best with a -1 to 1 range, while quality assessments prefer broader scales of 0-5 or 0-10, aiding models to convey more nuanced opinions. These insights highlight the necessity of tailoring evaluation metrics to the specific domain of judgment.

- COMET Blazes Through Testing: The COMET evaluation scores herald the Facebook WMT21 model as a standout, with reference-free scores employing wmt22-cometkiwi-da methodology alongside useful scripts available on the llm_translation GitHub repository. Nonetheless, caution is advised due to potential inaccuracies, underscoring the need for continual vigilance in assessing model outputs.

- Scaling the Peaks of Reference-Free Evaluation: The callout for accuracy in models emphasizes the non-absoluteness of COMET scoring results, with an invitation to flag significant discrepancies—a practice acknowledging the iterative nature of model refinement and validation. The highest COMET score recorded was 0.848375, demonstrating the advanced capabilities of current language models in translation tasks.

Skunkworks AI Discord

- AI Enthusiasts Eye Healthcare: Community engagement in AI within the healthcare sector is on the rise, signaling increased cross-disciplinary applications of AI technologies.

- Evolving LLMs with Mixture-of-Depths (MoD) Approach: Introduction of the Mixture-of-Depths (MoD) technique has been highlighted as a way to allow Language Models to allocate compute resources dynamically, potentially increasing efficiency. The approach and its capabilities are detailed in a paper available on arXiv.

- Revolutionizing AI's Approach to Math: Discussing improved strategies for AI to tackle mathematical problems, it's suggested that training AI to convert word problems into solvable equations is more effective than direct computation. This method leverages the power of established tools like Python and Wolfram Alpha for the actual calculations.

- Another Paper Added to the Trove: Additional resources are being shared, with a new paper added to the community's knowledge base, though no further context has been provided.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (910 messages🔥🔥🔥):

- Stable Diffusion Models and Upscaling: Users discussed the best practices for creating realistic high-resolution images, suggesting using lower steps, latent upscaling, and the use of hi-res fix to avoid image distortion. Suggested settings include 35 steps with dpmpp ancestral Karras or exponential and accompanying control nets. Higher resolutions like 2k are challenging, often leading to extended generation times and possible image distortion (related discussion).

- The Future of AI and Content Creation: There was a robust debate on the impact of AI on various creative industries, with speculations about AI's potential to replace traditional roles in Hollywood and the videogame industry. Participants discussed whether AI models like SDXL would render some artist positions redundant and how evolving technology might increase the skill floor, requiring less effort to generate quality content.

- Lora Training for Specific Items: A user inquired about training loras for generating images of people wearing specific items, such as corsets. Advice given includes using images of the item from different angles, ideally with backgrounds and faces removed, to prevent the AI from including unintended elements in the generated images.

- Economic Considerations and AI: Participants discussed Stability AI's challenges, such as convincing investors and focusing on datasets versus developing new models. The conversation covered the potential of monetizing the dataset for enterprises to cope with the perceived declining interest in research models and the impact of high compute costs.

- Miscellaneous Chat: Interactions included light-hearted exchanges with references to cultural subjects, general hellos, acknowledgments of greetings, and random statements that did not correlate with the main topics of discussion. There was also a link to an unrelated parody song shared by a user.

- Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction: We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolutio...

- Juggernaut-XL_v9_RunDiffusionPhoto_v2.safetensors · RunDiffusion/Juggernaut-XL-v9 at main: no description found

- SDXL – A settings guide by Replicate: Or how I learned to make weird cats

- Remix: Create, share, and remix AI images and video.

- Home v2: Transform your projects with our AI image generator. Generate high-quality, AI generated images with unparalleled speed and style to elevate your creative vision

- Reddit - Dive into anything: no description found

- Stable Radio 24/7: Stable Radio, a 24/7 live stream that features tracks exclusively generated by Stable Audio.Explore the model and start creating for free on stableaudio.com

- Optimizations: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

- sd-webui-animatediff/docs/features.md at master · continue-revolution/sd-webui-animatediff: AnimateDiff for AUTOMATIC1111 Stable Diffusion WebUI - continue-revolution/sd-webui-animatediff

- GitHub - comfyanonymous/ComfyUI: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface.: The most powerful and modular stable diffusion GUI, api and backend with a graph/nodes interface. - comfyanonymous/ComfyUI

- Survey Form - 5day.io: As a young professional just a few years into the workforce, there is a constant, low-humming anxiety about proving yourself and finding that mythical work-life balance everyone talks about. Sometimes...

- Reddit - Dive into anything: no description found

- Juggernaut XL - V9 + RunDiffusionPhoto 2 | Stable Diffusion Checkpoint | Civitai: For business inquires, commercial licensing, custom models, and consultation contact me under juggernaut@rundiffusion.com Juggernaut is available o...

- GitHub - ZHO-ZHO-ZHO/ComfyUI-SegMoE: Unofficial implementation of SegMoE for ComfyUI: Unofficial implementation of SegMoE for ComfyUI. Contribute to ZHO-ZHO-ZHO/ComfyUI-SegMoE development by creating an account on GitHub.

- Never Gonna Give You Up - Rick Astley [Minions Ver.]: Stream Never Gonna Give You Up - Rick Astley [Minions Ver.] by Pelusita,la chica fideo on desktop and mobile. Play over 320 million tracks for free on SoundCloud.

Perplexity AI ▷ #general (756 messages🔥🔥🔥):

- API Usage Explained: Users are curious about the functionalities and costs associated with Perplexity's API. It was clarified that APIs can be very powerful for automating tasks and are essential for developers looking to integrate specific services into their applications. The cost efficiency and usage depend on the scope of the project and the amount of data being processed.

- Pros and Cons of Pro Subscription vs API: There's a debate on whether it's more advantageous to subscribe to Perplexity for $20 a month or to use the pay-as-you-go API. For idea generation and beginning a business, the recommendation seems to be towards subscribing due to ease of use and cost management.

- Model Preferences Discussed: When it comes to usage, users prefer having a larger number of messages with a decent context window rather than a larger context with fewer messages. Perplexity's AI capabilities are being leveraged for a range of tasks, with the flexibility to work around limitations.

- Notifications and New UI Elements Update: There has been mention of news notifications not being readily accessible or communicated effectively, with the suggestion for the company to use Discord's announcement channels more strategically. Some concerns were raised about the lack of updates on the Android app.

- Integration Limits and Model Capabilities: Discussion around the limitations when using complex languages like Rust with AI, highlighting that AI models, including Opus, struggle to create compilable code. Some users are applying workarounds like starting new threads to manage large conversations for better context management.

- Perplexity will try a form of ads on its AI search platform.: Perplexity’s chief business officer Dmitry Shevelenko tells Adweek the company is considering adding sponsored suggested questions to its platform. If users continue to search for more information on ...

- Perplexity will try a form of ads on its AI search platform.: Perplexity’s chief business officer Dmitry Shevelenko tells Adweek the company is considering adding sponsored suggested questions to its platform. If users continue to search for more information on ...

- Getting Started with pplx-api: no description found

- Brain Circuit Classic Thin Icon | Font Awesome: Brain Circuit icon in the Thin style. Style your project in the latest super-light designs. Available now in Font Awesome 6.

- Image Classic Regular Icon | Font Awesome: Image icon in the Regular style. Smooth out your design with easygoing, readable icons. Available now in Font Awesome 6.

- Apple reveals ReALM — new AI model could make Siri way faster and smarter: ReALM could be part of Siri 2.0

- Chat Completions: no description found

- Ralph Wiggum Simpsons GIF - Ralph Wiggum Simpsons Hi - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- Tweet from Aravind Srinivas (@AravSrinivas): Interesting.

- Reddit - Dive into anything: no description found

- 1111Hz Conéctate con el universo - Recibe guía del universo - Atrae energías mágicas y curativas #2: 1111Hz Conéctate con el universo - Recibe guía del universo - Atrae energías mágicas y curativas #2Este canal se trata de curar su mente, alma, cuerpo, trast...

- Perplexity Model Selection User Script: Perplexity Model Selection User Script. GitHub Gist: instantly share code, notes, and snippets.

Perplexity AI ▷ #sharing (15 messages🔥):

- Exploring the Impact of Fritz Haber: A member highlighted Fritz Haber's contributions, such as enabling increased food production through the Haber-Bosch process. His complex legacy includes the Nobel Prize, involvement in chemical warfare, personal tragedies, and anti-Nazi sentiments. Read about Fritz Haber's legacy.

- Intrigue at the LLM Leaderboard: A user examined the LLM Leaderboard, discussing model metrics and rankings, and discovered what "95% CI" means despite encountering amusing model name errors. Explore the LLM Leaderboard review.

- Understanding Beauty through AI: Multiple members shared their curiosity about the concept of beauty by using Perplexity AI to access insights on the topic. Delve into the nature of beauty.

- Dictatorship Discussion Initiated: One chat pointed users to Perplexity AI for a query on how dictatorship naturally arises, sparking an intellectual query into the origins of authoritarian regimes. Investigate the emergence of dictatorship.

- Reminder for Shareable Content: A member was reminded to ensure their thread is set to shareable when posting links from the Discord channel. This ensures others can view and engage with the content shared. Make Discord threads shareable.

Perplexity AI ▷ #pplx-api (42 messages🔥):

- Perplexed about Perplexity API Sonar Model Source Links: A user inquired about when the sonar-medium-online model would be able to return the source link with the data, but did not receive a clear timeline on when this feature will be available.

- Credit Conundrum: Payments Pending in Perplexity: A member reported issues while trying to buy API credits; transactions showed as "Pending" and did not reflect in the account balance. Another member asked them to send account details for resolution, indicating a case-by-case troubleshooting approach.

- Trouble with Realms, ReALM, and Apple: Users experienced the bot getting confused when asked about Apple's ReALM, leading one suggestion that simplifying the system prompt might yield better performance, as complexity seems to lead to confusion.

- Custom GPT "metaprompt" for Organized Output: One user shared their experiment with creating a Custom GPT utilizing a "metaprompt" aimed at structuring responses efficiently, which primarily focused on delivering accurate information with clear citations.

- Search API Pricing Perplexities: A member questioned the pricing of search APIs compared to language models, discussing the cost-effectiveness of 1000 online model requests, which another clarified does not equate to 1000 individual searches but rather requests that can contain multiple searches each.

- no title found: no description found

- Were On The Same Boat Here Mickey Haller GIF - Were on the same boat here Mickey haller Lincoln lawyer - Discover & Share GIFs: Click to view the GIF

OpenAI ▷ #annnouncements (2 messages):

- DALL·E gets an Editing Suite: Members were informed that they can now edit DALL·E images in ChatGPT across web, iOS, and Android, as well as receive style inspiration when creating images in the DALL·E GPT.

- Fine-tuning API Level Up: New dashboards, metrics, and integrations have been introduced in the fine-tuning API. Developers now have more control and new options for building custom models with OpenAI, detailed in a recent blog post: Fine-tuning API and Custom Models Program.

Link mentioned: Introducing improvements to the fine-tuning API and expanding our custom models program: We’re adding new features to help developers have more control over fine-tuning and announcing new ways to build custom models with OpenAI.

OpenAI ▷ #ai-discussions (494 messages🔥🔥🔥):

- Understanding AI and Consciousness: Discussions revolved around the nature of AI's cognitive processes compared to human thought, debating whether AI such as LLMs are capable of "thinking" or just sophisticated algorithms performing complex patterns of data. Multiple participants contended that AI lacks consciousness and is instead a simulation of human-like behaviors.

- The Complexity of Defining Sentience: Sentience and consciousness were hot topics, with explorations into the subjective experiences of animals as revealed by neural activity studies. The conversation pointed out the difficulty of discerning sentience in different life forms and the challenges in defining consciousness solely based on human-like behavior.

- AI Misconceptions and Expectations: Some discussion highlighted a public misconception about AI, where many people equate all forms of AI with the concept of AGI (Artificial General Intelligence), as often depicted in science fiction. There was an emphasis on the need for clear distinctions between various forms of AI and the reality of current technologies.

- Live Discussion Dynamics: Debates about AI often led to friction amongst participants, demonstrating a wide spectrum of beliefs and opinions about AI's capabilities, consciousness, and ethical considerations. Some recommended additional resources like YouTube videos to reinforce their viewpoints.

- Potential AI Usage and Development Ideas: One user suggested training language models with goal-oriented sequences, such as

success <doing-business> success, for various applications including playing chess or developing business strategies, theorizing about its interactive possibilities when presented with new information during inference.

- China brain - Wikipedia: no description found

- How AIs, like ChatGPT, Learn: How do all the algorithms, like ChatGPT, around us learn to do their jobs?Footnote: https://www.youtube.com/watch?v=wvWpdrfoEv0Thank you to my supporters on ...

- ASCII Art Bananas - asciiart.eu: A large collection of ASCII art drawings of bananas and other related food and drink ASCII art pictures.

- Simulators — LessWrong: Thanks to Chris Scammell, Adam Shimi, Lee Sharkey, Evan Hubinger, Nicholas Dupuis, Leo Gao, Johannes Treutlein, and Jonathan Low for feedback on draf…

OpenAI ▷ #gpt-4-discussions (46 messages🔥):

- Custom GPT vs. Base Model: Channel members are discussing the advantages of using Custom GPTs over the base ChatGPT models. While some prefer the ease of building complex prompts with Custom GPTs, others find base ChatGPT models to be sufficient for their needs and challenge the need for Custom GPTs when prompt engineering alone can be effective.

- DALL·E Gains New Features: DALL·E has been updated with new features allowing for style suggestions and image inpainting, enabling users to edit specific parts of an image generated by DALL·E. This information might be particularly interesting for Plus plan users looking to utilize these functionalities.

- Comparing Model Performance: There's an exchange regarding the performance of various GPT models and systems, with some members noting that in specific areas, some models might outperform others. The conversation shows a nuanced understanding that model performance can vary greatly depending on the use case and individual testing.

- Utilizing AI for Wiki Data: A member is seeking advice on how to have GPT interpret and answer questions from an XML file containing a Wiki database dump. They expressed difficulty with the GPT providing accurate responses from the data in the XML file.

- Data Retention Questions: Users inquire about OpenAI’s data retention policy, specifically after deleting a chat. The response indicates that deleted chats on OpenAI are typically held for about a month, though they become immediately invisible to the user upon deletion.

OpenAI ▷ #prompt-engineering (27 messages🔥):

- Translation Troubles: A member is experiencing inconsistency when translating markdown content to various languages, especially Arabic. Efforts to tweak the prompt, such as adding "Only return translated text with its markup, not the original text," resulted in mixed outcomes, with some responses being untranslated.

- Seeking the Prompt Library: One member inquired about the location of the prompt library, and another quickly guided them to the renamed channel using its channel ID.

- Perfecting Apple Watch Expertise Prompts: A user sought advice on improving prompts to get better responses from the bot when asking as an Apple Watch expert. Another member advised experimenting with different versions of the prompts and even using the model to evaluate the prompts for clarity and potential hallucinations.

- Dalle-3 Prompt Engineering Location Query: A user questioned where to conduct Dalle-3 prompt engineering, whether in the general prompt-engineering channel or a specific Dalle thread. A member suggested it's their choice, but more focused help might be available in the Dalle-specific channel.

- Lengthening Text Responses: A member expressed frustration that the command "make the text longer" was no longer effective. Another member recommended a workaround involving copying the previous GPT response, starting a new chat, and then prompting with "continue."

- LLM Draft Document Issue: A member asked for assistance with an LLM that fails to return certain sections of a document while drafting from a template, even when changes have been made to those sections. They are looking for a solution to ensure all modified sections are included in the outputs.

OpenAI ▷ #api-discussions (27 messages🔥):

- Translation Troubles with Markup: A member attempted various prompt formulations to preserve Markdown markup and correctly translate content, including proper names and links, from one language to another. Despite the refined prompts, they faced issues with maintaining markdown formatting and receiving untranslated text, expressing frustration over the inconsistent translations.

- Seeking the Prompt Library: When asked where to find the prompt library, a member was directed to a channel renamed to #1019652163640762428, indicating the location where resources can be found.

- Improving Prompt Efficacy for AI Role Play: In a discussion about enhancing the quality of prompts for role-playing experts, a member suggested asking the AI to evaluate the prompt for clarity and consistency. They discussed the importance of the entire context of the prompt beyond single keywords like "roleplay" to influence the AI's response style.

- Dalle-3 Prompt Engineering Discussion Placement: A member inquired where to discuss Dalle-3 prompt engineering—whether in the api-discussions channel or a Dalle-specific thread. They were told it's their choice, though a more focused response might be found in a dedicated Dalle thread.

- Extending Iterative Text Generation: After experiencing issues with the command "make the text longer" not generating new content as expected, another member suggested copying the AI's response, initiating a new chat, and then using the word "continue" to extend the conversation.

Unsloth AI (Daniel Han) ▷ #general (306 messages🔥🔥):

- Support for CmdR on the Horizon: Discussions indicate work is underway to add support for CmdR in Unsloth, following fixes to inference issues. There's excitement about the progress, and discussions imply a completion time frame after the current tasks.

- Anticipation for Automatic Optimizer: Unsloth is planning a new open-source feature very important for the GPU poor set to be announced imminently, with a new release and an announcement on April 22. This feature is aimed to ameliorate AI accessibility by CPU optimization, supporting a wider range of models like command r, Mixtral, etc..

- Performance Queries Addressed: Users engaged in technical discussions about memory optimization, VRAM savings of 70% with Unsloth, and inplace kernel executions. The conversation highlights inquiries about data layout results on different models and the effectiveness of Unsloth's in-place operations for memory reduction.

- Enthusiasm and Confusion Clearing about the Gemma 2B Model: Support is given for changing to the Gemma 2B model in notebooks with provided instructions, and clarifications on downloading models in 4-bit versus 16-bit, with an assurance that accuracy degradation is usually between 0.1-0.5%.

- Job Postings and Ethical Hiring Discussed: A request for a job channel sparked debates on the ethics of unpaid internships and the skill set expected from interns. The consensus emphasized the importance of providing financial compensation for any work performed.

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- Google Colaboratory: no description found

- unsloth (Unsloth): no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub - myshell-ai/JetMoE: Reaching LLaMA2 Performance with 0.1M Dollars: Reaching LLaMA2 Performance with 0.1M Dollars. Contribute to myshell-ai/JetMoE development by creating an account on GitHub.

- GitHub - OpenNLPLab/LASP: Linear Attention Sequence Parallelism (LASP): Linear Attention Sequence Parallelism (LASP). Contribute to OpenNLPLab/LASP development by creating an account on GitHub.

- sloth/sftune.py at master · toranb/sloth: python sftune, qmerge and dpo scripts with unsloth - toranb/sloth

- Reddit - Dive into anything: no description found

- GitHub - ggerganov/llama.cpp: LLM inference in C/C++: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- GaLore and fused kernel prototypes by jeromeku · Pull Request #95 · pytorch-labs/ao: Prototype Kernels and Utils Currently: GaLore Initial implementation of fused kernels for GaLore memory efficient training. TODO: triton Composable triton kernels for quantized training and ...

Unsloth AI (Daniel Han) ▷ #random (5 messages):

- Unsloth Studio Overhaul in Progress: Unsloth AI team is delaying the release of the new version of Unsloth Studio due to persistent bugs. A tentative, early version might be available mid next month and the existing Unsloth package will remain compatible with Colab.

- New Tab Autocomplete Feature in Pre-Release: A new pre-release experimental feature for tab autocomplete is available in the Continue extension for VS Code and JetBrains. Continue's open-source autopilot allows easier coding with any LLM by asking questions about highlighted code and referencing context inline, as showcased with animated GIFs in its documentation.

Link mentioned: Continue - Claude, CodeLlama, GPT-4, and more - Visual Studio Marketplace: Extension for Visual Studio Code - Open-source autopilot for software development - bring the power of ChatGPT to your IDE

Unsloth AI (Daniel Han) ▷ #help (248 messages🔥🔥):

- Tokenizer Troubles: The error a user faced was due to incorrect naming of the model in the tokenizer, which resulted in it not being written properly, leading to execution issues. They resolved the issue on their own.

- Successful Model Saving and Huggingface Push: Users discussed saving models with

model.save_pretrained_merged()andmodel.push_to_hub_merged(), focusing on properly setting naming parameters for model saving and Huggingface push. Relevant advice included replacing placeholders with a Huggingface username/model name and obtaining a Write token from Huggingface settings.

- Inference Issues on Gemma: A user encountered an

AttributeErrorrelated to aGemmaForCausalLMobject missing thelayersattribute, which was fixed via an update to Unsloth requiring users to reinstall the package on personal machines.

- Challenges with GGUF Conversions and Docker Environments: Users shared issues when converting models to GGUF format, and an instance where the Docker environment produced an error that was solved with the installation of

python3.10-dev.

- Finetuning Challenges and Solutions: Discussion included finetuning Gemma models in Colab, remedies for

OutOfMemoryErrorwhen using 24GB GPUs on Sagemaker, a GGUF-spelled words quirk after conversion, and insights on resuming training with altered parameters.

- Hugging Face – The AI community building the future.: no description found

- Adding accuracy, precision, recall and f1 score metrics during training: hi, you can define your computing metric function and pass it into the trainer. Here is an example of computing metrics. define accuracy metrics function from sklearn.metrics import accuracy_score, ...

- TinyLlama/TinyLlama-1.1B-Chat-v1.0 · Hugging Face: no description found

- deepseek-ai/deepseek-vl-7b-chat · Hugging Face: no description found

- qwp4w3hyb/deepseek-coder-7b-instruct-v1.5-iMat-GGUF · Hugging Face: no description found

- danielhanchen/model_21032024 · Hugging Face: no description found

- TheBloke/deepseek-coder-6.7B-instruct-GGUF · Hugging Face: no description found

- Hugging Face Transformers | Weights & Biases Documentation: The Hugging Face Transformers library makes state-of-the-art NLP models like BERT and training techniques like mixed precision and gradient checkpointing easy to use. The W&B integration adds rich...

- Supervised Fine-tuning Trainer: no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub - oobabooga/text-generation-webui: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models.: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models. - oobabooga/text-generation-webui

- fix GemmaModel_fast_forward_inference by eabdullin · Pull Request #300 · unslothai/unsloth: On fast inference Gemma model fails with an error 'GemmaCausalLM' has no attribute 'layers'. Quick fix for that.

- GitHub - abetlen/llama-cpp-python: Python bindings for llama.cpp: Python bindings for llama.cpp. Contribute to abetlen/llama-cpp-python development by creating an account on GitHub.

- GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

Latent Space ▷ #ai-general-chat (86 messages🔥🔥):

- Stable Audio 2.0 Released: Stability AI introduces Stable Audio 2.0, capable of producing high-quality, full tracks with coherent musical structure up to three minutes long at 44.1 kHz stereo from a single prompt. Users can explore the model for free at stableaudio.com and read the blog post here.

- AssemblyAI's New Speech Model Surpasses Whisper-3: AssemblyAI releases Universal-1, a model boasting 13.5% more accuracy and up to 30% fewer hallucinations than Whisper-3, capable of processing 60 minutes of audio in 38 seconds, though it only supports 20 languages. Test it in the free playground at assemblyai.com.

- Edit DALL-E Images in ChatGPT Plus: ChatGPT Plus now allows users to edit DALL-E images and their own conversation prompts on the web and iOS app. Instructions and user interface details can be found here.

- AI Framework Discussion by slono: Slono shared thoughts on building AI agents as microservices/event-driven architecture for better scalability, invoking ideas similar to the Actor Model of Computation and seeking feedback or assistance with their Golang framework.

- Opera Allows Downloading and Running Local LLMs: Opera now enables users to download and run large language models (LLMs) locally, starting with Opera One users who have developer stream updates. The browser is making use of the open-source Ollama framework and plans to add more models from various sources for users' choice.

- no title found: no description found

- Tweet from horseboat (@horseracedpast): bengio really wrote this in 2013 huh ↘️ Quoting AK (@_akhaliq) Google presents Mixture-of-Depths Dynamically allocating compute in transformer-based language models Transformer-based language mod...

- React App: no description found

- Tweet from Hassan Hayat 🔥 (@TheSeaMouse): @fouriergalois @GoogleDeepMind bro, MoE with early exit. the entire graph is shifted down, this is like 10x compute savings... broooo

- Introducing improvements to the fine-tuning API and expanding our custom models program: We’re adding new features to help developers have more control over fine-tuning and announcing new ways to build custom models with OpenAI.

- Should kids still learn to code? (Practical AI #263) — Changelog Master Feed — Overcast: no description found

- Tweet from Hassan Hayat 🔥 (@TheSeaMouse): Why Google Deepmind's Mixture-of-Depths paper, and more generally dynamic compute methods, matter: Most of the compute is WASTED because not all tokens are equally hard to predict

- Opera allows users to download and use LLMs locally | TechCrunch: Opera said today it will now allow users to download and use Large Language Models (LLMs) locally on their desktop.

- Open sourcing AI app development with Harrison Chase from LangChain — No Priors: Artificial Intelligence | Machine Learning | Technology | Startups — Overcast: no description found

- Tweet from Stability AI (@StabilityAI): Introducing Stable Audio 2.0 – a new model capable of producing high-quality, full tracks with coherent musical structure up to three minutes long at 44.1 kHz stereo from a single prompt. Explore the...

- Tweet from Nick Dobos (@NickADobos): New Dalle is so good wtf Way more steerable than anything else I’ve tried I made an app mockup in 3 prompts. Wow!! Even sorta got the tab bar & a layout

- Tweet from cohere (@cohere): Today, we’re introducing Command R+: a state-of-the-art RAG-optimized LLM designed to tackle enterprise-grade workloads and speak the languages of global business. Our R-series model family is now av...

- Tweet from Sherjil Ozair (@sherjilozair): How did this get published? 🤔 ↘️ Quoting AK (@_akhaliq) Google presents Mixture-of-Depths Dynamically allocating compute in transformer-based language models Transformer-based language models sp...

- Tweet from Ben (e/sqlite) (@andersonbcdefg): amazing. "you like MoE? what if we made one of the experts the identity function." kaboom, 50% FLOPs saved 🤦♂️ ↘️ Quoting Aran Komatsuzaki (@arankomatsuzaki) Google presents Mixture-of-De...

- Tweet from Blaze (Balázs Galambosi) (@gblazex): Wow. While OpenAI API is still stuck on Whisper-2, @AssemblyAI releases something that beats even Wishper-3: + 13.5% more accurate than Whisper-3 + Up to 30% fewer hallucinations + 38s to process 60...