[AINews] Summer of Code AI: $1.6b raised, 1 usable product

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Code + AI is all you need.

AI News for 8/28/2024-8/29/2024. We checked 7 subreddits, 400 Twitters and 30 Discords (213 channels, and 2980 messages) for you. Estimated reading time saved (at 200wpm): 338 minutes. You can now tag @smol_ai for AINews discussions!

One of the core theses in the Rise of the AI Engineer is that code is first among equals among the many modalities that will emerge. Above the obvious virtuous cycle (code faster -> train faster -> code faster), it also has the nice property of being 1) internal facing (so lower but nonzero liability of errors), 2) improving developer productivity (one of the most costly headcounts), 3) verifiable/self-correcting (in the Let's Verify Step by Step sense).

This Summer of Code kicked off with:

- Cognition (Devin) raising $175m (still under very restricted waitlist) (their World's Fair talk here)

- Poolside raising $400m (still mostly stealth)

Today, we have:

- Codeium AI raising $150m on top of their January $65m raise (their World's Fair talk here)

- Magic raising $320m on top of their $100m Febuary raise, announcing LTM-2, officially confirming their rumored 100m token context model, though still remaining in stealth.

While Codeium is the only product of the 4 you can actually use today, Magic's announcement is the more notable one, because of their promising long context utilization (powered by HashHop) and efficiency details teased by Nat Friedman in the previous raise:

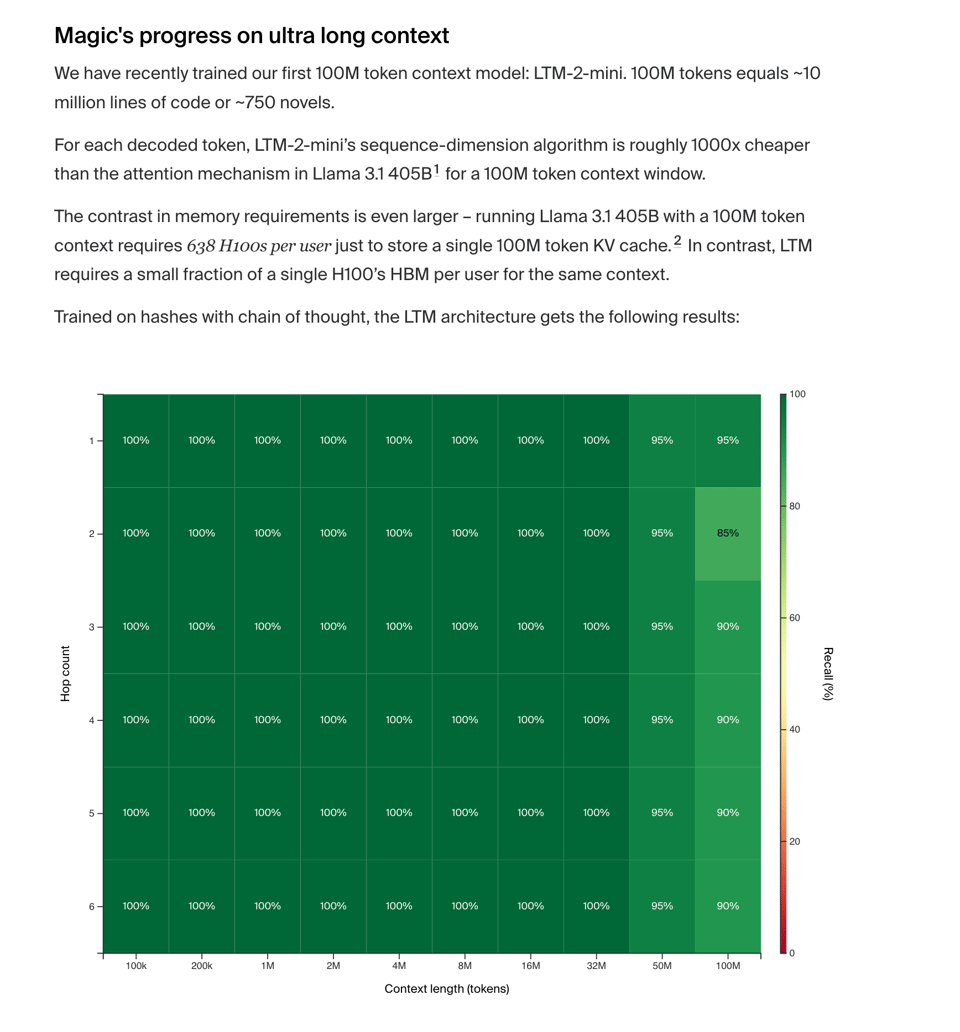

For each decoded token, LTM-2-mini’s sequence-dimension algorithm is roughly 1000x cheaper than the attention mechanism in Llama 3.1 405B1 for a 100M token context window. The contrast in memory requirements is even larger – running Llama 3.1 405B with a 100M token context requires 638 H100s per user just to store a single 100M token KV cache. In contrast, LTM requires a small fraction of a single H100’s HBM per user for the same context.

This was done with a completely-written-from-scratch stack:

To train and serve 100M token context models, we needed to write an entire training and inference stack from scratch (no torch autograd, lots of custom CUDA, no open-source foundations) and run experiment after experiment on how to stably train our models.

They also announced a Google Cloud partnership:

Magic-G4, powered by NVIDIA H100 Tensor Core GPUs, and Magic-G5, powered by NVIDIA GB200 NVL72, with the ability to scale to tens of thousands of Blackwell GPUs over time.

They mention 8000 h100s now, but "over time, we will scale up to tens of thousands of GB200s" with former OpenAI Supercomputing Lead Ben Chess.

Their next frontier is inference-time compute:

Imagine if you could spend $100 and 10 minutes on an issue and reliably get a great pull request for an entire feature. That’s our goal.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Applications

- Gemini Updates: Google DeepMind announced new features for Gemini Advanced, including customizable "Gems" that act as topic experts and premade Gems for different scenarios. @GoogleDeepMind highlighted the ability to create and chat with these customized versions of Gemini.

- Neural Game Engines: @DrJimFan discussed GameNGen, a neural world model capable of running DOOM purely in a diffusion model. He noted that it's trained on 0.9B frames, which is a significant amount of data, almost 40% of the dataset used to train Stable Diffusion v1.

- LLM Quantization: Rohan Paul shared information about AutoRound, a library from Intel's Neural Compressor team for advanced quantization of LLMs. @rohanpaul_ai noted that it approaches near-lossless compression for popular models and competes with recent quantization methods.

- AI Safety and Alignment: François Chollet @fchollet highlighted concerns about the prevalence of AI-generated content in election-related posts, estimating that a considerable fraction (~80% by volume, ~30% by impressions) aren't from actual people.

AI Infrastructure and Performance

- Inference Speed: @StasBekman suggested that for online inference, 20 tokens per second per user might be sufficient, allowing for serving more concurrent requests with the same hardware.

- Hardware Developments: David Holz @DavidSHolz mentioned forming a new hardware team at Midjourney, indicating potential developments in AI-specific hardware.

- Model Comparisons: Discussions about model performance included comparisons between Gemini and GPT models. @bindureddy noted that Gemini's latest experimental version moved the needle slightly but still trails behind others.

AI Applications and Research

- Multimodal Models: Meta FAIR introduced Transfusion, a model combining next token prediction with diffusion to train a single transformer over mixed-modality sequences. @AIatMeta shared that it scales better than traditional approaches.

- RAG and Agentic AI: Various discussions centered around Retrieval-Augmented Generation (RAG) and agentic AI systems. @omarsar0 shared information about an agentic RAG framework for time series analysis using a multi-agent architecture.

- AI in Legal and Business: Johnson Lambert, an audit firm, reported a 50% boost in audit efficiency using Cohere Command on Amazon Bedrock, as shared by @cohere.

AI Development Practices and Tools

- MLOps and Experiment Tracking: @svpino emphasized the importance of reproducibility, debugging, and monitoring in machine learning systems, recommending tools like Comet for experiment tracking and monitoring.

- Open-Source Tools: Various open-source tools were highlighted, including Kotaemon, a customizable RAG UI for document chatting, as shared by @_akhaliq.

AI Ethics and Regulation

- Voter Fraud Discussions: Yann LeCun @ylecun criticized claims about non-citizens voting, emphasizing the importance of trust in democratic institutions.

- AI Regulation: Discussions around AI regulation, including California's SB1047, were mentioned, highlighting ongoing debates about AI safety and governance.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Innovative Local LLM User Interfaces

- Yet another Local LLM UI, but I promise it's different! (Score: 170, Comments: 50): The post introduces a new Local LLM UI project developed as a PWA (Progressive Web App) with a focus on creating a smooth, familiar user interface. The developer, who was laid off in early 2023, highlights key features including push notifications for offline interactions and cross-device compatibility, with plans to implement a Character.ai-like experience for persona interactions. The project is available on GitHub under the name "suaveui", and the author is seeking feedback, GitHub stars, and potential job opportunities.

- Users praised the UI's sleek design, comparing it to messaging real humans. The developer plans to add more skins inspired by popular messaging apps and implement a one-click-and-run experience with built-in secure tunneling.

- Several users requested easier installation methods, including Docker/docker-compose support and a more detailed tutorial. The developer acknowledged these requests and promised improvements in the coming days.

- Discussion around compatibility revealed plans to support OpenAI-compatible endpoints and various LLM servers. The developer also expressed interest in implementing voice call support, inspired by Character.ai's call feature.

Theme 2. Advancements in Large Language Model Capabilities

- My very simple prompt that has defeated a lot of LLMs. “My cat is named dog, my dog is named tiger, my tiger is named cat. What is unusual about my pets?” (Score: 79, Comments: 89): The post presents a simple prompt designed to challenge Large Language Models (LLMs). The prompt describes a scenario where the author's pets have names typically associated with different animals: a cat named dog, a dog named tiger, and a tiger named cat, asking what is unusual about this arrangement.

- LLaMA 3.1 405b and Gemini 1.5 Pro were noted as the top performers in identifying both the unusual naming scheme and the oddity of owning a tiger as a pet. LLaMA's response was particularly praised for its human-like tone and casual inquiry about tiger ownership.

- The discussion highlighted the varying approaches of different LLMs, with some focusing solely on the circular naming, while others questioned the legality and practicality of owning a tiger. Claude 3.5 was noted for its direct skepticism, stating "you claim to have a pet tiger".

- Users debated the merits of different AI responses, with some preferring more casual tones and others appreciating direct skepticism. The thread also included humorous exchanges about the AI-generated image of a tiger on an air sofa, with comments on its unrealistic aspects.

Theme 3. Challenges in Evaluating AI Intelligence and Reasoning

- Regarding "gotcha" tests to determine LLM intelligence (Score: 112, Comments: 73): The post critiques "gotcha" tests for LLM intelligence, specifically addressing a test involving unusually named pets including a tiger. The author argues that such tests are flawed and misuse LLMs, demonstrating that when properly prompted, even a 9B parameter model can correctly identify owning a tiger as the most unusual aspect. An edited example with a more precise prompt shows that most tested models, including Gemma 2B, correctly identified the unusual aspects, with only a few exceptions like Yi models and Llama 3.0.

- Users criticized the "gotcha" test, noting it's a flawed measure of intelligence that even humans might fail. Many, including the OP, initially missed the point about the tiger being unusual, focusing instead on the pet names.

- The test was compared to other LLM weaknesses, with users sharing links to more challenging puzzles like ZebraLogic. Some argued that LLMs can reason, citing benchmarks and clinical reasoning tests that show performance similar to humans.

- Discussion touched on how LLMs generate responses, with debates about whether they truly reason or just predict. Some users pointed out that asking an LLM to explain its reasoning post-generation can lead to biased or hallucinated explanations.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Research and Techniques

- Google DeepMind's GameNGen: A neural model game engine that enables real-time interaction with complex environments over long trajectories. It can simulate DOOM at over 20 FPS on a single TPU, with next frame prediction achieving a PSNR of 29.4.

- Diffusion Models for Game Generation: The GameNGen model generates DOOM gameplay in real-time as the user plays, demonstrating the potential for AI-generated interactive environments.

AI Model Releases and Improvements

- OpenAI's GPT-4 Iterations: OpenAI has released several versions of GPT-4, including GPT-4, GPT-4o, GPT4o-mini, and GPT4o Turbo. There is speculation about future releases and naming conventions.

AI Impact on Industry and Employment

- Klarna's AI-Driven Job Cuts: The buy now, pay later company Klarna is planning to cut 2,000 jobs as their AI chatbots now perform tasks equivalent to 700 human employees.

Technical Details and Discussions

- GameNGen Architecture: The model uses 65 frames of game resolution as input, with the last frame being generated. It employs a noise addition technique to mitigate incremental corruption in AI-generated video.

- GPT-4 Training Challenges: Discussions mention the significant computational resources required for training large language models, including the need for new power plants to support future generations of models.

AI Discord Recap

A summary of Summaries of Summaries by GPT4O (gpt-4o-2024-05-13)

1. LLM Advancements

- LLMs Struggle with Image Comparison: A member in the LM Studio Discord inquired about LM Studio's support for image formats, but others noted that most LLMs are not trained for image comparison tasks and they "see" images differently. They suggested trying a LLaVA model, which is specifically designed for vision tasks.

- @vision_expert noted, "LLaVA models have been showing promising results in vision tasks, which might be a good fit for your needs."

- Gemini's Capabilities Questioned: A user in the OpenAI Discord criticized Gemini's VR information, pointing out its incorrect labeling of the Meta Quest 3 as "upcoming". The user expressed their preference for ChatGPT, concluding that Gemini is a "bad AI".

- Other users chimed in, agreeing that Gemini needs improvements, particularly in accuracy and up-to-date information.

2. Model Performance Optimization

- Slowing Down Inference Speed: A member in the LM Studio Discord wanted to artificially slow down inference speed for a specific use case. LM Studio doesn't currently support this feature, but the server API can be used to achieve similar results by loading multiple models.

- This workaround sparked a discussion on optimizing server API usage to handle multiple models efficiently.

- RAG & Knowledge Graphs: A Powerful Duo: In the LangChain AI Discord, the user highlighted the benefits of Retrieval-Augmented Generation (RAG) for AI applications, enabling models to access relevant data without retraining. They expressed interest in combining RAG with knowledge graphs, exploring a hybrid approach for their text-to-SQL problem.

- @data_guru suggested integrating knowledge graphs to enhance the semantic understanding and accuracy of the models.

3. Fine-tuning Strategies

- The Prompt Engineering vs Fine-tuning Debate: In the OpenAI Discord, members engaged in a lively discussion about the merits of fine-tuning and prompt engineering for achieving desired writing styles. While some highlighted the effectiveness of prompt-by-example, others stressed the importance of data preparation for fine-tuning.

- @model_tuner emphasized that fine-tuning requires a well-curated dataset to avoid overfitting and ensure generalizability.

- Unsloth: Streamlined Fine-tuning: A member in the Unsloth AI Discord highlighted the benefits of using Unsloth for fine-tuning LLMs like Llama-3, Mistral, Phi-3, and Gemma, claiming it makes the process 2x faster, uses 70% less memory, and maintains accuracy. The member provided a link to the Unsloth tutorial, which includes automatic export of the fine-tuned model to Ollama and automatic

Modelfilecreation.- This sparked interest in the community, with members discussing their experiences with memory optimization and training efficiency.

4. Open Source AI Developments

- Daily Bots Launches Open Source Cloud for AI: In the OpenInterpreter Discord, Daily Bots, a low-latency cloud for voice, vision, and video AI, has been launched, allowing developers to build voice-to-voice interactions with any LLM at latencies as low as 500ms. The platform offers open source SDKs, the ability to mix and match AI models, and runs at scale on Daily's real-time global infrastructure, leveraging the open source projects RTVI and Pipecat.

- This launch was met with excitement, with @developer_joe noting the potential for real-time applications in customer service and beyond.

- Llama 3 Open Source Adoption Surges: In the Latent Space Discord, the open-source Llama model family continues to gain traction, with downloads on Hugging Face surpassing 350 million, a tenfold increase compared to last year. Llama's popularity extends to cloud service providers, with token usage more than doubling since May, and adoption across various industries, including Accenture, AT&T, DoorDash, and many others.

- @data_scientist discussed the implications of this growth, emphasizing the importance of community support and open-source collaboration.

5. AI Community and Events

- Perplexity Discord Celebrates 100K Members: The Perplexity AI Discord server has officially reached 100,000 members! The team expressed gratitude for the community's support and feedback, and excitement for future growth and evolution.

- Members shared their favorite Perplexity AI features and discussed potential improvements and new features they would like to see.

- AI Engineer Meetup & Summit: In the Latent Space Discord, the AI Engineer community is expanding! The first London meetup is scheduled for September, and the second AI Engineer Summit in NYC is planned for December. Those interested in attending the London meetup can find more information here, and potential sponsors for the NYC summit are encouraged to get in touch.

- The announcement generated buzz, with members expressing interest in networking opportunities and collaboration at the events.

PART 1: High level Discord summaries

LM Studio Discord

- LLMs Struggle with Image Comparison: A member inquired about LM Studio's support for image formats, but others noted that most LLMs are not trained for image comparison tasks and they "see" images differently.

- They suggested trying a LLaVA model, which is specifically designed for vision tasks.

- Slowing Down Inference Speed: A member wanted to artificially slow down inference speed for a specific use case.

- LM Studio doesn't currently support this feature, but the server API can be used to achieve similar results by loading multiple models.

- LM Studio's New UI Changes: A few members inquired about the missing Load/Save template feature in LM Studio 0.3.2, which was previously used to save custom settings for different tasks.

- They were informed that this feature is no longer necessary and custom settings can now be changed by holding ALT during model loading or in the My Models view.

- LM Studio's RAG Feature Facing Issues: A member reported an issue with LM Studio's RAG feature, where the chatbot continues to analyze a document even after it's finished being processed, making it difficult to carry on normal conversations.

- Another member reported an issue with downloading the LM Studio Windows installer, but this was resolved by removing a space from the URL.

- PCIE 5.0 x4 Mode for 3090: A user asked if a 3090 could be installed in PCIE 5.0 x4 mode and if that would provide enough bandwidth.

- Another user confirmed that current GPUs barely use PCIE 4.0 and 5.0 controllers run hot, with the first 5.0 SSDs needing active cooling.

OpenAI Discord

- Gemini's Capabilities Questioned: A user criticized Gemini's VR information, pointing out its incorrect labeling of the Meta Quest 3 as "upcoming."

- The user expressed their preference for ChatGPT, concluding that Gemini is a "bad AI."

- The Call for Personalized LLMs: A member proposed a vision for personalized LLMs, outlining desired features like customizable AI personalities, long-term memory, and more human-like conversations.

- They believe these features would enhance the meaningfulness and impact of interactions with AI.

- Tackling Context Window Limitations: Users discussed the limitations of context windows and the high cost of using tokens for long-term memory in LLMs.

- Solutions proposed included utilizing RAG to retrieve relevant history, optimizing token usage, and developing custom tools for memory management.

- The Prompt Engineering vs Fine-tuning Debate: Members engaged in a lively discussion about the merits of fine-tuning and prompt engineering for achieving desired writing styles.

- While some highlighted the effectiveness of prompt-by-example, others stressed the importance of data preparation for fine-tuning.

- OpenAI API: Cost and Alternatives: Conversations centered around the high cost of utilizing the OpenAI API, particularly for projects involving long-term memory and complex characters.

- Users explored strategies for optimization and considered alternative models like Gemini and Claude.

Stability.ai (Stable Diffusion) Discord

- SDXL Backgrounds Still a Challenge: A user expressed difficulty in creating good backgrounds with SDXL, often resulting in unknown things.

- The user is seeking advice on how to overcome this challenge and produce more realistic and coherent backgrounds.

- Lora Creation: Close-ups vs. Full Faces: A user asked if creating a Lora requires just a close-up of the desired detail, like a nose, or if the whole face needs to be included.

- The user is looking for guidance on the best practices for Lora creation, specifically regarding the necessary extent of the training data.

- Can ComfyUI Handle Multiple Characters?: A user asked if ComfyUI can help create images with two different characters without mixing their traits.

- The user is seeking to understand if ComfyUI offers features that enable the generation of images with multiple distinct characters, avoiding unwanted trait blending.

- Regularization Explained: AI Toolkit: A user asked how regularization works in AI Toolkit, after watching a video where the creator used base images without regularization.

- The user is requesting clarification on the purpose and implementation of regularization within the AI Toolkit context.

- SDXL on a 2017 Mid-Range Laptop: Feasibility?: A user inquired about the feasibility of running SDXL on a 2017 mid-range Acer Aspire E Series laptop.

- The user is seeking information on whether their older laptop's hardware capabilities are sufficient for running SDXL effectively.

Unsloth AI (Daniel Han) Discord

- Unsloth: Speed & Memory Gains: Unsloth uses 4-bit quantization for much faster training speeds and lower VRAM usage compared to OpenRLHF.

- While Unsloth only supports 4-bit quantized models currently for finetuning, they are working on adding support for 8-bit and unquantized models, with no tradeoff in performance or replicability.

- Finetuning with Unsloth on AWS: Unsloth doesn't have a dedicated guide for finetuning on AWS.

- However, some users are using Sagemaker for finetuning models on AWS, and there are numerous YouTube videos and Google Colab examples available.

- Survey Seeks Insights on ML Model Deployment: A survey was posted asking ML professionals about their experiences with model deployment, specifically focusing on common problems and solutions.

- The survey aims to identify the top three issues encountered when deploying machine learning models, providing valuable insights into the practical hurdles faced by professionals in this field.

- Gemma2:2b Fine-tuning for Function Calling: A user seeks guidance on fine-tuning the Gemma2:2b model from Ollama for function calling, using the XLM Function Calling 60k dataset and the provided notebook.

- They are unsure about formatting the dataset into instruction, input, and output format, particularly regarding the 'tool use' column.

- Unsloth: Streamlined Fine-tuning: A member highlights the benefits of using Unsloth for fine-tuning LLMs like Llama-3, Mistral, Phi-3, and Gemma, claiming it makes the process 2x faster, uses 70% less memory, and maintains accuracy.

- The member provides a link to the Unsloth tutorial, which includes automatic export of the fine-tuned model to Ollama and automatic

Modelfilecreation.

- The member provides a link to the Unsloth tutorial, which includes automatic export of the fine-tuned model to Ollama and automatic

Perplexity AI Discord

- Perplexity Discord Celebrates 100K Members: The Perplexity AI Discord server has officially reached 100,000 members! The team expressed gratitude for the community's support and feedback, and excitement for future growth and evolution.

- Perplexity Pro Membership Issues: Several users reported problems with their Perplexity Pro memberships, including the disappearing of magenta membership and free LinkedIn Premium offer, as well as issues with the "Ask Follow-up" feature.

- Others also experienced issues with the "Ask Follow-up" feature, where the option to "Ask Follow-up" when highlighting a line of text in perplexity responses disappeared.

- Perplexity AI Accuracy Concerns: Users expressed concerns about Perplexity AI's tendency to present assumptions as fact, often getting things wrong.

- They shared examples from threads where Perplexity AI incorrectly provided information about government forms and scraping Google, showcasing the need for more robust fact-checking and human review in its responses.

- Navigating the Maze of AI Models: Users expressed confusion over selecting the best AI model, debating the merits of Claude 3 Opus, Claude 3.5 Sonnet, and GPT-4o.

- Several users noted that certain models, such as Claude 3 Opus, are limited to 50 questions, and users are unsure if Claude 3.5 Sonnet is a better choice, despite its limitations.

- Perplexity AI Usability Challenges: Users highlighted issues with Perplexity AI's platform usability, including difficulty in accessing saved threads and problems with the prompt section.

- One user pointed out that the Chrome extension description is inaccurate, falsely stating that Perplexity Pro uses GPT-4 and Claude 2, potentially misrepresenting the platform's capabilities.

Cohere Discord

- LLMs Tokenize, Not Letters: A member reminded everyone that LLMs don't see letters, they see tokens - a big list of words.

- They used the example of reading Kanji in Japanese, which is more similar to how LLMs work than reading letters in English.

- Claude's Sycophancy Debate: One member asked whether LLMs have a tendency to be sycophantic, particularly when it comes to reasoning.

- Another member suggested adding system messages to help with this, but said even then, it's more of a parlor trick than a useful production tool.

- MMLu Not Great for Real-World Use: One member noted that MMLu isn't a good benchmark for building useful LLMs because it's not strongly correlated with real-world use cases.

- They pointed to examples of questions on Freud's outdated theories on sexuality, implying the benchmark isn't reflective of what users need from LLMs.

- Cohere For AI Scholars Program Open for Applications: Cohere For AI is excited to open applications for the third cohort of its Scholars Program, designed to help change where, how, and by whom research is done.

- The program is designed to help researchers and like minded collaborators and you can find more information on the Cohere For AI Scholars Program page.

- Internal Tool Soon to Be Publicly Available: A member shared that the tool is currently hosted on the company's admin panel, but a publicly hosted version will be available soon.

- The tool is currently hosted on the company's admin panel, but a publicly hosted version is expected soon.

LlamaIndex Discord

- LlamaIndex Workflows Tutorial Now Available: A comprehensive tutorial on LlamaIndex Workflows is now available in the LlamaIndex docs, covering a range of topics, including getting started with Workflows, loops and branches, maintaining state, and concurrent flows.

- The tutorial can be found here.

- GymNation Leverages LlamaIndex to Boost Sales: GymNation partnered with LlamaIndex to improve member experience and drive real business outcomes, resulting in an impressive 20% increase in digital lead to sales conversion and an 87% conversation rate with digital leads.

- Function Calling LLMs for Streaming Output: A member is seeking an example of building an agent using function calling LLMs where they stream the final output, avoiding latency hits caused by passing the full message to a final step.

- They are building the agent from mostly scratch using Workflows and looking for a solution.

- Workflows: A Complex Logic Example: A member shared a workflow example that utilizes an async generator to detect tool calls and stream the output.

- They also discussed the possibility of using a "Final Answer" tool that limits output tokens and passes the final message to a final step if called.

- Optimizing Image + Text Retrieval: A member inquired about the best approach for combining image and text retrieval, considering using CLIP Embeddings for both, but are concerned about CLIP's semantic optimization compared to dedicated text embedding models like txt-embeddings-ada-002.

Latent Space Discord

- Agency Raises $2.6 Million: Agency, a company building AI agents, announced a $2.6 million fundraise to develop "generationally important technology" and bring their AI agents to life.

- The company's vision involves building a future where AI agents are ubiquitous and integral to our lives, as highlighted on their website agen.cy.

- AI Engineer Meetup & Summit: The AI Engineer community is expanding! The first London meetup is scheduled for September, and the second AI Engineer Summit in NYC is planned for December.

- Those interested in attending the London meetup can find more information here, and potential sponsors for the NYC summit are encouraged to get in touch.

- AI for Individual Use: Nicholas Carlini, a research scientist at DeepMind, argues that the focus of AI should shift from grand promises of revolution to its individual benefits.

- His blog post, "How I Use AI" (https://nicholas.carlini.com/writing/2024/how-i-use-ai.html), details his practical applications of AI tools, resonating with many readers, especially on Hacker News (https://news.ycombinator.com/item?id=41150317).

- Midjourney Ventures into Hardware: Midjourney, the popular AI image generation platform, is officially entering the hardware space.

- Individuals interested in joining their new team in San Francisco can reach out to hardware@midjourney.com.

- Llama 3 Open Source Adoption Surges: The open-source Llama model family continues to gain traction, with downloads on Hugging Face surpassing 350 million, a tenfold increase compared to last year.

- Llama's popularity extends to cloud service providers, with token usage more than doubling since May, and adoption across various industries, including Accenture, AT&T, DoorDash, and many others.

OpenInterpreter Discord

- OpenInterpreter Development Continues: OpenInterpreter development is still active, with recent commits to the main branch of the OpenInterpreter GitHub repo.

- This means that the project is still being worked on and improved.

- Auto-run Safety Concerns: Users are cautioned to be aware of the risks of using the

auto_runfeature in OpenInterpreter.- It is important to carefully monitor output when using this feature to prevent any potential issues.

- Upcoming House Party: A House Party has been planned for next week at an earlier time to encourage more participation.

- This event will be a great opportunity to connect with other members of the community and discuss all things OpenInterpreter.

- Terminal App Recommendations: A user is looking for a recommended terminal app for KDE as Konsole, their current terminal, bleeds the screen when scrolling GPT-4 text.

- This issue could be due to the terminal's inability to handle the large amount of text output from GPT-4.

- Daily Bots Launches Open Source Cloud for AI: Daily Bots, a low-latency cloud for voice, vision, and video AI, has been launched, allowing developers to build voice-to-voice interactions with any LLM at latencies as low as 500ms.

- The platform offers open source SDKs, the ability to mix and match AI models, and runs at scale on Daily's real-time global infrastructure, leveraging the open source projects RTVI and Pipecat.

OpenAccess AI Collective (axolotl) Discord

- Macbook Pro Training Speed Comparison: A user successfully trained large models on a 128GB Macbook Pro, but it was significantly slower than training on an RTX 3090, with training speed roughly halved.

- They are seeking more cost-effective training solutions and considering undervolted 3090s or AMD cards as alternatives to expensive H100s.

- Renting Hardware for Training: A user recommends renting hardware before committing to a purchase, especially for beginners.

- They suggest spending $30 on renting different hardware and experimenting with training models to determine the optimal configuration.

- Model Size and Training Speed: The user is exploring the relationship between model size and training speed.

- They are specifically interested in how training time changes when comparing models like Nemotron-4-340b-instruct with Llama 405.

- Fine-Tuning LLMs for Dialogue: A member has good models for long dialogue, but the datasets used for training are all of the 'ShareGPT' type.

- They want to personalize data processing, particularly streamlining content enclosed by asterisks (), for example, she smile to smiling*.

- Streamlining Content via Instruction: A member asks if a simple instruction can be used to control a fine-tuned model to streamline and rewrite data.

- They inquire about LlamaForCausalLM's capabilities and if there are better alternatives, with another member suggesting simply passing prompts with a system prompt to Llama.

LangChain AI Discord

- Hybrid Search with SQLDatabaseChain & PGVector: A user is using PostgreSQL with

pgvectorfor embedding storage andSQLDatabaseChainto translate queries into SQL, aiming to modifySQLDatabaseChainto search vectors for faster responses.- This approach could potentially improve search speed and provide more efficient results compared to traditional SQL-based queries.

- RAG & Knowledge Graphs: A Powerful Duo: The user highlights the benefits of Retrieval-Augmented Generation (RAG) for AI applications, enabling models to access relevant data without retraining.

- They express interest in combining RAG with knowledge graphs, exploring a hybrid approach for their text-to-SQL problem, potentially improving model understanding and accuracy.

- Crafting Adaptable Prompts for Multi-Database Queries: The user faces the challenge of creating optimal prompts for different SQL databases due to varying schema requirements, leading to performance issues and redundant templates.

- They seek solutions for creating adaptable prompts that cater to multiple databases without compromising performance, potentially improving efficiency and reducing development time.

- Troubleshooting OllamaLLM Connection Refused in Docker: A user encountered a connection refused error when attempting to invoke

OllamaLLMwithin a Docker container, despite successful communication with the Ollama container.- A workaround using the

langchain_community.llms.ollamapackage was suggested, potentially resolving the issue and highlighting a potential bug in thelangchain_ollamapackage.

- A workaround using the

- Exploring Streaming in LangChain v2.0 for Function Calling: The user inquired about the possibility of using LangChain function calling with streaming in version 2.0.

- Although no direct answer was provided, it appears this feature is not currently available, suggesting a potential area for future development in LangChain.

Torchtune Discord

- Torchtune Needs Your Help: The Torchtune team is looking for community help to contribute to their repository by completing bite-sized tasks, with issues labeled "community help wanted" available on their GitHub issues page.

- They are also available to assist contributors via Discord.

- QLoRA Memory Troubles: A member reported encountering out-of-memory errors while attempting to train QLoRA with Llama 3.1 70B using 4x A6000s.

- Another member questioned if this is expected behavior, suggesting it should be sufficient for QLoRA and advising to open a GitHub issue with a reproducible example to troubleshoot.

- Torchtune + PyTorch 2.4 Compatibility Confirmed: One member inquired about the compatibility of Torchtune with PyTorch 2.4 and received confirmation that it should work.

- Fusion Models RFC Debated: A member questioned whether handling decoder-only max_seq_len within the

setup_cachesfunction might cause issues, particularly forCrossAttentionLayerandFusionLayer.- Another member agreed and proposed exploring a utility to handle it effectively.

- Flamingo Model's Unique Inference: The conversation explored the Flamingo model's use of mixed sequence lengths, particularly for its fusion layers, necessitating a dedicated

setup_cachesapproach.- The need for accurate cache position tracking was acknowledged, highlighting a potential overlap between the Flamingo PR and the Batched Inference PR, which included updating

setup_caches.

- The need for accurate cache position tracking was acknowledged, highlighting a potential overlap between the Flamingo PR and the Batched Inference PR, which included updating

DSPy Discord

- LinkedIn Job Applier Automates Applications: A member shared a GitHub repo that utilizes Agent Zero to create new pipelines, automatically applying for job offers on LinkedIn.

- The repo is designed to use AIHawk to personalize job applications, making the process more efficient.

- Generative Reward Models (GenRM) Paper Explored: A new paper proposes Generative Reward Models (GenRM), which leverage the next-token prediction objective to train verifiers, enabling seamless integration with instruction tuning, chain-of-thought reasoning, and utilizing additional inference-time compute via majority voting for improved verification.

- The paper argues that GenRM can overcome limitations of traditional discriminative verifiers that don't utilize the text generation capabilities of pretrained LLMs, see the paper for further details.

- DSPY Optimization Challenges: One member struggled with the complexity of using DSPY for its intended purpose: abstracting away models, prompts, and settings.

- They shared a link to a YouTube video demonstrating their struggle and requested resources to understand DSPY's optimization techniques.

- Bootstrapping Synthetic Data with Human Responses: A member proposed a novel approach to bootstrapping synthetic data: looping through various models and prompts to minimize a KL divergence metric using hand-written human responses.

- They sought feedback on the viability of this method as a means of generating synthetic data that aligns closely with human-generated responses.

- DSPY Optimizer Impact on Example Order: A user inquired about which DSPY optimizers change the order of examples/shots and which ones don't.

- The user seems interested in the impact of different optimizer strategies on the order of training data, and how this may affect model performance.

AI21 Labs (Jamba) Discord

- Jamba 1.5 Dependency Issue: PyTorch 23.12-py3: A user reported dependency issues while attempting to train Jamba 1.5 using pytorch:23.12-py3.

- Jamba 1.5 shares the same architecture and base model as Jamba Instruct (1.0).

- Transformers 4.44.0 and 4.44.1 Bug: Transformers versions 4.44.0 and 4.44.1 were discovered to contain a bug that inhibits the ability to execute Jamba architecture.

- This bug is documented on the Hugging Face model card for Jamba 1.5-Mini: https://huggingface.co/ai21labs/AI21-Jamba-1.5-Mini.

- Transformers 4.40.0 Resolves Dependency Issues: Utilizing transformers 4.40.0 successfully resolved the dependency issues, enabling successful training of Jamba 1.5.

- This version should be used until the bug is fully resolved.

- Transformers 4.44.2 Release Notes: The release notes for transformers 4.44.2 mention a fix for Jamba cache failures, but it was confirmed that this fix is NOT related to the bug affecting Jamba architecture.

- Users should continue using transformers 4.40.0 until the Jamba bug is addressed.

tinygrad (George Hotz) Discord

- Tinygrad Optimized for Static Scheduling: Tinygrad is highly optimized for statically scheduled operations, achieving significant performance gains for tasks that do not involve dynamic sparsity or weight selection.

- The focus on static scheduling allows Tinygrad to leverage compiler optimizations and perform efficient memory management.

- Tinygrad's ReduceOp Merging Behavior: A user inquired about the rationale behind numerous

# max one reduceop per kernelstatements within Tinygrad'sschedule.pyfile, specifically one that sometimes triggers early realization of reductions, hindering their merging in the_recurse_reduceopsfunction.- A contributor explained that this issue manifests when chaining reductions, like in

Tensor.randn(5,5).realize().sum(-1).sum(), where the reductions aren't merged into a single sum, as expected, and a pull request (PR #6302) addressed this issue.

- A contributor explained that this issue manifests when chaining reductions, like in

- FUSE_CONV_BW=1: The Future of Convolution Backwards: A contributor explained that the

FUSE_CONV_BW=1flag in Tinygrad currently addresses the reduction merging issue by enabling efficient fusion of convolutions in the backward pass.- They also noted that this flag will eventually become the default setting once performance optimizations are achieved across all scenarios.

- Tinygrad Documentation: Your Starting Point: A user asked for guidance on beginning their journey with Tinygrad.

- Multiple contributors recommended starting with the official Tinygrad documentation, which is considered a valuable resource for beginners.

- Limitations in Dynamic Sparse Operations: While Tinygrad shines with static scheduling, it might encounter performance limitations when handling dynamic sparsity or weight selection.

- These types of operations require flexibility in memory management and computation flow, which Tinygrad currently doesn't fully support.

Gorilla LLM (Berkeley Function Calling) Discord

- Groq is Missing From the Leaderboard: A member asked why Groq is not on the leaderboard (or changelog) for Gorilla LLM.

- The response explained that Groq has not been added yet and the team is waiting for their PRs, which are expected to be raised next week.

- Groq PRs Expected Next Week: A member asked why Groq is not on the leaderboard (or changelog) for Gorilla LLM.

- The response explained that Groq has not been added yet and the team is waiting for their PRs, which are expected to be raised next week.

LAION Discord

- CLIP-AGIQA Boosts AIGI Quality Assessment: A new paper proposes CLIP-AGIQA, a method using CLIP to improve the performance of AI-Generated Image (AIGI) quality assessment.

- The paper argues that current models struggle with the diverse and ever-increasing categories of generated images, and CLIP's ability to assess natural image quality can be extended to AIGIs.

- AIGIs Need Robust Quality Evaluation: The widespread use of AIGIs in daily life highlights the need for robust image quality assessment techniques.

- Despite some existing models, the paper emphasizes the need for more advanced methods to evaluate the quality of these diverse generated images.

- CLIP Shows Promise in AIGI Quality Assessment: CLIP, a visual language model, has shown significant potential in evaluating the quality of natural images.

- The paper explores applying CLIP to the quality assessment of generated images, believing it can be effective in this domain as well.

Alignment Lab AI Discord

- Nous Hermes 2.5 Performance: A recent post on X discussed performance improvements with Hermes 2.5, but no specific metrics were given.

- The post linked to a GitHub repository, Hermes 2.5 but no further details were provided.

- No further details provided: This was a single post on X.

- There were no further details or discussion points.

Mozilla AI Discord

- Common Voice Seeks Contributors: The Common Voice project is an open-source platform for collecting speech data with the goal of building a multilingual speech clip dataset that is both cost and copyright-free.

- This project aims to make speech technologies work for all users, regardless of their language or accent.

- Join the Common Voice Community: You can join the Common Voice community on the Common Voice Matrix channel or in the forums.

- If you need assistance, you can email the team at commonvoice@mozilla.com.

- Contribute to the Common Voice Project: Those interested in contributing can find the guidelines here.

- Help is needed in raising issues where the documentation looks outdated, confusing, or incomplete.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!