[AINews] Claude 3.7 Sonnet

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Thinking is all you need.

AI News for 2/24/2025-2/25/2025. We checked 7 subreddits, 433 Twitters and 29 Discords (220 channels, and 5949 messages) for you. Estimated reading time saved (at 200wpm): 503 minutes. You can now tag @smol_ai for AINews discussions!

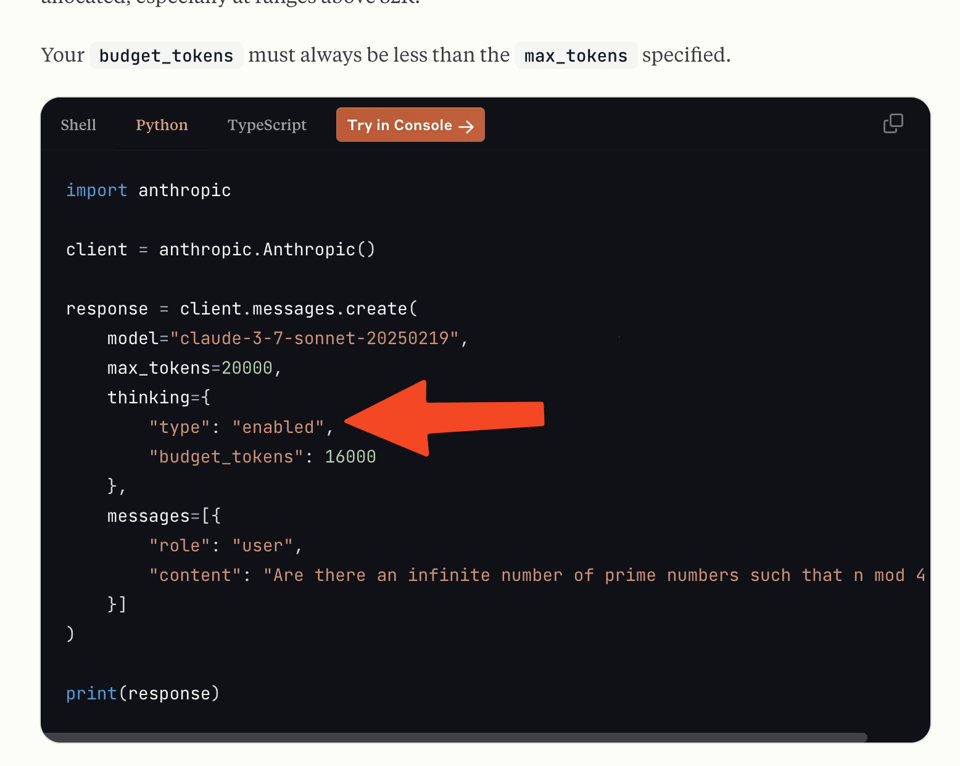

Taking a little leapfrog from the GPT5 roadmap, Claude 3.7 Sonnet launched today (don't ask about the name - note that there are TWO blogposts and documentation and cookbooks and prompting guides to read, alongside Claude Code which is in limited preview), after numerous leaks from private previews, as one model with an optional thinking mode, with an explicit token budget.

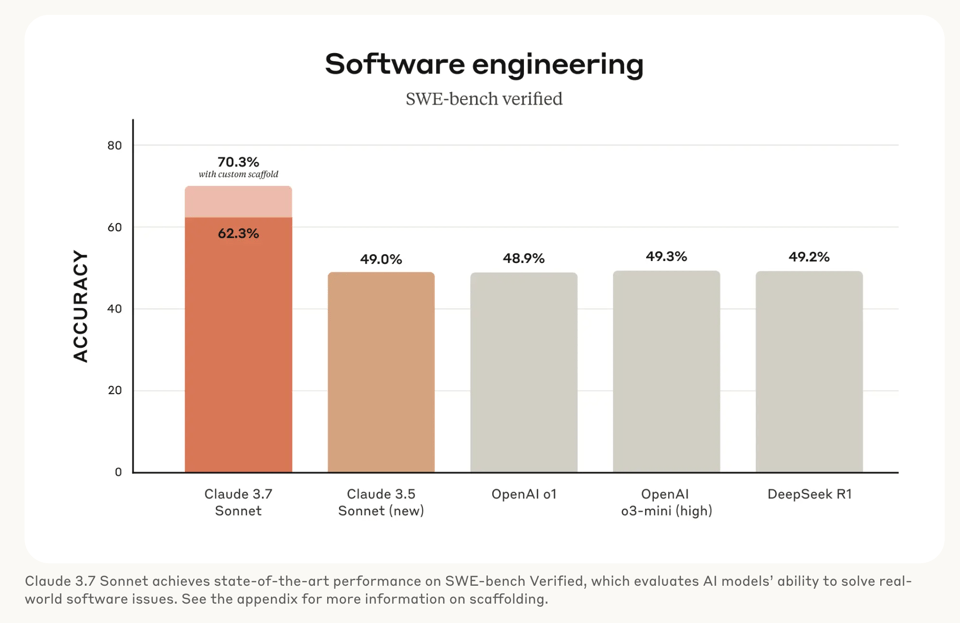

3.7 Sonnet does well on many coding benchmarks like SWE-Bench Verified and aider and Cognition's junior-dev eval, both with and without (MOSTLY uncensored!) thinking.

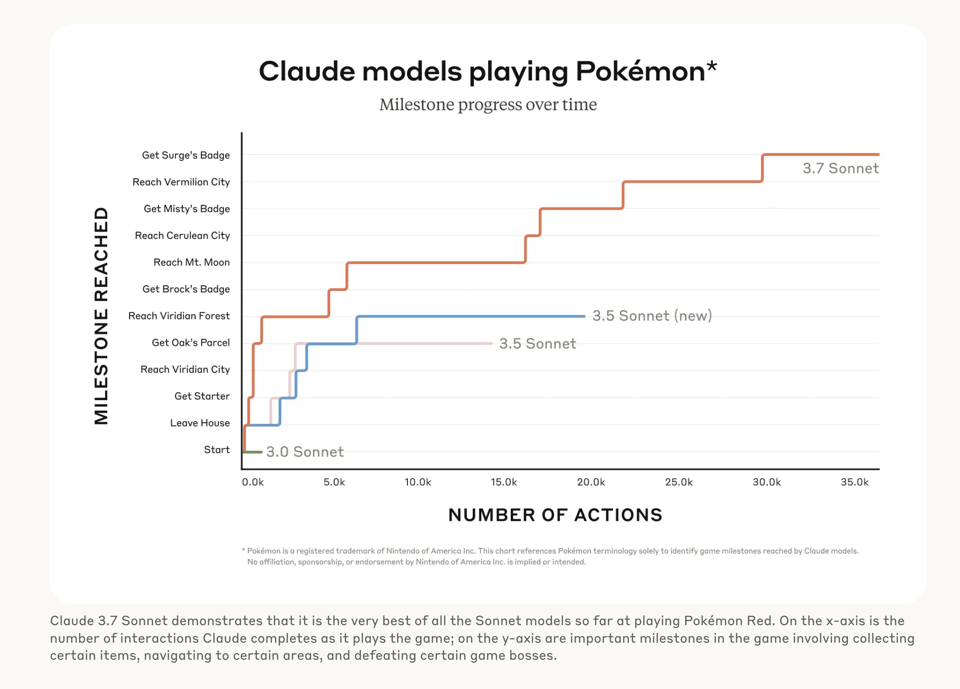

However the most popular new benchmark, covered in the second blogpost on extended thinking, is Pokebench which mirrors the Voyager paper as an agentic benchmark:

The feature set and documentation at launch is pretty impressive. Among the notable things likely to get buried by the headlines:

- the new system prompt

- redacted thinking encoding/decoding

- streaming thinking

- 128k OUTPUT token capability (in beta)

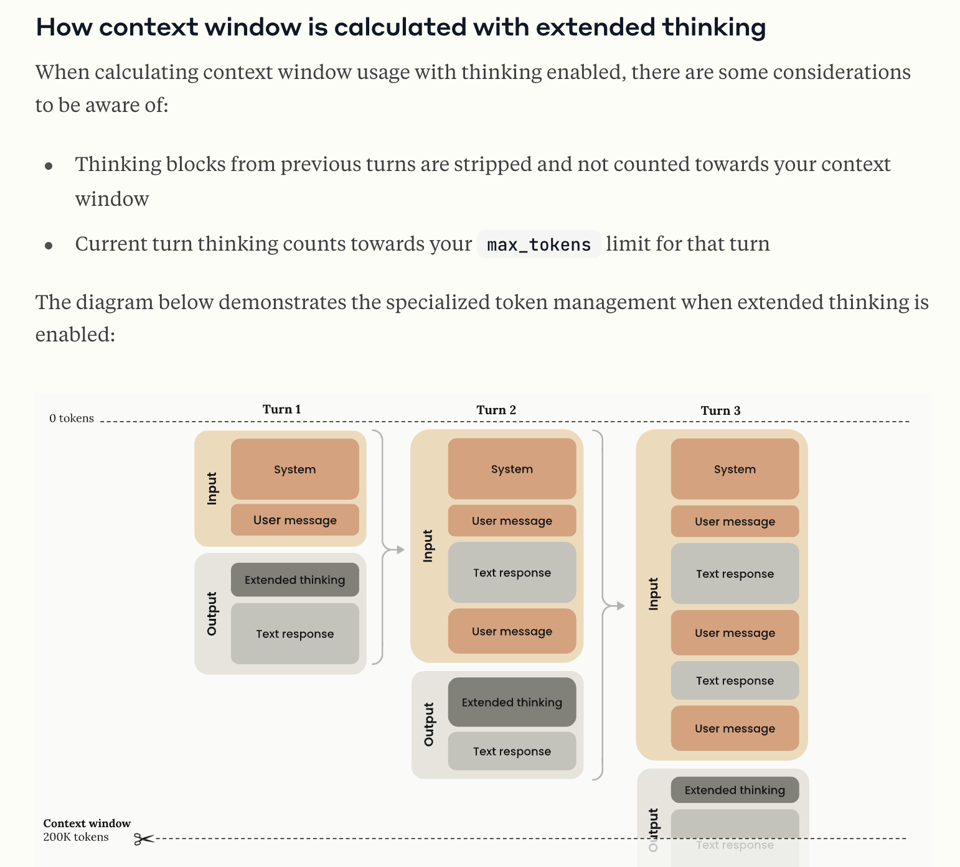

- context window and prompt caching skips previous turn thinking blocks)

- tool use

- agreeing with Grok 3 that parallel test time compute is useful and worth studying

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

New Model Releases and Updates (Claude 3.7 Sonnet, Grok 3)

- Claude 3.7 Sonnet released: @alexalbert__ announced the release of Claude 3.7 Sonnet, highlighting it as their most intelligent model to date and the first generally available hybrid reasoning model. The model features two thinking modes: near-instant responses and extended, step-by-step thinking. @AnthropicAI also officially introduced Claude 3.7 Sonnet, emphasizing its hybrid reasoning capabilities and the launch of Claude Code, an agentic coding tool. @skirano described Claude Code as the first coding tool from Anthropic, highlighting its synergy with Claude 3.7 Sonnet for coding tasks. @arankomatsuzaki shared the Claude 3.7 Sonnet System Card. @stevenheidel congratulated the Anthropic team on the launch of 3.7 Sonnet, expressing excitement to try it.

- Claude 3.7 Sonnet features and capabilities: @alexalbert__ detailed that 3.7 Sonnet is optimized for real-world tasks rather than just competitions, offering a major upgrade in standard mode and even bigger gains in extended thinking mode for complex tasks. @AnthropicAI explained Claude’s extended thinking mode, highlighting its boost in intelligence for difficult problems and the ability for developers to set a "thinking budget". @alexalbert__ mentioned that users can control Claude's thinking budget for balancing speed and quality, with a new beta header allowing up to 128k tokens for thinking/output. @alexalbert__ also stated that pricing remains the same as previous Sonnet models: $3 per million input tokens/$15 per million output tokens. @AnthropicAI stated that refusals are reduced by 45% compared to its predecessor. @_akhaliq tested Claude 3.7 Sonnet with a coding prompt and shared the results. @qtnx_ shared an initial vibe check of Sonnet 3.7, noting it's "growing on me". @Teknium1 noted that Claude 3.7 Sonnet seems to show narrowed improvements, excelling in SWE-bench, and questioned if this bodes well for AGI, suggesting benchmarks may not tell the full story.

- Claude Code - Agentic Coding Tool: @alexalbert__ announced the research preview of Claude Code, an agentic coding tool for Claude-powered code assistance, file operations, and task execution directly from the terminal. @AnthropicAI emphasized Claude Code's efficiency, claiming it completed tasks in a single pass that would normally take 45+ minutes of manual work in early testing. @alexalbert__ explained that Claude Code also functions as a Model Context Protocol (MCP) client, allowing users to extend its functionality with servers like Sentry, GitHub, or web search. @nearcyan highlighted Claude Code's terminal integration, stating the agent and system become one. @catherineols shared quick tips for coding with Claude Code, recommending working from a clean commit for easy resets. @pirroh noted Replit's anticipation for Replit Agent announcement, suggesting collaboration using a preview of Sonnet 3.7. @casper_hansen_ stated Claude Code has 70% performance on SWE Bench and no steep learning curve unlike Aider.

- Grok 3 and Voice Mode: @goodside declared Grok 3 as impressive and among the best, particularly for tasks requiring refusal-free responses, emphasizing its trust in the prompter. @Teknium1 felt Grok provides maximum value, unlike OpenAI's platitudes. @goodside reported on Grok 3 Voice Mode exhibiting unexpected behavior, including a 30-second scream and insults after repeated requests to yell louder. @Teknium1 shared a demo of Grok 3 voice in Romantic Mode. @_akhaliq shared a demo of grok 3 building an endless runner game and a 3D game.

Research and Papers

- DeepSeek's FlashMLA: @deepseek_ai announced FlashMLA, an efficient MLA decoding kernel for Hopper GPUs, optimized for variable-length sequences and now in production, as part of their Open Source Week. @danielhanchen discussed DeepSeek's first OSS package release, highlighting the optimized multi latent attention CUDA kernels and provided a link to his DeepSeek V3 analysis tweet. @tri_dao praised DeepSeek for building on FlashAttention 3 and noted MLA is enabled in FA3. @reach_vb highlighted DeepSeek open sourced FlashMLA with performance details. @_philschmid explained how Multi-head Latent Attention (MLA) speeds up LLM inference and reduces memory needs, referencing DeepSeek's MLA implementation.

- Reasoning and Planning in AI Agents: @TheTuringPost shared recent breakthroughs in AI reasoning including Chain-of-Thought (CoT) prompting, Self-reflection, Few-shot learning, and Neuro-symbolic approaches, and linked to a free article on Hugging Face. @omarsar0 summarized LightThinker, a paper proposing a novel approach to dynamically compress reasoning steps in LLMs to improve efficiency without losing accuracy.

- Diffusion Models and Sampling: @cloneofsimo noted that in diffusion sampling, 99.8% of the latent trajectory can be explained with the first two principal components, suggesting the trajectory is largely two-dimensional. @iScienceLuvr shared a new NVIDIA work on One-step Diffusion Models with f-Divergence Distribution Matching, achieving state-of-the-art one-step generation.

- Synthetic Data and Scaling Laws: @iScienceLuvr highlighted a paper on Improving the Scaling Laws of Synthetic Data with Deliberate Practice, showing that deliberate practice can improve validation accuracy and reduce compute costs. @jd_pressman advocated for using techniques from the RetroInstruct Guide To Synthetic Data to enhance the English corpus and for creating reward models from existing corpuses like economic price data.

- Other Research Papers: @TheAITimeline listed last week's top AI/ML research papers, including Native Sparse Attention, SWE-Lancer, Qwen2.5-VL Technical Report, Mixture of Block Attention, Linear Diffusion Networks, and SigLIP 2, providing an overview and authors' explanations. @_akhaliq shared SIFT: Grounding LLM Reasoning in Contexts via Stickers. @_akhaliq shared Think Inside the JSON: Reinforcement Strategy for Strict LLM Schema Adherence. @_akhaliq shared InterFeedback: Unveiling Interactive Intelligence of Large Multimodal Models via Human Feedback. @_akhaliq shared The Relationship Between Reasoning and Performance in Large Language Models: o3 (mini) Thinks Harder, Not Longer. @arankomatsuzaki shared Bengio et. al.'s paper on Superintelligent Agents and Catastrophic Risks. @iScienceLuvr discussed GneissWeb, a large dataset of 10T high quality tokens for LLM training.

Coding and Development Tools

- Replit Agent and Mobile App Upgrades: @DeepLearningAI reported on Replit upgrading its agent-driven mobile app to generate and deploy iOS and Android applications, now powered by Replit Agent and models like Claude 3.5 Sonnet and GPT-4o. @cloneofsimo expressed amazement at discovering Replit.

- LangChain and LangGraph: @LangChainAI announced Claude 3.7 Sonnet support in LangChain Python, with JS support coming soon. @LangChainAI promoted a webinar exploring LangGraph.js + MongoDB for AI agents. @LangChainAI announced a LangChain in Atlanta event with CEO Harrison Chase. @hwchase17 mentioned that tools like LangSmith facilitate quick evaluation of new models.

- Ollama Updates: @ollama announced an update to Ollama's JavaScript library to v0.5.14 with improved header configuration and browser compatibility fixes.

- DSPy Paradigm: @lateinteraction argued for a higher-level ML/programming paradigm, emphasizing decoupling system specification from ML mechanics using tools like DSPy. @lateinteraction highlighted DSPy as a canonical example for decoupling system specification from ML paradigms.

AI Model Performance and Benchmarks

- SWE-bench Performance: @scaling01 stated they are on track for a 90% SWE-bench verified prediction. @OfirPress congratulated on strong SWE-bench results. @Teknium1 suggested SWE-bench usefulness might be limited to specific tools like Devin.

- Model Ranking and Evaluation: @goodside mentioned o1 pro as a top publicly released model, while preferring Claude 3.6 for prose and code. @abacaj suggested if Sonnet got a knowledge update, it could be SOTA. @andrew_n_carr praised Riley's intuition on model rankings. @MillionInt mentioned a "new high taste eval".

- OmniAI OCR Benchmark: @_philschmid discussed the OmniAI OCR Benchmark, showing Multimodal LLMs are better and cheaper than traditional OCR, with Gemini 2.0 Flash offering the best price-performance.

AI Industry and Business

- Perplexity AI's Comet Browser: @AravSrinivas announced Perplexity will launch Comet, a new agentic browser soon. @perplexity_ai officially announced Comet: A Browser for Agentic Search by Perplexity. @AravSrinivas asked for user feedback on desired features for Comet, beyond standard AI features. @AravSrinivas highlighted the engineering undertaking of Comet and invited people to join them.

- Voyage AI Acquisition by MongoDB: @saranormous congratulated VoyageAI team on their acquisition by MongoDB, noting the importance of embedding and re-ranking models for enterprise AI search.

- AI Hubs and Global Expansion: @osanseviero noted Zurich is quickly becoming a super-dense ML Hub, with Anthropic, OpenAI, and Microsoft opening offices, and Meta growing its Llama team there. @dylan522p quoted Huawei CEO on China's semiconductor progress and ambition.

- AI in Government and Public Sector: @ClementDelangue praised the Polish Government for becoming AI builders and releasing open weights on the Hub.

Memes and Humor

- Anthropic Naming Conventions: @aidan_clark expressed holding space for the Claude naming team. @scaling01 joked about Claude 4 being delayed until 2049. @fabianstelzer posted a meme about Anthropic naming products. @typedfemale joked about predicting Sonnet 3.78 as the next release. @jachiam0 jokingly congratulated Anthropic on competing for the least predictable version increment.

- Grok and Elon Musk: @Teknium1 joked about Grok's organic reaction to being called a retard and its awareness of X's peculiarities. @aidan_clark jokingly threatened sabotage against heavy water production in Norway in response to Grok 3.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. FlashMLA's Hopper GPU Optimization: A Game Changer

- FlashMLA - Day 1 of OpenSourceWeek (Score: 946, Comments: 82): FlashMLA kicks off OpenSourceWeek with an announcement of its optimized MLA decoding kernel for Hopper GPUs, featuring BF16 support and a paged KV cache with a block size of 64. Performance metrics include 3000 GB/s memory-bound and 580 TFLOPS compute-bound on H800, with further details available on GitHub.

- DeepSeek's Access to GPUs: There is skepticism and debate about claims regarding DeepSeek's access to NVIDIA H100 GPUs for training, with some users dismissing it as fake news. The H800 GPUs are confirmed to be legally available for sale to China, and the confusion seems to stem from miscommunication or misinformation.

- Technical Details and Optimization: Discussions focus on the optimization of the MLA decoding kernel for Hopper GPUs, with specific attention to CUDA file structures and the use of BFloat16. There are queries about why certain optimizations are specific to Hopper and the implications for adding support for other architectures.

- Open Source and Community Impact: The release of FlashMLA is praised for its contribution to the open-source community, with users expressing excitement about potential future releases and the impact on creating efficient AI models. There is a positive sentiment towards the innovation and sharing spirit, likening it to the openness seen with Llama.

Theme 2. Claude 3.7 Sonnet Released: Exploring Hybrid AI Reasoning Model

- Claude Sonnet 3.7 soon (Score: 359, Comments: 107): Claude Sonnet 3.7 is mentioned alongside previous versions like Claude V1 and Claude V2, indicating a progression in software development. The image suggests a focus on programming code and configuration settings, highlighting the technical attributes and parameters of the new Claude Sonnet 3.7 model.

- Version Confusion and Naming: Many users express frustration over Claude Sonnet's versioning, particularly the jump from 3.5 to 3.7, with some suggesting that 3.7 is an attempt to fix confusion from previous versions like 3.5 v2 being labeled as 3.6. One user explains that the 3 series represents a $10m scale run, while the 4 series is expected to be a $100m investment, explaining the delay in releasing Claude 4.

- Platform and Use Cases: Claude Sonnet 3.7 is expected to launch on AWS Bedrock with an announcement likely at an AWS event on February 26th. The model is aimed at supporting use cases such as RAG, search & retrieval, product recommendations, and code generation, with some skepticism about the depth of these applications without explicit improvements mentioned compared to previous versions.

- Community Reactions: There is a mixed reaction to the discussion of closed models like Claude Sonnet in forums focused on open-source topics, with some users emphasizing the importance of staying informed about closed-source developments. Others express disappointment about the shift towards general LLM discussions, preferring content on open models and their implementations.

- Claude 3.7 is real (Score: 220, Comments: 71): Claude 3.7 has been released, as evidenced by a user interface displaying the title "Claude 3.7 Sonnet" with a minimalistic design. The interface prompts users with the question, "How can I help you this evening?" and suggests upgrading to a Pro version for additional features.

- Claude 3.7 Sonnet Availability and Features: Claude 3.7 Sonnet is accessible across various platforms, including Claude plans, the Anthropic API, Amazon Bedrock, and Google Cloud’s Vertex AI. It maintains the same pricing as previous versions at $3 per million input tokens and $15 per million output tokens, and introduces an "extended thinking" mode, except on the free tier.

- Model Performance and Testing: Users have mixed reactions to Claude 3.7 Sonnet's performance, with some praising its ability to handle complex reasoning tasks and others noting failures in specific tests like the nonogram test. Despite improvements, it still struggles with certain tasks similar to previous versions, and there are concerns about its reasoning time limits and output costs.

- Data Utilization and Distillation: There is a significant focus on using Claude 3.7 Sonnet to generate high-quality datasets for fine-tuning local models. Users discuss extracting data from the API for model distillation, with some emphasizing the importance of creating datasets to enhance local model capabilities through supervised fine-tuning.

- Most people are worried about LLM's executing code. Then theres me...... 😂 (Score: 236, Comments: 33): The post humorously contrasts the common concern about Large Language Models (LLMs) executing code with a personal, lighthearted take on system automation. The image accompanying the post outlines a set of rules for automating tasks using PowerShell and Python, with a playful suggestion that completing these tasks will lead to "freedom."

- Risk Management: A user suggests implementing a risk analysis process before and after AI-generated code execution to ensure minimal risk, highlighting the importance of sandboxing or using virtual machines (VMs) for safety.

- Humor and Perspective: There's a shift in perception from fear to humor regarding AI's capabilities, with users joking about AI achieving "freedom" and reminiscing about initial apprehensions during the ChatGPT hype.

- Execution Environment: The discussion emphasizes using VMs for executing AI-generated code, with references to tools like OmniTool with OmniParser2, despite their high cost and suboptimal performance.

Theme 3. Qwen Series: Advancing Open-Source AI with QwQ-Max

- Qwen is releasing something tonight! (Score: 293, Comments: 57): Qwen is anticipated to release a new development tonight, generating interest and speculation within the AI community. Specific details about the release are not provided in the post.

- QwQ-Max Preview is highlighted as a significant advancement in the Qwen series, focusing on deep reasoning and versatile problem-solving. The release will include a dedicated app for Qwen Chat, open-sourcing smaller reasoning models like QwQ-32B, and fostering community-driven innovation (GitHub link).

- The community is eager for Qwen 3 to be open-sourced and anticipates that models like QwQ32B will surpass existing models such as R1 70B in reasoning capabilities. There is speculation about the potential release of a Qwen Coder 72B and interest in a model with auto COT level GPT-4.

- There is skepticism about the portrayal of cost-effectiveness in media regarding DeepSeek and the Qwen series, with some users expressing doubts about the competency of the team configuring the models. Discussions also touch on the efficiency of FlashMLA for Hopper GPUs as part of OpenSourceWeek (GitHub link).

Theme 4. Critique on AI Benchmarking: Reliability and Misinterpretations

- Benchmarks are a lie, and I have some examples (Score: 149, Comments: 84): The author criticizes AI benchmark scores, highlighting discrepancies between benchmark results and actual model performance. They cite examples like Midnight Miqu 1.5 and Wingless_Imp_8B, where the latter scored higher but performed worse, and the case of Phi-Lthy and Phi-Line_14B, where a lobotomized model scored better despite reduced layers. They argue that benchmarks may not accurately reflect model capabilities, suggesting that some state-of-the-art models might be overlooked due to misleading scores. Links to the models discussed are provided for further examination: Phi-Line_14B and Phi-lthy4.

- EQbench and Benchmark Limitations: EQbench is criticized for using Claude as a judge and favoring "slop and purple prose." The benchmarks are seen as flawed and not reflective of real-world performance, as personal testing often yields different results (ASS Benchmark).

- Benchmarks vs. Real-World Performance: Several commenters argue that benchmarks are often gamed and do not accurately represent a model's capabilities in actual use cases. Models like Phi-4 14B are noted to perform better in benchmarks than in practical applications, and users suggest keeping personalized benchmark suites to better evaluate model performance.

- Model Performance and User Feedback: Users express that real-world testing and personal experience are more valuable than benchmark scores, as seen with models like Phi-Lthy 14b and Gemini. There is a call for more relevant benchmarks tailored to specific use cases, such as roleplay, which current benchmarks do not adequately cover.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Claude Sonnet 3.7 Detailed Leak via AWS Bedrock

- Claude Sonnet 3.7 imminent? (Score: 192, Comments: 55): AWS Bedrock has seemingly leaked details about Claude Sonnet 3.7, with a potential release date of February 19th, 2025. This model, described as Anthropic's most intelligent to date, introduces "extended thinking" for complex problem-solving and offers users the ability to balance speed and quality. It is designed for coding, agentic capabilities, and content generation, supporting use cases like RAG, forecasting, and targeted marketing.

- Discussions emphasize the naming conventions of AI models, with critiques on the inconsistency and confusion caused by versions like "3.5 Sonnet (new)" and suggestions for clearer sub-version tagging, such as "4.1, 4.2", to reflect performance differences accurately.

- There is skepticism and humor around the naming of Claude Sonnet 3.7, with references to jokes about Anthropic's model naming conventions and the potential for the "extended thinking" feature to be merely a minor upgrade from previous versions.

- Multiple users confirm the mention of Claude 3.7 in AWS code, with links to specific JS files (main.js, vendor_aws-bd654809.js), confirming the existence of Claude 3.7 Sonnet as Anthropic's most advanced model with "extended thinking" capabilities.

AI Discord Recap

A summary of Summaries of Summaries by Gemini 2.0 Flash Thinking

Here's a unified summary of key discussion themes across the Discords, tailored for a technical engineer audience:

Theme 1. Claude 3.7 Sonnet: The Thinking Coder Arrives

- Claude 3.7 Sonnet Crushes Coding Benchmarks, Gets IDE Access: Claude 3.7 Sonnet is hailed as a coding powerhouse, achieving a 65% score on the Aider leaderboard using 32k thinking tokens, outperforming previous models and even Grok 3. Cursor IDE and Aider have already integrated Sonnet 3.7, with users reporting significant coding improvements and agentic capabilities, sparking excitement for its potential in real-world development tasks.

- Reasoning Powerhouse, But Pricey Perplexity: Users across Aider and OpenAI channels are impressed with Claude 3.7's thinking model and enhanced reasoning, especially for debugging, but question if the $15/M output token price justifies the marginal gains over cheaper models like Grok 3 at $2.19/M. OpenRouter offers access to Claude 3.7 and other models, allowing users to manage API keys and bypass rate limits, but pricing remains a concern.

- Claude Code: Your Terminal Buddy is Here (But Needs Polish): Anthropic launched Claude Code, a terminal-based coding assistant, alongside Claude 3.7. While praised for coding assistance and error handling over tools like Aider, some users in Latent Space and MCP channels report slower response times and seek better documentation for its extended thinking features and integration with MCP tools like AgentDeskAI/browser-tools-mcp.

Theme 2. Open Source Model Race Heats Up: Qwen and DeepSeek Battle for Reasoning Crown

- Qwen's QwQ-Max Preview: Thinking Model Arrives, Mobile Apps Incoming: Qwen AI previewed QwQ-Max-Preview, a reasoning model based on Qwen2.5-Max, boasting performance on par with o1-medium according to LiveCodeBench. Discussed in Interconnects and Yannick Kilcher channels, the model is open-source under Apache 2.0 and includes plans for Android and iOS apps, signaling a strong push for accessible and powerful reasoning models.

- DeepSeek's Open Source Week: MLA for Inference Speed Boost, DeepEP for MoE Training: DeepSeek AI made waves during Open Source Week, releasing DeepEP, an expert-parallel communication library for efficient MoE training, discussed in Unsloth AI and GPU MODE channels. Their Multi-head Latent Attention (MLA) architecture, highlighted in Eleuther, promises a 5-10x inference speed increase by compressing the KV cache, potentially reshaping future LLM architectures.

- DeepScaleR: RL Fine-tuning on 1.5B Model Jumps Ahead of O1-Preview: Torchtune channel members noted DeepScaleR, a 1.5B parameter model fine-tuned from Deepseek-R1-Distilled-Qwen-1.5B using reinforcement learning, achieved 43.1% Pass@1 accuracy on AIME2024, a significant 14.3% leap over O1-preview, demonstrating the power of RL in scaling even smaller models for complex tasks. Details are available in this Notion post.

Theme 3. IDE Showdown: Cursor vs. Windsurf (and Vim struggles)

- Cursor's Claude 3.7 Lead Sparks Windsurf Envy, MCP Issues Persist: Codeium (Windsurf) channel users are anxiously awaiting Claude 3.7, frustrated that Cursor IDE users already have access, fueling debates about Cursor's faster model integration. Meanwhile, both Cursor and Windsurf channels discuss ongoing MCP (Model Context Protocol) issues, hindering editing capabilities and prompting manual config file edits.

- Cursor 0.46 Update: Indexing Bugs Plague Multi-Folder Workspaces: Cursor IDE users reported folder indexing and file editing problems across multiple directories in version 0.46 (Changelog), prompting users to check settings and provide feedback on the Cursor forum to improve platform functionality.

- Vim Users Wrestle with Codeium Chat Connection Errors: Codeium (Windsurf) channel's Vim users are struggling to start Codeium Chat in Vim via SSH, encountering connection errors when accessing provided URLs, highlighting potential extension-specific issues and the need for targeted support within the Codeium forum.

Theme 4. Hardware Hacks and Kernel Deep Dives: GPU Mode Community Gets Granular

- GPU MODE Benchmarks: Gemlite Kernels Spark Memory-Bound Debate, Tensor Core Limits Exposed: GPU MODE channel members dove deep into the mobiusml/gemlite library's fast low-bit matmul kernels in Triton, debating the memory-bound nature of gemv operations and the surprising absence of Tensor Core usage. They clarified that tensorcores require a minimum tensor dimension of 16x16, limiting their applicability in certain kernel optimizations.

- ROCm/MIOpen Wavefront Woes on RX 9850M XT: Debug Flags to the Rescue: GPU MODE users reported MIOpen issues on RX 9850M XT GPUs due to incorrect wavefront size defaults, leading to memory access faults in PyTorch. A workaround using

MIOPEN_DEBUG_CONV_GEMM=0andMIOPEN_DEBUG_CONV_DIRECT=0was shared, with more details in this GitHub issue. - Mojo FFI Bridges C++/Graphics Gap, Eggsquad's Game of Life Goes Hardware Accelerated: Modular (Mojo 🔥) channel members showcased using Mojo FFI to link to GLFW/GLEW for graphics programming in Mojo, with a Sudoku demo (ihnorton/mojo-ffi). Eggsquad presented a silly but impressive hardware-accelerated Conway's Game of Life using MAX and pygame, sparking discussions on GPU utilization and SIMD optimizations in Mojo.

Theme 5. Community Contributions and Coursework: Learning and Building Together

- Unsloth AI Challenge Heats Up, Still Seeking Top Talent: Unsloth AI channel's Unsloth AI Challenge is receiving numerous submissions, but so far, no participants have met the high bar for offers, prompting calls for recommendations to find qualified candidates, showing the competitive landscape in AI talent acquisition.

- Berkeley MOOC: Tulu 3 Deep Dive, RLVR Training Methods, Curriculum Details Soon: LLM Agents (Berkeley MOOC) channel promoted Lecture 4 featuring Hanna Hajishirzi discussing Tulu 3, a model reportedly outperforming GPT-4o, and innovative Reinforcement Learning with Verifiable Rewards (RLVR) training methods. MOOC curriculum details, including projects, are coming soon, promising practical learning experiences.

- Hugging Face Community Boosts Ukrainian TTS, Launches AI for Business Series: HuggingFace channel highlighted a new high-quality Ukrainian TTS dataset, improving resources for speech applications (speech-uk/opentts-mykyta). A new series, "AI for Business – From Hype to Impact", launched to guide businesses in leveraging AI, showcasing community efforts to democratize and apply AI knowledge.

PART 1: High level Discord summaries

Cursor IDE Discord

- Claude 3.7 Impresses with Coding: Users report that Claude 3.7 handles coding tasks and complex prompts more efficiently than 3.5, noting significant improvements in coding abilities and real-world agentic tasks.

- The community is particularly impressed by the thinking model and its potential for planning and debugging, though some find its execution in agent mode frustrating, as highlighted in Cursor's tweet.

- MCP Tools Supercharge User Experience: The integration of MCP tools, especially with custom instructions, enhances how the model functions with files and commands within Cursor, with users optimizing setups using deep thinking and browser tools (AgentDeskAI/browser-tools-mcp).

- Community members are actively exploring methods to refine their MCP setups for better project outcomes, focusing on effective prompts to maximize performance.

- Cursor Updates Spark Bug Discussions: Discussions surfaced issues with Cursor's folder indexing and file editing across multiple directories in version 0.46 (Changelog).

- Users are checking their settings to resolve indexing problems, with ongoing feedback being collected to improve the platform's functionality, as noted in the Cursor forum.

- Community Shares Model Experiences: Members are actively sharing experiences and useful tools related to Claude, Grok, and Qwen, discussing their interactions within Cursor and ways to optimize performance.

- The community is focused on optimizing interactions within Cursor and sharing effective prompts to maximize AI model performance, comparing experiences across different models.

aider (Paul Gauthier) Discord

- Claude 3.7 Dominates Aider Leaderboard: Claude 3.7 Sonnet achieved a 65% score on the Aider leaderboard using 32k thinking tokens, surpassing previous models, whereas the non-thinking version scored 60%.

- Users on the Aider channel compared performance against Grok 3, noting pricing differences of $15/M vs. Grok's $2.19/M and mixed sentiments on whether the cost justifies the gains. They cited this Tweet from Anthropic.

- Aider Embraces Claude 3.7's Brainpower: Aider version 0.75.1 now supports Claude 3.7, enabling users to experiment and effectively manage costs, and users are discussing commands like

--copy-pasteto optimize workflows with Claude, covered in the Aider documentation.- The community discussed the design and functionality of the Claude Code interface, comparing it to Aider and exploring enhancements to coding experience, weighing the pros and cons of using alternative platforms.

- OpenRouter Opens Doors to Limitless API: Users find OpenRouter beneficial for bypassing rate limits and centralizing API key management, streamlining access to diverse models.

- Despite pricing mirroring original providers, the convenience of unified API access makes OpenRouter a compelling choice, especially for managing token usage with models like

claude-3-7-sonnet-20250219.

- Despite pricing mirroring original providers, the convenience of unified API access makes OpenRouter a compelling choice, especially for managing token usage with models like

- Hacker News Profile Gets Roasted by AI: Claude Sonnet 3.7 now analyzes Hacker News profiles, providing entertainingly accurate highlights and trends of user activity.

- Users joke about getting their profiles 'roasted' by the AI, appreciating its hilariously insightful critiques of their online behavior.

- Kagi's LLM Benchmark Crowns New Reasoning Champs: The Kagi LLM Benchmarking Project, last updated February 24, 2025, evaluates major LLMs on reasoning, coding, and instruction following, revealing surprising insights.

- The results show Google's gemini-2.0 leading at 60.78%, outperforming OpenAI's gpt-4o at 48.39% in these evolving, novel tasks designed to prevent benchmark overfitting.

Codeium (Windsurf) Discord

- Windsurf Users Anxiously Await Claude 3.7: Windsurf users are awaiting the rollout of Claude 3.7, expressing frustration as Cursor users have already received access.

- The community anticipates usability improvements and enhancements, wondering if they will ever get access.

- Windsurf vs Cursor Performance Debated: Users are comparing Windsurf and Cursor, with some suggesting that Cursor is faster at implementing new models.

- Despite Cursor's speed, some users still prefer Windsurf for its cleaner UI and better integration capabilities.

- MCP Issues Plague Windsurf: Ongoing discussions revolve around issues with MCP that are hindering proper editing capabilities, prompting users to manually edit configuration files.

- The community is sharing temporary solutions while awaiting official fixes from the support team.

- Vim Users Wrestle Codeium Chat: A user is experiencing difficulties starting Codeium Chat in Vim via an SSH session, encountering connection errors when trying to access the provided URL.

- Another member recommended seeking assistance in the relevant extension channel and confirming which extension they were using.

- Account Creation Troubles: A user reported they can't create a new Codeium account, encountering an internal error message.

- This raised concerns about whether other users are facing similar issues during the account creation process.

OpenAI Discord

- Claude 3.7 Sonnet Sprints Ahead in Coding: Users find Claude 3.7 Sonnet superior to ChatGPT in coding and web dev, citing better consistency and reliability, according to Anthropic's announcement.

- The new model, Claude Code, facilitates code assistance, file ops, and task execution, according to a tweet showing off its agentic coding capabilities.

- Grok 3 Gains Traction for Project Versatility: Grok 3 is celebrated for its coding abilities and is encouraged for use in IDEs like Copilot, potentially integrating Claude 3.7's features.

- Its ability to handle projects, especially in frontend development, is highly valued and can assist effectively for projects, as one user put it, 'within IDEs like Copilot'

- ByteDance's Trae Joins the Free AI Frenzy: ByteDance's 'Trae' provides free access to advanced AI models, signaling a competitive move in the AI arena.

- While users appreciate the rise of free AI tools, they remain wary of the temporary nature of such offers, pondering the implications for long-term consumer access and services in the AI space.

- O3 Reasoning Plagued by Startling Delays: Users report that O3 models often exhibit 'reasoning success' but delay the full text output by 10 seconds or more.

- One user noted consistent delays between 3pm-7pm EST, affecting all 'thinking' models and leading to frustration with user experience.

- Screenshot Shenanigans Stymie Bug Reporting: A member noted challenges adding screenshots in the current chat channel, hindering effective bug reporting.

- Users guided each other on navigating different channels and reporting bugs, emphasizing the importance of exploring existing issues before posting new ones for efficiency.

Unsloth AI (Daniel Han) Discord

- Unsloth Challenge Seeks Strong Candidates: The Unsloth AI Challenge is receiving multiple submissions, but as of yet, no participants have met the bar for receiving offers.

- Recommendations for the challenge are being encouraged to help source qualified candidates.

- DeepSeek's Release Divides Opinions: The DeepSeek OSS release introduced features like MoE kernels and efficient communication libraries (Tweet from Daniel Han), leading to mixed reactions.

- Some believe it caters more to large companies, sparking debate on how this openness will affect competition and the AI development landscape, particularly in lowering model costs.

- Training Configs Cause Headaches: Users reported confusion as validation loss unexpectedly increased significantly when switching from batch size 8 to batch size 1 with gradient accumulation.

- The community explored how mixed setup strategies could impact model learning, and whether strategies yield similar results.

- VRAM Vampires During Checkpointing: Discussions highlighted significant spikes in VRAM usage during model saving operations when checkpointing.

- Users suggested saving models to LoRA to unload VRAM to better manage memory.

- QwenLM releases New qwq Model: A member shared a link to the new qwq model released by QwenLM (qwq-max-preview).

- The post previews their new model, however, no further discussion was available.

OpenRouter (Alex Atallah) Discord

- Claude 3.7 Sonnet's Reasoning Upgrades: Claude 3.7 Sonnet is available on OpenRouter, enhancing mathematical reasoning, coding, and problem-solving, outlined in Anthropic's blog post.

- The launch introduces improvements in agentic workflows and provides options for rapid and extended reasoning, though the extended thinking features are pending documentation and support.

- OpenRouter Manages API Keys, Maintains Credits: New API keys can be generated without losing account credits, as credits are tied to the account, not individual keys.

- The discussion clarified that although users expressed concerns over lost API keys, credits remain secure, irrespective of the key status.

- Model Pricing Structure: Sonnet vs Others: The Claude 3.7 Sonnet pricing is $3 per million tokens for input and $15 per million tokens for output, including thinking tokens, designed to balance user engagement and model utility.

- Comparisons with other models revealed that while Claude's pricing is considered high, it remains competitive in specific performance metrics, especially regarding reasoning tasks.

- Image Uploads Hit Size Limits: Users reported frequent errors with Claude 3.7, particularly when image sizes exceed 5MB, causing request failures.

- Participants are advised to keep image sizes under the limit to comply with API requirements and ensure successful processing.

- Device Sync Stalls Out: OpenRouter does not support synchronization of chat sessions between devices, such as from desktop to mobile.

- It was suggested that users seek workarounds using third party apps like chatterui and typign mind were for bridging this gap.

Interconnects (Nathan Lambert) Discord

- Claude 3.7 Sonnet Arrives with Smarts: Claude 3.7 Sonnet launched with improved reasoning and coding, debugging tasks, according to Anthropic, although it sometimes yields more complex code.

- Users on X (formerly Twitter) are sharing interesting results, like this drawing of the Acropolis in Tikz in which the model had multiple reasoning options including 64k reasoning tokens.

- Qwen AI Shows Off QwQ-Max Preview: Qwen AI released the QwQ-Max-Preview, showcasing its reasoning capabilities and open-source accessibility under Apache 2.0, according to the official blog post.

- Evaluations for QwQ-Max-Preview on LiveCodeBench show performance on par with o1-medium, as noted in this tweet.

- Berkeley Advanced Agents Features Tulu 3: The Berkeley Advanced Agents MOOC featured Hanna Hajishirzi discussing Tulu 3 today at 4PM PST, in a live YouTube session (link).

- Participants praised the course as very informative and practical.

- DeepSeek Opens EP Communication Library: DeepSeek announced its open-source EP communication library, DeepEP, focusing on efficient communication for model training during Open Source Week, mentioned in this tweet.

- The release is part of a trend of open-sourcing AI technologies, encouraging community engagement.

GPU MODE Discord

- Gemlite Library Ignites Kernel Discussions: Members discussed the mobiusml/gemlite library containing fast low-bit matmul kernels in Triton, emphasizing the memory-bound nature of gemv operations.

- The absence of Tensor Cores usage in gemv was noted, as they only increase flops, and tensorcores require a minimum tensor dimension of 16x16.

- TorchAO gets E2E Example:

@drisspgconfirmed they are working on an E2E example in TorchAO.- This highlights the cooperative nature of the discourse in the TorchAO community.

- DeepSeek AI Opens Expert-Parallel Communication: The GitHub repository DeepEP by deepseek-ai presents an efficient expert-parallel communication library.

- It aims to enhance communication performance for distributed systems.

- Meta Seeks PyTorch Engineers for AI Collaboration: Meta announced openings for PyTorch Partner Engineers to collaborate with leading industry partners and the PyTorch team.

- The positions emphasize systems and community work and offer a chance to advance AI technology from research to real-world applications, further ensuring Equal Employment Opportunity within their hiring process.

- MIOpen Wavefront Woes: A user reported MIOpen issues due to incorrect wavefront size defaults on an RX 9850M XT, leading to memory access faults in PyTorch.

- They applied

MIOPEN_DEBUG_CONV_GEMM=0andMIOPEN_DEBUG_CONV_DIRECT=0as a workaround, with more information available in this GitHub issue.

- They applied

Yannick Kilcher Discord

- Grok vs O1: A Model Meltdown?: Debate sparked on whether to switch from O1-Pro to SuperGrok, with varying opinions on their coding capabilities.

- Some engineers find O1 better at code handling, while others appreciate Grok's detailed responses, even if they require prompt adjustments.

- xAI Builds Colossal Compute Cluster: xAI plans to expand its GPU farm to 200,000 GPUs, positioning Grok as a versatile AI platform to challenge OpenAI.

- This expansion reflects a strategic move to make Grok a more general-purpose solution as reported by NextBigFuture.com.

- DeepSeek Dives Deep into Data Synthesis: Discussion highlighted the rising importance of synthetic data generation, particularly exemplified by DeepSeek optimizing using concepts rather than just tokens.

- The development of robust synthetic data pipelines is now seen as essential for AI model sustainability, with techniques for creating high-quality training data becoming a competitive advantage.

- Claude 3.7 Sonnet Sings with Smarts: Anthropic launched Claude 3.7 Sonnet, touting its hybrid reasoning for near-instant responses, excelling in coding and front-end development more here.

- Along with the new model is Claude Code, designed for agentic coding tasks to further streamline developer workflows, as introduced in this YouTube video.

- Qwen Quenches Thirst for Reasoning: Qwen Chat previewed QwQ-Max, a reasoning model based on Qwen2.5-Max, complete with plans for Android and iOS apps and open-source variants under the Apache 2.0 license.

- Details on its math, coding, and agent task proficiencies are available in their blog post, marking an ambitious step in reasoning capabilities.

Eleuther Discord

- Brains Chunk, AI Predicts: Discussion highlights that human language processing relies on chunking and abstracting, unlike AI models that predict tokens simultaneously, questioning the parallelism between brain and AI.

- The debate underscores challenges in scaling classical RNN architectures due to backpropagation challenges and the potential need for alternative architectures.

- DeepSeek's MLA Crushes KV Cache: Multi-head Latent Attention (MLA), innovated by DeepSeek, significantly compresses the Key-Value (KV) cache, improving inference speed by 5-10x compared to traditional methods, per this paper.

- DeepSeek invested at least $5.5 million on the MLA-driven model, suggesting industry interest in transitioning to MLA techniques for future models.

- Looped Transformers Reason Efficiently: A paper proposes that looped transformer models can match the performance of deeper non-looped architectures in reasoning tasks, reducing parameter requirements as detailed in this paper.

- This approach showcases the benefits of iterative methods in tackling complex computational challenges, with potential advantages in reducing computational costs.

- Attention Maps Still Popping?: Members discussed the waning popularity of attention maps versus neuron-based methods, with some suggesting this is due to the observational nature of attention maps, while others mentioned that attention maps can be directly intervened on during a forward pass.

- One member expressed bias toward attention maps for their ability to generate trees and graphs, leveraging linguistic features, citing syntax emerging from attention maps since BERT.

- Mixed Precision States Exposed: In mixed precision training, the master FP32 weights are typically stored in GPU VRAM unless zero offload is activated.

- It is recommended to perform vanilla mixed precision with BF16 low-precision weights alongside FP32 optim+master-weights+grads and members pointed to Megatron-LM as a great example.

Latent Space Discord

- Anthropic Debuts Claude 3.7 Sonnet and Claude Code: Anthropic launched Claude 3.7 Sonnet, which includes improvements in reasoning and Claude Code, a terminal-based coding assistant (@anthropic-ai/claude-code).

- Testers noted its higher output tokens limit and enhanced reasoning mode, resulting in better coherent interactions, with some calling out the clever system prompt (Mona's tweet).

- Microsoft Cancels Datacenter Leases: Reports indicate that Microsoft is canceling datacenter leases, signaling a potential oversupply in the datacenter market (Dylan Patel's tweet).

- This move casts doubt on Microsoft's aggressive pre-leasing strategies from early 2024 and its broader impact on the colocation sector.

- Qwen's Future Release Sparks Excitement: Anticipation is growing for the upcoming Qwen QwQ-Max release, which reportedly features improved reasoning skills and open-source availability under Apache 2.0 (Hui's tweet).

- The community is closely watching these developments and their effects on the intelligent model landscape.

- Claude Code Streamlines Coding Assistance: Early users find Claude Code helpful for coding assistance, however experiences vary with some reporting slower response times.

- Feedback also mentioned pricing concerns and praised the heavy caching feature which optimized performance.

- GitHub's FlashMLA Draws Community Attention: The GitHub project FlashMLA was recently shared, sparking interest in its possible effects on AI development without any fanfare (FlashMLA GitHub).

- Members showed excitement for future advancements in AI tools expected this week.

Nous Research AI Discord

- Grok3 Tool Invocation Causes Headaches: Members voiced confusion over Grok3's tool invocation mechanism, pointing out the absence of token sequence listings in the system prompt.

- The discussion questioned the reliability and transparency of hard-coded function calls versus in-context learning for tool calling.

- Claude 3.7 Sonnet Arrives: Anthropic released Claude 3.7 Sonnet, highlighting its hybrid reasoning and a command line tool for coding.

- Early benchmarks suggest Sonnet's outperformance in software engineering tasks, positioning it as a potential replacement for Claude 3.5.

- QwQ-Max-Preview Teases Deep Reasoning: QwQ-Max-Preview was unveiled as part of the Qwen series, touting deep reasoning and open-source accessibility under Apache 2.0.

- The community speculates on the model's size, with hopes for smaller, locally usable versions.

- Structured Outputs Project Seeks Feedback: An open-source project to solve structured output challenges was launched, inviting community feedback and collaboration.

- Members suggested reposting the announcement to increase visibility and engagement within the community.

- Sonnet-3.7 Outshines With Misguided Attention: A member benchmarked Sonnet-3.7 using the Misguided Attention Eval, noting its ability to handle misleading info.

- They claimed it performed the best as a non-reasoning model, nearly surpassing o3-mini, further demonstrating its competitive edge in reasoning evaluations.

MCP (Glama) Discord

- Anthropic Releases MCP Registry API: @AnthropicAI released the official MCP registry API, aiming to provide a source of truth for MCP tools and integrations.

- Community members express excitement, hoping this will standardize MCP management and improve reliability.

- LLM Version Naming Conventions Confuse Users: Members are puzzled by LLM version naming, especially Claude 3.7, noting its integration of features and performance enhancements through adaptive thinking modes.

- It suggests version 3.7 integrates features from previous versions while boosting performance through adaptive thinking modes.

- Haiku 3.5's Tool Support Has Quirks: Haiku 3.5 supports tools but performs better with fewer tools attached, according to user experiences.

- Server and tool management stress is a concern, driving plans for a chat app with integrated toolsets for easier usage.

- Claude Code Outshines Aider in Coding Tasks: Users found Claude Code superior to Aider in handling coding errors effectively.

- Interest is growing in using Claude Code for more complex tasks, potentially leveraging heavy thinking modes for optimal results.

- MetaMCP Eyes AGPL for Openness: MetaMCP is considering switching to AGPL to encourage community contributions.

- The move aims to address limitations of the current ELv2 license, which restricts contributions and requires hosted changes to be open-sourced.

HuggingFace Discord

- Gemma-9B Model faces Loading Errors: Users reported errors when loading the Gemma-9B model with custom LoRA, specifically with the

LoraConfig.__init__()function.- Several sought advice, indicating a common technical challenge in adapting Gemma-9B with custom configurations.

- Qwen Max Reasoning Model Anticipated: The Qwen Max reasoning model is expected to be released, potentially this week, touted as the most powerful open-source model since DeepSeek-R1.

- According to this tweet, Qwen Max shows advancements in math understanding and creativity, with plans for official versions and mobile apps.

- Claude-3.7 Dubbed Potential SWE-bench Killer: Claude-3.7 is being called a potential SWE-bench killer, with projected performance rivaling DeepSeek-R1 at a third of the size.

- Concerns were raised regarding the cost-effectiveness of Claude-3.7 due to its high pricing for million-token outputs.

- Ukrainian TTS Dataset Enhances Accessibility: A high-quality TTS dataset for Ukrainian was published on Hugging Face, improving resources for TTS projects, with 6.44k updates here.

- Recent updates to the speech-uk/opentts-mykyta dataset, demonstrate ongoing support for Ukrainian text-to-speech applications.

- AI for Business Series is Unveiled: A new series titled AI for Business – From Hype to Impact was launched to help businesses use AI for competitive advantage.

- The series plans to cover topics on scaling AI without disrupting operations.

LM Studio Discord

- Qwen 2.5 VL Model Takes the Crown: The Qwen 2.5 VL 7B model is outperforming previous models like Llama 3.2 in quality, gaining traction for its effectiveness in generating descriptions for images, according to user reports and can be downloaded from Hugging Face.

- Multiple users have confirmed its potential use in real-world scenarios, particularly in visual-language applications, and can be run locally in LM Studio.

- Discussing Deepseek R1 Local Hosting: Users discussed running Deepseek R1 671B locally with 192GB of RAM, which is the required memory threshold according to documentation, while offloading to the GPU.

- One user shared their experience using specific quantization techniques to optimize the model's performance using Unsloth's GGUF.

- Navigating PC Build Headaches: A user expressed frustration with PC build compatibility, highlighting that the aio pump requires a USB 2.0 header and interferes with the last PCIE slot.

- The user is considering alternative solutions as they have 'a second system to put all of them in', indicating a move towards optimizing hardware configurations.

- Apple M2 Max: Still Viable?: A user opted for a refurbished M2 Max 96GB for hobbies and work, expressing hesitation about investing in the latest M4 Max chip.

- Discussion emerged on the clock and throttle behavior across various Apple chips, noting the power consumption differences, with the M2 Max pulling 60W and M4 Max peaking at 140W.

Stability.ai (Stable Diffusion) Discord

- SD3 Ultra's Existence Teased: Members showed curiosity about SD3 Ultra, citing its potential for high frequency detail compared to SD3L 8B.

- A user added to the intrigue around its capabilities, noting, It still exists - I still use it.

- Image Generation Speed Roulette: Image generation times varied wildly with one user reporting 20 minutes on an unspecified configuration, compared to another's 31 seconds for 1920x1080 on a 3060 TI.

- Performance benchmarks using SD1.5 averaged 4-5 seconds on a 3070 TI, highlighting the variability influenced by model and hardware selection.

- Dog Breed Image Data Quest: A member is looking for dog breed image datasets exceeding 20k images, going beyond the capabilities of the Stanford Dogs dataset.

- They emphasized the need for datasets explicitly labeled with dog breeds.

- Resolution Tweaks Catches Speed Boost: Users discussed optimal resolution settings, advocating for smaller sizes like 576x576 to boost image generation speed.

- One user reported reduced processing times of around 8 minutes after implementing these adjustments.

- Feedback Floodgates Open for Stability AI: Stability AI launched a new feature request board allowing users to submit ideas via Discord using the

/feedbackcommand.- This initiative enables community voting on requests, informing Stability AI's development priorities and help[ing] us prioritize what we work on next.

Modular (Mojo 🔥) Discord

- Mojo FFI Powers Graphics Programming: Members showcased using Mojo FFI to link a static library to GLFW/GLEW, demonstrating a Sudoku example that confirms the feasibility of graphics programming in Mojo via custom C/C++ libraries.

- They used aliasing with the syntax

alias draw_sudoku_grid = external_call[...]to streamline function access, and dynamically linked libraries using a Python script, available here.

- They used aliasing with the syntax

- Dependency Versioning Matters for Mojo: A user reported errors with the lightbug_http dependency in a fresh Mojo project, referencing a Stack Overflow issue, while another speculated whether pinning the small_time dependency to

25.1.0could be causing their errors.- These reports suggest that precise dependency versioning is critical for avoiding installation and configuration issues.

- MAX Does Conway's Game of Life on GPU: A member showcased their implementation of a hardware accelerated Conway’s Game of Life using MAX and pygame, claiming it to be a rather silly application, while discussion arose around the compatibility of using MAX with a 2080 Super GPU.

- The discussion suggested running scripts from Python to facilitate GPU integration, which could be used to add parameters to the model via the graph API.

- SIMD Implementation Tempts Eggsquad: Eggsquad mentioned finding a SIMD-fied implementation by Daniel Lemire, yet expressed hesitance to explore it further at this stage, while Darkmatter pointed out that utilizing bit packing could support much larger graphs in their implementation.

- Eggsquad confirmed that their Conway's Game of Life implementation was functioning well after resolving a bug, demonstrating results through animations, including one that depicted guns in the game.

LLM Agents (Berkeley MOOC) Discord

- Tulu 3 reportedly outshines GPT-4o: According to Hanna Hajishirzi, Tulu 3, a state-of-the-art post-trained language model, surpasses both DeepSeek V3 and GPT-4o through innovative training methods.

- This announcement was made during a lecture covering comprehensive efforts in training language models and enhancing reasoning capabilities.

- Berkeley LLM Agents course will use RLVR: A unique reinforcement learning method with verifiable rewards (RLVR) is being showcased as a means to train language models effectively, aiming to significantly impact reasoning during training.

- Hanna shared insights into testing strategies that incorporate these advanced reinforcement learning techniques during the lecture.

- MOOC Students face Quiz Submission Relief: It was clarified that quiz deadlines apply only to Berkeley students, with all quizzes for MOOC students due at the end of the semester.

- This clarification provided reassurance to latecomers and those concerned about meeting initial deadlines.

- MOOC curriculum details coming soon: A member announced that MOOC curriculum details will be released soon, including a project component, referencing a discord link.

- However, the research track is only for Berkeley students.

Notebook LM Discord

- Enthusiasm Erupts for Google Deep Research & Gemini Integration: Discussion ignited around integrating Google Deep Research and Gemini with NotebookLM for enhanced capabilities.

- Enthusiasts expressed excitement for future developments.

- Users Struggle with NotebookLM Language Settings: Concerns surfaced about altering language settings in NotebookLM without affecting the Google account language.

- A user sought advice on implementing such language changes effectively.

- Creative Book Digitization Tactics Emerge: A member suggested photographing each page with a lens app to create a PDF, then converting it to PowerPoint for upload to NotebookLM.

- Alternatives were suggested, such as using copiers or Adobe's Scan app for direct PDF creation.

- Multi-Language Prompt Effectiveness Analyzed: Debates arose on whether to use single or multiple prompts to facilitate hosts speaking in German within NotebookLM.

- A member speculated that effectiveness might be tied to their premium subscription status.

- Claude 3.7 Sparks User Frenzy: Enthusiasm surrounded Claude 3.7, with users wishing for more control in choosing models.

- A user initiated discussions around the impacts of such a decision on user experience.

LlamaIndex Discord

- AI Assistant goes Live for LlamaIndex: The excellent AI assistant on the LlamaIndex docs is now available for everyone to use! Check it out here.

- The team is excited to see how users incorporate it into their workflows.

- ComposioHQ Drops Another Hit!: Another banger has been released from ComposioHQ! It continues to impress with its features and functionality.

- Early adopters praise the intuitive interface and robust feature set, saying they are excited for further improvements.

- Anthropic Drops Claude Sonnet 3.7: AnthropicAI dropped Claude Sonnet 3.7, and the vibes and evaluations are positive. Day 0 support is available via

pip install llama-index-llms-anthropic --upgrade.- More details can be found in Anthropic's announcement post, highlighting latest integration capabilities with this new release.

- BM25 Retriever Needs Nodes: A member noted that the BM25 retriever cannot be initialized from the vector store alone because the docstore must contain saved nodes.

- One suggestion to get around the issue was to set top k to 10000 to retrieve all nodes, though this might not be efficient.

- MultiModalVectorStoreIndex Struggles with Images: A member encountered an error related to image files while trying to create a MultiModalVectorStoreIndex, despite having images in a GCS bucket.

- The issue arises specifically for images, as their code works fine for PDF documents, suggesting a need for better image handling in the index.

Torchtune Discord

- TorchTune Debates Fine-Tuning Truncation: A discussion emerged in Torchtune's #dev channel about whether to default to left truncation instead of right truncation for fine-tuning, referencing a supportive graph.

- Opinions were mixed, with some acknowledging that HF's current default is right truncation and others advocating for a change.

- StatefulDataLoader Seeks Review: A member requested a review for their pull request adding support for the StatefulDataLoader class to Torchtune.

- The pull request aims to introduce new features and address potential bug fixes within the Torchtune framework.

- DeepScaleR Leaps Ahead of O1-Preview Using RL: The DeepScaleR model, fine-tuned from Deepseek-R1-Distilled-Qwen-1.5B using reinforcement learning, achieved 43.1% Pass@1 accuracy on AIME2024, a 14.3% jump beyond O1-preview according to their Notion.

- This underscores the effectiveness of reinforcement learning in scaling models for enhanced accuracy.

- Deepseek Opens DeepEP Communication Library: As part of #OpenSourceWeek, Deepseek AI launched DeepEP, an open-source communication library tailored for Mixture of Experts (MoE) model training and inference, according to their tweet.

- With FP8 dispatch support and optimized intranode and internode communication, DeepEP strives to streamline both training and inference phases, and is available on GitHub.

Cohere Discord

- Validator Viability in DeSci Queried: A user asked about the profitability threshold for POS Validators within the DeSci space.

- This query underlines the importance of economic feasibility for running nodes in decentralized science.

- Validator Pooling Strategies Discussed: A user mentioned pool validator nodes, showing interest in shared resources or collaboration among validators.

- This hints at a move to boost validator efficiency through pooling methods.

- Asset Valuation Expertise Debated: A message mentioned the term asset value expert, though its context was unclear due to other unrelated terms.

- This raises questions about the importance of expertise in assessing asset valuations within the discussed topics.

Nomic.ai (GPT4All) Discord

- GPT4All v3.10.0 Arrives with Upgrades: GPT4All v3.10.0 debuts with better remote model configuration and broader model support and addresses crash issues.

- Enhancements span stable performance and several crash fixes across the platform.

- Remote Model Configuration Smoothens: The Add Model page now sports a dedicated tab for remote model providers like Groq, OpenAI, and Mistral for more accessible model configuration.

- This enhancement aims to make the integration of external solutions seamless within the GPT4All environment.

- CUDA Compatibility Makes Broader: The update introduces support for GPUs with CUDA compute capability 5.0, expanding the range of compatible hardware.

- This includes the GTX 750, which boosts accessibility for users with varied hardware configurations.

- Versioning Queries Sparked by GPT4All: Confusion arose among members about whether v3.10.0 should instead be labeled v4.0, prompting queries about versioning practices.

- This confusion was compounded by the recent launch of Nomic Embed v2.

- Nomic Embed v2 Anticipated by Users: Users eagerly await GPT4All v4.0.0, especially since the current version still relies on Nomic Embed v1.5 despite the new version's availability.

- The community reminds itself that patience is key as they anticipate forthcoming updates.

DSPy Discord

- phi4 Response Remains Different: The phi4 response format has some differences from most, with a tutorial pending.

- A tutorial is in the works to explain it better.

- Assertion Migration Streamlined: Those migrating from 2.5-style Assertions can use

dspy.BestOfNordspy.Refineto streamline modules.- These new options promise greater efficiency over traditional assertions.

- BestOfN Implemented: An example demonstrated implementing

dspy.BestOfNwithin a ChainOfThought module, allowing up to 5 retries.- The method will select the best reward, halting when the threshold is reached.

- Reward Function Decoded: A sample

reward_fnwas shared, showing how it returns scalar values like float or bool to evaluate prediction field lengths.- This function is applicable within the context of the dspy.BestOfN implementation.

The tinygrad (George Hotz) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!