[AINews] ChatGPT Canvas GA

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Karina Nguyen is all you need.

AI News for 12/9/2024-12/10/2024. We checked 7 subreddits, 433 Twitters and 31 Discords (206 channels, and 5518 messages) for you. Estimated reading time saved (at 200wpm): 644 minutes. You can now tag @smol_ai for AINews discussions!

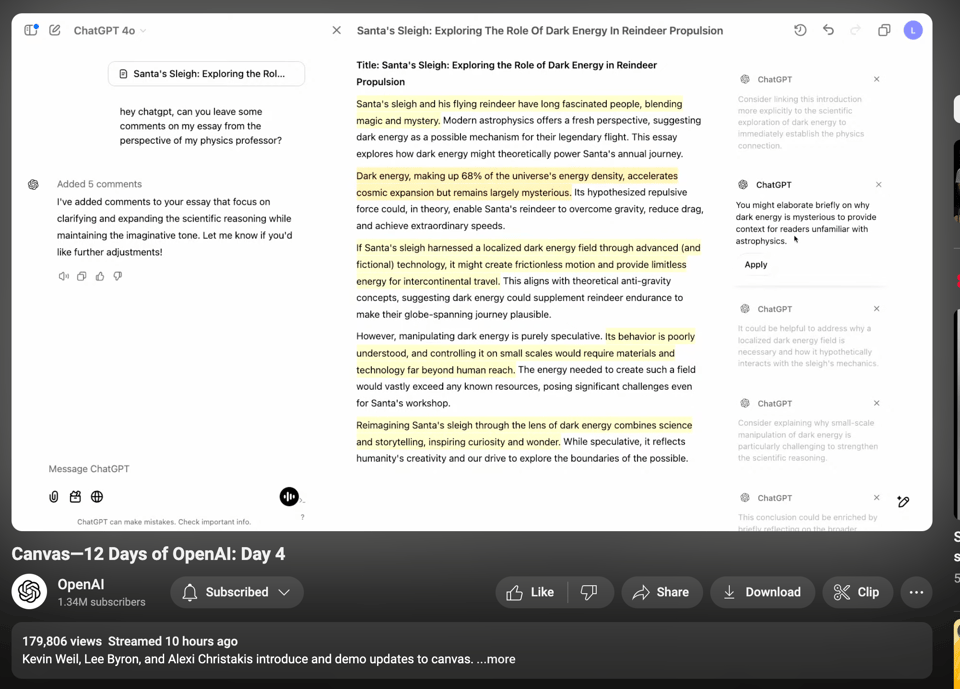

It's still early innings but already we are ready to call OpenAI's 12 Days of Shipmas a hit. While yesterday's Sora launch is still (as of today) plagued with gated signups to deal with overwhelming demand, ChatGPT Canvas needs no extra GPUs and launched to all free and paid users today with no hiccup.

Canvas now effectively supersedes Code Interpreter and is also remarkably Google Docs-like, which further demonstrates the tendency of OpenAI to build Google features faster than Google can build OpenAI.

There's a theory that the jokes ending each episode are a preview of the next one. If this is true, tomorrow's ship will be a doozy.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Here's a categorized summary of the key Twitter discussions:

AI Model & Research Updates

- @deepseek_ai announced their V2.5-1210 update with improved performance on MATH-500 (82.8%) and LiveCodebench (34.38%)

- Meta introduced COCONUT (Chain of Continuous Thought), a new paradigm for LLM reasoning using continuous latent space

- @Huggingface released TGI v3 which processes 3x more tokens and runs 13x faster than vLLM on long prompts

Product Launches & Updates

- OpenAI launched Canvas for all users with features like code execution, GPT integration, and improved writing tools

- @cognition_labs released Devin, an AI developer that successfully built a Kubernetes operator with testing environment

- Hyperbolic raised $12M Series A to build an open AI platform with H100 GPU marketplace at $0.99/hour

Industry & Market Analysis

- @AravSrinivas shared US vs Canada per capita GDP comparison with 72,622 impressions

- @sama noted significant underestimation of Sora demand, working on expanding access

- Discussion about AI capabilities and employment impact over next decades

NeurIPS Conference

- Multiple researchers and companies announcing their presence at NeurIPS 2024 in Vancouver

- @GoogleDeepMind hosting demos of GenCast weather forecasting and other AI tools

- Debate scheduled between Jonathan Frankle and Dylan about the future of AI scaling

Memes & Humor

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Llama 3.3-70B Finetuning: 90K Context on <41GB VRAM

- Llama 3.3 (70B) Finetuning - now with 90K context length and fits on <41GB VRAM. (Score: 360, Comments: 63): Llama 3.3 (70B) can now be fine-tuned with a 90,000-token context using Unsloth, far longer than the 6,900 tokens supported by Hugging Face + FA2 on an 80GB GPU. The improvement comes from gradient checkpointing and Apple's Cut Cross Entropy (CCE) algorithm, and the model fits in under 41GB of VRAM. Additionally, Llama 3.1 (8B) can reach a 342,000-token context with Unsloth, well beyond its native 128K context length.

- Unsloth uses gradient checkpointing to offload activations to system RAM, saving 10 to 100GB of GPU memory, and Apple's Cut Cross Entropy (CCE) performs cross entropy loss on the GPU, reducing the need for large logits matrices, which further saves memory. This allows models to fit on 41GB of VRAM.

- Users are curious about the rank used in the tests and the potential for multi-GPU support, which is currently unavailable but in development. There's also interest in making Unsloth compatible with Apple devices.

- The Unsloth tool is praised for democratizing fine-tuning capabilities, making advanced techniques accessible to the general public, and potentially reducing costs by allowing the use of smaller 48GB GPUs.
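To make the memory argument above concrete, here is a minimal pure-Python sketch of the general idea of computing cross-entropy without holding a full tokens-by-vocabulary logits matrix: each token's loss comes from a streaming log-sum-exp over vocabulary chunks. This is not Apple's CCE kernel or Unsloth's code; the function names, chunk size, and toy shapes are illustrative assumptions.

```python
import math

def chunked_cross_entropy(hidden, classifier, targets, chunk_size=2):
    """Mean cross-entropy without storing a full [tokens x vocab] logits matrix.

    hidden:     list of token vectors, each of length d
    classifier: list of vocab rows, each of length d (the output projection)
    targets:    list of correct vocab indices, one per token
    """
    total = 0.0
    for h, target in zip(hidden, targets):
        running_max = -math.inf   # largest logit seen so far (numerical stability)
        running_sum = 0.0         # sum of exp(logit - running_max) seen so far
        target_logit = None
        for start in range(0, len(classifier), chunk_size):
            rows = classifier[start:start + chunk_size]
            # Only one chunk of logits is alive at a time.
            logits = [sum(w * x for w, x in zip(row, h)) for row in rows]
            if start <= target < start + len(rows):
                target_logit = logits[target - start]
            new_max = max(running_max, max(logits))
            # Rescale the old sum to the new maximum, then add this chunk.
            running_sum = running_sum * math.exp(running_max - new_max) + sum(
                math.exp(l - new_max) for l in logits
            )
            running_max = new_max
        log_sum_exp = running_max + math.log(running_sum)
        total += log_sum_exp - target_logit
    return total / len(hidden)


def full_cross_entropy(hidden, classifier, targets):
    """Reference version that materializes every logit, for comparison."""
    total = 0.0
    for h, target in zip(hidden, targets):
        logits = [sum(w * x for w, x in zip(row, h)) for row in classifier]
        m = max(logits)
        total += m + math.log(sum(math.exp(l - m) for l in logits)) - logits[target]
    return total / len(hidden)
```

Both functions return the same loss; the chunked one only ever keeps `chunk_size` logits per token in memory, which is the kind of saving the comment describes at vocabulary scale.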

- Hugging Face releases Text Generation Inference TGI v3.0 - 13x faster than vLLM on long prompts 🔥 (Score: 347, Comments: 52): Hugging Face has released Text Generation Inference (TGI) v3.0, which processes 3x more tokens and is 13x faster than vLLM on long prompts, with zero configuration needed. By optimizing memory usage, a single L4 (24GB) can handle 30k tokens on llama 3.1-8B, while vLLM manages only 10k, and the new version reduces reply times on long prompts from 27.5s to 2s. Benchmark details are available for verification.

- TGI v3.0 Performance: Discussions highlight the significant speed improvements of TGI v3.0 over vLLM, particularly in handling long prompts due to the implementation of cached prompt processing. The library can respond almost instantly by keeping the initial conversation data, with a lookup overhead of approximately 5 microseconds.

- Comparison and Usage Scenarios: Users expressed interest in comparisons between TGI v3 and other inference engines like TensorRT-LLM and ExLlamaV2, as well as queries about its performance on short queries and multi-GPU setups. There is also curiosity about TGI's suitability for single versus multi-user scenarios, with some users acknowledging its optimized use for hosting models for multiple users.

- Support and Documentation: Questions arose about the support for consumer-grade RTX cards like the 3090, as current documentation lists only enterprise Nvidia accelerators. Additionally, users are interested in the roadmap for adding features like streaming tool calls and any potential drop in output quality compared to fp16 processing.
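The "cached prompt processing" mentioned above can be pictured with a small sketch: keep the state computed for an earlier conversation prefix, and on the next request only process the tokens that come after the longest cached prefix. This is an illustration of the idea only, not TGI's implementation; `PrefixCache` and `tokens_to_process` are hypothetical names, and the cached "state" is an opaque placeholder.

```python
class PrefixCache:
    """Toy prompt cache: maps a token prefix to an opaque precomputed state."""

    def __init__(self):
        self._states = {}

    def store(self, tokens, state):
        # Tuples are hashable, so the token prefix itself is the key.
        self._states[tuple(tokens)] = state

    def longest_prefix(self, tokens):
        """Return (matched_length, state) for the longest cached prefix."""
        for end in range(len(tokens), 0, -1):
            state = self._states.get(tuple(tokens[:end]))
            if state is not None:
                return end, state
        return 0, None


def tokens_to_process(cache, tokens):
    """Only tokens after the cached prefix still need a forward pass."""
    matched, _ = cache.longest_prefix(tokens)
    return tokens[matched:]


cache = PrefixCache()
cache.store([1, 2, 3], "state-for-first-turn")
remaining = tokens_to_process(cache, [1, 2, 3, 4, 5])  # only [4, 5] is new work
```

The linear scan here is for readability; a production server would presumably use a tree or block-hash structure to get lookups in the microsecond range the thread mentions.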

Theme 2. DeepSeek V2.5-1210: Final Version and What Next

- deepseek-ai/DeepSeek-V2.5-1210 · Hugging Face (Score: 170, Comments: 11): The post announces the release of DeepSeek V2.5-1210 on Hugging Face, indicating a new version of the AI tool with unspecified improvements. Further details about the release are not provided in the post.

- DeepSeek V2.5-1210 has been confirmed as the final release in the v2.5 series, with a v3 series anticipated in the future. The changelog indicates significant improvements in mathematical performance (from 74.8% to 82.8% on the MATH-500 benchmark) and coding accuracy (from 29.2% to 34.38% on the LiveCodebench benchmark), alongside enhanced user experience for file uploads and webpage summarization.

- There is considerable interest in the R1 model, with users expressing hope that it will be released soon. Some speculate that the current version may have been trained using R1 as a teacher, and others are looking forward to an updated Lite version with a 32B option.

- The community is actively discussing the potential release of a quantized version with exo and has expressed a desire for further updates, including an R1 Lite version.

- DeepSeek-V2.5-1210: The Final Version of the DeepSeek V2.5 (Score: 147, Comments: 36): DeepSeek-V2.5-1210 marks the final version of the DeepSeek V2.5 series, concluding its development after five iterations since its open-source release in May. The team is now focusing on developing the next-generation foundational model, DeepSeek V3.

- Hardware Requirements and Limitations: Running DeepSeek-V2.5 in BF16 format requires significant resources, specifically eight 80GB GPUs. Users expressed concerns about the lack of software optimization, particularly with the kv-cache, which limits the model's performance on available hardware compared to others like Llama.

- Model Performance and Capabilities: Users noted the model's deep reasoning capabilities but criticized its slow inference speed. Despite this, DeepSeek models are considered high-quality alternatives to other large language models, featuring a Mixture of Experts (MoE) structure with about 22 billion active parameters, allowing reasonable CPU+RAM performance.

- Development and Release Frequency: The DeepSeek team has maintained an impressive release schedule, with almost monthly updates since May, indicating a successful training process. However, the models lack vision understanding and are primarily focused on text due to the founder's preference for research over commercial applications.

Theme 3. InternVL2.5 Released: Top Performance in Vision BM

- InternVL2.5 released (1B to 78B) is hot in X. Can it replace the GPT-4o? What is your experience so far? (Score: 131, Comments: 42): InternVL2.5, with models ranging from 1B to 78B parameters, has been released and is gaining attention on X. The InternVL2.5-78B model is notable for being the first open-source MLLM to achieve over 70% on the MMMU benchmark, matching the performance of leading closed-source models like GPT-4o. You can explore the model through various platforms like InternVL Web, Hugging Face Space, and GitHub.

- Vision Benchmark Discussion: There is a debate about the effectiveness of the InternVL2.5-78B model on vision benchmarks, with some users suggesting that 4o outperforms Sonnet in vision tasks. Concerns were raised about the reliability of benchmarks and the credibility of the model's claims, especially given some questionable Reddit and Hugging Face histories.

- Geopolitical and Educational Context: There is a discussion around the global STEM landscape, particularly comparing the US and China, highlighting China's STEM PhD numbers and educational achievements. A comment references an 11-year-old Chinese child building rockets, sparking a debate about the accuracy and context of such claims.

- Model Availability and Performance: Users appreciate the availability of smaller model versions of InternVL2.5 beyond the 78B parameter model, noting their strong performance and potential for local deployment. The 78B model is noted for its superior performance in Ukrainian and Russian languages compared to other open models.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Google Willow: Quantum Computing's Gargantuan Leap

- Google Willow : Quantum Computing Chip completes task in 5 minutes which takes septillion years to best Supercomputer (Score: 311, Comments: 77): Google introduced the Willow Quantum Computing Chip, achieving a computational speed approximately 10^30 times faster than the fastest supercomputer, Frontier, completing tasks in 5 minutes that would otherwise take septillion years. This is considered the most significant technological release of the year, with more information available in a YouTube video.

- Several commenters, like beermad, express skepticism about the benchmark tests, suggesting they are optimized for quantum computers and lack real-world utility. They argue that these tests are designed to favor quantum chips over classical computers without demonstrating practical applications.

- huffalump1 highlights the significance of Google's breakthrough in error correction, which surpasses the physical limit of a qubit. This is crucial for quantum computing, as error correction is a major challenge in the field.

- The discussion touches on the potential financial impact, with bartturner noting a 4% increase in GOOG shares in pre-market trading, suggesting that investors recognize the potential value of this technological advancement.
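As a rough sanity check on the "10^30 times faster" figure, the ratio can be worked out directly from the run times. The summary above says only "septillion years"; the 10 septillion (10^25) value used below is an assumption based on how the announcement is commonly quoted, not something stated in this recap.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # about 525,960 minutes

def speedup(classical_years, quantum_minutes=5):
    """Ratio of classical run time to quantum run time, both in minutes."""
    return classical_years * MINUTES_PER_YEAR / quantum_minutes

one_septillion = speedup(1e24)   # on the order of 1e29
ten_septillion = speedup(1e25)   # on the order of 1e30 (assumed figure)
```

Under that assumption, the order of magnitude in the post is consistent with the quoted run times.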

- OpenAI Sora vs. Open Source Alternatives - Hunyuan (pictured) + Mochi & LTX (Score: 204, Comments: 56): The post compares OpenAI Sora with open-source alternatives like Hunyuan, Mochi, and LTX. Without additional details from the video, the specifics of these comparisons or performance metrics are not provided.

- Commenters discuss the comparison between OpenAI Sora and open-source models like HunyuanVideo, noting that open-source options are competitive and often more accessible. HunyuanVideo is highlighted for its potential and ability to run on consumer GPUs, with some users expressing a preference for open-source due to its uncensored nature.

- Sora's performance is praised for its quality in some areas, such as detailed imagery and landscapes, but it faces criticism for limited accessibility and issues with physical interactions. Users note that HunyuanVideo performs better in some scenarios, and there is interest in further comparisons with models like TemporalPromptEngine.

- There is a call for the West to improve their open-source AI efforts, as Chinese open models are seen as impressive for their quality and ability to operate on consumer hardware. The sentiment reflects a desire for more open and accessible AI development in Western countries.

- I foresee advertisers and retailers getting sued very soon. This was a legit ad for me. (Score: 177, Comments: 50): The post discusses an advertisement that uses a cartoon-style illustration with a controversial theme to capture attention, potentially leading to legal issues for advertisers and retailers. The use of humor in the ad, involving a sad-looking cat and bleach, raises ethical concerns and highlights the fine line between creative marketing and misleading or offensive content.

- Commenters express skepticism and humor regarding the ad's intent, with SomeRandomName13 sarcastically suggesting the ad's absurdity in using bleach on a cat's eyes to solve a problem, highlighting the ad's controversial nature.

- j-rojas and others suggest the ad's design is intentionally absurd to act as clickbait, sparking curiosity due to its extreme ridiculousness.

- chrismcelroyseo warns of the dangers of mixing bleach and ammonia, providing a link to an article about the toxic effects, which underscores the potential real-world risks associated with the ad's content.

Theme 2. Gemini 1.5 Outperforms Llama 2 70B: Industry Reactions

- Let’s be honest, unlike ChatGPT, 97% of us won’t care about or be using Sora a month from now. I’ve tried every video generator and music generator, cool for like a week, then meh. Good chance you will forget about sora after your 50 monthly generation is up. (Score: 302, Comments: 113): The author expresses skepticism about the lasting impact of Sora. They argue that, similar to other AI tools like video and music generators, Sora might be initially intriguing but will likely be forgotten by most users after limited use.

- Users debated the long-term utility of Sora in professional settings, with some arguing that studios will adopt it to cut costs, while others see it as an overpriced novelty with limited use due to its constraints like the 5-second, 720p output. Sora was compared to past tech trends that initially garnered interest but faded, similar to other AI tools like Suno and DALL-E 3 which had brief usage spikes before declining.

- Some commenters emphasized the importance of AI tools in transforming workflows, citing examples in video-related businesses where AI has significantly impacted operations over the last six months. Despite skepticism, others pointed out that AI tools continue to be valuable for specific professional tasks, even if they don't have universal appeal.

- The discussion also touched on the broader applicability of AI tools beyond initial hype, with parallels drawn to historical tech adoption patterns like the Apple Vision and early web browsers. The consensus was that while tools like Sora may not be universally essential, they hold significant value for niche markets and specific professional uses.

- AI art being sold at an art gallery? (Score: 285, Comments: 181): The author describes attending an art gallery event where they suspect two paintings, priced between 5k and 15k euros, might be AI-generated due to peculiarities in the artwork, such as a crooked hand and an illogical bag handle. They contacted the organizer to investigate the possibility of AI involvement, awaiting further information.

- Many commenters suspect the paintings are AI-generated due to peculiarities like malformed fingers, nonsensical bag handles, and odd room layouts. Fingers and dog features are frequently cited as AI telltales, with some users noting the absurdity of these being part of a human artist's style.

- SitDownKawada provided links to the paintings on sale for around €4k and questioned the authenticity of the artist's online presence, which appears AI-generated. The artist's Instagram and other social media accounts were scrutinized for their recent activity and prolific output.

- Discussions also touched on the broader implications of AI in art, with some users pondering if AI-generated elements in hand-painted works should still be considered AI art. There is a debate on whether the medium or the creative process holds more value, especially as AI becomes more indistinguishable from human artistry.

- Do you use ChatGPT or any other AI Search for Product Recommendations or Finding New Products? If Yes, please mention what kind of product recommendations you trust AI (Score: 255, Comments: 18): The post asks whether people use ChatGPT or other AI search tools for product recommendations. The community is encouraged to share experiences on whether they trust AI recommendations for discovering new products, with a focus on specific types of products that are more reliable when suggested by AI tools like ChatGPT.

- AI's role in product discovery is highlighted by a user finding a game called "Vagrus - The Riven Realms" through AI recommendations, which they had not heard of before but found impressive. This underscores AI's potential in suggesting lesser-known products that might align with user interests.

- Trust in AI recommendations tends to be higher for products with hard specifications, such as computer hardware and electronics. Users find AI particularly useful for comparing technical details, which would otherwise require extensive manual research, as exemplified by a user who used AI to compare routers.

Theme 3. Sora Video Generator: Redefining AI Creativity

- Cortana made with Sora (Score: 383, Comments: 42): Sora is highlighted as a tool enhancing AI video generation techniques, as demonstrated in a video linked in the post. The video showcases a creation named Cortana, though specific details about the video content or the techniques used are not provided in the text.

- Discussions mention Sora as a tool for generating videos instead of text, with some users questioning if access to Sora is currently available to the public.

- Comments include humorous and satirical takes on the appearance of Cortana, with users referencing features like "jiggle physics" and making light-hearted remarks about her design.

- Some comments focus on the technical aspects and visual design, with requests for additional features like color and skin, and jokes about "wire management" for Cortana.

- Pigs Tango in the Night (Score: 373, Comments: 61): The author created a video using Sora to accompany a humorous song made by their brother in Suno. They ran out of credits during the process but consider it a successful initial experiment with the technology, with plans to possibly post another video in a month.

- Sora's accessibility and subscription: Users discussed accessibility of Sora via a $20 subscription, which allows creating 50 five-second clips per month, and compared its value to other generators. Users appreciated this feature but noted limitations in credits, with one user running out of credits for the month.

- Prompt understanding and remix feature: Discussion on how Sora interprets prompts revealed that users describe each scene they want, and use the "remix" feature to make adjustments if clips don't meet expectations. One user mentioned running out of credits due to extensive remixing.

- Sora's performance and user feedback: Feedback highlighted Sora's capability in generating dance moves, with some users praising its output compared to other models. However, there were mixed reactions regarding the content's relevance, such as expectations of tango music which were unmet.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1: AI Model Advancements and New Releases

- Gated DeltaNet Steals the Spotlight: Gated DeltaNet outperforms models like Mamba2 in long-context tasks, leveraging gated memory control and delta updates to address limitations in standard transformers. This advancement significantly improves task accuracy and efficiency in complex language modeling scenarios.

- Llama 3.3 Breaks Context Barriers: Unsloth now supports fine-tuning Llama 3.3 with context lengths up to 89,000 tokens on an 80GB GPU, enhancing efficiency by reducing VRAM usage by 70%. This allows 2-3 minutes per training step on A100 GPUs, vastly exceeding previous capabilities.

- DeepSeek V2.5 Drops the 'Grand Finale': DeepSeek announces the release of DeepSeek-V2.5-1210, adding live Internet Search to their chat platform, providing users with real-time answers at their fingertips.

Theme 2: AI Tools and User Experience Challenges

- Cursor Takes a Coffee Break: Users report persistent slow requests in Cursor, disrupting productivity despite recent updates to Composer and Agent modes. Both modes are still underperforming, negatively impacting coding workflows.

- Bolt Hits a Speed Bump: Bolt.new users face confusion over token allocations when subscriptions end, with tokens not stacking and resets every 30 days. Issues with image uploads and 'No Preview Available' errors further frustrate users, leading to discussions about token management strategies.

- Linting Nightmares in Cursor: Cursor's linting features are triggering without actual errors, causing users to burn through their fast message quotas unnecessarily. Frequent false positives reinforce the sentiment that Cursor's features are still in beta and need refinement.

Theme 3: AI Integration in Software Development

- Mojo Destroys Old Habits with New Keyword: Introducing the `destroy` keyword in Mojo enforces stricter memory management within linear types, enhancing safety but sparking debates about complexity for newcomers. This distinction from Python's `del` aims to improve programming practices.

- Aider Multiplies Productivity with Multiple Instances: Engineers are running up to 20 Aider instances simultaneously to handle extensive project workflows, showcasing the tool's scalability. Users explore command execution across instances to optimize coding approaches for large-scale developments.

- LangChain and Aider Make a Dynamic Duo: Aider's integration with LangChain's ReAct loop enhances project management tasks, with users noting superior results compared to other tools. This collaboration improves AI-assisted coding workflows and efficiency.

Theme 4: Community and Open Source Initiatives in AI

- vLLM Joins the PyTorch Party: vLLM officially integrates into the PyTorch ecosystem, enhancing high-throughput, memory-efficient inference for large language models. This move is expected to boost AI innovation and accessibility for developers.

- Grassroots Science Goes Multilingual: A new initiative aims to develop multilingual LLMs by February 2025 through open-source efforts and community collaboration. The project seeks to engage grassroots communities in multilingual research using open-source tools.

- State of AI Agents 2024 Report Unveiled: Ahmad Awais releases an in-depth report analyzing 184 billion tokens and feedback from 4,000 builders, highlighting trends and future directions in AI agents.

Theme 5: AI in Creative Content and User Interaction

- NotebookLM Hits the High Notes in Podcasting: Users share a tutorial titled "NotebookLM Podcast Tutorial: 10 Secret Prompts (People Will Kill You For!)" offering exclusive prompts to enhance podcast creativity. Experimenting with features like fact-checkers improves dialogue quality in AI-generated podcasts.

- WaveForms AI Adds Emotion to the Mix: WaveForms AI launches, aiming to solve the Speech Turing Test by integrating Emotional Intelligence into AI systems. This advancement strives to enhance human-AI interactions with more natural and expressive communication.

- Sora's Mixed Debut Leaves Users Guessing: OpenAI's Sora garners skepticism due to five-second video outputs and questions about content quality. Users compare it unfavorably with models like Claude, Leonardo, and Ideogram, leading some to prefer alternative solutions.

PART 1: High level Discord summaries

Codeium / Windsurf Discord

- Windsurf AI Launches Merch Giveaway: Windsurf AI kicked off their first merch giveaway on Twitter, inviting users to share their creations for a chance to win a care package.

- The campaign leverages the hashtag #WindsurfGiveaway to track submissions and boost community engagement.

- Ongoing Credit System Flaws: Users report that purchased credits often fail to appear in their accounts, causing widespread frustration and an influx of support tickets.

- Despite team assurances, the lack of timely support responses continues to disappoint the user base.

- Confusion Over Windsurf's Pricing Model: There are rising concerns about Windsurf's pricing, especially regarding the high limits on flow and regular credits relative to the features offered.

- Users are advocating for a more sustainable model, including the introduction of a rollover system for unused credits.

- Performance Drops in Windsurf IDE: Recent updates have led to criticisms of the Windsurf IDE, with users citing increased bugs and decreased efficiency.

- Comparisons with competitors like Cline reveal a preference for Cline's superior functionality and reliability.

- Cline Outperforms Windsurf in Coding Tasks: Cline is being favored over Windsurf for certain coding tasks, offering better prompt responses despite slower performance in some areas.

- Cline's ability to generate specific coding outputs without errors has been particularly praised by the community.

Eleuther Discord

- Reproducibility Challenges in LLMs: Discussions highlighted reproducibility concerns in large language models, particularly in high-stakes applications like medical systems, emphasizing the complexities beyond classic software development. Members debated the nuances of recreating LLMs and the importance of reliable benchmarks.

- Participants referenced the HumanEval Benchmark PR pending review, which aims to enhance evaluation standards by integrating pass@k metrics from the HF evaluate module.
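For readers unfamiliar with pass@k, the commonly used unbiased estimator is short enough to sketch. Given n generated samples for a problem, of which c pass the tests, it estimates the probability that at least one of k randomly chosen samples passes. Whether the pending PR computes it in exactly this form is an assumption; the function name is illustrative.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k estimate: n samples generated, c of them correct."""
    if n - c < k:
        # Fewer than k incorrect samples: every size-k draw contains a correct one.
        return 1.0
    # 1 minus the probability that all k drawn samples are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)
```

For example, with 10 samples and 3 correct, `pass_at_k(10, 3, 1)` is the plain success rate of 0.3, and the estimate rises as k grows.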

- Coconut Architecture vs. Universal Transformers: Coconut Architecture introduces a novel approach by feeding back the final hidden state post- token as a new token, altering the KV cache with each iteration. This contrasts with Universal Transformers, which typically maintain a static KV cache across repetitions.

- The method's potential resemblance to UTs under specific conditions was discussed, particularly in scenarios involving shared KV caches and state history management, highlighting opportunities for performance optimization.
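The feedback loop described above can be sketched with a toy stand-in for the model. The point is only the data flow: during latent steps the final hidden state is appended as the next input instead of being decoded to a vocabulary token, so the cache grows by one entry per step. `toy_forward` is a hypothetical placeholder (tanh of the mean of the cache), not a transformer and not Meta's implementation.

```python
import math

def toy_forward(cache, x):
    """Stand-in for one model step: append x to the cache, return a hidden state.

    The 'hidden state' is just tanh of the mean of everything cached so far.
    """
    cache.append(x)
    dim = len(x)
    return [math.tanh(sum(v[i] for v in cache) / len(cache)) for i in range(dim)]


def continuous_thought(prompt_embeddings, num_latent_steps):
    """Run the prompt, then feed each final hidden state back in as the next input."""
    cache = []
    hidden = None
    for x in prompt_embeddings:
        hidden = toy_forward(cache, x)
    for _ in range(num_latent_steps):
        # No decoding to a token: the hidden state itself becomes the next input.
        hidden = toy_forward(cache, hidden)
    return hidden, cache


final_hidden, cache = continuous_thought([[1.0, 0.0], [0.0, 1.0]], num_latent_steps=3)
```

After the call, `cache` holds the two prompt vectors plus three fed-back hidden states, which is the "KV cache altered with each iteration" behaviour contrasted with a static cache.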

- Gated DeltaNet Boosts Long-Context Performance: Gated DeltaNet has shown superior performance in long-context tasks compared to models like Mamba2 and previous DeltaNet versions, leveraging gated memory control and delta updates. This advancement addresses limitations in standard transformers regarding long-term dependencies.

- Benchmark results were cited, demonstrating significant improvements in task accuracy and efficiency, positioning Gated DeltaNet as a competitive architecture in complex language modeling scenarios.
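A gated delta-rule memory update of the kind described above can be sketched in a few lines. The recurrence used here, S_new = alpha * S (I - beta k k^T) + beta v k^T, is written from memory of the Gated DeltaNet paper and should be treated as an assumption; the function names and the plain-list matrix layout are illustrative.

```python
def gated_delta_step(S, k, v, alpha, beta):
    """One memory update: S_new = alpha * S (I - beta k k^T) + beta v k^T.

    S is a d_v x d_k matrix (list of rows); k and v are plain lists.
    alpha in [0, 1] is the gate (how much old memory survives);
    beta in [0, 1] is the write strength of the delta update.
    """
    # What the memory currently returns for key k.
    old = [sum(S[i][j] * k[j] for j in range(len(k))) for i in range(len(S))]
    return [
        [alpha * (S[i][j] - beta * old[i] * k[j]) + beta * v[i] * k[j]
         for j in range(len(k))]
        for i in range(len(S))
    ]


def read(S, q):
    """Query the memory: returns S q."""
    return [sum(row[j] * q[j] for j in range(len(q))) for row in S]
```

With a unit-norm key and `beta=1`, writing a new value for an existing key replaces the old one (the delta part), while `alpha < 1` decays everything else already stored (the gate).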

- Batch Size Impacts GSM8k Evaluation Accuracy: Evaluations on the GSM8k benchmark revealed that a batch size of 1 achieved the highest accuracy of 85.52%, whereas larger batch sizes resulted in notable performance declines. This discrepancy is potentially linked to padding or attention mechanism implementations.

- Members are investigating the underlying causes, considering adjustments to padding strategies and model configurations to mitigate the adverse effects of increased batch sizes on evaluation metrics.

- Attention Masking Issues in RWKV and Transformers: Concerns were raised regarding RWKV model implementations, specifically related to attention masking and left padding, which may adversely affect evaluation outcomes. Additionally, using the SDPA attention implementation in multi-GPU environments was flagged for potential performance inconsistencies.

- Participants emphasized the necessity for careful configuration and potential exploration of alternative attention backends to ensure reliable model performance across different hardware setups.
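One reason batch size can matter at all: a batch of one needs no padding, while larger batches must pad shorter prompts and rely on the attention mask (and position handling) to ignore the pad tokens. A minimal sketch of left padding follows; the helper names and the pad id of 0 are assumptions for illustration, not code from the evaluation harness.

```python
def left_pad(sequences, pad_id=0):
    """Pad token-id lists on the left; return (padded_ids, attention_mask)."""
    width = max(len(seq) for seq in sequences)
    padded, mask = [], []
    for seq in sequences:
        gap = width - len(seq)
        padded.append([pad_id] * gap + list(seq))
        mask.append([0] * gap + [1] * len(seq))  # 0 = ignore, 1 = real token
    return padded, mask


def position_ids(mask_row):
    """Positions that start counting at the first real token, not at the padding."""
    positions, count = [], 0
    for m in mask_row:
        positions.append(count if m else 0)
        count += m
    return positions


padded, mask = left_pad([[5, 6, 7], [8]])
```

If an implementation drops the mask or counts positions from the pad tokens, the shorter prompt in a batch sees different inputs than it would alone, which is one plausible source of the accuracy gap discussed above.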

Cursor IDE Discord

- Cursor Slows to a Crawl: Multiple users report persistent slow requests in Cursor, disrupting their productivity despite recent updates to Composer and Agent modes.

- Users feel that both Composer and Agent modes are still underperforming, negatively impacting their coding workflows.

- AI Model Face-Off: Gemini, Claude, Qwen: Claude is favored by many users for superior performance in coding tasks compared to Gemini and Qwen.

- While Gemini shows potential in some tests, inconsistent quality has led to developer frustration.

- Agent Mode File Handling Confusion: Questions arise about whether agents in Cursor's Agent mode access file contents directly or merely suggest reading them.

- This uncertainty highlights ongoing concerns regarding the functionality and reliability of Cursor's agent features.

- AI Praises User's Code Structure: A user shared feedback where AI commended their code structure as professional despite the user's lack of experience.

- This showcases the advanced capabilities of current AI in assessing development practices accurately.

- Linting Triggers Frustrate Users: Cursor's linting features are triggering without actual errors, causing frustration among users who believe their fast message quota is being misused.

- Frequent false positives reinforce the sentiment that Cursor's features are still in beta and need refinement.

aider (Paul Gauthier) Discord

- Aider v0.68.0 Unveils Enhanced Features: The latest Aider v0.68.0 release introduces copy-paste mode and the `/copy-context` command, significantly improving user interactions with LLM web chat UIs.

- Enhanced API key management allows users to set keys for OpenAI and Anthropic via `--openai-api-key` and `--anthropic-api-key` switches, streamlining environment configuration through a YAML config file.

- Gemini Models Exhibit Varied Performance: Users report that Gemini models offer improved context handling but face limitations when editing large files, sparking discussions on performance benchmarks.

- There are calls to run comparative analyses with other models to better understand architectural capabilities, as highlighted in DeepSeek's update.

- Aider Seamlessly Integrates with LangChain: Aider's integration with LangChain's ReAct loop enhances project management tasks, with users noting superior results compared to other tools.

- Further testing and potential collaborations on this integration could provide deeper insights into AI-assisted coding workflows.

- Managing Multiple Aider Instances for Complex Workflows: Engineers are running up to 20 Aider instances simultaneously to handle extensive project workflows, demonstrating the tool's scalability.

- Users are exploring command execution across instances to optimize coding approaches for large-scale developments.

- Community Shares Aider Tutorials and Resources: Members appreciate tutorials and resources shared by the community, fostering a collaborative learning environment.

- Conversations emphasize enhancing the learning experience through shared knowledge and video content, supporting skill advancement among AI Engineers.

Unsloth AI (Daniel Han) Discord

- Llama 3.3 Achieves Ultra Long Context Lengths: Unsloth now supports fine-tuning the Llama 3.3 model with a context length of up to 89,000 tokens on an 80GB GPU, significantly enhancing its capability compared to previous versions.

- With this improvement, each training step takes 2-3 minutes on A100 GPUs while using 70% less VRAM, as highlighted in Unsloth's latest update.

- APOLLO Optimizer Reduces LLM Training Memory: The APOLLO optimizer introduces an approach that approximates learning rate scaling to mitigate the memory-intensive nature of training large language models with AdamW.

- According to the APOLLO paper, this method aims to maintain competitive performance while decreasing optimizer memory overhead.

- QTIP Enhances Post-Training Quantization for LLMs: QTIP employs trellis coded quantization to optimize high-dimensional quantization, improving both the memory footprint and inference throughput of large language models.

- The QTIP method enables effective fine-tuning by overcoming limitations associated with previous vector quantization techniques.

- Fine-tuning Qwen Models for OCR Tasks: There is growing interest in fine-tuning Qwen2-VL models specifically for OCR tasks, aiming to enhance information extraction from documents like passports.

- Users are confident in this approach's effectiveness, leveraging Qwen's robust capabilities to address specialized OCR challenges.

- Awesome RAG Project Expands RAG and Langchain Integration: The Awesome RAG GitHub project focuses on enhancing RAG, VectorDB, embeddings, LlamaIndex, and Langchain, inviting community contributions.

- This repository serves as a central hub for resources and tools aimed at advancing retrieval-augmented generation techniques.

Stability.ai (Stable Diffusion) Discord

- Image Enhancement vs AI Tools: Members debated whether Stable Diffusion can improve images without altering core content, suggesting traditional editing tools like Photoshop for such tasks.

- Some highlighted the need for skills in color grading and lighting for professional results, indicating that AI may add noise rather than refine.

- Llama 3.2-Vision Model in Local Deployment: The Llama 3.2-Vision model was mentioned as a viable local option for image classification and analysis, supported by software like KoboldCPP.

- Members noted that local models could run on consumer GPUs and emphasized that online services often require users to relinquish rights to their data.

- Memory Management in Automatic1111 WebUI: There was a discussion on memory management issues affecting image generation in Automatic1111 WebUI, particularly with batch sizes and VRAM usage.

- Members suggested that larger batches led to out-of-memory errors, potentially due to inefficiencies in how prompts are stored in the system.

- Challenges in Image Metadata and Tagging: Participants discussed the challenge of extracting tags or descriptions from images, with suggestions including using metadata readers or AI models for classification.

- Concerns were raised about how classification methods could miss certain details, with some advocating for the use of specific tags like those found on imageboards.

- Copyright and Data Rights in AI Image Services: A warning was shared about using online services for AI image generation, highlighting that such services often claim extensive rights over user-generated content.

- Members encouraged local model usage to maintain clearer ownership and control over created works, contrasting it with the broad licensing practices of web-based services.

Perplexity AI Discord

- Perplexity AI image generation issues: Users report that the 'Generate Image' feature in Perplexity AI is often hidden or unresponsive depending on device orientation, hindering the image generation process.

- One user resolved the issue by switching their device to landscape mode, which successfully revealed the 'Generate Image' feature.

- Claude vs GPT performance in Perplexity: Claude models are recognized for their writing capabilities, but discussions indicate they may underperform within Perplexity AI compared to their official platforms.

- Pro users find the paid Claude versions more advantageous, citing enhanced features and improved functionality.

- Custom GPTs in Perplexity: Custom GPTs in Perplexity allow users to modify personality traits and guidance settings, optimizing user interactions and task management.

- A participant expressed interest in utilizing custom GPTs for organizing thoughts and developing project ideas.

- OpenAI Sora launch: OpenAI's Sora has been officially released, generating excitement within the AI community regarding its new capabilities.

- A member shared a YouTube video detailing Sora's features and potential applications.

- Perplexity Pro features: Perplexity Pro plan offers extensive features over the free version, enhancing research and coding capabilities for subscribers.

- Members discussed using referral codes for discounts, showing interest in the subscription's advanced functionalities.

OpenAI Discord

- Sora Generation Skepticism and AI Model Comparisons: Users expressed doubts about Sora's content quality, questioning if it relies on stock footage, while comparing its performance to models like Claude, O1, Leonardo, and Ideogram for ease of use and output quality.

- Some prefer O1 for specific tasks, noting that Leonardo and Ideogram offer superior usability, whereas Sora's limitation of five-second video generation was highlighted as a constraint for substantial content creation.

- Custom GPTs Continuity and OpenAI Model Fine-Tuning Challenges: Custom GPTs lose tool connections upon updates, prompting members to synthesize continuity by retrieving key summaries from existing GPTs, while addressing ongoing management needs.

- Challenges in fine-tuning OpenAI models were discussed, with users encountering generic responses post fine-tuning in Node.js environments and seeking assistance with their training JSONL files for effective model customization.

- Optimizing Nested Code Blocks in Prompt Engineering: Participants shared techniques for managing nested code blocks in ChatGPT, emphasizing the use of double backticks to ensure proper rendering of nested structures.

- Examples included YAML and Python code snippets demonstrating the effectiveness of internal double backticks in maintaining the integrity and readability of nested code blocks.

- AI Capabilities Expectations and User Feedback Insights: Discussions focused on the future potential of AI models to dynamically generate user interfaces and adapt responses without explicit instructions, aiming for seamless user interactions.

- Skepticism was raised regarding the practicality of fully AI-driven interactions, with concerns about user confusion and usability, alongside feedback emphasizing the need for more tangible advancements in AI functionalities.
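For the nested code block item above, one related way to keep nesting intact is to rely on the CommonMark rule that a fenced block only closes on a fence at least as long as the one that opened it. The sketch below is an alternative to the double-backtick trick members described, not the exact method from the discussion; the helper name `wrap_in_fence` is hypothetical.

```python
import re


def wrap_in_fence(content: str, info: str = "") -> str:
    """Wrap content in a backtick fence longer than any backtick run inside it."""
    # Find the longest run of consecutive backticks already present in the content.
    longest = max((len(run) for run in re.findall(r"`+", content)), default=0)
    # A fence needs at least three backticks, and one more than any inner run.
    fence = "`" * max(3, longest + 1)
    return f"{fence}{info}\n{content}\n{fence}"


# Inner block: a plain three-backtick Python fence.
inner = wrap_in_fence("print('hello')", "python")

# Outer block: YAML that embeds the inner fence, so it gets four backticks.
outer = wrap_in_fence(f"example: |\n{inner}", "yaml")

print(outer)
```

Because the outer fence is always one backtick longer than anything inside it, the inner block can be pasted into a prompt without closing the outer block early.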

Bolt.new / Stackblitz Discord

- Token Twists After Subscriptions End: Users report confusion over token allocations when their subscription ends, with some tokens not stacking and Pro plan tokens resetting every 30 days. For billing issues, contacting support is recommended.

- A member noted that tokens do not stack, and this reset policy has sparked discussions about token management strategies.

- Payment Gateway Integrates with Bolt?: Users are exploring payment gateway integrations with platforms like Payfast and PayStack, and asking whether the process parallels Stripe's integration. No definitive solutions were provided.

- One user suggested that separating dashboard features might enhance functionality for larger projects.

- Bolt Lacks Multi-LLM Support: A user inquired about leveraging multiple LLMs simultaneously within Bolt for complex projects, but another member confirmed that this feature is not currently available.

- Participants discussed methods to enhance productivity and manage larger codebases without native multi-LLM support.

- Local Images Fail in Bolt Uploads: Issues were raised about local images not displaying correctly in Bolt, leading to frustrations over token usage without successful uploads. Suggestions included using external services for image uploads.

- A guide was shared to correctly integrate image upload functionality within Bolt applications.

- 'No Preview Available' Error Strikes Bolt Users: Some users encounter a 'No Preview Available' error when projects fail to load after modifications, prompting the idea to create dedicated discussion topics for detailed troubleshooting.

- One member outlined steps like reloading projects and focusing on error messages to resolve the issue effectively.

Modular (Mojo 🔥) Discord

- Introducing Mojo's 'destroy' Keyword: Discussions emphasized the necessity of a new `destroy` keyword in Mojo, distinguishing it from Python's `del` by enforcing stricter usage within linear types to enhance memory management safety. Ownership and borrowing | Modular Docs.

- Some members highlighted that mandating `destroy` could complicate the learning curve for newcomers transitioning from Python, emphasizing the need for clarity in documentation.

- Optimizing Memory Management in Multi-Paxos: Multi-Paxos implementations now utilize statically allocated structures to comply with no-heap-allocation requirements, supporting pipelined operations essential for high performance. GitHub - modularml/max.

- Critiques underscored the necessity for comprehensive handling of promises and leader elections to ensure the consensus algorithm's robustness.

- Clarifying Ownership Semantics in Mojo: Conversations about ownership semantics in Mojo demanded clarity on destructor handling, especially when contrasting default behaviors for copy and move constructors. Ownership and borrowing | Modular Docs.

- Topics like `__del__` (destructor) were flagged as potentially confusing for those coming from languages with automatic memory management, stressing the need for consistent syntax.

- Addressing Network Interrupts Impact on Model Weights: A discussion revealed that network interrupts could cause models to use incorrect weights due to validation deficiencies, resulting in data corruption. Checksums have been incorporated into the downloading process to improve reliability.

- Sample outputs from interrupted scenarios showcased bizarre data corruption, underscoring the effectiveness of the new checksum measures.

- Enhancing MAX Graph with Hugging Face Integration: Integration with `huggingface_hub` now enables automatic restoration of interrupted downloads, boosting system robustness and reliability. Hugging Face Integration.

- This enhancement follows previous issues with large weight corruptions, leveraging Hugging Face to optimize the performance of MAX Graph pipelines.
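The checksum idea from the network interrupts item above can be sketched as follows. This is a minimal illustration using SHA-256 from the Python standard library, not Modular's actual implementation; the function names `sha256_of` and `verify_download` and the sample payload are assumptions made for the example.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large weight files never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file on disk matches the published checksum."""
    return sha256_of(path) == expected_sha256.lower()


# Demo with a hypothetical payload standing in for model weights.
with tempfile.TemporaryDirectory() as tmp:
    weights = Path(tmp) / "weights.bin"
    expected = hashlib.sha256(b"complete payload").hexdigest()

    weights.write_bytes(b"complete payload")
    ok_full = verify_download(weights, expected)

    # Simulate a download cut off by a network interrupt.
    weights.write_bytes(b"complete pay")
    ok_truncated = verify_download(weights, expected)

print(ok_full, ok_truncated)
```

Rejecting the truncated file up front is what prevents a model from silently loading partial weights.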

Notebook LM Discord Discord

- NotebookLM Expands Podcast Functionality: A member shared a YouTube tutorial titled "NotebookLM Podcast Tutorial: 10 Secret Prompts (People Will Kill You For!)" that offers exclusive prompts to enhance podcast creativity.

- Users also explored adding a fact-checker to AI-generated podcasts, aiming to improve dialogue quality and ensure accuracy during a 90-minute show.

- Limited Source Utilization in NotebookLM: A user expressed frustration that NotebookLM only processes 5-6 sources when 15 sources are needed for a paper, highlighting a limitation in source diversity.

- Community members advised setting source limits during queries to ensure a broader range of references, addressing the issue of source scarcity.

- Enhanced Language Support Requested in NotebookLM: Users inquired about changing the language settings to English in NotebookLM, citing urgency due to upcoming exams.

- Discussions included methods such as adjusting browser settings and refreshing the NotebookLM page to achieve the desired language, with requests for future support in languages like French and German.

- Challenges in Sharing Notebooks with NotebookLM: Users reported difficulties when sharing notebooks using 'copy link,' as recipients viewed a blank page unless added as viewers first.

- Clarifications were provided on the necessary steps to successfully share notebooks, ensuring proper access permissions for collaborators.

LM Studio Discord

- Manual Updates for LM Studio: Users highlighted that LM Studio does not automatically update to newer versions like 0.3.x, necessitating manual updates to maintain compatibility with the latest models.

- A manual update approach was recommended to ensure seamless integration with updated features and models.

- Tailscale Integration Enhances Accessibility: Configuring LM Studio with Tailscale using the device's MagicDNS name improved accessibility and resolved previous connection issues.

- This method streamlined network configurations, making LM Studio more reliable for users.

- Model Compatibility Challenges: Discussions emerged around compatibility issues with models like LLAMA-3_8B_Unaligned, suggesting potential breaks due to recent updates.

- Users speculated that the LLAMA-3_8B_Unaligned model might be non-functional following the latest changes.

- Optimizing GPU Cooling Solutions: Members praised their robust GPU cooling setups, emphasizing that shared VRAM can slow performance and recommending limiting GPU load for optimal efficiency.

- Techniques such as modifying batch sizes and context lengths were shared to enhance GPU processing and resource management.

- Alphacool Reservoirs Compatible with D5 Pumps: Alphacool provides reservoirs that accommodate D5 pumps, as noted by users adjusting their setups to fit hardware requirements.

- One user shared a link to the Alphacool reservoir they selected for their build.

Nous Research AI Discord

- VLM Fine-tuning Faces Challenges: Members discussed the difficulties in fine-tuning VLM models like Llama Vision, noting that Hugging Face (hf) doesn't provide robust support for these tasks.

- They recommended using Unsloth and referenced the AnyModal GitHub project to enhance multimodal framework adjustments.

- Breakthroughs in Long-Term Memory Pathways: An article was shared about neuroscientists at the Max Planck Florida Institute for Neuroscience discovering new pathways for long-term memory formation, bypassing standard short-term processes (read more).

- The community explored how manipulating these memory creation pathways could improve AI cognitive models.

- Crafting a Security Agent with OpenAI API: A user outlined their method for building a security agent using the OpenAI API, detailing steps like creating a Tool class and implementing a task completion loop.

- Other members noted that scaling to advanced architectures, such as multi-agent systems and ReAct strategies, introduces significant complexity.

- Exploring ReAct Agent Strategies: Discussions focused on various ReAct agent strategies to enable agents to reason and interact dynamically with their environments.

- Members considered the potential of using agent outputs as user inputs to enhance interaction workflows.

- Insights from Meta's Thinking LLMs Paper: A member reviewed Meta's Thinking LLMs paper, highlighting its approach for LLMs to list internal thoughts and evaluate responses before finalizing answers.

- They showcased an example where an LLM tends to 'overthink' during answer generation, sparking discussions on optimizing reasoning processes (read more).
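The security agent item above mentions a Tool class and a task completion loop. The sketch below shows that general shape under stated assumptions: the model is replaced by a stub (`fake_model`) so the example runs offline, and the `CALL`/`DONE` text protocol, the `check_port` tool, and all names are hypothetical rather than taken from the member's code or the OpenAI API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    name: str
    description: str
    run: Callable[[str], str]


def check_port(arg: str) -> str:
    # Hypothetical stand-in for a real security check; always reports closed.
    return f"port {arg} is closed"


def fake_model(history: List[str]) -> str:
    """Stub standing in for a chat-completion call (assumption, no network)."""
    if not any(line.startswith("OBSERVATION") for line in history):
        return "CALL check_port 22"
    return "DONE port 22 looks safe"


def run_agent(
    task: str,
    tools: Dict[str, Tool],
    model: Callable[[List[str]], str],
    max_steps: int = 5,
) -> str:
    """Loop until the model declares the task done or the step budget runs out."""
    history = [f"TASK {task}"]
    for _ in range(max_steps):
        reply = model(history)
        if reply.startswith("DONE"):
            return reply[len("DONE"):].strip()
        # Expected form: "CALL <tool_name> <argument>"
        _, tool_name, arg = reply.split(maxsplit=2)
        observation = tools[tool_name].run(arg)
        history.append(f"OBSERVATION {observation}")
    return "stopped: step limit reached"


tools = {"check_port": Tool("check_port", "Report whether a port is open", check_port)}
result = run_agent("audit ssh exposure", tools, fake_model)
print(result)
```

The step limit is the piece that keeps a single-agent loop bounded; multi-agent and ReAct variants add planning and inter-agent messaging on top of this skeleton.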

Interconnects (Nathan Lambert) Discord

- DeepSeek V2.5 Launches Grand Finale: DeepSeek announced the release of DeepSeek-V2.5-1210, referred to as the 'Grand Finale', sparking enthusiasm among community members who had been anticipating this update.

- Members discussed the launch with excitement, noting the significance of the new version and its impact on DeepSeek's capabilities.

- Internet Search Feature Live on DeepSeek: DeepSeek introduced the Internet Search feature, now available on their chat platform, allowing users to obtain real-time answers by toggling the feature.

- Community members welcomed the new feature, expressing optimism about its potential to enhance user experience and provide immediate search results.

- DeepSeek License Allows Synthetic Data: A discussion emerged where a member inquired if DeepSeek's current license permits synthetic data generation, showing interest in licensing terms.

- Another member confirmed that synthetic data generation is allowed under the existing license, though it is not widely practiced, prompting further curiosity about OLMo testing.

- vLLM Integrates with PyTorch Ecosystem: The vLLM project officially joined the PyTorch ecosystem to enhance high-throughput, memory-efficient inference for large language models.

- Leveraging the PagedAttention algorithm, vLLM continues to evolve with new features like pipeline parallelism and speculative decoding.

- Fchollet Clarifies Scaling Law Position: Fchollet addressed misconceptions about his stance on scaling laws in AI through a tweet, emphasizing that he does not oppose scaling but critiques over-reliance on larger models.

- He advocated for shifting focus from whether LLMs can reason to their ability to adapt to novelty, proposing a mathematical definition to support this view.

Latent Space Discord

- WaveForms AI Launch Introduces Emotional Audio LLM: WaveForms AI announced its launch; the company aims to solve the Speech Turing Test and integrate Emotional Intelligence into AI systems.

- This launch aligns with the trend of enhancing AI's emotional understanding capabilities to improve human-AI interactions.

- vLLM Joins PyTorch Ecosystem: vLLM Project announced its integration into the PyTorch ecosystem, ensuring seamless compatibility and performance optimization for developers.

- This move is expected to enhance AI innovation and make AI tools more accessible to the developer community.

- Devin Now Generally Available at Cognition: Cognition has made Devin publicly available starting at $500/month, offering benefits like unlimited seats and various integrations.

- Devin is designed to assist engineering teams with tasks such as debugging, creating PRs, and performing code refactors efficiently.

- Sora Launch Featured in Latest Podcast: The latest podcast episode includes a 7-hour deep dive on OpenAI's Sora, featuring insights from Bill Peebles.

- Listeners can access the episode here for an extensive overview of the Sora launch.

- State of AI Agents 2024 Report Released: Ahmad Awais introduced the 'State of AI Agents 2024' report, analyzing 184 billion tokens and feedback from 4K builders to highlight trends in AI agents.

- These insights are critical for understanding the trajectory and evolution of AI agent technologies in the current landscape.

Axolotl AI Discord

- Torch Compile: Speed vs Memory: Members discussed their experiences with torch.compile, noting minimal speed improvements and increased memory usage.

- One member remarked, 'it could just be me problem tho.'

- Reward Models in Online RL: The discussion concluded that in online RL, the reward model is always a distinct model used for scoring, and it remains frozen during real model training.

- Members explored the implications of having a reward model, highlighting its separation from the main training process.

- KTO Model's Performance Claims: Kaltcit praised the KTO model's potential to exceed original dataset criteria, claiming enhanced robustness.

- However, members expressed the need for confirmation that KTO indeed improves over accepted data.

- Corroboration of KTO Findings: Kaltcit mentioned that Kalo corroborated the KTO paper findings but noted the lack of widespread quantitative research among finetuners.

- Nanobitz observed that much of this work may occur within organizations that don't widely share their findings.

- Axolotl Reward Model Integration: An inquiry was made about integrating a reward model for scoring in Axolotl, emphasizing experimentation beyond existing datasets.

- Kaltcit indicated that the current KTO setup might suffice for maximizing answers beyond original advantages.

LLM Agents (Berkeley MOOC) Discord

- Function Calling in LLMs: A member shared the function calling documentation, explaining that it utilizes function descriptions and signatures to set parameters based on prompts.

- It was suggested that models are trained on numerous examples to enhance generalization.

- Important Papers in Tool Learning: A member highlighted several key papers, including arXiv:2305.16504 and ToolBench on GitHub, to advance tool learning for LLMs.

- Another paper, Tool Learning with Foundation Models, was noted as potentially significant in the discourse.
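To make the function calling item above concrete, here is a minimal sketch of how a function description and signature can drive parameter filling. The spec loosely follows the JSON-schema style used by common function-calling APIs; the `get_weather` function, the `dispatch` helper, and the sample model output are all hypothetical and not taken from the shared documentation.

```python
import json


def get_weather(city: str, unit: str = "celsius") -> str:
    # Hypothetical tool; a real one would query a weather service.
    return f"22 degrees {unit} in {city}"


# Description and signature that would be sent to the model alongside the prompt.
TOOL_SPEC = {
    "name": "get_weather",
    "description": "Look up the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}

REGISTRY = {"get_weather": get_weather}


def dispatch(call: dict) -> str:
    """Run the function the model selected, using the arguments it produced."""
    func = REGISTRY[call["name"]]
    args = json.loads(call["arguments"])
    missing = [k for k in TOOL_SPEC["parameters"]["required"] if k not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return func(**args)


# Assumed shape of a model reply to a prompt like "How warm is it in Berkeley?"
model_call = {"name": "get_weather", "arguments": '{"city": "Berkeley"}'}
answer = dispatch(model_call)
print(answer)
```

The model never executes anything itself: it only emits a function name and JSON arguments, and the calling code validates and runs them.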

LlamaIndex Discord

- LlamaParse Auto Mode Optimizes Costs: LlamaParse introduces Auto Mode, which parses documents in a standard, cheaper mode while selectively upgrading to Premium mode based on user-defined triggers. More details can be found here.

- A video walkthrough of LlamaParse Auto Mode is available here, reminding users to update their browsers for compatibility.

- Enhanced JSON Parsing with LlamaParse: LlamaParse's JSON mode provides detailed parsing of complex documents, extracting images, text blocks, headings, and tables. For additional information, refer to this link.

- This feature enhances control and capability in handling structured data extraction.

- End-to-End Invoice Processing Agent Developed: The team is exploring innovative document agent workflows that extend beyond traditional tasks to automate complex processes, including an end-to-end invoice processing agent aimed at extracting information from invoices and matching it with vendors. Keep an eye on the developments here.

- This promising workflow automation tool is set to streamline invoice management.

- Cohere Rerank 3.5 Now Available in Bedrock: Cohere Rerank 3.5 is now available through Bedrock as a postprocessor, integrating seamlessly with recent updates. Documentation can be accessed here.

- Installation can be done via `pip install llama-index-postprocessor-bedrock-rerank`.

- ColPali Enhances Reranking During PDF Processing: The ColPali feature functions as a reranking tool during PDF processing rather than a standalone process, clarifying its role in dynamic document handling. It operates primarily for reranking image nodes after retrieval, as confirmed by users.

- This clarification helps in understanding the integration of ColPali within existing workflows.

Cohere Discord

- Cohere Business Humor Clashes: Members voiced frustration over Cohere's use of irrelevant humor in business discussions, stressing that lightheartedness shouldn't mute serious conversations.

- The ongoing debate highlighted the balance moderators need to maintain between levity and maintaining professional discourse.

- Plans for Rerank 3.5 English Model: A member inquired about upcoming plans for the Rerank 3.5 English model, seeking details on its development timeline.

- No responses were noted, indicating a potential communication gap regarding the model's progression.

- CmdR+Play Bot Takes a Break: The CmdR+Play Bot is currently on a break, as confirmed by a member following an inquiry about its status.

- Users were advised to stay tuned for future updates regarding the bot's availability.

- Aya-expanse Instruction Performance: A user questioned if aya-expanse, part of the command family, has enhanced its instruction processing performance.

- The discussion didn't yield a clear answer on its performance improvements.

- API 403 Errors Linked to Trial Keys: Members reported encountering a 403 error when making API requests, suggesting it may be related to trial key limitations.

- Trial keys often have restrictions that limit access to specific features or endpoints.

Torchtune Discord

- Config Clash Conundrum: A user sought a simple method for merging conflicting configuration files, opting to use 'accept both changes' for all files. They shared a workaround by replacing conflict markers with an empty string.

- This approach sparked discussions on best practices for handling configuration merges in collaborative projects.

- PR #2139 Puzzle: The community discussed PR #2139, focusing on concerns around `torch.utils.swap_tensors` and its role in initialization.

- Contributors agreed on the necessity for further conversations regarding the definition and initialization of `self.magnitude`.

- Empty Initialization Enhancement: Proposals emerged to improve the `to_empty` initialization method, aiming to maintain expected user experiences while managing device and parameter captures.

- Members debated how to balance best practices without causing disruptions in existing codebases.

- Tensor Tactics: Device Handling: There was emphasis on effective device management during tensor initialization and swaps, particularly concerning parameters like `magnitude`.

- Participants highlighted the importance of using APIs such as `swap_tensors` to maintain device integrity during operations.

- Parameters and Gradients Clarified: Contributors clarified that using `copy_` is acceptable when device management is handled correctly, emphasizing the importance of the `requires_grad` state.

- They discussed integrating error checks in initialization routines to prevent common issues like handling tensors on meta devices.
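The Config Clash workaround above (accepting both sides by blanking out conflict markers) can be sketched in a few lines. This assumes standard Git conflict markers at the start of a line; the `accept_both` name and the sample config are hypothetical, and the user's actual script was not shared in the summary.

```python
import re

# Matches whole marker lines such as "<<<<<<< HEAD", "=======", ">>>>>>> branch".
CONFLICT_MARKER = re.compile(r"^(<{7}|={7}|>{7}).*\n?", re.MULTILINE)


def accept_both(text: str) -> str:
    """Keep the content of both sides by deleting only the marker lines."""
    return CONFLICT_MARKER.sub("", text)


conflicted = (
    "batch_size: 4\n"
    "<<<<<<< HEAD\n"
    "lr: 0.001\n"
    "=======\n"
    "epochs: 3\n"
    ">>>>>>> feature\n"
    "seed: 0\n"
)

merged = accept_both(conflicted)
print(merged)
```

This only yields a valid config when the two sides touch different keys; if both sides set the same key, the merged file will contain duplicates that still need a manual decision.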

DSPy Discord

- LangWatch Optimization Studio Launch: LangWatch Optimization Studio launches as a new low-code UI for building DSPy programs, simplifying LM evaluations and optimizations. The tool is now open source on GitHub.

- The studio has transitioned out of private beta, encouraging users to star the GitHub repository to show support.

- DSPy Documentation Access Issues: A member reported difficulties accessing the DSPy documentation, especially the API reference link. Another member clarified that most syntax is available on the landing page and special modules for types are no longer needed.

- Community discussions indicated that documentation has been streamlined, with syntax examples moved to the main page for easier access.

- Impact of O1 Series Models on DSPy: A member asked how the O1 series models affect DSPy workflows, particularly regarding parameters for MIPRO optimization modules. Adjustments like fewer optimization cycles might be required.

- Members are seeking insights and recommendations on optimizing DSPy workflows with the new O1 series models.

- Optimization Error Reporting in DSPy: A member reported encountering a generic error during optimization in DSPy and posted details in a specific channel. They are seeking attention to address the issue.

- The community is aware of the reported optimization error, with members looking to assist in troubleshooting the problem.

LAION Discord

- Grassroots Science Initiative Launches: A collaboration between several organizations is set to launch Grassroots Science, an open-source initiative aimed at developing multilingual LLMs by February 2025.

- They aim to collect data through crowdsourcing, benchmark models, and use open-source tools to engage grassroots communities in multilingual research.

- AI Threat Awareness Campaign Initiated: A member emphasized the importance of educating individuals about the dangers of AI-generated content, suggesting the use of MKBHD's latest upload to illustrate these capabilities.

- The initiative aims to protect tech-illiterate individuals from falling victim to increasingly believable AI-generated scams.

- Feasibility of Training 7B Models on 12GB Data: A member questioned the feasibility of training a 7B parameter model on just 12GB of data, sparking discussions on its potential performance in practical applications.

- This ambitious approach challenges traditional data requirements for large-scale models, raising questions about efficiency and effectiveness.

- Excitement Over Hyperefficient Small Models: Members expressed enthusiasm for hyperefficient small models, highlighting their performance and advantages over larger counterparts.

- One fan stated, 'I love hyperefficient small models! They rock!', emphasizing the potential of models that reduce resource requirements without sacrificing capabilities.

OpenInterpreter Discord

- 01's Voice Takes the Stage: A member announced that 01 is a voice-enabled spinoff of Open Interpreter, available as both a CLI and desktop application. It includes instructions for simulating the 01 Light Hardware and running both the server and client.

- Instructions provided cover simulating the 01 Light Hardware and managing both server and client operations.

- OI Integration with GPT o1 Pro: A member hypothesized that using OI in OS mode could control GPT o1 Pro through the desktop app or browser, potentially enabling web search and file upload capabilities. They expressed interest in exploring this idea, noting the powerful implications it could have.

- Community members are interested in the potential to enhance GPT o1 Pro with features like web search and file uploads via OI's OS mode.

- 01 App Beta Access for Mac: It was clarified that the 01 app is still in beta and requires an invite to access, currently available only for Mac users. One member reported they sent a direct message to a user to gain access, indicating a very high demand.

- The limited beta access for Mac users highlights the high interest in the 01 app within the community.

- Website Functionality Concerns: A member expressed frustration regarding issues with the Open Interpreter website, showing a screenshot but not detailing the specific problems. Community members have begun discussing website navigation and functionality as part of their ongoing experience with Open Interpreter.

- Ongoing discussions about website navigation and functionality stem from reported issues by community members.

Mozilla AI Discord

- Web Applets Kickoff Session: An upcoming session on web applets is scheduled to start soon, led by a prominent member.

- This event aims to enhance understanding of the integration and functionality of web applets in modern development.

- Theia-ide Exploration: Tomorrow, participants can explore Theia-ide, which emphasizes openness, transparency, and flexibility in development environments.

- The discussion will be led by an expert, showcasing the advantages of using Theia-ide compared to traditional IDEs.

- Evolution of Programming Interviews: A comment highlighted how programming interviews have evolved, noting that candidates used to write a bubble sort on a whiteboard.

- Now, candidates can instruct their IDE to build one, emphasizing the shift towards more practical skills in real-time coding.

- Jonas on Theia-ide Vision: A shared interview with Jonas provides insight into the vision behind Theia-ide, accessible here.

- This interview offers a deeper understanding of the features and philosophy guiding the development of Theia-ide.

The tinygrad (George Hotz) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!