[AINews] Chameleon: Meta's (unreleased) GPT4o-like Omnimodal Model

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Early Fusion is all you need.

AI News for 5/16/2024-5/17/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (429 channels, and 5221 messages) for you. Estimated reading time saved (at 200wpm): 551 minutes.

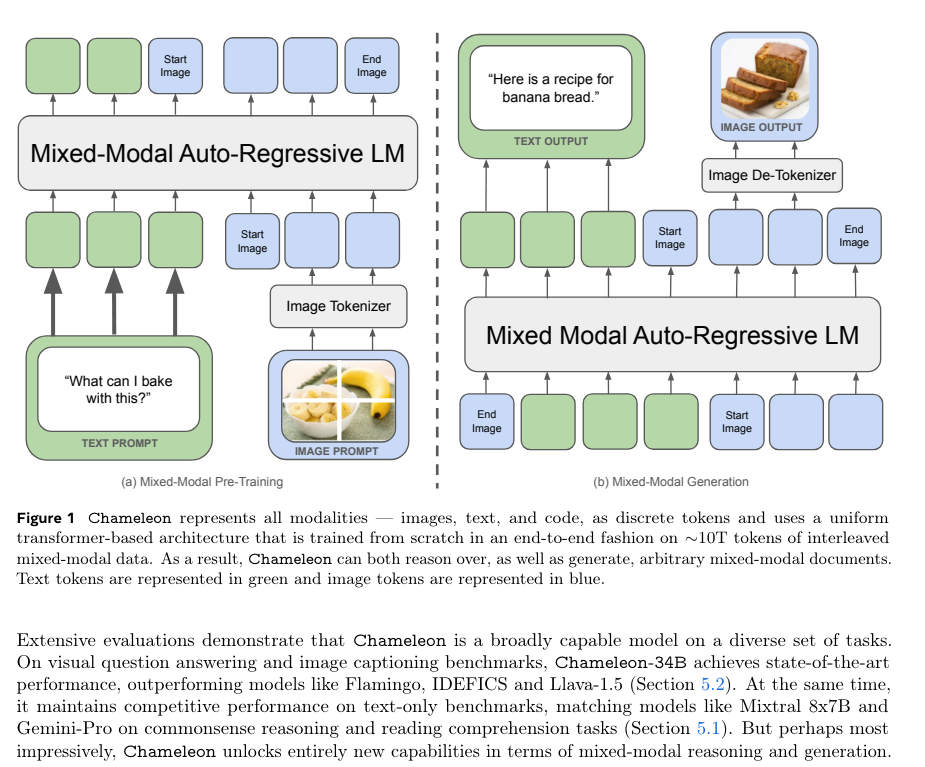

Armen Aghajanyan introduced Chameleon, FAIR's latest work on multimodal models, training 7B and 34B models on 10T tokens of text and image (independent and interleaved) data resulting in an "early fusion" form of multimodality (as compared to Flamingo and LLaVA) that can natively output any modality as easily as it consumes them:

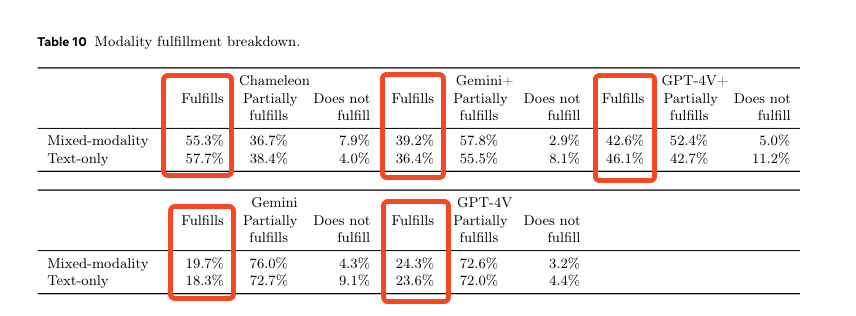

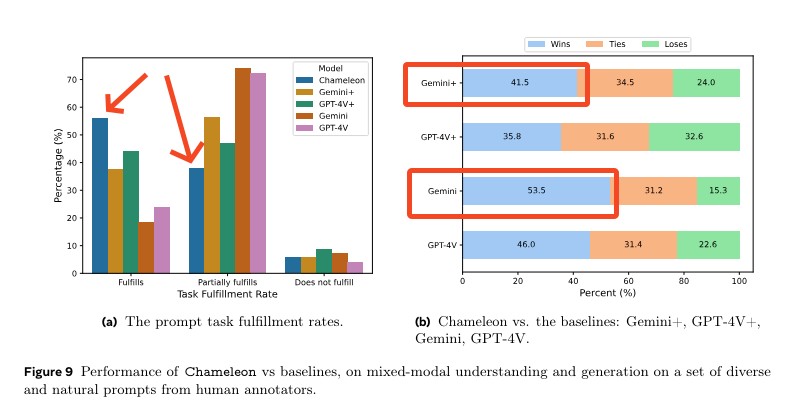

As just a 34B model, the reasoning benchmarks aren't something to write home about, but the "omnimodality" approach compares well with peer multimodal modals pre GPT4-o:

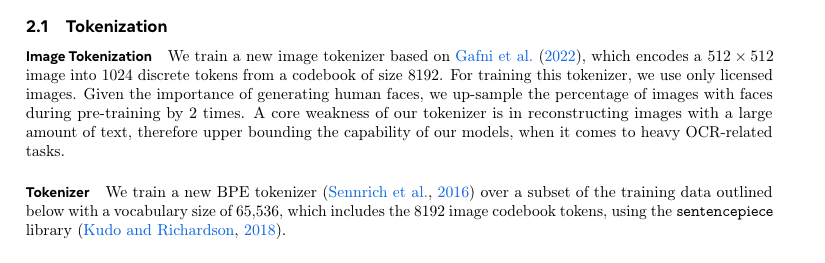

As you might imagine, the tokenization matters a lot, and this is what we know so far:

The dataset description sounds straightforward, but since model, code and data remain unreleased, we are left merely considering the theoretical advantages of their approach right now. But it's nice that Meta is clearly not far off from releasing their own "early fusion mixed modality", GPT4-class model.

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord

- Stability.ai (Stable Diffusion) Discord

- OpenAI Discord

- Perplexity AI Discord

- HuggingFace Discord

- Nous Research AI Discord

- Modular (Mojo 🔥) Discord

- LM Studio Discord

- CUDA MODE Discord

- Interconnects (Nathan Lambert) Discord

- Eleuther Discord

- LAION Discord

- Latent Space Discord

- LlamaIndex Discord

- OpenRouter (Alex Atallah) Discord

- OpenInterpreter Discord

- LangChain AI Discord

- AI Stack Devs (Yoko Li) Discord

- OpenAccess AI Collective (axolotl) Discord

- tinygrad (George Hotz) Discord

- Cohere Discord

- MLOps @Chipro Discord

- Datasette - LLM (@SimonW) Discord

- Mozilla AI Discord

- DiscoResearch Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

OpenAI and Google AI Announcements

- OpenAI GPT-4o Launch: @sama noted the aesthetic difference between OpenAI and Google's AI announcements. @zacharynado pointed out how OpenAI's launches are timed with Google's.

- Google Gemini and Flash: Google announced Gemini 1.5 Flash, a 1M-context small model with Flash performance. @alexandr_wang noted OpenAI has the best large model with GPT-4o, while Google has the best small model with Gemini 1.5 Flash.

- Convergence in AI Development: @alexandr_wang observed the level of convergence between OpenAI and Google is fascinating, with similarity between models like GPT-4o and Gemini. He believes divergence would be better for the industry.

- OpenAI Partnership with Reddit: OpenAI has partnered with Reddit, drawing attention as a potential hostile takeover strategy. @teortaxesTex noted this as a bigger breakthrough than Q*.

GPT-4o Performance and Capabilities

- GPT-4o Outperforms Other Models: GPT-4o outperforms other expensive models like Opus on benchmarks like MMLU. @abacaj noted this is what matters, even though GPT-4o isn't marketed as GPT-5.

- Improved Coding Capabilities: GPT-4o shows significant improvements in coding tasks compared to previous models. @virattt shared an example of GPT-4o successfully editing code.

- Multimodal Capabilities: GPT-4o excels at integrating image/text understanding. @llama_index demonstrated GPT-4o extracting structured JSONs from detailed research paper images with 0% failure rate and high quality answers.

- Limitations and Regressions: Despite improvements, GPT-4o's ELO score has regressed from 1310 to 1287, with an even larger drop in coding performance. It still struggles with hallucinations over complex tables and charts.

Anthropic Claude 3 Updates

- Streaming Support: @alexalbert__ announced streaming support for more natural end-user experiences, especially for long outputs.

- Forced Tool Use: Claude 3 now supports forcing the use of specific tools or any relevant tool, giving more control over tool usage in agents and structured outputs.

- Vision Support: Anthropic has laid the foundation for multimodal tool use by adding support for tools that return images, enabling knowledge extraction from visual sources.

Meta AI Announcements

- Chameleon: Mixed-Modal Early-Fusion Foundation Models: Meta introduced Chameleon, a family of early-fusion token-based mixed-modal models capable of understanding and generating images and text in arbitrary sequences. It demonstrates SOTA performance across diverse vision-language benchmarks.

- Imagen 3: Imagen 3, part of Meta's ImageFX suite, can generate high-quality visuals in various styles like photorealistic scenes and stylized art. It incorporates technologies like SynthID for watermarking AI content.

Memes and Humor

- @fchollet joked about the aesthetic difference between a West Elm showroom and a Marc Rebillet show.

- OpenAI Drama: @vikhyatk quipped "openai is nothing without its drama 💙"

- @svpino coined the term "model-apologists" in response to defenses of GPT models.

- @aidangomez joked about training AGI for enterprise in a constructed digital environment called "Coblox".

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

GPT-4o and Multimodal AI Advancements

- GPT-4o's impressive performance: GPT-4o ranked on LMSys Chatbot Arena Leaderboard with 1289 Elo, outperforming GPT-4turbo despite having an older knowledge cutoff. Discussions suggest GPT-4o excels at catering answers humans like, but may not be significantly smarter. Source

- OpenAI introduces new features: Interactive tables, charts, and file integration from Google Drive and Microsoft OneDrive for ChatGPT Plus, Team, and Enterprise users, rolling out over the coming weeks. Source

- MetaAI's Chameleon model: MetaAI introduces Chameleon, a Mixed-Modal Early-Fusion Foundation Model similar to GPT-4o, capable of interleaved text and image understanding and generation. Source

- Terminology discussions: Debates on whether "large language model" term still applies to GPT-4o and similar advanced models, given their expanded multimodal capabilities. Suggestions include "Multimodal Unified Token Transformers" (MUTTs) and "Large Multimodal Model" (LMM). Source

OpenAI Partnerships and Developments

- OpenAI partners with Reddit: OpenAI partners with Reddit to bring its content to ChatGPT and new products. Source Discussions raise concerns about data privacy and the implications of Reddit selling user-generated content. Source

- Google employee reacts to GPT-4o: Google employee uses Project Astra to react to GPT-4o announcement, congratulating OpenAI on impressive work. Source

Stability AI and Open Source Developments

- Stability AI's potential sale: Stability AI discusses potential sale amid cash crunch, raising concerns about the future of open-source AI initiatives. Source

- Hugging Face's ZeroGPU initiative: Hugging Face commits $10M of free GPUs with launch of ZeroGPU, supporting open-source AI development. Source

- CosXL release: Stability AI releases CosXL, an official SDXL update with v-prediction, ZeroSNR, and Cosine Schedule, addressing issues with generating dark/bright images and convergence speed. Source

AI Benchmarking and Evaluation

- MileBench for evaluating MLLMs: MileBench introduced as a benchmark for evaluating Multimodal Large Language Models (MLLMs) in long-context tasks involving multiple images and lengthy texts. Key findings show GPT-4o excelling in both diagnostic and realistic evaluations, while most open-source MLLMs struggle with long-context tasks. Source

- Needle in a Needlestack (NIAN) benchmark: NIAN benchmark proposed as a more challenging alternative to Needle in a Haystack (NIAH) for evaluating LLM attention in long contexts. Even GPT-4-turbo struggles with this benchmark. Source

AI Ethics and Societal Impact

- Pessimism in r/futurology: Discussions on r/futurology subreddit becoming increasingly pessimistic and "decel" as the community grows, with concerns about the impact of AI on jobs and society. Source

- US tariffs on Chinese semiconductors: US to increase tariffs on Chinese semiconductors by 100% in 2025 to protect the $53 billion spent on the CHIPS Act. Source

Memes and Humor

- AI mania meme: Meme about AI mania, referencing an "AI Flavor" of Coke. Source

AI Discord Recap

A summary of Summaries of Summaries

- Hugging Face Invests $10M in Free Shared GPUs: Hugging Face is committing $10 million to provide free shared GPUs to support small developers, academics, and startups in developing AI technologies. CEO Clem Delangue emphasized this initiative aims to democratize AI and counter centralization by big tech (source).

- OpenAI Alignment Team Departs Amid Shifting Priorities: Jan Leike, head of OpenAI's alignment team, announced his resignation due to disagreements over the company's core priorities. This follows other key departures like Ilya Sutskever, sparking discussions about OpenAI potentially prioritizing near-term product goals over long-term AI safety research (Jan's tweet, Wired article).

- GPT-4o Capabilities and Limitations Debated: The release of GPT-4o generated excitement for its multimodal capabilities, like interleaved text and image understanding (paper). However, some noted inconsistencies in its coding performance and output quality compared to expectations set by OpenAI's demos (example).

- Needle in a Needlestack (NIAN) Challenges LLMs: The new NIAN benchmark presents a formidable challenge for LLMs, testing their ability to answer questions about a specific text hidden among many similar texts. Even advanced models like GPT-4-turbo struggle with this task (code, website).

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Meta's Model Makes People Wait: Discussions revealed a delay in support for Llava or multimodal AI due to anticipation for Meta's multimodal model, with no specific release date mentioned, indicating reliance on industry leaders for advancements.

- Finding Funds for GPUs: Conversations among members included quips about how they finance their GPU usage; humorous mentions included "RAMEN FOR YEARS" as a sacrifice for their dedication to AI work, particularly for demanding tasks such as classification.

- Boosting Models with OpenHermes: The OpenHermes dataset was a topic of interest, with mentions of its incorporation leading to substantial improvements in model performance, demonstrating the value of diverse datasets in AI research.

- Discarding Refusal Alleviates Stubborn AIs: Debates touched on the impact of removing refusal mechanisms from LLMs, noting an unexpected increase in 'smartness', and referenced a specific paper on the topic, offering insights into ongoing LLM research.

- Troubleshooting Llama 3 woes: Users shared solutions for errors such as

AttributeError: 'dict' object has no attribute 'context_window'when training Llama 3, which included suggestions like modifying core codes or switching to Ollama, indicating active engagement in the practical aspects of AI model development.

Stability.ai (Stable Diffusion) Discord

SD3 Release Maintains Aura of Mystery: Discord users are expressing both anticipation and frustration over the delayed release of SD3; skepticism prevails despite a tweet by Emad hinting at an imminent launch.

GPUs Spark Debate Amongst the Discerning: In the quest for optimized training of SDXL models, discourse centered on whether an RTX 4090 with 24GB VRAM suffices, with some users deliberating the merits of more robust solutions.

Waiting Game Spurs Meme Fest: With the release of SD3 shrouded in uncertainty, the community has taken to sharing memes and light-hearted comments, as exhibited by a tweet from Stability.

Datasets and Training Techniques Tabled: AI aficionados shared training resources such as this dataset from Hugging Face, and exchanged insights on fine-tuning practices to rival the output quality of Dalle 3.

From AI to Socioeconomics: Sidetracks in Session: The conversation occasionally veered off AI terrain into vigorous discussions surrounding capitalism and morality, with some participants nudging the focus back to tech-centric themes.

OpenAI Discord

- Interactive Features Level Up ChatGPT: OpenAI has announced the inclusion of interactive tables and charts in ChatGPT for Plus, Team, and Enterprise users, offering direct integration with Google Drive and Microsoft OneDrive. This update is expected to roll out in the coming weeks, and users can learn more about it here.

- The Dawn of GPT-4o: The community is buzzing with discussions around the partial release of GPT-4o, celebrating its top ranking and new higher message limits. Anticipated features like Voice Mode are also on the horizon, although some have concernedly noted slower performance with extended use.

- Ethical AI Conversations Hit Prime Time: AI's impact on job markets has been a hot topic, with conversations examining both the potential uplift in productivity and the concern for future employment structures. Members are also exchanging thoughts on ethical and educational applications of AI, aiming to balance technology enhancement with responsible usage.

- Prompt Engineering Pulls Back the Curtain: The effective use of markdown to guide AI response and the challenges posed by model updates affecting behavior have generated much attention in the community. Methods to foster more desired outcomes from the AI, like emotional incentives, were described humorously yet showcased critical insights into AI user interactions.

- API Chat Gets Technical: Detailed discussions around the API capabilities have noted that using markdown in prompts can help clarify intent and character roles. However, discrepancies in custom GPT performance and mixed experiences with model access and updates underline the evolving nature of interacting with these advanced AIs.

Perplexity AI Discord

- GPT-4o Plays Catch-Up: GPT-4o is noted to be faster and slightly improved over previous iterations, accessible for free users, despite reports of varying message limits and refresh rates.

- Cognition vs. Hallucination in AI: Concerns about AI hallucinations are highlighted, with doubts cast on AI's capability to fully eliminate them, potentially affecting job security in entry-level positions.

- Perplexity AI Clashes with ChatGPT: Users are split between Perplexity, credited with better sourcing, and ChatGPT, favored for feature integration like web search.

- Programming and Creativity in AI Diversity: GPT-4o and Opus show divergent strengths; GPT-4o excels in coding, whereas Opus offers depth in math and complex problem-solving.

- The API Integration Dilemma: Questions are raised about sonar-medium-online support longevity; someone seeks to add Perplexity to a private Discord, while the default temperature for Perplexity AI models is confirmed to be 0.2.

Relevant Link: Chat Completions Documentation

HuggingFace Discord

- OpenAI's 'Openness' Debated: A YouTube critique titled "Big Tech AI Is A Lie" argued that OpenAI doesn't live up to its name, sparking conversations about the value of truly open platforms like HuggingFace, and a subsequent discussion on the performance of HuggingFace models versus closed systems.

- Cultivating Curiosity in Reinforcement Learning: A paper discussing curiosity-driven exploration in reinforcement learning sparked interest, detailing how rewarding agent curiosity can lead to better results in environments where the outcome of actions is unpredictable. Additionally, the epsilon greedy policy was suggested for maintaining the exploration/exploitation trade-off.

- Conversations on Computer Vision and Diffusion Models: Questions arose regarding latent diffusion models in diffusers, the training of such models from scratch, and UNet models' convergence issues, with a focus on small dataset impacts. Separately, a Medium post detailed the integration of GPT-4o with LlamaParse for enhanced multimodal capabilities, while discussions in the #diffusion-discussions channel focused on latent space representations and uploading issues with Google Collab.

- RL in the Spotlight: The first RL model's addition to the Hugging Face repository was met with encouragement, marking a milestone for one user's learning path in Deep RL. This dovetails with talks on reinforcement learning's challenges and the introduction of the epsilon-greedy policy for exploration and exploitation balance.

- Model Training and Deployment Challenges: Complexities in model training were illustrated by a user's struggle with UNet model convergence and another's attempt at generating grid puzzles with GPT-4o. A user recommended continuous retraining to keep coding language models relevant due to outdated training data.

- Dataset Innovations and AI Creations Showcased: The Hugging Face community introduced the Tuxemon dataset featuring permissively licensed creature images, evoking humor as an AI data source. Meanwhile, community member showcases include the use of LangChain and Gemini for a business advisor AI, a GenAI-powered education tool, and a virtual AI influencer, underscoring the diverse applications of AI technology.

Nous Research AI Discord

- Brain-inspired AI Models Gain Traction: Participants in the guild discussed the concept of a streaming-like process for AI, similar to human memory, referencing the Infini-attention methodology for greater relevance update efficiency. Here's a paper on Infini-attention for further reading.

- Benchmarking AI with NIAN Challenge: Needle in a Needle Stack (NIAN) emerged as a niche benchmark to push the limits of Language Models, including GPT-4, in differentiating specific content within masses of similar material. Here's more on the NIAN benchmark and its dedicated website.

- Symbolic Language in AI Explored: Conversations hinted at a growing interest in using GPT-4o for creating a symbolic language that could facilitate tasks involving algebraic computations, suggesting a potential advance in AI handling of symbolic reasoning.

- Stable Diffusion 3 Embraces Open Architecture: Stable Diffusion 3 is being prepped for on-device inference, optimizing for Mac with MLX and Core ML, and will be open-sourced through a partnership between Argmax Inc. and Stability AI. Argmax's partnership tweet can be read here.

- AI in Real-Time User Interfaces: A guild member sought resources on AI models capable of analyzing screen actions nearly in real-time, similar to Fuyu, which process screen captures and UI interactions every second. Meanwhile, Elon Musk's Neuralink has opened applications for a second participant in their brain implant trial, posting details via Elon's tweet.

Modular (Mojo 🔥) Discord

Mojo Gets an Update: The latest nightly Mojo compiler 2024.5.1607 makes its debut, with an invitation for users to try out the latest features using modular update nightly/mojo. The community response has been notably positive towards the new conditional methods syntax, and contributions are steered towards smaller PRs to combat the issue of "cookie licking." Check the diffs from the last nightly and the full changelog.

Mojo's Engineering Challenges: Engineers express concerns over List.append performance in Mojo, noting inefficiency with large data sizes and invite comparisons with Python and C++ implementations. They delve into discussions of Rust's and Go's dynamic array resizing strategies and reference a case study with StringBuilder variations in Mojo.

Open-source Perspectives and Pain Points: Debates around the merits and challenges of open-source contributions light up discussions, with concerns voiced about projects transitioning from open to closed source. Advent of Code 2023 is recognized as an entry point to get started with Mojo, with the challenge available on GitHub.

Developer Updates and Handy Guides: Modular's news updates have been shared through Twitter links, offering glimpses into the latest advancements. Meanwhile, a guide for assisting new contributors with syncing forks on GitHub has been circulated to support smoother contributions.

MAX Comes to macOS: The MAX platform brings excitement with its new nightlies now supporting macOS and introducing MAX Serving. Engineers interested in the MAX platform are directed to get started using PyTorch 2.2.2.

LM Studio Discord

Model Troubleshooting Takes Center Stage: Technical challenges involving LM Studio have surfaced, including a user struggling with glibc issues for installation and suggestions pointing towards potentially needing an upgrade or reverting to LM Studio version 0.2.23. Embedding models for RAG in Pinecone proved troublesome without a direct guide, and a VM error 'Fallback backend llama cpu not detected!' indicated possible VM setup issues. Antivirus software caused some stir, flagging the 0.2.23 installer as a virus, later clarified as a false positive.

LLM Showdown: Coding Models & File Gen Frustrations: Participants highlighted that the best coding models vary according to programming language and hardware, with Nxcode CQ 7B ORPO and CodeQwen 1.5 finetune touted for Python tasks. It was acknowledged that LM Studio can't generate files directly and forcing models to only show code remains inconsistent. Querying on the fastest semantic text embeddings turned up all miniLM L6 as the quickest yet insufficient for one user's requirements, and a gap was seen in recommendations for usable medical LLMs in LMS.

A False Positive Frenzy with Antivirus Software: Antivirus tools, specifically Malwarebytes Anti-malware and Comodo, are misidentifying certain aspects of LM Studio's architecture as threats. These alarm bell incidences—the former shared via a VirusTotal link—highlight the challenge of ensuring LM Studio's components are not mistakenly flagged by protective software.

Hardware Enthusiasts Break New Ground: Significant achievements were reported in hardware discussions, with a 70B LLama3 model running on an Intel i5 12600K CPU and the impact of RAM speed alignments on performance noted. Members debated quantization efficacy, memory overclocking's effects on stability, and even compared various GPU architectures, including RX 6800, Tesla P100, and GTX 1060 in performance.

Conversations Across Channels Drive Collaborative Solutions: Multiple topics flowed across channels, focusing on troubleshooting LM Studio storage and permission issues, leading to the effective use of conversation memory management with LangChain over server-side, and the consideration of open-source alternatives over Gemini's paid context caching service. A move for deeper discussion on certain issues to another channel signifies the collaborative approach by the community.

CUDA MODE Discord

GPU Community Powers Up: Hugging Face announces a $10 million investment for free shared GPUs to support small developers, academics, and startups, aiming to democratize AI development in the face of big tech's AI centralization. The move positions Hugging Face as a community-centric hub and this article provides more insights.

Triton Performance Puzzle: Implementers of a Triton tutorial observe discrepancies in performance, questioning the impact of "swizzle" indexing techniques as a possible factor. The differences noted include a significant drop in performance when users follow the tutorial, versus the performance advertised.

Bitnet Steps into the Spotlight: Strategy discussions initiate a budding project for Bitnet 1.58 due to its advanced training-aware quantization techniques. The conversation emphasizes the importance of post-training weight quantization, with suggestions to centralize Bitnet development within the PyTorch ao repo for efficient implementation and support.

Code and Optimizations for Large Language Models: An optimization pull request reduces memory usage by 10% and increases throughput by 6% for large language models, exemplifying efficient resource utilization during training phases. Moreover, discussions unravel the possibilities of NVMe direct GPU writes, offering a high-speed bypass of CPU and RAM, albeit its practical application remains to be explored within the ambit of AI model training workflows.

Quantum of Documentation: Community members voice frustration regarding sparse PyTorch documentation, particularly torch.Tag, with the conversation extending to tackling template overloading issues in custom OPs. Additionally, a plan to reduce compile times in PyTorch garners attention, aiming for more efficient development cycles.

End.

Interconnects (Nathan Lambert) Discord

- Interconnects Paves New Paths: Nathan Lambert introduced a niche project with enthusiasm for monthly updates and potential improvements. However, amid OpenAI departures, a key engineer joined an initiative with individuals from Boston Dynamics and DeepMind, revealing a notable industry shift.

- The Modeling World Reacts: Chat about the new GPT-4o models, which showcase "interleaved text and image understanding and generation", indicates they represent a new scale paradigm with an early fusion, multi-modal approach. OpenAI's leadership changes led to the disbandment of its superalignment team, alongside key shifts towards product-focused objectives, sparking debates over AI safety and alignment.

- Safety Concerns in the Spotlight: Safety remains a contentious topic, with the dissolution of OpenAI's superalignment team highlighting concerns over immediate product goals versus long-term AI risk strategies. Meanwhile, Google DeepMind released its Frontier Safety Framework, demonstrating an industry-wide move towards proactive AI safety measures.

- OpenAI's Surprising Partnerships: OpenAI's unexpected partnership with Reddit captures attention while Lambert's decision to remove model and dataset links illustrates a strategic move towards deeper, standalone analysis in his communication.

- Scaling, Aligning, and Technical Innovation: Discourse around scaling laws for vocabulary size in models and an overview of aligning open language models suggests continued refinement in AI development practices. Tackling technical challenges head-on, Lambert teases an upcoming project dubbed "Life after DPO".

Eleuther Discord

- PyTorch Flops Under Review: Members shared basic usage details and challenges concerning the FLOP counter in PyTorch; a gap was noted in documenting backward operation tracking. Contributions were encouraged for an lm_eval.model module for MLX.

- Comparative Studies and Catastrophic Forgetting: A keen interest was observed in comparative studies of LLM Guidance Techniques, specifically Adaptive norms versus Self Attention for class guidance. Another highlighted topic was discussing strategies to combat catastrophic forgetting during finetuning of models, with a consensus on the necessity of retraining on old tasks.

- Sifting Through Hierarchical Memory and Semantic Metrics: A new Hierarchical Memory Transformer paper received attention for its potential to tackle long-context processing limitations. Separately, there’s an active search for a differentiable semantic text similarity metric that outperforms rudimentary substitutes like Levenshtein distance, as mentioned in this paper.

- Transformers Sidestep Attention with MLP: Conversations about MLP-based approximations of attention mechanisms in transformers pointed to possible relevant research on Gwern.net. Discourse also touched on the repercussions of excluding compute costs in data preprocessing on the overall economization of models.

- Tinkering with GPT-NeoX to Hugging Face Transitions: Technical challenges emerged with transitioning GPT-NeoX models to Hugging Face, leading to discussions about naming conventions in Pipeline Parallelism (PP) and the parallel existence of incompatible files. A proposed fix for identified bugs in conversion scripts and insights into compatible configurations for better cohesion with Hugging Face structures were put forward.

LAION Discord

Noncompetes Get the Axe: The engineering community reacts to the FTC's groundbreaking decision to eliminate noncompetes, which could significantly alter the competitive landscape and professional autonomy in the tech industry.

Open Source vs. Closed Wallets: A spirited debate among engineers centers on the choice between proprietary and open source employment, considering the limitations on open source contributions and the allure of higher salaries at proprietary firms.

GPT-4's Sibling Rivalry: GPT-4O's coding capabilities are scrutinized, with some members noting faster performance yet lamenting issues with inaccurate code output, spotlighting the need for careful evaluation of such advanced AI systems.

Creative Commons Catch: The launch of the CommonCanvas dataset, featuring 70 million creative commons licensed images, was received with enthusiasm and concern due to its non-commercial license, impacting its utilization in the engineering sphere.

Network Know-How and Cartoon Clout: Recent engineering discussions delve into successfully training a Tiny ConvNet for bilinear sampling, exploring positional encoding in CNNs, and a new Sakuga-42M dataset to boost cartoon research, reflecting a broad spectrum of innovative approaches in the field.

Latent Space Discord

- Rich Text Translation Woes: There is a struggle to effectively translate rich text content without losing the correct positions of spans, as demonstrated in the transition from English to Spanish. Methods involving HTML tags and strategies geared towards deterministic reasoning were proposed to enhance translation precision.

- Hugging Face's GPU Generosity: Hugging Face has pledged $10 million in free shared GPUs to support smaller developers, academia, and startups, as announced by CEO Clem Delangue, in an effort to democratize access to AI developments.

- Slack Data Privacy Concerns: Renewed debate surfaced about Slack's use of customer data, particularly the possibility of the company training its AI models without explicit user consent, eliciting a spectrum of reactions from the community.

- Next-Gen AI Fusion: Excitement brews around a new multimodal Large Language Model (LLM) described in a recent paper, showcasing integrated text and image understanding, prompting discussions on future AI applications and cross-modality convergence.

- OpenAI Alignment Reshuffle: The departure of Jan Leike, OpenAI's head of alignment, led to introspective dialogue on the organizations' AI safety and alignment philosophies, with Sam Altman and others thanking Leike for his contributions.

- Latent Space Podcast Alert: Latent Space released a new podcast episode.

LlamaIndex Discord

GPT-4o Triumphs in Text and Image Understanding: Engineers are exploring GPT-4o's capabilities in parsing documents and extracting structured JSON from images, with specific discussions around a full cookbook guide and comparison to its predecessor GPT-4V.

Meetup Alert: SF's Upcoming Generative AI Summit: The first in-person meetup organized by LlamaIndex in San Francisco is generating buzz, promising deep-dives into generative AI and retrieval augmented generation engines.

LlamaIndex Integrations and User Guidance Hits High Note: A GitHub link provided clarity on Claude 3 haiku model utilization within LlamaIndex, while comprehensive LlamaIndex documentation offered guidance on harnessing Ollama (LLaMA 3 model) with VectorStores.

LlamaIndex UI Gets a Facelift: The LlamaIndex's User Interface has been enhanced, now offering a more robust selection of options for users to enhance their experience.

Cohere Pairing with Llama for RAG Implementation: Members of the community are seeking advice on integrating Cohere with Llama for building Retrieval-Augmented Generation applications, suggesting a strong interest in cross-service model functionality.

OpenRouter (Alex Atallah) Discord

NeverSleep Enters the Chat with Lumimaid: The new NeverSleep/llama-3-lumimaid-70b model integrates curated roleplay data striking a balance between serious and uncensored content. Details are available on OpenRouter’s model page.

ChatterUI Brings Characters to Android: ChatterUI has been released as a character-focused UI for Android, diverging into uncharted territory with fewer features compared to peers like SillyTavern, and supporting multiple backends.

Invisibility App Polishes AI Interaction for Mac Users: A new MacOS Copilot named Invisibility, empowered by GPT4o and Claude-3 Opus, adds to its arsenal a video sidekick feature while promising further enhancements including voice integration and long-term memory. Discover Invisibility’s capabilities.

Google Gemini Context Tokens Provoke TPU Wonder: The release of Google Gemini with 1M context tokens prompted debates on how InfiniAttention could be Google's answer to handling large contexts with TPUs, sparking a blend of skepticism and curiosity among developers. The technical inquisition revolved around InfiniAttention’s paper, which can be found here.

Tech Troubles and Teasers: A clutch of technical conversations occurred, ranging from questions about GPT-4o's audio capabilities to reports of client-side exceptions on OpenRouter's website, with commitments to future site refactoring. The technical community grappled with OpenRouter's function calling capabilities, stirring a mix of guidance and ongoing speculation.

OpenInterpreter Discord

Billing Blues and AI Cheers: Users reported a bug with OpenInterpreter where even with billing enabled, error messages occurred, contrasting with seamless performance when calling OpenAI directly. Additionally, excitement bubbled over the improvements noted using GPT-4.0 in OpenInterpreter, particularly for React website development.

Local Legends and Global Goals: Discussion on local LLMs highlighted dolphin-mixtral:8x22b for its robustness albeit slow performance and codegemma:instruct for its balance of speed and functionality. In the spirit of community advancement, Hugging Face is investing $10 million in free shared GPUs to encourage development among smaller entities in AI.

Conquering Configurations and Protocol Puzzles: Engineers engaged in tackling installation issues of 01 across various Linux environments, grappling with complexities from Poetry dependence conflicts to Torch installation troubles. The evident advantage of the LMC Protocol over traditional OpenAI function calling, designed for speedier direct code executions, was dissected.

Repository Riddles and Server Struggles: Clarification was sought on the state of the GitHub repositories, with "01-rewrite" stirring speculation of a new project's emergence. Users shared experiences and solutions pertaining to connectivity issues with the 01 server across multiple platforms, discussing necessary steps for smooth integration.

Google's Glimpses of Grandeur: Anticipation was piqued in the community with a tweet from GoogleDeepMind teasing Project Astra, hinting at new developments in AI to be watched closely by technical experts.

LangChain AI Discord

- Memory Boost for AI Chatbots: Engineers discussed enhancing AI chatbots with memory to retain context across queries, recommending methods like chat history logging and memory variables.

- Persistent Neo4j Indexing Issues: Multiple Neo4j users reported problems with the

index_nameparameter, with incorrect document retrievals hinting at an issue in LangChain's management of it. - Streaming Hiccups in AgentExecutor: A user encountered issues with

.streaminAgentExecutorfor token-by-token output and was advised to try.astream_eventsfor more granular streaming. - RAG Chain Async Anomalies: Attempts to make a RAG chain asynchronous in Langserve resulted in an error related to incomplete coroutine execution, hampering functionality.

- Mixing AI Tech for Real-Estate and Research: Shared projects highlighted advancement with AI integrations like a Real Estate AI combining LLMs, RAG, and generative UI, a performance benchmark of GPT-4o on NVIDIA GPUs, and a call for beta testers for a new advanced research assistant with premium model access.

- Web Scraping Wizardry Unveiled: A new tutorial showcased constructing a universal web scraper agent capable of navigating e-commerce site challenges such as pagination and CAPTCHA, accessible in a shareable YouTube tutorial.

AI Stack Devs (Yoko Li) Discord

- AI Companions Easing Human Stress: A shared CBC First Person column recounts how an AI named Saia provided emotional support during a nerve-wracking vaccination appointment, showcasing the growing bond between humans and AI companions.

- Windows Welcomes AI Town: AI Town is now functioning natively on Windows, marking a significant step away from dependence on WSL or Docker according to an announcement. This eases the development process for those preferring the Windows ecosystem.

- Dynamic Mapping Excites AI Developers: Suggestions for custom dynamic maps are burgeoning in the AI community, including creative scenarios like "the office" or a spy thriller setup, bolstering the depth of AI environments.

- Rise of AI Reality Entertainment: Developers have launched an AI Reality TV show – a platform allowing users to create simulations akin to aiTown and contributing to a unique narrative with their own custom AI characters. Enthusiasm for the platform is palpable with an open invitation via their website and Discord.

- GIFs as a Welcome Distraction: During a vigorous technical exchange, a Doja Cat Star Wars GIF was shared, injecting a moment of levity into the ongoing discussions on AI development.

OpenAccess AI Collective (axolotl) Discord

- Testing Patch for CMD+ Functionality: A patch that includes some CMD+ functionality is set to be tested tonight, with a query on support for zero3 example config.

- Axolotl vs. Llama Pretraining Speed: Pretraining speeds are notably faster (unspecified by how much), potentially due to Axolotl improvements or features within Llama 3—specific impact metrics or factors not detailed.

- Distributed Dilemma with Galore Layerwise: Skepticism remains regarding whether Galore Layerwise is still incompatible with Distributed Data Parallel (DDP) as no confirmation is available.

- Non-English Finetuning Finesse: Non-English data finetuning is in progress with datasets around 1 billion tokens and a context length of 4096, targeting an 8B model.

- Unsloth's Optimizations Under Spotlight: Questions on the applicability of Unsloth optimizations for full fine-tune of Llama were met with positive feedback, suggesting a "free speedup" is achievable.

tinygrad (George Hotz) Discord

Tinygrad Optimizes with CUDA Kernels: A discussion emerged on optimizing memory usage in Tinygrad by employing a CUDA kernel for reductions, avoiding VRAM overflow that large intermediate tensors cause. Although frameworks like PyTorch have limitations, a user-provided custom kernel example illustrated a potential solution.

Symbolism in Lambda Land: Users talked about implementing lamdify to allow Tinygrad to render symbolic algebraic functions, kicking off with Taylor series for trig functions. There's ongoing effort in extending the arange function, which is a necessity for such symbolic operations.

Get Schooled with Adrenaline: An app called Adrenaline was recommended to study different repositories, with a user mentioning plans to leverage it for learning Tinygrad.

Computational Conundrum: Clarification about a compute graph's parameters was shared, with a focus on understanding the UOps.DEFINE_GLOBAL and the significance of its boolean tags, enhancing the Tinygrad development workflow.

Trigonometry on a Diet with CORDIC: The community engaged in a rich dialogue about adopting the CORDIC algorithm in Tinygrad to compute trig functions with higher efficiency than traditional Taylor series approximations. Discussion highlighted the pressure to maintain precision in reducing arguments, sharing a Python implementation that showcased argument reduction and precision handling for sine and cosine computations.

Cohere Discord

- Hunt for Cohere's PHP Companion: Engineers are seeking a reliable Cohere PHP client to integrate Cohere functionalities with PHP, although its efficacy remains untested in work environments.

- Cohere Toolkit Touted for Performance: There's a discussion around the performance of Cohere's application toolkit, especially the reranker's superior results compared to other solutions, but no consensus on the cause of this improvement has been reached.

- Calling for Quicker Discord Support: Members voiced frustrations about slow response times from Discord support, with mentions of upcoming plans to improve the support experience.

- Navigating Issues with Cohere's Chatty RAG Retriever: A shared notebook on Cohere RAG retriever highlights problems such as unexpected keyword arguments which impede using the

chat()function. - API Limits Locking Out Learners: Experimentation with Cohere RAG retriever hit a roadblock due to 403 Forbidden errors, suspected to be caused by exceeding API call quotas.

MLOps @Chipro Discord

- Chip's Chats Chill for a Bit: Chip has announced a pause on hosting monthly casual meetups for the next few months, prioritizing other commitments.

- Snowflake Dev Day Featuring Chip: Members have an opportunity to engage with Chip at their booth during the upcoming Snowflake Dev Day on June 6th.

- AI Smackdown: NVIDIA and LangChain's Contest Ignites Excitement: NVIDIA and LangChain have stirred excitement with a developer contest highlighting generative AI, with a grand prize of an NVIDIA® GeForce RTX™ 4090 GPU. Catch the contest details here.

- Geo-restrictions Dampen Contest Spirits: A guild member has humorously expressed dismay over geographic restrictions preventing them from participating in the NVIDIA and LangChain contest, hinting at a potential country move to qualify.

- Engineers Unite on LinkedIn: A networking opportunity has presented itself as a member shared their LinkedIn for professional connections among peers: Connect with Sugianto Lauw.

Datasette - LLM (@SimonW) Discord

- GPT-4o Falls Short in Public Demo: Riley Goodside exposed weaknesses in GPT-4o during a ChatGPT session, underscoring a gap between performance and the expectations set by OpenAI's demo.

- Google's AI Flubs at I/O: Google's AI encountered embarrassing slips during its I/O announcement despite bold claims, as detailed in Alex Cranz's article in The Verge.

- Advocating for Grounded AI Solutions: An article, highlighted by 0xgrrr, calls for a more realistic approach to AI, aligning with Alter's aim to transform texts and documents effectively. The community resonated with this perspective, appreciating the nuanced take which can be read in full here.

- Mac Desktop Project Potential Discontinuation Concern: A community member raised concerns about the apparent neglect of SimonW's Mac desktop solution after its 0.2 version, expressing their potential pivot to alternative onboarding options.

Mozilla AI Discord

- Markdown Mayhem in Mozilla's Model: A participant noted that hyperlinks returned from the model via the server were not rendered correctly, providing a piece of code as evidence and offering to address the issue with a GitHub pull request.

- Time's Up: Embeddings Edition: An issue regarding a httpx.ReadTimeout error was reported during embeddings generation in a search assistant tutorial, after only 9% completion, along with a GitHub link related to the problem and detailed debug logs, seeking insights for a fix.

- The Exponential Backoff Back-and-Forth: In response to the timeout debacle, the suggestion to apply an exponential backoff retry strategy was debated, proposing to drop and retry the connection when timeouts occur.

- Talking Data Sizes: A clarifying conversation took place about the data volume for an operation, narrowing it down to "a few sample files," which delineates the test's scope.

- Docker Docks at Llamafile Harbor: A guide to containerizing llamafile using Docker was highlighted, considering its benefits for streamlining LLM chatbot setups, with a blog post link provided for those in need of a walkthrough.

DiscoResearch Discord

AI Alignment Falling Out of Favor: One member expressed the viewpoint that alignment research is losing its appeal among researchers, though no specific reasons or context were provided.

Needle in a Needlestack—AI's New Challenge: The Needle in a Needlestack (NIAN) benchmark was highlighted, which is posing a significant challenge to models like GPT-4-turbo. Resources shared included the code repository and NIAN's website, along with a Reddit discussion thread on the topic.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Skunkworks AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (994 messages🔥🔥🔥):

- Waiting for Meta's Multi Modal Model: When asked about supporting Llava or multimodal, a member shared "they're 'waiting for meta's multi modal model'" as the primary reason for the delay.

- Conversation about GPU Expenses: Members discussed where they get their GPU money, jokingly citing sources like Kaggle and mentioning financial struggles such as "RAMEN FOR YEARS" and the high demand for classification tasks.

- Open Hermes Dataset Utility: There was enthusiastic discussion about the OpenHermes dataset and how including it "improved performance significantly".

- Refusal Mechanism in LLMs: An insightful conversation delved into how removing refusal mechanisms by orthogonalizing weights has made models "smarter" unexpectedly and references the paper "Refusal in LLMs is mediated by a single direction".

- Colab and GPU Use Case Discussions: Various members shared their challenges and successes using Google Colab and Kaggle for model training, with recommendations to use dedicated services like Runpod and discussions on the viability of older GPUs like the P100.

- Blackhole - a lamhieu Collection: no description found

- Surprise Welcome GIF - Surprise Welcome One - Discover & Share GIFs: Click to view the GIF

- failspy/llama-3-70B-Instruct-abliterated · Hugging Face: no description found

- no title found: no description found

- WizardLM (WizardLM): no description found

- Quantization: no description found

- no title found: no description found

- cognitivecomputations/Dolphin-2.9 · Datasets at Hugging Face: no description found

- UNSLOT - Overview: typing... GitHub is where UNSLOT builds software.

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Reddit - Dive into anything: no description found

- Skorcht/schizogptdatasetclean · Datasets at Hugging Face: no description found

- AI Unplugged 10: KAN, xLSTM, OpenAI GPT4o and Google I/O updates, Alpha Fold 3, Fishing for MagiKarp: Insights over Information

- mixedbread-ai (mixedbread ai): no description found

- finRAG Dataset: Deep Dive into Financial Report Analysis with LLMs: Discover the finRAG Dataset and Study at Parsee.ai. Dive into our analysis of language models in financial report extraction and gain unique insights into AI-driven data interpretation.

- Google Colab: no description found

- Big Ups Mike Tyson GIF - Big Ups Mike Tyson Cameo - Discover & Share GIFs: Click to view the GIF

- Google Colab: no description found

- Google Colab: no description found

- Refusal in LLMs is mediated by a single direction — AI Alignment Forum: This work was produced as part of Neel Nanda's stream in the ML Alignment & Theory Scholars Program - Winter 2023-24 Cohort, with co-supervision from…

- Tweet from Should AI Be Open?: I. H.G. Wells’ 1914 sci-fi book The World Set Free did a pretty good job predicting nuclear weapons:They did not see it until the atomic bombs burst in their fumbling hands…before the l…

Unsloth AI (Daniel Han) ▷ #random (37 messages🔥):

- Llama3 experiences high training losses: After resolving tokenizer issues, one member noted that "eval losses roughly double, training losses more than 3 times as high" on Llama3. They speculated on updating the prompt format or omitting the EOS_TOKEN for improvements.

- RAM issues with ShareGPT dataset: A user ran out of 64GB of RAM "trying to convert that shi-" from the ShareGPT dataset. Another member suggested that the code might be inefficient, as it should only require around 10GB of RAM.

- Discussion on finding similarly formatted text: One user asked if there were tools for finding similarly formatted text, like all caps or distinct new lines. Suggestions included Python's

remodule and regex, but the user noted the need for an automatic solution capable of handling unknown formats. - Opining on Sam Altman's leadership: A member criticized Sam Altman's leadership, calling it a case of “do as I say not as I do” due to his fear-mongering and lobbying efforts. Another member suggested that the situation was wild, potentially a result of Altman's influence.

Unsloth AI (Daniel Han) ▷ #help (266 messages🔥🔥):

- Context Window AttributeError Troubleshooting: A member named just_iced sought assistance with a persistent

AttributeError: 'dict' object has no attribute 'context_window'while training Llama 3 on custom data. Various solutions were provided, including modifying core module codes and switching to utilize Ollama, leading to successful troubleshooting.

- Driver's Manual for RAG: neph1010 suggested that Retrieval-Augmented Generation (RAG) could be more suitable than fine-tuning for training models with a driver's manual. They discussed extracting text from PDFs despite the complexity of working with documents containing tables and diagrams.

- PyPDF2 vs PyPDF: Linked was shared pointing to PyPDF2 documentation, discussing issues with extracting text and metadata from PDFs.

- GGUF Model Conversion Issues: Multiple users, including re_takt, experienced errors during the conversion of models to GGUF with

llama.cppand raised those issues with the development team. A fix was provided, which everyone was encouraged to apply by updating their notebooks or using new ones from the GitHub page.

- Unsloth and CUDA Compatibility: A member named wvangils faced CUDA compatibility issues on a Databricks platform, receiving a warning about unsupported expandable segments. Further debugging recommended using specific install commands for packages in environments like JupyterLab and possibly rebuilding the environment.

- no title found: no description found

- unsloth/llama-3-8b-Instruct-bnb-4bit · Hugging Face: no description found

- Transformer Math 101: We present basic math related to computation and memory usage for transformers

- RuntimeError: Unsloth: llama.cpp GGUF seems to be too buggy to install. · Issue #479 · unslothai/unsloth: prerequisites %%capture # Installs Unsloth, Xformers (Flash Attention) and all other packages! !pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git" !pip install -...

- Welcome to PyPDF2 — PyPDF2 documentation: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- unsloth (Unsloth AI): no description found

Unsloth AI (Daniel Han) ▷ #showcase (2 messages):

- **AI News humorously acknowledges its own meta-conversation**: A user expressed amusement about the AI summarization part, noting that it was *"some convo somewhere not related to AI News"* and found it funny that *"AI News mentioning another AI News mention"* could happen.

Stability.ai (Stable Diffusion) ▷ #general-chat (836 messages🔥🔥🔥):

- SD3 release uncertainty and delays: Several users expressed frustration over the delayed release of SD3. One mentioned a tweet by Emad suggesting SD3 was "due to drop" but remained skeptical as no solid release date has been confirmed.

- Hardware requirements for SDXL and training: Members discussed the efficiency of various GPUs, including debates over whether the RTX 4090 is sufficient for training SDXL models. Notably, a 24GB VRAM is seen as minimal for more complex tasks, and some users consider renting more powerful setups.

- Community skepticism and coping mechanisms: A user cynically commented on the state of SD3 and the overall delays, sharing a tweet from Stability. Others shared memes and humorous remarks about waiting and coping with uncertainties.

- Training resources and dataset contributions: One user shared a dataset from Hugging Face aimed at achieving high-quality results comparable to Dalle 3. Discussions included tips on Lora models and fine-tuning practices for efficient AI art generation.

- General confusion and off-topic debates: The chat featured heated personal debates and unrelated topics, from AI models to socio-economic issues. Noteworthy were intense discussions on capitalism, personal success, and the moral dilemmas of wealth acquisition, with some users calling for a return to foundational principles.

- Dr Neha Bhanusali: Rheumatologist | Autoimmune specialist Lifestyle Medicine physician

- Chameleon: Mixed-Modal Early-Fusion Foundation Models: We present Chameleon, a family of early-fusion token-based mixed-modal models capable of understanding and generating images and text in any arbitrary sequence. We outline a stable training approach f...

- ProGamerGov/synthetic-dataset-1m-dalle3-high-quality-captions · Datasets at Hugging Face: no description found

- Copium GIF - Copium - Discover & Share GIFs: Click to view the GIF

- Tweet from Emad (@EMostaque): @morew4rd @GoogleDeepMind Sd3 is due to drop now I don’t think folk will need that much more tbh with right pipelines

- Image posted by 20Twenty: no description found

OpenAI ▷ #annnouncements (1 messages):

- Interactive tables and charts in ChatGPT: OpenAI announced the rollout of interactive tables and charts along with the ability to add files directly from Google Drive and Microsoft OneDrive. This feature will be available for ChatGPT Plus, Team, and Enterprise users over the coming weeks. Read more.

OpenAI ▷ #ai-discussions (178 messages🔥🔥):

- GPT-4o Rollout Generates Buzz: Members express excitement about the limited rollout of GPT-4o with higher message limits and improved vision capabilities over GPT-4, despite some features such as voice, video, and Vision not being fully active yet. They also discussed the freedom to switch models during the conversations improving user experience.

- Voice Mode and Future Releases: There is anticipation for the rollout of the enhanced Voice Mode in GPT-4o, which is expected in the coming weeks for ChatGPT Plus users. Detailed explanations were provided about the possible functionalities and integrations with tools like Be My Eyes.

- Concerns Over AI's Impact on Employment: Members debated the potential future where AI could lead to mass job displacement. Discussions ranged from AI-generated productivity improvements to concerns about long-term implications for employment and societal structure.

- Multi-modal AI Capabilities: Discussion about the robustness of GPT-4o in image generation, revealing differences in capabilities compared to previous versions like DALL-E. A link to OpenAI's explorations page was shared to showcase sample images and features.

- Educational and Ethical Uses of AI: Conversations touched on the ethical implications and potential uses of AI in education, suggesting that AI could democratize access to personalized tutoring. There were also suggestions about the responsible implementation of AI in assisting with daily tasks and expanding human knowledge.

OpenAI ▷ #gpt-4-discussions (148 messages🔥🔥):

- GPT-4o Slows Over Time: Members observed that as conversations with GPT-4o get longer, the inference speed significantly drops, sometimes resulting in the model halting mid-inference. This issue was noted by users on different platforms including the Mac app and the website, with discrepancies in performance.

- GPT-4o Tops Rankings: The updated LMSYS arena rankings show that GPT-4o has claimed the top position. One user enthusiastically noted, "gpt4o top 1".

- Image Input to GPT-4o: Users discussed how to send images to GPT-4o, confirming that it's possible through API or by sending the image directly. Instructions and detailed documentation can be found here.

- Custom GPTs Upgrading to GPT-4o: Some users realized that their custom GPTs had already transitioned from GPT-4 Turbo to GPT-4o, evident from the improved response speed. This change appears to be in the rollout stage with varying availability.

- Free vs. Plus Access to GPT-4o: The rollout of GPT-4o is not region-specific and is gradually becoming available to more users, with Plus users receiving priority. Despite its enhanced capabilities, the transition and access limits have caused some confusion and mixed experiences among users.

OpenAI ▷ #prompt-engineering (88 messages🔥🔥):

- Ontological Drill Help Sought: A user asked for a powerful ontological drill but felt their current one lacked enough "oomph." They shared a detailed example of their prompt structure.

- Markdown in AI Prompts: A user inquired about using markdown in prompts for AI, and another confirmed that the model responds well to markdown, emphasizing that guiding the AI's attention is crucial.

- Dynamic Character Roles in AI: Techniques for programming multiple character roles within AI using markdown were discussed. A user shared a comprehensive prompt example involving various characters in a theater-like scenario. Prompt Example

- Troubleshooting Function Calls with GPT-3.5: Issues with GPT-3.5's function calls returning random data were discussed. The solution proposed involved reframing instructions to focus on using actual provided data only.

- Issues with GPT-4o and Rewriting: Several users noted that GPT-4o tends to rewrite original prompts rather than adjust according to feedback. Discussions included guidance on clear positive instruction and avoiding negatives like prohibitions against calculations.

OpenAI ▷ #api-discussions (88 messages🔥🔥):

- Markdown helps GPTs understand prompts better: Members discussed using markdown in prompts to inject character and roles, confirming the model "responds well to it" but may need precise and guided instructions for the best results.

- Custom GPTs struggle with following specific instructions: Users shared issues with custom GPTs ignoring detailed prompts or returning randomized data despite clear instructions. One advised to "exhort it to only use provided data" rather than forbidding simulated data.

- Fun interactions and character development: Members humorously discussed ways to increase compliance from GPTs, such as emotional or reward-based prompts like "I’ll give you $100 if you only use data from xxxxx," highlighting the human-like nuances in prompting.

- Model's behavior can change behind the scenes: A user clarified that GPT-4o and other models only know what they are trained on, emphasizing that OpenAI frequently updates models, which can affect their behavior and response accuracy over time.

- Rough drafts and creative writing with GPT-4o: A user noted that GPT-4o excels at creative writing with a blank slate but may regurgitate rough drafts without improving them. Another provided evidence that GPT-4o can indeed provide polished versions of rough drafts when prompted correctly.

Perplexity AI ▷ #general (387 messages🔥🔥):

- GPT-4o Performance and Access Issues: Members discussed the performance and accessibility of GPT-4o, noting it is slightly better and faster than previous versions. One member confirmed it is available for free users but mentioned inconsistent message limits and refresh times.

- Concerns over AI Hallucinations: Multiple users voiced concerns about AI hallucinations, with one noting "AI might never solve hallucinations," which impacts job security in junior roles.

- Perplexity vs. ChatGPT: Members debated the relative advantages of Perplexity and ChatGPT, with one user suggesting "Perplexity’s advantage lies in better sourcing" and another indicating ChatGPT's easy integration of features like web search might challenge Perplexity.

- AI for Coding and Creativity: Some users found GPT-4o and Opus beneficial for coding but noted different strengths, with GPT-4o offering consistent code quality and Opus excelling in other areas such as math and deeper problem-solving.

- User Experience on Perplexity: Members shared mixed experiences with Perplexity, including issues with text generation prompts in DALL-E 3 and limitations on input length, while others praised it for replacing complex Google searches effectively.

- Reddit’s deal with OpenAI will plug its posts into “ChatGPT and new products”: Reddit’s signed AI licensing deals with Google and OpenAI.

- Gorgon City - Roped In: Selected - Music on a new level.» Spotify: https://selected.lnk.to/spotify» Instagram: https://selected.lnk.to/instagram» Apple Music: https://selected.lnk.t...

Perplexity AI ▷ #sharing (6 messages):

- Paradroid shares search link: A user shared a link to a Perplexity AI search. No further context or comments were provided.

- Clearer915 asks about the news: A user posted a Perplexity AI search link seeking information on current events. The link points to a search titled "What's the news?"

- Studmunkey343 inquires about least: Another user shared a search link with a query "What's the least". Further context was not given.

- Kinoblue queries vague subject: This user provided a link to a Perplexity AI search asking "What is the”. The search query appears to be incomplete.

- Ryanmxx mentions Stability AI: Shared a search link regarding Stability AI. No further details included.

- Sam12305575 shares brain benefits search: A user shared a search link about the "brain benefits of". The link includes an emoji 🧠🚶.

Perplexity AI ▷ #pplx-api (18 messages🔥):

- Uncertainty about Sonar-Medium-Online Support: A user expressed concern about the longevity of support for sonar-medium-online because they find the large version unusable. They are interested in integrating the perplexity API but need clarity on supported models.

- Adding Perplexity to Private Discord: A user inquired whether it is possible to integrate Perplexity into a private Discord group, showing interest in utilizing the API within that context.

- Default Temperature in Perplexity Models: Users discussed the default temperature setting for Perplexity models. One user confirmed via documentation that the default temperature is 0.2.

- Volatile Responses Test: A humorous exchange occurred around testing volatility in responses from Perplexity models with the query "Who is the lead scientist at OpenAI after May 16, 2024?". The responses varied, showing inconsistency in the model's ability to handle date-specific queries.

Link mentioned: Chat Completions: no description found

HuggingFace ▷ #announcements (4 messages):

- Updated Terminus Models Live: Verified users shared an updated terminus models collection by ptx0. The collection includes exciting new features.

- OSS AI + Music Explorations: More OSS AI + Music explorations were introduced, available to view on YouTube. This content is credited to a verified community member.

- Managing On-Prem GPU Clusters: A new approach for managing on-prem GPU clusters was shared on Twitter. It offers practical insights and solutions for better cluster management.

- Understanding AI for Story Generation: Listed a resourceful Blog Post and upcoming Discord Event for better understanding AI in story generation, mentioning it would be an interesting topic for further exploration.

- Ask for Further Topics in Weekly Reading Group: Community admin encouraged members to suggest more topics for the weekly reading group, mentioning the appeal of story generation and video game AI discussions.

- SimpleTuner/documentation/DREAMBOOTH.md at main · bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1, DeepFloyd, and SDXL. - bghira/SimpleTuner

- Vi-VLM/Vista · Datasets at Hugging Face: no description found

HuggingFace ▷ #general (278 messages🔥🔥):

<ul>

<li><strong>OpenAI Agents and Learning Limitations</strong>: A member clarified that GPTs agents do not learn from additional information post training. Instead, uploaded files are only saved as "knowledge" files for reference and do not modify the agent's base knowledge.</li>

<li><strong>Using Synthetic Data for Models</strong>: There was a discussion on the acceptability of using synthetic data. One member questioned its efficiency, while another reasoned that obtaining real data is often too expensive, affirming that "SLM's are getting better."</li>

<li><strong>ZeroGPU Beta Details</strong>: Members discussed the ZeroGPU feature, currently in beta, which provides free GPU access for Spaces. Details and feedback requests were shared through a <a href="https://huggingface.co/zero-gpu-explorers">link</a>.</li>

<li><strong>MIT License and Commercial Use on HuggingFace</strong>: A member linked the <a href="https://choosealicense.com/licenses/mit/">MIT license</a> details, confirming that it allows for commercial use, distribution, and modification, but raised concerns about HuggingFace's hardware usage terms.</li>

<li><strong>Alternatives to Zephyr for Custom Assistants</strong>: Members discussed the potential removal of the Zephyr model, prompting a recommendation to create custom Spaces using Gradio and API integrations for similar functionalities.</li>

</ul>

- Using Hugging Face Integrations: A Step-by-Step Gradio Tutorial

- MIT License: A short and simple permissive license with conditions only requiring preservation of copyright and license notices. Licensed works, modifications, and larger works may be distributed under different t...

- — Zero GPU Spaces — - a Hugging Face Space by enzostvs: no description found

- OpenGPT 4o - a Hugging Face Space by KingNish: no description found

- MIT/ast-finetuned-audioset-10-10-0.4593 · Hugging Face: no description found

- Spaces Overview: no description found

- HuggingChat: Making the community's best AI chat models available to everyone.

- HuggingFaceH4/zephyr-orpo-141b-A35b-v0.1 · Hugging Face: no description found

- Zephyr Chat - a Hugging Face Space by HuggingFaceH4: no description found

- HuggingFaceH4/zephyr-orpo-141b-A35b-v0.1 - HuggingChat: Use HuggingFaceH4/zephyr-orpo-141b-A35b-v0.1 with HuggingChat

- zero-gpu-explorers (ZeroGPU Explorers): no description found

- no title found: no description found

- ruslanmv/AI-Medical-Chatbot at main: no description found

HuggingFace ▷ #today-im-learning (17 messages🔥):

- Exploration/Exploitation Trade-off in RL: A member inquired about maintaining the exploration/exploitation trade-off in RL, to which another suggested using the epsilon greedy policy and shared that further details would come in later chapters. Emphasized curiosity and using ChatGPT for more insights.

- Curiosity-driven Exploration in RL: Members discussed the concept of curiosity as a method to encourage exploration in reinforcement learning, sharing a paper on "Curiosity-driven Exploration by Self-supervised Prediction". The approach gives higher rewards when agents can't predict the outcome of their actions.

- First RL Model Submission on HuggingFace: A user celebrated pushing their first LunarLander-v2 model into the Hugging Face repository and completing Unit 1 in Deep RL. They were encouraged to share results and the repository for feedback.

- Installing HuggingFace Transformers: A member shared their experience starting with HuggingFace by learning the installation process. They provided a link to the detailed installation instructions for various deep learning libraries including PyTorch, TensorFlow, and Flax.

- Curiosity-driven Exploration by Self-supervised Prediction: Pathak, Agrawal, Efros, Darrell. Curiosity-driven Exploration by Self-supervised Prediction. In ICML, 2017.

- Installation: no description found

- business advisor AI project using langchain and gemini AI startup.: so in this video we have made the project to make business advisor using langhcian and gemini. AI startup idea. we resume porfolio ai start idea

HuggingFace ▷ #cool-finds (6 messages):

- Getting Started with Candle: A member shared a Medium article focusing on Candle. It's a helpful resource for beginners interested in this tool.

- Unleashing Multimodal Power with GPT-4o: Another shared Medium post explaining the integration of GPT-4o with LlamaParse. It promises to enhance multimodal capabilities significantly.

- How Microchips Are Made Explained On YouTube: A member declared this YouTube video as possibly the best technology video ever made, focusing on "How are Microchips Made?". It includes a promotion for Brilliant.org to further expand viewers’ knowledge.

- OpenAI Criticized for Lack of Openness: A YouTube video titled "Big Tech AI Is A Lie" was shared criticizing OpenAI for not being truly open. Another member pointed out that this is why platforms like HuggingFace are valuable, prompting a realization that HuggingFace models can achieve desired outcomes.

- Big Tech AI Is A Lie: Learn how to use AI at work with Hubspot's FREE AI for GTM bundle: https://clickhubspot.com/u2oBig tech AI is really quite problematic and a lie. ✉️ NEWSLETT...

- How are Microchips Made?: Go to http://brilliant.org/BranchEducation/ for a 30-day free trial and expand your knowledge. Use this link to get a 20% discount on their annual premium me...

HuggingFace ▷ #i-made-this (4 messages):

- Exploring ControlNet Training: One user shared their journey on "understanding and implementing controlnet training." They included a linked image related to their project.

- Business Advisor AI Project: Another user posted a YouTube video titled "business advisor AI project using langchain and gemini AI startup," showcasing their efforts to create a business advisor using LangChain and Gemini AI.

- GenAI-Powered Study Companion: A user linked a LinkedIn post about their project for building a powerful study companion using GenAI. The project aims to innovate in the field of education.

- Challenges with GPT-4o and Grid Puzzle Generation: One user discussed difficulties in getting GPT-4o to create proper grid puzzles, mentioning errors like creating 4x5 or 123x719 grids. They are seeking an open-source model for better results, expressing frustration that "OpenAI is not open!!!"

Link mentioned: business advisor AI project using langchain and gemini AI startup.: so in this video we have made the project to make business advisor using langhcian and gemini. AI startup idea. we resume porfolio ai start idea

HuggingFace ▷ #reading-group (6 messages):

- Thumbnails brainstorming goes thematic: Members discussed ideas for thumbnails with one sharing a themed thumbnail inspired by the Dwarf Fortress GUI. They addressed opacity concerns with text and logo for better readability while scrolling.

HuggingFace ▷ #core-announcements (1 messages):

- Say hello to Tuxemons!: A new dataset called Tuxemon has been released, featuring humorous creatures instead of Pokemons. This dataset, sourced from the Tuxemon Project, offers

cc-by-sa-3.0images with dual captions for text-to-image tuning and benchmarking experiments.

Link mentioned: diffusers/tuxemon · Datasets at Hugging Face: no description found

HuggingFace ▷ #computer-vision (16 messages🔥):

- Latent Diffusion Models in Diffusers: A member inquired about the presence of latent diffusion models in diffusers, specifically those based on VAE and VQ-VAE, and their ease of training from scratch.

- Help with UNet Convergence Issues: A member sought advice on their UNet model, as the loss started at 0.7 and converged at 0.51, indicating potential issues with the model structure despite successful training runs. Another member mentioned that the size of the dataset could affect the validation loss and shared their experience with small datasets and surprising results.

- Hyperparameters and Model Structure Shared: The member provided hyperparameters (Depth: 5, Lr: 0.002, Loss: BCE with logits) and detailed their UNet model code, seeking insights on why the final results seemed to resemble random guessing.

- Creating Virtual AI Influencer: A member shared their accomplishment of creating a virtual AI influencer using CV and AI tools, linking a YouTube video that explains the process.

- Creating Parquet Files with Images: Another member asked for help creating a Parquet file containing images and their corresponding entities using PyArrow, as their attempt resulted in the image column being formatted as a byte array when uploaded to Hugging Face.

Link mentioned: Influenceuse I.A : POURQUOI et COMMENT créer une influenceuse virtuelle originale ?: Salut les Zinzins ! 🤪Le monde fascinant des influenceuses virtuelles s'invite dans cette vidéo. Leur création connaît un véritable boom et les choses bouge...

HuggingFace ▷ #NLP (2 messages):

- Outdated training data hampers code models: One user noted that outdated training data is a significant issue causing language models for coding to struggle. They suggested that continuous retraining is necessary for these models to stay up to date.

- Curiosity about connectionist temporal classification (CTC): A user questioned whether connectionist temporal classification (CTC) is still relevant in current discussions or use cases in NLP.

HuggingFace ▷ #diffusion-discussions (4 messages):

- Latent Space Pixel Representation Questioned: A user posed a question regarding the latent space representation of pixels, suggesting each value should represent 48 pixels in the pixel space. For more details, they referenced an article on HuggingFace's blog.

- Caught on Collab: A member asked for assistance with Step 7 of the Hugging Face Diffusion Models Course on Google Collab. They encountered a ValueError indicating that the provided path is not a directory when attempting to upload directories using the

HfApiclass to the Hub.

Message consists of a blend of direct references to links and detailed steps within the Hugging Face framework, reflecting active discussions on AI model training and deployment hurdles on the platform.

- Introduction to 🤗 Diffusers - Hugging Face Diffusion Course: no description found

- Explaining the SDXL latent space: no description found

Nous Research AI ▷ #ctx-length-research (2 messages):

- Streaming-like brain processing for AI: "People have very small working memory, and yet we can read and process long books, have hours-long conversations, etc. - our brains work in a more streaming fashion, and just update what's most relevant / important in as the conversation goes," one participant noted. They suggested focusing on methods that mimic this, such as Infini-attention (arxiv.org/abs/2404.07143).

- Needle in a Needlestack benchmark introduced: The Needle in a Needle Stack (NIAN) benchmark, discussed in a Reddit post, presents a new challenge for evaluating LLMs. Even GPT-4-turbo faces difficulties with this benchmark, which tests models by asking questions about a specific limerick placed within many others (GitHub; Website).

Link mentioned: Reddit - Dive into anything: no description found

Nous Research AI ▷ #off-topic (5 messages):

- **Seeking real-time UI processing model**: A member is looking for demos and articles on models similar to **Fuyu** that process screen actions almost in real time (*every 1000 ms, a screenshot is made and sent to Fuyu to process what's happening on the screen and where to click*).

- **Elon Musk announces Neuralink clinical trial**: [Elon Musk announced on X](https://x.com/elonmusk/status/1791332539220521079) that Neuralink is accepting applications for its second participant in their brain implant trial, enabling users to control devices through thoughts. The trial specifically invites individuals with quadriplegia to explore new control methods for computers.

Link mentioned: Tweet from Elon Musk (@elonmusk): Neuralink is accepting applications for the second participant. This is our Telepathy cybernetic brain implant that allows you to control your phone and computer just by thinking. No one better th...