[AINews] Cerebras Inference: Faster, Better, AND Cheaper

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Wafer-scale engines are all you need.

AI News for 8/27/2024-8/28/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (215 channels, and 2366 messages) for you. Estimated reading time saved (at 200wpm): 239 minutes. You can now tag @smol_ai for AINews discussions!

A brief history of superfast LLM inference in 2024:

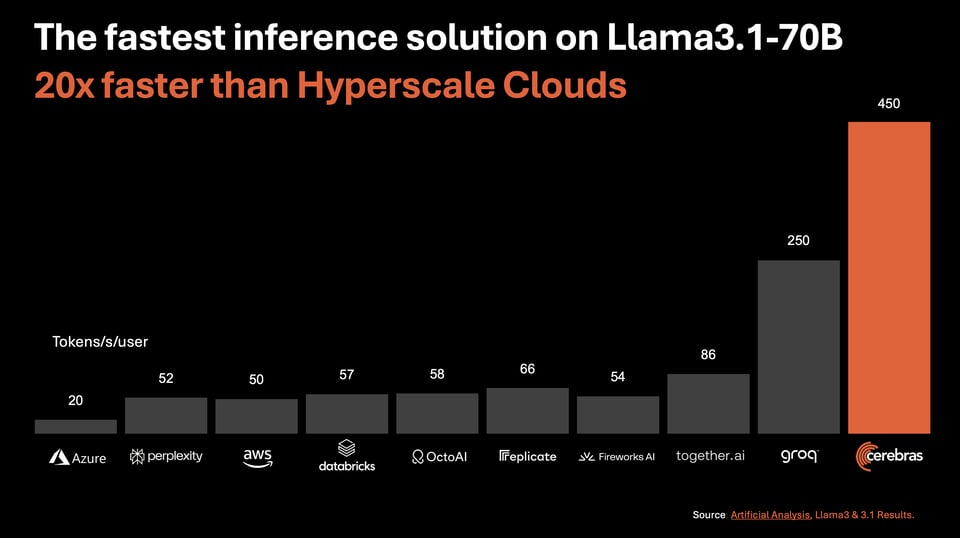

- Groq dominated the news cycle in Feb (lots of scattered discussion here) by achieving ~450 tok/s for Mixtral 8x7B (240 tok/s for Llama 2 70b).

- In May, Cursor touted a specialized code edit model (developed with Fireworks) that hit 1000 tok/s.

It is now finally Cerebras' turn to shine. The new Cerebras Inference service is touting Llama3.1-8b at 1800 tok/s at $0.10/mtok and Llama3.1-70B at 450 tok/s at $0.60/mtok at full precision. Needless to say, full-precision pricing combined with unmatched speed suddenly makes Cerebras a serious player in this market. To take their marketing line: "Cerebras Inference runs Llama3.1 20x faster than GPU solutions at 1/5 the price." This is not technically true: most inference providers like Together and Fireworks tend to guide people towards the quantized versions of their services, with FP8 70B priced at $0.88/mtok and INT4 70B priced at $0.54/mtok. Indisputably better, but not 5x cheaper, not 20x faster.
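As a quick sanity check on the "1/5 the price" claim, the arithmetic can be sketched as below. The prices are the ones quoted in the paragraph above; the variable and function names are illustrative only, and the comparison ignores precision differences between the offerings.

```python
# Prices in USD per million tokens, as quoted in the paragraph above.
CEREBRAS_70B_FULL_PRECISION = 0.60
QUANTIZED_70B_FP8 = 0.88
QUANTIZED_70B_INT4 = 0.54


def price_ratio(competitor: float, cerebras: float) -> float:
    """Return how many times the competitor's price is of the Cerebras price."""
    return competitor / cerebras


fp8_ratio = price_ratio(QUANTIZED_70B_FP8, CEREBRAS_70B_FULL_PRECISION)
int4_ratio = price_ratio(QUANTIZED_70B_INT4, CEREBRAS_70B_FULL_PRECISION)

print(f"FP8 70B price relative to Cerebras:  {fp8_ratio:.2f}x")
print(f"INT4 70B price relative to Cerebras: {int4_ratio:.2f}x")
```

On these numbers the FP8 option costs about 1.47x the Cerebras price and the INT4 option about 0.90x, which is a long way from a 5x gap.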

Note: their free tier is also very generous, at 1 million free tokens daily.

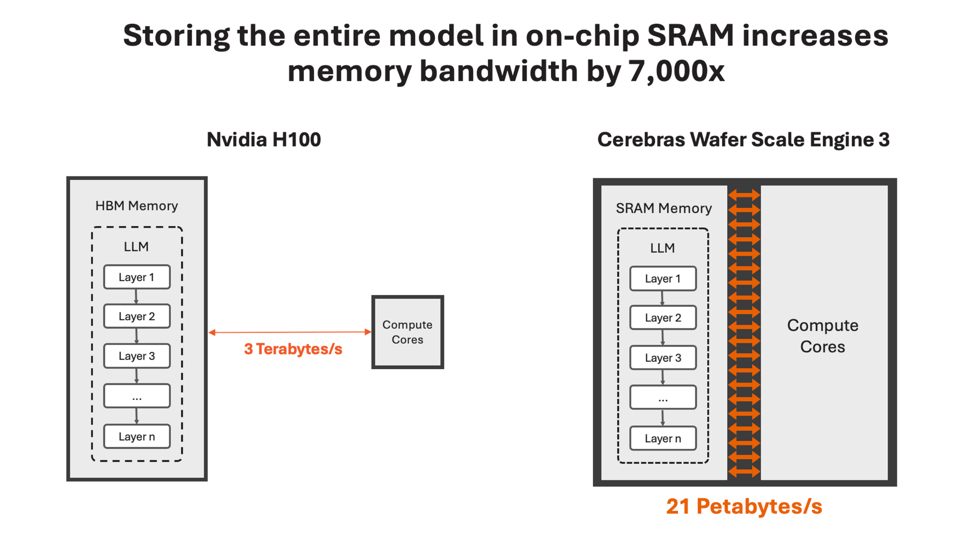

The secret, of course, is Cerebras' wafer-scale chips (what else would you expect them to say?). Similar to Groq's LPU argument, Cerebras says putting the entire model in SRAM is the key.

Your move, Groq and Sambanova.

Today's sponsor: Solaris

Solaris, an office for early stage AI startups in SF, has new desk and office openings! It’s been HQ to founders backed by Nat Friedman, Daniel Gross, Sam Altman, YC and more.

Swyx's comment: I’ve been here for the last 9 months and have absolutely loved it. If you’re looking for a quality place to build the next great AI startup, book a time with the founders here, and tell them we sent you.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Updates and Benchmarks

- Gemini 1.5 Performance: Google's latest Gemini 1.5 models (Pro/Flash/Flash-9b) showed significant improvements in benchmarks, with Gemini-1.5-Flash climbing from #23 to #6 overall. The new Gemini-1.5-Pro demonstrated strong gains in coding and math tasks. @lmsysorg shared detailed results from over 20K community votes.

- Open-Source Models: New open-source models were released, including CogVideoX-5B for text-to-video generation, running on less than 10GB VRAM. @_akhaliq highlighted its high quality and efficiency. Rene 1.3B, a Mamba-2 language model, was also released with impressive performance on consumer hardware. @awnihannun noted its speed of almost 200 tokens/sec on an M2 Ultra.

- Cerebras Inference: Cerebras announced a new inference API claiming to be the fastest for Llama 3.1 models, with speeds of 1,800 tokens/sec for the 8B model and 450 tokens/sec for the 70B model. @AIatMeta verified these impressive performance figures.

AI Development and Infrastructure

- Prompt Caching: Jeremy Howard highlighted the importance of prompt caching for improving performance and reducing costs. @jeremyphoward noted that Anthropic's Claude now supports caching, with cached tokens being 90% cheaper and faster.

- Model Merging: A comprehensive timeline of model merging techniques was shared, tracing the evolution from early work in the 90s to recent applications in LLM alignment and specialization. @cwolferesearch provided a detailed overview of various stages and approaches.

- Distributed Training: The potential for distributed community ML training was discussed, with the idea that the next open-source GPT-5 could be built by millions of people contributing small amounts of GPU power. @osanseviero outlined recent breakthroughs and future possibilities in this area.

AI Applications and Tools

- Claude Artifacts: Anthropic made Artifacts available for all Claude users, including on iOS and Android apps. @AnthropicAI shared insights into the development process and widespread adoption of this feature.

- AI-Powered Apps: The potential for mobile apps created in real-time by LLMs was highlighted, with examples of simple games being replicated using Claude. @alexalbert__ demonstrated this capability.

- LLM-Based Search Engines: A multi-agent framework for web search engines using LLMs was mentioned, similar to Perplexity Pro and SearchGPT. @dl_weekly shared a link to more information on this topic.

AI Ethics and Regulation

- AI Regulation Debate: Discussions around AI regulation continued, with some arguing that being pro-AI regulation doesn't necessarily mean supporting every proposed bill. @AmandaAskell emphasized the importance of good initial regulations.

- OpenAI's Approach: Reports of OpenAI's development of a powerful reasoning model called "Strawberry" and plans for "Orion" (GPT-6) sparked discussions about the company's strategy and potential impact on competition. @bindureddy shared insights on these developments.

Miscellaneous AI Insights

- Micro-Transactions in AI: Andrej Karpathy proposed that enabling very small transactions (e.g., 5 cents) could unlock significant economic potential and improve the flow of value in the digital economy. @karpathy argued this could lead to more efficient business models and positive second-order effects.

- AI Cognition Research: The importance of studying AI cognition, rather than just behavior, was emphasized for understanding generalization in AI systems. @RichardMCNgo drew parallels to the shift from behaviorism in psychology.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Open-Source Text-to-Video AI: CogVideoX 5B Breakthrough

- CogVideoX 5B - Open weights Text to Video AI model (less than 10GB VRAM to run) | Tsinghua KEG (THUDM) (Score: 91, Comments: 13): CogVideoX 5B, an open-weights Text to Video AI model developed by Tsinghua KEG (THUDM), can run on less than 10GB VRAM with the 2B model functioning on a 1080TI and the 5B model on a 3060 GPU. The model collection, including the 2B version released under Apache 2.0 license, is available on Hugging Face, along with a demo space and a research paper.

Theme 2. Advancements in Efficient AI Models: Gemini 1.5 Flash 8B

- Gemini 1.5 Flash 8b (Score: 95, Comments: 24): Google has released Gemini 1.5 Flash 8B, a new small-scale AI model that demonstrates impressive capabilities despite its compact size of 8 billion parameters. The model achieves state-of-the-art performance across various benchmarks, including outperforming larger models like Llama 2 70B on certain tasks, while being significantly more efficient in terms of inference speed and resource requirements.

- Gemini 1.5 Flash 8B was initially discussed in the third edition of the Gemini 1.5 Paper from June. The new version is likely a refined model with improved benchmark performance compared to the original experiment.

- Google's disclosure of the 8 billion parameter count was praised. There's speculation about whether Google will release the weights, but it's deemed unlikely as Gemini models are typically closed-source, unlike the open-source Gemma models.

- Discussion arose about Google's use of standard transformers for Gemini, which surprised some users expecting custom architectures. The model's performance sparked comparisons with GPT-4o-mini, suggesting potential advancements in parameter efficiency.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Advancements and Releases

- Google DeepMind's GameNGen: A neural network-powered game engine that can interactively simulate the classic game DOOM in real-time with high visual quality. This demonstrates the potential for AI to generate interactive game environments. Source

- OpenAI's "Strawberry" AI: Reportedly being prepared for launch as soon as fall 2024. OpenAI has shown this AI to national security officials and is using it to develop another system called "Orion". Details on capabilities are limited. Source 1, Source 2

- Google's Gemini 1.5 Updates: Google has rolled out Gemini 1.5 Flash-8B, an improved Gemini 1.5 Pro with better coding and complex prompt handling, and an enhanced Gemini 1.5 Flash Model. Source

AI in Image Generation and Manipulation

- Flux AI Model: A new AI model for image generation that has quickly gained popularity, particularly for creating photorealistic images. Users are experimenting with training custom LoRA models on personal photos to generate highly realistic AI-created images of themselves. Source 1, Source 2

Robotics and Physical AI

- Galbot G1: A first-generation robot by Chinese startup Galbot, designed for generalizable, long-duration tasks. Details on specific capabilities are limited. Source

Scientific Breakthroughs

- DNA Damage Repair Protein: Scientists have discovered a protein called DNA damage response protein C (DdrC) that can directly halt DNA damage. It appears to be "plug and play", potentially able to work in any organism, making it a promising candidate for cancer prevention research. Source

AI Ethics and Societal Impact

- AI-Generated Content in Media: Discussions around the increasing prevalence of AI-generated content in social media and entertainment, raising questions about authenticity and the future of creative industries. Source

AI Discord Recap

A summary of Summaries of Summaries by GPT4O (gpt-4o-2024-05-13)

1. LLM Advancements and Benchmarking

- Llama 3.1 API offers free access: Sambanova.ai provides a free, rate-limited API for running Llama 3.1 405B, 70B, and 8B models, compatible with OpenAI, allowing users to bring their own fine-tuned models.

- @user shared that the API offers starter kits and community support to help accelerate development.

- Google's New Gemini Models: Google announced three experimental models: Gemini 1.5 Flash-8B, Gemini 1.5 Pro, and an improved Gemini 1.5 Flash.

- @OfficialLoganK highlighted that the Gemini 1.5 Pro model is particularly strong in coding and complex prompts.

2. Model Performance Optimization and Benchmarking

- OpenRouter's DeepSeek Caching: OpenRouter is adding support for DeepSeek's context caching, expected to reduce API costs by up to 90%.

- @user shared information about the upcoming feature aimed at optimizing API cost efficiency.

- Hyperbolic's BF16 Llama 405B: Hyperbolic released a BF16 variant of the Llama 3.1 405B base model, adding to the existing FP8 quantized version on OpenRouter.

- @hyperbolic_labs tweeted about the new variant, highlighting its potential for more efficient model performance.

3. Open-Source AI Developments and Collaborations

- IBM's Power Scheduler: IBM introduced a novel learning rate scheduler called Power Scheduler, agnostic to batch size and number of training tokens.

- @_akhaliq tweeted that this scheduler consistently achieves impressive performance across various model sizes and architectures.

- Daily Bots for Real-Time AI: Daily Bots launched an ultra low latency cloud for voice, vision, and video AI, supporting the RTVI standard.

- @trydaily highlighted that this platform combines the best tools for real-time AI applications, including voice-to-voice interactions with LLMs.

4. Multimodal AI and Generative Modeling Innovations

- GameNGen: Neural Game Engine: GameNGen, the first game engine powered entirely by a neural model, enables real-time interaction with complex environments.

- @user shared that GameNGen can simulate DOOM at over 20 frames per second on a single TPU, achieving a PSNR of 29.4, comparable to lossy JPEG compression.

- Artifacts on iOS and Android: Artifacts, a project by Anthropic, has launched on iOS and Android, allowing for real-time creation of simple games with Claude.

- @alexalbert__ highlighted the significance of this mobile release in bringing the power of LLMs to mobile apps.
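To put the PSNR of 29.4 quoted for GameNGen in context, here is a minimal sketch of the standard PSNR formula, 10 * log10(MAX^2 / MSE). It assumes 8-bit pixel values (MAX = 255), which the summary does not state, and the function names are illustrative.

```python
import math


def psnr(reference, reconstruction, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(reconstruction):
        raise ValueError("inputs must have the same length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


def rmse_for_psnr(psnr_db, max_value=255.0):
    """Invert the formula: the root-mean-square pixel error implied by a PSNR value."""
    return math.sqrt(max_value ** 2 / 10 ** (psnr_db / 10.0))


# Assumption: 8-bit pixels. A PSNR of 29.4 dB then implies this RMS error per pixel.
implied_rmse = rmse_for_psnr(29.4)
print(f"RMS pixel error at 29.4 dB: {implied_rmse:.2f} (on a 0-255 scale)")
```

Under that 8-bit assumption, 29.4 dB corresponds to an RMS error of roughly 8.6 intensity levels per pixel.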

5. Fine-tuning Challenges and Prompt Engineering Strategies

- Unsloth's Continued Pretraining: Unsloth's Continued Pretraining feature allows for pretraining LLMs 2x faster and with 50% less VRAM than Hugging Face + Flash Attention 2 QLoRA.

- @unsloth shared a Colab notebook for continually pretraining Mistral v0.3 7b to learn Korean.

- Finetuning with Synthetic Data: The emerging trend of using synthetic data in finetuning models has gained momentum, highlighted by examples like Hermes 3.

- A user mentioned that synthetic data training requires a sophisticated filtering pipeline, but it's becoming increasingly popular.

PART 1: High level Discord summaries

HuggingFace Discord

- Gamify Home Training?: A member proposed a 'gamified home training' benchmark tool, claiming it for their job application.

- Triton Configuration Headache: A member encountered an issue where response generation wouldn't stop using llama3 instruct with triton and tensorrt-llm or vllm backend.

- Using vllm hosting directly worked flawlessly, indicating a potential issue with their triton configuration.

- Loss of 0.0 - Logging Error?: Discussions centered around the significance of a 'loss curve' in model training.

- One member suggested a loss of 0.0 might indicate a logging error, questioning the feasibility of a perfect model with a loss of 0.0 due to rounding.

- Finetuning Gemma2b on AMD - Experimental Struggles: A member struggled finetuning a Gemma2b model on AMD, attributing the issue to potential logging errors.

- Other members pointed to ROCm's experimental nature as a contributing factor to the difficulties.

- Model Merging Tactics: UltraChat and Mistral: A member proposed applying the difference between UltraChat and base Mistral to Mistral-Yarn as a model merging tactic.

- While some expressed skepticism, the member remained optimistic, citing past successes with 'cursed model merging'.
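On the "loss of 0.0" discussion above: one way a logged loss can read as exactly zero without the model being perfect is fixed-point formatting. The sketch below is an illustration of that rounding effect only, with a made-up loss value and a hypothetical `format_loss` helper; it is not taken from the member's training setup.

```python
def format_loss(loss: float, decimals: int = 3) -> str:
    """Format a loss the way many training loops log it: fixed-point with few decimals."""
    return f"{loss:.{decimals}f}"


tiny_loss = 4e-5  # made-up value: small but clearly non-zero

print(format_loss(tiny_loss))      # three decimals hide the value entirely
print(format_loss(tiny_loss, 6))   # more decimals reveal it
print(f"{tiny_loss:.3e}")          # scientific notation avoids the problem
```

Logging in scientific notation, or with more decimals, makes it easier to tell a rounding artifact from a genuine zero.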

Unsloth AI (Daniel Han) Discord

- VLLM on Kaggle is Working!: A user reported success running VLLM on Kaggle using wheels from this dataset.

- This was achieved with VLLM 0.5.4, a version that is considered relatively new, as 0.5.5 has been released but is not yet widely available.

- Mistral Struggles Expanding Beyond 8k: Members confirmed that Mistral cannot be extended beyond 8k without continued pretraining and this is a known issue.

- They also discussed potential avenues for future performance enhancements, including mergekit and frankenMoE finetuning.

- Homoiconic AI: Weights as Code?: A member shared a [progress report on "Homoiconic AI"](https://x.com/neurallambda/status/1828214178567647584?s=46) which uses a hypernet to generate autoencoder weights and then improves those weights through in-context learning.

- The report suggests that this "code-is-data & data-is-code" approach may be required for reasoning and even isomorphic to reasoning.

- Unsloth's Continued Pretraining Capabilities: A member shared a link to Unsloth's blog post on Continued Pretraining, highlighting its ability to continually pretrain LLMs 2x faster and with 50% less VRAM than Hugging Face + Flash Attention 2 QLoRA.

- The blog post also mentions the use of a Colab notebook to continually pretrain Mistral v0.3 7b to learn Korean.

- Unsloth vs OpenRLHF: Speed & Memory Efficiency: A user inquired about the differences between Unsloth and OpenRLHF, specifically regarding their support for finetuning unquantized models.

- A member confirmed that Unsloth supports unquantized models and plans to add 8bit support soon, emphasizing its significantly faster speed and lower memory usage compared to other finetuning methods.

aider (Paul Gauthier) Discord

- Aider 0.54.0: Gemini Models and Shell Command Improvements: The latest version of Aider (v0.54.0) introduces support for `gemini/gemini-1.5-pro-exp-0827` and `gemini/gemini-1.5-flash-exp-0827` models, along with enhancements to shell and `/run` commands, now allowing for interactive execution in environments with a pty.

- A new switch, `--[no-]suggest-shell-commands`, allows for customized configuration of shell command suggestions, while improved autocomplete functionality in large and monorepo projects boosts Aider's performance.

- Aider Automates Its Own Development: Aider played a significant role in its own development, contributing 64% of the code for this release.

- This release also introduces a `--upgrade` switch to easily install the latest Aider version from PyPI.

- Gemini 1.5 Pro Benchmarks Show Mixed Results: Benchmark results for the new Gemini 1.5 Pro model were shared, demonstrating a pass rate of 23.3% for whole edit format and 57.9% for diff edit format.

- The benchmarks were run with Aider using the `gemini/gemini-1.5-pro-exp-0827` model and the `aider --model gemini/gemini-1.5-pro-exp-0827` command.

- GameNGen: The First Neural Game Engine: The paper introduces GameNGen, the first game engine powered entirely by a neural model, enabling real-time interaction with a complex environment over long trajectories at high quality.

- The model can interactively simulate the classic game DOOM at over 20 frames per second on a single TPU, achieving a PSNR of 29.4, comparable to lossy JPEG compression.

- OpenRouter: Discord Alternative?: A member asked if OpenRouter is the same as Discord.

- Another member confirmed that both services work fine for them, citing the OpenRouter status page: https://status.openrouter.ai/ for reference.

LM Studio Discord

- LM Studio 0.3.1 Released: The latest version of LM Studio is v0.3.1, available on lmstudio.ai.

- LM Studio on Linux Issues: A user reported that running the Linux version of LM Studio through Steam without the `--no-sandbox` flag caused an SSD corruption.

- Snapdragon NPU Not Supported: A user confirmed that the NPU on Snapdragon is not working in LM Studio, even though they have installed LM Studio on Snapdragon.

- LM Studio's AMD GPU Support: LM Studio's ROCM build currently only supports the highest-end AMD GPUs, and does not support GPUs like the 6700XT, causing compatibility issues.

- LM Studio's Security Tested: A user tested LM Studio's security by prompting an LLM to download a program, which resulted in a hallucinated response, suggesting no actual download took place.

Perplexity AI Discord

- Perplexity Pro Users Stuck in Upload Limbo: Users are experiencing issues uploading images and files, with some losing their Pro subscriptions despite continued access in certain browsers.

- This issue is causing frustration among Pro users, especially with a lack of information and estimated timeframe for a fix, leading to humorous responses like a 'this is fine' GIF.

- Claude 3.5's Daily Message Limit: 430: Claude 3.5 and other Pro models are subject to a daily message limit of 430, except Opus which has a 50-message limit.

- While some users haven't reached the combined limit, many find the closest they've gotten is around 250 messages.

- Image Upload Issues - AWS Rekognition to Blame: The inability to upload images is attributed to reaching the AWS Rekognition limits on Cloudinary, a service used for image and video management.

- Perplexity is currently working on resolving this issue, but there's no estimated timeframe for a fix.

- Perplexity Search: Better Than ChatGPT? Debatable: Some users claim Perplexity's search, especially with Pro, is superior to other platforms, citing better source citations and less hallucination.

- However, others argue ChatGPT's customization options, RAG, and chat UX are more advanced, and Perplexity's search is slower and less functional, particularly when compared to ChatGPT's file handling and conversation context.

- Perplexity's Domain-Specific Search Chrome Extension: The Perplexity Chrome extension offers domain-specific search capabilities, allowing users to find information within a specific website without manually searching.

- This feature is praised by some users for its advantage in finding information on a particular domain or website.

Nous Research AI Discord

- DisTrO vs. SWARM: Efficiency Considerations: While DisTrO is highly efficient under DDP, for training very large LLMs (greater than 100B parameters) on a vast collection of weak devices, SWARM may be a more suitable option.

- A member asked if DisTrO could be used to train these large LLMs, suggesting a use case of a billion old phones, laptops, and desktops, but another member recommended SWARM.

- DPO Training and AI-predicted Responses: Exploring Theory of Mind: A member pondered the potential effects of utilizing an AI model's predicted user responses, rather than the actual ones, in DPO training.

- They suggested that this approach might lead to improved theory of mind capabilities within the model.

- Model Merging: A Controversial Tactic: A member proposed merging the differences between UltraChat and base Mistral into Mistral-Yarn as a potential strategy, citing past successes with a similar approach.

- While others expressed doubts, the member remained optimistic about the effectiveness of this "cursed model merging" technique.

- Hermes 3 and Llama 3.1: A Head-to-Head Comparison: A member shared a comparison of Hermes 3 and Llama 3.1, highlighting Hermes 3's competitive performance, if not superiority, in general capabilities.

- Links to benchmarks were provided, showcasing Hermes 3's strengths and weaknesses relative to Llama 3.1.

- Finetuning with Synthetic Data: The Future of Training?: Members discussed the emerging trend of using synthetic data in finetuning models, highlighting Hermes 3 and rumored "strawberry models" as examples.

- While not always recommended, synthetic data training has gained momentum, especially with models like Hermes 3, but requires a sophisticated filtering pipeline.
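The "cursed model merging" idea above (adding the UltraChat-minus-base-Mistral difference onto Mistral-Yarn) resembles what is often called task arithmetic. The sketch below shows that arithmetic on toy weight dictionaries. It is an assumption that the member's approach worked this way; the `apply_delta` helper, the `scale` parameter, and all numeric values are hypothetical.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened toy weights


def apply_delta(target: Weights, finetuned: Weights, base: Weights,
                scale: float = 1.0) -> Weights:
    """Return target + scale * (finetuned - base), computed per parameter."""
    merged: Weights = {}
    for name, target_values in target.items():
        delta = [f - b for f, b in zip(finetuned[name], base[name])]
        merged[name] = [t + scale * d for t, d in zip(target_values, delta)]
    return merged


# Toy stand-ins for the three checkpoints; real models have matching tensor shapes.
base_mistral = {"layer.weight": [1.0, 2.0, 3.0]}
ultrachat = {"layer.weight": [1.5, 2.0, 2.0]}
mistral_yarn = {"layer.weight": [1.0, 2.5, 3.0]}

merged = apply_delta(mistral_yarn, ultrachat, base_mistral)
print(merged)
```

The approach only makes sense when all three checkpoints share an architecture and parameter names, which is part of why such merges attract skepticism.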

OpenRouter (Alex Atallah) Discord

- OpenRouter API Briefly Degraded: OpenRouter experienced a five minute period of API degradation, but a patch was rolled out and the incident appears recovered.

- Llama 3.1 405B BF16 Endpoint Available: Llama 3.1 405B (base) has been updated with a bf16 endpoint.

- Hyperbolic Deploys BF16 Llama 405B Base: Hyperbolic released a BF16 variant of the Llama 3.1 405B base model.

- This comes in addition to the existing FP8 quantized version on OpenRouter.

- LMSys Leaderboard Relevance Questioned: A user questioned the relevance of the LMSys leaderboard, suggesting that it might be becoming outdated.

- They pointed to newer models like Gemini Flash performing exceptionally well.

- OpenRouter DeepSeek Caching Coming Soon: OpenRouter is working on adding support for DeepSeek's context caching.

- This feature is expected to reduce API costs by up to 90%.
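How close a workload gets to the "up to 90%" figure depends on how much of its input is actually served from cache. The sketch below works through that arithmetic under stated assumptions: the per-token price and the cached fraction are made-up numbers, the function is hypothetical rather than an OpenRouter or DeepSeek API, and the 90% discount is assumed to apply only to cached input tokens.

```python
def blended_input_cost(total_tokens: int, cached_fraction: float,
                       price_per_mtok: float, cache_discount: float = 0.90) -> float:
    """Input cost in USD when a fraction of tokens is billed at a discounted cached rate."""
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    cached = total_tokens * cached_fraction
    uncached = total_tokens - cached
    cached_price = price_per_mtok * (1.0 - cache_discount)
    return (uncached * price_per_mtok + cached * cached_price) / 1_000_000


# Hypothetical workload: 10M input tokens at $1.00/mtok, 80% of them cache hits.
without_cache = blended_input_cost(10_000_000, 0.0, 1.00)
with_cache = blended_input_cost(10_000_000, 0.8, 1.00)
saving = 1.0 - with_cache / without_cache

print(f"without caching: ${without_cache:.2f}")
print(f"with caching:    ${with_cache:.2f}")
print(f"saving:          {saving:.0%}")
```

With an 80% hit rate the saving in this toy example is about 72%, so the full 90% is only reached when essentially every input token is a cache hit.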

Eleuther Discord

- Free Llama 3.1 405B API: A member shared a link to Sambanova.ai which provides a free, rate-limited API for running Llama 3.1 405B, 70B, and 8B.

- The API is OpenAI-compatible, allows users to bring their own fine-tuned models, and offers starter kits and community support to help accelerate development.

- TRL.X is Deprecated: A member pointed out that TRL.X is deprecated and has not been updated for a long time.

- Another member inquired about whether it's still being maintained or if there's a replacement.

- Model Training Data - Proprietary or Public?: A member asked about what kind of data people use to train large language models.

- They wanted to know if people use proprietary datasets or public ones like Alpaca and then apply custom DPO or other unsupervised techniques to improve performance, or if they just benchmark with N-shot on non-instruction tuned models.

- Reversing Monte Carlo Tree Search: A member suggested training a model to perform Monte Carlo tree search in reverse.

- They proposed using image recognition and generation to generate the optimal tree search option instead of identifying it.

- Computer Vision Research: Paper Feedback: A member shared they are working on a computer vision diffusion project and are looking for feedback on their paper draft.

- They mentioned that large-scale tests are costly and requested help finding people who could review their work.

LlamaIndex Discord

- LlamaIndex supports GPT-4o-mini?: A user asked if `llama_index.llms.openai` supports using the `gpt-4o-mini` OpenAI model.

- Another member confirmed it does not support this model and shared the list of supported models: `gpt-4`, `gpt-4-32k`, `gpt-4-1106-preview`, `gpt-4-0125-preview`, `gpt-4-turbo-preview`, `gpt-4-vision-preview`, `gpt-4-1106-vision-preview`, `gpt-4-turbo-2024-04-09`, `gpt-4-turbo`, `gpt-4o`, `gpt-4o-2024-05-13`, `gpt-4-0613`, `gpt-4-32k-0613`, `gpt-4-0314`, `gpt-4-32k-0314`, `gpt-3.5-turbo`, `gpt-3.5-turbo-16k`, `gpt-3.5-turbo-0125`, `gpt-3.5-turbo-1106`, `gpt-3.5-turbo-0613`, `gpt-3.5-turbo-16k-0613`, `gpt-3.5-turbo-0301`, `text-davinci-003`, `text-davinci-002`, `gpt-3.5-turbo-instruct`, `text-ada-001`, `text-babbage-001`, `text-curie-001`, `ada`, `babbage`, `curie`, `davinci`, `gpt-35-turbo-16k`, `gpt-35-turbo`, `gpt-35-turbo-0125`, `gpt-35-turbo-1106`, `gpt-35-turbo-0613`, `gpt-35-turbo-16k-0613`.

- LlamaIndex's OpenAI Library Needs an Update: A member reported getting an error related to the `gpt-4o-mini` OpenAI model when using LlamaIndex.

- They were advised to update the `llama-index-llms-openai` library to resolve the issue.

- Pydantic v2 Broke LlamaIndex, But It's Being Fixed: A member encountered an issue related to LlamaIndex's `v0.11` and `pydantic v2` where the LLM was hallucinating the `pydantic` structure.

- They shared a link to the issue on GitHub and indicated that a fix was under development.

- GraphRAG Authentication Errors Solved With OpenAILike: A member experienced an authentication error while using GraphRAG with a custom gateway for interacting with OpenAI API.

- The issue was traced back to direct OpenAI API calls made within the GraphRAG implementation, and they were advised to use the `OpenAILike` class to address this issue.

- Building a Multi-Agent NL to SQL Chatbot: A member sought guidance on using LlamaIndex tools for building a multi-agent system to power an NL-to-SQL-to-NL chatbot.

- They were advised to consider using workflows or reAct agents, but no definitive recommendation was given.

Torchtune Discord

- QLoRA & FSDP1 are not compatible: A user discussed whether QLoRA is compatible with FSDP1 for distributed finetuning, and it was determined that they are not.

- This is a point to consider for future development if the compatibility is needed.

- Torch.compile vs. Liger Kernels for Torchtune: A user questioned the value of Liger kernels in Torchtune, but a member responded that they prefer using `torch.compile`.

- Model-Wide vs. Per-Layer Compilation Performance: The discussion focused on the performance of `torch.compile` when applied to the entire model versus individual layers.

- Impact of Activation Checkpointing: Activation Checkpointing (AC) was found to impact compilation performance significantly.

- Balancing Speed and Optimization with Compilation Granularity: The discussion covered the granularity of model compilation, with different levels impacting performance and optimization potential.

- The goal is to find the right balance between speed and optimization.

OpenAI Discord

- GPT-4 Confidently Hallucinates: A member discussed the challenge of GPT-4 confidently providing wrong answers, even after being corrected.

- They suggested prompting with specific web research instructions, using pre-configured LLMs like Perplexity, and potentially setting up a custom GPT with web research instructions.

- Mini Model vs. GPT-4: One member pointed out that the Mini model is cheaper and seemingly performs better than GPT-4 in certain scenarios.

- They argued that this is primarily a matter of human preference and that the benchmark used doesn't reflect the use cases people actually care about.

- SearchGPT vs. Perplexity: A member inquired about the strengths of Perplexity compared to SearchGPT.

- Another member responded that they haven't tried Perplexity but consider SearchGPT to be accurate, with minimal bias, and well-suited for complex searches.

- AI Sentience?: A member discussed the idea of AI experiencing emotions similar to humans, suggesting that attributing such experiences to AI may be a misunderstanding.

- They expressed that understanding AGI as requiring human-like emotions might be an unrealistic expectation.

- Orion Model Access Concerns: A member expressed concern about the potential consequences of restricting access to Orion models like Orion-14B-Base and Orion-14B-Chat-RAG to the private sector.

- They argued that this could exacerbate inequality, stifle innovation, and limit broader societal benefits, potentially leading to a future where technological advancements serve only elite interests.

Interconnects (Nathan Lambert) Discord

- Google Releases Three New Gemini Models: Google has released three experimental Gemini models: Gemini 1.5 Flash-8B, Gemini 1.5 Pro, and Gemini 1.5 Flash.

- These models can be accessed and experimented with on Aistudio.

- Gemini 1.5 Pro Focuses on Coding and Complex Prompts: The Gemini 1.5 Pro model is highlighted as having improved capabilities for coding and complex prompts.

- The original Gemini 1.5 Flash model has been significantly improved.

- API Usability Concerns and Benchmark Skepticism: There is discussion around the API's usability with a user expressing frustration about its lack of functionality.

- The user also mentioned that they tried evaluating the 8B model on RewardBench, but considers it a fake benchmark.

- SnailBot Delivers Timely Notifications for Shortened Links: SnailBot notifies users via Discord before they receive an email when a link is shortened using livenow.youknow.

- However, the user also noted that SnailBot did not recognize a URL change, demonstrating that the tool has limitations.

- Open Source Data Availability Parallels Code Licensing Trends: A user predicted that the open-source debate about data availability will follow a similar trajectory to the status quo on code licensing.

Cohere Discord

- Cohere API Errors & Token Counting: A user reported a 404 error when using Langchain and Cohere TypeScript to make subsequent calls to the Cohere API.

- The error message indicated a "non-json" response, which suggests that the Cohere API returned a 404 page instead of a JSON object. The user also asked about token counting for the Cohere API.

- Aya-23-8b Inference Speed: A user asked if the Aya-23-8b model can achieve an inference time under 500 milliseconds for about 50 tokens.

- Model quantization was suggested as a potential solution to achieve faster inference times.

- Persian Tourist Attractions App: A Next.js app was launched that combines Cohere AI with the Google Places API to suggest tourist attractions in Persian language.

- The app features detailed information, including descriptions, addresses, coordinates, and photos, in high-quality Persian.

- App Features & Functionality: The app leverages the power of Cohere AI and the Google Places API to deliver accurate and engaging tourist suggestions in Persian.

- Users can explore tourist attractions with detailed information, including descriptions, addresses, coordinates, and photos, all formatted in high-quality Persian.

- Community Feedback & Sharing: Several members of the community expressed interest in trying out the app, praising its functionality and innovative approach.

- The app was shared publicly on GitHub and Medium, inviting feedback and collaboration from the community.
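For the Aya-23-8b latency question above, it can help to turn the target into a throughput figure. The sketch below is only back-of-the-envelope arithmetic: the function name is hypothetical, and it assumes the whole 500 ms budget goes to token generation (no prompt processing or network overhead).

```python
def required_tokens_per_second(num_tokens: int, budget_ms: float) -> float:
    """Minimum sustained generation rate needed to emit num_tokens within budget_ms."""
    if budget_ms <= 0:
        raise ValueError("budget_ms must be positive")
    # Convert the budget from milliseconds to seconds before dividing.
    return num_tokens / (budget_ms / 1000.0)


# About 50 tokens in under 500 ms, as in the question above.
print(required_tokens_per_second(50, 500))  # 100.0
```

Any real deployment would need a higher rate than this, since part of the budget is spent before the first token is generated.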

Modular (Mojo 🔥) Discord

- Mojo Compiler Eliminates Circular Imports: Mojo is a compiled language, so it can scan each file and determine the shapes of structs before compiling, resolving circular imports because it has all the functions it needs to implement `to_queue`.

- Python's approach to circular imports differs, as it executes each module in sequence at import time, leading to potential problems that Mojo's compiler avoids.

- Mojo Compiler Optimizes Struct Sizes: Mojo's compiler knows the size of a pointer, allowing it to figure out the shape of `List` without needing to look at `Queue`.

- Mojo uses an `Arc` or some other type of pointer to break the circular import loop.

- Mojo's Potential for Top Level Statements: Mojo currently doesn't have top-level statements, but they are expected to handle circular imports by running the top-level code before `main` starts, in the order of imports.

- This will ensure that circular imports are resolved correctly and efficiently.

- Mojo's Unusual Performance Curve: A user observed a sharp increase in Mojo's performance at around 1125 fields, speculating that a smallvec or arena might be overflowing.

- Another user suggested that a 1024 parameter single file might be the cause.

- Mojo's Named Return Slots Explained: Mojo supports named return slots, allowing for syntax like `fn thing() -> String as result: result = "foo"`.

- This feature appears to be intended for "placement new" within the callee frame, although the syntax may change.

Latent Space Discord

- Google Unveils New Gemini Models: Google released three experimental Gemini models: Gemini 1.5 Flash-8B, Gemini 1.5 Pro, and a significantly improved Gemini 1.5 Flash model.

- Users can explore these models on aistudio.google.com.

- Anthropic's Claude 3.5 Sonnet: A Coding Powerhouse: Anthropic's Claude 3.5 Sonnet, released in June, has emerged as a strong contender for coding tasks, outperforming ChatGPT.

- This development could signal a shift in LLM leadership, with Anthropic potentially taking the lead.

- Artifacts Takes Mobile by Storm: Anthropic's Artifacts, an innovative project, has launched on iOS and Android.

- This mobile release allows for the creation of simple games with Claude, bringing the power of LLMs to mobile apps.

- Cartesia's Sonic: On-Device AI Revolution: Cartesia, focused on ubiquitous AI, introduced its first milestone: Sonic, the world's fastest generative voice API.

- Sonic aims to bring AI to all devices, facilitating privacy-preserving and rapid interactions with the world, potentially transforming applications in robotics, gaming, and healthcare.

- Cerebras Inference: Speed Demon: Cerebras launched its inference solution, showcasing impressive speed gains in AI processing.

- The solution, powered by custom hardware and memory techniques, delivers speeds up to 1800 tokens/s, surpassing Groq in both speed and setup simplicity.

OpenInterpreter Discord

- OpenInterpreter Gets a New Instruction Format: A member suggested setting `interpreter.custom_instructions` to a string using `str(" ".join(Messages.system_message))` instead of a list, to potentially resolve an issue.

- This change might improve the handling of custom instructions within OpenInterpreter.

- Daily Bots Launches with Real-Time AI Focus: Daily Bots, an ultra low latency cloud for voice, vision, and video AI, has launched with a focus on real-time AI.

- Daily Bots, which is open source and supports the RTVI standard, aims to combine the best tools, developer ergonomics, and infrastructure for real-time AI into a single platform.

- Bland's AI Phone Calling Agent Emerges from Stealth: Bland, a customizable AI phone calling agent that sounds just like a human, has raised $22M in Series A funding and is now emerging from stealth.

- Bland can talk in any language or voice, be designed for any use case, and handle millions of calls simultaneously, 24/7, without hallucinating.

- Jupyter Book Metadata Guide: A member shared a link to Jupyter Book documentation on adding metadata to notebooks using Python code.

- The documentation provides guidance on how to add metadata to various types of content within Jupyter Book, such as code, text, and images.

- OpenInterpreter Development Continues: A member confirmed that OpenInterpreter development is still ongoing and shared a link to the main OpenInterpreter repository on GitHub.

- The commit history indicates active development and contributions from the community.
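The `custom_instructions` suggestion above comes down to joining a list of message fragments into one string. A minimal sketch of that step in plain Python follows; `system_message_parts` is a hypothetical stand-in for `Messages.system_message`, whose real contents are not shown in the discussion, and the final assignment to `interpreter.custom_instructions` is left out because it was not verified here.

```python
# Hypothetical stand-in for the list of system-message fragments; the real
# object from the discussion is not shown in the source.
system_message_parts = ["You are a helpful assistant.", "Prefer short answers."]

# " ".join(...) already returns a str, so wrapping it in str() is harmless
# but not strictly needed.
custom_instructions = " ".join(system_message_parts)

print(type(custom_instructions).__name__)  # str
print(custom_instructions)  # You are a helpful assistant. Prefer short answers.
```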

OpenAccess AI Collective (axolotl) Discord

- Axolotl on Apple Silicon (M3): A user confirmed that Axolotl can be used on Apple Silicon, specifically the M3 chip.

- They mentioned using it on a 128GB RAM Macbook without any errors, but provided no details on training speed or if any customization was necessary.

- IBM Introduces Power Scheduler: A New Learning Rate Approach: IBM has introduced a novel learning rate scheduler called Power Scheduler, which is agnostic to batch size and number of training tokens.

- The scheduler was developed after extensive research on the correlation between learning rate, batch size, and training tokens, revealing a power-law relationship. This scheduler consistently achieves impressive performance across various model sizes and architectures, even surpassing state-of-the-art small language models. Tweet from AK (@_akhaliq)

- Power Scheduler: One Learning Rate for All Configurations: This innovative scheduler allows for the prediction of optimal learning rates for any given token count, batch size, and model size.

- A single learning rate works across diverse configurations by applying the equation `lr = bsz * a * T ^ b`, where `bsz` is the batch size and `T` is the number of training tokens. Tweet from Yikang Shen (@Yikang_Shen)

- QLora FSDP Parameters Debate: A discussion arose regarding the proper setting of `fsdp_use_orig_params` in QLora FSDP examples.

- Some members believe it should always be set to `true`, while others are unsure and suggest it might not be a strict requirement.

- Uncommon Token Behavior in Model Training: A member asked if tokens with unusual meanings in their dataset compared to the pre-training dataset should be identified by the model.

- The member suggested that tokens appearing more frequently than the normal distribution might be an indicator of effective training.
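The Power Scheduler equation quoted above, lr = bsz * a * T ^ b, can be evaluated directly. The sketch below covers only that formula: the function name is hypothetical, and the values of `a` and `b` are placeholders chosen for illustration, not the constants reported by the authors. The full scheduler may involve more than this one expression.

```python
def power_lr(batch_size: int, num_tokens: float, a: float, b: float) -> float:
    """Evaluate lr = bsz * a * T ** b for the given batch size and token count."""
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    return batch_size * a * num_tokens ** b


# Placeholder constants (assumptions, not values from the paper).
A, B = 4.0, -0.5

for tokens in (1e10, 1e11, 1e12):
    lr = power_lr(batch_size=128, num_tokens=tokens, a=A, b=B)
    print(f"T={tokens:.0e}  lr={lr:.3e}")
```

With a negative exponent, as assumed here, the computed rate shrinks as the token budget grows, while doubling the batch size doubles it.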

DSPy Discord

- DSPy's "ImageNet Moment": DSPy's "ImageNet" moment is attributed to @BoWang87 Lab's success at the MEDIQA challenge, where a DSPy-based solution won two Clinical NLP competitions with significant margins of 12.8% and 19.6%.

- This success led to a significant increase in DSPy's adoption, similar to how CNNs became popular after excelling on ImageNet.

- NeurIPS HackerCup: DSPy's Next "ImageNet Moment"?: The NeurIPS 2024 HackerCup challenge is seen as a potential "ImageNet moment" for DSPy, similar to how convolutional neural networks gained prominence after excelling on ImageNet.

- The challenge provides an opportunity for DSPy to showcase its capabilities and potentially gain even greater adoption.

- DSPy's Optimizers for Code Generation: DSPy is being used for code generation, with a recent talk covering its use in the NeurIPS HackerCup challenge.

- This suggests that DSPy is not only effective for NLP but also for other domains like code generation.

- Getting Started with DSPy: For those interested in DSPy, @kristahopsalong recently gave a talk on Weights & Biases about getting started with DSPy for coding, covering its optimizers and a hands-on demo using the 2023 HackerCup dataset.

- The talk provides a great starting point for anyone interested in learning more about DSPy and its applications in coding.

- Changing OpenAI Base URL & Model: A user wanted to change the OpenAI base URL and model to a different LLM (like OpenRouter API), but couldn't find a way to do so.

- They provided code snippets demonstrating their attempts, which included setting the `api_base` and `model_type` parameters in `dspy.OpenAI`.

tinygrad (George Hotz) Discord

- Tinygrad Ships to Europe: Tinygrad is now offering shipping to Europe! To request a quote, send an email to support@tinygrad.org with your address and which box you would like.

- Tinygrad is committed to making shipping as accessible as possible, and will do their best to get you the box you need!

- Tinygrad CPU Error: "No module named 'tinygrad.runtime.ops_cpu'": A user reported encountering a "ModuleNotFoundError: No module named 'tinygrad.runtime.ops_cpu'" error when running Tinygrad on CPU.

- A response suggested using device "clang", "llvm", or "python" to run on CPU, for example: `a = Tensor([1,2,3], device="clang")`.

- Finding Device Count in Tinygrad: A user inquired about a simpler method for obtaining the device count in Tinygrad than using `tensor.realize().lazydata.base.realized.allocator.device.count`.

- The user found that `from tinygrad.device import Device` and `Device["device"].allocator.device.count` provide a more straightforward solution.

LAION Discord

- LAION-aesthetic Dataset Link is Broken: A member requested the Hugging Face link for the LAION-aesthetic dataset, as the link on the LAION website is broken.

- Another member suggested exploring the CaptionEmporium dataset on Hugging Face as a potential alternative or source of related data.

- LAION-aesthetic Dataset Link Alternatives: The LAION-aesthetic dataset is a dataset for captioning images.

- The dataset includes various aspects of aesthetic judgment, potentially making it valuable for image captioning and image generation models.

Gorilla LLM (Berkeley Function Calling) Discord

- Llama 3.1 Benchmarking on Custom API: A user requested advice on benchmarking Llama 3.1 using a custom API, specifically for a privately hosted Llama 3.1 endpoint and their inference pipeline.

- They are seeking guidance on how to effectively benchmark the performance of their inference pipeline in relation to the Llama 3.1 endpoint.

- Gorilla's BFCL Leaderboard & Model Handler Optimization: A user raised a question about whether certain optimizations they are implementing for their function-calling feature might be considered unfair to other models on the BFCL Leaderboard.

- They are concerned about the BFCL Leaderboard's stance on model handler optimizations that may not be generalizable to all models, particularly regarding their use of system prompts, chat templates, beam search with constrained decoding, and output formatting.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email!

If you enjoyed AINews, please share with a friend! Thanks in advance!