[AINews] BitNet was a lie?

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Scaling Laws for Precision (Quantization) are all you need.

AI News for 11/11/2024-11/12/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 2286 messages) for you. Estimated reading time saved (at 200wpm): 281 minutes. You can now tag @smol_ai for AINews discussions!

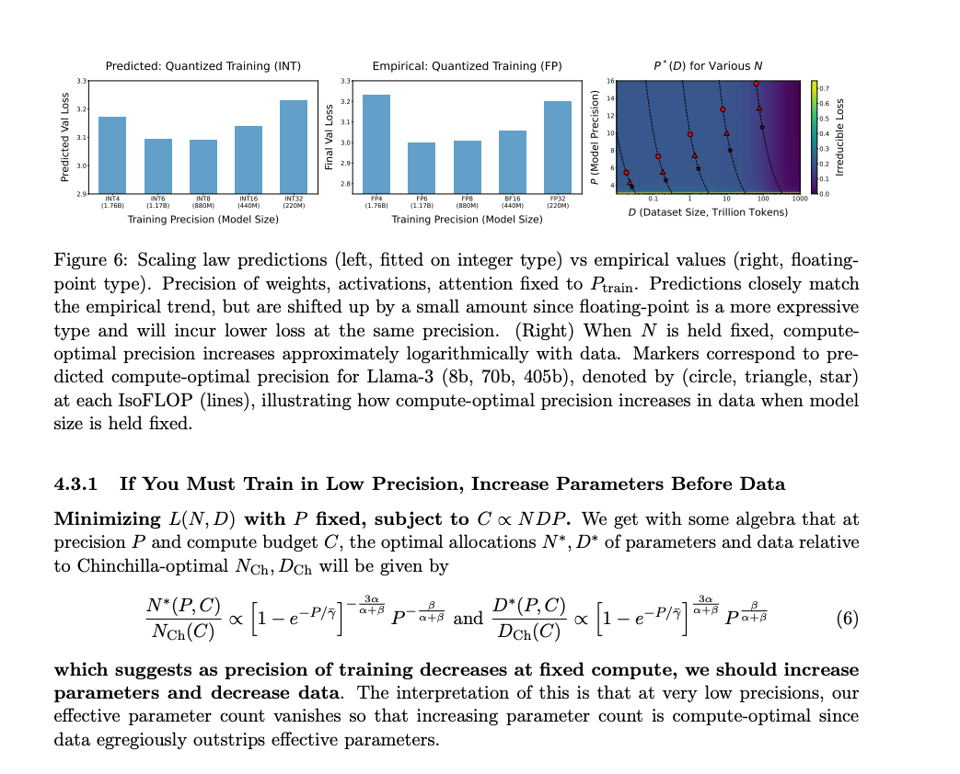

In a growing literature of post-Chinchilla papers, the enthusiasm for quantization reached its zenith this summer with the BitNet paper (our coverage here) proposing as severe a quantization schema as ternary (-1, 0, 1) aka 1.58 bits. A group of grad students under Chris Re has now modified Chinchilla scaling laws for quantization over 465+ pretraining runs and found that the benefits level off at FP6.

Lead author Tanishq Kumar notes:

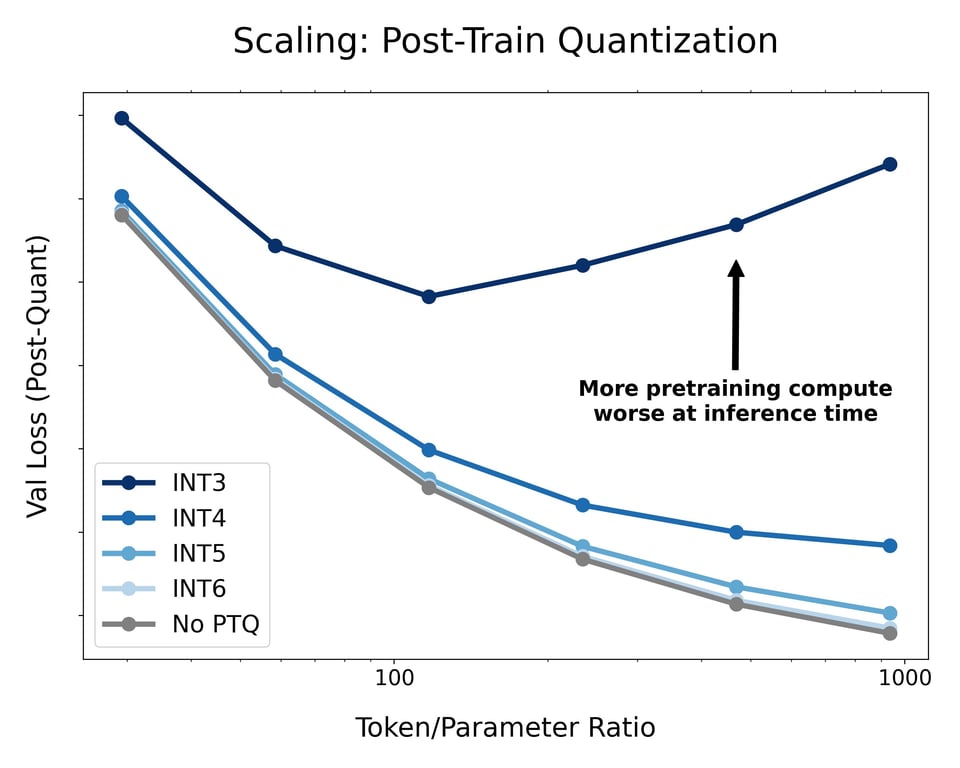

- the longer you train/the more data seen during pretraining, the more sensitive the model becomes to quantization at inference-time, explaining why Llama-3 may be harder to quantize.

- In fact, this loss degradation is roughly a power law in the token/parameter ratio seen during pretraining, so that you can predict in advance the critical data size beyond which pretraining on more data is actively harmful if you're serving a quantized model.

- The intuition might be that as more knowledge is compressed into weights as you train on more data, a given perturbation will damage performance more.

Below is a fixed language model overtrained significantly to various data budgets up to 30B tokens, then post-train quantized afterwards. This demonstrates how more pretraining FLOPs do not always lead to better models served in production.

QLoRA author Tim Dettmers notes the end of the quantized scaling "free lunch" even more starkly: "Arguably, most progress in AI came from improvements in computational capabilities, which mainly relied on low-precision for acceleration (32-> 16 -> 8 bit). This is now coming to an end. Together with physical limitations, this creates the perfect storm for the end of scale. From my own experience (a lot of failed research), you cannot cheat efficiency. If quantization fails, then also sparsification fails, and other efficiency mechanisms too. If this is true, we are close to optimal now. With this, there are only three ways forward that I see... All of this means that the paradigm will soon shift from scaling to "what can we do with what we have". I think the paradigm of "how do we help people be more productive with AI" is the best mindset forward.

[Sponsored by SambaNova] Take a few hours this week to build an AI agent in SambaNova’s Lightning Fast AI Hackathon! They’re giving out $10,000 in total prizes to the fastest, slickest and most creative agents. The competition ends November 22 - get building now!

Swyx commentary: $10k for an ONLINE hackathon is great money for building that fast AI Agent that you've been wanting!

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Models and Tools

- Qwen 2.5-Coder-32B-Instruct Performance: @Alibaba_Qwen announced Qwen 2.5-Coder-32B-Instruct, which matches or surpasses GPT-4o on multiple coding benchmarks. Early testers reported it as "indistinguishable from o1-preview results" (@hrishioa) and noted its competitive performance in code generation and reasoning.

- Open Source LLM Initiatives: @reach_vb emphasized that intelligence is becoming too cheap to meter with open-source models like Qwen2.5-Coder, highlighting their availability on platforms like Hugging Face. Additionally, @llama_index introduced DeepEval, an open-source library for unit testing LLM-powered applications, integrating with Pytest for CI/CD pipelines.

- AI Infrastructure and Optimization: @Tim_Dettmers discussed the limitations of quantization in AI models, stating that we are reaching the optimal efficiency. He outlined three paths forward: scaling data centers, scaling through dynamics, and knowledge distillation.

- Developer Tools and Automation: @tom_doerr shared multiple tools such as Composio, allowing natural language commands to run actions, and Flyscrape, a command-line web scraping tool. @svpino introduced DeepEval for benchmarking LLM applications, emphasizing its integration with Pytest and support for over 14 metrics.

- AI Research and Benchmarks: @fchollet compared program synthesis with test-time fine-tuning, highlighting their different approaches to function reuse. @samyaksharma shared insights on agentic AI systems, focusing on productivity enhancement rather than mere technological advancement.

AI Governance and Ethics

- AI Safety and Policy: @nearcyan reflected on the impact of AI on programming automation, expressing disappointment over lack of innovation in interfaces. @RichardMCNgo discussed the integration of AI safety into government plans, questioning whether these plans will be effective or harmful.

- AI Alignment and Regulation: @latticeflowai introduced COMPL-AI, a framework to evaluate LLMs’ alignment with the EU’s AI Act. @DeepLearningAI also highlighted efforts in AI governance, emphasizing the importance of regulatory compliance.

AI Applications

- Generative AI in Media and Content Creation: @skirano launched generative ads on @everartai, enabling the creation of ad-format images compatible with platforms like Instagram and Facebook. @runwayml provided tips on camera placement in Runway's tools, emphasizing how camera angles influence storytelling.

- AI in Data Engineering and Analysis: @llama_index showcased PureML, which automatically cleans and refactors ML datasets using LLMs, enhancing data consistency and feature creation. @LangChainAI introduced tools for chunking data for RAG applications and identifying agent failures, improving data retrieval and agent reliability.

- AI in Healthcare and Biological Systems: @mustafasuleyman shared work on AI2BMD, which aims to understand biological systems and design new biomaterials and drugs through AI-driven analysis.

Developer Infrastructure and Tools

- Bug Tracking and Error Monitoring: @svpino presented tools like Jam, a browser extension for detailed bug reporting, claiming it can reduce bug fixing time by over 70%. @tom_doerr introduced error tracking and performance monitoring tools tailored for developers.

- Code Generation and Testing: @jamdotdev collaborated on bug reporting tools, while @svpino emphasized the importance of unit testing LLM-powered applications using DeepEval.

- API Clients and Development Frameworks: @tom_doerr introduced a desktop API client for managing REST, GraphQL, and gRPC requests, enhancing developer productivity. Additionally, tools like Composio enable natural language-based actions, streamlining workflow automation.

AI Research and Insights

- LLM Training and Optimization: @Tim_Dettmers discussed the end of scaling data centers and the limits of quantization, suggesting that future advancements may rely more on knowledge distillation and model dynamics.

- AI Collaboration and Productivity: @karpathy mused about a parallel universe where IRC became the dominant protocol, emphasizing the shift towards real-time conversation with AI for information exchange.

- AI in Education and Learning: @DeepLearningAI promoted their Data Engineering certificate, featuring simulated conversations to demonstrate stakeholder requirement gathering in data engineering.

Memes and Humor

- AI and Technology Jokes: @JonathanRoss321 humorously suggested adding a fourth law to Asimov's laws, stating that robots cannot allow humans to believe they are human. @giffmana shared frustrations with ASPX websites, expressing a humorous complaint about outdated technologies.

- Light-Hearted AI Remarks: @Sama joked about AI taking over one's life with LLM automation. @Transfornix playfully referred to rotmaxers being scared of a "real one".

- Humorous Interactions and Reactions: @richardMCNgo expressed a metaphorical reflection on culture and history with a touch of humor. @lhiyasut made a witty remark about the meaning of "ai" in different languages.

Community and Events

- AI Conferences and Meetups: @c_valenzuelab announced the opening of an office in London and listed numerous community meetups around the world, including Toronto, Los Angeles, Shanghai, and more, fostering a global AI community.

- Podcasts and Discussions: @GoogleDeepMind promoted their podcast featuring AI experts, discussing the future of AI assistants and the ethical challenges they pose. @omaarsar0 engaged in discussions with AI thought leaders like @lexfridman and @DarioAmodei.

- Educational Content and Workshops: @lmarena_ai encouraged involvement in their OSS fellowship, while @shuyanzhxyc invited individuals to join their lab at Duke focused on agentic AI systems.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Qwen2.5-Coder 32B Release: Community Reception and Technical Breakdown

- New Qwen Models On The Aider Leaderboard!!! (Score: 648, Comments: 153): New Qwen Models have been added to the Aider Leaderboard, indicating advancements in AI model performance and possibly new benchmarks in the field.

- Discussion highlights the Qwen2.5-Coder models on Hugging Face, with users comparing their performance to other models like GPT-4o and expressing interest in their capability for coding tasks. The 32B version is noted as being particularly strong, with some users finding it superior to GPT-4o in specific tasks.

- There are technical considerations around running these models locally, with discussions on necessary PC specifications and quantization techniques to efficiently handle model sizes like 32B and 72B. Users discuss the benefits of multi-shot inferencing and the need for high memory bandwidth to achieve practical token generation speeds.

- The conversation also touches on model licensing and the open-source community's response to these releases, with some models following the Apache License and others sparking discussions on accessibility and community-driven development. Users are excited about the models' potential, especially in self-hosted environments, and the improvements made from previous versions.

- Qwen/Qwen2.5-Coder-32B-Instruct · Hugging Face (Score: 486, Comments: 134): Qwen/Qwen2.5-Coder-32B-Instruct has been released on Hugging Face, sparking discussions about its capabilities and potential applications. The focus is on its technical specifications and performance in various coding and instructive tasks.

- Discussions highlight the performance and efficiency of the Qwen2.5-Coder-32B-Instruct model, with some users noting its impressive results despite potentially having less computational resources compared to other models. The 14B version is mentioned as being nearly as effective and more accessible for users with standard hardware configurations.

- Users discuss the technical requirements and performance benchmarks for running these models, emphasizing the need for significant RAM and VRAM, with suggestions to use smaller models or quantized versions like the 14B or 7B for those with limited resources. Specific benchmarks and performance metrics such as token evaluation rates are shared to illustrate the model's capabilities.

- Links to resources such as the Qwen2.5-Coder-32B-Instruct-GGUF and a blog post provide additional context and information. Users also discuss the availability of OpenVINO conversions and the implications of model quantization on performance and usability.

- My test prompt that only the og GPT-4 ever got right. No model after that ever worked, until Qwen-Coder-32B. Running the Q4_K_M on an RTX 4090, it got it first try. (Score: 327, Comments: 108): Qwen-Coder-32B successfully handled a complex test prompt on the first attempt, a feat only achieved previously by the original GPT-4. The test was conducted using Q4_K_M on an RTX 4090 GPU.

- Platform and Configuration: The platform and configuration significantly impact model performance, with variations noted between platforms like VLLM and Llama.cpp. Temperature settings and custom UI setups also influence output, as discussed by LocoMod in their personalized implementation using HTMX for dynamic UI modification.

- Model Performance and Comparison: The Qwen-Coder-32B model shows promising results, outperforming smaller models like the 7B, which often fail complex prompts. Users noted the 32B's ability to handle diverse coding languages, while others reminisced about the original GPT-4's superior performance before its capabilities were reduced.

- Technical Specifications and Benchmarks: Benchmarks for the RTX 4090 showed 41 tokens/second using specific configurations, highlighting the importance of hardware in achieving efficient performance. Users shared their setups, including dual 3090s and dual P40s, achieving 22 tokens/second and 7 tokens/second respectively, illustrating the variability in performance based on hardware and configuration.

Theme 2. ExllamaV2 Introduces Vision Model Support with Pixtral

- ExllamaV2 ships Pixtral support with v0.2.4 (Score: 29, Comments: 2): ExllamaV2 has released v0.2.4 with support for the vision model Pixtral, marking its first venture into vision model support. Turboderp suggests future expansions for multimodal capabilities, potentially allowing integration of models like Qwen2.5 32B Coder with vision features from Qwen2 VL, enhancing the appeal of open-source models. For more details, refer to the release notes and related API support discussions on GitHub.

- Qwen2.5-Coder Series: Powerful, Diverse, Practical. (Score: 58, Comments: 8): Qwen2.5-Coder 32B is speculated to be a multi-modal AI model that is both powerful and practical, suggesting potential advancements in diverse applications. The lack of a post body leaves specific details and features of the model unconfirmed.

- The Tongyi official website promises a code mode that supports one-click generation for websites and visual applications, but it has not launched yet, despite previous announcements. Users report that while the model can generate code, such as for a HTML snake game, it fails to render the output.

Theme 3. Exploring Binary Vector Embeddings: Speed vs. Compression

- Binary vector embeddings are so cool (Score: 314, Comments: 20): Binary vector embeddings achieve over 95% retrieval accuracy while providing 32x compression and approximately 25x retrieval speedup, making them highly efficient for data-intensive applications. More details can be found in the blog post.

- Binary Vector Embeddings are gaining attention for their efficiency and speed, with implementations being simplified through tools like Numpy's bitwise_count() for fast CPU execution. The discussion highlights the ease of implementing binary quantization using simple operations like xor + popcnt on cheap CPUs.

- Model Training and Compatibility are critical for effective binary quantization, with models like MixedBread and Nomic being specifically trained for compression-friendly operations. This approach is supported by Cohere's documentation, which emphasizes the need for models to perform well across different compression formats, including int8 and binary.

- The Trade-offs in Compression are significant, as users report unpredictable losses depending on bit diversity, as discussed by pgVector's maintainer. The complexity of measuring these losses suggests a need for careful evaluation to determine if a data pipeline is suitable for binary quantization.

- Is this the Golden Age of Open AI- SD 3.5, Mochi, Flux, Qwen 2.5/Coder, LLama 3.1/2, Qwen2-VL, F5-TTS, MeloTTS, Whisper, etc. (Score: 74, Comments: 28): The post discusses the significant advances in open-source AI models, highlighting the recent releases such as Qwen 2.5/Coder, LLama 3.1/2, and SD 3.5. It emphasizes the narrowing gap between open and closed AI models, citing the affordability of inference services at approximately $0.2 per million tokens and the potential of specialized hardware providers like Groq and Cerebras. The author suggests that open-source models are currently outperforming closed models, with a bright future anticipated despite potential regulatory challenges.

- Hardware Requirements and Performance: Users discuss the performance of AI models on various GPUs, with mentions of the RTX 4070 Super and RTX 3080 for running models like Mochi 1 and generating video clips. The RTX 4070 Super reportedly takes 7.5 minutes to generate a video in ComfyUI, while a 24GB VRAM card like the 3090 is sought for higher quality outputs.

- Open vs. Closed Model Capabilities: The discussion highlights the coding capabilities of Qwen models, noting a gap between open and closed models. The Qwen 2.5 Coder 14B outperforms Llama3 405b in the Aider benchmark, and smaller models like Qwen 2.5 Coder 3B are useful for local tasks, suggesting optimism for open-source advancements.

- Future Prospects and Developments: There is a debate about the current stage of open models, with some believing they are close to closed models but not yet superior. The community anticipates further advancements as consumer-grade hardware improves, and users express interest in new releases like Alibaba's Easy Animate, which requires 12GB VRAM.

Theme 4. Qwen 2.5 Technical Benchmarks: Hardware and Platform Strategy

- qwen-2.5-coder 32B benchmarks with 3xP40 and 3090 (Score: 49, Comments: 22): The qwen-2.5-32B benchmarks reveal that the 3090 GPU achieves a notable 28 tokens/second at a 32K context, while a single P40 GPU can handle 10 tokens/second. The 3xP40 setup supports a 120K context at Q8 quantization but does not scale performance linearly, with row split mode significantly enhancing generation speed. Adjusting the power limit of the P40 from 160W to 250W shows minimal impact on performance, with the 3090 outperforming in generation speed at 32.83 tokens/second when powered at 350W.

- VLLM compatibility with P40 GPUs is limited, with users recommending llama.cpp as the best choice for these GPUs. MLC is noted to perform approximately 20% worse than GGUF Q4 on P40s, lacking flash attention, reinforcing the preference for llama.cpp.

- Discussions around quantization levels like Q4, Q8, and fp8/16 reveal minimal performance differences, as detailed in a Neural Magic blog post. Users highlight the benefits of quantizing the kv cache to reduce memory usage without noticeable quality loss.

- P40 GPU power consumption is effectively managed at around 120W, with little benefit from exceeding 140W. Users report 36 tokens/second on a water-cooled 3090 and emphasize the P40 as a cost-effective option when purchased for under $200.

- LLMs distributed across 4 M4 Pro Mac Minis + Thunderbolt 5 interconnect (80Gbps). (Score: 58, Comments: 30): Running Qwen 2.5 on a setup of four M4 Pro Mac Minis interconnected via Thunderbolt 5 with a bandwidth of 80Gbps is discussed. The focus is on the potential for distributing LLMs across this hardware configuration.

- Discussion centers on the cost-effectiveness and configuration of M4 Pro Mac Minis compared to alternatives like the M2 Ultra and M4 Max. A fully kitted M4 Pro Mini costs around $2,100, with a setup of two providing 128GB of VRAM compared to the $4,999 M4 Max, though the latter offers double the memory bandwidth and GPU cores.

- Users debate the practicality of Mac Minis versus traditional setups like a ROMED8-2T motherboard with 4x3090 GPUs, citing the former's ease of use and reduced heat output. The potential to avoid common issues with Linux, CUDA errors, and PCIe is highlighted as a significant advantage.

- There is skepticism about performance claims, with questions about model specifics such as whether it is tensor parallel or the type of model precision used (e.g., fp16, Q2). The need for proof of the Mac Mini's capabilities in fine-tuning at reasonable speeds is emphasized before considering a switch from existing rigs.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Claude 3.5 Opus Coming Soon: Anthropic CEO Confirms

- Anthropic CEO on Lex Friedman, 5 hours! (Score: 184, Comments: 49): Anthropic CEO Dario Amodei appeared on the Lex Fridman podcast for a 5-hour conversation available on YouTube. The discussion confirmed the continued development of Claude Opus 3.5, though no specific release timeline was provided.

- Users expressed skepticism about Anthropic's claim of not "nerfing" Claude, noting that performance changes could be achieved through "different thinking budget through prompting depending on the current load" rather than weight modifications.

- Notable guests Chris Olah and Amanda Askell were highlighted for their expertise in mechanistic interpretability and philosophical considerations respectively, generating significant interest among viewers.

- The community expressed concerns about Lex Fridman's recent content direction, with users noting his shift away from technical subjects and controversial associations with political figures, including becoming a "Putin apologist".

- Opus 3.5 is Not Die! It will be still coming out conform by anthropic CEO (Score: 62, Comments: 63): Opus 3.5, a model from Anthropic, continues development according to the company's CEO. The post lacks additional context or specific details about release timeline or model capabilities.

- Users discuss potential pricing for Opus 3.5, with expectations around $100/M tokens similar to GPT-4-32k at $120/M tokens. Several users indicate willingness to pay premium prices if the model delivers superior one-shot performance, particularly for coding tasks.

- Community skepticism emerges around previous Reddit speculation that Opus 3.5 was scrapped or merged into 3.5 Sonnet. Users note that running a larger model at Sonnet prices would be financially unsustainable for Anthropic.

- Competition concerns are highlighted with mentions of Qwen gaining market share. Users also critique the CEO's communication style as being evasive and uncomfortable when discussing the model's development status.

Theme 2. Qwen2.5-Coder-32B Matches Claude: Open Source Milestone

- Open source coding model matches with sonnet 3.5 (Score: 100, Comments: 33): Open-source coding model performance claims to match Claude Sonnet 3.5, though no additional context or evidence is provided in the post body.

- LM Studio makes running the model locally accessible, offering network connectivity for automation tasks and various quantization options like Q3 at 17GB. The model performs best when fitting in VRAM rather than running from RAM.

- The Qwen2.5-Coder-32B model runs effectively on 24GB video cards with Q4 quantization, available on Hugging Face. Users note it's more cost-effective than Haiku, costing approximately half as much.

- Users express interest in fine-tuning capabilities for matching specific coding styles and project structures, with options to host through OpenRouter at competitive prices. The model shows impressive performance for its 32B size.

- Every one heard that Qwen2.5-Coder-32B beat Claude Sonnet 3.5, but.... (Score: 61, Comments: 42): Qwen2.5-Coder-32B outperformed Claude Sonnet in coding benchmarks as shown in a comparative statistical graph. The image displays performance metrics between the two models, highlighting Qwen's competitive capabilities at a lower operational cost.

- Qwen2.5-Coder-32B is praised for its impressive performance as an open-source model, with pricing at $0.18 per million tokens via deepinfra.com compared to Claude Sonnet's $3/$15 input/output rates.

- Real-world testing shows Qwen excels at specific development tasks but struggles with complex logic and design tasks compared to Claude. The model's 32B size allows for local computer operation, with Q3 quantization potentially affecting performance on complex tasks.

- China's government heavily subsidizes AI APIs, explaining the low token costs, while Anthropic recently increased Haiku 3.5 prices citing improved intelligence as justification. Users note this creates less incentive to use closed-source models.

Theme 3. ComfyUI Video Generation: New Tools & Capabilities

- mochi1 text to video (comfyui is built in and is very fast ) (Score: 51, Comments: 6): Mochi1, a text-to-video model, integrates ComfyUI workflow capabilities for video generation. The tool emphasizes speed in its operations, though no specific performance metrics or technical details were provided in the post.

- Users pointed out that the original post contained duplicate links and lacked proper workflow documentation, criticizing the misleading nature of the post's "Workflow Included" claim.

- Made with ComfyUI and Cogvideox model, DimensionX lora. Fully automatic ai 3D motion.

I love Belgium comics, and I wanted to use AI to show an example of how to enhance them using it.

Soon a full modelisation in 3D ?

waiting for more lora to create a full app for mobile.

Thanks @Kijaidesign for you (Score: 83, Comments: 10): ComfyUI and Cogvideox models were used alongside DimensionX lora to create 3D motion animations of Belgian comics. The creator aims to develop a mobile application for enhancing Belgian comics using AI, pending additional lora models.

- Users inquired about the workflow and potential use of After Effects in the animation process, highlighting interest in the technical implementation details.

- Commenters envision potential for automated panel-to-panel animation with dynamic camera movements adapting to different comic layouts and compositions.

Theme 4. AI Content Generation on Reddit: Growing Trend & Concerns

- Remember this 50k upvote post? OP admitted ChatGPT wrote 100% of it (Score: 1349, Comments: 163): ChatGPT allegedly generated a viral Reddit post that received 50,000 upvotes, with the original poster later confirming the content was entirely AI-generated. No additional context or details about the specific post content were provided in the source material.

- Users noted several writing style indicators of AI-generated content, particularly the use of em-dashes and formal formatting which is uncommon in typical Reddit posts. The structured, lengthy formatting was cited as a key tell for AI authorship.

- Discussion centered around the growing challenge of detecting AI content on Reddit, with users expressing concern about the platform becoming dominated by AI-generated posts. Several commenters mentioned being initially fooled despite noticing some suspicious elements.

- A user who correctly identified the post as AI-generated was initially downvoted and criticized for their skepticism, highlighting the community's mixed ability to detect AI content. The original post received 50,000 upvotes while this revelation gained significantly less attention.

- Dead Internet Theory: this post on r/ChatGPT got 50k upvotes, then OP admitted ChatGPT wrote it (Score: 130, Comments: 48): Dead Internet Theory gained credibility when a viral post on r/ChatGPT reaching 50,000 upvotes was revealed to be AI-generated content, with the original poster later admitting that ChatGPT wrote the entire submission. This incident exemplifies concerns about AI-generated content dominating social media platforms without clear disclosure of its artificial origins.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

AI Language Models Battle for Supremacy

- Qwen2.5 Coder Tops GPT-O and Claude 3.5: The Qwen2.5 Coder 32B outperforms GPT-O and Claude 3.5 Sonnet with a 73.7% performance on complex tasks compared to Sonnet's 84.2%. Users praise its open-source capabilities while noting ongoing improvements.

- Phi-3.5’s Overcensorship Sparks Debate: Microsoft’s Phi-3.5 model faces criticism for heavy censorship, leading to the creation of an uncensored version on Hugging Face. Users humorously mocked Phi-3.5’s excessive restrictions, highlighting its impact on utility for technical tasks.

- OpenAI’s o1 Model Release Anticipated: OpenAI gears up for the full release of the o1 reasoning model by year-end, with community excitement fueled by anonymous insights. Speculation about the development team’s expertise adds to the anticipation.

Optimization Techniques Revolutionize Model Training

- Gradient Descent Mechanics Unveiled: In Eleuther Discord, engineers debated scaling updates with gradient descent and the role of second-order information for optimal convergence. Discussions referenced recent papers on feature learning and kernel dynamics.

- LoRA Fine-Tuning Accelerates Inference: Unsloth AI members utilize LoRA fine-tuned models like Llama-3.2-1B-FastApply for accelerated inference via native support. Sample code showcased improved execution speeds through model size reduction.

- Line Search Methods Enhance Learning Rates: Eleuther participants explored line search techniques to dynamically recover optimal learning rates as loss approaches divergence. Findings indicate that line searches yield rates about 1/2 the norm of the update, suggesting consistent patterns.

Deployment and Inference Get a Boost with New Strategies

- Speculative Decoding Boosts Inference Speed: Members shared speculative decoding and using FP8 or int8 precision as strategies to enhance inference speed. Custom CUDA kernels from providers like qroq and Cerebras offer even greater performance gains.

- Vast.ai Offers Affordable Cloud GPU Solutions: Vast.ai recommended as an affordable cloud GPU provider, with prices ranging from $0.30 to $2.80 per hour for GPUs like A100 and RTX 4090. Users advise against older Tesla cards, favoring newer hardware for reliability.

- Multi-GPU Syncing Poses Challenges: Discussions in Interconnects and GPU MODE highlight complexities in synchronizing mean and variance parameters across multi-GPU setups using tools like SyncBatchNorm in Pytorch, posing implementation challenges in frameworks like liger.

APIs and Tools Streamline AI Development

- Cohere API Changes Cause Headaches: Users in Cohere Discord faced UnprocessableEntityError due to the removal of the return_documents field in the /rerank endpoint. Team members are working to restore the parameter, with Cohere’s support addressing the issue.

- Aider Integrates LoRA for Faster Operations: In aider Discord, members discussed leveraging LoRA fine-tuned models such as Llama-3.2-1B-FastApply for accelerated inference using Aider’s native support. Sample code demonstrated loading adapters for improved speed.

- NotebookLM Enhances Summarization Workflows: Participants in Notebook LM Discord explored using NotebookLM to summarize over 200 AI newsletter emails, streamlining information digestion. Technical issues like audio file upload failures were discussed, pointing to potential technical glitches.

Scaling Laws and Datasets Challenge AI Research

- Scaling Laws Reveal Quantization Limits: In Eleuther and Interconnects Discords, researchers discussed a study showing models trained on more tokens require higher precision for quantization, impacting scalability. Concerns raised about LLaMA-3 model’s performance under these laws.

- Aya_collection Dataset Faces Translation Inconsistencies: Cohere users identified disparities in the aya_collection dataset’s translations across 19 languages, with English having 249716 rows versus 124858 for Arabic and French. Specific mismatches in translated_cnn_dailymail were highlighted.

- Data-Parallel Scaling Bridges Theory and Practice: Discussions on Eleuther Discord emphasized practical challenges in bridging theory and application within data-parallel scaling, referencing documentation. Quotes like 'The stuff that works is not allowed to be published' highlight publication constraints.

PART 1: High level Discord summaries

Eleuther Discord

-

Exploring Gradient Descent Mechanics: A discussion explored the gradient descent update mechanics, focusing on how update projections and norms influence model weight changes.

- Participants debated the importance of scaling updates relative to input variations and the role of second-order information in achieving optimal convergence.

- Significance of Muon Optimization: The role of Muon as an optimizer was examined, highlighting its interaction with feature learning and effects on network training dynamics.

- Suggestions included exploring connections between Muon and other theoretical frameworks like kernel dynamics and existing feature learning literature.

- Filling Gaps in Scaling Laws: A member shared insights on filling missing pieces in scaling laws, emphasizing practical challenges in bridging theory and application.

- The stuff that works is not allowed to be published, highlighting challenges in effectively applying research.

- Optimizing Learning Rates with Line Searches: There's speculation about line searching as a method to recover optimal learning rates during training, especially as loss approaches divergence.

- One contributor referenced findings that line searches yield rates about 1/2 the norm of the update, indicating possible consistent patterns.

- Text-MIDI Multimodal Datasets Suggested: A participant proposed implementing a text-MIDI multimodal dataset, considering existing collections of recordings and metadata.

- They acknowledged copyright limitations, suggesting that only MIDI files could be open-sourced.

Perplexity AI Discord

-

Perplexity Faces Frustration from Persistent Technical Issues: Users have reported persistent technical issues in the Perplexity AI platform, notably the hidden messages bug affecting long threads that remains unresolved.

- These ongoing problems have been present for over a month, significantly impacting user experience, despite fixes for other minor bugs.

- Uncertainty Surrounds Perplexity Pro Subscription Expiry: A user inquired about the continuation of their Perplexity Pro free year after expiration, questioning its effects on the R1 device.

- The community confirmed that the subscription won't stay free post-trial, and users will revert to limited free searches.

- Perplexity Model Showdown: GPT-O Tops the Charts: Discussions indicate that gpt-o is outperforming other models in Perplexity AI, especially in specific tasks.

- Conversely, o1 is seen as having limited applications, despite its specialized nature.

- Mac App UI Woes Plague Perplexity Users: Users reported UI issues in the Mac version of the Perplexity app, highlighting the absence of a scrollbar which hampers navigation.

- Additional complaints include persistent Google sign-in problems and missing features available in the web app.

- Community Seeks Solutions for Pplx API DailyBot Editor: A member requested guidance on implementing the Pplx API DailyBot custom command editor, seeking initial steps for project commencement.

- Another user shared a workaround using CodeSandBox VM with webhooks, but the community is exploring alternative solutions for better implementation.

Unsloth AI (Daniel Han) Discord

-

Qwen 2.5 Coder Finetuning Resources Released: A new finetuning notebook for the Qwen 2.5 Coder (14B) model is now available on Colab, enabling free finetuning with 60% reduced VRAM usage and extended context lengths from 32K to 128K.

- Users can access Qwen2.5 Coder Artifacts and Unsloth versions to address token training issues and enhance model performance.

- Optimization Strategies for Faster Inference: Members shared techniques to enhance inference speed, including speculative decoding, utilizing FP8 or int8 precision, and implementing custom optimized CUDA kernels.

- Providers like qroq and Cerebras have developed custom hardware solutions to further boost performance, though this may impact throughput.

- LoRA Fine-tuning Integration with Unsloth: Users discussed leveraging LoRA fine-tuned models such as Llama-3.2-1B-FastApply for accelerated inference using Unsloth's native support.

- Sample code provided demonstrates loading adapters with Unsloth, resulting in improved execution speed due to the model's smaller size.

- Model Checkpoints and Adapter Usage Best Practices: Successful integration of adapter models on top of base models for inference was achieved using the PeftModel class, emphasizing the importance of specifying checkpoint paths during model loading.

- Best practices include building adapters first and ensuring correct checkpoint paths to facilitate accurate model enhancements and deployment.

- Managing RAM Usage During Model Training: A user reported increased RAM consumption when running Gemma 2B, potentially due to evaluation processes intensifying memory demands.

- Another member inquired about evaluation practices, suggesting that turning off evaluations might mitigate excessive memory usage.

aider (Paul Gauthier) Discord

-

Qwen 2.5 Coder Performance: Qwen 2.5-Coder-32B demonstrates a 73.7% performance in complex tasks, trailing behind Claude 3.5 Sonnet at 84.2%.

- Users highlighted that Qwen models still present placeholder responses, which may impede coding efficiency and completeness.

- Aider Installation and Usage: Aider installation requires Python 3.9-3.12 and git, with users referring to the official installation guide for assistance.

- Discussions emphasized streamlining the installation process to enhance user experience for AI engineers.

- Model Comparison: Qwen 2.5-Coder's performance was compared to models like DeepSeek and GPT-4o, showing varied results across different tasks.

- Leaderboard scores indicate that tweaking model configurations could optimize performance for specific coding tasks.

- Aider Configuration Warnings: Users encountered Aider configuration warnings when the Ollama server wasn't running or the API base wasn't set, leading to generic warnings instead of specific errors.

- Community suggestions included verifying model names and addressing ongoing bugs with Litellm to resolve spurious warnings.

- OpenRouter API Usage: Issues with OpenRouter API were reported, such as benchmark scripts failing to connect to the llama-server due to unrecognized model names.

- Solutions involved adjusting the

.aider.model.metadata.jsonfile, which primarily affects cost reporting and can be disregarded if necessary.

Interconnects (Nathan Lambert) Discord

-

Qwen 2.5 Coder Breaks 23.5T Tokens: Qwen 2.5 Coder has been pretrained on a staggering 23.5 trillion tokens, making it the first open-weight model to exceed the 20 trillion token threshold, as highlighted in the #news channel.

- Despite this achievement, users expressed concerns about the challenges of running Qwen 2.5 locally, citing the need for high-spec hardware like a 128GB MacBook to handle full BF16 precision.

- Scaling Laws Challenge LLaMA-3 Quantization: A study discussed in #reads indicates that as models are trained on more tokens, they require higher precision for quantization, posing significant challenges for the LLaMA-3 model.

- The research suggests that continued increases in pretraining data may adversely impact the quantization process, raising concerns about the scalability and performance of future AI models.

- Dario Amodei Forecasts Human-Level AI by 2027: In a #news channel podcast, Dario Amodei discussed the observed scaling across various AI modalities, projecting the emergence of human-level AI by 2026-2027.

- He emphasized the importance of ethical considerations and nuanced behavior in AI systems as they scale, highlighting potential uncertainties in achieving these advancements.

- Nous Research Launches Forge Reasoning API Beta: Nous Research unveiled the Forge Reasoning API Beta in the #news channel, aiming to enhance inference time scaling applicable to any model, specifically targeting the Hermes 70B model.

- Despite the promising launch, there are ongoing concerns about the consistency of reported benchmarks, leading to skepticism regarding the reliability of the API's performance metrics.

- OpenAI Prepares Full Release of o1 Model: Anticipation builds around OpenAI's planned full release of the o1 reasoning model by year-end, as discussed in the #news channel.

- Community members are particularly interested in the development teams behind o1, with anonymous sources fueling speculation about the model's capabilities and underlying technologies.

OpenRouter (Alex Atallah) Discord

-

Qwen Leaps Ahead with Coder 32B: The newly released Qwen2.5 Coder 32B outperforms competitors Sonnet and GPT-4o in several coding benchmarks, according to a tweet from OpenRouter.

- Despite these claims, some members questioned the accuracy, suggesting that tests like MBPP and McEval might not fully reflect true performance.

- Gemini 1.5 Flash Enhances Performance: Gemini 1.5 Flash has received updates including frequency penalty, presence penalty, and seed adjustments, improving its capability across various tasks as per OpenRouter's official update.

- Users noted improved performance, especially at temperature 0, with speculation that an experimental version is deployed on Google AI Studio.

- Anthropic's Tool Not Yet Compatible: Discussions revealed that Anthropic's computer use tool currently lacks support within OpenRouter, requiring a special beta header.

- Members expressed interest in future compatibility to enhance integration and functionality within their projects.

- OpenRouter Introduces Pricing Adjustments: OpenRouter clarified that usage may incur approximately 5% additional costs for tokens through credits, as outlined in their terms of service.

- This update prompted user inquiries regarding pricing transparency and comparisons with direct model usage.

- Custom Provider Keys Sought by Beta Testers: Multiple users have requested access to custom provider keys for beta testing to better manage Google's rate limits.

- The strong interest highlights the community's desire for enhanced functionality and project optimization.

OpenAI Discord

-

Qwen2.5-Coder Showcases Open-Source Prowess: The new Qwen2.5-Coder model was highlighted for its open-source code capabilities, offering a competitive edge against models like GPT-4o.

- Access to the model and its demonstrations is available on GitHub, Hugging Face, and ModelScope.

- KitchenAI Project Seeks Developer Contributions: The KitchenAI open-source project was introduced, aiming to create shareable runtime AI cookbooks and inviting developers to contribute.

- Outreach efforts are being made on Discord and Reddit to attract interested contributors.

- Refining Prompt Engineering for GPT Models: Discussions focused on enhancing prompt clarity and utilizing token counts to optimize outputs for GPT models.

- A prompt engineering guide was shared to assist members in improving their prompt design skills.

- Evaluating TTS Alternatives: Spotlight on f5-TTS: Members explored various text-to-speech (TTS) solutions, with f5-tts recommended for its functionality on consumer GPUs.

- The discussion included suggestions to focus on cost-effective solutions when addressing queries about timestamp data capabilities.

Modular (Mojo 🔥) Discord

-

CUDA Driver Limitations in WSL2: The Nvidia CUDA driver on Windows is stubbed as libcuda.so in WSL2, potentially limiting full driver functionalities via Mojo.

- Members highlighted that this stubbed driver may complicate support for MAX within WSL if it relies on the host Windows driver.

- CRABI ABI Proposal Enhances Language Interoperability: An experimental feature gate proposal for

CRABIby joshtriplett aims to develop a new ABI for interoperability among high-level languages like Rust, C++, Mojo, and Zig.

- Participants discussed integration challenges with languages like Lua and Java, indicating a need for broader adoption.

- Mojo Installation Issues Fixed with Correct URL: A user resolved Mojo installation issues by correcting the

curlcommand URL, ensuring successful installation.

- This underscores the importance of accurate URL entries when installing software packages.

- Mojo's Benchmark Module Faces Performance Constraints: The benchmark module in Mojo facilitates writing fast benchmarks by managing setup and teardown, as well as handling units for throughput measurements.

- However, there are limitations such as unnecessary system calls in hot loops, which may impact performance.

- Dynamic Module Importing Restricted by Mojo's Compilation Structure: Dynamic importing of modules is currently unsupported in Mojo due to its compilation structure that bundles everything as constants and functions.

- Introducing a JIT compiler is a potential solution, though concerns about binary size and compatibility with pre-compiled code remain.

tinygrad (George Hotz) Discord

-

Hailo Model Quantization Challenges: A member detailed the complexities of running Hailo requiring eight-bit quantization, complicating training processes, and the necessity of a compiled .so for CUDA and TensorFlow to function properly.

- Setting up for Hailo is cumbersome due to these requirements.

- ASM2464PD Chip Specifications Confirmed: Discussion confirmed that the ASM2464PD chip supports generic PCIe, available through multiple vendors and not limited to NVMe.

- Members raised concerns about the chip's 70W power requirement for optimal performance.

- Open-Source USB4 to PCIe Converter Progress: An open-source USB4/Thunderbolt to M.2 PCIe converter design was shared on GitHub, demonstrating significant progress and secured funding for hardware development.

- The designer outlined expectations for the next development phase to achieve effective USB4 to PCIe integration.

- Optimizing Audio Recording with Opus Codec: Members debated using the Opus codec for audio recordings due to its ability to reduce file sizes without sacrificing quality.

- However, concerns were noted regarding Opus's browser compatibility, highlighting technical limitations.

- Development of Tinygrad's Distributed Systems Library: A user advocated for building a Distributed Systems library for Tinygrad focused on dataloaders and optimizers without relying on existing frameworks like MPI or NCCL.

- The aim is to create foundational networking capabilities from scratch while maintaining Tinygrad's existing interfaces.

Notebook LM Discord Discord

-

NotebookLM could summarize AI newsletters: One member suggested using NotebookLM to summarize over 200 AI newsletter emails to avoid manually copying and pasting content.

- Gemini button in Gmail was mentioned as a potential aid for summarization but noted not being free.

- Unofficial API skepticism around NotebookLM: Users discussed an unofficial $30/month API for NotebookLM, expressing suspicion regarding its legitimacy NotebookLM API.

- Concerns include the lack of business information and sample outputs, leading some to label it a scam.

- Integration of KATT for fact-checking in podcasts: One user discussed incorporating KATT (Knowledge-based Autonomous Trained Transformer) into a fact-checker for their podcast, resulting in a longer episode.

- They described the integration as painful, combining traditional methods with new AI techniques.

- Issues with audio file uploads in NotebookLM: Users expressed frustration about not being able to upload .mp3 files to NotebookLM, with guidance about proper upload procedures through Google Drive.

- Some noted that other file types uploaded without issues, indicating a potential technical glitch or conversion error.

- Exporting notebooks as PDF in NotebookLM: Users are inquiring about plans to export notes or notebooks as a .pdf in the future and seeking APIs for notebook automation.

- While some mention alternatives like using a PDF merger, they are eager for native export features.

Latent Space Discord

-

Magentic-One Framework Launch: The Magentic-One framework was introduced, showcasing a multi-agent system designed to tackle complex tasks and outperform traditional models in efficiency.

- It uses an orchestrator to direct specialized agents and is shown to be competitive on various benchmarks source.

- Context Autopilot Introduction: Context.inc launched Context Autopilot, an AI that learns like its users, demonstrating state-of-the-art abilities in information work.

- An actual demo was shared, indicating promise in enhancing productivity tools in AI workflows video.

- Writer Series C Funding Announcement: Writer announced a $200M Series C funding round at a $1.9B valuation, aiming to enhance its AI enterprise solutions.

- The funding will support expanding their generative AI applications, with significant backing from notable investors Tech Crunch article.

- Supermaven Joins Cursor: Supermaven announced its merger with Cursor, aiming to develop an advanced AI code editor and collaborate on new AI tool capabilities.

- Despite the transition, the Supermaven plugin will remain maintained, indicating a continued commitment to enhancing productivity (blog post).

- Dust XP1 and Daily Active Usage: Insights were shared on how to create effective work assistants with Dust XP1, achieving an impressive 88% Daily Active Usage among customers.

- This episode covers the early OpenAI journey, including key collaborations.

GPU MODE Discord

-

GPU Memory vs Speed Trade-offs: Debate arose over upgrading from an RTX 2060 Super to an RTX 3090, weighing the balance between GPU memory and processing speed, alongside the option of acquiring older used Tesla cards.

- Consensus favored newer hardware for enhanced reliability, especially recommending against older GPUs for individual developers.

- Vast.ai as Cloud GPU Provider: Vast.ai was recommended as an affordable cloud GPU option, with current pricing ranging from $0.30 to $2.80 per hour for GPUs like A100 and RTX 4090.

- Users noted that while Vast.ai offers cost-effective solutions, its model of leasing GPUs introduces certain quirks that potential users should consider.

- Surfgrad: WebGPU-based Autograd Engine: Surfgrad, an autograd engine built on WebGPU, achieved up to 1 TFLOP performance on the M2 chip, as detailed in Optimizing a WebGPU Matmul Kernel.

- The project emphasizes kernel optimizations and serves as an educational tool for those looking to explore WebGPU and Typescript in autograd library development.

- Efficient Deep Learning Systems Resources: Efficient Deep Learning Systems course materials by HSE and YSDA were shared, providing comprehensive resources aimed at optimizing AI system efficiencies.

- Participants highlighted the repository's value in enhancing understanding of efficient system architectures and resource management in deep learning.

- Multi-GPU Synchronization in Liger: Challenges were discussed regarding the synchronization of mean and variance parameters in multi-GPU setups within liger, referencing Pytorch's SyncBatchNorm operation.

- Members indicated that replicating SyncBatchNorm behavior in liger would be complex and not straightforward, highlighting the intricacies involved.

Cohere Discord

-

Cohere API /rerank Issues: Users encountered UnprocessableEntityError when using the /rerank endpoint due to the removal of the return_documents field.

- The development team acknowledged the unintentional change and is working on restoring the return_documents parameter, as multiple users reported the same issue after updating their SDKs.

- Command R Status in Development: Concerns about the potential discontinuation of Command R were addressed with assurances that there are no current plans to retire it.

- Members were advised to utilize the latest updates, such as command-r-08-2024, to benefit from enhanced performance and cost efficiency.

- aya_collection Dataset Inconsistencies: aya_collection dataset inconsistencies were identified, notably in translation quality across 19 languages, with English having 249716 rows compared to 124858 for Arabic and French.

- Specific translation mismatches were highlighted in the translated_cnn_dailymail dataset, where English sentences did not align proportionally with their Arabic and French counterparts.

- Forest Fire Prediction AI Project: A member introduced their forest fire prediction AI project using Catboost & XLModel, emphasizing the need for model reliability for deployment on AWS.

- Recommendations included adopting the latest versions of Command R for better performance, with suggestions to contact the sales team for additional support and updates.

- Research Prototype Beta Testing: A limited beta for a research prototype supporting research and writing tasks like report creation is open for sign-ups here.

- Participants are expected to provide detailed and constructive feedback to help refine the tool's features during the early testing phase.

HuggingFace Discord

-

Affordable AI Home Servers Unveiled: A YouTube video showcases the setup of a cost-effective AI home server using a single 3060 GPU and a Dell 3620, demonstrating impressive performance with the Llama 3.2 model.

- This setup offers a budget-friendly solution for running large language models, making advanced AI accessible to engineers without hefty hardware investments.

- Graph Neural Networks Dominate NeurIPS 2024: NeurIPS 2024 highlighted a significant focus on Graph Neural Networks and geometric learning, with approximately 400-500 papers submitted, surpassing the number of submissions at ICML 2024.

- Key themes included diffusion models, transformers, agents, and knowledge graphs, with a strong theoretical emphasis on equivariance and generalization, detailed in the GitHub repository.

- Qwen2.5 Coder Surpasses GPT4o and Claude 3.5: In recent evaluations, Qwen2.5 Coder 32B outperformed both GPT4o and Claude 3.5 Sonnet, as analyzed in this YouTube video.

- The community acknowledges Qwen2.5 Coder's rapid advancement, positioning it as a formidable contender in the coding AI landscape.

- Advanced Ecommerce Embedding Models Released: New embedding models for Ecommerce have been launched, surpassing Amazon-Titan-Multimodal by up to 88% performance, available on Hugging Face and integrable with Marqo Cloud.

- Detailed features and performance metrics can be found in the Marqo-Ecommerce-Embeddings collection, facilitating the development of robust ecommerce applications.

- Innovative Image Denoising Techniques Discussed: The paper Phase Transitions in Image Denoising via Sparsity is now available on Semantic Scholar, presenting new approaches to image processing challenges.

- This research contributes to ongoing efforts in enhancing image denoising methods, addressing critical issues in maintaining image quality.

LlamaIndex Discord

-

PursuitGov enhances B2G services with LlamaParse: By employing LlamaParse, PursuitGov successfully parsed 4 million pages in a single weekend, significantly enhancing their B2G services.

- This transformation resulted in a 25-30% increase in accuracy for complex document formats, enabling clients to uncover hidden opportunities in public sector data.

- ColPali integration for advanced re-ranking: A member shared insights on using ColPali as a re-ranker to achieve highly relevant search results within a multimodal index.

- The technique leverages Cohere's multimodal embeddings for initial retrieval, integrating both text and images for optimal results.

- Cohere's new multimodal embedding features: The team discussed Cohere's multimodal embeddings, highlighting their ability to handle both text and image data effectively.

- These embeddings are being integrated with ColPali to enhance search relevance and overall model performance.

- Automating LlamaIndex workflow processes: A member expressed frustration over the tedious release process and aims to automate more, sharing a GitHub pull request for LlamaIndex v0.11.23.

- They highlighted the need to streamline workflows to reduce manual intervention and improve deployment efficiency.

- Optimizing FastAPI for streaming responses: Discussions arose around using FastAPI's StreamingResponse, with concerns about event streaming delays potentially due to coroutine dispatching issues.

- Members suggested advanced streaming techniques, such as writing each token as a stream event using

llm.astream_complete()to enhance performance.

LAION Discord

-

AI Companies Embrace Gorilla Marketing: A member noted that AI companies really love gorilla marketing, possibly in reference to unconventional promotional strategies, and shared a humorous GIF of a gorilla waving an American flag.

- This highlighted the use of unique and creative marketing tactics within the AI industry.

- Request for Help on Object Detection Project: A member detailed a project involving air conditioner object detection using Python Django, aiming to identify AC types and brands.

- They asked for assistance, indicating a need for support in developing this recognition functionality.

- Introducing GitChameleon for Code Generation Models: The new dataset GitChameleon: Unmasking the Version-Switching Capabilities of Code Generation Models introduces 116 Python code completion problems conditioned on specific library versions, with executable unit tests to rigorously assess LLMs' capabilities.

- This aims to address the limitations of existing benchmarks that ignore the dynamic nature of software library evolution and do not evaluate practical usability.

- Exciting Launch of SCAR for Concept Detection: SCAR, a method for precise concept detection and steering in LLMs, learns monosemantic features using Sparse Autoencoders in a supervised manner.

- It offers strong detection for concepts like toxicity, safety, and writing style and is available for experimentation in Hugging Face's transformers.

- NVIDIA's Paper on Noise Frequency Training: NVIDIA's paper presents a concept where higher spatial frequencies are noised faster than lower frequencies during the forward noising step.

- In the backwards denoising step, the model is trained explicitly to work from low to high frequencies, providing a unique approach to training.

Gorilla LLM (Berkeley Function Calling) Discord

-

Overwriting Test Cases for Custom Models: A member inquired about overwriting or rerunning test cases for their custom model after making changes to the handler.

- Another suggested deleting results files in the

resultfolder or changing the path inconstant.pyto retain old results. - Invalid AST Errors in Qwen-2.5 Outputs: A member described issues with finetuning the Qwen-2.5 1B model, resulting in an INVALID AST error despite valid model outputs.

- Members discussed a specific incorrect output format that included an unmatched closing parenthesis, indicating syntactical issues.

- Confusion Over JSON Structure Output: A member expressed confusion about the model outputting a JSON structure instead of the expected functional call format.

- Others clarified that the QwenHandler should ideally convert the JSON structure into a functional form, leading to discussions on output expectations.

- Evaluating Quantized Fine-tuned Models: A member raised a question about evaluating quantized finetuned models, specifically regarding their deployment on vllm.

- They mentioned the use of specific arguments like

--quantization bitsandbytesand--max-model-len 8192for model serving.

- Another suggested deleting results files in the

LLM Agents (Berkeley MOOC) Discord

-

LLM Agents MOOC Hackathon Kicks Off: The LLM Agents MOOC Hackathon began on 11/12 at 4pm PT, featuring live LambdaAPI demos to aid participants in developing their projects.

- With around 2,000 innovators registered across tracks like Applications and Benchmarks, the event is hosted on rdi.berkeley.edu/llm-agents-hackathon.

- LambdaAPI Demos Support Hackathon Projects: LambdaAPI provided hands-on demos to guide hackathon participants in building effective LLM agent applications.

- These demonstrations offer actionable tools and techniques, assisting developers in refining their project implementations.

- NVIDIA's Embodied AI Triggers Ethical Debate: NVIDIA's presentation on embodied AI sparked discussions about granting moral rights to AI systems resembling humans.

- Participants highlighted a lack of focus on normative alignment, questioning the ethical boundaries of AI advancements.

- AI Rights and Normative Alignment Concerns: The community expressed unease over the absence of normative alignment discussions in AI development, especially after NVIDIA's insights.

- Debates centered on the ethical implications of AI rights, emphasizing the need for comprehensive alignment strategies.

OpenAccess AI Collective (axolotl) Discord

-

FOSDEM AI DevRoom Set for 2025: The AIFoundry team is organizing the FOSDEM AI DevRoom scheduled for February 2, 2025, focusing on ggml/llama.cpp and related projects to unite AI contributors and developers.

- They are inviting proposals from low-level AI core open-source project maintainers with a submission deadline of December 1, 2024, and offering potential travel stipends for compelling topics.

- Axolotl Fine-Tuning Leverages Alpaca Format: A user clarified the setup process for fine-tuning with Axolotl, highlighting the use of a dataset in Alpaca format to preprocess for training.

- It was noted that the tokenizer_config.json excludes the chat template field, necessitating further adjustments for complete configuration.

- Enhancing Tokenizer Configuration with Chat Templates: A member shared a method to incorporate the chat template into the tokenizer config by copying a specific JSON structure.

- They recommended modifying settings within Axolotl to ensure automatic inclusion of the chat template in future configurations.

- Integrating Default System Prompts in Fine-Tuning: A reminder was issued that the shared template lacks the default system prompt for Alpaca, which may require adjustments.

- Users were informed they can include a conditional statement before ### Instruction to integrate desired prompts effectively.

DSPy Discord

-

Annotations Enhance dspy.Signature: Members discussed the use of annotations in dspy.Signature, clarifying that while basic annotations work, there is potential for using custom types like list[MyClass].

- One member confirmed that the string form does not work for this purpose, suggesting a preference for explicit type definitions.

- Custom Signatures Implemented for Clinical Entities: A member shared a successful implementation of a custom signature using a list of dictionaries in the output, showcasing the extraction of clinical entities.

- The implementation includes detailed descriptions for both input and output fields, indicating a flexible approach to defining complex data structures.

OpenInterpreter Discord

-

Linux Mint struggles in Virtual Machines: After installing Linux Mint in Virtual Machine Manager, users reported networking did not work properly.

- However, an attempt was made to install Linux Mint inside an app called Boxes.

- Microsoft Copilot Communication Breakdown: A back-and-forth interaction with Microsoft Copilot revealed frustration as commands were not being configured as requested.

- The user emphasized that no bridge was created, but they managed to create one on their own.

- Interpreter CLI Bugs on OS X: Report emerged regarding the Interpreter CLI on OS X, where it is persisting files and exiting unexpectedly.

- Users expressed concerns about these issues occurring frequently on the developer branch.

Torchtune Discord

- PyTorch Team to Release DCP PR: whynot9753 announced that the PyTorch team will likely release a DCP PR tomorrow.

- ****:

AI21 Labs (Jamba) Discord

- Request to Continue Using Fine-Tuned Models: A user requested to continue using their fine-tuned models.

- Request to Continue Using Fine-Tuned Models: A user requested to continue using their fine-tuned models.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!