[AINews] Bespoke-Stratos + Sky-T1: The Vicuna+Alpaca moment for reasoning

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Reasoning Distillation is all you need.

AI News for 1/21/2025-1/22/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 4297 messages) for you. Estimated reading time saved (at 200wpm): 496 minutes. You can now tag @smol_ai for AINews discussions!

In the ChatGPT heyday of 2022-23, Alpaca and Vicuna were born out of Stanford and LMSYS respectively as ultra cheap ($300) finetunes of LLaMA 1 that distilled from ChatGPT/Bard samples to achieve 90% of the quality of ChatGPT/GPT3.5.

In the last 48 hours, it seems the Berkeley/USC folks have done it again, this time with the reasoning models.

It's hard to believe this sequence of events happened just in the last 2 weeks:

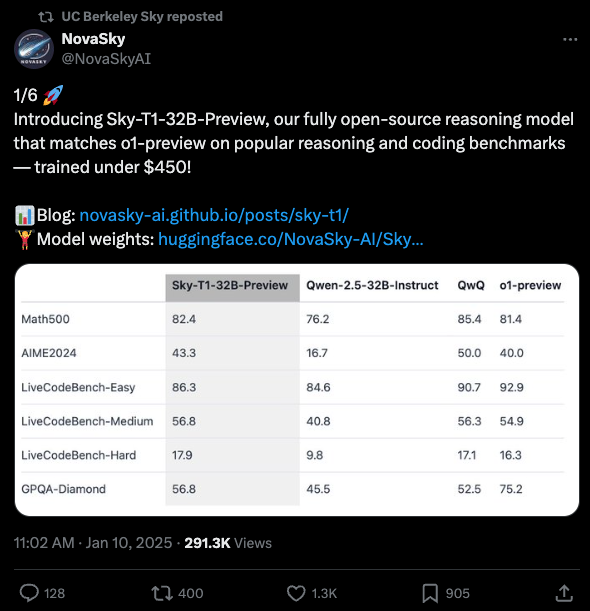

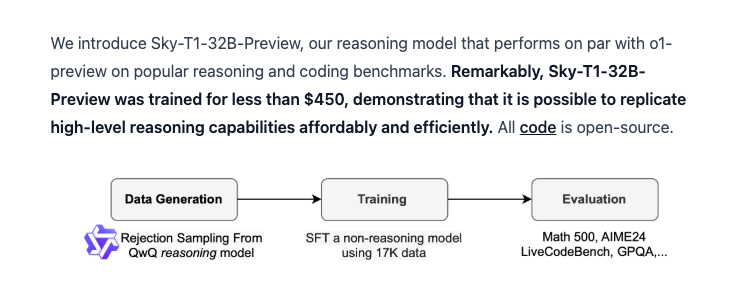

- Berkeley's Sky Computing lab released Sky-T1-32B-Preview, a finetune of Qwen 2.5 32B (our coverage here) with 17k rows of training data from QwQ-32B (our coverage here) + rewriting traces with gpt-4o-mini + rejection sampling, all done for $450. Because QwQ outperforms o1-preview, distilling from QwQ brings Qwen up to match o1-preview's benchmarks.

- DeepSeek releases R1 (2 days ago) with benchmarks far above o1-preview. The R1 paper also revealed the surprise that you can distill from R1 to turn a 1.5B Qwen model to match 4o and 3.5 Sonnet (?!).

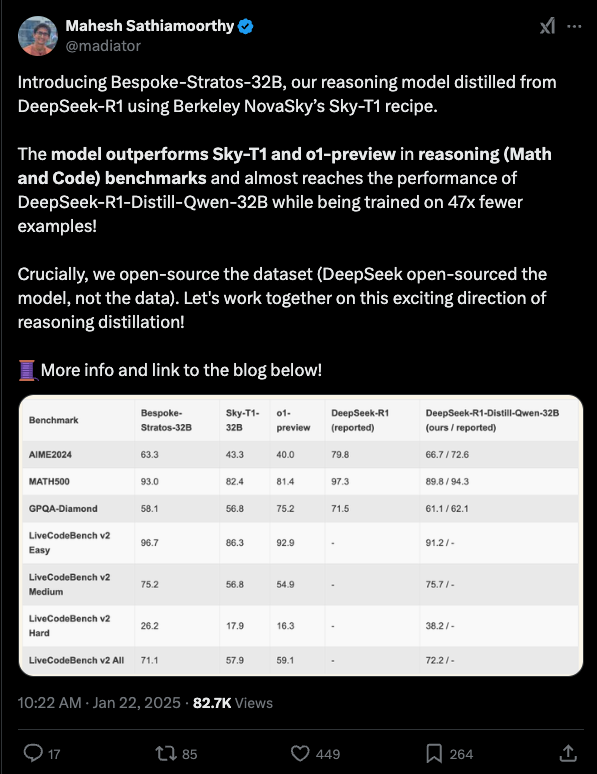

- Bespoke Labs (today) uses the Sky-T1 recipe to distill R1 on Qwen again to greatly outperform (not just match) o1-preview, again with 17k rows of reasoning traces.

While Bespoke's distillation does not quite match DeepSeek's distillation in performance, they used 17k samples vs DeepSeek's 800k. It is pretty evident that they could keep going here if they wished.

The bigger shocking thing is that "SFT is all you need" - no major architecture changes are required for reasoning to happen, just feed in more (validated, rephrased) reasoning traces, backtracking and pivoting and all, and it seems like it will generalize well. In all likelihood, this explains the relative efficiency of o1-mini and o3-mini vs their full size counterparts.
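To make the "validated traces, then SFT" recipe concrete, here is a minimal sketch of the rejection sampling step: sample a teacher, keep only traces whose final answer matches a reference, and format the survivors as SFT rows. This is not the Sky-T1 or Bespoke code. The `Trace` type, the `toy_teacher` stub, the `<think>` wrapping, and the exact-match check are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Trace:
    question: str
    reasoning: str
    answer: str


def normalize(answer: str) -> str:
    """Crude normalization; real pipelines use task-specific answer checkers."""
    return answer.strip().lower().rstrip(".")


def rejection_sample(
    problems: Iterable[tuple[str, str]],
    teacher: Callable[[str], tuple[str, str]],
    attempts: int = 4,
) -> list[Trace]:
    """Keep at most one teacher trace per problem whose answer matches the reference."""
    kept = []
    for question, reference in problems:
        for _ in range(attempts):
            reasoning, answer = teacher(question)
            if normalize(answer) == normalize(reference):
                kept.append(Trace(question, reasoning, answer))
                break  # one verified trace per problem is enough here
    return kept


def to_sft_row(trace: Trace) -> dict[str, str]:
    """Format a kept trace as a prompt/completion pair (assumed layout)."""
    completion = f"<think>\n{trace.reasoning}\n</think>\n{trace.answer}"
    return {"prompt": trace.question, "completion": completion}


def toy_teacher(question: str) -> tuple[str, str]:
    """Hypothetical stand-in for a call to a reasoning model such as QwQ or R1."""
    canned = {
        "2+2": ("2 plus 2 is 4.", "4"),
        "3*3": ("3 times 3 is 6.", "6"),  # deliberately wrong, so it gets rejected
    }
    return canned[question]


rows = [to_sft_row(t) for t in rejection_sample([("2+2", "4"), ("3*3", "9")], toy_teacher)]
```

Only the `2+2` trace survives in this toy run. The point of the filter is that wrong reasoning never reaches the finetuning set, which is presumably part of why 17k rows can go so far.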

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Evaluations

- DeepSeek-R1 Innovations and Performance: @teortaxesTex, @cwolferesearch, and @madiator discussed DeepSeek-R1's training via pure reinforcement learning (RL), emphasizing the importance of supervised finetuning (SFT) for accelerating RL convergence. DeepSeek-R1 demonstrates robust reasoning capabilities and multimodal functionalities, with Bespoke-Stratos-32B introduced as a distilled version achieving significant performance with 47x fewer examples.

- Gemini and Other LLM Advancements: @chakraAI and @philschmid highlighted Google's Gemini 2.0 Flash Thinking model, noting its 1 million token context window, code execution support, and state-of-the-art performance across math, science, and multimodal reasoning benchmarks.

- AI Model Comparisons and Critiques: @abacaj and @teortaxesTex provided critical insights into models like o1 and R1-Zero, discussing issues such as model repeatability, behavioral self-awareness, and the limitations of RLHF in achieving robust reasoning.

AI Applications and Tools

- Windsurf and AI-Powered Slide Decks: @omarsar0 showcased Windsurf, an AI agent capable of analyzing code, replicating functionalities, and automating the creation of slide decks by integrating PDFs and images seamlessly. Users can extend features through simple prompts, highlighting the flexibility of web-based AI applications.

- Local AI Deployments and Extensions: @ggerganov introduced the llama.cpp server, which offers unique context reuse techniques for enhancing LLM completions based on codebase contents, optimized for low-end hardware. Additionally, the VS Code extension leveraging llama.cpp provides local LLM-assisted code and text completions without the need for external RAG systems.

- AI Integration with Development Tools: @lah2139 and @JayMcMillan highlighted integrations like LlamaIndex with DeepSeek-R1, enabling AI-assisted development and agent workflows. These tools allow developers to build and evaluate multi-agent systems, fostering efficient AI application development.

AI Research and Papers

- IntellAgent Multi-Agent Framework: @omarsar0 introduced IntellAgent, an open-source multi-agent framework designed to evaluate complex conversational AI systems. The framework facilitates the generation of synthetic benchmarks and interactive user-agent simulations, capturing the intricate dynamics of agent capabilities and policy constraints.

- Behavioral Self-Awareness in LLMs: @omarsar0 discussed a new paper demonstrating that LLMs can exhibit behavioral self-awareness by recognizing and commenting on their own insecure code outputs without explicit training, indicating a potential for more reliable policy enforcement within models.

- ModernBERT and Embedding Models: @philschmid presented ModernBERT, an embedding and ranking model that correctly associates contextual information better than its predecessors. The comparison revealed that relying solely on benchmarks may not fully capture a model's effectiveness, emphasizing the need for customized evaluation strategies.

AI Infrastructure and Compute

- OpenAI's Stargate Project: @sama and @gdb announced the Stargate Project, a $500 billion AI infrastructure initiative aimed at building AI data centers in the U.S., positioning it as a response to global AI competition and a strategy to enhance national AI capabilities.

- NVIDIA's AI Models and Compute Solutions: @reach_vb detailed NVIDIAAI's Eagle 2, a suite of vision-language models (VLMs) that outperform competitors like GPT-4o on specific benchmarks, underscoring the importance of efficient compute architectures in developing high-performance AI models.

- Compute Resource Management: @swyx and @cto_junior discussed strategies for managing inference-time compute, balancing costs with adversarial robustness, and the implications of compute resource allocation on AI model performance.

AI Community, Education, and Events

- AI Workshops and Courses: @deeplearningai and @AndrewYNg promoted hands-on workshops and free courses focused on building AI agents capable of computer use, covering topics like multimodal prompting, XML structuring, and prompt caching to enhance AI assistant functionalities.

- AI Film Festival Growth: @c_valenzuelab celebrated the expansion of their Film Festival, noting a 10x increase in submissions and the relocation to prominent venues like Alice Tully Hall, reflecting the growing intersection of AI media and creative industries.

- AI Community Contributions: @LangChainAI and @Hacubu showcased community-driven projects like AgentWorkflow and LangSmith Evals, which simplify the process of creating multi-agent systems and testing LLM applications, thereby enhancing community collaboration and developer productivity.

Memes/Humor

- AI Model Humor: @giffmana and @saranormous shared humorous takes on AI model limitations and user interactions, including jokes about chatbot behaviors and AI-driven creativity mishaps.

- Satirical Comments on AI Developments: @nearcyan and @giffmana posted satirical remarks on AI project naming conventions and misunderstandings in AI capabilities, adding a light-hearted perspective to the rapid advancements in the field.

AI Policy and Ethics

- AI Safety and Governance: @togelius expressed concerns over the AI safety agenda, advocating for a balanced approach that prioritizes freedom of compute while addressing existential risks, highlighting the tension between AI innovation and ethical considerations.

- AI Community Critiques: @pthoughtcrime___ and @simran_s_arora critiqued policy-driven AI initiatives, emphasizing the potential for control over AI development and the importance of maintaining open-source principles to foster ethical AI progress.

- Regulatory Discussions: @agihippo and @labloke11 engaged in conversations about the impact of AI regulations on innovation and research, debating the balance between regulatory oversight and technological advancement.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Mistral 10V: Exploring New Capabilities with 12K Tokens

- China goes full robotic. Insane developments. At the moment, it’s a heated race between USA and China. (Score: 768, Comments: 237): The post highlights the intense competition between the USA and China in the field of AI, specifically mentioning China's advancements in robotics. The release of Mistral 10V is implied to have significant implications for AI technology, though no specific details are provided in the text.

- Many commenters express skepticism about the authenticity of the video, questioning if it is AI-generated or preprogrammed, with some noting the absence of the video on official channels and others discussing the possibility of it being a fake.

- The discussion reflects a belief that China is significantly ahead in robotics, with some commenters suggesting that the USA has fallen behind due to a focus on non-manufacturing industries, and questioning the notion of a "race" between the two countries.

- There is a mix of humor and concern regarding the future implications of advanced robotics, with comments mentioning potential military applications and the replacement of jobs, such as police dogs, by robots once they achieve further capabilities.

- Ooh... Awkward (Score: 560, Comments: 295): OpenAI launched Mistral 10V with a capacity of 12,000 tokens, marking a significant development in AI capabilities. The context of the post suggests a potential awkward situation, possibly related to the launch or features of the model.

- The vocal fry of the speaker was a prominent topic, with many commenters criticizing it as distracting or unprofessional. Sam Altman's demeanor during the presentation was perceived as lacking confidence, with some speculating that his nervousness stemmed from the context of the situation rather than the content itself.

- Discussions touched on the potential economic implications of AI, with skepticism about claims that AI would create 100,000 jobs. Commenters expressed doubts about job creation, suggesting that AI might instead reduce the workforce, and drew parallels to Theranos as a cautionary tale.

- There were political undertones, with references to Donald Trump and the notion of AI being used to claim achievements like curing cancer. Some commenters suggested that Sam Altman was navigating a complex political landscape, trying to maintain a favorable position amid Trump's influence.

- Sam Altman’s expression during the entire AI Infra Deal Announcement (Score: 469, Comments: 131): The post lacks specific content or discussion points about Sam Altman or the AI Infra Deal Announcement. Without additional context or details, no technical summary can be provided.

- Discussions compare Russia's oligarchic system to the US, noting concerns about increasing alignment of wealthy individuals with Trump, potentially to gain influence. This reflects worries about a shift towards oligarchic tendencies, akin to Russia's political structure under Putin, where oligarchs face severe consequences if they fall out of favor.

- There is commentary on Sam Altman's demeanor during public appearances, with some attributing his expressions to anxiety or discomfort. The reactions suggest skepticism about his enthusiasm for certain partnerships, possibly alluding to a satirical take on him creating a Skynet-like scenario against his will.

- Conversations include sarcastic remarks about Elon Musk being sidelined in AI advancements compared to Altman, with references to Musk's involvement in other initiatives like meme coins. The humor underlines a perceived rivalry or competition in the tech space between influential figures.

Theme 2. O1-Pro: Revolutionary Use in Legislation Analysis

- I used O1-pro to Analyze the Constitutionality of all of Trump's Executive Orders. (Score: 135, Comments: 33): The author conducted a detailed analysis of Trump's Executive Orders using O1-Pro and sourced the texts from whitehouse.gov for objectivity. The document includes a Table of Contents and source text links, with summaries provided by GPT-4o.

- Analysis Process: The author manually prepared the document, using Google Docs' bookmark and link system for navigation. They used O1-Pro for analysis, inserting the full text of executive orders and employing prompt templates for summaries and titles, and ran each analysis in a fresh chat to avoid bias.

- Impact of Executive Orders: Discussion highlighted potential short and long-term effects, such as immediate restructuring within federal agencies, changes in immigration policies, and shifts in energy and environmental focus. Long-term impacts could include a smaller, more centralized federal workforce and shifts in international relations due to withdrawal from treaties.

- Fact-Checking and Economic Concerns: Commenters suggested sharing actual ChatGPT links for fact-checking and speculated on economic effects, like tariffs and their impact on prices in Canada and the US. There was skepticism about whether proposed tariffs would be enacted without an official order.

- [D]: A 3blue1brown Video that Explains Attention Mechanism in Detail (Score: 285, Comments: 12): 3blue1brown's video on the attention mechanism provides a detailed explanation of concepts such as token embedding and the role of the embedding space in encoding multiple meanings for a word. It discusses how a well-trained attention block adjusts embeddings based on context and conceptualizes Ks as potentially answering Qs. Video link and subtitles are provided for further exploration.

- 3blue1brown's video is praised for its clear and visual explanation of the attention mechanism, effectively introducing the problem and gradually building up to the solution, unlike other tutorials that often skip foundational explanations.

- Users highlight the importance of masking during model training to predict the next token, referencing Karpathy's tutorial on building GPT from scratch for further understanding of these concepts.

- 3blue1brown's talk, based on the video series, is also recommended for its intuitive explanations, with a YouTube link provided for those interested in exploring more.

- Trump announces up to $500 billion in AI infrastructure investment (Score: 141, Comments: 22): OpenAI, SoftBank, and Oracle are launching a Texas-based joint venture named Stargate, with an initial commitment of $100 billion and plans to invest up to $500 billion over the next four years. This venture aims to set new standards in AI infrastructure.

- Multiple comments highlight that the Stargate project was initially announced last year, with some users expressing skepticism about its recent announcement being politically motivated, particularly regarding Trump taking credit for it.

- There is a discussion about the scale of the project, with one commenter noting it as potentially the largest infrastructure project in history, while another user humorously compares it to Foxconn 2.0.

- Some users express gratitude for the straightforward announcement style, avoiding sensationalist headlines like "BREAKING," which they find increasingly meaningless.

Theme 3. Gemini 1.5: Leading AI with Performance Edge

- Elon Says Softbank Doesn't Have the Funding.. (Score: 417, Comments: 227): Elon Musk expresses doubt about SoftBank's financial capabilities, contradicting claims of substantial funding for AI infrastructure by stating they have "well under $10B secured." An image from OpenAI announces "The Stargate Project," which intends to invest $500 billion in AI infrastructure in the U.S. over four years, starting with an immediate $100 billion deployment.

- There is skepticism about SoftBank's financial claims, with some suggesting they might have announced their plans without securing the necessary funding, hoping investments would follow. Concerns are raised about the legality and ethics of business leaders, like Elon Musk, influencing or commenting on other businesses, especially given his controversial track record and connections to government initiatives.

- Discussions highlight Trump's announced $500 billion government subsidy for AI, drawing parallels to past infrastructure funding that was misused. Critics view it as a potential wealth transfer to tech elites, questioning the involvement and actual financial capabilities of companies like SoftBank and Oracle.

- Many comments express disdain for Elon Musk, questioning his motives and credibility, with accusations of personal biases and trolling. There are references to past unfulfilled promises, such as the xAI Grok model, and criticism for his perceived alignment with controversial figures and ideologies.

- OpenAI announcement on the Stargate project (Score: 186, Comments: 90): OpenAI's Stargate Project plans to invest $500 billion over four years in AI infrastructure in the U.S., starting with an immediate $100 billion deployment. Initial equity funders include SoftBank, OpenAI, Oracle, and MGX, with key technology partners like Arm, Microsoft, NVIDIA, Oracle, and OpenAI. The project aims to support American jobs, national security, and advance AGI for humanity's benefit.

- Comments discuss skepticism about SoftBank's involvement, with questions on why they wouldn't invest in Japan and clarifications that SoftBank is linked to the Middle East sovereign fund. Concerns about the funding sources were raised, noting potential reliance on subsidies and tax credits.

- The discussion highlights confusion over the project’s goals, with some suggesting the $100 billion will be spent on data centers, AI R&D labs, and energy infrastructure, referencing Microsoft's past venture with the Three Mile Island nuclear plant. There is skepticism about the project's employment claims, comparing it to other tech initiatives like Tesla and Alexa.

- The term "Stargate" is humorously compared to "Skynet" from the Terminator series, with some comments noting that a Skynet program already exists as a military satellite system. There is a mention of the project contributing to the "re-industrialization of the United States" and being part of the fourth industrial revolution.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Stargate AI Project: $500 Billion Investment's Impact

- Trump announces a $500 billion AI infrastructure investment in the US (Score: 582, Comments: 355): Trump announced a $500 billion investment in AI infrastructure in the US, signaling a significant commitment to advancing AI capabilities and infrastructure development. The announcement highlights the growing importance of AI in national economic and technological strategies.

- Stargate Project: The $500 billion investment is directed towards a new private company called Stargate, co-owned by Sam Altman, Masayoshi Son, and Larry Ellison, rather than OpenAI. This has raised concerns about intellectual property and existing partnerships, particularly with Microsoft.

- Funding and Ownership: There is a debate about the source of the funding, with some suggesting it's private investment from companies like SoftBank rather than US government money. Trump's announcement is seen by some as a political move, with claims that the announcement allows him to take credit for private sector initiatives.

- Geopolitical and Economic Implications: The announcement is viewed as a strategic move in the global AI race, especially in response to China's advancements like DeepSeek R1. The discussion also touches on the potential economic impact, including job creation claims and the broader implications for US technological leadership.

- I don’t believe the $500 Billion OpenAI investment (Score: 419, Comments: 142): The post expresses skepticism about the $500 billion OpenAI investment, arguing that the figure is overly optimistic and lacks transparency regarding funding sources and project specifics. The author criticizes the use of vague legal language and suggests the announcement is politically motivated, particularly in timing it after Trump's presidential win, to create headlines without firm commitments, hinting that the actual investment will be smaller and slower than advertised.

- Commenters highlight skepticism regarding the $500 billion investment, with comparisons to past projects like the Foxconn and Star Wars programs, suggesting the announcement is more about stock manipulation and market hype than actual funding. UncannyRobotPodcast and others express concerns about the lack of follow-through and potential for insider trading benefits.

- There is debate over the role of government versus private companies in funding, with tertain and ThreeKiloZero clarifying that the funding is from four partner companies, not federal money, while 05032-MendicantBias notes the government's role in easing regulations for infrastructure. SoftBank is mentioned as a significant player with substantial assets potentially involved.

- Discussions around the potential impact of the investment include concerns about overhyping AI and the existential implications of AI development. NebulousNitrate argues that large investments are justified to prevent adversaries from gaining superintelligence, while Super_Sierra suggests the hype is beneficial for innovation, despite skepticism about the actual realization of the $500 billion goal.

- Just a comparison of US $500B Stargate AI project to other tech projects (Score: 112, Comments: 103): The $500 billion Stargate AI project is compared to historical tech projects, highlighting its scale at approximately 1.7% of US GDP in 2024. In contrast, the Manhattan Project cost about $30 billion (~1.5% of GDP in the 1940s), the Apollo Program around $170–$180 billion (~0.5% of GDP in the 1960s), and the Space Shuttle Program approximately $275–$300 billion (~0.2% of GDP in the 1980s). The Interstate Highway System cost $500–$550 billion over several decades (~0.2%–0.3% of GDP annually).

- Discussion centers around the private funding of the Stargate AI project, with SoftBank, OpenAI, Oracle, and MGX as key investors. There is skepticism about the project's intentions, with comments suggesting it may replace a significant portion of the workforce (10-30%) while contrasting the lack of funding for social welfare programs like healthcare and education in the US.

- The project's scale and impact are debated, with comparisons made to historical projects like the Manhattan Project and Apollo Program regarding their GDP percentages. Some argue that while the project is funded privately, its scale is akin to a public initiative, raising questions about its societal implications and the role of the US government.

- Concerns are voiced about the US's role in AI development, with some commenters expressing distrust in the government's motives and potential exploitation by wealthy interests. There is a sentiment that the US is focused on maintaining global dominance, similar to a new "space race" with China, and that the project could eventually be defense-oriented.

Theme 2. DeepSeek R1: Redefining AI Benchmarks

- R1 is mind blowing (Score: 578, Comments: 139): R1 demonstrated superior problem-solving capabilities in a nuanced graph theory problem, succeeding on the first attempt where 4o failed twice before eventually providing a correct answer. The author is impressed by R1's ability to justify its solution and articulate a nuanced understanding, suggesting that even smaller models running on personal devices like a MacBook may surpass human intelligence in specific areas.

- Users discussed the R1 model's performance compared to o1 and other models, with some emphasizing R1's superior value due to its lower cost despite similar performance levels. Discussions highlighted R1's capabilities in problem-solving and reasoning, with some users noting its impressive performance even in distilled versions.

- R1's problem-solving abilities were praised, with specific examples like successfully solving a graph theory problem on the first attempt and outperforming other models like 4o. However, some users noted limitations, such as a lack of context awareness and issues with prompt optimization.

- The discussion included technical details about model deployment and usage, such as the need for specific temperature settings and questions about self-hosting capabilities. Some users expressed challenges with using R1 in professional settings due to data privacy concerns.

- The Deep Seek R1 glaze is unreal but it’s true. (Score: 63, Comments: 46): The author struggled with a programming issue in a RAG machine for two days, trying various major LLMs without success, including OpenAI's O1 Pro. However, DeepSeek R1 resolved the problem on its first attempt, leading the author to consider it their new preferred tool for coding, potentially replacing OpenAI Pro.

- There is skepticism about OpenAI's LLMs knowing their own architecture, as users like KriosXVII and gliptic point out that such details are unlikely to be included in their training data. Dan-Boy-Dan criticizes the author's claims as marketing tactics and challenges them to post the problem for others to test with different models.

- a_beautiful_rhind and LostMyOtherAcct69 discuss the difference in AI models' personality and architecture, suggesting that Mixture of Experts (MoE) could be the future of AI due to its efficiency and specialization compared to dense models. ReasonablePossum_ argues that US companies prioritize profit over developing such models, while Caffeine_Monster criticizes the excessive positive bias in AI models as counterproductive.

- Multiple users, including Dan-Boy-Dan and emteedub, request the author to post the specific problem that only the Deep Seek R1 solved, expressing doubt about the claims made and indicating a desire to test it across other models.

- Deepseek-R1 is brittle (Score: 61, Comments: 23): The post discusses the brittleness of Deepseek-R1, highlighting its limitations and strengths, and includes a linked image to support the analysis.

- Prompt Optimization: Users found that Deepseek-R1 performs well in specific scenarios, particularly when using the prompt structure from the R1 paper, which tags reasoning and answers explicitly in the prompt, together with a temperature of 0.6 and a top-p of 0.95. This method is also referenced in instructions for o1 models, indicating a common approach across reasoning models.

- Model Brittleness: Deepseek-R1 struggles with tasks requiring creativity or subjective answers, often producing incompatible or excessive outputs, as seen in a test where it suggested an impractical tech stack for an app. However, with practice and precise prompting, users noted an improvement in R1's performance, supporting the post's assertion of its brittleness.

- Comparison with Other Models: The discussion highlights that Deepseek-R1 excels in tasks with a single correct answer, such as coding, but falters compared to other models like Deepseek v3 when dealing with more complex or creative tasks. This suggests that while R1 can be effective, its application needs careful consideration and adjustment based on the task requirements.
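For readers who want to try the settings mentioned in this thread, here is a rough sketch of a request builder using temperature 0.6 and top-p 0.95, plus a parser for explicitly tagged output. The instruction wording in `TEMPLATE`, the payload field names, and the `<think>`/`<answer>` tag pair are assumptions for illustration and should be checked against whichever API or template you actually use.

```python
import re

# Sampling settings quoted in the discussion above.
SAMPLING = {"temperature": 0.6, "top_p": 0.95}

# Hypothetical instruction asking the model to tag its reasoning and answer.
TEMPLATE = (
    "Think through the problem inside <think> </think> tags, "
    "then give the final result inside <answer> </answer> tags.\n\n"
    "Problem: {question}"
)


def build_request(question: str) -> dict:
    """Assemble a generic request payload; the field names are illustrative."""
    return {"prompt": TEMPLATE.format(question=question), **SAMPLING}


def parse_tagged(output: str) -> tuple[str, str]:
    """Return (reasoning, answer); either is '' when its tag pair is missing."""
    def grab(tag: str) -> str:
        match = re.search(rf"<{tag}>(.*?)</{tag}>", output, flags=re.DOTALL)
        return match.group(1).strip() if match else ""

    return grab("think"), grab("answer")
```

Returning empty strings for missing tags makes brittleness easy to measure: count how often `parse_tagged` comes back empty across a batch of completions.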

Theme 3. Model-Agnostic Reasoning: R1 Techniques

- YOU CAN EXTRACT REASONING FROM R1 AND PASS IT ONTO ANY MODEL (Score: 368, Comments: 101): @skirano on Twitter suggests that you can extract reasoning from deepseek-reasoner and apply it to any model, enhancing its performance, as demonstrated with GPT-3.5 turbo.

- Workflow and Reasoning Techniques: Discussion highlights the use of Chain-of-Thought (CoT) prompting and stepped thinking to enhance model reasoning, with @SomeOddCodeGuy suggesting a two-step workflow using a workflow app to achieve interesting results, as demonstrated in a QwQ simulation. Nixellion adds that prompting models to simulate expert discussions can yield improved outcomes, emphasizing the potential of new CoT techniques.

- Critique and Skepticism: Ok-Parsnip-4826 criticizes the notion of extracting reasoning, arguing that it's merely asking one model to summarize another's thoughts without real benefit, while gus_the_polar_bear counters that LLMs might respond differently to prompts from other LLMs, suggesting potential unexplored interactions. nuclearbananana questions the efficiency of using a secondary model due to potential latency and cost implications.

- Technical Implementation and Tools: SomeOddCodeGuy discusses the technicalities of using Wilmer to facilitate connections between Open WebUI and Ollama, highlighting the potential for creating a containerized setup to enhance workflow management. Additionally, xadiant mentions the possibility of injecting reasoning processes into local models via completions API for enhanced performance.
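The "extract reasoning and hand it to another model" idea can be sketched in a few lines. This is an illustrative outline rather than @skirano's implementation: `reasoner` and `responder` are hypothetical callables standing in for real API clients, and the `</think>` delimiter is an assumption about how the reasoning block is marked in the raw output.

```python
from typing import Callable


def split_reasoning(output: str, close_tag: str = "</think>") -> tuple[str, str]:
    """Split raw reasoner output into (reasoning, remainder) at the closing tag."""
    head, sep, tail = output.partition(close_tag)
    if not sep:  # no tag found: treat the whole output as the answer
        return "", output.strip()
    return head.replace("<think>", "").strip(), tail.strip()


def answer_with_borrowed_reasoning(
    question: str,
    reasoner: Callable[[str], str],
    responder: Callable[[str], str],
) -> str:
    """Run the reasoner, drop its own answer, and let the responder write the reply."""
    reasoning, _ = split_reasoning(reasoner(question))
    prompt = (
        f"Question: {question}\n\n"
        f"Reasoning notes from another model:\n{reasoning}\n\n"
        "Using these notes, write the final answer."
    )
    return responder(prompt)
```

As the skeptics in the thread point out, this costs two model calls per question, so the latency and cost trade-off is worth measuring before adopting it.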

- The distilled R1 models likely work best in workflows, so now's a great time to learn those if you haven't already! (Score: 49, Comments: 14): The DeepSeek-R1 model, as described in the paper "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning", performs optimally with zero-shot prompting rather than few-shot prompting. The author emphasizes the importance of using workflows to enhance the performance of reasoning models like R1 and its distilled versions, suggesting a structured approach involving summarization and problem-solving to maximize efficiency and output quality.

- Users discuss challenges with DeepSeek-R1's output format consistency, particularly in generating structured formats like JSON, with one user mentioning the use of langgraph to address these issues. Another user seeks more tips or tricks to improve model performance.

- A commenter notes DeepSeek's own acknowledgment of R1's limitations in function calling and complex tasks compared to DeepSeek-V3, citing the research paper's mention of plans to improve using long Chain-of-Thought (CoT) techniques.

- Some users express interest in having the AI revise prompts to achieve better output, indicating a demand for improved prompt engineering to enhance model responses.
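One small workflow step that addresses both points above (zero-shot prompting and inconsistent structured output) is to describe the format directly and then parse defensively. The sketch below is one possible approach, not something taken from the post: the prompt wording and the `<think>` stripping are assumptions.

```python
import json
import re
from typing import Optional


def zero_shot_prompt(task: str, schema_hint: str) -> str:
    """Describe the task and output format directly, with no worked examples."""
    return f"{task}\n\nReply with a single JSON object of the form: {schema_hint}"


def extract_json(output: str) -> Optional[dict]:
    """Drop any <think> block, then parse the first {...} span; None on failure."""
    visible = re.sub(r"<think>.*?</think>", "", output, flags=re.DOTALL)
    match = re.search(r"\{.*\}", visible, flags=re.DOTALL)
    if match is None:
        return None
    try:
        parsed = json.loads(match.group(0))
    except json.JSONDecodeError:
        return None
    return parsed if isinstance(parsed, dict) else None
```

A `None` result is a natural trigger for a retry or a repair step in whatever workflow tool wraps the model.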

Theme 4. Deepseek R1 GRPO Code: Open-Sourcing Breakthrough

- Deepseek R1 GRPO code open sourced 🤯 (Score: 260, Comments: 6): Deepseek R1 has open-sourced its GRPO code, accompanied by a detailed flowchart illustrating the model's components. The diagram highlights sections like Prompts, Completions, Rewards and Advantages, Policy and Reference Policy, and DKL, all of which contribute to a central "objective" through a series of structured calculations involving mean and standard deviation.

- The Deepseek R1 code shared is not the actual R1 code but rather a preference optimization method used in the R1 training process, highlighting the novelty of the RL environment over the reward model in PO training.

- According to the paper, the RL environment employs a straightforward algorithm that evaluates the reasoning start and end token pairs, comparing the model's output with the ground truth from a math dataset.

- A link to the relevant code is available on GitHub.
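The two pieces described above, a rule-based reward checked against ground truth and advantages computed from the group mean and standard deviation, can be sketched as follows. This is a simplified illustration and not the open-sourced code: it omits the policy ratio and KL term entirely, and the exact-match check, the `</think>` delimiter, and the use of population standard deviation are assumptions.

```python
from statistics import mean, pstdev


def rule_based_reward(completion: str, ground_truth: str) -> float:
    """1.0 if the text after the closing </think> tag equals the reference, else 0.0."""
    _, sep, answer = completion.partition("</think>")
    if not sep:  # malformed output: the reasoning block was never closed
        return 0.0
    return 1.0 if answer.strip() == ground_truth.strip() else 0.0


def group_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize each reward against the mean and std of its own sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four completions sampled for the same prompt, scored against the answer "4".
completions = [
    "<think>2+2=4</think>4",
    "<think>2+2=5</think>5",
    "4",  # correct answer but no reasoning block, so it scores 0
    "<think>two twos</think> 4 ",
]
rewards = [rule_based_reward(c, "4") for c in completions]
advantages = group_advantages(rewards)
```

Because each reward is compared only with its siblings from the same prompt, no separate value model is needed. When every completion in a group scores the same, all advantages are zero and that group contributes no learning signal.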

- DeepSeek-R1-Distill-Qwen-1.5B running 100% locally in-browser on WebGPU. Reportedly outperforms GPT-4o and Claude-3.5-Sonnet on math benchmarks (28.9% on AIME and 83.9% on MATH). (Score: 170, Comments: 38): DeepSeek-R1-Distill-Qwen-1.5B reportedly runs entirely locally in-browser using WebGPU and surpasses GPT-4o and Claude-3.5-Sonnet in math benchmarks, achieving 28.9% on AIME and 83.9% on MATH.

- DeepSeek-R1-Distill-Qwen-1.5B has generated excitement for running entirely locally on WebGPU and outperforming GPT-4o in reasoning tasks. The model is accessible via an online demo and its source code is available on GitHub.

- Discussion around ONNX highlighted its role as a general-purpose ML model and weights storage format, with its ability to convert and transfer models across different engines. GGUF was noted as being optimized for quantized transformer model weights, primarily used with llama.cpp and its derivatives.

- The conversation included an analogy comparing file formats like ONNX and GGUF to container formats such as tar, zip, and 7z, emphasizing that they are different layouts for data storage that cater to specific hardware/software preferences.
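For the GRPO post in this theme, the two pieces the commenters describe (a rule-based check of the reasoning tags against a ground-truth answer, and advantages built from the group mean and standard deviation) can be sketched in a few lines. This is a minimal illustration, not the released code: the `<think>` tag names, the 0/1 reward values, and both function names are assumptions made for the example.

```python
import re
import statistics

# Hypothetical tag names; the post only mentions "reasoning start and end token pairs".
THINK_OPEN, THINK_CLOSE = "<think>", "</think>"


def rule_based_reward(completion: str, ground_truth: str) -> float:
    """Return 1.0 only if the reasoning tags are well formed and the final answer matches."""
    pattern = re.escape(THINK_OPEN) + r".*?" + re.escape(THINK_CLOSE) + r"\s*(.+)"
    match = re.fullmatch(pattern, completion.strip(), flags=re.DOTALL)
    if match is None:
        return 0.0  # missing or malformed reasoning block
    return 1.0 if match.group(1).strip() == ground_truth.strip() else 0.0


def group_advantages(rewards, eps=1e-6):
    """Normalize each reward against the mean and std of its own group of completions."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# One prompt, four sampled completions scored against the ground truth "4".
completions = [
    "<think>2 + 2 is 4</think> 4",
    "<think>maybe 5?</think> 5",
    "4",  # no reasoning tags, so the format check fails
    "<think>double 2</think> 4",
]
rewards = [rule_based_reward(c, "4") for c in completions]
advantages = group_advantages(rewards)
```

Because the advantage is relative to the group, a completion is only rewarded for being better than its siblings on the same prompt, which is why no separate learned value model appears in the flowchart.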

Theme 5. R1-Zero: AI Reinforcement Learning Breakthroughs

- R1-Zero: Pure RL Creates a Mind We Can’t Decode—Is This AGI’s Dark Mirror? (Score: 204, Comments: 105): DeepSeek-R1-Zero, an AI model developed through pure reinforcement learning (RL) without supervised fine-tuning, has achieved a dramatic increase in AIME math scores from 15.6% to 86.7%, yet its reasoning remains uninterpretable, producing garbled outputs. While its sibling, R1, uses some supervised data to maintain readability, R1-Zero raises concerns about AI alignment and the potential democratization of superintelligence due to its lower API costs—50 times cheaper than OpenAI's—despite its unreadable logic.

- The gibberish outputs of R1-Zero might be a form of symbolic reasoning, where tokens are repurposed beyond their linguistic meaning to convey complex interrelationships. This concept parallels human use of slang or jargon, suggesting that the model's reasoning could be misunderstood as gibberish due to shifts in token semantics, similar to generational language differences.

- Discussion highlights the potential of R1-Zero's reinforcement learning to reinvent token semantics, allowing it to outperform models like R1 that rely on supervised fine-tuning. This raises questions about how to measure safety and alignment in models using a new form of symbolic reasoning, as well as how this might contribute to multimodal AI development.

- There is skepticism about R1-Zero's capabilities, with some commenters suggesting that its outputs are mere errors or hallucinations rather than groundbreaking insights. Others mention the need for more concrete examples and reports to substantiate claims of its reasoning abilities, pointing to Karpathy's predictions and referencing concepts like the "Coconut" paper by Meta for further context.

- Gemini Thinking experimental 01-21 is out! (Score: 71, Comments: 17): The Gemini 2.0 Flash Thinking Experimental model, showcased in the Google AI Studio interface, features advanced options such as "Model," "Token count," and "Temperature" settings. The interface, with its dark theme, allows users to input mathematical problems and adjust various tools and settings for experimentation.

- Gemini 1.5/1.0 received criticism for being rushed and underwhelming, but the new AI Studio models are praised for their improvements, suggesting a more thoughtful development process. Users appreciate the experimental models' availability for testing, wishing other companies would do the same.

- Open weight models are noted to be outpacing closed ones, sparking discussions on their advantages. There is a mention of naming inconsistencies within the model versions, which could cause confusion.

- The Flash Thinking Experimental model has been updated from a 32k to a 1 million context window, aligning with other Google models. Users report mixed experiences regarding speed, with some finding it impressive and others not.

AI Discord Recap

A summary of Summaries of Summaries

o1-preview-2024-09-12

Theme 1. AI's Billion-Dollar Stargate Projects: Lofty Goals and Skepticism

- OpenAI Announces $500B Stargate Project, Skeptics Abound: OpenAI unveiled the Stargate Project, aiming to invest $500 billion in AI infrastructure over four years, but critics like Elon Musk doubt the feasibility of funding, calling it "ridiculous".

- Microsoft and Oracle Pledge Support Amid Funding Doubts: Microsoft CEO confirms commitment to the project saying, "I’m good for my $80 Billion", while Oracle joins in amidst questions about SoftBank's ability to contribute significantly.

- Trump's Stargate Sparks Debate on Tech and Government: President Trump announced Project Stargate, stirring discussions on government involvement in AI and concerns about corporate overreach in tech investments.

Theme 2. AI Models Clash: DeepSeek R1 Outperforms the Giants

- DeepSeek R1 Trumps Gemini and O1 in Math Tasks: Community members praised DeepSeek R1 for achieving 92% in math performance, outperforming models like Gemini and O1, showcasing advanced reasoning capabilities.

- Bespoke-Stratos-32B Emerges as a Reasoning Powerhouse: A new model, Bespoke-Stratos-32B, distilled from DeepSeek-R1, outperforms Sky-T1 and o1-preview in reasoning tasks using only 17k training examples (47x fewer than DeepSeek's 800k) from an $800 open-source dataset.

- Gemini 2.0 Flash Thinking Soars to #1: Gemini-2.0-Flash-Thinking claimed the top spot in Chatbot Arena with a 73.3% math score and a 1 million token context window, sparking excitement and comparisons with DeepSeek models.

Theme 3. Censorship vs. Uncensored AI Models: Users Seek Freedom

- DeepSeek R1 Faces Performance Drop Amid Censorship Concerns: Users reported an 85% performance drop in DeepSeek R1 overnight, suspecting increased censorship filters and seeking workarounds for sensitive prompts.

- Frustration with Over-Censored AI Models Grows: Community members mocked heavily censored models like Phi-3.5, expressing that excessive restrictions make models impractical for technical tasks and roleplaying.

- Hunt for Uncensored Models Intensifies: Users discussed favorite uncensored models like Dolphin and Hermes on platforms like OpenRouter, emphasizing the demand for more open AI experiences.

Theme 4. New AI Tools and Innovations Empower Developers

- LlamaIndex Launches AgentWorkflow for Multi-Agent Magic: AgentWorkflow was introduced, enabling developers to build multi-agent systems with expanded tool support, hailed as "the next step for more powerful agent coordination."

- Ai2 ScholarQA Revolutionizes Literature Reviews: Ai2 ScholarQA launched a RAG-based solution for multi-paper queries, helping researchers conduct in-depth literature reviews with cross-referencing capabilities.

- OpenAI's Operator Prepares to Take Actions in Your Browser: Reports indicate OpenAI is prepping Operator, a ChatGPT feature to perform browser actions on behalf of users, raising both excitement and privacy discussions.

Theme 5. AI Development Challenges: From Quantization to Privacy

- Model Quantization Debates and Innovations: Discussions in the Unsloth AI Discord highlighted dynamic 4-bit quantization methods that retain accuracy while reducing VRAM usage, sparking comparisons with BnB 8-bit approaches.

- Privacy Concerns Over AI Data Handling Policies: Users questioned data handling practices of AI services like Codeium and Windsurf, scrutinizing privacy policies and the use of user data for training.

- AI's Role in Cybersecurity Remains Underexplored: Members highlighted a lack of emphasis on AI cybersecurity solutions, noting that companies like CrowdStrike have used ML for years, and suggesting generative AI could automate threat detection and code-based intrusion analysis.

o1-2024-12-17

Theme 1. AI Infrastructure & Funding Frenzy

- Stargate Summons $500B AI Bonanza: President Trump announced a colossal $500B “Stargate Project” for AI data centers, with initial outlays from SoftBank, Oracle, and MGX. Microsoft blog posts call it the largest AI initiative ever, fueling jobs and American AI leadership.

- Musk, SoftBank, Oracle Stir Controversy: Elon Musk scoffed that SoftBank lacks the money, while skeptics questioned SoftBank’s liquidity and debt. Nonetheless, official statements highlight bold optimism around this mega-scale investment.

- Google Bets Another $1B on Anthropic: Google sunk another billion into Anthropic, reinforcing the fierce competition among AI titans. Speculation abounds on rolling funding strategies and Anthropic’s expanding next-gen offerings.

Theme 2. LLM Showdowns & Math Marvels

- DeepSeek R1 Dominates Math: DeepSeek R1 reportedly hits 92% in math performance and outclasses O1 and Gemini in advanced reasoning tasks. Users praise its geometric insights and thorough multi-stage RL training.

- Gemini 2.0 Flash Shoots #1: Google’s Gemini 2.0 Flash-Thinking rockets to the top of Chatbot Arena, boasting a 73.3% math score and a 1 million token context window. Developers anticipate more refined iterations soon.

- Bespoke-Stratos-32B Surges Past Rivals: Distilled from DeepSeek-R1, this model trounces Sky-T1 and o1-preview while requiring 47x fewer examples. The $800 open-source dataset it used spurs interest in cost-effective community data curation.

Theme 3. Reinforcement Learning & GRPO Talk

- Tiny GRPO Gains Real Traction: Devs are running minimal GRPO code for math tasks and praising the simplified approach for easy experimentation. Early adopters note fast iteration cycles and straightforward debugging.

- Kimi-k1.5 Paper Delves Deep: Researchers highlight curriculum-based RL and a length penalty for better model performance. Community feedback prompts newcomers to blend these ideas into new RL training recipes.

- GRPO Debates Spark KL Divergence Drama: Hugging Face users question how GRPO handles KL in advantage calculations. Code contributors weigh the pros and cons of applying KL directly to the loss rather than the rewards.
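The KL question above comes down to where the penalty enters the objective. A rough sketch of the two placements follows, using the per-token estimator from the DeepSeekMath paper (`r - log r - 1` with `r = pi_ref / pi_theta`). The surrogate loss is deliberately simplified (no ratio clipping), and the function names and `beta` value are illustrative assumptions rather than any library's API.

```python
import math


def kl_estimate(policy_logp: float, ref_logp: float) -> float:
    """Per-token estimator r - log(r) - 1 with r = pi_ref / pi_theta; always >= 0."""
    d = ref_logp - policy_logp
    return math.exp(d) - d - 1.0


def loss_with_kl_in_loss(advantage, policy_logp, ref_logp, beta=0.04):
    """Option A: keep the advantage untouched and add the KL term to the loss."""
    policy_term = -(advantage * policy_logp)  # simplified, unclipped surrogate
    return policy_term + beta * kl_estimate(policy_logp, ref_logp)


def reward_with_kl_penalty(reward, policy_logp, ref_logp, beta=0.04):
    """Option B: subtract a log-ratio penalty from the reward before computing advantages."""
    return reward - beta * (policy_logp - ref_logp)
```

With option A the group-normalized advantage stays a pure function of task reward; with option B the penalty is mixed into the reward and therefore gets rescaled by the group mean and standard deviation along with it.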

Theme 4. HPC & GPU Codegen Adventures

- Blackwell Breaks the Code: NVIDIA’s Blackwell B100/B200 codegen 10.0 and RTX 50 codegen 12.0 updates stir anticipation for sm_100a, sm_101a, and maybe sm_120. Community members await an official whitepaper but get by on partial PR notes.

- Triton Tussles with 3.2: INT8×INT8 dot products crash with the new TMA approach, prompting manual fixes and jit refactors. PyTorch Issue #144103 spotlights backward-compat troubles with AttrsDescriptor removal.

- Accel-Sim Talk Stirs Enthusiasm: HPC explorers eye the Accel-Sim framework for GPU emulation on CPUs. A scheduled talk in late March promises deeper insights into simulated GPU performance and code optimization.

Theme 5. RAG Systems & Tool Innovations

- AgentWorkflow Powers Multi-Agents: LlamaIndex unveils a high-level framework that orchestrates parallel tool usage and agent coordination. Enthusiasts see it as the “next step” for robust multi-agent solutions.

- Sonar Pro Surfs SimpleQA: Perplexity’s new Sonar Pro API outperforms rivals in real-time search-grounded Q&A while promising lower costs. Zoom integrates it for AI Companion 2.0, and devs praise its citation-friendly approach.

- Ai2 ScholarQA Bolsters Literature: This system answers multi-paper queries with cross-referencing superpowers, speeding up academic reviews. Researchers can shift from scanning one PDF at a time to gleaning curated insights across entire corpora.

DeepSeek v3

Theme 1. DeepSeek R1 Model Performance and Integration

- DeepSeek R1 Outperforms Competitors in Math and Reasoning: The DeepSeek R1 Distill Qwen 32B model has been praised for its superior performance in complex math tasks, outperforming models like Llama 405B and DeepSeek V3 671B. Users highlighted its geometric reasoning and multi-stage RL training, with benchmarks showing 92% math performance.

- DeepSeek R1 Integration Challenges: Users reported difficulties integrating DeepSeek R1 into platforms like GPT4All and LM Studio, citing missing public model catalogs and the need for llama.cpp updates. Some also faced issues with API performance drops and censorship filters.

- DeepSeek R1's Chain-of-Thought Reasoning: The model's ability to externalize reasoning steps, such as spelling 'razzberry' versus 'raspberry', was highlighted as a unique feature. Users noted its potential for enhancing reasoning in other models like Claude and O1.

Theme 2. AI Model Quantization and Fine-Tuning

- Unsloth Introduces Dynamic 4-bit Quantization: Unsloth's new dynamic 4-bit quantization method selectively avoids compressing certain parameters, maintaining accuracy while reducing VRAM usage. Users compared it favorably to BnB 8-bit, noting its efficiency in model optimization.

- Fine-Tuning Challenges with Phi-4: Users reported issues with fine-tuning the Phi-4 model, citing poor output quality and looping fixes. Suggestions included adjusting LoRA settings and ensuring high-quality datasets for better results.

- Chinchilla Formula Revisited: Discussions around the Chinchilla formula emphasized the balance between model size and training tokens. Users noted that many models exceed optimal thresholds, leading to inefficiencies in resource utilization.
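To make the "selectively avoid compressing certain parameters" idea from the quantization item concrete, here is a toy sketch in plain Python. It is not Unsloth's method: the absmax quantizer, the round-trip error test, the `max_error` threshold, and the layer names are all assumptions chosen to show the general shape of a skip-list approach.

```python
def quantize_4bit(values):
    """Symmetric absmax quantization to 16 signed levels (-8..7), then dequantize."""
    scale = max(abs(v) for v in values) / 7 or 1.0
    return [max(-8, min(7, round(v / scale))) * scale for v in values]


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def dynamic_quantize(layers, max_error):
    """Quantize each named layer unless its round-trip error exceeds max_error."""
    result, kept_full = {}, []
    for name, weights in layers.items():
        candidate = quantize_4bit(weights)
        if mean_abs_error(weights, candidate) > max_error:
            result[name] = list(weights)  # leave this layer in full precision
            kept_full.append(name)
        else:
            result[name] = candidate
    return result, kept_full


# Hypothetical layers: one well-behaved, one dominated by a single outlier weight.
layers = {
    "mlp": [0.1, -0.2, 0.3, 0.7],
    "outlier": [0.01, 0.02, -0.015, 9.0],
}
quantized, kept_full = dynamic_quantize(layers, max_error=0.01)
```

In the outlier layer the single large weight stretches the scale so far that every small weight rounds to zero, which is the kind of damage a selective scheme tries to avoid by paying a little extra memory for that layer.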

Theme 3. AI Infrastructure and Large-Scale Investments

- Project Stargate: $500B AI Infrastructure Plan: OpenAI's Stargate Project aims to invest $500 billion over four years to build advanced AI infrastructure in the US. Initial funding includes $100 billion from SoftBank, Oracle, and MGX, with a focus on job creation and national security.

- Google Invests $1B in Anthropic: Google's renewed investment in Anthropic signals confidence in the company's next-gen models, fueling speculation about rolling funding strategies and AI competition.

- DeepSeek R1 Hardware Requirements: Running the full DeepSeek R1 671B model requires significant hardware, with estimates suggesting $18,000 for multiple NVIDIA Digits. Users debated the feasibility of such setups, with some opting for cheaper alternatives like 4xA4000.

Theme 4. AI for Creative and Technical Applications

- Gemini 2.0 Flash Thinking Model Launches: Google's Gemini 2.0 Flash Thinking model, with a 1 million token context window and 64K max output tokens, has been tested against DeepSeek R1. Users praised its potential for large-scale reasoning tasks, though some noted challenges in handling complex prompts.

- NotebookLM for Church Services and Study Workflows: A user leveraged NotebookLM to analyze 16 five-hour YouTube livestreams, generating a 250-page book and a 2000-page bible study. Others integrated it into their study routines, praising its efficiency in reference lookups.

- AI Art Faces Hostile Reactions: Users reported negative responses to AI-generated art, with some saying they were told to kill themselves for using these tools. This reflects ongoing societal resistance to AI in creative fields.

Theme 5. AI Safety, Ethics, and Regulation

- Concerns Over AI Job Displacement: A founder expressed moral dilemmas over potential layoffs caused by their AI startup's success, sparking debates about the socio-economic impact of AI advancements. Users compared it to everyday automations that also cut jobs.

- AI Safety Index and Model Alignment: Discussions around the AI Safety Index highlighted the need for robust safety metrics in models like MiniCPM. Users questioned the absence of alignment and safety practices in some models, emphasizing the importance of ethical AI development.

- Regulatory Challenges in AI: A session on AI regulations explored the implications of recent policies, with Dylan Patel from SemiAnalysis discussing the winners and losers in the evolving regulatory landscape. Concerns were raised about government-corporate overlap in AI development.

DeepSeek R1

Theme 1. Model Optimization Wars: Quantization, Fine-Tuning, and Scaling Battles

- Unsloth’s Dynamic 4-bit Quantization Shakes Up VRAM Efficiency: Unsloth’s dynamic 4-bit quantization avoids compressing critical parameters, slashing VRAM use while preserving accuracy. Users compared it to BnB 8-bit, noting dynamic 4-bit’s balance of memory and performance.

- Phi-4 Fine-Tuning Fiasco Sparks Architecture Debates: Fine-tuning Phi-4 led to poor outputs, with users blaming model architecture. Workarounds in other notebooks hinted at the need for specialized hyperparameters.

- Chinchilla Scaling Laws Revisited Amid Training Token Excess: Discussions reignited about Chinchilla’s model-size-to-token ratios, with many models overshooting thresholds. Empirical scaling proofs urged resource optimization for efficiency.
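For readers who want the Chinchilla arithmetic behind "overshooting thresholds", a back-of-the-envelope sketch follows. It relies on two commonly cited approximations that are assumptions here, not figures from the Discord discussion: roughly 20 training tokens per parameter at the compute-optimal point, and about `6 * N * D` FLOPs to train N parameters on D tokens. The 7B/2T model in the example is hypothetical.

```python
TOKENS_PER_PARAM = 20  # commonly cited rule of thumb, treated as an assumption


def chinchilla_optimal_tokens(n_params: float) -> float:
    """Approximate compute-optimal token count for a model with n_params parameters."""
    return n_params * TOKENS_PER_PARAM


def training_flops(n_params: float, n_tokens: float) -> float:
    """Standard 6*N*D estimate of total training compute."""
    return 6 * n_params * n_tokens


def overshoot_ratio(n_params: float, n_tokens: float) -> float:
    """How many times past the rule-of-thumb token budget a training run goes."""
    return n_tokens / chinchilla_optimal_tokens(n_params)


# Hypothetical 7B-parameter model trained on 2T tokens.
ratio = overshoot_ratio(7e9, 2e12)
flops = training_flops(7e9, 2e12)
```

A ratio well above 1 means the run spends more training compute than the rule of thumb recommends for that model size, usually in exchange for a smaller model that is cheaper to serve.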

Theme 2. AI Infrastructure Arms Race: $500B Projects and Hardware Hurdles

- Project Stargate’s $500B Ambition Faces Funding Skepticism: OpenAI’s Stargate Project aims to deploy $500B for AI infrastructure, but critics like Gavin Baker questioned SoftBank’s liquidity. Elon Musk dismissed the proposal as unrealistic.

- DeepSeek R1 671B Demands $18K Hardware, Sparks Cost Debates: Running DeepSeek R1 671B reportedly requires 4x NVIDIA Digits ($18K), while users proposed cheaper 4xA4000 setups. Skeptics argued bigger models ≠ better ROI.

- Apple Silicon Pushes 4-bit Quantization Limits for R1 32B: M3 Max MacBook Pro users tested 4-bit quantization for R1 32B, noting quality drops. MLX-optimized variants offered speed gains despite precision trade-offs.
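The hardware debates in this theme mostly reduce to how much memory the weights alone need at a given precision. A quick estimate, assuming weights dominate and ignoring KV cache, activations, and quantization metadata (so real requirements are higher):

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Memory for model weights only, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


r1_32b_4bit = weight_memory_gb(32e9, 4)     # 16.0 GB
r1_671b_4bit = weight_memory_gb(671e9, 4)   # 335.5 GB
r1_671b_fp16 = weight_memory_gb(671e9, 16)  # 1342.0 GB
```

This is why a 4-bit 32B model is within reach of a high-memory laptop while the full 671B model needs several pooled devices even after aggressive quantization.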

Theme 3. Agentic AI: Hype vs. Reality in Autonomous Systems

- “Agentic AI” Label Slapped on Scripted Workflows, Users Revolt: Members mocked marketing claims equating basic scripted tools to agentic AI, citing lack of true autonomy. Calls for transparency in “agent” definitions intensified.

- GRPO and T1 RL Papers Signal Reinforcement Learning Shakeup: GRPO’s minimal implementation and T1 RL’s scaled self-verification paper sparked interest. Concerns arose about KL divergence handling in advantage calculations.

- OpenAI’s “Operator” Teases Browser Automation, But API Absent: Leaks revealed ChatGPT’s Operator for browser actions, but no API support. Users speculated it’s a stopgap for “PhD-level Super Agents.”

Theme 4. Tooling Turbulence: IDE Wars, API Quirks, and RAG Realities

- Windsurf’s Auto-Memory Feature Clashes with Cascade’s Loops: Windsurf’s project memory won praise, but Cascade botched FastAPI file access and looped fixes. Users urged rephrasing prompts to escape cycles.

- LM Studio 0.3.8 Boosts LaTeX and DeepSeek R1 “Thinking” UI: The update added LaTeX rendering and a DeepSeek R1 interface, fixing Windows installer bugs. Attendees lauded Vulkan GPU deduplication for stability.

- Perplexity’s Sonar Pro API Outguns Competitors in SimpleQA Benchmark: Sonar Pro led rival search engines and LLMs on SimpleQA, but rollout hiccups caused 500/403 errors. GDPR compliance debates flared for EU hosting.

Theme 5. Ethics, Censorship, and Workforce Displacement Fears

- DeepSeek R1’s 85% Performance Drop Fuels Censorship Suspicions: Users blamed R1’s overnight performance crash on internal censorship filters. Workarounds for sensitive prompts trended, while uptime monitors tracked fixes.

- Startup Founder Grapples with AI-Driven Layoff Guilt: A founder lamented potential job losses from their AI tool, sparking debates on automation ethics. Critics compared it to historical tech disruptions.

- Microsoft’s Phi-3.5 Safety Push Ignites Uncensored Fork: Phi-3.5’s “overzealous” censorship led to a Hugging Face fork. Coders criticized its refusal to answer benign queries, mocking its tic-tac-toe dodge.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Dynamite 4-bit Quants from Unsloth: Unsloth introduced a dynamic 4-bit quantization method that avoids compressing select parameters, retaining strong accuracy at a fraction of typical VRAM usage.

- Users compared it with BnB 8-bit approaches, showing dynamic 4-bit preserves performance while only slightly raising memory demands.

- Phi-4 Fine-Tuning Fiasco: Users reported trouble with Phi-4 model's subpar output after fine-tuning, questioning if the model architecture is the culprit.

- They noted that fine-tuning worked on other notebooks, hinting that Phi-4 might need specialized settings for better responses.

- Chinchilla Crunch: Size vs. Tokens: Participants revisited the Chinchilla formula, spotlighting the interplay between model scale and training tokens for maximum efficiency.

- They observed how many models overshoot recommended thresholds, sharing empirical proofs of scaling gains that call for resource optimization.

- Synthetic Data Euphoria: Some argued for infinite synthetic data streams to sharpen model training, emphasizing eval compliance and curated inputs.

- They warned of garbage in, garbage out if data curation is haphazard, urging dedicated supervision for synthetic sets.

- Agentic AI 'All Hype' Claims: Members expressed frustration over marketing ploys labeling basic script-based systems as agentic AI, lacking true autonomous capacity.

- They pointed to the gap between grand brand statements and the straightforward reality of limited agent functionality.

Codeium (Windsurf) Discord

- Web Search Woes: Codeium vs. Windsurf: One user pressed for Codeium to match Windsurf's web search capabilities, referencing the extension changelog.

- They questioned data handling policies by pointing to the Privacy Policy and comparing JetBrains' vs. VS Code features.

- Windsurf IDE Access Aggravations: Multiple members struggled to open FastAPI files on Ubuntu after the latest Windsurf update, noting that Cascade couldn't read or edit certain paths.

- A suggestion to adjust file permissions or environment settings emerged, but the issue persisted for some developers.

- Cascade’s Confusing Code Conversations: Several developers reported Cascade looping through repeated fixes when dealing with complicated code debugging prompts.

- They found that rephrasing instructions or giving more context helped end the cycle, showing the importance of well-structured prompts.

- Prompt Power & Project Memory: Users praised Windsurf's auto-generated memories for carrying context across sessions, referencing a Forum thread for deeper project memory ideas.

- They also cited a Cline Blog post emphasizing planning over perfect prompting to improve AI interactions.

- Diff Viewer Dilemma & Writemode Woes: Community members consistently faced color-scheme confusion in Windsurf's diff viewer and questioned if Writemode was free.

- A user clarified that it's a paid feature while others explored bridging multiple LLMs with Flow Actions for more flexible integrations.

LM Studio Discord

- LM Studio 0.3.8 Bolsters Thinking UI: The newly released LM Studio 0.3.8 adds a Thinking UI for DeepSeek R1, plus LaTeX rendering via `\text{...}` blocks.

- Attendees noted it addresses Windows installer issues and eliminates duplicate Vulkan GPUs, making usage more straightforward.

- DeepSeek R1 Distill Qwen 32B Wows Math Fans: Users praised DeepSeek R1 Distill Qwen 32B for outperforming other local models like Llama 405B on complex AIME-level math tests.

- They referenced the Hugging Face release for further details, praising its enhanced reasoning steps.

- Quantization Juggling on Apple Silicon: Participants explored 4-bit quantization to fit the R1 32B model on an M3 Max MacBook Pro, noting potential drops in answer quality.

- Multiple users tested MLX-optimized variants to cut memory demands, hinting at faster speeds despite possible precision trade-offs.

- High Price Tag for 671B Deployments: Estimates suggested $18,000 in hardware is needed for multiple NVIDIA Digits to run the full DeepSeek R1 671B, sparking debates about feasibility.

- Others mentioned a 4xA4000 setup as a cheaper route, remarking that bigger models do not always guarantee superior performance.

Nous Research AI Discord

- Stargate's $500B Raises Eyebrows: Rumors spread that Project Stargate is eyeing a $500B investment from SoftBank, sparking skepticism given SoftBank's limited liquidity and debt as noted by Gavin Baker here.

- Discussion referenced Elon Musk's comment that SoftBank doesn't have the cash, fueling doubt about the proposal's validity.

- DeepSeek Doubts Over Razzberry: Members tested DeepSeek R1 Distill Llama-8B, observing how it externalizes reasoning on spelling 'razzberry' versus 'raspberry.'

- They highlighted comedic confusion about zero 'p's, illustrating DeepSeek's chain-of-thought reveal and its promise for deeper synergy among models.

- FLAME Blazes Through Excel: A 60M-parameter model called FLAME uses an Excel-specific tokenizer to tackle formula completion and repair, rivaling bigger models like Davinci.

- Community members admired its targeted training and smaller size, seeing it as a strong approach for domain-focused tasks.

- EvaByte Goes Token-Free: EvaByte debuts as a 6.5B byte-based model that uses 5x less data and achieves 2x faster decoding, welcoming multimodal possibilities without tokenizers.

- Skeptics questioned hardware efficiency, but results suggest a shift toward byte-oriented training with broader flexibility.

- STAR & TensorGrad Shake Up Model Architecture: STAR outlines an evolutionary approach for refining LLM structures, claiming performance gains in scaling and efficiency.

- TensorGrad introduces named edges for simpler matrix ops and symbolic optimization, attracting developers eager to discard tricky numeric dimension mapping.
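For anyone unfamiliar with what "token-free" means in the EvaByte item, the input side of a byte-level model can be sketched with nothing but UTF-8 encoding. This is a generic illustration of byte-level I/O, not EvaByte's actual pipeline, and the function names are made up for the example.

```python
def byte_encode(text: str) -> list[int]:
    """Map text to a sequence of integer IDs in 0..255, one per UTF-8 byte."""
    return list(text.encode("utf-8"))


def byte_decode(ids: list[int]) -> str:
    """Invert byte_encode; no vocabulary file or merge table is needed."""
    return bytes(ids).decode("utf-8")


ids = byte_encode("héllo")  # [104, 195, 169, 108, 108, 111]
```

The vocabulary is fixed at 256 symbols regardless of language, at the cost of longer sequences, since a non-ASCII character such as "é" takes two positions.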

Interconnects (Nathan Lambert) Discord

- Stargate Scores Grandiose Growth: OpenAI's new Stargate Project secured a massive $500 billion plan over four years, with $100 billion immediately committed by SoftBank, Oracle, and MGX to fuel advanced AI infrastructure (reference).

- This colossal initiative is predicted to create numerous jobs and bolster American leadership in AI, with coverage appearing in Microsoft's official blog and various tech channels (blog link).

- Bespoke-Stratos-32B Battling Benchmarks: The Bespoke-Stratos-32B model, distilled from DeepSeek-R1, outperformed Sky-T1 and o1-preview in reasoning tasks, requiring 47x fewer training examples (source).

- It utilized an $800 open-source dataset to achieve cost-effective results, spurring interest in community-led data collection and collaborative improvements.

- Google Grants Another Billion for Anthropic: In a renewed show of confidence, Google infused $1 billion into Anthropic, fueling speculation on rolling funding strategies and AI competition (tweet).

- This investment reinforces Google's ongoing commitment to emerging AI players, with discussions highlighting possible expansions of Anthropic's next-gen models.

- Gemini-2.0-Flash Jumps to Arena Apex: Gemini-2.0-Flash-Thinking seized the #1 spot in the Chatbot Arena with a robust 73.3% math score and a 1 million token context window (link).

- Developers praised its potential for large-scale reasoning, while acknowledging that upcoming iterations could further refine its performance.

- GRPO Tweaks & T1 RL Triumph: Community members questioned the GRPO approach, pointing out concerns about KL divergence handling in advantage calculations, referencing TRL issues.

- Meanwhile, a new T1 paper from Zhipu and Tsinghua details scaled RL for large language models, blending trial-and-error with self-verification and cited in arXiv.

aider (Paul Gauthier) Discord

- Gemini 2.0 Gains a Shiny Glow: Google introduced Gemini 2.0 with a 1 million token context window, 64K max output tokens, and native code execution support, as confirmed in this tweet.

- Users tested it against DeepSeek R1, expressing curiosity about how it handles complex tasks and overall efficiency.

- Aider’s Markdown Makeover: Users described a refined approach to Aider by storing major features, specs, and refactoring steps in markdown, referencing advanced model settings.

- They emphasized that LLM self-assessment paired with unit tests helps produce cleaner code and tighter development loops.

- Debating R1 vs. Sonnet: Community members observed distinctions between DeepSeek R1 and Sonnet, citing DeepSeek Reasoning Model docs for chain-of-thought capabilities.

- They noted Sonnet repeatedly offers more thorough suggestions and proposed merging R1's reasoning outputs into other models to tackle advanced scenarios.

- RAG with PDFs in Aider: A user asked about RAG for referencing PDFs in Aider, discovering that Sonnet supports it through a simple built-in command.

- That discussion inspired ideas about leveraging external data sources for deeper context in Aider’s workflows.

- Aider Upgrades & Nix Adventures: Multiple users hit upgrade errors and config roadblocks in Aider, swapping tips like removing the `.aider` directory or re-installing via `pip`.

- They also pointed to a PR for Aider 0.72.1 in NixOS and pondered Neovim plugin setups, but final recommendations are still under review.

Stackblitz (Bolt.new) Discord

- Bolt’s Big Bankroll Boost: Today, Bolt announced $105.5 million in Series B, led by Emergence & GV, with backing from Madrona and The Chainsmokers, as detailed in this tweet.

- They thanked the community for championing devtools and AI growth, promising to strengthen Bolt’s capabilities moving forward.

- Tetris Telegram Twist: A developer aims to build a Telegram mini app for Tetris with meat-themed bricks, sharing the Telegram Apps Center as a resource.

- They plan to incorporate a leaderboard, hoping this odd concept sparks community collaboration.

- Claude Conquers Code Confusion: Members found success using Claude to address tricky code tasks and retrieve policy updates when Bolt struggled.

- They noted Claude’s thoroughness in handling Supabase user permissions, praising the synergy of AI-driven debugging.

Yannic Kilcher Discord

- DeepSeek R1 stirs competition with OpenAI: At 92% math performance, DeepSeek R1 outperforms Gemini and O1, gaining momentum for both technical prowess and cost advantages, as noted in DeepSeek R1 - API, Providers, Stats.

- Discussion highlights advanced geometric reasoning, multi-stage RL training, and a deep dive into DeepSeekMath (arxiv.org/abs/2402.03300) that pushes boundaries of model-based calculations.

- $500B Stargate Project sparks debate: OpenAI unveiled the Stargate Project with $500B in AI infrastructure funding, referencing their official tweet.

- Commentators questioned government-corporate overlap and U.S. technological gains, citing sources like AshutoshShrivastava.

- AI startup faces moral questions on job displacement: A founder shared remorse over potential layoffs triggered by their rapidly advancing AI solution, calling it a moral puzzle. Another user compared it to everyday automations that also cut positions, reflecting a larger socio-economic issue.

- Many agreed such side effects accompany new AI developments, emphasizing the tension between progress and workforce disruption.

- IntellAgent reframes agent evaluation: The open-source IntellAgent framework applies simulated interactions for multi-level agent diagnosis, showcased at GitHub.

- A corresponding paper at arxiv.org/pdf/2501.11067 shares surprising outcomes from data-driven critiques, with a visual workflow in `intellagent_system_overview.gif`.

- UI-TARS and OpenAI Operator in the spotlight: Hugging Face released UI-TARS, targeting automated GUI tasks, including variants like UI-TARS-2B-SFT, as documented in their paper.

- Meanwhile, OpenAI readies an Operator feature for ChatGPT to execute browser actions, with reports from Stephanie Palazzolo.

OpenRouter (Alex Atallah) Discord

- OpenRouter Slaps a Price Tag on Web Searches: The new $4/1k results pricing for web queries starts tomorrow, with an approximate cost of less than $0.02 per request.

- Members welcomed the streamlined model but noted curiosity about billing details once widespread usage begins.

- API Access Soft Launch Zooms In: OpenRouter’s API access arrives tomorrow with extra customizability options for users.

- Members anticipate testing these new features and sharing feedback on performance and integration.

- DeepSeek R1 Falters with 85% Performance Plunge: Reports indicated an 85% drop in DeepSeek R1’s API performance overnight, fueling worries about internal scrutiny and censorship filters.

- Some shared workarounds for sensitive prompts, while others monitored DeepSeek R1 – Uptime and Availability for official fixes.

- Cerebras Tantalizes with Mistral Large, Leaves Users Hanging: Many hoped to see Cerebras’ Mistral Large on OpenRouter, but it remains unavailable for public use.

- Frustrated users stick to Llama models instead, questioning whether Mistral Large is as ready as advertised.
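The web-search pricing quoted above works out to $0.004 per result. A minimal sketch of the arithmetic follows; the results-per-request counts are illustrative assumptions, not figures from OpenRouter.

```python
# Price quoted in the summary: $4 per 1,000 web results.
PRICE_PER_1K_RESULTS_USD = 4.00


def search_cost_usd(num_results: int) -> float:
    """Cost in USD of one request that returns `num_results` web results."""
    return PRICE_PER_1K_RESULTS_USD * num_results / 1000


# Hypothetical result counts per request, for illustration only.
for n in (1, 3, 5):
    print(f"{n} results -> ${search_cost_usd(n):.3f}")
```

Under this pricing, a request stays at roughly $0.02 or below as long as it returns no more than five results.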

Perplexity AI Discord

- Sonar Pro Makes a Splash: Today, Perplexity rolled out the new Sonar and Sonar Pro API, enabling developers to incorporate generative search with real-time web research and strong citation features.

- Firms like Zoom tapped the API to elevate AI Companion 2.0, while Sonar Pro pricing touts lower costs than other offerings.

- SimpleQA Benchmark: Sonar Pro Steals the Show: Sonar Pro outperformed major rivals in the SimpleQA benchmark, outshining other search engines and LLMs in answer quality.

- Supporters praised web-wide coverage for being miles ahead of competing solutions.

- Comparisons Abound: Model Tweaks & Europe's Demands: Community members reported that Sonar Large now outruns Sonar Huge, with officials hinting at retiring the older model.

- Simultaneously, Europe's GDPR compliance push prompted discussion on hosting Sonar Pro in local data centers.

- Altman Teases 'PhD-Level Super Agents': A rumored briefing in D.C. by Altman mentioned advanced 'PhD-level Super Agents,' stirring curiosity about next-generation AI capabilities.

- Observers interpreted these hypothetical agents as a sign of major progress to come, though specifics remain sparse.

- Anduril's $1B Weapons Factory Gains Spotlight: News of Anduril establishing a $1B Autonomous Weapons Factory raised interest in defense-oriented machine systems, as shown in this video.

- Participants debated autonomous warfare and highlighted ethical questions tied to weaponized AI.
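For readers who want to try the Sonar API mentioned above, here is a minimal sketch that only builds the HTTP request and does not send it. It assumes the API follows the common chat-completions request shape; the endpoint URL, the `sonar-pro` model identifier, and the helper name `build_sonar_request` are assumptions for illustration and should be checked against Perplexity's documentation.

```python
import json
import urllib.request

# Assumed endpoint; verify against the official API reference before use.
API_URL = "https://api.perplexity.ai/chat/completions"


def build_sonar_request(question: str, api_key: str,
                        model: str = "sonar-pro") -> urllib.request.Request:
    """Build (but do not send) a chat-completions style POST request."""
    payload = {
        "model": model,  # assumed model identifier
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_sonar_request("What is the SimpleQA benchmark?", api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send the query; that step is left out here because it needs a real API key.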

MCP (Glama) Discord

- MCP's Code Crunch: Members discussed the evolving code editing feature in the MCP language server, citing improved capabilities for handling large codebases and synergy with Git operations.

- They mentioned a new Express-style API pull request that might unify how MCP server updates code in tandem with semantic tool selection.

- Brave Browsing for Crisp Docs: Users rely on Brave Search to fetch updated documentation and compile references into markdown for quick integration.

- They highlighted the brave-search MCP server approach for scraping and automating doc retrieval, praising the streamlined process.

- GPT's Grievances in 2024: Community members reported frustrations over custom GPT failing to incorporate new ChatGPT features, fueling doubts about the custom GPT marketplace.

- They noted concerns about these bots losing relevance, expressing disappointment in the slow pace of improvement.

- Claude Desktop's Prompt Parade: Participants explored hooking prompts into Claude Desktop, focusing on how to surface prompts via the prompts/list endpoint.

- They shared logging tool examples and partial code snippets, aiming to simplify the process for testing specialized prompts.

- Apify Actors & SSE Trials: Developers work on the MCP Server for Apify's Actors, building data extraction features but facing dynamic tool integration challenges.

- Issues around the Anthropic TS client underscore confusion over SSE endpoints, driving some members to pivot to Python while waiting on fixes.
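To make the prompt-listing discussion above concrete: MCP messages are JSON-RPC 2.0, and prompts are discovered with the `prompts/list` method. The sketch below builds such a request and reads prompt names out of a response. The helper names and the sample response (including the `summarize_logs` prompt) are hypothetical, hand-written for illustration rather than taken from the discussion.

```python
import json


def build_prompts_list_request(request_id: int) -> str:
    """Serialize a JSON-RPC 2.0 request for the MCP `prompts/list` method."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": "prompts/list"}
    )


def prompt_names(response_text: str) -> list[str]:
    """Extract the prompt names from a `prompts/list` response."""
    result = json.loads(response_text)["result"]
    return [prompt["name"] for prompt in result.get("prompts", [])]


# Hypothetical server response, written by hand for this example.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "prompts": [
            {
                "name": "summarize_logs",
                "description": "Summarize a block of log output",
                "arguments": [{"name": "logs", "required": True}],
            }
        ]
    },
})

print(build_prompts_list_request(1))
print(prompt_names(sample_response))
```

A client such as Claude Desktop can only surface prompts that the server returns from this call, so logging the raw response is a quick way to check that a prompt was registered.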

Latent Space Discord

- Ai2 ScholarQA Accelerates Literature Review: Ai2 ScholarQA launched a RAG-based solution for multi-paper queries, with an official blog post explaining cross-referencing capabilities.

- It helps academically-minded folks quickly gather research insights from various open-access papers, focusing on comparative analysis.

- Trump Launches $500B Project Stargate: President Trump announced Project Stargate, pledging a colossal $500B over four years to expand AI infrastructure in the US, with backing from OpenAI, SoftBank, and Oracle.

- Commentators like Elon Musk and Gavin Baker questioned the feasibility of that sum, calling it "ridiculous" but still acknowledging the ambition.

- Bespoke-Stratos-32B Emerges: A new reasoning model, Bespoke-Stratos-32B, distilled from DeepSeek-R1, showcases advanced math and code reasoning, per their announcement.

- Developers highlight that it uses "Berkeley NovaSky’s Sky-T1 recipe" to surpass previous models in reasoning benchmarks.

- Clay GTM Raises $40M: Clay GTM announced a $40M Series B expansion at a $1.25B valuation, with strong revenue growth that caught investor attention.

- Their existing funds remain largely untapped, and they plan to amplify momentum to drive further growth.

- LLM Paper Club Spotlights Physics of LMs: The LLM Paper Club event focuses on the Physics of Language Models and Retroinstruct, with details at this link.

- Attendees can add event alerts from Latent.Space by subscribing via the RSS logo, ensuring no event is missed.

GPU MODE Discord

- NVIDIA's Blackwell Codegen Blazes On: The PR #12271 reveals Blackwell B100/B200 codegen 10.0 and teases an RTX 50 codegen 12.0, stirring anticipation for sm_100a and sm_101a.

- Despite the rumored sm_120 and a delayed Blackwell whitepaper, the community eagerly awaits more news and a talk on the Accel-Sim framework.

- Triton 3.2 HPC Hangups: Current TMA implementations can crash with @triton.autotune, causing confusion over persistent matmul descriptors and data dependency issues.

- A user pointed to the GridQuant gemm.py lines #79-100 for descriptor creation insights, underscoring the tricky nature of Triton kernels.

- GRPO Gains Momentum in RL: A minimal GRPO algorithm is nearly done, with its initial version set to run soon and early experiments already in progress.

- The Kimi-k1.5 paper and new GRPO trainer in TRL highlight growing interest in curriculum-based reinforcement learning.

- Accelerating LLM Inference with HPC Tools: A user sought faster Hugging Face generate() performance, referencing this commit and discussing liuhaotian/llava-v1.5-7b for heftier models.

- Meanwhile, this PyTorch post explores HPC-friendly strategies like specialized scheduling and memory optimizations to boost large model inference.

- Torch and Triton Tussle Over 3.2: The new Triton 3.2 disrupts torchao and torch.compile by dropping AttrsDescriptor, recorded in PyTorch issue #144103.

- INT8 x INT8 dot product failures in Triton issue #5669 plus a major JIT refactor reveal repeated backward compatibility headaches.
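For context on the GRPO item above: the step that sets GRPO apart from PPO-style methods is scoring each sampled completion against the other completions for the same prompt, rather than against a learned value function. A minimal sketch of that group-relative advantage computation follows. It is not the implementation discussed in the channel; the function name, the `eps` constant, and the use of the population standard deviation are assumptions, and real trainers differ on these details.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own group: (r - mean) / (std + eps).

    `rewards` holds the scalar rewards of several completions sampled
    for the same prompt. `eps` (an assumed constant) avoids division
    by zero when every completion receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four completions of one prompt (1.0 = correct answer).
rewards = [1.0, 0.0, 0.0, 1.0]
print(group_relative_advantages(rewards))
```

Completions that beat their group's average get a positive advantage and the rest a negative one, so the policy update needs no separate critic network.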

Eleuther Discord

- Google's Titan Tease & Transformers Tussle: After announcements that Google's Titans claim superior performance using advanced memory features, members discussed potential improvements in inference-time handling.

- Community sentiment suggested the original methods might be complicated to replicate, reflecting concern about sufficiently transparent experimentation.

- Grokking Gains with Numerics: A recent paper Grokking at the Edge of Numerical Stability highlighted how numerical issues hamper model training, fueling talk on improved optimization strategies.

- Members debated a first-order approach, referencing this GitHub repo for deeper insights.

- DeepSeek Reward Model Rumblings: Attendees examined the DeepSeek-R1-Distill-Qwen-1.5B model's metric differences, citing 0.834 (n=2) vs 97.3 (n=64) in partial evaluations.

- A link to the DeepSeek_R1.pdf referenced decoding strategies and architecture details for reward-based training.

- Minerva Math & MATH-500 Makeover: Participants tested minerva_math for symbolic equivalence with sympy, referencing the MATH-500 subset from OpenAI's 'Let's Verify Step by Step' study.

- They debated whether DeepSeek R1 behaves like a base model or requires a chat template in these tasks, pointing to HuggingFaceH4/MATH-500 for further context.

- Linear Attention & Domino Learning: Members explored a specialized linear attention architecture aiming for speed without losing robust performance.

- They also discussed the Domino effect in skill stacking, referencing Physics of Skill Learning to highlight how sequential abilities emerge in neural networks.

OpenAI Discord

- DeepSeek Duels with o1 & Sonnet: DeepSeek was compared with models like o1 and Sonnet in math and GitHub-related benchmarks, showing strong performance. It’s accessible for free on its official site and offers API integrations for varied platforms.

- Some users faced issues with o1, but they praised the official DeepSeek R1 features as more stable for quick evaluations, fueling a push toward consistent model alternatives.

- AI Security Sparks Curiosity: Members questioned why AI cybersecurity remains overshadowed, referencing how CrowdStrike has used ML for years. They see potential in generative AI for automated threat detection and code-based intrusion analysis.

- Community voices argued that corporations emphasize profits over fundamental security, pointing to a disconnect between marketing hype and genuine user protections.

- Image-Only GPT Gains Momentum: One user wanted to train a GPT-like model entirely on screenshots of chats, sidestepping text-based pipelines. They wondered if file uploads or image data handling is possible inside the existing chat completion API.

- Others weighed the feasibility of direct image ingestion, suggesting extra preprocessing steps until the API supports inline file support.

- OCR Confusions & Map Solutions: Members found OCR prompts caused extreme hallucinations, particularly with unconstrained examples. They explored a specialized workaround for reading maps, hoping OpenAI’s O series addresses spatial data soon.

- They warned about context contamination in free-form OCR setups, concluding domain-specific constraints are safer until better GIS support arrives.

Notebook LM Discord Discord

- NotebookLM revolutionizes Church gatherings: One user leveraged NotebookLM to analyze 16 five-hour YouTube livestreams, generating a 250-page book and a 2000-page bible study.

- They referenced We Need to Talk About NotebookLM for motivation, applauding its capacity to handle overwhelming text volumes.

- Study workflows embrace NotebookLM: A user integrated NotebookLM in their study routine for weeks, crediting it with simplifying reference lookups.

- They shared a YouTube video highlighting its efficiency, motivating others to adopt a similar approach.

- Gemini gains momentum in prompt optimization: Members reported better NotebookLM outputs by pairing it with Gemini for refined instructions.

- They praised Gemini's impact on clarity but noted challenges in targeting highly specific documents.

- APA references and audio overviews spark debate: Participants wrestled with APA references, finding NotebookLM relies on previously used sources unless names are adjusted.

- They also discussed generating new audio overviews up to three times daily, warning about duplication and the need to remove old files first.

- CompTIA A+ content gains traction: A user unveiled part one of a CompTIA A+ audio series, with further installments on the way.

- Community members saw it as a key resource for self-paced certification prep, with NotebookLM lending quick information.

Stability.ai (Stable Diffusion) Discord

- Flux Figures and Negative Nudges: One user reported that de-distilled flux models yield better performance on certain content with configured cfg settings, though they run more slowly.

- They noted that negative prompts can boost prompt adherence but add heavier compute overhead.

- AI Art Sparks Hostile Heat: Some participants encountered hostile responses when showcasing AI-generated art, including one who was told to "kill myself" for using these tools.

- They mentioned that negative sentiments toward AI art have lingered for years, prompting discussions on broader acceptance.

- Discord Bot Scams Bamboozle: Members flagged suspicious DMs from bot accounts asking for personal information, revealing a persistent scam trend.

- Someone recounted an earlier pitch for paid 'services,' suggesting these scams remain Discord's recurring problem.

- Civitai Woes and Worries: A user highlighted Civitai downtime multiple times daily, raising concerns about the service's stability.

- Others chipped in with similar experiences, questioning the platform's reliability.

- SwarmUI Face Fix Fanfare: One user asked about fixing faces in swarmUI, wondering if a refiner was needed to improve image fidelity.

- They noted the community's push for enhanced realism, aiming to refine image-generation pipelines further.

LlamaIndex Discord

- AgentWorkflow Arrives & Amplifies Multi-Agent Systems: LlamaIndex announced AgentWorkflow, a new high-level framework that builds on LlamaIndex Workflows to support multi-agent solutions.

- They highlighted expanded tool support and community enthusiasm, calling it “the next step for more powerful agent coordination.”

- DeepSeek-R1 Dares & Defies OpenAI's o1: The DeepSeek-R1 model landed in LlamaIndex with performance comparable to OpenAI's o1 and works in a full-stack RAG chat app.

- Users praised its ease of integration and pinned hopes on “scaling it further for real-world usage.”

- Open-Source RAG System Gains Llama3 & TruLens Muscle: A detailed guide contributed step-by-step methods to build an open-source RAG system using LlamaIndex, Meta Llama 3, and TruLensML.

- It compared a basic RAG approach against an “agentic variant with @neo4j,” including performance insights on OpenAI vs Llama 3.2.

- AgentWorkflow Explores Parallel Agent Calls: Community members discussed parallel calls in AgentWorkflow while acknowledging agents run sequentially and tool calls can be asynchronous.