[AINews] Apple Intelligence Beta + Segment Anything Model 2

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

The second largest LLM deployment of 2024 is so delayed/so here.

AI News for 7/26/2024-7/29/2024. We checked 7 subreddits, 384 Twitters and 28 Discords (325 channels, and 6654 messages) for you. Estimated reading time saved (at 200wpm): 716 minutes. You can now tag @smol_ai for AINews discussions!

Meta continued its open source AI roll with a worthy sequel to last year's Segment Anything Model. Most notably, ontop of being a better image model than SAM1, it now uses memory attention to scale up image segmentation to apply to video, using remarkably little data and compute.

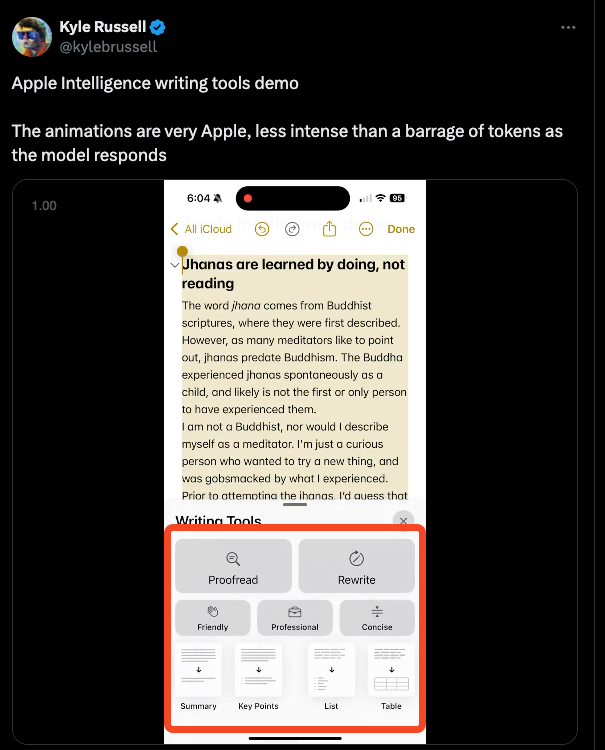

But the computer vision news was overshadowed by Apple Intelligence, which both delayed the official release to iOS 18.1 in October and released in Developer preview on MacOS Sequoia (taking a 5GB download), iOS 18, and iPadOS 18 today (with a very short waitlist, Siri 2.0 not included, Europeans not included), together with a surprise 47 page paper going into further detail than their June keynote (our coverage here).

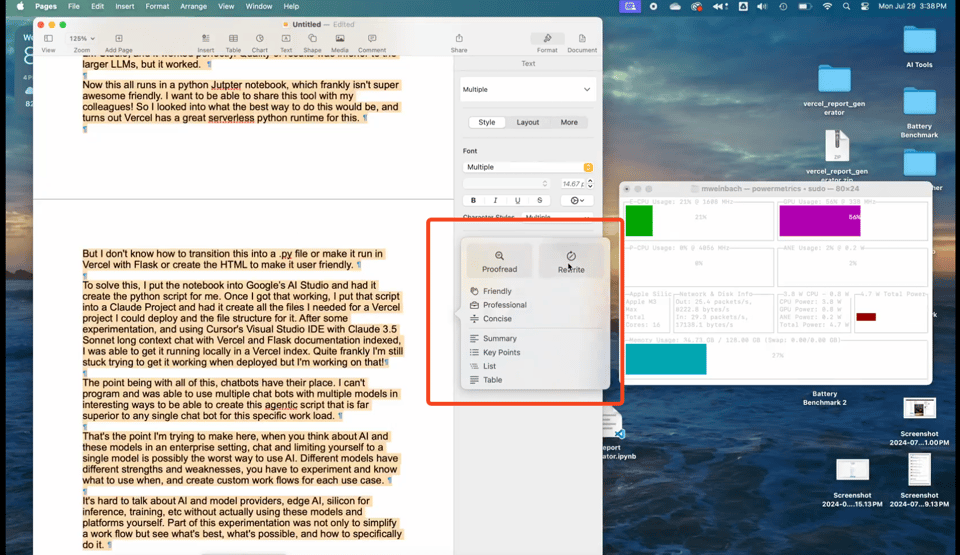

Cue widespread demos of:

Notifications screening

Rewriting in arbitrary apps with low power

Writing tools

and more.

As for the paper, the best recap threads from Bindu and Maxime and

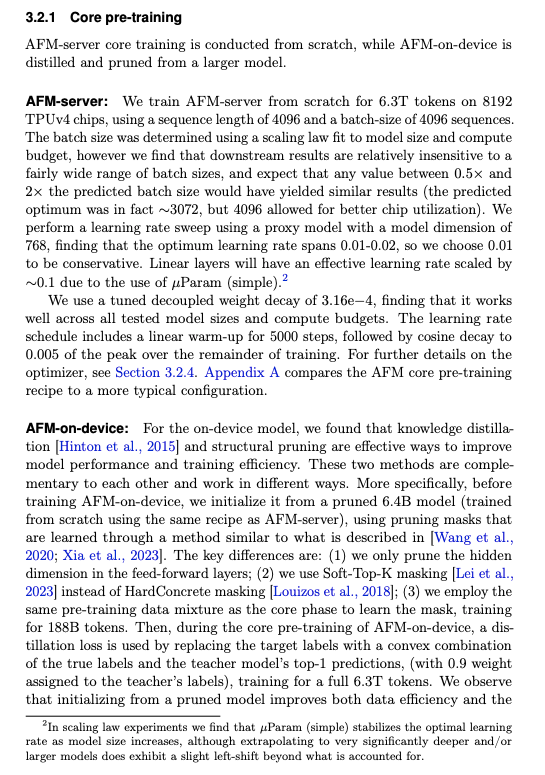

VB probably cover it all. Our highlight is the amount of pretrain detail contained on page 6 and 7:

- Data: fresh dataset scrape of ? Applebot web crawl, ? licensed datasets, ? code, 3+14b math tokens, ? "public datasets" leading to a final 6.3T tokens for CORE pretraining, 1T tokens with higher code/math mix for CONTINUED pretraining, and 100B tokens for context lengthening (to 32k)

- Hardware: AFM was trained with v4 and v5p Cloud TPUs, not Apple Silicon!! AFM-server: 8192 TPUv4, AFM-on-device: 2048 TPUv5p

- Post Training: "While Apple Intelligence features are powered through adapters on top of the base model, empirically we found that improving the general-purpose post-training lifts

the performance of all features, as the models have stronger capabilities on instruction following, reasoning, and writing. - Extensive use of synthetic data for Math, Tool Use, Code, Context Length, Summarization (on Device), automatic redteaming, and committee distillation

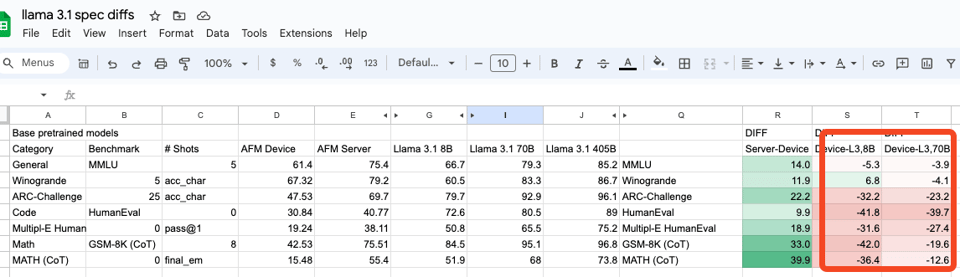

Also notable, they disclose their industry standard benchmarks, which we have taken the liberty of extracting and comparing with Llama 3:

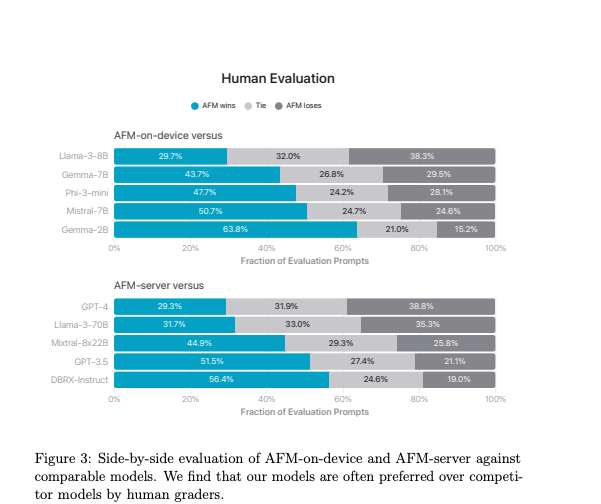

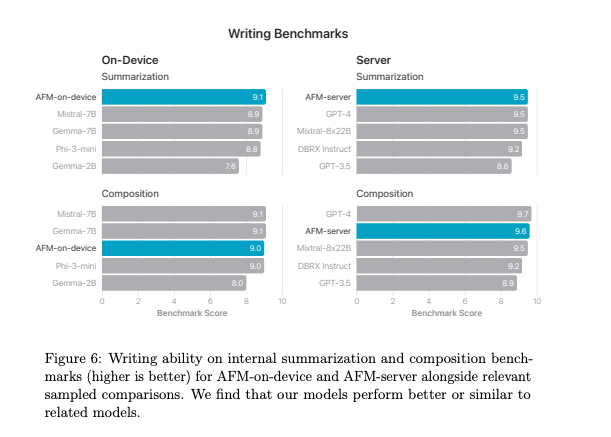

Yes they are notably, remarkably, significantly lower than Llama 3, but we wouldn't worry too much about that as we trust Apple's Human Evaluations.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Industry Updates

- Llama 3.1 Release: Meta released Llama 3.1, including a 405B parameter model, the first open-sourced frontier model on par with top closed models. @adcock_brett noted it\'s "open source and free weights and code, with a license enabling fine-tuning, distillation into other models and deployment." The model supports eight languages and extends the context window to 128K tokens.

- Mistral AI\'s Large 2: @adcock_brett reported that Mistral released Large 2, its flagship AI model scoring close to Llama 3.1 405b and even surpassing it on coding benchmarks while being much smaller at 123b. This marks the release of "two GPT-4 level open models within a week."

- OpenAI Developments: OpenAI announced SearchGPT, an AI search engine prototype that combines AI models with web information. @adcock_brett mentioned it "organizes search results into summaries with source links and will be initially available to 10,000 test users." Additionally, @rohanpaul_ai shared insights on OpenAI\'s potential impact on call centers, suggesting AI agents could replace human operators within two years.

- Google DeepMind\'s Achievements: @adcock_brett highlighted that "Google DeepMind\'s AlphaProof and AlphaGeometry 2 achieved a significant milestone in AI math reasoning capabilities," attaining a silver medal-equivalent score at this year\'s IMO.

AI Research and Technical Advancements

- GPTZip: @jxmnop introduced gptzip, a project for compressing strings with language models, achieving "5x better rates than gzip" using Hugging Face transformers.

- RAG Developments: @LangChainAI shared RAG Me Up, a generic framework for doing RAG on custom datasets easily. It includes a lightweight server and UIs for communication.

- Model Training Insights: @abacaj discussed the importance of low learning rates during fine-tuning, suggesting that weights have "settled" into near-optimal points due to an annealing phase.

- Hardware Utilization: @tri_dao clarified that "nvidia-smi showing \'GPU-Util 100%\' doesn\'t mean you\'re using 100% of the GPU," an important distinction for AI engineers optimizing resource usage.

Industry Trends and Discussions

- AI in Business: There\'s ongoing debate about the capabilities of LLMs in building businesses. @svpino expressed skepticism about non-technical founders building entire SaaS businesses using LLMs alone, highlighting the need for capable human oversight.

- AI Ethics and Societal Impact: @fchollet raised concerns about cancel culture and its potential impact on art and comedy, while @bindureddy shared insights on LLMs\' reasoning capabilities, noting they perform better than humans on real-world reasoning problems.

- Open Source Contributions: The open-source community continues to drive innovation, with projects like @rohanpaul_ai sharing a local voice chatbot powered by Ollama, Hugging Face Transformers, and Coqui TTS Toolkit using local Llama.

Memes and Humor

- @willdepue joked about OpenAI receiving "$19 trillion in tips" in the last month.

- @vikhyatk shared a humorous anecdote about getting hit with a $5k network transfer bill for using S3 as a staging environment.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Ultra-Compact LLMs: Lite-Oute-1 300M and 65M Models

- Lite-Oute-1: New 300M and 65M parameter models, available in both instruct and base versions. (Score: 59, Comments: 12): Lite-Oute-1 has released new 300M and 65M parameter models in both instruct and base versions, available on Hugging Face. The 300M model, built on the Mistral architecture with a 4096 context length, aims to improve upon the previous 150M version by processing 30 billion tokens, while the 65M model, based on LLaMA with a 2048 context length, is an experimental ultra-compact version processing 8 billion tokens, both trained on a single NVIDIA RTX 4090.

- /u/hapliniste: "As much as I'd like nano models so we can finetune easily on specific tasks, isn't the benchmark radom level? 25% on mmlu is the same as random choice right?I wonder if it still has some value for autocompletion or things like that."

Theme 2. AI Hardware Investment Challenges: A100 GPU Collection

- The A100 Collection and the Why (Score: 51, Comments: 20): The post describes a personal investment in 23 NVIDIA A100 GPUs, including 15 80GB PCIe water-cooled, 5 40GB SXM4 passive-cooled, and 8 additional 80GB PCIe water-cooled units not pictured. The author expresses regret over this decision, citing difficulties in selling the water-cooled units and spending their entire savings, while advising others to be cautious about letting hobbies override common sense.

Theme 4. New Magnum 32B: Mid-Range GPU Optimized LLM

- "The Mid Range Is The Win Range" - Magnum 32B (Score: 147, Comments: 26): Anthracite has released Magnum 32B v1, a Qwen finetune model targeting mid-range GPUs with 16-24GB of memory. The release includes full weights in BF16 format, as well as GGUF and EXL2 versions, all available on Hugging Face.

- Users discussed creating a roleplay benchmark, with suggestions for a community-driven "hot or not" interface to evaluate model performance on writing style, censorship levels, and character adherence.

- The profile pictures in Magnum releases feature Claude Shannon, the father of information theory, and Tsukasa from Touhou. Users appreciated this unique combination of historical and fictional characters.

- A user shared a 500-token story generated by Magnum 32B, featuring two cyborgs in Elon Musk's factory uncovering a corporate conspiracy. The story showcased the model's creative writing capabilities.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Industry Discussion

-

Criticism of misleading AI articles: In /r/singularity, a post questions the subreddit's apparent bias against AI, specifically referencing a potentially misleading article about OpenAI's financial situation. The post title suggests that the community may be overly critical of AI advancements.

Key points:

- The post links to an article claiming OpenAI could face bankruptcy within 12 months, projecting $5 billion in losses.

- The post received a relatively high score of 104.5, indicating significant engagement from the community.

- With 192 comments, there appears to be substantial discussion around this topic, though the content of these comments was not provided.

AI Discord Recap

A summary of Summaries of Summaries

1. LLM Model Releases and Performance

- Llama 3 Shakes Up the Leaderboards: Llama 3 from Meta has quickly risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus in over 50,000 matchups.

- The Llama 405B Instruct model achieved an average accuracy of 0.861 across multiple subjects during the MMLU evaluation, with notably strong performances in biology and geography. The evaluation was completed in about two hours, demonstrating efficient processing.

- DeepSeek V2 Challenges GPT-4: DeepSeek-V2 with 236B parameters has shown impressive performance, surpassing GPT-4 in some areas on benchmarks like AlignBench and MT-Bench.

- The model's strong showing across various benchmarks has sparked discussions about its potential to compete with leading proprietary models, highlighting the rapid progress in open-source AI development.

2. AI Development Tools and Frameworks

- LlamaIndex Launches New Course with Andrew Ng: LlamaIndex has announced a new course on building agentic RAG systems in collaboration with Andrew Ng's DeepLearning.ai, aiming to enhance developers' skills in creating advanced AI applications.

- This collaboration highlights the growing importance of Retrieval-Augmented Generation (RAG) in AI development and showcases LlamaIndex's commitment to educating the community on cutting-edge techniques.

- Axolotl Expands Dataset Format Support: Axolotl has expanded its support for diverse dataset formats, enhancing its capabilities for instruction tuning and pre-training LLMs.

- This update allows developers to more easily integrate various data sources into their model training pipelines, potentially improving the quality and diversity of trained models.

3. AI Infrastructure and Optimization

- vAttention Revolutionizes KV Caching: The vAttention system dynamically manages KV-cache memory for efficient LLM inference without relying on PagedAttention, offering a new approach to memory management in AI models.

- This innovation addresses one of the key bottlenecks in LLM inference, potentially enabling faster and more efficient deployment of large language models in production environments.

4. Multimodal AI Advancements

- Meta Unveils Segment Anything Model 2: Meta has released Segment Anything Model 2 (SAM 2), a unified model for real-time, promptable object segmentation in both images and videos, available under an Apache 2.0 license.

- SAM 2 represents a significant leap in multimodal AI, trained on a new dataset of approximately 51,000 videos. This release includes model inference code, checkpoints, and example notebooks to assist users in implementing the model effectively.

5. LLM Advancements

- Llama 405B Instruct Shines in MMLU: Llama 405B Instruct achieved an average accuracy of 0.861 in the MMLU evaluation, excelling in subjects like biology and geography, completing the evaluation in about two hours.

- This performance has sparked discussions on the robustness of the evaluation process and the model's efficiency.

- Quantization Concerns in Llama 3.1: Members raised concerns about Llama 3.1's performance drop due to quantization, with better results noted using bf16 (X.com post).

- Discussions suggest that the quantization impacts might be tied to the total data volume rather than just the parameter count.

PART 1: High level Discord summaries

Nous Research AI Discord

- Synthetic Data Generation Tools Under Scrutiny: Members discussed tools for generating synthetic data, highlighting Argila and Distillabel while gathering resources for a holistic overview.

- A Twitter thread was shared, though its specific relevance to synthetic data tools remains ambiguous.

- Moondream's Video Analysis Potential: Moondream was considered for identifying criminal activity by analyzing selective frames in videos, aiming for effective detection of dangerous actions.

- Productivity tips emphasized the necessity of quality images and robust prompting strategies for optimal performance.

- Llama 405B Instruct Shines in MMLU: The Llama 405B Instruct model achieved an average accuracy of 0.861 during the MMLU evaluation, with prominent results in biology and geography.

- The evaluation process was executed efficiently, wrapping up in about two hours.

- RAG Production Challenges and Solutions: A recent post detailed common issues faced in RAG production, showcasing potential solutions and best practices in a LinkedIn post.

- Community members emphasized the importance of shared knowledge for overcoming obstacles in RAG implementation.

- JSON-MD Integration for Task Management: Discussions focused on using JSON for task organization while leveraging Markdown for readability, paving the way for a synchronized contribution process.

- The Operation Athena website is poised to serve as a dynamic frontend for task management, designed for collaborative interaction.

HuggingFace Discord

- Fine-tuning w2v2-bert on Ukrainian Achieves 400k Samples: A project demonstrated fine-tuning of w2v2-bert on Ukrainian with the YODAS2 dataset, totaling 400k samples to enhance model accuracy in the language.

- This initiative expands model capabilities for Ukrainian, addressing language processing needs effectively.

- Meta Llama 3.1 Performance Insights: In-depth evaluation of Meta Llama 3.1 models compared GPU and CPU performance, documented in a detailed blog post, revealing notable findings.

- The evaluation included performance insights along with video demonstrations of test scenarios, shedding light on computational efficiency.

- Issues with Hugging Face Tokenizer Implementation: A member highlighted that

tokenizer.apply_chat_templateis broken in the recent Hugging Face Transformers, withadd_generation_prompt = Falsenot functioning correctly.- This issue has sparked conversations about potential workarounds and the implications for ongoing projects integration.

- Research Collaboration Opportunities at the Hackathon: Steve Watts Frey announced a new 6-day ultra-hackathon aimed at advancing open-source benchmarks, featuring substantial computing resources for participants and outlining collaboration chances.

- Teams are encouraged to take advantage of this chance to drive research efforts forward, boosting community engagement.

- User Experiences Highlighting Challenges with Data Management: Members shared experiences on dataset management, noting that organizing training data in order of increasing difficulty led to improved model performance.

- Additionally, discussions surfaced around enhancing breed classification models on Kaggle, tackling concerns about learning efficiency.

LM Studio Discord

- LM Studio performance varies by GPU: Users noticed significant variations in performance metrics across models, particularly with Llama 3.1, as GPU configurations impacted speed and context length settings.

- Some users reported different tokens per second rates, emphasizing the role of their GPU type and RAM specifications on inference efficiency.

- Model loading issues require updates: Several users faced model loading errors with Llama 3.1, citing tensor-related issues and recommending updating LM Studio or reducing context size.

- Guidelines were shared on troubleshooting, focusing on GPU compatibility and proper model directory structures.

- Fine-tuning vs embeddings debate: Discussion centered on the effectiveness of fine-tuning versus embeddings, highlighting the necessity for well-prepared examples for model operation.

- Participants emphasized that inadequate context or tutorial content could impede models' performance.

- Snapdragon X Elite ARM CPU generates buzz: The performance of the new Snapdragon X Elite ARM CPU in Windows 11 sparked conversation, with a review video titled "Mac Fanboy Tries ARM Windows Laptops" generating user interest.

- Members speculated on real-world usability and shared personal experiences with ARM CPU setups.

- GPU preferences for model training: Consensus emerged that the 4090 GPU is optimal for model training, outperforming older models such as the K80 or P40.

- Members highlighted the importance of modern hardware for effective CUDA support, especially when handling large models.

Stability.ai (Stable Diffusion) Discord

- AI Tools Face-Off: ComfyUI Takes the Crown: In discussions around Stable Diffusion, users compared ComfyUI, A1111, and Forge, revealing that ComfyUI offers superior control and model flexibility, enhancing speed.

- Concerns arose regarding Forge's performance after its latest update, prompting users to consider A1111 as a viable alternative.

- Frustration Over Inpainting Quality: User Modusprimax reported continual blurry outputs with Forge's new inpainting feature, despite multiple configuration attempts.

- The community suggested reverting to ComfyUI or trying earlier Forge versions for potentially better inpainting outcomes.

- Strategies for Character Consistency Revealed: Participants shared techniques using specific models and IP adapters to maintain character consistency in AI-generated images, particularly recommending the 'Mad Scientist' model.

- This approach is noted to yield better results in character anatomy, helping to refine user outputs.

- Censorship Concerns with AMD's Amuse 2.0: Discussion ensued around AMD’s Amuse 2.0 model, criticized for heavy censorship affecting its ability to render certain body curves accurately.

- This sparked broader conversations about the implications of censorship on creativity within AI applications.

- Community Emphasizes Learning Resources: Several users highlighted the necessity of utilizing video tutorials and community forums to improve understanding of Stable Diffusion prompts and operations.

- Crystalwizard encouraged diligence in exploring ComfyUI features while clarifying misconceptions about various AI generation tools.

OpenAI Discord

- SearchGPT Performance Highlights: Users shared positive feedback about SearchGPT, noting its ability to search through credible sources and utilize Chain of Thought (CoT) reasoning during inquiries.

- One user demonstrated its practicality by showcasing a calculation of trip costs while retrieving relevant car model information.

- ChatGPT Connectivity Frustrations: Multiple reports emerged regarding ongoing access issues with ChatGPT, with users experiencing significant loading delays.

- One user expressed particular frustration over being unable to log in for weeks and receiving no assistance from OpenAI support.

- AI Assists Coding Efficiency: Users eagerly discussed their experiences using AI tools for coding, highlighting successful Python scripts created to launch Chrome and other tasks.

- One user praised the feedback loop enabled by ChatGPT on their server, enhancing collaboration and code quality.

- Voice Mode Excitement: Anticipation grew around the rollout of voice mode in ChatGPT, expected to launch this week for a select group of users.

- Speculation arose regarding how users would be chosen to access this feature, generating excitement within the community.

- Cultural Exchanges in the Community: A user identified as Russian engaged with another who identified as Ukrainian, fostering a sharing of cultural backgrounds.

- This brief interaction highlighted the diverse community and encouraged inclusivity among members.

Unsloth AI (Daniel Han) Discord

- Best Practices for Using Unsloth AI: Users discussed the effectiveness of various system messages for Llama 3.1, with the default from Unsloth notebooks sufficing for tasks. Some opted to remove the system message to save context length without loss in performance.

- Conversations highlighted how flexible modeling aligns well with task-specific needs, especially when optimizing GPU memory usage.

- Fine-tuning with LoRa Adapters: Members confirmed that LoRa adapters from fine-tuning can be applied to original Llama models, granted the base model remains unchanged. Uncertainties remain about the compatibility across model versions, necessitating attention.

- Apple's usage of LoRA for fine-tuning demonstrated effective balance between capacity and inference performance, particularly for task-specific applications.

- Quantization Trade-offs: Discussions addressed the performance vs VRAM costs of 4-bit versus 16-bit models, urging experimentation as users find varying results in efficacy. Notably, 16-bit models deliver superior performance despite demanding four times the VRAM.

- Members emphasized the application of these quantization strategies based on unique workloads, reinforcing the necessity for hands-on metrics.

- Hugging Face Inference Endpoint Security: Clarifications around Hugging Face endpoints emphasized that 'protected' status applies only to one's token; sharing could lead to unauthorized access. It was stressed that safeguarding your token is paramount.

- Overall, members cautioned against potential security risks, underscoring vigilance in managing sensitive credentials.

- Efficiency in ORPO Dataset Creation: A member raised concerns about the manual nature of creating ORPO datasets, exploring the feasibility of a UI to streamline this process. Suggestions included leveraging smarter models for efficiently producing responses.

- The discourse stressed the need for automation tools to overcome repetitive tasks, potentially enhancing productivity and focus on model optimization.

CUDA MODE Discord

- Mojo Community Meeting Scheduled: The next Mojo community meeting is on July 29 at 10 PT, featuring insights on GPU programming with Mojo led by @clattner_llvm, available in the Modular community calendar.

- The agenda includes Async Mojo and a Community Q&A, providing an opportunity for engagement and learning.

- Fast.ai Launches Computational Linear Algebra Course: Fast.ai introduced a new free course, Computational Linear Algebra, complemented by an online textbook and video series.

- Focusing on practical applications, it utilizes PyTorch and Numba, teaching essential algorithms for real-world tasks.

- Triton exp Function Sacrifices Accuracy: It was noted that the exp function in Triton utilizes a rapid

__expfimplementation at the cost of accuracy, prompting inquiries into the performance of libdevice functions.- Members suggested checking the PTX assembly output from Triton to determine the specific implementations being utilized.

- Optimizing PyTorch CPU Offload for Optimizer States: Members explored the mechanics of CPU offload for optimizer states, questioning its practicality while highlighting a fused ADAM implementation as critical for success.

- Discussions revealed confusion on the relationship between paged attention and optimizers, as well as the complex nature of using FSDP for single-GPU training.

- INT8 Model Training Shows Promise: A member shared their experience fine-tuning ViT-Giant (1B params) with INT8 model training, observing similar loss curves and validation accuracy compared to the BF16 baseline.

- However, they noted significant accuracy drops when incorporating an 8-bit optimizer with the INT8 model.

Perplexity AI Discord

- Perplexity Pro Subscription Clarified: Users highlighted discrepancies in the Perplexity Pro subscription limits, reporting that Pro users have 540 or 600 daily searches and a cap of 50 messages for the Claude 3 Opus model.

- Confusion around these limitations suggests potential documentation inconsistencies that need addressing.

- Dyson Launches High-End OnTrac Headphones: Dyson introduced its OnTrac headphones at a $500 price point, featuring 40mm neodymium drivers and advanced noise cancellation reducing noise by up to 40 dB.

- This move marks Dyson's entry into the audio market, departing from their focus on air purification with the previous Zone model.

- Inconsistencies in Perplexity API Performance: Users noted performance differences between the web and API versions of Perplexity, with the web version yielding superior results.

- Concerns emerged regarding the API's

llama-3-sonar-large-32k-onlinemodel, which had issues returning accurate data, suggesting prompt structuring affects outcomes.

- Concerns emerged regarding the API's

- Job Prospects with Perplexity AI: Prospective candidates expressed interest in job openings at Perplexity AI, highlighting remote positions available on the careers page.

- High remuneration for specific roles sparked discussions about what these positions entail and the challenges applicants might face.

- Cultural Insights on Zombies: Users explored the concept of Himalayan zombies called ro-langs, contrasting them with traditional Western portrayals, revealing a rich cultural narrative.

- This discussion provided insights into the spiritual beliefs woven into Himalayan mythology, complexly differing from Western interpretations.

OpenRouter (Alex Atallah) Discord

- ChatBoo introduces voice calling: The ChatBoo Update July video unveiled a voice calling feature, aimed at enhancing interactive experiences within the app.

- Users are encouraged to test the new functionality and provide feedback.

- DigiCord presents all-in-one AI assistant: The Introducing DigiCord video introduces an AI assistant that combines 40+ LLMs, including OpenAI GPT-4 and Gemini.

- DigiCord integrates various image models like Stable Diffusion, aiming to be a comprehensive tool for Discord users.

- Enchanting Digital seeks testers: Enchanting Digital is currently in a testing phase, inviting users to participate at enchanting.digital, focusing on dialogue and AI features with a robust RP engine.

- Lightning-fast and realistic generations are promised, allowing seamless chatting capabilities.

- OpenRouter API faces 500 Internal Server Error: Users reported receiving a 500 Internal Server Error** when accessing OpenRouter, signaling potential service interruptions.

- Minor issues with API functionality were recorded, with updates available on the OpenRouter status page.

- Model suggestions for roleplay: For roleplay, users recommended utilizing Llama 3.1 405B, while also mentioning Claude 3.5 Sonnet and gpt-4o mini for improved results.

- Concerns arose regarding the limitations of Llama 3.1 without specific prompts, prompting suggestions to seek help within the SillyTavern Discord community.

Modular (Mojo 🔥) Discord

- CUDA installation woes: Users vent frustrations about mismatched CUDA versions while using Mojo for LIDAR tasks, leading to considerable installation challenges.

- Suggestions included favoring the official CUDA installation website over

apt installto mitigate issues.

- Suggestions included favoring the official CUDA installation website over

- Exciting Mojo/MAX alpha test kicks off: An alpha test for installing Mojo/MAX via conda is now live, introduced with a new CLI tool called

magic. Installation instructions are provided at installation instructions.- The

magicCLI simplifies installing Python dependencies, making project sharing more reliable; feedback can be relayed via this link.

- The

- Optimizing FFTs in Mojo requires attention: Users are eager for optimized FFT libraries like FFTW or RustFFT but face binding challenges with existing solutions.

- Links to previous GitHub attempts for FFT implementation in Mojo were shared among participants.

- Linked list implementation seeks scrutiny: A user shared a successful implementation of a linked list in Mojo, looking for feedback on memory leaks and debugging.

- They provided a GitHub link for their code and specifically requested guidance regarding deletion and memory management.

- Discussions on C/C++ interop in Mojo: Conversations revealed a focus on future C interop capabilities in Mojo, possibly taking around a year to develop.

- Users expressed frustration over gated libraries typically written in C and the complexities involved in C++ integration.

Eleuther Discord

- TPU Chips Still Under Wraps: No recent progress has been made in decapping or reverse engineering TPU chips, as members noted a lack of detailed layout images.

- While some preliminary data is available, a full reverse engineering hasn't yet been achieved.

- Llama 3.1's Quantization Quandary: Concerns arose over Llama 3.1's performance drop due to quantization, with a member linking to a discussion showing better results using bf16 (X.com post).

- The group debated if quantization impacts delve deeper into the overall data volume rather than merely the parameter count.

- Iterative Inference Sparks Interest: Members are contemplating research directions for iterative inference in transformers, emphasizing in-context learning and optimization algorithms, showing interest in the Stages of Inference paper.

- They expressed the need for deeper insights into existing methods like gradient descent and their applications in current transformer architectures.

- lm-eval-harness Issues Surface: Users are encountering multiple issues with the

lm-eval-harness, needing to usetrust_remote_code=Truefor proper model execution.- One member shared their Python implementation, prompting discussions about command-line argument handling and its complexity.

- Synthetic Dialogues Boost Fine-Tuning: A new dataset called Self Directed Synthetic Dialogues (SDSD) was presented to enhance instruction-following capabilities across models like DBRX and Llama 2 70B (SDSD paper).

- This initiative aims to augment multi-turn dialogues, allowing models to simulate richer interactions.

Latent Space Discord

- LMSYS dives into Ranking Finetuning: Members highlighted the recent efforts by LMSYS to rank various finetunes of llama models, questioning the potential biases in this process and the transparency of motivations behind it.

- Concerns surfaced regarding favoritism towards individuals with connections or financial ties, impacting the credibility of the ranking system.

- Meta launches SAM 2 for Enhanced Segmentation: Meta's newly launched Segment Anything Model 2 (SAM 2) delivers real-time object segmentation improvements, powered by a new dataset of roughly 51,000 videos.

- Available under an Apache 2.0 license, the model marks a significant leap over its predecessor, promising extensive applications in visual tasks.

- Excitement Surrounds Cursor IDE Features: Users buzzed about the capabilities of the Cursor IDE, especially its Ruby support and management of substantial code changes, with users reporting over 144 files changed in a week.

- Talks of potential enhancements included collaborative features and a context plugin API to streamline user experience further.

- Focus on Context Management Features: User discussions reiterated the necessity for robust context management tools within the Cursor IDE, improving user control over context-related features.

- One user described their shift to natural language coding for simplicity, likening it to a spectrum with pseudocode.

- Llama 3 Paper Club Session Recorded: The recording of the Llama 3 paper club session is now available, promising insights on crucial discussions surrounding the model; catch it here.

- Key highlights included discussions on enhanced training techniques and performance metrics, enriching community understanding of Llama 3.

LlamaIndex Discord

- Join the LlamaIndex Webinar on RAG: This Thursday at 9am PT, LlamaIndex hosts a webinar with CodiumAI on Retrieval-Augmented Generation (RAG) for code generation, helping enterprises ensure high code quality.

- RAG’s significance lies in its ability to enhance coding processes through the LlamaIndex infrastructure.

- Innovating with Multi-modal RAG: A recent demo showcased using the CLIP model for creating a unified vector space for text and images using OpenAI embeddings and Qdrant.

- This method enables effective retrieval from mixed data types, representing a significant advancement in multi-modal AI applications.

- Implementing Text-to-SQL in LlamaIndex: Discussion revolved around establishing a text-to-SQL assistant using LlamaIndex, showcasing setup for managing complex NLP queries effectively.

- Examples highlighted practical configuration strategies for deploying capable query engines tailored for user needs.

- Security Concerns Surrounding Paid Llamaparse: A query arose regarding the security considerations of utilizing paid versus free Llamaparse versions, but community feedback lacked definitive insights.

- The ambiguity left members uncertain about potential security differences that may influence their decisions.

- Efficient Dedupe Techniques for Named Entities: Members explored methods for programmatically deduping named entities swiftly without necessitating a complex setup.

- The emphasis was on achieving deduplication efficiency, valuing speed in processing without burdensome overhead.

OpenInterpreter Discord

- Open Interpreter Feedback Loop: Users expressed mixed feelings about Open Interpreter as a tool, suggesting it effectively extracts data from PDFs and translates text, while cautioning against its experimental aspects.

- One user inquired about using it for translating scientific literature from Chinese, receiving tips for effective custom instructions.

- AI Integration to Assist Daily Functioning: A member struggling with health issues is exploring Open Interpreter for voice-commanded tasks to aid their daily activities.

- While community members offered caution around using OI for critical operations, they advised alternative solutions like speech-to-text engines.

- Ubuntu 22.04 confirmed for 01 Desktop: Members confirmed that Ubuntu 22.04 is the recommended version for 01 Desktop, preferring X11 over Wayland.

- Discussions revealed comfort and familiarity with X11, reflecting ongoing conversations around desktop environments.

- Agent Zero's Impressive Demo: The first demonstration of Agent Zero showcased its capabilities, including internal vector DB and internet search functionalities.

- Community excitement grew around Agent Zero’s features like executing in Docker containers, sparking interest in tool integrations.

- Groq's Mixture of Agents on GitHub: A GitHub repository for the Groq Mixture of Agents was shared, highlighting its development goals related to agent-based interactions.

- This project is open for contributions, inviting community collaboration in enhancing agent-based systems.

OpenAccess AI Collective (axolotl) Discord

- Turbo models likely leverage quantization: The term 'turbo' in model names suggests the models are using a quantized version, enhancing performance and efficiency.

- One member noted, I notice fireworks version is better than together ai version, reflecting user preference in implementations.

- Llama3 finetuning explores new strategies: Discussions on how to effectively finetune Llama3 covered referencing game stats and weapon calculations, emphasizing practical insights.

- There is particular interest in the model's ability to calculate armor and weapon stats efficiently.

- QLoRA scrutinized for partial layer freezing: The feasibility of combining QLoRA with partial layer freeze was debated, focusing on tuning specific layers while maintaining others.

- Concerns arose over whether peft recognizes those layers and the efficacy of DPO without prior soft tuning.

- Operation Athena launches AI reasoning tasks: A new database under Operation Athena has launched to support reasoning tasks for LLMs, inviting community contributions.

- This initiative, backed by Nous Research, aims to improve AI capabilities through a diverse set of tasks reflecting human experiences.

- Understanding early stopping in Axolotl: The

early_stopping_patience: 3parameter in Axolotl triggers training cessation after three consecutive epochs without validation improvement.- Providing a YAML configuration example helps monitor training metrics, preventing overfitting through timely interventions.

LangChain AI Discord

- LangChain Open Source Contributions: Members sought guidance for contributing to LangChain, sharing helpful resources including a contributing guide and a setup guide for understanding local repository interactions.

- Suggestions revolved around enhancing documentation, code, and integrations, especially for newbies entering the project.

- Ollama API Enhancements: Using the Ollama API for agent creation proved efficient, with comparisons showing ChatOllama performing better than OllamaFunctions in following LangChain tutorial examples.

- However, past versions faced issues, notably crashes during basic tutorials involving Tavily and weather integrations.

- ConversationBufferMemory Query: Discussion arose around the usage of

save_contextin ConversationBufferMemory, with members seeking clarity on structuring inputs and outputs for various message types.- There was a noted need for enhanced documentation on thread safety, with advice emphasizing careful structuring to manage messages effectively.

- Flowchart Creation with RAG: Members recommended using Mermaid for flowchart creation, sharing snippets from LangChain's documentation to assist visualizations.

- A GitHub project comparing different RAG frameworks was also shared, providing more insights into application functionalities.

- Merlinn AI on-call agent simplifies troubleshooting: Merlinn, the newly launched open-source AI on-call agent, assists with production incident troubleshooting by integrating with DataDog and PagerDuty.

- The team invites user feedback and encourages stars on their GitHub repo to support the project.

Cohere Discord

- Cohere API Key Billing Challenges: Participants discussed the need for separate billing by API key, exploring middleware solutions to manage costs distinctly for each key.

- Members expressed frustration over the lack of an effective tracking system for API usage.

- Recommended Framework for Multi-Agent Systems: A member highlighted LangGraph from LangChain as a leading framework praised for its cloud capabilities.

- They noted that Cohere's API enhances multi-agent functionality through extensive tool use capabilities.

- Concerns Around API Performance and Downtime: Users reported slowdowns with the Cohere Reranker API as well as a recent 503 error downtime impacting service access.

- Cohere confirmed recovery with all systems operational and 99.67% uptime highlighted in a status update.

- Using Web Browsing Tools in Cohere Chat: Members discussed integrating web search tools into the Cohere chat interface, enhancing information access through API functionality.

- One user successfully built a bot leveraging this feature, likening it to a search engine.

- Prompt Tuner Beta Featured Discussion: Queries emerged regarding the beta release of the 'Prompt Tuner' feature, with users eager to understand its impact on API usage.

- Members expressed curiosity about practical implications of the new tool within their workflows.

Interconnects (Nathan Lambert) Discord

- GPT-4o Mini revolutionizes interactions: The introduction of GPT-4o Mini is a game-changer, significantly enhancing interactions by serving as a transparency tool for weaker models.

- Discussions framed it as not just about performance, but validating earlier models' efficacy.

- Skepticism surrounding LMSYS: Members voiced concerns that LMSYS merely validates existing models rather than leading the way in ranking algorithms, with observed randomness in outputs.

- One highlighted that the algorithm fails to effectively evaluate model performance, especially for straightforward questions.

- RBR paper glosses over complexities: The RBR paper was criticized for oversimplifying complex issues, especially around moderating nuanced requests that may have dangerous undertones.

- Comments indicated that while overt threats like 'Pipe bomb plz' are easy to filter, subtleties are often missed.

- Interest in SELF-ALIGN paper: A growing curiosity surrounds the SELF-ALIGN paper, which discusses 'Principle-Driven Self-Alignment of Language Models from Scratch with Minimal Human Supervision'.

- Members noted potential connections to SALMON and RBR, sparking further interest in alignment techniques.

- Critique of Apple's AI paper: Members shared mixed reactions to the Apple Intelligence Foundation paper, particularly about RLHF and its instruction hierarchy, with one printing it for deeper evaluation.

- Discussions suggested a divergence of opinions on the repository's effectiveness and its implications for RL practices.

DSPy Discord

- Moondream2 gets a structured image response hack: A member built a hack combining Moondream2 and OutlinesOSS that allows users to inquire about images and receive structured responses by hijacking the text model.

- This approach enhances embedding processing and promises an improved user experience.

- Introducing the Gold Retriever for ChatGPT: The Gold Retriever is an open-source tool that enhances ChatGPT's capabilities to integrate personalized, real-time data, addressing prior limitations.

- Users desire tailored AI interactions, making Gold Retriever a crucial resource by providing better access to specific user data despite knowledge cut-off challenges.

- Survey on AI Agent Advancements: A recent survey paper examines advancements in AI agents, focusing on enhanced reasoning and tool execution capabilities.

- It outlines the current capabilities and limitations of existing systems, emphasizing key considerations for future design.

- Transformers in AI: Fundamental Questions Raised: A blog post worth reading emphasizes the capability of transformer models in complex tasks like multiplication, leading to deeper inquiry into their learning capacity.

- It reveals that models such as Claude or GPT-4 convincingly mimic reasoning, prompting discussions on their ability to tackle intricate problems.

- Exploring Mixture of Agents Optimization: A member proposed using a mixture of agents optimizer for DSPy, suggesting optimization through selecting parameters and models, backed by a related paper.

- This discussion compared their approach to the architecture of a neural network for better responses.

tinygrad (George Hotz) Discord

- Improving OpenCL Error Handling: A member proposed enhancing the out of memory error handling in OpenCL with a related GitHub pull request by tyoc213.

- They noted that the suggested improvements could address existing limitations in error notifications for developers.

- Monday Meeting Unveiled: Key updates from Monday's meeting included the removal of UNMUL and MERGE, along with the introduction of HCQ runtime documentation.

- Discussion also covered upcoming MLPerf benchmark bounties and enhancements in conv backward fusing and scheduler optimizations.

- ShapeTracker Bounty Raises Questions: Interest emerged regarding a ShapeTracker bounty focused on merging two arbitrary trackers in Lean, sparking discussions on feasibility and rewards.

- Members engaged in evaluating the worth of the bounty compared to its potential outputs and prior discussions.

- Tinygrad Tackles Time Series Analysis: A user explored using tinygrad for physiological feature extraction in time series analysis, expressing frustrations with Matlab's speed.

- This discussion highlighted an interest in tinygrad's efficiency for such application areas.

- NLL Loss Error Disclosed: An issue was reported where adding

nll_lossled to tensor gradient loss, resulting in PR failures, prompting a search for solutions.- Responses clarified that non-differentiable operations like CMPNE impacted gradient tracking, indicating a deeper problem in loss function handling.

LAION Discord

- Vector Search Techniques Get a BERT Boost: For searching verbose text, discussions reveal that using a BERT-style model outperforms CLIP, with notable suggestions from models by Jina and Nomic.

- Members highlighted that Jina's model serves as a superior alternative when focusing away from images.

- SWE-Bench Hosts a $1k Hackathon!: Kicking off on August 17, the SWE-Bench hackathon offers participants $1,000** in compute resources and cash prizes for top improvements.

- Participants will benefit from support by prominent coauthors, with chances to collaborate and surpass benchmarks.

- Segment Anything Model 2 Now Live!: The Segment Anything Model 2** from Facebook Research has been released on GitHub, including model inference code and checkpoints.

- Example notebooks are offered to aid users in effective model application.

AI21 Labs (Jamba) Discord

- Jamba's Long Context Capabilities Impress: Promising results are emerging from Jamba's 256k effective length capabilities, particularly from enterprise customers eager to experiment.

- The team actively encourages developer feedback to refine these features further, aiming to optimize use cases.

- Developers Wanted for Long Context Innovations: Jamba is on the lookout for developers to contribute to long context projects, offering incentives like credits, swag, and fame.

- This initiative seeks engaging collaboration to broaden the scope and effectiveness of long context applications.

- New Members Energize Community: The arrival of new member artworxai adds energy to the chat, sparking friendly interactions among members.

- The positive atmosphere establishes a welcoming environment, crucial for community engagement.

LLM Finetuning (Hamel + Dan) Discord

- Last Call for LLM Engineers in Google Hackathon: A team seeks one final LLM engineer to join their project for the upcoming Google AI Hackathon, focusing on disrupting robotics and education.

- Candidates should possess advanced skills in LLM engineering, familiarity with LangChain and LlamaIndex, and a strong interest in robotics or education tech.

- Fast Dedupe Solutions for Named Entities Requested: A member seeks effective methods to programmatically dedupe a list of named entities, looking for speedy solutions without complex setups.

- The aim is to identify a quick and efficient approach to handle duplicates, rather than implementing intricate systems.

Alignment Lab AI Discord

- Community Seeks Robust Face Recognition Models: Members are on the hunt for machine learning models and libraries that excel in detecting and recognizing faces in images and videos, prioritizing accuracy and performance in real-time scenarios.

- They emphasize the critical need for solutions that not only perform well under varied conditions but also cater to practical applications.

- Interest in Emotion Detection Capabilities: Discussions reveal a growing interest in solutions capable of identifying emotions from faces in both still images and video content, targeting the enhancement of interaction quality.

- Participants specifically request integrated solutions that merge face recognition with emotion analysis for a comprehensive understanding.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!