[AINews] DataComp-LM: the best open-data 7B model/benchmark/dataset

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

240T tokens is all you need to start with.

AI News for 7/18/2024-7/19/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (467 channels, and 2305 messages) for you. Estimated reading time saved (at 200wpm): 266 minutes. You can now tag @smol_ai for AINews discussions!

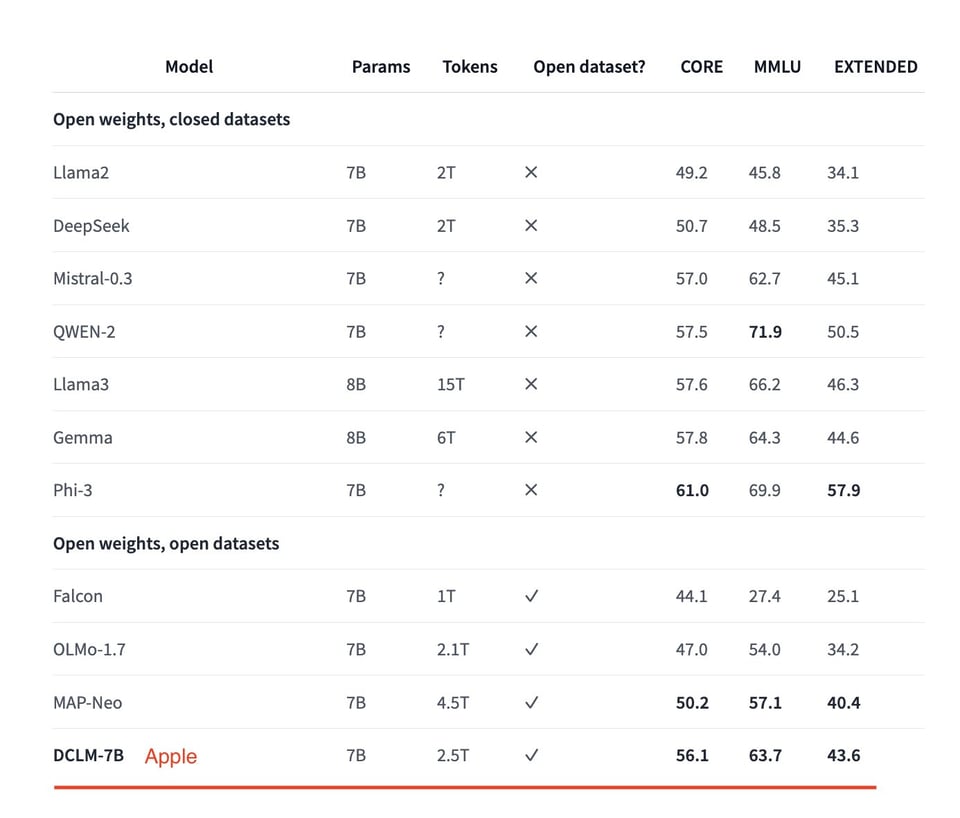

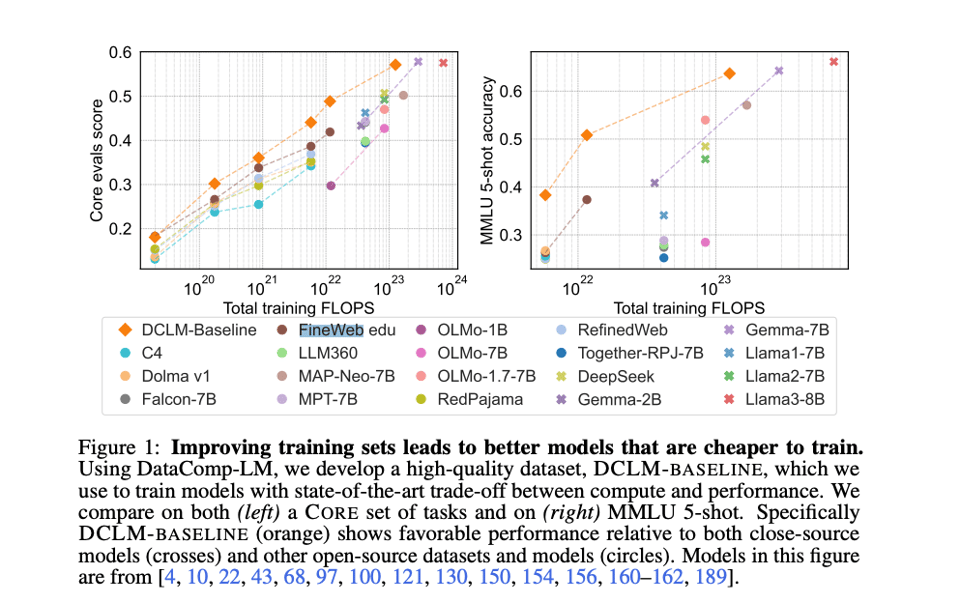

Though HuggingFace's SmolLM is barely 4 days old, it has now been beaten: the DataComp team (our coverage here) have now released a "baseline" language model competitive with Mistral/Llama3/Gemma/Qwen2 at the 7B size, but it is notable for being an open data model from the DataComp-LM dataset, AND for matching those other models with ONLY 2.5T tokens:

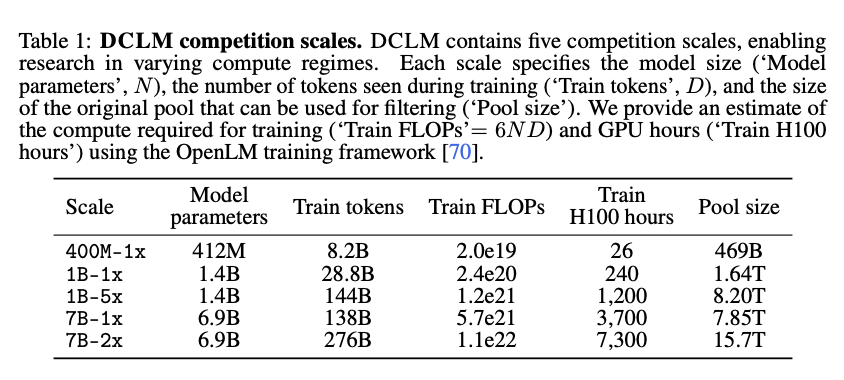

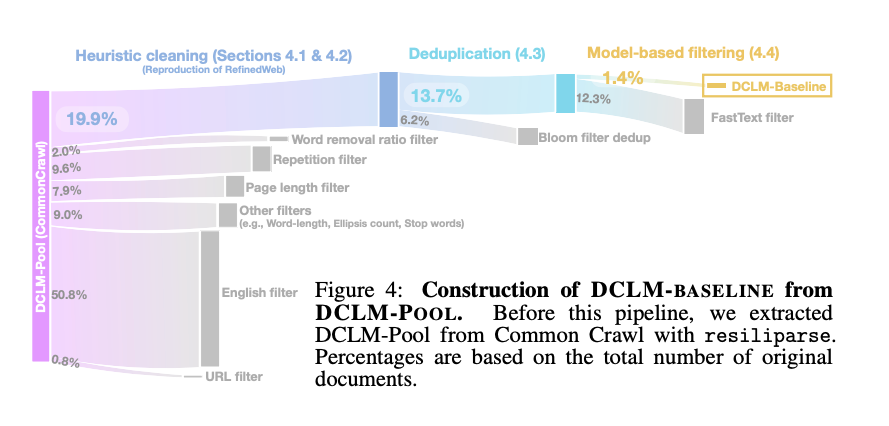

As you might expect, the secret is in the data quality. They start with DCLM-POOL, a corpus of 240 trillion tokens derived from Common Crawl, the largest corpus yet, and provide an investigation of scaling trends for dataset design at 5 scales:

Within each scale there are two tracks: Filtering (must be from DCLM-Pool without any external data, but can use other models for filtering/paraphrasing) and Mixing (ext data allowed). They do a "Baseline" filtered example to start people off:

People close to the dataset story might wonder how DCLM-Pool and Baseline compare to FineWeb (our coverage here), and the outlook is promising: DCLM trains better at -EVERY- scale.

The rest of this 88 page paper has tons of detail on data quality techniques; a fantastic contribution to open LLM research from all involved (and not just Apple, as commonly reported).

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

GPT-4o mini model release by OpenAI

- Capabilities: @sama noted GPT-4o mini has "15 cents per million input tokens, 60 cents per million output tokens, MMLU of 82%, and fast." He compared it to text-davinci-003, saying it's "much, much worse than this new model" and "cost 100x more."

- Pricing: @gdb highlighted the model is aimed at developers, with the goal to "convert machine intelligence into positive applications across every domain." @miramurati emphasized GPT-4o mini "makes intelligence far more affordable opening up a wide range of applications."

- Benchmarks: @lmsysorg reported GPT-4o mini was tested in Arena, showing performance reaching GPT-4-Turbo levels while offering significant cost reduction. @polynoamial called it "best in class for its size, especially at reasoning."

Mistral NeMo 12B model release by NVIDIA and Mistral

- Capabilities: @GuillaumeLample introduced Mistral NeMo as a 12B model supporting 128k token context window, FP8 aligned checkpoint, and strong performance on academic, chat, and fine-tuning benchmarks. It's multilingual in 9 languages with a new Tekken tokenizer.

- Licensing: @_philschmid highlighted the base and instruct models are released under Apache 2.0 license. The instruct version supports function calling.

- Performance: @osanseviero noted Mistral NeMo outperforms Mistral 7B and was jointly trained by NVIDIA and Mistral on 3,072 H100 80GB GPUs on DGX Cloud.

DeepSeek-V2-0628 model release by DeepSeek

- Leaderboard Ranking: @deepseek_ai announced DeepSeek-V2-0628 is the No.1 open-source model on LMSYS Chatbot Arena leaderboard, ranking 11th overall, 3rd on Hard Prompts and Coding, 4th on Longer Query, and 7th on Math.

- Availability: The model checkpoint is open-sourced on Hugging Face and an API is also available.

Trends and Discussions

- Synthetic Data: @karpathy suggested models need to first get larger before getting smaller, as their automated help is needed to "refactor and mold the training data into ideal, synthetic formats." He compared this to Tesla's self-driving networks using previous models to generate cleaner training data at scale.

- Evaluation Concerns: @RichardMCNgo shared criteria for judging AI safety evaluation ideas, cautioning that many proposals fail on all counts, resembling a "Something must be done. This is something." fallacy.

- Reasoning Limitations: @JJitsev tested the NuminaMath-7B model, which ranked 1st in an olympiad math competition, on basic reasoning problems. The model struggled with simple variations, revealing deficits in current benchmarks for measuring reasoning skills.

Memes and Humor

- @fabianstelzer joked that OpenAI quietly released the native GPT-o "image" model, sharing a comic strip prompt and output.

- @AravSrinivas humorously compared Singapore's approach to governance to product management, optimizing for new user retention.

- @ID_AA_Carmack mused on principles and tit-for-tat escalation in response to a personal anecdote, while acknowledging his insulation from most effects due to having "FU money."

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. CPU Inference Speed Breakthroughs

- NVIDIA CUDA Can Now Directly Run On AMD GPUs Using The "SCALE" Toolkit (Score: 67, Comments: 17): NVIDIA CUDA can now run directly on AMD GPUs using the open-source SCALE (Scalable Compute Abstraction Layer for Execution) toolkit. This breakthrough allows developers to execute CUDA applications on AMD hardware without code modifications, potentially expanding the ecosystem for AI and HPC applications beyond NVIDIA's hardware dominance. The SCALE toolkit, developed by StreamHPC, aims to bridge the gap between different GPU architectures and programming models.

- New CPU inference speed gains of 30% to 500% via Llamafile (Score: 70, Comments: 36): Llamafile has achieved significant CPU inference speed gains ranging from 30% to 500%, with particularly impressive results on Threadripper processors. A recent talk highlighted a speedup from 300 tokens/second to 2400 tokens/second on Threadripper, approaching GPU-like performance. While the specific model tested wasn't mentioned, these improvements, coupled with an emphasis on open-source AI, represent a notable advancement in CPU-based inference capabilities.

- Prompt Processing Speed Crucial: Llamafile's improvements primarily affect prompt processing, not token generation. This is significant as prompt processing is where the deep understanding occurs, especially for complex tasks involving large input volumes.

- Boolean Output Fine-Tuning: Some users report good results with LLMs returning 0 or 1 for true/false queries, particularly after fine-tuning. One user achieved 25 queries per second on a single 4090 GPU with Gemma 2 9b using a specific prompt for classification tasks.

- CPU vs GPU Performance: While Llamafile's CPU improvements are impressive, LLM inference remains memory-bound. DDR5 bandwidth doesn't match VRAM, but some users find the trade-off of half the speed of high-end GPUs with 128 GB RAM appealing for certain applications.

Theme 2. Mistral AI's New Open Source LLM Release

- DeepSeek-V2-Chat-0628 Weight Release ! (#1 Open Weight Model in Chatbot Arena) (Score: 67, Comments: 37): DeepSeek-V2-Chat-0628 has been released as the top-performing open weight model on Hugging Face. The model ranks #11 overall in Chatbot Arena, outperforming all other open-source models, while also achieving impressive rankings of #3 in both the Coding Arena and Hard Prompts Arena.

- Mistral-NeMo-12B, 128k context, Apache 2.0 (Score: 185, Comments: 84): Mistral-NeMo-12B, a new open-source language model, has been released with a 128k context window and Apache 2.0 license. This model, developed by Mistral AI in collaboration with NVIDIA, is based on the NeMo framework and trained using FlashAttention-2. It demonstrates strong performance across various benchmarks, including outperforming Llama 2 70B on some tasks, while maintaining a smaller size of 12 billion parameters.

Theme 3. Comprehensive LLM Performance Benchmarks

- Comprehensive benchmark of GGUF vs EXL2 performance across multiple models and sizes (Score: 51, Comments: 44): GGUF vs EXL2 Performance Showdown A comprehensive benchmark comparing GGUF and EXL2 formats across multiple models (Llama 3 8B, 70B, and WizardLM2 8x22B) reveals that EXL2 is slightly faster for Llama models (3-7% faster), while GGUF outperforms on WizardLM2 (3% faster). The tests, conducted on a system with 4x3090 GPUs, show that both formats offer comparable performance, with GGUF providing broader model support and RAM offloading capabilities.

- GGUF Catches Up to EXL2: GGUF has significantly improved performance, now matching or surpassing EXL2 in some cases. Previously, EXL2 was 10-20% faster, but recent tests show comparable speeds even for prompt processing.

- Quantization and Model Specifics: Q6_K in GGUF is actually 6.56bpw, while EXL2 quantizations are accurate. 5.0bpw or 4.65bpw are recommended for better quality, with 4.0bpw being closer to Q3KM. Different architectures may perform differently between formats.

- Speculative Decoding and Concurrent Requests: Using a 1B model in front of larger models can significantly boost speed through speculative decoding. Questions remain about performance differences in concurrent request scenarios between GGUF and EXL2.

- What are your top 5 current workhorse LLMs right now? Have you swapped any out for new ones lately? (Score: 79, Comments: 39): Top 5 LLM Workhorses and Potential Newcomers The author's current top 5 LLMs are Command-R for RAG tasks, Qwen2:72b for smart and professional responses, Llava:34b for vision-related tasks, Llama:70b as a second opinion model, and Codestral for code-related tasks. They express interest in trying Florence, Gemma2-27b, and ColPali for document retrieval, while humorously noting they'd try an LLM named after Steven Seagall if one existed.

- ttkciar reports being impressed by Gemma-2 models, particularly Big-Tiger-Gemma-27B-v1c, which correctly answered the reason:sally_siblings task five times out of five. They also use Dolphin-2.9.1-Mixtral-1x22B for various tasks and are experimenting with Phi-3 models for Evol-Instruct development.

- PavelPivovarov shares their top models for limited hardware: Tiger-Gemma2 9B for most tasks, Llama3 8B for reasoning, Phi3-Medium 14B for complex logic and corporate writing, and Llama-3SOME for role-playing. They express interest in trying the new Gemmasutra model.

- ttkciar provides an extensive breakdown of Phi-3-Medium-4K-Instruct-Abliterated-v3's performance across various tasks. The model shows strengths in creative tasks, correct reasoning in simple Theory-of-Mind problems, an

Theme 4. AI Development and Regulation Challenges

- As promised, I've Open Sourced my Tone Changer - https://github.com/rooben-me/tone-changer-open (Score: 96, Comments: 14): Tone Changer AI tool open-sourced. The developer has released the source code for their Tone Changer project on GitHub, fulfilling a previous promise. This tool likely allows users to modify the tone or style of text inputs, though specific details about its functionality are not provided in the post.

- Local deployment with OpenAI compatibility: The Tone Changer tool is fully local and compatible with any OpenAI API. It's available on GitHub and can be accessed via a Vercel-hosted demo.

- Development details requested: Users expressed interest in the project's implementation, asking for README updates with running instructions and inquiring about the demo creation process. The developer used screen.studio for screen recording.

- Functionality questioned: Some users critiqued the tool's novelty, suggesting it relies on prompts for tone changing, implying limited technical innovation beyond existing language model capabilities.

- Apple stated a month ago that they won't launch Apple Intelligence in EU, now Meta also said they won't offer future multimodal AI models in EU due to regulation issues. (Score: 170, Comments: 95): Apple and Meta are withholding their AI models from the European Union due to regulatory concerns. Apple announced a month ago that it won't launch Apple Intelligence in the EU, and now Meta has followed suit, stating it won't offer future multimodal AI models in the region. These decisions highlight the growing tension between AI innovation and EU regulations, potentially creating a significant gap in AI technology availability for European users.

- • -p-e-w- argues EU regulations are beneficial, preventing FAANG companies from dominating the AI market and crushing competition. They suggest prohibiting these companies from entering the EU AI market to limit their power.

- • Discussion on GDPR compliance reveals differing views. Some argue it's easy for businesses acting in good faith, while others highlight challenges for startups and small businesses compared to large corporations with more resources.

- • Critics accuse companies of hypocrisy, noting they advocate for "AI safety" but resist actual regulation. Some view this as corporations attempting to stronghold governments to lower citizen protections, while others argue EU regulations may hinder innovation.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. AI Outperforming Humans in Medical Licensing Exams

- [/r/singularity] ChatGPT aces the US Medical Licensing Exam, answering 98% correctly. The average doctor only gets 75% right. (Score: 328, Comments: 146): ChatGPT outperforms human doctors on the US Medical Licensing Exam, achieving a remarkable 98% accuracy compared to the average doctor's 75%. This impressive performance demonstrates the AI's potential to revolutionize medical education and practice, raising questions about the future role of AI in healthcare and the need for adapting medical training curricula.

- • ChatGPT's 98% accuracy on the US Medical Licensing Exam compared to doctors' 75% raises concerns about AI's impact on healthcare careers. Some argue AI could reduce the 795,000 annual deaths from diagnostic errors, while others question the exam's relevance to real-world medical practice.

- • Experts predict AI will initially work alongside human doctors, particularly in specialties like radiology. Insurance companies may mandate AI use to catch what humans miss, potentially improving diagnostic speed and accuracy.

- • Critics argue the AI's performance may be due to "pretraining on the test set" rather than true understanding. Some suggest the exam's structure may not adequately test complex reasoning skills, while others note that human doctors also study past exams to prepare.

Theme 2. OpenAI's GPT-4o-mini: A More Affordable and Efficient AI Model

- [/r/singularity] GPT-4o-mini is 2 times cheaper than GPT 3.5 Turbo (Score: 363, Comments: 139): GPT-4o-mini, a new AI model, is now available at half the cost of GPT-3.5 Turbo. This model, developed by Anthropic, offers comparable performance to GPT-3.5 Turbo but at a significantly lower price point, potentially making advanced AI capabilities more accessible to a wider range of users and applications.

- [/r/singularity] GPT-4o mini: advancing cost-efficient intelligence (Score: 238, Comments: 89): GPT-4o mini, a new AI model developed by Anthropic, aims to provide cost-efficient intelligence by offering similar capabilities to GPT-4 at a fraction of the cost. The model is designed to be more accessible and affordable for developers and businesses, potentially enabling wider adoption of advanced AI technologies. While specific performance metrics and pricing details are not provided, the focus on cost-efficiency suggests a significant step towards making powerful language models more economically viable for a broader range of applications.

- [/r/singularity] OpenAI debuts mini version of its most powerful model yet (Score: 378, Comments: 222): OpenAI has introduced GPT-4 Turbo, a smaller and more efficient version of their most advanced language model. This new model offers 128k context and is designed to be more affordable for developers, with pricing set at $0.01 per 1,000 input tokens and $0.03 per 1,000 output tokens. GPT-4 Turbo also includes updated knowledge through April 2023 and supports new features like JSON mode for structured output.

Theme 3. Advancements in AI-Generated Visual and Audio Content

- [/r/singularity] New voice mode coming soon (Score: 279, Comments: 106): New voice synthesis mode is set to be released soon, expanding the capabilities of AI-generated speech. This upcoming feature promises to enhance the quality and versatility of synthesized voices, potentially offering more natural-sounding and customizable audio outputs for various applications.

- [/r/singularity] Unanswered Oddities Ep. 1 (An AI-assisted TV Series w/ Completely AI-generated Video) (Score: 330, Comments: 41): Unanswered Oddities, an AI-assisted TV series with fully AI-generated video, has released its first episode. The series explores unexplained phenomena and mysterious events, utilizing AI technology to create both the script and visuals, pushing the boundaries of AI-driven content creation in the entertainment industry.

- [/r/singularity] Example of Kling AI by Pet Pixels Studio (Score: 287, Comments: 25): Pet Pixels Studio showcases their Kling AI technology, which appears to be an artificial intelligence system for generating or manipulating pet-related imagery. While no specific details about the AI's capabilities or implementation are provided, the title suggests it's an example or demonstration of the Kling AI's output or functionality.

AI Discord Recap

A summary of Summaries of Summaries

GPT4O (gpt-4o-2024-05-13)

1. LLM Advancements

- Llama 3 release imminent: Llama 3 with 400 billion parameters is rumored to release in 4 days, igniting excitement and speculation within the community.

- This upcoming release has stirred numerous conversations about its potential impact and capabilities.

- GPT-4o mini offers cost-efficient performance: GPT-4o mini is seen as a cheaper and faster alternative to 3.5 Turbo, being approximately 2x faster and 60% cheaper as noted on GitHub.

- However, it lacks image support and scores lower in benchmarks compared to GPT-4o, underlining some of its limitations.

2. Model Performance Optimization

- DeepSeek-V2-Chat-0628 tops LMSYS Leaderboard: DeepSeek-V2-Chat-0628, a model with 236B parameters, ranks No.1 open-source model on LMSYS Chatbot Arena Leaderboard.

- It holds top positions: Overall No.11, Hard Prompts No.3, Coding No.3, Longer Query No.4, Math No.7.

- Mojo vs JAX: Benchmark Wars: Mojo outperforms JAX on CPUs even though JAX is optimized for many-core systems. Discussions suggest Mojo's compiler visibility grants an edge in performance.

- MAX compared to openXLA showed advantages as a lazy computation graph builder, offering more optimization opportunities and broad-ranging impacts.

3. Open-Source AI Frameworks

- SciPhi Open-Sources Triplex for Knowledge Graphs: SciPhi is open-sourcing Triplex, a state-of-the-art LLM for knowledge graph construction, significantly reducing the cost by 98%.

- Triplex can be used with SciPhi's R2R to build knowledge graphs directly on a laptop, outperforming few-shot-prompted GPT-4 at 1/60th the inference cost.

- Open WebUI features extensive capabilities: Open WebUI boasts extensive features like TTS, RAG, and internet access without Docker, enthralling users.

- Positive experiences on Windows 10 with Open WebUI raise interest in comparing its performance to Pinokio.

4. Multimodal AI Innovations

- Text2Control Enables Natural Language Commands: The Text2Control method enables agents to perform new tasks by interpreting natural language commands with vision-language models.

- This approach outperforms multitask reinforcement learning baselines in zero-shot generalization, with an interactive demo available for users to explore its capabilities.

- Snowflake Arctic Embed 1.5 boosts retrieval system scalability: Snowflake introduced Arctic Embed M v1.5, delivering up to 24x scalability improvement in retrieval systems with tiny embedding vectors.

- Daniel Campos' tweet about this update emphasizes the significant enhancement in performance metrics.

5. AI Community Tools

- ComfyUI wins hearts for Stable Diffusion newbies: Members suggested using ComfyUI as a good UI for someone new to Stable Diffusion, emphasizing its flexibility and ease of use.

- Additionally, watching Scott Detweiler's YouTube tutorials was recommended for thorough guidance.

- GPTs Agents exhibit self-awareness: An experiment conducted on GPTs agents aimed to assess their self-awareness, specifically avoiding web search capabilities during the process.

- The test results sparked discussions about the practical implications and potential limitations of self-aware AI systems without external data sources.

GPT4OMini (gpt-4o-mini-2024-07-18)

1. Recent Model Releases and Performance

- Mistral NeMo and DeepSeek Models Unveiled: Mistral released the NeMo 12B model with a 128k token context length, showcasing multilingual capabilities and tool support, while DeepSeek-V2-Chat-0628 tops the LMSYS leaderboard.

- These models emphasize advancements in performance and efficiency, with DeepSeek achieving 236B parameters and ranking first among open-source models.

- GPT-4o Mini vs. Claude 3 Haiku: The new GPT-4o mini is approximately 2x faster and 60% cheaper than GPT-3.5 Turbo, making it an attractive alternative despite its lower benchmark scores compared to Claude 3 Haiku.

- Users are discussing potential replacements, with mixed opinions on the mini's performance in various tasks.

- Apple's DCLM 7B Model Launch: Apple's release of the DCLM 7B model has outperformed Mistral 7B, showcasing fully open-sourced training code and datasets.

- This move has sparked discussions about its implications for the competitive landscape of open-source AI models.

2. AI Tooling and Community Resources

- Open WebUI Enhancements: The Open WebUI now includes features like TTS and RAG, allowing users to interact with their models without Docker, enhancing accessibility and usability.

- Users have reported positive experiences running it on Windows 10, comparing its performance favorably against Pinokio.

- ComfyUI for Beginners: Members are recommending ComfyUI as an excellent user interface for newcomers to Stable Diffusion, highlighting its flexibility and ease of use.

- Tutorials from Scott Detweiler on YouTube have been suggested for those looking for comprehensive guidance.

3. Training Techniques and Model Fine-tuning

- Improving Transformer Generalization: An arXiv paper suggests that training transformers beyond saturation can enhance their generalization capabilities, particularly for out-of-domain tasks.

- This approach helps prevent catastrophic forgetting, making it a pivotal strategy for future model training.

- Fine-tuning Challenges in Mistral-12b: Users reported configuration issues with Mistral-12b, particularly around size mismatches in projection weights, requiring source installation of transformers for fixes.

- Discussions on fine-tuning strategies indicate the need for specific adjustments in training setups to optimize performance.

4. Data Privacy and Security in AI

- CrowdStrike Outage Impacts: A recent CrowdStrike update caused a global outage, affecting multiple industries and prompting discussions on the reliability of cloud-based security services.

- The incident has raised concerns about data privacy and operational resilience in tech infrastructure.

- Business Hesitance to Share Sensitive Data: Concerns around data privacy have made businesses wary of sharing sensitive information with third parties, prioritizing internal controls over external exchanges.

- This trend highlights the growing importance of data security in AI applications.

5. Advancements in Knowledge Graphs and Retrieval-Augmented Generation

- Triplex Revolutionizes Knowledge Graphs: The Triplex model offers a 98% cost reduction for knowledge graph construction, outperforming GPT-4 at 1/60th the cost.

- Triplex facilitates local graph building using SciPhi's R2R platform, enhancing retrieval-augmented generation methods.

- R2R Platform for Knowledge Graphs: The R2R platform enables scalable, production-ready retrieval-augmented generation applications, integrating multimodal support and automatic relationship extraction.

- Members highlighted its effectiveness in creating knowledge graphs from unstructured data, showcasing practical applications.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Hermes 2.5 outperforms Hermes 2: After adding code instruction examples, Hermes 2.5 appears to perform better than Hermes 2 in various benchmarks.

- Hermes 2 scored a 34.5 on the MMLU benchmark whereas Hermes 2.5 scored 52.3.

- Mistral has struggles expanding beyond 8k: Members stated that Mistral cannot be extended beyond 8k without continued pretraining and this is a known issue.

- They pointed to further work on mergekit and frankenMoE finetuning for the next frontiers in performance.

- Mistral unveils NeMo 12B model: Mistral released NeMo, a 12 billion parameter model, showcasing multilingual capability and native tool support.

- Fits exactly in a free Google Colab GPU instance, which you can access here.

- In-depth on CUDA bf16 issues and fixes: Several users reported errors related to bf16 support on different GPU models such as RTX A4000 and T4, hindering model execution.

- The problem was identified to be due to torch.cuda.is_bf16_supported() returning False, and the Unsloth team has since fixed it.

- SciPhi Open-Sources Triplex for Knowledge Graphs: SciPhi is open-sourcing Triplex, a state-of-the-art LLM for knowledge graph construction, significantly reducing the cost by 98%.

- Triplex can be used with SciPhi's R2R to build knowledge graphs directly on a laptop, outperforming few-shot-prompted GPT-4 at 1/60th the inference cost.

Stability.ai (Stable Diffusion) Discord

- ComfyUI wins hearts for Stable Diffusion newbies: Members suggested using ComfyUI as a good UI for someone new to Stable Diffusion, emphasizing its flexibility and ease of use.

- Additionally, watching Scott Detweiler's YouTube tutorials was recommended for thorough guidance.

- NVIDIA trumps AMD in AI tasks: Consensus in the discussion indicates a preference for NVIDIA GPUs over AMD for stable diffusion due to better support and less troubleshooting.

- Despite AMD providing more VRAM, NVIDIA is praised for wider compatibility, especially in Linux environments, despite occasional driver issues.

- Stable Diffusion models: One size doesn't fit all: Discussion on the best Stable Diffusion models concluded that choices depend on VRAM and the specific needs of the user, with SDXL recommended for its larger size and capabilities.

- SD3 was mentioned for its superior image quality due to a new VAE, while noting it's currently mainly supported in ComfyUI.

- Tips to make Stable Diffusion more artistic: A member sought advice on making images look more artistic and less hyper-realistic, complaining about the dominance of HD, high-contrast outputs.

- Suggestions included using artistic LoRAs and experimenting with different models to achieve desired digital painting effects.

- Seeking Reddit alternatives for AI news: A member expressed frustration with Reddit bans and censorship in Twitter, seeking alternative sources for AI news.

- Suggestions included following the scientific community on Twitter for the latest papers and developments, despite perceived regional and user-based censorship issues.

HuggingFace Discord

- Quick Help for CrowdStrike BSOD: A faulty file from CrowdStrike caused widespread BSOD, affecting millions of systems globally. The Director of Overwatch at CrowdStrike posted a hot fix to break the BSOD loop.

- The issue led to a significant number of discussions about fallout and measures to prevent future incidents.

- Hugging Face API Woes: Multiple users in the community discussed issues with the Meta-Llama-3-70B-Instruct API, including error messages about unsupported model configurations.

- There was a wide acknowledgment of Hugging Face infrastructure problems, particularly impacting model processing speeds, which users noted have stabilized recently after outages.

- Surge of Model Releases Floods Feed: Significant model releases occurred all in one day: DeepSeek's top open-access lmsys model, Mistral 12B, Snowflake's embedding model, and more. See the tweet for the full list.

- Osanseviero remarked, '🌊For those of you overwhelmed by today's releases,' summarizing the community's sentiments about the vast number of updates happening.

- Technical Teasers in Neural Networks: The Circuits thread offers an experimental format delving into the inner workings of neural networks, covering innovative discoveries like Curve Detectors and Polysemantic Neurons.

- This engaging approach to understanding neural mechanisms has triggered enthusiastic discussions about both the conceptual and practical implications.

- AI Comic Factory Enhancements: Significant updates to the AI Comic Factory were noted, now featuring speech bubbles by default, enhancing the comic creation experience.

- The new feature, utilizing AI for prompt generation and dialogue segmentation, improves storytelling through visual metrics, even accommodating non-human characters like dinosaurs.

Nous Research AI Discord

- Overcoming catastrophic forgetting in ANNs with sleep-inspired dynamics: Experiments by Maxim Bazhenov et al. suggest that a sleep-like phase in ANNs helps reduce catastrophic forgetting, with findings published in Nature Communications.

- Sleep in ANNs involved off-line training using local unsupervised Hebbian plasticity rules and noisy input, helping the ANNs recover previously forgotten tasks.

- Opus Instruct 3k dataset gears up multi-turn instruction finetuning: A member shared a link to the Opus Instruct 3k dataset on Hugging Face, containing ~2.5 million tokens worth of general-purpose multi-turn instruction finetuning data in the style of Claude 3 Opus.

- teknium acknowledged the significance of the dataset with a positive comment.

- GPT-4o Mini vies with GPT-3.5-Turbo on coding benchmarks: On a coding benchmark, GPT-4o Mini performed on par with GPT-3.5-Turbo, despite being advertised with a HumanEval score that raised user expectations.

- One user expressed dissatisfaction with the overhyped performance indicators, speculating that OpenAI trained it on benchmark data.

- Triplex slashes KG creation costs by 98%: Triplex, a finetuned version of Phi3-3.8B by SciPhi.AI, outperforms GPT-4 at 1/60th the cost for creating knowledge graphs from unstructured data.

- It enables local graph building using SciPhi's R2R platform, significantly cutting down expenses.

- Mistral-Nemo-Instruct GGUF conversion struggles highlighted: A member struggled with converting Mistral-Nemo-Instruct to GGUF due to issues with BPE vocab and missing tokenizer.model files.

- Despite pulling a PR for Tekken tokenizer support, the conversion script still did not work, causing much frustration.

OpenAI Discord

- GPT-4o mini offers cost-efficient performance: GPT-4o mini is seen as a cheaper and faster alternative to 3.5 Turbo, being approximately 2x faster and 60% cheaper as noted on GitHub.

- However, it lacks image support and scores lower in benchmarks compared to GPT-4o, underlining some of its limitations.

- Crowdstrike outage disrupts industries: A Crowdstrike update caused a global outage, affecting industries such as airlines, banks, and hospitals, with machines requiring manual unlocking.

- This primarily impacted Windows 10 users, making the resolution process slow and costly.

- GPT-4o's benchmark superiority debated: GPT-4o scores higher in benchmarks compared to GPT-4 Turbo, but effectiveness varies by use case source.

- The community finds no consensus on the ultimate superiority due to these variabilities, highlighting the importance of specific application needs.

- Fine-tuning for 4o mini on the horizon: Members expect fine-tuning capabilities for 4o mini to be available in approximately 6 months.

- This potential enhancement could further improve its utility and performance in specific applications.

- Request for Glassmorphic UI in Code Snippets: Users are looking to create a code snippet library with a glassmorphic UI using HTML, CSS, and JavaScript, featuring an animated gradient background.

- Desired functionalities include managing snippets—adding, viewing, editing, and deleting—with cross-browser compatibility and a responsive design.

Modular (Mojo 🔥) Discord

- Mojo Boosts Debugging Experience: Mojo prioritizes advanced debugging tools, enhancing the debugging experience for machine learning tasks on GPUs. Learn more.

- Mojo's extension allows seamless setup in VS Code, and LLDB-DAP integrations are planned for stepping through CPU to GPU code.

- Mojo vs JAX: Benchmark Wars: Mojo outperforms JAX on CPUs even though JAX is optimized for many-core systems. Discussions suggest Mojo's compiler visibility grants an edge in performance.

- MAX compared to openXLA showed advantages as a lazy computation graph builder, offering more optimization opportunities and broad-ranging impacts.

- Mojo's Low-Level Programming Journey: A user transitioning from Python to Mojo considered learning C, CUDA, and Rust due to Mojo’s perceived lack of documentation. Community responses focused on 'Progressive Disclosure of Complexity.'

- Discussions encouraged documenting the learning journey to aid in shaping Mojo’s ecosystem and suggested using

InlineArrayfor FloatLiterals in types.

- Discussions encouraged documenting the learning journey to aid in shaping Mojo’s ecosystem and suggested using

- Async IO API Standards in Mojo: A discussion emphasized the need for async IO APIs in Mojo to support higher performance models by effectively handling buffers. The conversation drew from Rust's async IO challenges.

- Community considered avoiding a split between performance-focused and mainstream libraries, aiming for seamless integration.

- Mojo Nightly Update Highlights New Features: The Mojo nightly update 2024.7.1905 introduced a new stdlib function

Dict.setdefault(key, default). View the raw diff for detailed changes.- Contributor meetings may separate from community meetings to align better with Modular’s work, with stdlib contributions vetted through incubators for API and popularity before integration.

LM Studio Discord

- Mistral Nvidia collaboration creates buzz: Mistral Nvidia collaboration introduced Mistral-Nemo 12B, offering a large context window and state-of-the-art performance, but it's unsupported in LM Studio.

- Tokenizer support in llama.cpp is required to make Mistral-Nemo compatible.

- Rich features in Open WebUI draw attention: Open WebUI boasts extensive features like TTS, RAG, and internet access without Docker, enthralling users.

- Positive experiences on Windows 10 with Open WebUI raise interest in comparing its performance to Pinokio.

- DeepSeek-V2-Chat-0628 tops LMSYS Leaderboard: DeepSeek-V2-Chat-0628, a model with 236B parameters, ranks No.1 open-source model on LMSYS Chatbot Arena Leaderboard.

- It holds top positions: Overall No.11, Hard Prompts No.3, Coding No.3, Longer Query No.4, Math No.7.

- Complexities of using NVidia Tesla P40: Users face mixed results running NVidia Tesla P40 on Windows; data center and studio RTX drivers are used but performance varies.

- Compatibility issues with Tesla P40 and Vulcan are highlighted, suggesting multiple installations and enabling virtualization.

- TSMC forecasts AI chip supply delay: TSMC's CEO predicts no balance in AI chip supply till 2025-2026 due to packaging bottlenecks and high demand.

- Overseas expansion is expected to continue, as shared in this report.

Latent Space Discord

- Llama 3 release imminent: Llama 3 with 400 billion parameters is rumored to release in 4 days, igniting excitement and speculation within the community.

- This upcoming release has stirred numerous conversations about its potential impact and capabilities.

- Self-Play Preference Optimization sparks interest: SPPO (Self-Play Preference Optimization) is noted for its potential, but skepticism exists regarding its long-term effectiveness after a few iterations.

- Opinions are divided on whether the current methodologies will hold up after extensive deployment and usage.

- Apple open-sources DCLM 7B model: Apple released the DCLM 7B model, which surpasses Mistral 7B and is entirely open-source, including training code and datasets.

- This release is causing a buzz with VikParuchuri's GitHub profile showcasing 90 repositories and the official tweet highlighting the open sourcing.

- Snowflake Arctic Embed 1.5 boosts retrieval system scalability: Snowflake introduced Arctic Embed M v1.5, delivering up to 24x scalability improvement in retrieval systems with tiny embedding vectors.

- Daniel Campos' tweet about this update emphasizes the significant enhancement in performance metrics.

- Texify vs Mathpix in functionality: A comparison was raised on how Texify stacks up against Mathpix in terms of functionality but no detailed answers were provided.

- The conversation highlights an ongoing debate about the effectiveness of these tools for various use cases.

CUDA MODE Discord

- Nvidia influenced by anti-trust laws to open-source kernel modules: Nvidia's decision to open-source kernel modules may be influenced by US anti-trust laws according to speculations.

- One user suggested that maintaining kernel modules isn't central to Nvidia's business and open-sourcing could improve compatibility without needing high-skill developers.

- Float8 weights introduce dynamic casting from BF16 in PyTorch: Members discussed casting weights stored as BF16 to FP8 for matmul in PyTorch, referencing float8_experimental.

- There was also interest in implementing stochastic rounding for FP8 weight updates, possibly supported by Meta's compute resources.

- Tinygrad bounties spark mixed reactions: Discussions about contributing to tinygrad bounties like splitting UnaryOps.CAST noted that some found the compensation insufficient for the effort involved.

- A member offered $500 for adding FSDP support to tinygrad, which was considered low, with potential implementers needing at least a week or two.

- Yuchen's 7.3B model training achieves linear scaling: Yuchen trained a 7.3B model using karpathy's llm.c with 32 H100 GPUs, achieving 327K tokens/s and an MFU of 46.7%.

- Changes from 'int' to 'size_t' were needed to handle integer overflow due to large model parameters.

- HQQ+ 2-bit Llama3-8B-Instruct model announced: A new model, HQQ+ 2-bit Llama3-8B-Instruct, uses the BitBlas backend and 64 group-size quantization for quality retention.

- The model is compatible with BitBlas and

torch.compilefor fast inference, despite challenges in low-bit quantization of Llama3-8B.

- The model is compatible with BitBlas and

Perplexity AI Discord

- Pro users report drop in search quality: Some members, especially those using Claude Sonnet 3.5, have noticed a significant drop in the quality of Pro searches over the past 8-9 days.

- This issue has been raised in discussions but no clear solution or cause has been identified yet.

- GPT-4o mini set to replace Claude 3 Haiku?: There's active discussion around the idea of potentially replacing Claude 3 Haiku with the cheaper and smarter GPT-4o mini in Perplexity.

- Despite the promising attributes of GPT-4o mini, Claude 3 Haiku remains in use for now.

- YouTube Music unveils Smart Radio: A discussion highlighted YouTube Music's Smart Radio, featuring innovative content delivery and new music discovery tools.

- YouTube Music was praised for smartly curating playlists and adapting to user preferences.

- Dyson debuts High-Tech Headphones: Dyson's new high-tech headphones were noted for integrating advanced noise-cancellation and air filtration technology.

- Members commented on the product's dual functionality and sleek design.

- Seeking RAG API Access from Perplexity: A member noted a lack of response after emailing about RAG API for their enterprise, seeking further assistance in obtaining access.

- This suggests ongoing communication challenges and unmet demand for enterprise-level API solutions.

OpenRouter (Alex Atallah) Discord

- Mistral AI releases two new models: Daun.ai introduced Mistral Nemo, a 12B parameter multilingual LLM with a 128k token context length.

- Codestral Mamba was also released, featuring a 7.3B parameter model with a 256k token context length for code and reasoning tasks.

- L3-Euryale-70B price slashed by 60%: L3-Euryale-70B received a massive price drop of 60%, making it more attractive for usage in various applications.

- Additionally, Cognitivecomputations released Dolphin-Llama-3-70B, a competitive new model promising improved instruction-following and conversational abilities.

- LLM-Draw integrates OpenRouter API keys: The LLM-Draw app now accepts OpenRouter API keys, leveraging the Sonnet 3.5 self-moderated model.

- Deployable as a Cloudflare page with Next.js, a live version is now accessible.

- Gemma 2 repetition issues surface: Users reported repetition issues with Gemma 2 9B and sought advice for mitigating the problem.

- A suggestion was made to use CoT (Chain of Thought) prompting for better performance.

- Mistral NeMo adds Korean language support: A message indicated that Mistral NeMo has expanded its language support to include Korean, enhancing its multilingual capacity.

- Users noted its strength in English, French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi.

Interconnects (Nathan Lambert) Discord

- GPT-4o Mini's Versatile Performance: GPT-4o mini matches GPT-3.5 on Aider's code editing benchmark but struggles with code diffs on larger files.

- The model offers cost-efficient text generation yet retains high image input costs, prompting users to consider alternatives like Claude 3 Haiku and Gemini 1.5 Flash.

- OpenAI Faces New Security Flaws: OpenAI's new safety mechanism was easily bypassed, allowing GPT-4o-mini to generate harmful content, exposing significant vulnerabilities.

- Internal evaluations show GPT-4o mini may be overfitting, with extra information inflating its scores, highlighting a potential flaw in eval setups.

- Gemma 2 Surprises with Logit Capping: Members discussed the removal of soft logit capping in Gemma 2, debating the need for retraining to address its effects.

- Some members found it startling that the model performed well without significant retraining, challenging common expectations about logit capping adjustments.

- MosaicML's Quirky Sword Tradition: MosaicML employees receive swords as part of a unique tradition, as noted in discussions about potential future interviews.

- HR and legal teams reportedly disapproved, but rumors suggest even the MosaicML legal team might have partaken.

- Sara Hooker Critiques US AI Act: A member shared a YouTube video of Sara Hooker critiquing compute thresholds in the US AI Act, sparking community interest.

- Her community presence, underscored by a recent paper, highlights ongoing discussions about regulatory frameworks and their implications for future AI developments.

Eleuther Discord

- Z-Loss regularization term explored: Z-loss was discussed as a regularization term for the objective function, compared to weight decay and its necessity debated among members.

- Carsonpoole clarified that Z-loss targets activation instability by preventing large activations, comparing it to existing regularization methods.

- CoALA: A structured approach to language agents: A paper on Cognitive Architectures for Language Agents (CoALA) introduces a framework with modular memory components to guide language model development.

- The framework aims to survey and organize recent advancements in language models, drawing on cognitive science and symbolic AI for actionable insights.

- BPB vs per token metrics clarified: There was a clarification on whether a given metric should be interpreted as bits per byte (BPB) or per token, establishing it as 'per token' for accuracy.

- Cz_spoon_06890 noted the significant impact of this metric's correct interpretation on the corresponding evaluations.

- Scaling laws impact hypernetwork capabilities: Discussion centered on how scaling laws affect hypernetworks and their capacity to reach the target error predicted by these laws, questioning the feasibility for smaller hypernetworks.

- Suggestions included focusing hypernetworks on tasks with favorable scaling laws, making it simpler to learn from specific data subsets.

- Tokenization-free models spark debate: Debate on the interpretability of tokenization-free models at the byte or character level, with concerns over the lack of canonical places for processing.

- 'Utf-8 is a tokenization scheme too, just a bad one,' one member noted, showing skepticism towards byte-level tokenization.

LlamaIndex Discord

- MistralAI and OpenAI release new models: It's a big day for new models with releases from MistralAI and OpenAI, and there's already day zero support for both models, including a new Mistral NeMo 12B model outperforming Mistral's 7b model.

- The Mistral NeMo model features a significant 128k context window.

- LlamaCloud updates enhance collaboration: Recent updates to LlamaCloud introduced LlamaCloud Chat, a conversational interface to data, and new team features for collaboration.

- These changes aim to enhance user experience and productivity. Read more here.

- Boosting relevance with Re-ranking: Re-ranking retrieved results can significantly enhance response relevance, especially when using a managed index like @postgresml.

- Check out their guest post on the LlamaIndex blog for more insights. More details here.

- LLMs context window limits cause confusion: A user experienced an 'Error code: 400' while setting the max_tokens limit for GPT-4o mini despite OpenAI's documentation stating a context window of 128K tokens, which reportedly supports only 16384 completion tokens.

- This confusion arose from using different models in different parts of the code, leading to interference between GPT-3.5 and GPT-4 in SQL query engines.

- ETL for Unstructured Data via LlamaIndex: A member inquired about parsing unstructured data like video and music into formats digestible by LLMs, referencing a YouTube conversation between Jerry Liu and Alejandro that mentioned a new type of ETL.

- This highlights the practical applications and potential use cases for ETL in AI data processing.

OpenAccess AI Collective (axolotl) Discord

- Training Inferences Boost Transformer Generalization: An arXiv paper suggests that training transformers beyond saturation enhances their generalization and inferred fact deduction.

- Findings reveal transformers struggle with out-of-domain inferences due to lack of incentive for storing the same fact in multiple contexts.

- Config Issues Plague Mistral-12b Usage: A member reported config issues with Mistral-12b, particularly size mismatches in projection weights.

- Fixes required installing transformers from source and tweaking training setups like 8x L40s, which showed improvement in loss reduction.

- Triplex Model Revolutionizes Knowledge Graph Construction: The Triplex model, based on Phi3-3.8B, offers a 98% cost reduction for knowledge graphs compared to GPT-4 (source).

- This model is shareable, executable locally, and integrates well with Neo4j and R2R, enhancing downstream RAG methods.

- Axolotl Training Adjustments Address GPU Memory Errors: Common GPU memory errors during axolotl training prompted discussions on adjusting

micro_batch_size,gradient_accumulation_steps, and enablingfp16.- A detailed guide for these settings was shared to optimize memory usage and prevent errors.

- Llama3 Adjustments Lower Eval and Training Loss: Lowering Llama3's rank helped improve its eval loss, though further runs are needed to confirm stability.

- The training loss also appeared noticeably lower, indicating consistent improvements.

Cohere Discord

- GPTs Agents exhibit self-awareness: An experiment conducted on GPTs agents aimed to assess their self-awareness, specifically avoiding web search capabilities during the process.

- The test results sparked discussions about the practical implications and potential limitations of self-aware AI systems without external data sources.

- Cohere's Toolkit flexibility impresses community: A community member highlighted a tweet from Aidan Gomez and Nick Frosst, praising the open-source nature of Cohere's Toolkit UI, which allows integration of various models and the contribution of new features.

- The open-source approach was lauded for enabling extensive customization and fostering innovations in tool development across the community.

- Firecrawl faces pricing challenges: A member noted that Firecrawl proves costly without a large customer base, suggesting a shift to a pay-as-you-go model.

- The discussion included various pricing strategies and the need for more flexible plans for smaller users.

- Firecrawl self-hosting touted as cost-saving: Members explored self-hosting Firecrawl to reduce expenses, with one member sharing a GitHub guide detailing the process.

- Self-hosting was reported to significantly lower costs, making the service more accessible for individual developers.

- Local LLM Chat GUI project gains attention: A new project featuring a chat GUI powered by local LLMs was shared, integrating Web Search, Python Interpreter, and Image Recognition.

- Interested members were directed to the project's GitHub repository for further engagement and contributions.

Torchtune Discord

- Unified Dataset Abstraction RFC Gains Traction: The RFC to unify instruct and chat datasets to support multimodal data was widely discussed with key feedback focusing on separating tokenizer and prompt templating from other configurations.

- Members highlighted usability and improvement areas, recommending more user-friendly approaches to manage dataset configurations efficiently.

- Torchtune Recipe Docs Set to Autogenerate: Proposals to autogenerate documentation from recipe docstrings emerged to improve visibility and accessibility of Torchtune's recipes.

- This move aims to ensure users have up-to-date, easily navigable documentation that aligns with the current version of recipes.

- Error Handling Overhaul Suggestion: Discussions surfaced on streamlining error handling in Torchtune recipes by centralizing common validation functions, offering a cleaner codebase.

- The idea is to minimize boilerplate code and focus user attention on critical configurations for better efficiency.

- Consolidating Instruct/Chat Dataset RFC: An RFC was shared aiming to consolidate Instruct/Chat datasets to simplify adding custom datasets on Hugging Face.

- Regular contributors to fine-tuning jobs were encouraged to review and provide feedback, ensuring it wouldn't affect high-level APIs.

Alignment Lab AI Discord

- Mozilla Builders launches startup accelerator: Mozilla Builders announced a startup accelerator for hardware and AI projects, aiming to push innovation at the edge.

- One member showed great enthusiasm, stating, 'I don't move on, not a part-time accelerator, we live here.'

- AI-generated scene descriptions for the blind: The community discussed using AI to generate scene descriptions for the visually impaired, aiming to enhance accessibility.

- Sentiments ran high with statements like, 'Blindness and all illnesses need to be deleted.'

- Smart AI devices buzz around beekeeping: Development of smart AI data-driven devices for apiculture was highlighted, providing early warnings to beekeepers to prevent colony loss.

- This innovative approach holds promise for integrating AI in agriculture and environmental monitoring.

- GoldFinch hatches with hybrid model gains: GoldFinch combines Linear Attention from RWKV and Transformers, outperforming models like 1.5B class Llama on tasks by reducing quadratic slowdown and KV-Cache size.

- GPTAlpha and Finch-C2 models outperform competitors: The new Finch-C2 and GPTAlpha models blend RWKV's linearity and transformer architecture, offering better performance and efficiency than traditional models.

- These models enhance downstream task performance, available with comprehensive documentation and code on GitHub and Huggingface.

tinygrad (George Hotz) Discord

- Kernel refactoring in tinygrad sparks changes: George Hotz suggested refactoring Kernel to eliminate

linearizeand introduce ato_programfunction, facilitating better structuring.- He emphasized the need to remove

get_lazyop_infofirst to implement these changes efficiently.

- He emphasized the need to remove

- GTX1080 struggles with Tinygrad compatibility: A member reported an error while running Tinygrad on a GTX1080 with

CUDA=1, highlighting GPU architecture issues.- Another member suggested 2080 generation GPUs as a minimum, recommending patches in

ops_cudaand disabling tensor cores.

- Another member suggested 2080 generation GPUs as a minimum, recommending patches in

- Tinygrad internals: Understanding View.mask: A member dove into the internals of Tinygrad, specifically questioning the purpose of

View.mask.- George Hotz clarified it is primarily used for padding, supported by a reference link.

- Dissection of

_poolfunction in Tinygrad: A member sought clarification on the_poolfunction, pondering whether it duplicates data usingpad,shrink,reshape, andpermuteoperations.- Upon further examination, the member realized the function does not duplicate values as initially thought.

- New project proposal: Documenting OpenPilot model trace: George Hotz proposed a project to document kernel changes and their performance impact using an OpenPilot model trace.

- He shared a Gist link with instructions, inviting members to participate.

OpenInterpreter Discord

- GPT-4o Mini raises parameter change question: A user questioned whether GPT-4o Mini can be operational by merely changing parameters or requires formal introduction by OI.

- Discussion hinted at potential setup challenges but lacked clear consensus on the necessity of formal introduction mechanics.

- 16k token output feature wows: The community marveled at the impressive 16k max token output feature, highlighting its potential utility in handling extensive data.

- Contributors suggested this capability could revolutionize extensive document parsing and generation tasks.

- Yi large preview remains top contender: Members reported that the Yi large preview continues to outperform other models within the OI framework.

- Speculations suggested stability and improved context handling as key differentiators.

- GPT-4o Mini lags in code generation: Initial tests indicated GPT-4o Mini is fast but mediocre in code generation, falling short of expectations.

- Despite this, some believe it might excel in niche tasks with precise custom instructions, though its function-calling capabilities still need improvement.

- OpenAI touts GPT-4o Mini's function calling: OpenAI's announcement lauded the strong function-calling skills and enhanced long-context performance of GPT-4o Mini.

- Community reactions were mixed, debating whether the reported improvements align with practical observations.

LAION Discord

- ICML'24 Highlights LAION Models: Researchers thanked the LAION project for their models used in an ICML'24 paper.

- They shared an interactive demo of their Text2Control method, describing it as essential for advancing vision-language models capabilities.

- Text2Control Enables Natural Language Commands: The Text2Control method enables agents to perform new tasks by interpreting natural language commands with vision-language models.

- This approach outperforms multitask reinforcement learning baselines in zero-shot generalization, with an interactive demo available for users to explore its capabilities.

- AGI Hype vs Model Performance: A discussion highlighted the overhyped nature of AGI while noting that many models achieve high accuracy with proper experimentation, referencing a tweet by @_lewtun.

- 'Many models solve AGI-like tasks correctly, but running the necessary experiments is often deemed 'boring''.

- Need for Latents to Reduce Storage Costs: Users expressed the need for latents of large image datasets like sdxl vae to reduce storage costs.

- It was suggested to host these latents on Hugging Face, which covers the S3 storage bills.

- Interact with CNN Explainer Tool: A CNN explainer visualization tool was shared, designed to help users understand Convolutional Neural Networks (CNNs) via interactive visuals.

- This tool is especially useful for those seeking to deepen their comprehension of CNNs from a practical perspective.

LangChain AI Discord

- Triplex sharply cuts costs in graph construction: Triplex offers a 98% cost reduction in building knowledge graphs, surpassing GPT-4 while operating at 1/60th the cost.

- Developed by SciPhi.AI, Triplex, a finetuned Phi3-3.8B model, now supports local graph building at a fraction of the cost, thanks to SciPhi's R2R.

- Model-specific prompt wording: unnecessary in LangChain: A user queried if model-specific wording is needed in LangChain's

ChatPromptTemplatefor accurate prompts.- It was clarified that

ChatPromptTemplateabstracts this requirement, making specific markers like<|assistant|>unnecessary.

- It was clarified that

- Creating prompts with ChatPromptTemplate: An example was shared on how to define an array of messages in LangChain's

ChatPromptTemplate, leveraging role and message text pairs.- Guide links for detailed steps were provided to aid in building structured prompts effectively.

LLM Perf Enthusiasts AI Discord

- Mystery of OpenAI Scale Tier: A member inquired about understanding the new OpenAI Scale Tier, leading to community confusion around GPT-4 TPS calculations.

- The discussion highlighted the complexity of TPS determinations and discrepancies in GPT-4's performance metrics.

- GPT-4 TPS Calculation Confusion: Members are puzzled by OpenAI's calculation of 19 tokens/second on the pay-as-you-go tier, given GPT-4 outputs closer to 80 tokens/second.

- This sparked debates about the accuracy of the TPS calculations and how they affect different usage tiers.

LLM Finetuning (Hamel + Dan) Discord

- Businesses Wary of Sharing Sensitive Data: A member pointed out that businesses are hesitant to share sensitive line-of-business data or customer/patient data with third parties, reflecting a heightened concern about data privacy.

- The discussion highlighted that this caution stems from growing fears over data security and privacy breaches, leading businesses to prioritize internal controls over external data exchanges.

- Data Privacy Takes Center Stage: Concern for data privacy is becoming ever more critical in businesses as they navigate compliance and security challenges.

- There's a noted trend where businesses are prioritizing the safeguarding of sensitive information against potential unauthorized access.

MLOps @Chipro Discord

- Clarifying Communication for Target Audiences: The discussion focused on understanding the target audience for effective communication, highlighting different groups such as engineers, aspiring engineers, product managers, devrels, and solution architects.

- The participants emphasized that tailoring messages for these specific groups ensures relevance and impact, improving the effectiveness of the communication.

- Importance of Targeted Communication: Clarifying target audience ensures that the communication is relevant and impactful for specific groups.

- The intention is to tailor messages appropriately for engineers, aspiring engineers, product managers, devrels, and solution architects.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!