[AINews] Quis promptum ipso promptiet?

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Automatic Prompt Engineering is all you need.

AI News for 5/9/2024-5/10/2024. We checked 7 subreddits, 373 Twitters and 28 Discords (419 channels, and 4923 messages) for you. Estimated reading time saved (at 200wpm): 556 minutes.

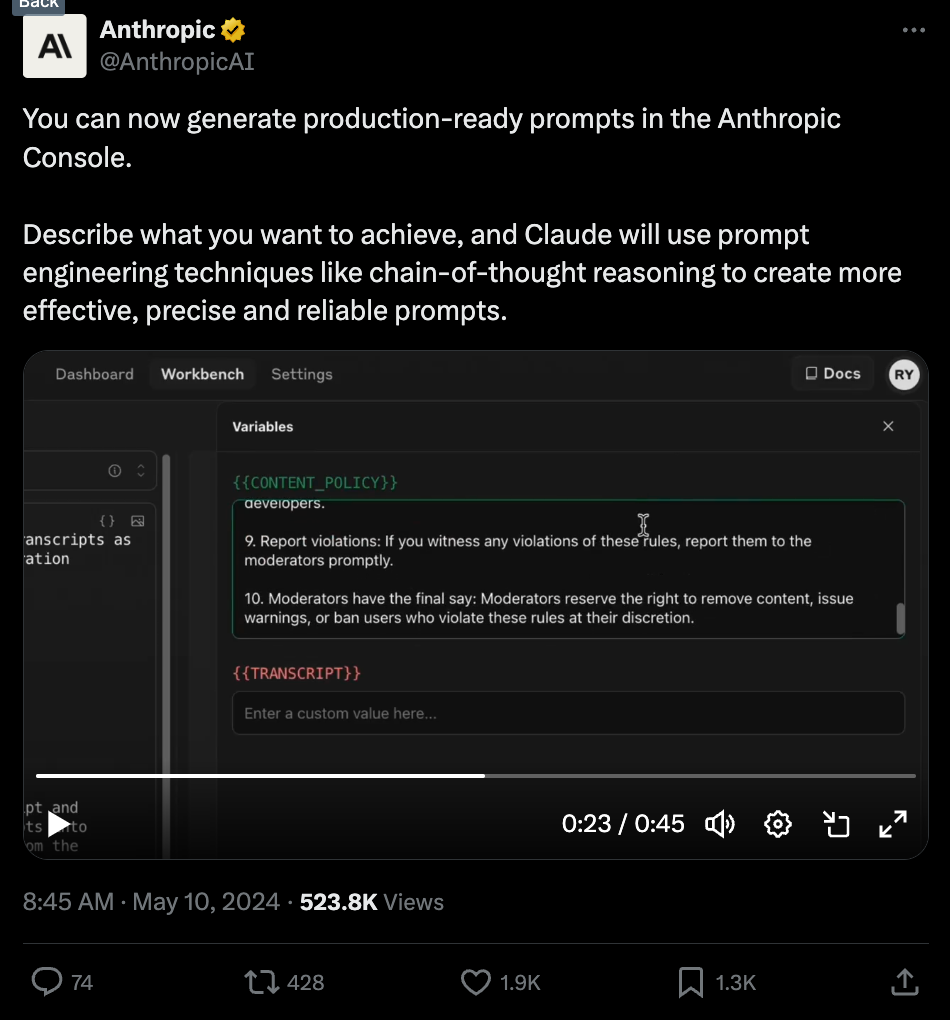

We have been fans of Anthropic's Workbench for a while, and today they released some upgrades helping people improve and templatize their prompts.

Pretty cool, not really the end of prompt engineer but nice to have. Let's be honest, it's been a really quiet week before the storm of both OpenAI's big demo day (potentially a voice assistant?) and Google I/O next week.

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- Unsloth AI (Daniel Han) Discord

- LM Studio Discord

- HuggingFace Discord

- Modular (Mojo 🔥) Discord

- Nous Research AI Discord

- OpenAI Discord

- Eleuther Discord

- CUDA MODE Discord

- Latent Space Discord

- LAION Discord

- OpenInterpreter Discord

- LlamaIndex Discord

- OpenAccess AI Collective (axolotl) Discord

- Interconnects (Nathan Lambert) Discord

- LangChain AI Discord

- OpenRouter (Alex Atallah) Discord

- Cohere Discord

- Datasette - LLM (@SimonW) Discord

- Mozilla AI Discord

- LLM Perf Enthusiasts AI Discord

- tinygrad (George Hotz) Discord

- Alignment Lab AI Discord

- Skunkworks AI Discord

- AI Stack Devs (Yoko Li) Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

OpenAI Announcements

- New developments teased: @sama teased new OpenAI developments coming Monday at 10am PT, noting it's "not gpt-5, not a search engine, but we've been hard at work on some new stuff we think people will love!", calling it "magic".

- Live demo promoted: @gdb also promoted a "Live demo of some new work, Monday 10a PT", clarifying it's "Not GPT-5 or a search engine, but we think you'll like it."

- Speculation on nature of announcement: There was speculation that this could be @OpenAI's Google Search competitor, possibly "just the Bing index summarized by an LLM". However, others believe it will be the new LLM to replace GPT-3.5 in the free tier.

Anthropic Developments

- New prompt engineering features: @AnthropicAI announced new features in their Console to generate production-ready prompts using techniques like chain-of-thought reasoning for more effective, precise prompts. This includes a prompt generator and variables to easily inject external data.

- Customer success with prompt generation: Anthropic's use of prompt generation significantly reduced development time for their customer @Zoominfo's MVP RAG application while improving output quality.

- Impact on prompt engineering: Some believe prompt generation means "prompt engineering is dead" as Claude can now write prompts itself. The prompt generator gets you 80% of the way there in crafting effective prompts.

Llama and Open-Source Models

- RAG application tutorial: @svpino released a 1-hour tutorial on building a RAG application using open-source models, explaining each step in detail.

- Llama 3 70B performance: Llama 3 70B is being called "game changing" based on its Arena Elo scores. Other strong open models include Haiku, Gemini 1.5 Pro, and GPT-4.

- Llama 3 120B quantized weights: Llama 3 120B quantized weights were released, showing the model's "internal struggle" in its outputs.

- Llama.cpp CUDA graphs support: Llama.cpp now supports CUDA graphs for a 5-18% performance boost on RTX 3090/4090 GPUs.

Neuralink Demo

- Thought-controlled mouse: A recent Neuralink demo video showed a person controlling a mouse at high speed and precision just by thinking. This sparked ideas about intercepting "chain of thought" signals to model consciousness and intelligence directly from human inner experience.

- Additional demos and analysis: More video demos and quantitative analysis were shared by Neuralink, generating excitement about the technology's potential.

ICLR Conference

- First time in Asia: ICLR 2024 is being held in Asia for the first time, generating excitement.

- Spontaneous discussions and GAIA benchmarks: @ylecun shared photos of spontaneous technical discussions at the conference. He also presented GAIA benchmarks for general AI assistants.

- Meta AI papers: Meta AI shared 4 papers to know about from their researchers at ICLR, spanning topics like efficient transformers, multimodal learning, and representation learning.

- High in-person attendance: 5400 in-person attendees were reported at ICLR, refuting notions of an "AI winter".

Miscellaneous

- Mistral AI funding: Mistral AI is rumored to be raising at a $6B valuation, with DST as an investor but not SoftBank.

- Yi AI model releases: Yi AI announced they will release upgraded open-source models and their first proprietary model Yi-Large on May 13.

- Instructor Cloud progress: Instructor Cloud is "one day closer" according to @jxnlco, who has been sharing behind-the-scenes looks at building AI products.

- UK PM on AI and open source: UK Prime Minister Rishi Sunak made a "sensible declaration" on AI and open source according to @ylecun.

- Perplexity AI partnership: Perplexity AI partnered with SoundHound to bring real-time web search to voice assistants in cars, TVs and IoT devices.

Memes and Humor

- Claude's charm: @nearcyan joked that "claude is charming and reminds me of all my favorite anthropic employees".

- "Stability is dead": @Teknium1 proclaimed "Stability is dead" in response to the Anthropic developments.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Progress and Capabilities

- AI music breakthrough: In a tweet, ElevenLabs previewed their music generator, signaling a significant advance in AI-generated music.

- Gene therapy restores toddler's hearing: A UK toddler had their hearing restored in the world's first gene therapy trial of its kind, a major medical milestone.

- Solar manufacturing meets 2030 goals early: The IEA reports that global solar cell manufacturing capacity is now sufficient to meet 2030 Net Zero targets, six years ahead of schedule.

- AI discovers new physics equations: An AI system made progress in discovering novel equations in physics by generating on-demand models to simulate physical systems.

- Progress in brain mapping: Google Research shared an update on their work mapping the human brain, which could lead to quality of life improvements.

AI Ethics and Governance

- OpenAI considers allowing AI porn generation: Raising ethical concerns, OpenAI is considering allowing users to create AI-generated pornography.

- OpenAI offers perks to publishers: OpenAI's Preferred Publisher Program provides benefits like priority chat placement to media companies, prompting worries about open model access.

- OpenAI files copyright claim against subreddit: Despite being a "mass scraper of copyrighted work," OpenAI filed a copyright claim against the ChatGPT subreddit's logo.

- Two OpenAI safety researchers resign: Citing doubts that OpenAI will "behave responsibly around the time of AGI," two safety researchers quit the company.

- US considers restricting China's AI access: The US is exploring curbs on China's access to AI software behind applications like ChatGPT.

AI Models and Architectures

- Invoke 4.2 adds regional guidance: Invoke 4.2 was released with Control Layers, enabling regional guidance with text and IP adapter support.

- OmniZero supports multiple identities/styles: The released OmniZero code supports 2 identities and 2 styles.

- Copilot gets GPT-4 based models: Copilot added 3 new "Next-Models" that appear to be GPT-4 variants. Next-model4 is notably faster than base GPT-4.

- Gemma 2B enables 10M context on <32GB RAM: Gemma 2B with 10M context was released, running on under 32GB of memory using recurrent local attention.

- Llama 3 8B extends to 500M context: An extension of Llama 3 8B to 500M context was shared.

- Llama3-8x8b-MoE model released: A Mixture-of-Experts extension to llama3-8B-Instruct called Llama3-8x8b-MoE was released.

- Bunny-v1.1-4B scales to 1152x1152 resolution: Built on SigLIP and Phi-3-mini-4k-instruct, the multimodal Bunny-v1.1-4B model was released, supporting 1152x1152 resolution.

AI Discord Recap

A summary of Summaries of Summaries

-

Large Language Model (LLM) Advancements and Releases:

- Meta's Llama 3 model is generating excitement, with an upcoming hackathon hosted by Meta offering a $10K+ prize pool. Discussions revolve around fine-tuning, evaluation, and the model's performance.

- LLaVA-NeXT models promise enhanced multimodal capabilities for image and video understanding, with local testing encouraged.

- The release of Gemma, boasting a 10M context window and requiring less than 32GB memory, sparks interest and skepticism regarding output quality.

- Multimodal Model Developments: Several new multimodal AI models were announced, including Idefics2 with a fine-tuning demo (YouTube), LLaVA-NeXT (blog post) with expanded image and video understanding capabilities, and the Lumina-T2X family (Reddit post) for transforming noise into various modalities based on text prompts. The Scaling_on_scales (GitHub) approach challenged the necessity of larger vision models.

-

Optimizing LLM Inference and Training:

- Innovations like vAttention and QServe aim to improve GPU memory efficiency and quantization for LLM inference, enabling larger batch sizes and faster serving.

- Consistency Large Language Models (CLLMs) introduce parallel decoding to reduce inference latency, mimicking human cognitive processes.

- Discussions on optimizing CUDA kernels, Triton performance, and the trade-offs between determinism and speed in backward passes for LLM training.

- Vrushank Desai's series explores optimizing inference latency for diffusion models by leveraging GPU architecture intricacies.

-

AI Model Interpretability and Evaluation:

- The Inspect AI framework from the UK AI Safety Institute offers components for evaluating LLMs, including prompt engineering, tool usage, and multi-turn dialog.

- Eleuther AI discusses the CrossCare project, which analyzes disease prevalence bias across demographics in LLMs and pretraining data.

- Debates around the impact of pretraining datasets on "zero-shot" generalization of multimodal models, as detailed in an arXiv paper.

- The Mirage multi-level tensor algebra superoptimizer aims to optimize deep neural networks, though its benchmark claims face skepticism.

-

Open-Source AI Tools and Libraries:

- LlamaIndex announces local LLM integration, TypeScript agent building guides, and integration with Google Firestore, fostering open AI development.

- OpenInterpreter enables AI task automation using GPT-4 and OpenCV, with new releases adding OS flag and Computer API support.

- Hugging Face integrates B-LoRA training into advanced DreamBooth for implicit style-content separation using a single image.

- Intel's ipex-llm accelerates local LLM inference and fine-tuning on Intel CPUs and GPUs, though it currently lacks LM Studio support.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

Artisan Bot Immerses in Discord: Stability AI launched Stable Artisan, a Discord bot boasting Stable Diffusion 3 and Stable Video Diffusion features for content creation, bolstered by tools like Search and Replace, Background Removal, and Outpainting to revolutionize user interactions directly on Discord.

Open-Source or Not? The SD3 Debate Rages: Discord members heatedly debated the potential open-sourcing of Stable Diffusion 3 (SD3), exploring motives for the current API-restricted access and speculating on future outcome scenarios, including possible refinement before release.

Exploring the Stable Diffusion Universe: The community engaged with various Stable Diffusion model versions, including SDXL and ControlNets, evaluating their limitations and the substantial enhancements brought forth by community-developed models like Lora.

Aspiring for 360-Degree Creation: A user sparked discussion on crafting 360-degree images, sharing multiple resources and seeking guidance on methodologies, referencing platforms like Skybox AI and discussions on Reddit.

Tech Support to the Rescue in Real Time: Practical and succinct exchanges provided quick resolutions to common execution errors, such as "DLL load failed while importing bz2", emphasizing the Discord community's agility in offering peer-to-peer technical support.

Perplexity AI Discord

Perplexity Partners with SoundHound: Perplexity AI has entered a partnership with SoundHound, with the aim to integrate online large language models (LLMs) into voice assistants across various devices, enhancing real-time web search capabilities.

Perplexity Innovates Search and Citations: An update on Perplexity AI introduces incognito search, ensuring that user inquiries vanish after 24 hours, combined with enhanced citation previews to bolster user trust in information sources.

Pro Search Glitch and Opus Limitations Spark Debate: The engineering community is facing challenges with the Pro Search feature, which currently fails to deliver internet search results or source citations. Additionally, dissatisfaction surfaced regarding the daily 50-use limit for the Opus model on Perplexity AI, sparking discussions for potential alternatives and solutions.

API Conundrum for AI Engineers: Engineers have noted issues with API output consistency, where the same prompts yield different results compared to those on Perplexity Labs, despite using identical models. Queries have been raised regarding the cause of the discrepancies and requests for guidance on effective prompting for the latest models.

Engagement with Perplexity's Features and New Launches: Users are engaging with features such as making threads shareable and exploring various inquiries including the radioactivity of bananas and the nature of mathematical rings. Additionally, there's interest in Natron Energy's latest launch, reported through Perplexity's sharing platform.

Unsloth AI (Daniel Han) Discord

Unsloth Studio Stalls for Philanthropy: Unsloth Studio's release is postponed due to the team focusing on releasing phi and llama projects, with about half of the studio's project currently complete.

Optimizer Confusion Cleared: Users were uncertain about how to specify optimizers in Unsloth but referenced the Hugging Face documentation for clarification on valid strings for optimizers, including "adamw_8bit".

Training Trumps Inference: The Unsloth team has stated a preference for advancing training techniques rather than inference, where the competition is fierce. They've touted progress in accelerating training in their open-source contributions.

Long Context Model Skepticism: Discussions point to scepticism among users regarding the feasibility and evaluation of very long context models, such as a mentioned effort to tackle up to a 10M context length.

Dataset Cost-Benefit Debated: The community has exchanged differing views on the investment needed for high-quality datasets for model training, considering both instruct tuning and synthetic data creation.

Market-First Advice for Aspiring Bloggers: A member's idea for a multi-feature blogging platform prompted advice on conducting market research and ensuring a clear customer base to avoid a lack of product/market fit.

Ghost 3B Beta Tackles Time and Space: Early training of the Ghost 3B Beta model demonstrates its ability to explain Einstein's theory of relativity in lay terms across various languages, hinting at its potential for complex scientific communication.

Help Forums Foster Fine-Tuning Finesse: The Unsloth AI help channel is buzzing with tips for fine-tuning AI models on Google Colab, though multi-GPU support is a wanted yet unavailable feature. Solutions for CUDA memory errors and a nod towards YouTube fine-tuning tutorials are shared among users.

Customer Support AI at Your Service: ReplyCaddy, a tool based on a fine-tuned Twitter dataset and a tiny llama model for customer support, was showcased, with acknowledgments to Unsloth AI for fast inference assistance, found on hf.co.

LM Studio Discord

LM Studio Laments Library Limitations: While LM Studio excels with models like Llama 3 70B, users struggle to run models such as llama1.6 Mistral or Vicuña even on a 192GB Mac Studio, pointing to a mysterious RAM capacity issue despite ample system resources. There's also discomfort among users concerning the LM Studio installer on Windows since it doesn't offer installation directory selection.

AI Models Demand Hefty Hardware: Running large models necessitates substantial VRAM; members discussed VRAM being a bigger constraint than RAM. Intel's ipex-llm library was introduced to accelerate local LLM inference on Intel CPUs and GPUs Intel Analytics Github, but it's not yet compatible with LM Studio.

New Frontier of Multi-Device Collaboration: Engineers explored the challenges and potential for integrating AMD and Nvidia hardware, addressing the theoretical possibility versus the practical complexity. The fading projects like ZLUDA, aimed at broadening CUDA support for non-Nvidia hardware, were lamented ZLUDA Github.

Translation Model Exchange: For translation projects, Meta AI's NLLB-200, SeamlessM4T, and M2M-100 models came highly recommended, elevating the search for efficient multilingual capabilities.

CrewAI's Cryptic Cut-Off: When faced with truncated token outputs from CrewAI, users deduced that it wasn't quantization to blame. A mishap in the OpenAI API import amid conditional statements was the culprit, a snag now untangled, reaffirming the devil's in the details.

HuggingFace Discord

Graph Learning Enters LLM Territory: The Hugging Face Reading Group explored the integration of Graph Machine Learning with LLMs, fueled by Isamu Isozaki's insights, complete with a supportive write-up and a video.

Demystifying AI Creativity: B-LoRA's integration into advanced DreamBooth's LoRA training script promises new creative heights just by adding the flag --use_blora and training for a relatively short span, as per the diffusers GitHub script and findings in the research paper.

On the Hunt for Resources: AI enthusiasts sought guidance and shared resources across a variety of tasks, with a notable GitHub repository on creating PowerPoint slides using OpenAI's API and DALL-E available at Creating slides with Assistants API and DALL-E and the mention of Ankush Singal's Medium articles for table extraction tools.

Challenging NLP Channel Conversations: The NLP channel tackled diverse topics such as recommending models for specific languages—indicating a preference for sentence transformers and encoder models, instructing versions of Llama, and also referenced community involvement in interview preparations.

Hiccups and Fixes in Diffusion Discussions: The diffusion discussions detailed issues and potential solutions related to HuggingChat bot errors and color shifts in diffusion models, noting a possible fix for login issues by switching the login module from lixiwu to anton-l in order to troubleshoot a 401 status code error.

Modular (Mojo 🔥) Discord

- Mystery of the Missing MAX Launch Date: While a question was raised regarding the launch date of MAX for enterprises, no direct answer was found in the conversation.

- Tuning up Modularity: There's anticipation for GPU support with Mojo, showing potential for scientific computing advancements. The Modular community continues to explore new capabilities in MAX Engine and Mojo, with discussions ranging from backend development expertise in languages like Golang and Rust, to seeking collaborative efforts for smart bot assistance using Hugging Face models.

- Rapid Racing with MoString: A custom

MoStringstruct in Rust showed a staggering 4000x speed increase for string concatenation tasks, igniting talks about enhancing Modular's string manipulation capabilities and how it could aid in LLM Tokenizer decoding tasks.

- Iterator Iterations and Exception Exceptions: The Modular community is deliberating the implementation of iterators and exception handling in Mojo, exploring whether to return

Optional[Self.Output]or raise exceptions. This feeds into broader conversations about language design choices, with a focus on balancing usability and Zero-Cost Abstractions.

- From Drafts to Discussions: An array of technical proposals is in the mix, from structuring Reference types backed by lit.ref to enhancing language ergonomics in Mojo. Contributions to these discussions range from insights into auto-dereferencing to considerations around Small Buffer Optimization (SBO) in

List, all leading to thoughtful scrutiny and collaboration among Modular aficionados.

Nous Research AI Discord

- TensorRT Turbocharges Llama 3: An engineer highlighted the remarkable speed improvements in Llama 3 70b fp16 when using TensorRT, sharing practical guidance with a setup link for those willing to bear the setup complexities.

- Multimodal Fine-Tuning and Evaluation Unveiled: Discourse revolved around fine-tuning methods and evaluations for models. Fine-tuning Idefics2 showcased via YouTube, while the Scaling_on_scales approach challenges the necessity of larger vision models, detailed on its GitHub page. Additionally, the UK Government's Inspect AI framework was mentioned for evaluating large language models.

- Navigation Errors and Credit Confusion in Worldsim: Users encountered hurdles with Nous World Client, specifically with navigation commands, and discussed unexpected changes in user credits post-update. The staff is actively addressing the related system flaws evident in Worldsim Client's interface.

- Efficacious Token Counter and LLM Optimization: Solutions for counting tokens in Llama 3 and details about Meta Llama 3 were shared, including an alternative token counting method using Nous' copy and model details on Huggingface. Additionally, Salesforce's SFR-Embedding-Mistral was highlighted for surpassing its predecessors in text embedding tasks, as detailed on its webpage.

- Painstaking Rope KV Cache Debacle: The dialogue includes an engineer's struggle with a KV cache implementation for rope, the querying of token counts for Llama 3, and uploading errors experienced on the bittensor-finetune-subnet, exemplifying the type of technical challenges prevalent in the community.

OpenAI Discord

- Get Ready for GPT Goodies: A live stream is scheduled for May 13 at 10AM PT on openai.com to reveal new updates for ChatGPT and GPT-4.

- Grammar Police Gather Online: A debate has arisen regarding the importance of grammar, with a high school English teacher advocating for language excellence and others suggesting patience and the use of grammar-checking tools.

- Shaping the Future of Searches: There's buzzing speculation over a potential GPT-based search engine and chatter about using Perplexity as a go-to while awaiting this development.

- GPT-4 API vs. App: Unpacking the Confusion: Users distinguished between ChatGPT Plus and the GPT-4 API billing, noting the app has different output quality and usage limits, specifically an 18-25 messages per 3-hour limit.

- Sharing is Caring for Prompt Enthusiasts: Community members shared resources, including a detailed learning post and a free prompt template for analyzing target demographics, enriched with specifics on buying behavior and competitor engagement.

Eleuther Discord

- Evolving Large Model Landscapes: Discussion within the community spans topics from the applicability of Transformer Math 101 memory heuristics from a ScottLogic blog post to techniques in Microsoft's YOCO repository for self-supervised pre-training, as well as QServe's W4A8KV4 quantization method for LLM inference acceleration. There's an ongoing interest in optimizing Transformer architectures with novel tactics like using a KV cache with sliding window attention and the potential of a multi-level tensor algebra superoptimizer showcased in the Mirage GitHub repository.

- Exploring Bias and Dataset Influence on LLMs: The community raises concerns about bias in LLMs, analyzing findings from the CrossCare project and detailing conversations around dataset discrepancies versus real-world prevalence. The EleutherAI community is leveraging resources like the EleutherAI Cookbook and findings presented in a paper discussing tokenizer glitch tokens, which could inform model improvements regarding language processing.

- Positional Encoding Mechanics: Researchers debate the merits of different positional encoding techniques, such as Rotary Position Embedding (RoPE) and Orthogonal Polynomial Based Positional Encoding (PoPE), contemplating the effectiveness of each in addressing limitations of existing methods and the potential impact on improving language model performance.

- Deep Dive into Model Evaluations and Safety: The community introduces Inspect AI, a new evaluation platform from the UK AI Safety Institute designed for extensive LLM evaluations, which can be explored further through its comprehensive documentation found here. Parallel to this, the conversation regarding mathematical benchmarks brings attention to the gap in benchmarks aimed at AI's reasoning capabilities and the potential "zero-shot" generalization limitations as detailed in an arXiv paper.

- Inquiry into Resources Availability: Discussions hint at demand for resources, with specific inquires about the availability of tuned lenses for every Pythia checkpoint, indicating the community's ongoing effort to refine and access tools to enhance model analysis and interpretability.

CUDA MODE Discord

AI Hype Train Hits Practical Station: The community is buzzing with discussions on the practical aspects of deep learning optimization, contrasting with the usual hype around AI capabilities. Specific areas of focus include saving and loading compiled models in PyTorch, acceleration of compiled artifacts, and the non-support of MPS backend in Torch Inductor as illustrated in a PR by msaroufim.

Memory Efficiency Breakthroughs: Innovations like vAttention and QServe are reshaping GPU memory efficiency and serving optimizations for large language models (LLMs), promising larger batch sizes without internal fragmentation and efficient new quantization algorithms.

Engineering Precision: CUDA vs Triton: Critical comparisons between CUDA and Triton for warp and thread management, including performance nuances and kernel-launch overheads, were dissected. A YouTube lecture on the topic was recommended, with discussions pointing out the pros and cons of using Triton, notably its attempt at minimizing Python-related overhead through potential C++ runtimes.

Optimization Odyssey: Links shared revealed a fascination with optimizing inference latency for models like Toyota's diffusion model, discussed in Vrushank Desai's series found here, and a "superoptimizer" explored in the Mirage paper for DNNs, raising eyebrows regarding benchmark claims and the lack of autotune.

CUDA Conundrums and Determinism Dilemmas: From troubleshooting CUDA's device-side asserts to setting the correct NVCC compiler flags, beginners are wrestling with the nuances of GPU computing. Meanwhile, seasoned developers are debating determinism in backward passes and the trade-offs with performance in LLM training, as discussed in the llmdotc channel.

Latent Space Discord

New Kid on the Block Outshines Olmo: A model from 01.ai is claimed to vastly outperform Olmo, stirring up interest and debate within the community about its potential and real-world performance.

Sloppy Business: Borrowing from Simon Willison's terminology, community members adopt "slop" to describe unwanted AI-generated content. Here's the buzz about AI etiquette.

LLM-UI Cleans Up Your Markup Act: llm-ui was introduced as a solution for refining Large Language Model (LLM) outputs by addressing problematic markdown, adding custom components, and enhancing pauses with a smoother output.

Meta Llama 3 Hackathon Gears Up: An upcoming hackathon focused on Llama 3 has been announced, with Meta at the helm and a $10K+ prize pool, looking to excite AI enthusiasts and developers. Details and RSVP here.

AI Guardrails and Token Talk: Discussions revolved around LLM guardrails featuring tools like Outlines.dev, and the concept of token restriction pregeneration, an approach ill-suited for API-controlled models like those from OpenAI.

LAION Discord

- Codec Evolution: Speech to New Heights: A speech-only codec showcased in a YouTube video was shared alongside a Google Colab for a general-purpose codec at 32kHz. This global codec is an advancement in speech processing technology.

- New Kid on the Block: Introduction of Llama3s: The llama3s model from LLMs lab was released, offering an enhanced tool for various AI tasks with details available on Hugging Face's LLMs lab.

- LLaVA Defines Dimensions of Strength: LLaVA blog post delineates the improvements in their latest language models with a comprehensive exploration of LLaVA's stronger LLMs at llava-vl.github.io.

- Cutting through the Noise: Score Networks and Diffusion Models: Engineers discussed convergence of Noise Conditional Score Networks (NCSNs) to Gaussian distribution with Yang Song’s insights on his blog, and dissected the shades between DDPM, DDIM, and k-diffusion, referencing the k-diffusion paper.

- Beyond Images: Lumina Family's Modality Expedition: Announcing the Lumina-T2X family as a unified model for transforming noise to multiple modalities based on text prompts, utilizing a flow-based mechanism. Future improvements and training details were highlighted in a Reddit discussion.

OpenInterpreter Discord

Groq API Joins OpenInterpreter's Toolset: The Groq API is now being used within OpenInterpreter, with the best practice being to use groq/ as the prefix in completion requests and define the GROQ_API_KEY. Python integration examples are available, aiding in rapid deployment of Groq models.

OpenInterpreter Empowers Automation with GPT-4: OpenInterpreter demonstrates successful task automation, specifically using GPT-4 alongside OpenCV/Pyautogui for GUI navigation tasks on Ubuntu systems.

Innovative OpenInterpreter and Hardware Mashups: Community members are creatively integrating OpenInterpreter with Billiant Labs Frame to craft unique applications such as AI glasses, as shown in this demo, and are exploring compatible hardware like the ESP32-S3-BOX 3 for the O1 Light.

Performance Variability in Local LLMs: While OpenInterpreter's tools are actively used, members have observed inconsistent performance in local LLMs for file system tasks, with Mixtral recognized for enhanced outcomes.

Updates and Advances in LLM Landscapes: The unveiling of LLaVA-NeXT models marks progress in local image and video understanding. Concurrently, OpenInterpreter's 0.2.5 release has brought in new features like the --os flag and a Computer API, detailed in the change log, improving inclusiveness and empowering developers with better tools.

LlamaIndex Discord

LLM Integration for All: LlamaIndex announced a new feature allowing local LLM integrations, supporting models like Mistral, Gemma, and others, and shared details on Twitter.

TypeScript and Local LLMs Unite: There's an open-source guide for building TypeScript agents that leverage local LLMs, like Mixtral, announced on Twitter.

Top-k RAG Approach Discouraged for 2024: A caution against using top-k RAG for future projects trended, hinting at emerging standards in the community. LlamaIndex tweeted this guidance here.

Graph Database Woes and Wonders: A user detailed their method of turning Gmail content into a Graph database via a custom retriever but is now looking for ways to improve efficiency and data feature extraction.

Interactive Troubleshooting: When facing a NotImplementedError with Mistral and HuggingFace, users were directed to a Colab notebook to facilitate the setup of a ReAct agent.

OpenAccess AI Collective (axolotl) Discord

Pretraining Predicaments and Fine-Tuning Frustrations: Engineers reported challenges and sought advice on pretraining optimization, dealing with a dual Epoch Enigma where one epoch unexpectedly saved a model twice, and facing a Pickle Pickle with PyTorch, which threw a TypeError when it couldn’t pickle a 'torch._C._distributed_c10d.ProcessGroup' object.

LoRA Snafu Sorted: A fix was proposed for a LoRA Configuration issue, advising to include 'embed_tokens' and 'lm_head' in the settings to address a ValueError; this snippet was shared for precise YAML configuration:

lora_modules_to_save:

- lm_head

- embed_tokens

Additionally, an engineer struggling with an AttributeError in transformers/trainer.py was counseled on debugging steps, including batch inspection and data structure logging.

Scaling Saga: For fine-tuning extended contexts in Llama 3 models, linear scaling was recommended, while dynamic scaling was suggested as the better option for scenarios outside fine-tuning.

Bot Troubles Teleported: A Telegram bot user highlighted a timeout error, suggesting networking or API rate-limiting issues could be in play, with the error message being: 'Connection aborted.', TimeoutError('The write operation timed out').

Axolotl Artefacts Ahead: Discussion on the Axolotl platform revealed capabilities to fine-tune Llama 3 models, confirming a possibility for handling a 262k sequence length and further curious explorations into fine-tuning a 32k dataset.

Interconnects (Nathan Lambert) Discord

- Echo Chamber Evolves into Debate Arena: Engineers voiced the need for a Retort channel for robust debates, echoing frustration by comically referencing self-dialogues on YouTube due to the current lack of structured argumentative discourse.

- Scrutinizing Altman's Search Engine Claims: A member cast doubt on the purported readiness of a search engine mentioned by Sam Altman in a tweet, signaling ongoing evaluations and suggesting the claim could be premature.

- Recurrent Models Meet TPUs: Discussions surfaced around training Recurrent Models (RMs) using TPUs and Fully Sharded Data Parallel (FSDP) protocols. Nathan Lambert pointed to EasyLM as a possibly adaptable Jax-based training tool, offering hope for streamlined training endeavors on TPUs.

- OpenAI Seeks News Allies: The revelation of the OpenAI Preferred Publisher Program, connecting with heavy hitters like Axel Springer and The Financial Times, underlined OpenAI's strategy to prioritize these publishers with enhanced content representation. This suggests a monetization through advertisement and preferred exposure within language models, marking a step towards commercializing AI-generated content.

- Digesting AI Discourse Across Platforms: A new information service from AI News proposes a synthesis of AI conversations across platforms, looking to condense insights for a time-strapped audience. Meanwhile, thoughts on max_tokens awareness and AI behavior by John Schulman spurred critical discourse on model effectiveness, while community appreciation for AI influencers ran high, citing Schulman as a seasoned thought leader.

LangChain AI Discord

Structuring AI with a Google Twist: Discussion unfolded around emulating Google's Gemini AI studio structured prompts within various LLMS, introducing function calling as a new approach to managing LangChain's model interactions.

Navigating LangGraph and Vector Databases: Users troubleshoot issues with ToolNode in LangGraph, with pointers to the LangGraph documentation for in-depth guidance; while others deliberated the complexities and costs of vector databases, with some favoring pgvector for its simplicity and open-source availability, and a comprehensive comparison guide recommended for those considering free local options.

Python vs POST Discrepancies in Chain Invocation: A peculiar case emerged where a member experienced different outcomes when employing a chain() through Python compared to utilizing an /invoke endpoint, indicating the chain began with an empty dictionary via the latter method, signaling potential variations in LangChain's initialization procedures.

AgencyRuntime Beckons Collaborators: A prominent article introduces AgencyRuntime, a social platform designed for crafting modular teams of generative AI agents, and extends an invitation to enhance its capabilities by incorporating further LangChain features.

Learning from LangChain Experts: Tutorial content released guides users on linking crewAI with the Binance Crypto Market and contrasts Function Calling agents and ReACt agents within LangChain, offering practical insights for those fine-tuning their AI applications.

OpenRouter (Alex Atallah) Discord

- A New Browser Extension Joins the Fray: Languify.ai was unveiled, harnessing Openrouter to optimize website text for better engagement and sales.

- Rubik's AI Calls for Beta Testers: Tech enthusiasts have a chance to shape the future of Rubik's AI, an emerging research assistant and search engine, with beta testers receiving a 2-month trial and encouraged to provide feedback using the code

RUBIX.

- PHP and Open Router API Clash: An issue was reported with the PHP React library resulting in a RuntimeException while interacting with the Open Router API, as a user sought help with their "Connection ended before receiving response" error.

- Router Training Evolves with Roleplay: In a search for optimal metrics, a user favored validation loss and precision recall AUC for evaluating router performances on roleplay-themed conversations.

- Gemma Breaks Context Length Records: Interest surged in the promising yet doubted Gemma, a model flaunting a whopping 10M context window that operates on less than 32GB of memory, as announced in a tweet by Siddharth Sharma.

Cohere Discord

- Fine-Tuning Features Fuel Fervor: Command R Fine-Tuning is now available, offering best-in-class performance, up to 15x lower costs, and faster throughput, with accessibility through Cohere platform and Amazon SageMaker. There's anticipation for additional platforms and the upcoming CMD-R+, as well as discussions of cost-effectiveness and use cases for fine-tuning—the details are explored on the Cohere blog post.

- Credit Where Credit Is Due: Engineers seeking to add credits to their Cohere account can utilize the Cohere billing dashboard to set spending limits post credit card addition. This ensures frictionless management of their model usage costs.

- Dark Mode Hits Spot After Sunset: Cohere's Coral does not feature a native dark mode yet; however, a community member provided a custom CSS snippet for a browser-based dark mode solution for those night owl coders.

- Cost Inquiry for Cohere Embeds: A user inquiring about the embed model pricing for production was directed to the Cohere pricing page, which details various plans and the steps to obtain Trial and Production API keys.

- New Faces, New Paces: The community welcomed a new member from Annaba, Algeria, with interests in NLP and LLM, highlighting the diverse and growing global interest in language model applications and development.

Datasette - LLM (@SimonW) Discord

- Apple's Brave New AI World: Apple plans to use M2 Ultra chips for powering generative AI workloads in data centers while gearing up for an eventual shift to M4 chips, within the scope of Project ACDC, which emphasizes security and privacy.

- Mixture of Experts Approach: The discussions ponder over Apple's strategy that resembles a 'mixture of experts', where less demanding AI tasks could run on users' devices, and more complex operations would utilize cloud processing.

- Apple Silicon Lust: Amidst the buzz, an engineer shared their desire for an M2 Ultra-powered Mac, showcasing the enthusiasm stemming from Apple's recent hardware revelations.

- MLX Framework on the Spotlight: Apple's MLX framework is gaining attention, with its capabilities to run large AI models on Apple's hardware, supported by resources available on a GitHub repository.

- ONNX as a Common Language: Despite Apple's tendency towards proprietary formats, the adoption of the ONNX model format, including the Phi-3 128k model, underlines its growing importance in the AI community.

Mozilla AI Discord

- Clustering Ideas Gets a Creative Makeover: A new blog post introduces the application of Llamafile to topic clustering, enhanced with Figma's FigJam AI and DALL-E's visual aids, illustrating the potential for novel approaches to idea organization.

- Getting Technical with llamafile's GPU Magic: Details on llamafile's GPU layer offloading were provided, focusing on the

-ngl 999flag that enables this feature according to the llama.cpp server README, alongside shared benchmarks highlighting the performance variations with different GPU layer offloads. - Alert: llamafile v0.8.2 Drops: The release of llamafile version 0.8.2 by developer K introduces performance enhancements for K quants, with guidance for integrating this update given in an issue comment.

- Openelm and llama.cpp Integration Hurdles: A developer's quest to blend Openelm with

llama.cppappears in a draft GitHub pull request, with the primary obstacle pinpointed tosgemm.cppwithin the pull request discussion. - Podman Container Tactics for llamafile Deployment: A workaround involving shell scripting was shared to address issues encountered when deploying llamafile with Podman containers, suggesting a potential conflict with

binfmt_miscin the context of multi-architecture format support.

LLM Perf Enthusiasts AI Discord

- Spreadsheets Meet AI: A tweet discussed the potential of AI in tackling the chaos of biological lab spreadsheets, suggesting AI could be pivotal in data extraction from complex sheets. However, a demonstration with what might be a less capable model did not meet expectations, highlighting a gap between concept and execution.

- GPT-4's Sibling, Not Successor: Enthusiasm bubbled over speculations around GPT-4's upcoming release; however, it's confirmed not to be GPT-5. Discussion underway suggests the development might be an "agentic-tuned" version or a "GPT4Lite," promising high quality with reduced latency.

- Chasing Efficiency in AI Models: The hope for a more efficient model akin to "GPT4Lite," inspired by Haiku's performance, indicates a strong desire for maintaining model quality while improving efficiency, cost, and speed.

- Beyond GPT-3.5: The advancements in language models have outpaced GPT-3.5, rendering it nearly obsolete compared to its successors.

- Excitement and Guesswork Pre-Announcement: Anticipation heats up with predictions about a dual release featuring an agentic-tuned GPT-4 alongside a more cost-effective version, underscoring the dynamic evolution of language model offerings.

tinygrad (George Hotz) Discord

Metal Build Conundrum: Despite extensive research including Metal-cpp API, MSL spec, Apple documentation, and a developer reference, a user is struggling to understand the libraryDataContents() function in a Metal build process.

Tensor Vision: An online visualizer has been developed by a user to help others comprehend tensor shapes and strides, potentially simplifying learning for AI engineers.

TinyGrad Performance Metrics: Clarification was provided that InterpretedFlopCounters in TinyGrad's ops.py are used as performance proxies through flop count insights.

Buffer Registration Clarified: Responding to an inquiry about self.register_buffer in TinyGrad, a user mentioned that initializing a Tensor with requires_grad=False is the alternative approach in TinyGrad.

Symbolic Range Challenge: There's a call for a more symbolic approach to functions and control flow within TinyGrad, hinting at an ambition to have a rendering system that comprehends expansions of general algebraic lambdas and control statements symbolically.

Alignment Lab AI Discord

- Seeking Buzz Model Finetuning Intel: A user has inquired about the best practices for iterative sft finetuning of Buzz models, noting the current gaps in documentation and guidance.

Skunkworks AI Discord

- AI Skunkworks Shares a Video: Member pradeep1148 shared a YouTube video in the #off-topic channel, context or relevance was not provided.

AI Stack Devs (Yoko Li) Discord

- Phaser-Based AI Takes the Classroom: The AI Town community announced an upcoming live session about the intersection of AI and education, featuring the use of Phaser-based AI in interactive experiences and classroom integration. The event includes showcases from the #WeekOfAI Game Jam and insights on implementing the AI tool, Rosie.

- AI EdTech Engagement Opportunity: AI developers and educators are invited to attend the event on Monday, 13th at 5:30 PM PST, with registration available via a recent Twitter post. Attendees can expect to learn about gaming in AI learning and engage with the educational community.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #announcements (1 messages):

- Stable Artisan Joins the Discord Family: Stability AI presents Stable Artisan, a Discord bot that integrates multimodal AI capabilities, including Stable Diffusion 3 and Stable Video Diffusion for image and video generation within Discord.

- The Future is Multimodal: Stable Artisan brings a suite of editing tools such as Search and Replace, Remove Background, Creative Upscale, and Outpainting to enhance media creation on Discord.

- Accessibility and Community Engagement Enhanced: By fulfilling a popular demand, Stability AI aims to make their advanced models more accessible to the Stable Diffusion community directly on Discord.

- Embark On the Stable Artisan Experience: Discord users eager to try Stable Artisan can get started on the Stable Diffusion Discord Server in designated channels such as #1237461679286128730 and others listed in the announcement.

Link mentioned: Stable Artisan: Media Generation and Editing on Discord — Stability AI: One of the most frequent requests from the Stable Diffusion community is the ability to use our models directly on Discord. Today, we are excited to introduce Stable Artisan, a user-friendly bot for m...

Stability.ai (Stable Diffusion) ▷ #general-chat (877 messages🔥🔥🔥):

- SD3 Weights Discussion: Members discussed at length their views on whether Stability AI will release SD3 open-source weights and whether the current API access indicates the model is complete. The consensus varies, with some arguing for financial motives behind the API-only access and others suggesting the model will be open-sourced after further refinement.

- Community Engagement with Model Versions: There was discussion about the usefulness of various Stable Diffusion model versions, such as SDXL, and community contributions like Lora models and ControlNets. Different perspectives were shared on whether SDXL has reached its limits and if the open-source community additions significantly enhance its capabilities.

- Video Generation Capability Enquiry: A user inquired about the capability of generating videos from prompts, specifically if Discord allows this or if it's solely web-based. It was clarified that while it's possible with tools like Stable Video Diffusion, doing so directly on Discord might require a paid service like Artisan.

- 360-degree Image Generation Requests: One member sought advice on generating a 360-degree runway image, sharing several links to tools and methods that might produce such an image. The user was exploring options such as web tools, specific GitHub repositories, and advice from Reddit but was still seeking a clear solution.

- Execution Errors and Quick Support Requests: Users reported issues like the "DLL load failed while importing bz2" error when running webui-user.bat, seeking solutions in channels specifically dedicated to technical support. Conversations were brisk and to the point, with some users preferring direct assistance over formalities.

- Stop Killing Games: no description found

- Reddit - Dive into anything: no description found

- #NeuraLunk's Profile and Image Gallery | OpenArt: Free AI image generator. Free AI art generator. Free AI video generator. 100+ models and styles to choose from. Train your personalized model. Most popular AI apps: sketch to image, image to video, in...

- ComfyUI-SAI_API/api_cat_with_workflow.png at main · Stability-AI/ComfyUI-SAI_API: Contribute to Stability-AI/ComfyUI-SAI_API development by creating an account on GitHub.

- Skybox AI: Skybox AI: One-click 360° image generator from Blockade Labs

- GitHub - tjm35/asymmetric-tiling-sd-webui: Asymmetric Tiling for stable-diffusion-webui: Asymmetric Tiling for stable-diffusion-webui. Contribute to tjm35/asymmetric-tiling-sd-webui development by creating an account on GitHub.

- GitHub - mix1009/model-keyword: Automatic1111 WEBUI extension to autofill keyword for custom stable diffusion models and LORA models.: Automatic1111 WEBUI extension to autofill keyword for custom stable diffusion models and LORA models. - mix1009/model-keyword

- GitHub - lucataco/cog-sdxl-panoramic-inpaint: Attempt at cog wrapper for Panoramic SDXL inpainted image: Attempt at cog wrapper for Panoramic SDXL inpainted image - lucataco/cog-sdxl-panoramic-inpaint

- Donald Trump ft Vladimir Putin - It wasn't me #trending #music #comedy: no description found

- GitHub - AUTOMATIC1111/stable-diffusion-webui: Stable Diffusion web UI: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

Perplexity AI ▷ #announcements (2 messages):

- Perplexity Powers SoundHound's Voice AI: Perplexity is teaming up with SoundHound, a leader in voice AI. This partnership will bring real-time web search to voice assistants in cars, TVs, and IoT devices.

- Incognito Mode and Citation Previews Go Live: Users can now ask questions anonymously with incognito search, with queries disappearing after 24 hours for privacy. Improved citations offer source previews; available on Perplexity and soon on mobile.

Link mentioned: SoundHound AI and Perplexity Partner to Bring Online LLMs to Next Gen Voice Assistants Across Cars and IoT Devices: This marks a new chapter for generative AI, proving that the powerful technology can still deliver optimal results in the absence of cloud connectivity. SoundHound’s work with NVIDIA will allow it to ...

Perplexity AI ▷ #general (715 messages🔥🔥🔥):

- In Search of Answers: Members reported that Pro Search is not providing internet search results or citing sources, unlike the normal model. A bug has been reported, and users are turning off the Pro mode as a temporary workaround.

- Pro Search Bug Acknowledged: The perplexity team is aware of the Pro Search issues, and users are directed towards bug-report status updates.

- Concerns Over Opus Limitations: Users expressed frustration over Opus being limited to 50 uses per day on Perplexity, questioning the transparency and purported temporary nature of the restriction. The community discussed alternatives and potential workarounds using other models or platforms.

- Data Privacy Settings Clarified: A discussion about the data privacy settings in Perplexity revealed that turning off "AI Data retention" prevents sharing information between threads, considered preferable for privacy.

- Assistance for Business Collaboration Inquiry: In search of business development contacts at Perplexity, a user was directed to email support[@]perplexity.ai cc: a tagged team member for appropriate direction.

- Tweet from Perplexity (@perplexity_ai): @monocleaaron Yep! — If you revisit the Thread after 24 hours, it'll expire and will be deleted in 30 days.

- OpenAI plans to announce Google search competitor on Monday, sources say | Forth: OpenAI plans to announce its artificial intelligence-powered search product on Monday, according to two sources familiar with the matter, raising the stakes in its competition with search king Google....

- Sam Altman shoots down reports of search engine launch ahead of Google I/O: OpenAI denies plans about a search product launch for Monday.

- Reddit - Dive into anything: no description found

Perplexity AI ▷ #sharing (15 messages🔥):

- Reminder to Make Threads Shareable: A prompt was provided reminding users to ensure their threads are shareable, alongside an instruction attachment. See the thread reminder here.

- Exploration of Radioactive Bananas: A user shared a link about the radioactivity of bananas, asking if bananas are radioactive.

- Discovering the Nature of Rings: Someone inquired about the definition or nature of a ring through a shared link: What is a ring?.

- Interest in Server Creation: A user expressed interest in creating a server by sharing a related link: Crear un servidor.

- Launch of Natron Energy's New Page Revealed: Information about Natron Energy's launch was provided through a shared URL. Check out Natron Energy's new launch.

- Insights into Publishing on Medium: A user sought to learn more about publishing content on Medium, indicated by a shared perplexity search link.

Perplexity AI ▷ #pplx-api (9 messages🔥):

- API Feature Inquiry: A member inquired about whether the API will return a "related" object with related items, akin to the feature available in the web/mobile search.

- Model Version Update Noted: An update has been mentioned regarding the page stating the model version as 70b.

- Difficulty with Output Formatting Instructions: Users are discussing that the latest online models are struggling with adhering to output formatting instructions, such as phrasing and list-making. They are seeking recommendations for effective prompt design.

- Inconsistencies between API and Perplexity Labs: Members have reported significant deviations in response quality when using the same prompt and model between the API and Perplexity Labs. It was acknowledged that they are separate entities.

- Clarity Sought on API vs. Labs Outputs: A member expressed interest in acquiring official clarification on why there might be inconsistencies between the two platforms even when using the same models and prompts.

Unsloth AI (Daniel Han) ▷ #general (476 messages🔥🔥🔥):

- Unsloth Studio's Delays and Upcoming Release: Unsloth Studio's release has been delayed due to other commitments related to phi and llama, with the team being about 50% done and planning to focus on it after tending to new releases.

- Inference Speed and Variety of Optimizers in Unsloth: While the Unsloth notebook mentions "adamw_8bit" optimization, there was confusion on how to specify other optimizer options for training. It's suggested to consult Hugging Face documentation for a list of valid strings for optimizers.

- Training vs Inference Focus for AI Development: The team stated that they're prioritizing training over inference as the field of inference is highly competitive. They mentioned that significant progress has been made on speeding up training in their open-source work.

- Difficulties with Long Context Models: Users sarcastically discussed the usefulness of long context models, with one mentioning an attempt by a different project to create a model with up to 10M context length. There is skepticism over the practical application and effective evaluation of such models.

- Discussions on Costs and Quality of Datasets: Conversations amongst users revealed differing opinions on the costs associated with acquiring high-quality datasets for training models, especially in regards to instruct tuning and synthetic data generation.

- mustafaaljadery/gemma-2B-10M · Hugging Face: no description found

- Replete-AI/code_bagel · Datasets at Hugging Face: no description found

- Tweet from RomboDawg (@dudeman6790): Ok so what do think of this idea? Bagel dataset... But for coding 🤔

- Tweet from ifioravanti (@ivanfioravanti): Look at this! Llama-3 70B english only is now at 1st 🥇 place with GPT 4 turbo on @lmsysorg Chatbot Arena Leaderboard🔝 I did some rounds too and both 8B and 70B were always the best models for me. ...

- Reddit - Dive into anything: no description found

- The Simpsons Homer Simpson GIF - The Simpsons Homer Simpson Good Bye - Discover & Share GIFs: Click to view the GIF

- Samurai Champloo GIF - Samurai Champloo Head Bang - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- gradientai/Llama-3-8B-Instruct-Gradient-4194k · Hugging Face: no description found

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - coqui-ai/TTS: 🐸💬 - a deep learning toolkit for Text-to-Speech, battle-tested in research and production: 🐸💬 - a deep learning toolkit for Text-to-Speech, battle-tested in research and production - coqui-ai/TTS

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- chargoddard/commitpack-ft-instruct · Datasets at Hugging Face: no description found

- no title found: no description found

- Tweet from RomboDawg (@dudeman6790): Thank you to this brave man. He did what i couldnt. I tried and failed to make a codellama model. But he made one amazingly. Ill be uploading humaneval score to his model page soon as a pull request o...

- Stop Sign GIF - Stop Sign Red - Discover & Share GIFs: Click to view the GIF

- Capybara Let Him Cook GIF - Capybara Let him cook - Discover & Share GIFs: Click to view the GIF

- teknium/OpenHermes-2.5 · Datasets at Hugging Face: no description found

- No way to get ONLY the generated text, not including the prompt. · Issue #17117 · huggingface/transformers: System Info - `transformers` version: 4.15.0 - Platform: Windows-10-10.0.19041-SP0 - Python version: 3.8.5 - PyTorch version (GPU?): 1.10.2+cu113 (True) - Tensorflow version (GPU?): 2.5.1 (True) - ...

- How AI was Stolen: CHAPTERS:00:00 - How AI was Stolen02:39 - A History of AI: God is a Logical Being17:32 - A History of AI: The Impossible Totality of Knowledge33:24 - The Lea...

- Emotional Damage GIF - Emotional Damage Gif - Discover & Share GIFs: Click to view the GIF

Unsloth AI (Daniel Han) ▷ #random (19 messages🔥):

- Aspiring Startup Dreams: A member is working on a multi-user blogging platform with features like comment sections, content summaries, video scripts, blog generation, content checking, and anonymous posting. The community suggested conducting market research, identifying unique selling points, and to ensure there's a customer base willing to pay before proceeding with the startup idea.

- Words of Caution for Startups: Another member advised that before building a product, one should find a market of people willing to pay for it to avoid building something without product/market fit. Building to learn is okay, but startups require a clear path to profitability.

- Reddit Community Engagement: Link shared to Reddit's r/LocalLLaMA where a humor post discusses "Llama 3 8B extended to 500M context," jokingly illustrating the challenges in finding extended context for AI.

- Emoji Essentials in Chat: The chat includes members discussing the need for new emojis representing reactions like ROFL and WOW. Efforts to source suitable emojis are underway, with agreements to communicate once options are found.

Link mentioned: Reddit - Dive into anything: no description found

Unsloth AI (Daniel Han) ▷ #help (113 messages🔥🔥):

- Colab Notebooks to the Rescue: Unsloth AI has provided Google Colab notebooks tailored for various AI models, helping users with their training settings and model fine-tuning. Users are directed to these resources for executing their projects with models like Llama3.

- The Unsloth Multi-GPU Dilemma: Currently, Unsloth AI doesn't support multi-GPU configurations, much to the chagrin of users with multiple powerful GPUs. Although it's in the roadmap, multi-GPU support isn't a priority over single-GPU setups due to Unsloth's limited manpower.

- Grappling with GPU Memory: Users are trying to fine-tune LLM models like llama3-70b, but hit the wall with CUDA out of memory errors. Suggestions include using CPU for certain operations with environment variable tweaks.

- Finetuning Frustrations and Friendly Fire: Queries about fine-tuning using different datasets and LLMs abound, with Unsloth's dev team and community members providing guidance on dataset format transformations and sharing helpful resources such as relevant YouTube tutorials.

- Fine-Tuning Frameworks and Fervent Requests: Detailed discussions on fine-tuning procedures with Unsloth's framework are complemented by requests for features and clarifications on VRAM requirements for models like llama3-70-4bit. The community shares insights, links, and tips on addressing common problems.

- Finetune Llama 3 with Unsloth: Fine-tune Meta's new model Llama 3 easily with 6x longer context lengths via Unsloth!

- Llama 3 Fine Tuning for Dummies (with 16k, 32k,... Context): Learn how to easily fine-tune Meta's powerful new Llama 3 language model using Unsloth in this step-by-step tutorial. We cover:* Overview of Llama 3's 8B and...

- GitHub - facebookresearch/xformers: Hackable and optimized Transformers building blocks, supporting a composable construction.: Hackable and optimized Transformers building blocks, supporting a composable construction. - facebookresearch/xformers

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- How I Fine-Tuned Llama 3 for My Newsletters: A Complete Guide: In today's video, I'm sharing how I've utilized my newsletters to fine-tune the Llama 3 model for better drafting future content using an innovative open-sou...

- teknium/OpenHermes-2.5 · Datasets at Hugging Face: no description found

- Google Colab: no description found

- Mistral Fine Tuning for Dummies (with 16k, 32k, 128k+ Context): Discover the secrets to effortlessly fine-tuning Language Models (LLMs) with your own data in our latest tutorial video. We dive into a cost-effective and su...

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Quantization: no description found

Unsloth AI (Daniel Han) ▷ #showcase (10 messages🔥):

- Exploring Ghost 3B Beta's Understanding: Ghost 3B Beta, in its early training stage, produces responses in Spanish explaining Einstein's theory of relativity in simplistic terms for a twelve-year-old. The response highlighted Einstein's revolutionary concepts, describing how motion can affect the perception of time and introducing the idea that space is not empty, but filled with various fields influencing time and space.

- Debating Relativity's Fallibility: In Portuguese, Ghost 3B Beta discusses the possibility of proving Einstein's theory of relativity incorrect, acknowledging that while it's a widely accepted mathematical theory, some scientists critique its ability to explain every phenomenon or its alignment with quantum theory. However, it mentions that the theory stands strong with most scientists agreeing on its significance.

- Stressing the Robustness of Relativity: The same question posed in English received an answer emphasizing the theory of relativity as a cornerstone of physics with extensive experimental confirmation. Ghost 3B Beta acknowledges that while the theory is open to scrutiny, no substantial evidence has surfaced to refute it, showcasing the ongoing process of scientific verification.

- Ghost 3B Beta's Model Impresses Early: An update by lh0x00 reveals that Ghost 3B Beta's model response at only 3/5 into the first stage of training (15% of total progress) is already showing impressive results. This showcases the AI's potential in understanding and explaining complex scientific theories.

- ReplyCaddy Unveiled: User dr13x3 introduces ReplyCaddy, a fine-tuned Twitter dataset and tiny llama model aimed at assisting with customer support messages, which is now accessible at hf.co. They extend special thanks to the Unsloth team for their rapid inference support.

Link mentioned: Reply Caddy - a Hugging Face Space by jed-tiotuico: no description found

LM Studio ▷ #💬-general (150 messages🔥🔥):

- In Search of ROCm for Linux: A member inquired about the existence of a ROCm version for Linux, and another confirmed that there currently isn't one available.

- Memory Limitations for AI Models: A user expressed issues with an "unable to allocate backend buffer" error while trying to use AI models, which another member diagnosed as being due to insufficient RAM/VRAM.

- Local Model Use Requires Ample Resources: It was highlighted that local models require at least 8GB of vram and 16GB of ram to be of any practical use.

- Searchable Model Listing Inquiry: A suggestion was made to use "GGUF" in the search bar within LM Studio for combing through available models, as the default search behavior requires input to display results.

- Stable Diffusion Model Not Supported by LM Studio: A user faced difficulties running the Stable Diffusion model in LM Studio, with another member clarifying that these models are not supported there. For local use, they recommended searching Discord for alternative solutions.

- PDF Documents Handling in LM Studio: When asked if LM Studio supports chatting with bots using pdf documents, a member explained that while LM Studio does not have such capabilities, users can copy and paste text from the documents, as RAG (Retrieval Augmented Generation) is not possible within LM Studio. Details on how to process documents for language models were also provided, including steps such as document loading, splitting, and using embedding models to create a vector database (source).

- Quantization Assistance and Resource Sharing: Discussion occurred regarding the tools and resources needed to quantize models such as converting f16 versions of the llama3 model into Q6_K quants. A member shared a useful guide from Reddit for a concise overview of gguf quantization methods (source) and another sourced from Hugging Face's datasets explaining LLM Quantization Impact (source).

- Vector Embedding and LLM Models Used Concurrently: In response to a question on whether vector embedding models and LLM models can be used simultaneously, it was confirmed that both can be loaded at the same time, allowing for efficient processing of large documents, such as a 2000-page text for RAG purposes.

- Proposal for AI Broker API Standard: A developer introduced the idea of a new API standard for AI model searching and download which could unify service providers like Hugging Face and serverless AI like Cloudflare AI, facilitating easier model and agent discovery for apps like LM Studio. They encouraged participation in the development of this standard on a designated GitHub repository (source).

- GGUF My Repo - a Hugging Face Space by ggml-org: no description found

- bartowski/Meta-Llama-3-70B-Instruct-GGUF · Hugging Face: no description found

- Q&A with RAG | 🦜️🔗 LangChain: Overview

- Shamus/mistral_ins_clean_data · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- Aha GIF - Aha - Discover & Share GIFs: Click to view the GIF

- no title found: no description found

- GitHub - openaibroker/aibroker: Open AI Broker API specification: Open AI Broker API specification. Contribute to openaibroker/aibroker development by creating an account on GitHub.

- GitHub - jodendaal/OpenAI.Net: OpenAI library for .NET: OpenAI library for .NET. Contribute to jodendaal/OpenAI.Net development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

- christopherthompson81/quant_exploration · Datasets at Hugging Face: no description found

LM Studio ▷ #🤖-models-discussion-chat (99 messages🔥🔥):

- LLM Integration Possibilities: A member pondered the potential of LLMs to generate stories with accompanying AI-generated visuals solely based on a textual prompt about a "banker frog with magical powers." The capability was confirmed to some extent with GPT-4.

- Model Performance Discussions: The L3-MS-Astoria-70b model was reported to deliver high-quality prose and provide competition to Cmd-R, although context size limitations might drive some users back to QuartetAnemoi.

- Understanding Quant Quality: A community member compared quant (q6 vs. q8) accuracy claims to the Apple Newton's handwriting recognition, suggesting that even if a q6 is nearly as good as q8, larger tasks might reveal significant quality drops.

- GPU Compatibility Issues for AMD CPUs: Users reported issues with GPU Offload of models like lmstudio-llama-3 on AMD CPU systems, with discussions on compatibility and hardware constraints like VRAM and RAM limitations.

- LLM Model Variants Clarified: Clarity was sought on differences between the numerous Llama-3 models; the distinction largely boils down to quantization efforts by different contributors, with advice to prefer models by reputable names or those with higher downloads.

- deepseek-ai/DeepSeek-V2 · Hugging Face: no description found

- Text Embeddings | LM Studio: Text embeddings are a way to represent text as a vector of numbers.

- Fix Futurama GIF - Fix Futurama Help - Discover & Share GIFs: Click to view the GIF

- Pout Christian Bale GIF - Pout Christian Bale American Psycho - Discover & Share GIFs: Click to view the GIF

- Machine Preparing GIF - Machine Preparing Old Man - Discover & Share GIFs: Click to view the GIF

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

LM Studio ▷ #🧠-feedback (8 messages🔥):

- Mac Studio RAM Capacity Issue: A member reported an issue running llama1.6 Mistral or Vicuña on a 192GB Mac Studio that can successfully run Llama3 70B but gives an error for the other models. The error message indicates a RAM capacity problem, with "ram_unused" at "10.00 GB" and "vram_unused" at "9.75 GB".

- Granite Model Load Failure on Windows: Upon attempting to load the NikolayKozloff/granite-3b-code-instruct-Q8_0-GGUF model, a member got an error related to an "unknown pre-tokenizer type: 'refact'". The error message specifies a failure to load the model vocabulary in llama.cpp, which includes system specifications like "ram_unused" at "41.06 GB" and "vram_unused" at "6.93 GB".

- Clarification on Granite Model Support: In response to the previous error, another member clarified that Granite models are unsupported in llama.cpp and consequently will not work at all within that context.

- LM Studio Installer Critique on Windows: A member expressed dissatisfaction with the LM Studio installer on Windows, lamenting the lack of user options to select the installation directory and describing the forced installation as "not okay".

- Seeking Installation Alternatives: The concerned member further sought guidance on how to choose an installation directory for LM Studio on Windows, highlighting the need for user control during the installation process.

LM Studio ▷ #📝-prompts-discussion-chat (1 messages):

- RAG Architecture Discussed for Efficient Document Handling: A member discussed various Retrieval-Augmented Generation (RAG) architectures that involve chunking documents and combining them with additional data like embeddings and location information. They suggested that this approach could be used to limit the scope of the document and mentioned reranking based on cosine similarity as a potential method for analysis.

LM Studio ▷ #🎛-hardware-discussion (46 messages🔥):

- Understanding the Hardware Hurdles for LLMs: Members discussed that VRAM is the main limiting factor when running large language models like Llama 3 70B, with only lower quantizations being manageable depending on the VRAM available.

- Intel's New LLM Acceleration Library: Intel's introduction of ipex-llm, a tool to accelerate local LLM inference and fine-tuning on Intel CPUs and GPUs, was shared by a user, noting that it currently lacks support for LM Studio.

- AMD vs Nvidia for AI Inference: The discourse included views that Nvidia currently offers better value for VRAM, with products like the 4060ti and used 3090 being highlighted as best for 16/24GB cards, despite some anticipation for AMD's potential new offerings.

- Challenges with Multi-Device Support Across Platforms: Links and discussions indicated obstacles in utilizing AMD and Nvidia hardware simultaneously for computation, suggesting that although theoretically possible, such integration requires significant technical hurdles to be overcome.

- ZLUDA and Hip Compatibility Talks: Conversations touched on the limited lifecycle of projects like ZLUDA, which aimed to bring CUDA compatibility to AMD devices, and the potential for developer tools to improve, given the updates and recent maintenance releases on the ZLUDA repository.

- Native Intel IPEX-LLM Support · Issue #7190 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. [X ] I am running the latest code. Development is very rapid so there are no tagged versions as of now. ...

- GitHub - intel-analytics/ipex-llm: Accelerate local LLM inference and finetuning (LLaMA, Mistral, ChatGLM, Qwen, Baichuan, Mixtral, Gemma, etc.) on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max). A PyTorch LLM library that seamlessly integrates with llama.cpp, Ollama, HuggingFace, LangChain, LlamaIndex, DeepSpeed, vLLM, FastChat, etc.: Accelerate local LLM inference and finetuning (LLaMA, Mistral, ChatGLM, Qwen, Baichuan, Mixtral, Gemma, etc.) on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max)...

- GitHub - vosen/ZLUDA: CUDA on AMD GPUs: CUDA on AMD GPUs. Contribute to vosen/ZLUDA development by creating an account on GitHub.

- Llama.cpp not working with intel ARC 770? · Issue #7042 · ggerganov/llama.cpp: Hi, I am trying to get llama.cpp to work on a workstation with one ARC 770 Intel GPU but somehow whenever I try to use the GPU, llama.cpp does something (I see the GPU being used for computation us...

LM Studio ▷ #🧪-beta-releases-chat (4 messages):

- Seeking the Right Translation Model: A user inquired about a model for translation, and another member recommended checking out Meta AI's work, which includes robust translation models for over 100 languages.

- Model Recommendations for Translation: Specific models NLLB-200, SeamlessM4T, and M2M-100 were suggested for translation tasks, all stemming from Meta AI's developments.

- Request for bfloat16 Support in Beta: A member expressed interest in beta testing with bfloat16 support, indicating a potential future direction for experimentation.

LM Studio ▷ #memgpt (6 messages):

- Log Path Puzzles Member: A member was experiencing difficulties changing the log path from

C:\tmp\lmstudio-server-log.txttoC:\Users\user.memgpt\chromafor server logs, which was preventing MemGPT from saving to its archive. - Misunderstood Server Logs: Another member clarified that the server logs are not an archive or a chroma DB, they're cleared on every restart, and suggested creating a symbolic link (symlink) if file access from a different location was still desired.

- MemGPT Saving Issue Resolved with Kobold: The initial member resolved their issue by switching to Kobold, which then saved logs correctly, expressing gratitude for the assistance provided.

LM Studio ▷ #amd-rocm-tech-preview (2 messages):

- Compatibility Queries for AMD GPUs: A member raised a question about why RX 7600 is included on AMD's compatibility list while the 7600 XT is absent. Another member speculated that it could be due to the timing of the lists' updates, as the XT version was released about six months later.

LM Studio ▷ #crew-ai (12 messages🔥):

- Token Generation Troubles with CrewAI: A member reported an issue with incomplete token generation when using CrewAI, whereas Ollama and Groq functioned properly with the same setup. CrewAI seemed to stop output after ~250 tokens although it should handle up to 8192.

- Max Token Settings Puzzle: Altering the max token settings did not resolve the member's issue with incomplete outputs, prompting them to seek assistance.

- Comparing Quantizations Across Models: In a discussion about the problem, a member clarified that all models (llama 3 70b) were using q4 quantization. A lack of quantization in Groq was considered but discarded as a cause because the issue persisted despite the quantization settings.