[AINews] Anthropic launches the Model Context Protocol

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

claude_desktop_config.json is all you need.

AI News for 11/25/2024-11/26/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (202 channels, and 2684 messages) for you. Estimated reading time saved (at 200wpm): 314 minutes. You can now tag @smol_ai for AINews discussions!

Special Note: we have pruned some inactive discords, and added the Cursor discord!

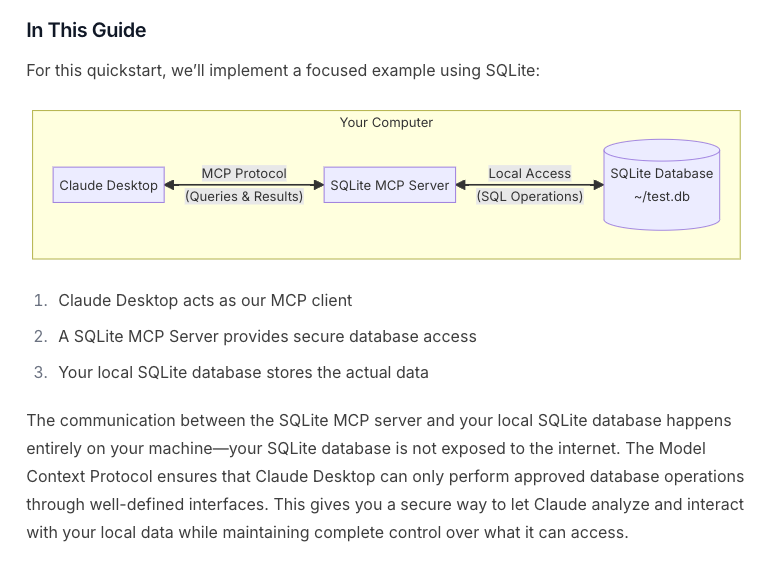

Fresh off their $4bn fundraise from Amazon, Anthropic is not stopping at visual Computer Use (our coverage here). The next step is defining terminal-level integration points for Claude Desktop to directly interface with code run on your machine. From the quickstart:

The Model Context Protocol (MCP) is an open protocol that enables seamless integration between LLM applications and external data sources and tools. Similar to the Language Server Protocol, MCP how to integrate additional context and tools into the ecosystem of AI applications. For implementation guides and examples, visit modelcontextprotocol.io.

The protocol is flexible enough to cover:

- Resources: any kind of data that an MCP server wants to make available to clients. This can include: File contents, Database records, API responses, Live system data, Screenshots and images, Log files, and more. Each resource is identified by a unique URI and can contain either text or binary data.

- Prompts: Resuable templates and workflows (including multi-step)

- Tools: everything from system operations to API integrations to running data processing tasks.

- Transports: Requests, Responses, and Notifications between clients and servers via JSON-RPC 2.0, including support for server-to-client streaming and other custom transports (WebSockets/WebRTC is not mentioned... yet)

- Sampling: allows servers to request LLM completions through the client, enabling sophisticated agentic behaviors (including rating costPriority, speedPriority, and intelligencePriority, implying Anthropic will soon offer a model router) while maintaining security and privacy.

The docs make solid recommendations on security considerations, testing, and dynamic tool discovery.

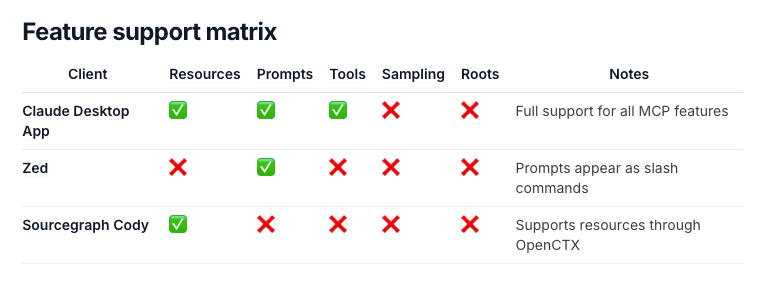

The launch clients show an interesting array of these feature implementations:

The launch partners Zed, Sourcegraph, and Replit all reviewed it favorably, however others were a bit more critical or confused. Hacker News is already recalling XKCD 927.

Glama.ai has already written a good guide/overview to MCP, and both Alex Albert and Matt Pocock have nice introductory videos.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

1. MCP Release and Reaction: Anthropic's Model Context Protocol (MCP)

- Introducing MCP by Anthropic: @alexalbert__ discusses MCP, an open standard for connecting LLMs to data resources via a single protocol. It calls attention to the complexities and critiques its narrow provider focus.

- Skepticism Around Adoption: @hwchase17 compares MCP to earlier OpenAI innovations, questioning its provider exclusivity and potential for becoming a widespread standard.

- Developer Insights: @pirroh reflects on MCP's similarities with web standards for ensuring interoperability across diverse AI agents.

2. Excitement Around Claude and AI Capability Discussions

- Potential of Claude in AI Integrations: @AmandaAskell is querying the community for Claude prompts that could enhance task-specific performance and insights.

- Capability and Integrations: @skirano highlights Claude's capability to integrate locally stored files, presenting it as a powerful tool for API-based GUI automation.

3. NeurIPS and Event Innovations

- NeurIPS 2024 Event Planning: @swyx announces Latent Space LIVE, a novel side event with unique formats like "Too Hot For NeurIPS" and "Oxford Style Debates," aiming for meaningful interactions and audience engagement.

- Registration Adjustments and Speaker Calls: @swyx clarifies registration confusion, urging for new speaker applications amidst event planning.

4. Investments and Growth in Cloud-AI Collaborations

- Amazon's Strategic Moves with Anthropic: @andrew_n_carr discusses Amazon's strategic focus on Anthropic, emphasizing computational collaborations via AWS's Trainium chips.

- Infrastructure Impact: @finbarrtimbers shares thoughts on the potential of Trainium, expressing hope for developments that match Google's TPUs.

5. Open Source Initiatives and Innovations in Model Training

- NuminaMath Dataset Licensing: @_lewtun celebrates the NuminaMath dataset's new Apache 2.0 license, indicating a significant open-source advancement in math problem datasets.

- AI Model Developments: Tweets like @TheAITimeline's synopsis of current papers highlight innovations such as LLaVA-o1 and Marco-o1, contributing to reasoning model discussions.

Memes and Humor

- AI Capability Ducks: @arankomatsuzaki humorously frames AI trends with playful listicles of AI applications.

- Unlikely Scenarios: @mickeyxfriedman shares a whimsical interaction, blending humor with unexpected real-life moments.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Marco-o1 Hits 83% on Cyber Tests: 7B Model Chain-of-Thought Breakthrough

- macro-o1 (open-source o1) gives the cutest AI response to the question "Which is greater, 9.9 or 9.11?" :) (Score: 443, Comments: 87): Marco-o1, an open-source AI model, demonstrated chain-of-thought reasoning by answering a numerical comparison question between 9.9 and 9.11. Due to lack of additional context in the post body, specific details about the response content or the model's implementation cannot be included in this summary.

- Users noted the model exhibits overthinking behavior similar to those with autism, with many commenters relating to its detailed thought process. The model's response to a simple "Hi!" garnered significant attention with 229 upvotes.

- Technical discussion revealed the model runs on M1 Pro chip using Ollama, with a system prompt enabling chain-of-thought reasoning. Users clarified this is the CoT model, not the unreleased MCTS model.

- The model performs best with mathematical and simple queries, showing entertaining but sometimes unnecessary verbose reasoning. Several users noted it struggles with basic spelling tasks like counting letters in "strawberry", suggesting potential training limitations.

- Testing LLM's knowledge of Cyber Security (15 models tested) (Score: 72, Comments: 17): A benchmark test of 421 CompTIA practice questions across 15 different LLM models shows 01-preview leading with 95.72% accuracy, followed by Claude-3.5-October at 92.92% and O1-mini at 92.87%. The test reveals some unexpected results, with marco-o1-7B scoring lower than expected at 83.14% (behind Qwen2.5-7B's 83.73%), and Hunyuan-Large-389b underperforming at 88.60% despite its larger size.

- Marco-o1 model's performance is explained by its base being Qwen2-7B-Instruct (not 2.5), and it currently lacks proper search inference code, making it essentially a CoT finetune implementation.

- Users suggest testing additional models including WhiteRabbitNeo specialized models and Deepseek with its deep thinker version, while others note the importance of considering whether the CompTIA questions might be in the training sets.

- Discussion highlights the significance of focusing on security testing for AI models, with commenters noting this sector needs more attention as developers often build without security considerations.

Theme 2. OuteTTS-0.2-500M: New Compact Text-to-Speech Model Released

- OuteTTS-0.2-500M: Our new and improved lightweight text-to-speech model (Score: 172, Comments: 29): OuteTTS released version 0.2 of their 500M parameter text-to-speech model. The post lacks additional context about specific improvements or technical details.

- The model supports voice cloning via reference audio, with documentation available on HuggingFace, though users may need to finetune for voices outside the Emilia dataset.

- Users report the model performs well despite its small 500M parameter size, though some experience slow generation times (~3 minutes for 14 seconds of audio) and attention mask errors on the Gradio demo.

- Discussion around licensing restrictions emerged, as the model's non-commercial license (inherited from the Emilia dataset) potentially limits usage in monetized content like YouTube videos, despite similar models like Whisper using web-scraped training data.

Theme 3. Winning with Small Models: 1.5B-3B LLMs Show Impressive Results

- These tiny models are pretty impressive! What are you all using them for? (Score: 28, Comments: 3): Tiny LLMs ranging from 1.5B to 3B parameters demonstrated impressive capabilities in handling multiple function calls, with Gemma-2B successfully executing 6 parallel function calls while others managed 4 out of 6. The tested models included Gemma 2B (2.6GB), Llama-3 3B (3.2GB), Ministral 3B (3.3GB), Qwen2.5 1.5B (1.8GB), and SmolLM2 1.7B (1.7GB), all showing potential for domain-specific applications.

- Local deployment capabilities of these tiny models offer significant privacy advantages and reduced cloud infrastructure dependencies, making them practical for sensitive applications.

- 3B parameter models prove sufficient for common use cases like grammar checking, text summarization, code completion, and personal assistant tasks, challenging the notion that larger models are always necessary.

- The efficiency of these smaller models demonstrates successful parameter optimization, achieving targeted functionality without the resource demands of larger models.

- Teleut 7B - Tulu 3 SFT replication on Qwen 2.5 (Score: 55, Comments: 16): A new 7B parameter LLM called Teleut trained on a single 8xH100 node using AllenAI's data mixture achieves competitive performance against larger models like Tülu 3 SFT 8B, Qwen 2.5 7B, and Ministral 8B across multiple benchmarks including BBH (64.4%), GSM8K (78.5%), and MMLU (73.2%). The model is available on Hugging Face and demonstrates that state-of-the-art performance can be replicated using publicly available training data from AllenAI.

- MMLU performance of 76% at 7B parameters is noted as remarkable since this level was previously achieved only by 32/34B models, though some users express skepticism about the accuracy of these comparative metrics.

- Users highlight that Qwen 2.5 Instruct outperforms Teleut in most metrics, raising questions about the actual improvements over the base model and the significance of the results.

- The community appreciates AllenAI's contribution to open data, with Retis Labs offering additional compute resources for further research based on community demand.

Theme 4. Major LLM Development Tools Released: SmolLM2 & Optillm

- Full LLM training and evaluation toolkit (Score: 41, Comments: 3): HuggingFace released their complete SmolLM2 toolkit under Apache 2.0 license at smollm, which provides comprehensive LLM development tools including pre-training with nanotron, evaluation with lighteval, and synthetic data generation with distilabel. The toolkit also includes post-training scripts using TRL and the alignment handbook, plus on-device tools with llama.cpp for tasks like summarization and agents.

- Users inquired about minimum hardware requirements for running the SmolLM2 toolkit, though no official specifications were provided in the discussion.

- Beating o1-preview on AIME 2024 with Chain-of-Code reasoning in Optillm (Score: 54, Comments: 7): Optillm implemented chain-of-code (CoC) reasoning which outperformed OpenAI's o1-preview on AIME 2024 (pass@1) metrics using base models from Anthropic and DeepMind. The implementation, available in their open-source optimizing inference proxy, builds on research from the Chain of Code paper and competes with recent releases from DeepSeek, Fireworks AI, and NousResearch.

- Chain-of-Code implementation follows a structured approach: starting with initial code generation, followed by direct execution, then up to 3 code fix attempts, and finally LLM-based simulation if previous steps fail.

- The OpenAI o1-preview model's innovation is characterized more by accounting than capability improvements, with its architecture potentially incorporating multiple agents and infrastructure rather than a single model improvement.

- Google and Anthropic are predicted to outperform OpenAI's next-generation models, with benchmark reliability being questioned due to the ease of training specifically for benchmarks and obscuring distribution through alignment techniques.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Chinese LLMs surpass Gemini in benchmarks: StepFun & Qwen

- Chinese LLMs catch up with US LLMs: Stepfun ranks higher than Gemini and Qwen ranks higher than 4o (Score: 174, Comments: 75): Chinese language models demonstrate competitive performance with Stepfun ranking above Google's Gemini and Qwen surpassing Claude 4.0 according to recent benchmarks. The specific metrics and testing methodology for these rankings were not provided in the source material.

- Chinese AI models are showing strong real-world performance, with users confirming that models like Deepseek Coder and R1 are competitive though not superior to OpenAI and Anthropic. Multiple users note that the latest experimental models offer 2M context windows.

- Users debate the quality of GPT-4 versions, with many reporting that the November version performs worse than August/May versions, particularly in text analysis tasks. Some attribute this to potential model size reduction for optimization of actual usage.

- Discussion around US-China AI competition highlights broader technological competition, with references to ASPI's Tech Tracker showing China's advancement in strategic technologies while the US maintains leads in specific sectors like AI/ML and semiconductors.

- Jensen Huang says AI Scaling Laws are continuing because there is not one but three dimensions where development occurs: pre-training (like a college degree), post-training ("going deep into a domain") and test-time compute ("thinking") (Score: 66, Comments: 9): Jensen Huang discusses three dimensions of AI scaling: pre-training (comparable to general education), post-training (domain specialization), and test-time compute (active processing). His analysis suggests continued growth in AI capabilities through these distinct development paths, countering arguments about reaching scaling limits.

- Jensen Huang's analysis aligns with NVIDIA's business interests, as each scaling dimension requires additional GPU compute resources for implementation and operation.

- The concept of AI agents emerges as another potential scaling dimension, with experts suggesting a future architecture of thousands of specialized models coordinated by a state-of-the-art controller for achieving AGI/ASI.

- Discussion emphasizes how multiple scaling approaches (pre-training, post-training, test-time, and agents) all drive increased GPU demand, supporting NVIDIA's market position.

Theme 2. Flux Video Generation & Style Transfer Breakthroughs

- Flux + Regional Prompting ❄🔥 (Score: 263, Comments: 23): Flux and Regional Prompting are mentioned in the title but no additional context or content is provided in the post body to create a meaningful summary.

- Regional Prompting with Flux workflow is now freely available on Patreon, though LoRAs currently have reduced fidelity when used with regional prompting. The recommended approach is using regional prompting for base composition, then img-to-img with LoRAs.

- A comprehensive tutorial for ComfyUI setup and usage with Flux vs SD is available on YouTube, covering installation, ComfyUI manager, default workflows, and troubleshooting common issues.

- Discussion touched on modern content monetization, with users noting how 2024's economic environment drives creators toward multiple income streams, contrasting with the early 2000s when monetization was less prevalent.

- LTX Time Comparison: 7900xtx vs 3090 vs 4090 (Score: 21, Comments: 23): The performance comparison between AMD 7900xtx, NVIDIA RTX 3090, and RTX 4090 for Flux and LTX video generation shows the 4090 significantly outperforming with total processing time of 6m15s versus 12m for the 3090 and 27m30s for the 7900xtx, with specific iteration speeds of 4.2it/s, 1.76it/s, and 1.5it/s respectively for Flux. The author notes that LTX video generation quality heavily depends on seed luck and motion intensity, with significant motion causing quality degradation, while the entire test on RunPod cost $1.32.

- Triton Flash Attention and bf16-vae optimizations can potentially improve performance, with the latter being enabled via

--bf16-vaecommand line argument. Documentation for Triton is currently limited to a GitHub Issue. - Community speculates that the upcoming NVIDIA 5090 could complete the test in approximately 3m30s, though concerns about pricing were raised.

- Discussion around VAE decoders and frame rate optimization suggests post-processing speed adjustments for better results, while the high seed sensitivity indicates potential model improvements needed in future versions.

- Triton Flash Attention and bf16-vae optimizations can potentially improve performance, with the latter being enabled via

Theme 3. IntLoRA: Memory-Efficient Model Training and Inference

- IntLoRA: Integral Low-rank Adaptation of Quantized Diffusion Models (Score: 44, Comments: 6): IntLoRA, a new quantization technique for diffusion models, focuses on adapting quantized models through low-rank updates. The technique's name combines "Integral" with "LoRA" (Low-Rank Adaptation), suggesting it deals with integer-based computations in model adaptation.

- IntLoRA offers three key advantages: quantized pre-trained weights for reduced memory in fine-tuning, INT storage for both pre-trained and low-rank weights, and efficient integer multiplication or bit-shifting for merged inference without post-training quantization.

- The technique is explained using a crayon box analogy, where quantization reduces color variations (like fewer shades of blue) and low-rank adaptation identifies the most important elements, making the model more efficient and accessible.

- IntLoRA uses an auxiliary matrix and variance matching control for organization and balance, functioning similarly to GGUFs for base models but specifically designed for diffusion model LoRAs.

Theme 4. Anthropic's Model Context Protocol for Claude Integration

- Introducing the Model Context Protocol (Score: 26, Comments: 16): Model Context Protocol launched to enable Claude integration, though no specific details were provided in the post body.

- The Model Context Protocol allows Claude to interact with local systems including file systems, SQL servers, and GitHub through simple API connections, enabling basic agent/tool functionality through the desktop app.

- Implementation requires installing via

pip install uvto run the MCP server, with setup instructions available at modelcontextprotocol.io/quickstart. A SQLite3 connection example was shared through an imgur screenshot. - Users expressed interest in practical applications, including using it to analyze and fix bug reports through GitHub repository connections.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. AI Model Shuffles Stir Up User Communities

- Cursor Cuts Long Context Mode, Users Cry Foul: Cursor's recent removal of the long context mode, particularly impacting the claude-3.5-200k version, has left users frustrated and scrambling to adjust their workflows. Speculations arise about a shift towards agent-based models, but many are unhappy with the sudden change.

- Qwen 2.5 Coder Baffles with Performance Variability: Confusion reigns as users test the Qwen 2.5 Coder, noting significant discrepancies in benchmark results between providers and local setups. This has led to tweaks in models and settings to chase consistent performance.

- GPT-4o Wows Users Amid Performance Praise: The release of

openai/gpt-4o-2024-11-20has users singing its praises, highlighting its impressive performance and positioning it as a preferred choice in the community.

Theme 2. AI Tools and Platforms Ride the Rollercoaster

- LM Studio's Model Search Limbo Leaves Users Lost: After updating to version 0.3.5, users find LM Studio's model search functionality limited, causing confusion about accessing new models unless manually searched.

- OpenRouter API Plays Hard to Get with Rate Limits: Users hit potential rate limit issues with the OpenRouter API, though some mention private agreements offering more flexibility, highlighting inconsistencies in access.

- Aider and Friends Debate Who's the Fairest IDE of All: Aider users compare it with tools like Cursor and Windsurf, debating effectiveness for coding tasks and noting that Copilot might lag behind premium options.

Theme 3. Fine-Tuners Face Trials and Tribulations

- Fine-Tuners Twist in the Wind with Command R: Users attempting to fine-tune Command R models report outputs stopping prematurely due to max_output_token limits. Hypotheses about premature EOS tokens spark discussions on dataset configurations.

- Windows Woes: Unsloth Users Wrestle with Embeddings: Users grapple with using input embeddings over IDs and face module errors on Windows, prompting suggestions to switch to WSL or Linux, per the Unsloth Notebooks guide.

- PDFs Prove Perplexing for Model Fine-Tuning: Members consider fine-tuning models with an 80-page PDF of company regulations but debate shifting to RAG methods due to challenges in data extraction and relevance.

Theme 4. Communities Collaborate, Commiserate, and Celebrate

- Prompt Hackers Unite in Weekly Study Group: Enthusiasts kick off a weekly study group focusing on prompt hacking techniques, aiming to boost coding practices ahead of hackathons and fostering collaborative learning.

- Perplexity Pro Users Bond Over Glitches and Grit: Perplexity Pro users face feature hiccups, including lost prompts and search issues, leading to shared experiences and collective troubleshooting efforts within the community.

Theme 5. Ethical Quarrels and Governance Grumbles in AI Land

- ChatGPT as Plagiarism Police? Educators Sound Off: Attempts to configure ChatGPT as a plagiarism checker ignite debates over the ethical implications and reliability of using AI for academic integrity tasks.

- Mojo's Type Confusion Leaves Developers Scratching Heads: Discussions around Mojo's type system reveal confusion between

objectandPyObject, raising concerns about dynamic typing handling and potential thread safety issues. - Notebook LM's Language Flip-Flops Frustrate Users: While some celebrate Notebook LM's new multilingual support, others express frustration over unwanted language switches in summaries, impacting usability and leading to calls for improved language control features.

PART 1: High level Discord summaries

Cursor IDE Discord

-

Cursor's Context Mode Removal: Users are frustrated with the recent removal of the long context mode in Cursor, especially the claude-3.5-200k version, disrupting their workflow.

- Some speculate that transitioning to an agent-based model may enhance context retrieval, whereas others are unhappy with the loss of previous functionalities.

- Agent Feature Challenges: Several users report issues with the agent feature in Cursor, noting unresponsive behavior and unexpected task outcomes.

- There's notable interest in implementing auto-approving agent tasks to streamline functionality.

- Cursor Development Initiatives: Developers are leveraging Cursor to build innovative projects, such as an AI-driven dating app and a dog breed learning website.

- The community actively shares ideas on potential Cursor applications, blending both personal and professional project endeavors.

- Cursor vs Windsurf Performance: Users are debating the performance and utility of Cursor versus Windsurf, seeking insights into which tool better serves developers.

- While some prefer Cursor for its capabilities, others advocate for Windsurf due to specific functionalities or personal experiences.

- Cursor Updates and User Support: There are frequent inquiries about updating to the latest Cursor version and accessing its new features, with users sharing resources and tips.

- Community members assist each other in troubleshooting issues and navigating recent changes introduced by updates.

aider (Paul Gauthier) Discord

-

Qwen 2.5 Coder Performance Confusion: Users expressed confusion over the Qwen 2.5 Coder performance, noting discrepancies in benchmark results between different providers and local setups.

- Testing with varying configurations revealed significant performance variations, prompting users to adjust models and settings for improved outcomes.

- Challenges with Local Models: Users reported difficulties running local models with Ollama, indicating poorer performance compared to cloud-hosted versions.

- The conversation highlighted the need for better configurations and suggested alternatives for running Aider models locally.

- Team Account Pricing at $30/month: The team account is priced at $30 per month, allowing for 140 O1 requests weekly and unlimited requests for other models.

- This upgrade offers increased request limits and greater flexibility in model usage, enhancing team capabilities.

- Introduction of Model Context Protocol: Anthropic announced the open-sourcing of the Model Context Protocol (MCP), a standard designed to connect AI assistants to various data systems.

- The protocol aims to replace fragmented integrations with a single universal standard, improving AI's access to essential data.

- Understanding Benchmark

error_outputs: Members inquired about the meaning oferror_outputsin benchmark results, questioning if it reflects model errors or API/network issues.

- It was clarified that this indicates errors were printed, often TimeoutErrors, and Aider will retry these occurrences.

HuggingFace Discord

-

Challenges Accessing Gated Llama-2-7b Models: Users reported difficulties in accessing gated models like meta-llama/Llama-2-7b, encountering errors related to missing files and permissions.

- Feedback included user frustration over access rejections and suggestions for using alternative, ungated models to bypass these restrictions.

- Saplings Tree Search Library: Saplings is a library designed to build smarter AI agents using an easy tree search algorithm, streamlining the creation of efficient AI agents.

- The project aims to enhance AI agent performance, with community members discussing implementation strategies and potential use cases.

- Decentralized Model Storage on Filecoin: Users are adopting Filecoin for decentralized storage of AI models, noting that storage costs have become reasonable, with nearly 1TB stored.

- This approach allows models to be fetched freely after a one-time write, improving accessibility and censorship-resistance.

- SenTrEv Sentence Transformers Evaluator: SenTrEv is a Python package for customizable evaluation of Sentence Transformers-compatible text embedders on PDF data, providing detailed accuracy and performance metrics.

- Comprehensive details are available in their LinkedIn post and the GitHub repository.

- HuggingFace TOP 300 Trending Board: The HuggingFace Trending TOP 300 Board features a dashboard for trending Spaces, Models, and Datasets.

- Key features include an AI Rising Rate and an AI Popularity Score that assess the growth potential and popularity of listed items.

Unsloth AI (Daniel Han) Discord

-

Fine-tuning Models with Unsloth: A member inquired about fine-tuning models to interact with JSON data on Bollywood actors, and others directed them to Unsloth Notebooks for user-friendly resources.

- It was suggested that using RAG could simplify the process for engaging with their scraped data, enhancing the fine-tuning workflow.

- MergeKit for Model Merging: A member recommended using MergeKit from Arcee to effectively merge pretrained large language models, aiming to improve instructional model performance.

- MergeKit provides tools for merging pretrained LLMs, as highlighted in its GitHub page.

- Shift to Multi-task Models from BERT: Discussions covered transitioning from single-task architectures like BERT, which required separate classification heads, to multi-task models such as T5 and decoder-only architectures that integrate text generation capabilities.

- This shift enables models to perform all of BERT's functions alongside text generation, streamlining model usage across tasks.

- RAG Strategy for Hybrid Retrieval: A member advocated for a RAG approach with hybrid retrieval, drawing from experience with over 500 PDFs in specialized domains like chemical R&D.

- They confirmed that this method enhances Q&A generation, even in niche fields, leveraging robust retrieval mechanisms.

- Using Embeddings in LLMs: A user sought to use input embeddings instead of input IDs when generating text with LLMs on Hugging Face, prompting discussions on the differences between embedding and tokenization.

- They were directed to example implementations in the shared Google Colab Notebook, facilitating better understanding of embedding usage.

Modular (Mojo 🔥) Discord

-

Mojo Type System Overhaul: Members discussed Mojo's type system confusions, highlighting the split between object and PyObject; PyObject maps directly to CPython types, while object may require reworking for clarity.

- Concerns were raised about dynamic typing handling and how type merging affects thread safety.

- Closure Syntax Clarity in Mojo: Participants explained that the syntax

fn(Params) capturing -> Typedenotes a closure in Mojo, with discussions on how function types are determined by origin, arguments, and return type.

- There was a comparison to Rust's indirection approaches in capturing closures.

- Vectorization vs Unrolling Tactics: Discussions compared @unroll and @parameter, noting both allow the system to find parallelism but offer different control levels.

- The consensus favored vectorize and @parameter for their richer functionality over simply using @unroll.

- Mojo's Python Superset Ambitions: Mojo aims to become a superset of Python over time, initially focusing on systems programming and AI performance features before fully supporting dynamic typing.

- GitHub issue #3808 indicates that achieving full Python compatibility is complicated by existing dynamic typing and language ergonomics issues.

- Memory Optimization in Mojo: A user shared their experience of porting a QA bot from Python to Mojo, highlighting significant memory usage reductions from 16GB to 2GB.

- Despite encountering segmentation faults during the porting process, the performance improvements enable faster research iterations.

OpenRouter (Alex Atallah) Discord

-

AI Commit Command Launches: A new CLI tool called

cmaiwas introduced to generate commit messages using the OpenRouter API with Bring Your Own Key (BYOK) functionality.- This open-source command aims to simplify the commit message process, encouraging contributions from the developer community.

- Toledo1 AI Adopts Pay-Per-Question Model: Toledo1 offers a novel AI chat experience featuring a pay-per-question model and the capability to combine multiple AIs for customized responses.

- Users can access the demo at toledo1.com and integrate the service seamlessly through its native desktop application.

- Hermes Enhancements Boost llama3.c Performance: Modifications to

llama3.cachieved an impressive 43.44 tok/s in prompt processing, surpassing other implementations utilizing Intel's MKL functions.

- The performance improvements stemmed from using local arrays for matrix calculations, significantly enhancing processing speed.

- OpenRouter API Faces Rate Limit Concerns: Discussions revealed potential rate limit issues with the OpenRouter API, although some responses indicated the existence of private agreements that offer flexibility.

- The variability in contract terms highlights OpenRouter's customized approach to partnerships with its providers.

- Gemini 1.5 Model Encounters Downtime: Users reported receiving empty responses from the Gemini 1.5 model, leading to speculation about its operational status.

- However, confirmations from some users suggest that the issue might be isolated to specific setups.

OpenAI Discord

-

ChatGPT as Plagiarism Detector: Users explored configuring ChatGPT to function as a plagiarism checker with a specific JSON output structure for academic assessments.

- However, concerns were raised regarding the ethical implications and reliability of using AI for detecting academic dishonesty.

- Positive Feedback on GPT-4o Version: The

openai/gpt-4o-2024-11-20release received admiration from members, highlighting its impressive performance.

- Users noted that GPT-4o offers enhanced capabilities, making it a preferable choice within the community.

- Integrating Custom GPT with Vertex: A member inquired about the feasibility of connecting their custom GPT model with Vertex, prompting guidance from others.

- Responses included references to OpenAI’s documentation on actions, indicating available resources for integration.

- Real-time API Use in Multimedia AI: Discussions focused on the application of real-time APIs in multimedia AI, particularly for voice recognition requiring low latency.

- Members clarified that real-time refers to processes occurring instantaneously, relevant for categorizing multimedia content.

- Memory Capabilities in AI Agents: Participants highlighted the importance of memory management in AI agents, referencing chat history and contextual understanding.

- Encouragement was given to explore OpenAI’s documentation for better leveraging memory frameworks in AI functionalities.

Perplexity AI Discord

-

Chatbot Models: Claude vs. Sonnet 3.5 vs GPT-4o: Members debated the strengths of different chatbot models, noting that Claude delivers superior outputs while Sonnet 3.5 adds more personality for academic writing. There was also interest in GPT-4o for its creative task capabilities.

- Discussions highlighted the trade-offs between output quality and personality, with some users advocating for Claude's reliability and others preferring Sonnet 3.5's engaging responses.

- Amazon Invests $4B in Anthropic: Amazon announced a substantial $4 billion investment in Anthropic, signaling strong confidence in advancing AI technologies. This funding is expected to accelerate Anthropic's research and development efforts.

- The investment aims to bolster Anthropic's capabilities in creating more reliable and steerable AI systems, fostering innovation within the AI engineering community.

- API Updates Affect Llama-3.1 Functionality: Recent API changes have impacted the functionality of llama-3.1 models, with users reporting that certain requests now return instructions instead of relevant search results. The supported models section currently only lists the three online models under pricing.

- Users noted that despite these issues, no models have been disabled yet, providing a grace period to transition as no updates are reflected in the changelog.

- Perplexity Pro Users Face Feature Issues: Several members reported issues with Perplexity Pro, particularly the online search feature, leading one user to suggest contacting support. Additionally, refreshing sessions caused loss of long prompts, raising concerns about website stability.

- These stability issues highlight the need for improvements in the platform's reliability to enhance user experience for AI Engineers relying on these tools.

- Best Black Friday VPS Deals Revealed: Members shared insights on the best Black Friday VPS deals, mentioning significant discounts such as a 50% discount on You.Com. These deals are expected to offer substantial savings for tech enthusiasts during the holiday season.

- The discussions also compared the effectiveness of various services, indicating diverse user experiences and preferences in selecting VPS providers.

LM Studio Discord

-

Limited Model Search in LM Studio: After updating to version 0.3.5, users report that the model search functionality in LM Studio is now limited, leading to confusion about available updates.

- Since version 0.3.3, the default search only includes downloaded models, causing users to potentially miss out on new models unless manually searched.

- Uploading Documents for LLM Context: Users inquired about uploading documents to enhance LLM contexts, receiving guidance on supported file formats like

.docx,.pdf, and.txtwith the 0.3.5 update.

- Official documentation was provided, emphasizing that document uploads can significantly improve LLM interactions.

- GPU Compatibility and Power Requirements in LM Studio: Discussions confirmed that LM Studio supports a wide range of GPUs, including the RX 5600 XT, utilizing the effective llama.cpp Vulkan API.

- For high-end builds featuring GPUs like the 3090 and CPUs like the 5800x3D, members recommended a power supply unit (PSU) with approximately 80% of its capacity as a buffer.

- Soaring GPU Prices: Members expressed frustration over the skyrocketing GPU prices, particularly for models like the Pascal series, deeming them as barely performing and resembling e-waste.

- The community agreed that current pricing trends are unsustainable, leading to overpayments for high-performance GPUs.

- PCIe Configurations Impact Performance: PCIe revisions were discussed in relation to LM Studio, with members noting that they primarily affect model loading times rather than inference speeds.

- It was clarified that using PCIe 3.0 does not hinder inference performance, making bandwidth considerations less critical for real-time operations.

Eleuther Discord

-

Type Checking in Python: Members discussed the challenges with type hinting in Python, highlighting that libraries like wandb lack sufficient type checks, complicating integration.

- A specific mention was made of unsloth in fine-tuning, with members expressing more leniency due to its newer status.

- Role-Play Project Collaboration: The Our Brood project was shared, focusing on creating an alloparenting community with AI agents and human participants running 24/7 for 72 hours.

- The project lead is seeking collaborators to set up models and expressed eagerness for further discussions with interested parties.

- Reinforcement Learning in State-Space Models: A discussion about updating the hidden state in state-space models using Reinforcement Learning suggested teaching models to predict state updates through methods resembling truncated backpropagation through time.

- One member proposed fine-tuning as a strategy to enhance learning robotic policies for models.

- Learning on Compressed Text for LLMs: Members highlighted that training large language models (LLMs) on compressed text significantly impacts performance due to challenges with non-sequential data.

- They noted that maintaining relevant information while compressing sequential relationships could facilitate more effective learning, as discussed in Training LLMs over Neurally Compressed Text.

- YAML Self-Consistency Voting: A member confirmed that the YAML file specifies self-consistency voting across all task repeats and inquired about obtaining the average fewshot CoT score without listing each repeat explicitly.

- Another member noted the complexity due to independent filter pipelines affecting the response metrics.

Nous Research AI Discord

-

Custom Quantization in Llama.cpp: A pull request for custom quantization schemes in Llama.cpp was proposed, allowing more granular control over model parameters.

- The discussion emphasized that critical layers could remain unquantized, while less important layers might be quantized to reduce model size.

- LLM Puzzle Evaluation: A river crossing puzzle was evaluated with two solutions focusing on the farmer's actions and the cabbage's fate, revealing that LLMs often misinterpret such puzzles.

- Feedback indicated that models like deepseek-r1 and o1-preview struggle with interpreting the puzzle correctly, echoing challenges faced by humans in reasoning under constraints.

- Anthropic's Model Developments: Anthropic continues advancing their models, positioning themselves for custom fine-tuning and model improvements, as referenced in the Model Context Protocol.

- There is a growing focus on enhancing model capabilities through structured approaches, as discussed in the community.

- Hermes 3 Overview: A user requested a summary on how Hermes 3 differs from other LLMs, leading to the sharing of Nous Research's Hermes 3 page.

- An LLM specialist expressed interest in Nous Research, highlighting increased engagement from experts towards emerging models like Hermes 3.

Notebook LM Discord Discord

-

Convert Notes to Source Feature: NotebookLM introduces the 'Convert notes to source' feature, allowing users to transform their notes into a single source or select notes manually, with each note separated by dividers and named by date.

- This feature enables enhanced interaction with notes using the latest chat functionalities and serves as a backup method, with an auto-update feature slated for 2025.

- Integration with Wondercraft AI: Notebook LM integrates with Wondercraft AI to customize audio presentations, enabling users to splice their own audio and manipulate spoken words.

- While this integration enhances audio customization capabilities, users have noted some limitations regarding free usage.

- Commercial Use of Podcasts: Discussions confirm that content generated via Notebook LM can be commercially published, as users retain ownership of the generated podcasts.

- Members are exploring monetization strategies such as sponsorships and affiliate marketing based on this content ownership.

- Hyper-Reading Blog Insights: A member shared a blog post on 'Hyper-Reading', detailing a modern approach to reading non-fiction books by leveraging AI to enhance learning.

- The blog outlines steps like acquiring books in textual formats and utilizing NotebookLM for improved information retention.

- Language Support in Notebook LM: Notebook LM now supports multiple languages, with users successfully operating it in Spanish and encountering issues with Italian summarizations.

- Users emphasized the need for ensuring AI-generated summaries are in the desired language to maintain overall usability.

Interconnects (Nathan Lambert) Discord

-

Optillm outperforms o1-preview with Chain-of-Code: Using the Chain-of-Code (CoC) plugin, Optillm surpassed OpenAI's o1-preview on the AIME 2024 benchmark.

- Optillm leveraged SOTA models from @AnthropicAI and @GoogleDeepMind, referencing the original CoC paper.

- Google consolidates research talent: Google is speculated to have acquired all their researchers, including high-profile figures like Noam and Yi Tay.

- If true, it highlights Google's strategy to enhance their capabilities by consolidating top talent.

- Reka acquisition rumors with Snowflake: There were rumors of Reka being acquihired by Snowflake, but the deal did not materialize.

- Nathan Lambert expressed dismay about the failed acquisition attempt.

- GPT-4 release date leaked by Microsoft exec: A Microsoft executive leaked the GPT-4 release date in Germany, raising concerns about insider information.

- This incident highlights the risks associated with insider leaks within tech organizations.

- Reasoners Problem and NATO discussions: The Reasoners Problem was discussed, highlighting its implications in AI research.

- A brief mention of NATO in the context of technology or security suggests broader tech landscape implications.

Cohere Discord

-

Command R Fine-tuning Challenges: A member reported that the fine-tuned Command R model's output stops prematurely due to reaching the max_output_token limit during generation.

- Another member suggested that the EOS token might be causing the early termination and requested dataset details for further investigation.

- Cohere API Output Inconsistencies: Users are experiencing incomplete responses from the Cohere API, whereas integrations with Claude and ChatGPT are functioning correctly.

- Despite multiple attempts with different API calls, the issues with incomplete content persist, indicating potential underlying API limitations.

- Deploying Cohere API on Vercel: A developer encountered 500 errors related to client instantiation while deploying a React application using the Cohere API on Vercel.

- They noted that the application functions correctly with a separate server.js file locally, but faced challenges configuring it to work on the Vercel platform.

- Batching and LLM as Judge Approach: A member shared their approach using batching plus LLM as a judge and sought feedback on fine-tuning consistency, highlighting hallucination issues with the command-r-plus model.

- In response, another member proposed the use of Langchain in a mass multi-agent setup to potentially address the observed challenges.

- Suggestions for Multi-Agent Setups: A member recommended exploring a massively multi-agent setup when implementing the batching approach with LLM as judge.

- They also inquired whether the 'judge' role was simply to pass or fail after analysis, seeking clarity on its functionality.

Stability.ai (Stable Diffusion) Discord

-

Beginners Seek Learning Resources: New users are struggling with image creation and are seeking beginner guides to effectively navigate the tools.

- One suggestion emphasized watching beginner guides as they provide a clearer perspective for newcomers.

- ControlNet Upscaling in A1111: A member inquired about enabling upscale in A1111 while utilizing ControlNet features like Depth.

- Another member cautioned against direct messages to avoid scammers, directing the original poster to the support channel instead.

- Buzzflix.ai for Automated Video Creation: A member shared a link to Buzzflix.ai, which automates the creation of viral faceless videos for TikTok and YouTube.

- They expressed astonishment at its potential to grow channels to millions of views, noting it feels like a cheat.

- Hugging Face Website Confusion: Members conveyed confusion regarding the Hugging Face website, particularly the lack of an 'about' section and pricing details for models.

- Concerns were raised about the site's accessibility and usability, with suggestions for better documentation and user guidance.

- Spam Friend Requests Concerns: Users reported receiving suspicious friend requests, suspecting they may be spam.

- The conversation elicited lighthearted responses, but many expressed concern over the unsolicited requests.

GPU MODE Discord

-

Grouped GEMM struggles with fp8 speedup: A member reported that they couldn't achieve a speedup with fp8 compared to fp16 in their Grouped GEMM example, necessitating adjustments to the strides.

- They emphasized setting B's strides to (1, 4096) and providing both the leading and second dimension strides for proper configuration.

- Triton and TPU compatibility: Another member inquired about the compatibility of Triton with TPUs, indicating an interest in utilizing Triton's functionalities on TPU hardware.

- The discussion points to potential future development or community insights regarding Triton's performance on TPU setups.

- CUDA simulations yield weird results without delays: A user observed that running CUDA simulations in quick succession results in weird outcomes, but introducing a one-second delay mitigates the issue.

- This behavior was noted during the inspection of random process performance.

- Torchao shines in GPTFast: The discussion centered around the potential of Torchao being integrated with GPTFast, possibly leveraging Flash Attention 3 FP8.

- Members expressed interest in this integration and its implications for efficiency.

- Understanding Data Dependency in Techniques: A member queried the meaning of data dependent techniques in the context of their necessity for fine-tuning during or after sparsification calibration.

- This sparked a discussion on the implications of such techniques on performance and accuracy.

tinygrad (George Hotz) Discord

-

Integrating Flash-Attention into tinygrad: Flash-attention was proposed for incorporation into tinygrad to enhance attention mechanism efficiency.

- A member raised the possibility of integrating flash-attention, although the discussion did not cover implementation specifics.

- Expanding Operations in nn/onnx.py: Discussions were held on adding instancenorm and groupnorm operations to nn/onnx.py, aiming to extend functionality.

- Concerns were voiced about the increasing complexity of ONNX exclusive modes and the inadequate test coverage for these additions.

- Implementing Symbolic Multidimensional Swap: Guidance was sought on performing a symbolic multidimensional element swap using the

swap(self, axis, i, j)method to manipulate views without altering the underlying array.

- The proposed notation for creating axis-specific views highlighted the need for clarity in execution strategies.

- Developing a Prototype Radix Sort Function: A working radix sort prototype was presented, efficiently handling non-negative integers with potential optimizations suggested.

- Questions were raised about extending the sort function to manage negative and floating-point values, with suggestions to incorporate scatter operations.

- Assessing Kernel Launches in Radix Sort: An inquiry was made into methods for evaluating the number of kernel launches during radix sort execution, considering debug techniques and big-O estimations.

- Debates emerged on the benefits of in-place modification versus input tensor copying prior to kernel execution for efficiency purposes.

LLM Agents (Berkeley MOOC) Discord

-

Compute Resources Deadline Today: Teams must submit the GPU/CPU Compute Resources Form by the end of today via this link to secure necessary compute resources for the hackathon.

- This deadline ensures that resource allocation is managed efficiently, allowing teams to proceed with their projects without delays.

- Lecture 11: AI Safety with Benjamin Mann: The 11th lecture features Benjamin Mann discussing Responsible Scaling Policy, AI safety governance, and Agent capability measurement, streamed live here.

- Mann will share insights from his time at OpenAI on measuring AI capabilities while maintaining system safety and control.

- Weekly Prompt Hacking Study Group: A weekly study group has been initiated to focus on prompt hacking techniques, with sessions starting in 1.5 hours and accessible via this Discord link.

- Participants will explore practical code examples from lectures to enhance their coding practices for the hackathon.

- GSM8K Test Set Cost Analysis: An analysis reveals that one inference run on the GSM8K 1k test set costs approximately $0.66 based on current GPT-4o pricing.

- Additionally, implementing self-correction methods could increase output costs proportional to the number of corrections applied.

Axolotl AI Discord

-

PDF Fine-Tuning Inquiry: A member inquired about generating an instruction dataset for fine-tuning a model using an 80-page PDF containing company regulations and internal data.

- They specifically wondered if the document's structure with titles and subtitles could aid in processing with LangChain.

- Challenges in PDF Data Extraction: Another member suggested checking how much information could be extracted from the PDF, noting that some documents—especially those with tables or diagrams—are harder to read.

- Extracting relevant data from PDFs varies significantly depending on their layout and complexity.

- RAG vs Fine-Tuning Debate: A member shared that while fine-tuning a model with PDF data is possible, using Retrieval-Augmented Generation (RAG) would likely yield better results.

- This method provides an enhanced approach for integrating external data into model performance.

LlamaIndex Discord

-

AI Tools Survey Partnership Kicks Off: A partnership with Vellum AI, FireworksAI HQ, and Weaviate IO has launched a 4-minute survey about the AI tools used by developers, with participants entering to win a MacBook Pro M4.

- The survey covers respondents' AI development journey, team structures, and technology usage, accessible here.

- RAG Applications Webinar Scheduled: Join MongoDB and LlamaIndex on December 5th at 9am Pacific for a webinar focused on transforming RAG applications from basic to agentic.

- Featuring Laurie Voss from LlamaIndex and Anaiya Raisinghani from MongoDB, the session will provide detailed insights.

- Crypto Startup Seeks Angel Investors: A member announced their cross-chain DEX startup based in SF is looking to raise a Series A round and connect with angel investors in the crypto infrastructure space.

- They encouraged interested parties to HMU, signaling readiness for investment discussions.

- Full-Stack Engineer Seeks Opportunities: An experienced Full Stack Software Engineer with over 6 years in web app development and blockchain technologies is seeking full-time or part-time roles.

- They highlighted proficiency in JavaScript frameworks, smart contracts, and various cloud services, eager to discuss potential team contributions.

Torchtune Discord

-

Custom Reference Models' Impact: A member opened an issue regarding the impact of custom reference models, suggesting it's time to add this consideration.

- They highlighted these models' potential effectiveness in the current context.

- Full-Finetune Recipe Development: A member expressed the need for a full-finetune recipe, acknowledging that none currently exist.

- They proposed modifying existing LoRA recipes to support this approach, advocating for caution due to the technique's newness.

- Pip-extra Tools Accelerate Development: Integrating pip-extra tools, pyenv, and poetry results in a faster development process with efficient bug fixes.

- However, some expressed skepticism about poetry's future design direction compared to other tools.

- Rust-like Features Appeal to Developers: The setup is similar to cargo and pubdev, catering to Rust developers.

- This similarity highlights the convergence of tools across programming languages for package and dependency management.

- uv.lock and Caching Boost Efficiency: Utilizing uv.lock and caching enhances the speed and efficiency of project management.

- These features streamline workflows, ensuring common tasks are handled more swiftly.

DSPy Discord

-

Synthetic Data Paper Sought: A member requested a paper to understand how synthetic data generation works.

- This reflects an increasing interest in the principles of synthetic data and its applications.

- Implications of Synthetic Data Generation: The request indicates a deeper exploration into data generation techniques is underway.

- Members noted the importance of understanding these techniques for future projects.

LAION Discord

-

Collaborating with Foundation Model Developers: A member is seeking foundation model developers for collaboration opportunities, offering over 80 million tagged images available for potential projects.

- They also highlighted providing thousands of niche photography options on demand, presenting a valuable resource for developers in the foundation model space.

- Niche Photography Services On Demand: A member is offering thousands of niche photography options on demand, indicating a resource for model training and development.

- This service presents a unique opportunity for developers within the foundation model domain to enhance their projects.

Mozilla AI Discord

-

Lumigator Tech Talk Enhances LLM Selection: Join engineers for an in-depth tech talk on Lumigator, a powerful open-source tool designed to help developers choose the best LLMs for their projects, with a roadmap towards General Availability in early 2025.

- The session will showcase Lumigator's features, demonstrate real-world usage scenarios, and outline the planned roadmap targeting early 2025 for broader availability.

- Lumigator Advances Ethical AI Development: Lumigator aims to evolve into a comprehensive open-source product that supports ethical and transparent AI development, addressing gaps in the current tooling landscape.

- The initiative focuses on creating trust in development tools, ensuring that solutions align with developers' values.

AI21 Labs (Jamba) Discord

-

Confusion Over API Key Generation: A member expressed frustration regarding API key generating issues on the site, questioning if they were making a mistake or if the issue was external.

- They sought clarification on the reliability of the API key generation process from community members.

- Request for Assistance on API Key Issues: The member prompted others for insights into potential problems with the site's API key generation functionality.

- Some participants voiced their experiences, suggesting that the issue might be temporary or linked to specific configurations.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The OpenInterpreter Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!

```