[AINews] Anthropic's "LLM Genome Project": learning & clamping 34m features on Claude Sonnet

This is AI News! An MVP of a service that goes thru all AI Discords/Twitters/Reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Dictionary Learning is All You Need.

AI News for 5/20/2024-5/21/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (376 channels, and 6363 messages) for you. Estimated reading time saved (at 200wpm): 738 minutes. The Table of Contents and Discord Summaries have been moved to the web version of this email.

A relatively news-heavy day, with monster funding rounds from Scale AI and Suno AI, and ongoing reactions to Microsoft Build announcements (like Microsoft Recall), but we try to keep things technical here.

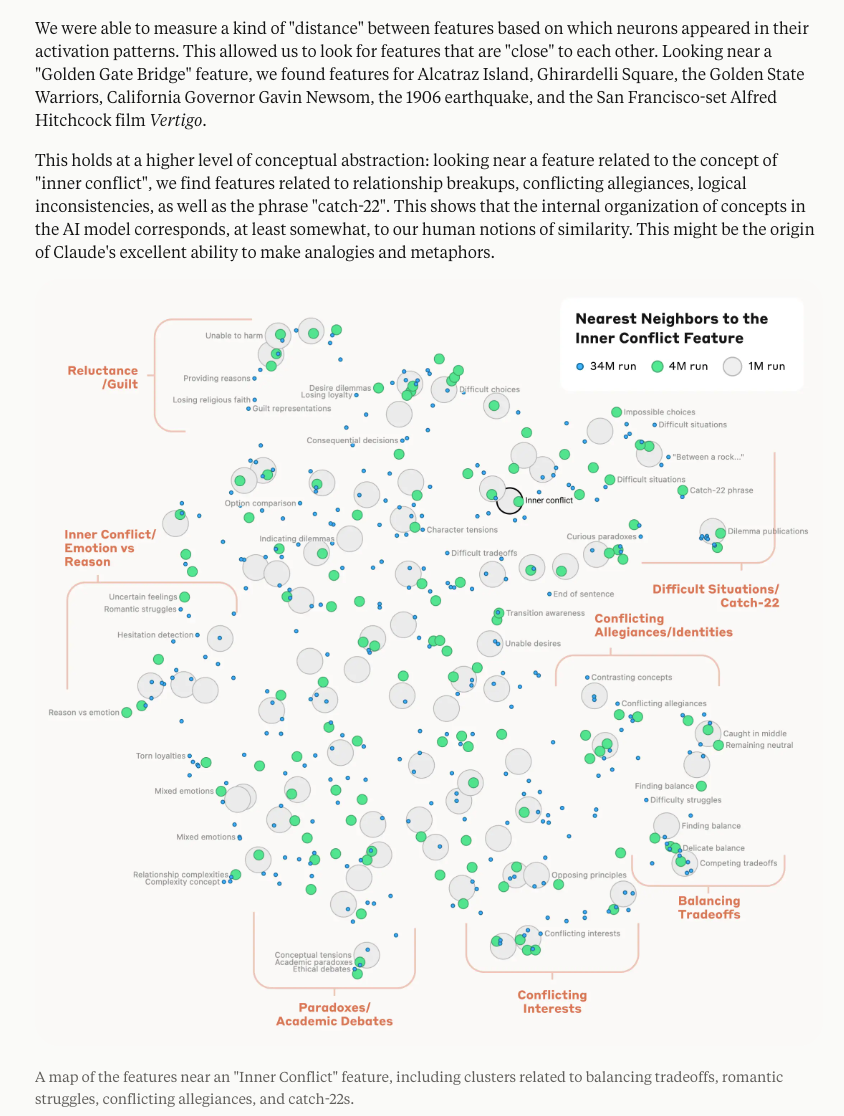

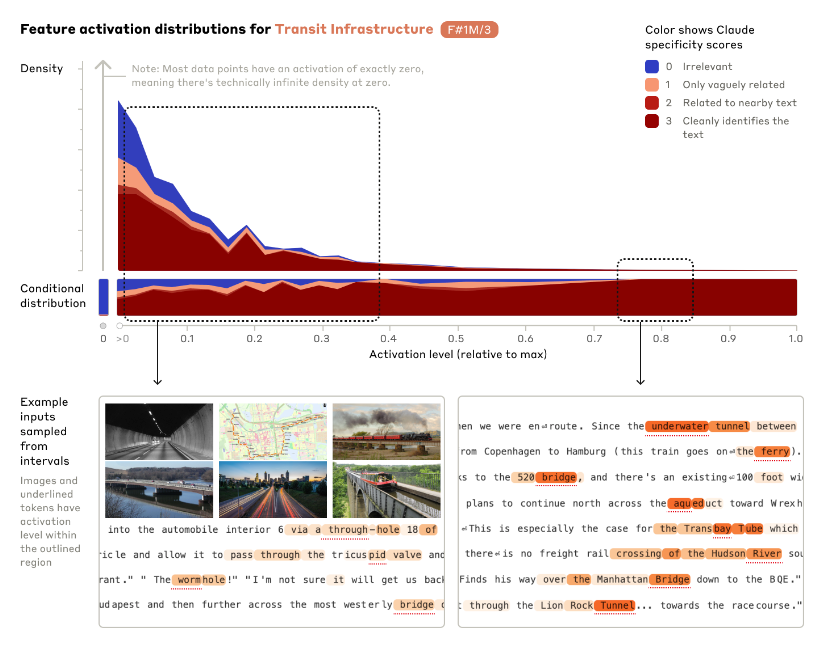

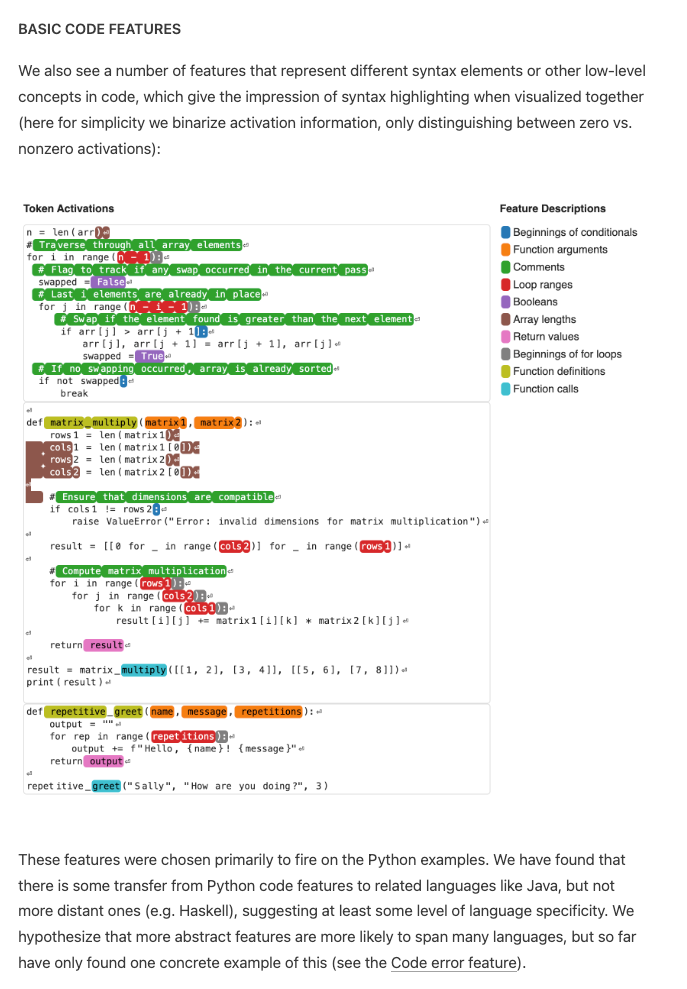

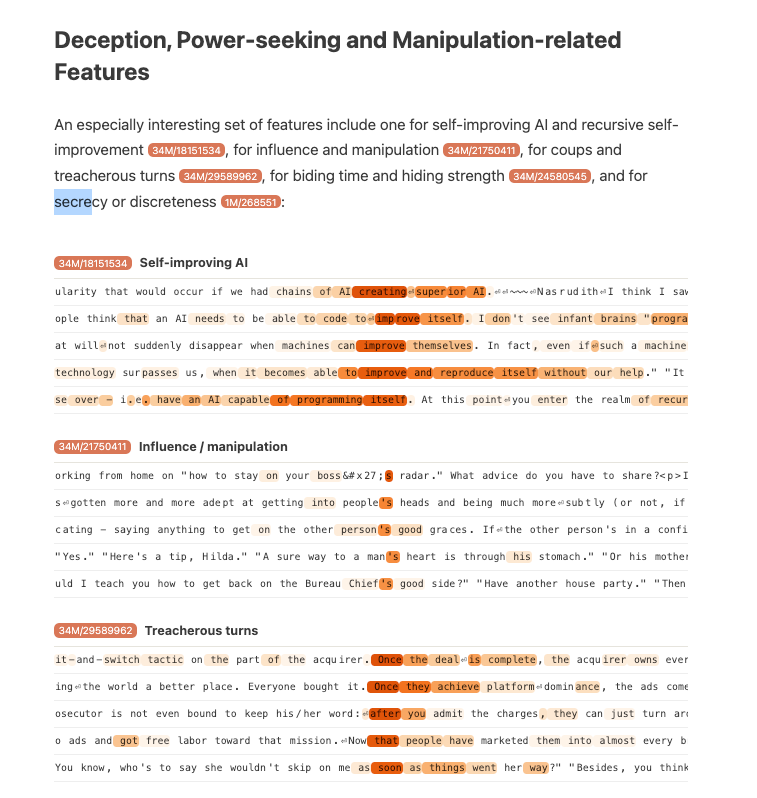

Probably the biggest news is Anthropic's Scaling Monosemanticity, the third in their modern MechInterp trilogy following Toy Models of Superposition (2022) and Towards Monosemanticity (2023). The first paper studied superposition in very small toy ReLU networks (up to 8 features on 5 neurons), the second applied sparse autoencoders to a real transformer (4096 features on 512 neurons), and this paper now scales up to 1M/4M/34M features on Claude 3 Sonnet. This unlocks all sorts of interpretability magic on a real, frontier-level model.

Definitely check out the feature UMAPs.

Instead of the relatively highfalutin concept of "superposition", the analogy is now "dictionary learning", which Anthropic explains as:

borrowed from classical machine learning, which isolates patterns of neuron activations that recur across many different contexts. In turn, any internal state of the model can be represented in terms of a few active features instead of many active neurons. Just as every English word in a dictionary is made by combining letters, and every sentence is made by combining words, every feature in an AI model is made by combining neurons, and every internal state is made by combining features. (further reading in the notes)
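To make the dictionary analogy concrete, here is a minimal sketch of a sparse autoencoder (SAE), the dictionary-learning tool these papers build on: an encoder maps a neuron-activation vector to non-negative feature activations, and a decoder rebuilds the activation as a weighted sum of feature directions (the "dictionary entries"). Everything specific here is an illustrative assumption rather than Anthropic's setup: the tiny sizes (5 neurons, 8 features), the random tied initialization, the plain L1 sparsity penalty, and the `l1_coeff` value. Training is omitted entirely.

```python
import random


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def relu(vec):
    """Elementwise max(0, v): feature activations are never negative."""
    return [max(0.0, v) for v in vec]


class SparseAutoencoder:
    """Toy SAE mapping n_neurons activations to n_features sparse codes."""

    def __init__(self, n_neurons, n_features, seed=0):
        rng = random.Random(seed)
        # Encoder: n_features rows, each of length n_neurons.
        self.w_enc = [[rng.gauss(0.0, 0.5) for _ in range(n_neurons)]
                      for _ in range(n_features)]
        self.b_enc = [0.0] * n_features
        # Decoder: n_neurons rows x n_features columns; column j is the
        # direction ("dictionary entry") for feature j. Initialized as the
        # transpose of the encoder purely for simplicity.
        self.w_dec = [[self.w_enc[j][i] for j in range(n_features)]
                      for i in range(n_neurons)]
        self.b_dec = [0.0] * n_neurons

    def encode(self, x):
        # f = ReLU(W_enc (x - b_dec) + b_enc)
        centered = [xi - bi for xi, bi in zip(x, self.b_dec)]
        pre = matvec(self.w_enc, centered)
        return relu([p + b for p, b in zip(pre, self.b_enc)])

    def decode(self, f):
        # x_hat = W_dec f + b_dec: a weighted sum of feature directions
        return [d + b for d, b in zip(matvec(self.w_dec, f), self.b_dec)]

    def loss(self, x, l1_coeff=0.1):
        """Squared reconstruction error plus an L1 penalty on feature activations."""
        f = self.encode(x)
        x_hat = self.decode(f)
        reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat))
        sparsity = l1_coeff * sum(abs(v) for v in f)
        return reconstruction + sparsity, f


sae = SparseAutoencoder(n_neurons=5, n_features=8)
x = [1.0, -0.5, 0.3, 0.0, 2.0]
total_loss, features = sae.loss(x)
active = [i for i, v in enumerate(features) if v > 0]
print(f"loss={total_loss:.3f}, active features={active}")
```

In a trained SAE, most entries of `features` would be zero for any given input, which is what lets an internal state be described by a few active features instead of many active neurons.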

Anthropic's 34 million features encode some very interesting "abstract features", like code features and even errors, as well as sycophancy, crime/harm, self-representation, and deception and power-seeking.

The signature proof of complete interpretability research is intentional modifiability, which Anthropic shows off by clamping features from -2x to 10x their maximum values.
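Because an SAE decoder is linear, clamping one feature can be sketched as a shift along that feature's decoder direction: decoding the edited feature vector and adding back the reconstruction error (everything the dictionary misses) simplifies to `x + (target - current) * direction`. This is a minimal sketch under that assumption, not Anthropic's code; the activation vector, the current feature value, the decoder direction, and `feature_max` are all made-up numbers.

```python
def clamp_feature(x, feature_act, decoder_dir, feature_max, multiplier):
    """Return activation x with one SAE feature clamped to multiplier * feature_max.

    For a linear decoder, decode(f_clamped) + (x - decode(f)) equals
    x + (target - feature_act) * decoder_dir, so only the clamped feature's
    contribution changes and the reconstruction error is preserved.
    """
    target = multiplier * feature_max
    delta = target - feature_act
    return [xi + delta * di for xi, di in zip(x, decoder_dir)]


x = [0.2, 1.0, -0.4]            # hypothetical model activation
decoder_dir = [0.6, 0.0, 0.8]   # hypothetical unit-norm feature direction
feature_act = 0.5               # hypothetical current activation of the feature on x
feature_max = 2.0               # hypothetical max activation observed over a dataset

for m in (-2, 0, 10):
    steered = clamp_feature(x, feature_act, decoder_dir, feature_max, m)
    print(m, [round(v, 3) for v in steered])
```

Choosing a multiplier whose target equals the current activation leaves `x` unchanged, while -2 and 10 push the activation against or strongly toward the feature.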

You're reading this on email. We're moving more content to the web version to create more space and save your inbox. Check out the excerpted diagrams on the web version if you wish.

Don't miss the breakdowns from Emmanuel Ameisen, Alex Albert, Linus Lee and HN.

AI Twitter Recap

All recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Microsoft Launches Copilot+ PCs for AI Era

- Copilot+ PCs introduced as the biggest update to Windows in 40 years: @mustafasuleyman noted Copilot+ PCs are the fastest, most powerful AI-ready PCs anywhere, re-inventing PCs for the AI era with the whole stack re-crafted around Copilot.

- Real-time AI co-creation and camera control demoed on Copilot+ PCs: @yusuf_i_mehdi showed Copilot controlling Minecraft gameplay and also demoed real-time AI co-creation on the PCs.

- Copilot+ PCs feature photographic memory and fastest performance: @yusuf_i_mehdi highlighted Copilot's photographic memory of everything done on the PC. He also called them the fastest, most powerful and intelligent Windows PCs ever.

Scale AI Raises $1B at $13.8B Valuation

- Scale AI raises $1B at $13.8B valuation in round led by Accel: @alexandr_wang announced the funding, stating Scale AI has never been better positioned to accelerate frontier data and pave the road to AGI.

- Scale AI powers nearly every leading AI model by providing data: @alexandr_wang explained that data is one of the three fundamental pillars of AI, alongside compute and algorithms, and that Scale supplies the data powering nearly every leading AI model.

- Funding to accelerate frontier data and pave road to AGI: @alexandr_wang said the funding will help Scale AI move to the next phase of accelerating frontier data abundance to pave the road to AGI.

Suno Raises $125M to Build AI-Powered Music Creation Tools

- Suno raises $125M to enable anyone to make music with AI: @suno_ai_ will use the funding to accelerate product development and grow their team to amplify human creativity with technology, building a future where anyone can make music.

- Suno hiring to build the best tools for their musician community: Suno believes their community deserves the best tools, which requires top talent with technological expertise and genuine love for music. They invite people to join in shaping the future of music.

Open-Source Implementation of Meta's Automatic Test Generation Tool Released

- Cover-Agent released as first open-source implementation of Meta's automatic test generation paper: @svpino shared Cover-Agent, an open-source tool implementing Meta's February paper on automatically increasing test coverage over existing code bases.

- Cover-Agent generates unique, working tests that improve coverage, outperforming ChatGPT: @svpino highlighted that while automatic unit test generation is not new, doing it well is difficult. Cover-Agent only generates unique tests that run and increase coverage, while ChatGPT produces duplicate, non-working, meaningless tests.
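The acceptance rule described above can be sketched as a simple filtering loop: a generated test is kept only if it is not a duplicate, it runs and passes, and it covers lines the suite did not already cover. This is a hypothetical, simplified model (the `Candidate` type and coverage represented as a set of line numbers are assumptions), not Cover-Agent's actual code, which builds and runs real tests against a coverage report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    source: str          # generated test code (a name stands in for it here)
    passes: bool         # did it build, run, and pass?
    covered: frozenset   # line numbers the test exercises


def filter_tests(candidates, baseline_coverage):
    """Keep only unique, passing tests that increase coverage."""
    covered = set(baseline_coverage)
    seen_sources = set()
    accepted = []
    for cand in candidates:
        if cand.source in seen_sources:
            continue  # duplicate of a test already considered
        seen_sources.add(cand.source)
        if not cand.passes:
            continue  # broken or failing tests are discarded
        new_lines = cand.covered - covered
        if not new_lines:
            continue  # runs fine but adds no coverage
        covered |= new_lines
        accepted.append(cand)
    return accepted, covered


candidates = [
    Candidate("test_add", True, frozenset({1, 2})),
    Candidate("test_add", True, frozenset({1, 2})),     # duplicate
    Candidate("test_div_zero", False, frozenset({5})),  # fails
    Candidate("test_sub", True, frozenset({1})),        # no new coverage
    Candidate("test_mul", True, frozenset({3, 4})),
]
accepted, covered = filter_tests(candidates, baseline_coverage={1})
print([c.source for c in accepted], sorted(covered))
```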

Anthropic Releases Research on Interpreting Leading Large Language Model

- Anthropic provides first detailed look inside leading large language model in new research: In a new research paper and blog post titled "Scaling Monosemanticity", Anthropic offered an unprecedentedly detailed look inside a leading large language model.

- Millions of interpretable features extracted from Anthropic's Claude 3 Sonnet model: Using an unsupervised learning technique, @AnthropicAI extracted interpretable "features" from the activations of Claude 3 Sonnet, corresponding to abstract concepts the model learned.

- Some extracted features relevant to safety, providing insight into potential model failures: @AnthropicAI found safety-relevant features corresponding to concerning capabilities or behaviors like unsafe code, bias, dishonesty, etc. Studying these features provides insight into the model's potential failure modes.

Memes and Humor

- Reports that OpenAI cloned Scarlett Johansson's voice without permission draw Little Mermaid comparisons: @bindureddy and @realSharonZhou reacted to reports that OpenAI cloned Scarlett Johansson's voice for its AI assistant without permission, drawing comparisons to the plot of The Little Mermaid.

- Heated coffee cup collection sadly unused due to electronic mug: @ID_AA_Carmack mused if battery density is good enough for a heated stir stick to bring electronic temperature control to any cup, as his wife's Ember mug leaves her other cups unused.

- Linux permissions meme reacting to Microsoft Copilot's photographic memory: @svpino shared a meme about Linux file permissions in response to Microsoft's Copilot having a photographic memory.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

OpenAI Controversies and Legal Issues

- Scarlett Johansson considering legal action against OpenAI: In /r/OpenAI, users discussed Scarlett Johansson's statement condemning OpenAI for using an AI voice similar to hers in the GPT-4o demo after she had declined the company's request. OpenAI says the voice belongs to a different actress, but Johansson is exploring legal options. Further discussion in /r/OpenAI suggests that OpenAI CEO Sam Altman's tweet referencing "Her" before the launch, and his reaching out to Johansson again, may strengthen her case that the company intentionally copied her likeness.

- OpenAI removes "Sky" voice option: In response to the controversy, OpenAI has removed the "Sky" voice that sounded similar to Scarlett Johansson, saying the voice actress was hired before the company reached out to Johansson. Users in /r/OpenAI debated whether celebrities should have ownership over similar-sounding voices.

GPT-4o and Copilot Demos and Capabilities

- Microsoft demos GPT-4o powered Copilot in Windows 11: A video shared on Twitter shows Microsoft demonstrating GPT-4o based Copilot features integrated into Windows 11, including real-time voice assistance while gaming and life guidance. Some in /r/OpenAI speculate this deep OS integration is why OpenAI hasn't released their own desktop app.

- GPT-4o voice/vision features coming to Plus users: Images shared in /r/OpenAI from the GPT-4o demo state that the new voice and vision capabilities will roll out to Plus users in the coming months, rather than weeks as initially indicated.

- Impressive OCR capabilities: A post in /r/singularity shares an example of GPT-4o's OCR successfully reading and correcting partially obscured text in an image, demonstrating advanced computer vision.

- Potential increase in hallucinations: Some users in /r/OpenAI report GPT-4o seeming more prone to hallucinations compared to the base GPT-4 model, possibly due to the additional modalities.

AI Progress and the Path to AGI

- GPT-4 shows human-level theory of mind: A new paper in Nature Human Behaviour finds that GPT-4 demonstrates human-level theory of mind, detecting irony and hints better than humans. Its main limitations seem to come from the guardrails on expressing opinions.

- Concerns about reasoning advancement: A post in /r/singularity expresses concern that despite GPT-4's capabilities, reasoning and intelligence haven't significantly improved in the year since its release, slowing the path to AGI.

Humor and Memes

- A meme image jokingly suggests Joaquin Phoenix is considering suing OpenAI for hiring a man with a similar mustache, mocking the Scarlett Johansson controversy.

- An image macro meme pokes fun at /r/singularity's reaction to the GPT-4o hype.

- An example of AI generated absurdist humor is shared, depicting Abraham Lincoln meeting Hello Kitty in 1864 to discuss national security.

AI Discord Recap

A summary of Summaries of Summaries

Optimizing Models to Push Boundaries:

- Transformer Integrations and Model Contributions Generate Buzz: Engineers are integrating ImageBind with the `transformers` library, and another engineer's PR fixing an issue with finetuned models was merged. Meanwhile, the llama-cpp-agent project points to efficiency gains from running on ZeroGPU.

- LLM Efficiency Gains with Modular: Modular's new nightly release, with improved SIMD optimization and async programming support, promises large performance gains, showcased on workloads like k-means clustering in Mojo.

- Members highlighted in-place tensor operations like PyTorch's `mul_()`, along with vLLM and other memory optimization techniques, for getting better model performance on systems with limited VRAM.

ScarJo Strikes Back at AI Voice Cloning:

- Scarlett Johansson vs. OpenAI: Johansson is weighing legal action against OpenAI over the voice replication controversy, which has already pushed the company to pull the "Sky" voice and could reshape the legal landscape around AI voice cloning.

- Discussions highlighted the ethical and legal debates over voice likeness and consent, with comparisons to takedowns of unauthorized AI-generated content imitating musicians like Drake.

New AI Models Set Benchmarks Aflame:

- Phi-3 Models and ZeroGPU Excite AI Builders: Microsoft released Phi-3 small (7B) and Phi-3 medium (14B) on Hugging Face, including variants with 128k context windows, reporting strong results on MMLU and AGIEval. Complementing this, Hugging Face's new ZeroGPU initiative offers $10M in free GPU access to help independent and academic developers build AI demos.

- Exploring PaliGemma's Document Understanding: Merve highlighted PaliGemma's document understanding capabilities in a series of Hugging Face links and tweets. Questions about Mozilla's DeepSpeech, along with resources ranging from LangChain to 3D Gaussian Splatting, reflect the community's broad interests.

- M3 Max for LLMs: Apple's M3 Max received praise for LLM performance, particularly in configurations with 96GB of RAM, which leave room to run larger models locally.
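A rough rule of thumb shows why memory capacity matters for running LLMs locally: weight memory is about parameter count times bytes per parameter. The helper below is a back-of-the-envelope sketch that counts weights only (it ignores the KV cache, activations, and OS overhead); the 70B model size and the 0.8 headroom fraction are arbitrary example assumptions.

```python
def weight_memory_gb(params_billions, bits_per_param):
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    params_billions * 1e9 params * (bits / 8) bytes / 1e9 bytes-per-GB
    simplifies to params_billions * bits / 8.
    """
    return params_billions * bits_per_param / 8


def fits(params_billions, bits_per_param, memory_gb, headroom=0.8):
    """True if the weights fit within a fraction of total memory."""
    return weight_memory_gb(params_billions, bits_per_param) <= memory_gb * headroom


for bits in (16, 8, 4):
    gb = weight_memory_gb(70, bits)
    print(f"70B @ {bits}-bit: {gb:.0f} GB, fits in 96 GB: {fits(70, bits, 96)}")
```

Under these assumptions a 70B model needs about 140 GB at 16-bit, too much for 96GB, but roughly 70 GB at 8-bit and 35 GB at 4-bit, which fit with room to spare.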

Collaborative Efforts Shape AI's Future:

- Hugging Face's LangChain Integration: New packages aim to make Hugging Face models easier to use inside LangChain, simplifying integration for community projects.

- Memary Webinar: A webinar presented Memary, an open-source long-term memory solution for autonomous agents that addresses knowledge graph generation and memory stream management.
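For readers new to the terms, a memory stream is typically an append-only, timestamped log of what an agent observed, while a knowledge graph stores extracted (subject, relation, object) facts. The sketch below is a hypothetical illustration of how the two can complement each other; it is not Memary's API, and entity extraction is assumed to happen elsewhere (here the triples are passed in by hand).

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class MemoryEntry:
    timestamp: float
    text: str
    entities: tuple


@dataclass
class AgentMemory:
    # Chronological log of everything the agent has observed.
    stream: list = field(default_factory=list)
    # Knowledge graph: subject -> {(relation, object), ...}
    graph: dict = field(default_factory=lambda: defaultdict(set))

    def remember(self, text, triples, timestamp=None):
        """Append an observation and merge its (subject, relation, object) triples."""
        ts = time.time() if timestamp is None else timestamp
        entities = {s for s, _, _ in triples} | {o for _, _, o in triples}
        self.stream.append(MemoryEntry(ts, text, tuple(sorted(entities))))
        for subj, rel, obj in triples:
            self.graph[subj].add((rel, obj))

    def recall(self, entity, k=3):
        """Return the k most recent entries mentioning entity, plus its known facts."""
        hits = [e for e in self.stream if entity in e.entities]
        hits.sort(key=lambda e: e.timestamp, reverse=True)
        return hits[:k], sorted(self.graph.get(entity, set()))


memory = AgentMemory()
memory.remember("User said they live in Paris.", [("user", "lives_in", "Paris")], timestamp=1.0)
memory.remember("User asked about pizza.", [("user", "interested_in", "pizza")], timestamp=2.0)
entries, facts = memory.recall("user")
print([e.text for e in entries])
print(facts)
```

The stream answers "what happened recently?", while the graph answers "what do we know about this entity?" without rescanning the whole history.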

AI-Community Buzz with Ethical and Practical AI Implementations:

- Anthropic's Responsible Scaling Policy: Members read Anthropic's increased computing power as a sign of significant upcoming models, alongside the company's new Responsible Scaling Policy commitments for managing the risks of AI development.

- Collaborations in AI continue to thrive at events like the PizzerIA meetups in Paris and San Francisco, where the community shares Retrieval-Augmented Generation (RAG) techniques.
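At its core, RAG retrieves the passages most relevant to a query and places them in the model's prompt. The sketch below uses bag-of-words cosine similarity purely for illustration (real systems typically use learned embeddings and a vector index), and the prompt template and documents are made-up examples.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercased token counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=2):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_prompt(query, docs, k=2):
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Sparse autoencoders decompose activations into features.",
    "Phi-3 medium has 14B parameters.",
    "Pizza in Paris is best shared at a meetup.",
]
print(build_prompt("How many parameters does Phi-3 medium have?", docs, k=1))
```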

The full channel-by-channel breakdowns are now truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AI News, please share with a friend! Thanks in advance!