[AINews] AIPhone 16: the Visual Intelligence Phone

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Apple Intelligence is maybe all you need.

AI News for 9/6/2024-9/9/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (215 channels, and 7493 messages) for you. Estimated reading time saved (at 200wpm): 774 minutes. You can now tag @smol_ai for AINews discussions!

At the special Apple Event today, the new iPhone 16 lineup was announced, together with 5 minutes spent covering some updates on Apple Intelligence (we'll assume you are up to speed on our WWDC and Beta release coverage).

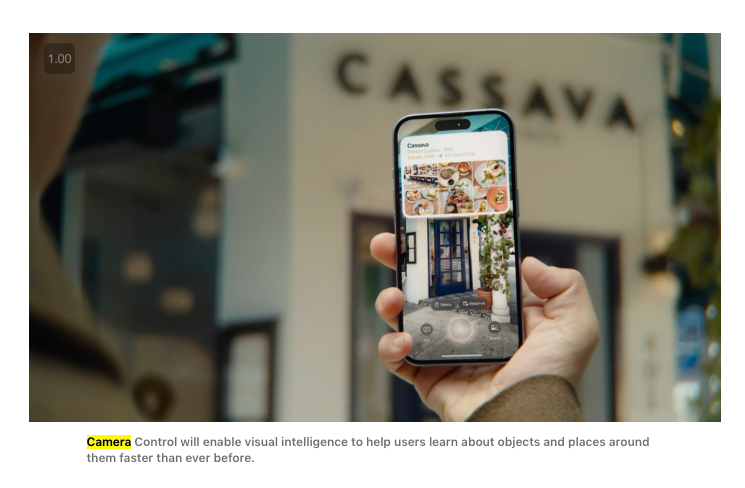

The newest update is what they now call Visual Intelligence, rolling out with the new dedicated Camera Control button for iPhone 16:

As discussed on the Winds of AI Winter pod and now confirmed, Apple is commoditizing OpenAI and putting its own services first:

Presumably one will eventually be able to configure what the Ask and Search buttons call in the new UI, but every Visual Intelligence request will run through Apple Maps and Siri first and those services second. Apple wins here by running first, being default, and being private/free, which is surprisingly a more defensible position than being "best".

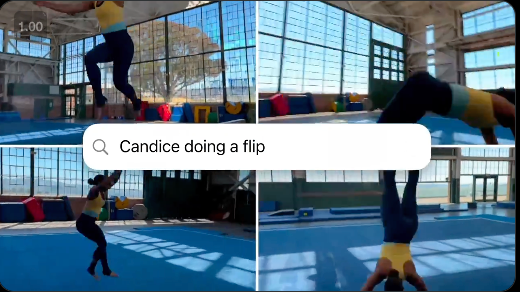

Apple Photos now also have very good video understanding, down to the timestamps in a video:

Craig Federighi called this a part of Apple Intelligence in his segment, but some of these features are already in the iOS 18.0 beta (Apple Intelligence only shipped in iOS 18.1).

You can read the Hacker News commentary for other highlights and cynical takes but that's the big must-know thing from today.

How many years until Apple Visual Intelligence is just... always on?

A Note on Reflection 70B: our coverage last week (and tweet op-ed) covered known criticisms on Friday, but more emerged over the weekend to challenge their claims. We expect more developments over the course of this week, therefore it is premature to make it another title story, but interested readers should scroll to the /r/localLlama section below for a full accounting.

Perhaps we should work on more ungameable LLM evals? Good thing this month's inference is supported by our friends at W&B...

Sponsored by Weights & Biases: If you’re a builder in the Bay Area Sep 21/22, Weights & Biases invites you to hack with them on pushing the state of LLM-evaluators forward. Build better LLM Judges at the W&B Judgement Day hack - $5k in prizes, API access and food provided.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Benchmarks

- Reflection-70B Claims: @JJitsev reported that Reflection-70B claims to be the "world's top open source model" based on common benchmarks. However, preliminary tests using the AIW problem show the model is close to Llama 3 70B and slightly worse than Qwen 2 72B, not reaching top-tier performance as claimed.

- LLM Planning Capabilities: @ylecun noted that LLMs still struggle with planning. Llama-3.1-405b and Claude show some planning ability on Blocksworld, while GPT4 and Gemini perform poorly. Performance is described as "abysmal" for all models on Mystery Blocksworld.

- PLANSEARCH Algorithm: @rohanpaul_ai highlighted a new search algorithm called PLANSEARCH for code generation. It generates diverse observations, constructs plans in natural language, and translates promising plans into code. Claude 3.5 achieved a pass@200 of 77.0% on LiveCodeBench using this method, outperforming the no-search baseline.

AI Tools and Applications

- RAG Pipeline Development: @dzhng reported coding a RAG pipeline in under an hour using Cursor AI composer, optimized with Hyde and Cohere reranker, without writing a single line of code. The entire process was done through voice dictation.

- Google AI's Illuminate: @rohanpaul_ai mentioned Google AI's release of Illuminate, a tool that converts research papers to short podcasts. Users may experience a waiting period of a few days.

- Claude vs Google: @svpino shared an experience where Claude provided step-by-step instructions for a problem in 5 minutes, after spending hours trying to solve it using Google.

AI Research and Developments

- AlphaProteo: @adcock_brett reported on Google DeepMind's unveiling of AlphaProteo, an AI system designed to create custom proteins for binding with specific molecular targets, potentially accelerating drug discovery and cancer research.

- AI-Driven Research Assistant: @LangChainAI shared an advanced AI-powered research assistant system using multiple specialized agents for tasks like data analysis, visualization, and report generation. It's open-source and uses LangGraph.

- Top ML Papers: @dair_ai listed the top ML papers of the week, including OLMoE, LongCite, AlphaProteo, Role of RAG Noise in LLMs, Strategic Chain-of-Thought, and RAG in the Era of Long-Context LLMs.

AI Ethics and Societal Impact

- Immigration Concerns: @fchollet expressed concerns about potential immigration enforcement actions, suggesting that legal documents may not provide protection in certain scenarios.

- AI's Broader Impact: @bindureddy emphasized that AI is more than hype or a business cycle, stating that we are creating new beings more capable than humans and that AI is "way bigger than money."

Hardware and Infrastructure

- Framework 13 Computer: @svpino mentioned purchasing a Framework 13 computer (Batch 3) for use with Ubuntu, moving away from Mac after 14 years.

- Llama 3 Performance: @vipulved reported that Llama 3 405B crossed the 100 TPS barrier on Together APIs with a new inference engine release, achieving 106.9 TPS on NVIDIA H100 GPUs.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Reflection 70B Controversy: Potential API Fraud and Community Backlash

- CONFIRMED: REFLECTION 70B'S OFFICIAL API IS SONNET 3.5 (Score: 278, Comments: 168): Reflection 70B's official API has been confirmed to be Sonnet 3.5. This information aligns with previous speculations and provides clarity on the technical infrastructure supporting this large language model. The confirmation of Sonnet 3.5 as the API suggests specific capabilities and integration methods for developers working with Reflection 70B.

- OpenRouter Reflection 70B claims to be Claude, Created by Anthropic (try it yourself) (Score: 68, Comments: 29): OpenRouter's Reflection 70B model, available through their API, claims to be Claude and states it was created by Anthropic. This assertion raises questions about the model's true identity and origin, as it's unlikely that Anthropic would release Claude through a third-party API without announcement. Users are encouraged to test the model themselves to verify these claims and assess its capabilities.

- Reflection 70B (Free) is broken now (Score: 86, Comments: 25): The Reflection 70B free API is currently non-functional, possibly due to exhaustion of Claude credits. Users attempting to access the service are encountering errors, suggesting that the underlying AI model may no longer be available or accessible through the free tier.

- Reflection 70B API outage is attributed to exhausted Claude credits, with users speculating on the end game of the developer. A VentureBeat article hyped GlaiveAI as a threat to OpenAI and Anthropic, but major publications have yet to cover the fallout.

- OpenRouter replaced the API version with an open weights version, still named Reflection 70B (Free). Users questioned OpenRouter's verification process, with the company defending its quick model deployment without extensive review.

- Some users suggest this incident mirrors a previous Glaive-instruct 3b controversy, indicating a pattern of hyping models for funding. Others speculate on potential distractions or ulterior motives behind the reputation-damaging event.

Theme 2. Community Lessons from Reflection 70B Incident: Trust and Verification in AI

- Well. here it goes. Supposedly the new weights of you know what. (Score: 67, Comments: 77): The post suggests the release of new weights for Reflection 70B, a large language model. However, the community appears to remain highly skeptical about the authenticity or significance of this release, as implied by the cautious and uncertain tone of the post title.

- Reflection 70B lessons learned (Score: 114, Comments: 51): The post emphasizes the critical importance of model verification and benchmark skepticism in AI research. It advises that all benchmarks should start by identifying the specific model being used (e.g., LLAMA, GPT-4, Sonnet) through careful examination, and warns against trusting benchmarks or API claims without personal replication and verification.

- Users emphasized the importance of verifying models through platforms like Lmarena and livebench, warning against trusting unsubstantiated claims from unknown sources. The community expressed a need to recognize bias towards believing groundbreaking improvements.

- There's growing evidence that Matt Shumer may have been dishonest about his AI model claims. Some speculate this could be due to mental health issues, given the short timeframe from project conception to revealed fraud.

- Commenters stressed the importance of developing personal benchmarks based on practical use cases to avoid falling for hype. They also noted that the incident highlights the expectation for open-weight models to soon match or surpass proprietary options.

- Extraordinary claims require extraordinary evidence, something Reflection 70B clearly lacks (Score: 177, Comments: 31): The post title "Extraordinary claims require extraordinary evidence, something Reflection 70B clearly lacks" suggests skepticism about claims made regarding the Reflection 70B model. However, the post body only contains the incomplete phrase "Extraordinary c", providing insufficient context for a meaningful summary of the author's intended argument or critique.

- Reflection 70B's performance is significantly worse when benchmarked using the latest HuggingFace release compared to the private API. Users speculate the private API was actually Claude, leading to skepticism about the model's claimed capabilities.

- Questions arise about Matt Shumer's endgame, as he would eventually need to deliver a working model. Some suggest he didn't anticipate the visibility his claims would receive, while others compare the situation to LK99 and Elon Musk's FSD promises.

- Users criticize Shumer's lack of technical knowledge, noting he asked about LORA on social media. The incident is seen as potentially damaging to his credibility, with some labeling it a scam.

Theme 3. Memes and Humor Surrounding Reflection 70B Controversy

- Who are you? (Score: 363, Comments: 34): The post presents a meme depicting Reflection 70B's inconsistent responses to the question "Who are you?". The image shows multiple conflicting identity claims made by the AI model, including being an AI language model, a human, and even Jesus Christ. This meme highlights the issue of AI models' inconsistent self-awareness and their tendency to generate contradictory statements about their own identity.

- The Reflection 70B controversy sparked numerous memes and discussions, with users noting the model's responses changing from Claude to OpenAI to Llama 70B as suspicions grew about its authenticity.

- A user suggested that the developer behind Reflection is using commercial SOTA models to gather data for retraining, aiming to eventually deliver a model that partially fulfills the claims. Others speculated about the developer's true intentions.

- A detailed explanation of the controversy was provided, describing how the model initially impressed users but failed to perform as expected upon release. Investigations revealed that requests were being forwarded to popular models like Claude Sonnet, leading to accusations of deception.

- TL;DR (Score: 249, Comments: 12): The post consists solely of a meme image summarizing the recent Reflection 70B situation. The meme uses a popular format to humorously contrast the expectations versus reality of the model's release, suggesting that the actual performance or impact of Reflection 70B may have fallen short of initial hype or anticipation.

- The Twitter AI community was criticized for overhyping Reflection 70B, with mentions that it was actually tested on Reddit. Users pointed out similar behavior in subreddits like /r/OpenAI and /r/Singularity.

- Some users expressed confusion or criticism about the meme and its creator, while others defended the release, noting that it provides free access to a model comparable to Claude Sonnet 3.5.

- A user suggested that the hype around Reflection 70B might be due to OpenAI's pivot to B2B SaaS, indicating a desire for new developments in the open-source AI community.

- POV : The anthropic employee under NDA that see all the API requests from a guy called « matt.schumer.freeaccounttrial27 » (Score: 442, Comments: 17): An Anthropic employee, bound by an NDA, observes API requests from a suspicious account named "matt.schumer.freeaccounttrial27". The username suggests potential attempts to circumvent free trial limitations or engage in unauthorized access, raising concerns about account abuse and security implications for Anthropic's API services.

- Users joked about the potential consequences of API abuse, with one comment suggesting a progression from "Matt from the IT department" to "Matt from his guantanamo cell" as the scamming strategy escalates.

- The thread took a humorous turn with comments about Anthropic employing cats, including playful responses like "Meow 🐱" and "As a cat, I can confirm this."

- Some users critiqued the post itself, with one suggesting a "class action lawsuit for wasting our time" and another pointing out the misuse of the term "POV" (Point of View) in the original post.

Theme 4. Advancements in Open-Source AI Models and Tools

- gemma-2-9b-it-WPO-HB surpassed gemma-2-9b-it-simpo on AlpacaEval 2.0 Leaderboard (Score: 30, Comments: 5): The gemma-2-9b-it-WPO-HB model has outperformed gemma-2-9b-it-simpo on the AlpacaEval 2.0 Leaderboard, achieving a score of 80.31 compared to the latter's 79.99. This improvement demonstrates the effectiveness of the WPO-HB (Weighted Prompt Optimization with Human Baseline) technique in enhancing model performance on instruction-following tasks.

- The WPO (Weighted Preference Optimization) technique is detailed in a recent paper, with "hybrid" referring to a mix of human-generated and synthetic data in the preference optimization dataset.

- AlpacaEval 2.0 may need updating, as it currently uses GPT4-1106-preview for human preference benchmarking. Suggestions include using gpt-4o-2024-08-06 and validating with claude-3-5-sonnet-20240620.

- The gemma-2-9b-it-WPO-HB model, available on Hugging Face, has outperformed both gemma-2-9b-it-simpo and llama-3-70b-it on different leaderboards, prompting interest in further testing.

- New upstage release: SOLAR-Pro-PT (Score: 33, Comments: 10): Upstage has released SOLAR-Pro-PT, a new pre-trained model available on Hugging Face. The model is accessible at upstage/SOLAR-Pro-PT, though detailed information about its capabilities and architecture is currently limited.

- Users speculate SOLAR-Pro-PT might be an upscaled Nemo model. The previous SOLAR model impressed users with its performance relative to its size.

- The model's terms and conditions prohibit redistribution but allow fine-tuning and open-sourcing of resulting models. Some users suggest fine-tuning it on empty datasets to create quantized versions.

- There's anticipation for nousresearch to fine-tune the model, as their previous Open Hermes solar fine-tunes were highly regarded for coding and reasoning tasks.

- Ollama Alternative for Local Inference Across Text, Image, Audio, and Multimodal Models (Score: 54, Comments: 34): The Nexa SDK is a new toolkit that supports local inference across text, audio, image generation, and multimodal models, using both ONNX and GGML formats. It includes an OpenAI-compatible API with JSON schema for function calling and streaming, a Streamlit UI for easy testing and deployment, and can run on any device with a Python environment, supporting GPU acceleration. The developers are seeking community feedback and suggestions for the project, which is available on GitHub at https://github.com/NexaAI/nexa-sdk.

- ROCm support for AMD GPUs was requested, with the developers planning to add it in the next week. The SDK already supports ONNX and GGML formats, which have existing ROCm compatibility.

- A user compared Nexa SDK to Ollama, suggesting improvements such as ensuring model accuracy, providing clear update information, and improving the model management and naming conventions.

- Suggestions for Nexa SDK include using K quantization as default, offering I matrix quantization, and improving the model listing and download experience to show different quantizations hierarchically.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Developments and Releases

- Salesforce's xLAM-1b model surpasses GPT-3.5 in function calling: A 1 billion parameter model achieving 70% accuracy in function calling, outperforming GPT-3.5 despite its smaller size.

- Phi-3 Mini update with function calling: Rubra AI released an updated Phi-3 Mini model with function calling capabilities, competitive with Mistral-7b v3.

- Reflection API controversy: A sonnet 3.5 wrapper with prompt engineering was marketed as a new model, leading to discussions about AI hype and verification.

AI Research and Applications

- Virotherapy for breast cancer: A virologist successfully treated her own recurring breast cancer using experimental virotherapy, raising discussions about medical ethics and self-experimentation.

- Waymo robotaxi progress: Waymo is providing 100,000 robotaxi rides per week but not yet profitable, drawing comparisons to early-stage strategies of companies like Uber and YouTube.

- AI-generated video creation: A demonstration of creating an AI-generated video using multiple tools including ComfyUI, Runway GEN.3, and SUNO for music generation.

AI Development Tools and Visualization

- TensorHue visualization library: An open-source Python library for tensor visualization compatible with PyTorch, JAX, TensorFlow, Numpy, and Pillow, designed to simplify debugging of tensor contents.

AI Ethics and Societal Impact

- AI-generated art evaluation: A discussion on shifting focus from identifying AI-generated art to assessing its quality, highlighting the evolving perception of AI in creative fields.

AI Industry and Market Trends

- Data growth and AI training: Michael Dell claims the amount of data in the world is doubling every 6-7 months, with Dell Technologies possessing 120,000 petabytes compared to 1 petabyte used in advanced AI model training.

Memes and Humor

- A humorous video about OpenAI's release cycle and the anticipation for new models.

AI Discord Recap

A summary of Summaries of Summaries GPT4O (gpt-4o-2024-05-13)

1. AI Model Performance

- Reflection 70B underwhelms: Reflection 70B's performance lagged behind Llama 3.1 in benchmarks, raising skepticism about its capabilities, with independent tests showing lower scores and delayed weight releases.

- Matt Shumer acknowledged issues with the uploaded weights on Hugging Face, promising a fix soon.

- DeepSeek Coder struggles: Users reported DeepSeek Coder malfunctioning and providing zero responses, indicating possible upstream issues despite the status page showing no problems.

- This added to existing frustrations over API limitations and service inconsistencies.

- CancerLLM and MedUnA advance medical AI: CancerLLM and MedUnA are enhancing clinical applications and medical imagery, supported by benchmarks like TrialBench.

- Discussions emphasized diving deeper into medical papers to improve research visibility.

2. AI Tools and Integrations

- Aider improves workflow efficiency: Community members shared their Aider workflows, integrating tools like CodeCompanion for streamlined project setups and emphasizing clear planning.

- A refined system prompt is expected to enhance output consistency in Aider.

- OpenInterpreter's resource management woes: While the 01 app allows quick access to audio files, users face performance variability on Mac, leading to inconsistent outcomes.

- One user indicated a preference for plain OpenInterpreter due to the 01 app's stability problems.

3. Open Source AI Developments

- GitHub Open Source AI panel: GitHub is hosting a free Open Source AI panel next Thursday (9/19) at their San Francisco office, discussing access, democratization, and the impact of open source on AI.

- Panelists include representatives from Ollama, Nous Research, Black Forest Labs, and Unsloth AI.

- Finegrain's open-source image segmentation model: Finegrain released an open-source image segmentation model outperforming closed-source alternatives, available under the MIT License on Hugging Face.

- Future improvements include a subtler prompting method for enhanced disambiguation beyond simple bounding boxes.

4. Benchmarking and Evaluation

- Overfitting concerns in model training: Concerns were raised about overfitting, with benchmarks often misleading and models inevitably experiencing overfitting regardless of size, leading to skepticism about benchmark reliability.

- A member expressed hope for their article on benchmark issues to be reviewed at NeurIPS, highlighting evaluation challenges.

- Benchmark limitations acknowledged: Insights were shared on benchmark limitations, with members noting they remain crucial for comparisons despite flaws.

- Discussions emphasized the necessity of diverse benchmarks to gauge AI models, pointing out risks of overfitting to certain datasets.

5. AI Community Events

- Berlin AI Hackathon: The Factory Network x Tech: Berlin AI Hackathon is scheduled for September 28-29 at Factory Berlin Mitte, aiming to gather 50-100 builders motivated to drive AI-driven innovations.

- Participants can improve existing products or initiate new projects in a collaborative environment.

- LLVM Developer Meeting: The upcoming Fall LLVM Developer Meeting in October will feature 5 talks by Modular on topics including Mojo and GPU programming.

- Recorded sessions will be available on YouTube following the event, generating excitement among attendees.

PART 1: High level Discord summaries

HuggingFace Discord

- Hugging Face Inference API Troubles: Users are facing 'bad credentials' errors when accessing private models via the Hugging Face Inference API, often without helpful logs.

- Suggested solutions involve verifying API token setups and reviewing recent updates affecting functionality.

- Fine-Tuning Models on Hugging Face: Discussions indicated that models fine-tuned on Hugging Face might not always upload correctly, leading to missing files in repositories.

- Users recommended scrutinizing configurations and managing larger models during conversion processes for optimal results.

- Challenges in AI Art Generation: The community shared experiences about generating quality AI art, highlighting persistent issues with limb and hand representations.

- Simpler, cheesier prompts were suggested as surprisingly more effective in yielding desirable results.

- Universal Approximation Theorem Insights: Members analyzed the Universal Approximation Theorem, referencing Wikipedia for foundational details.

- Discussions revealed limitations in Haykin's work and better generalizations from Leshno et al. addressing continuity.

- Exploring Medical AI Advances: Recent updates featured CancerLLM and MedUnA for their roles in clinical applications, alongside benchmarks like TrialBench.

- Members expressed enthusiasm for delving deeper into medical papers, enhancing the visibility of significant research.

aider (Paul Gauthier) Discord

- DeepSeek struggles with benchmark accuracy: Users voiced concerns about DeepSeek Coder performance, indicating it may be using the incorrect model ID, leading to poor stats on the dashboard.

- Both model IDs currently point to DeepSeek 2.5, which may be contributing to the benchmarking issues.

- Aider improves workflow efficiency: Community members shared their Aider workflows, integrating tools like CodeCompanion for streamlined project setups and emphasizing clear planning.

- The introduction of a refined system prompt is expected to enhance output consistency in Aider.

- Reflection 70B falls short against Llama3 70B: Reflection 70B scored 42% on the code editing benchmark, while Llama3 70B achieved 49%; the modified version of Aider lacks necessary functionality with certain tags.

- For further details, check out the leaderboards.

- V0 update shows strong performance metrics: Recent updates to v0, tailored for NextJS UIs, have demonstrated remarkable capabilities, with users sharing a YouTube video showcasing its potential.

- For more insights, visit v0.dev/chat for demos and updates.

- Concerns over AI's impact on developer jobs: Members expressed worries about how advanced AI tools could potentially alter the developer role, raising questions over job oversaturation and relevance.

- As AI continues to evolve, there's rising tension regarding the workforce's future in development.

OpenRouter (Alex Atallah) Discord

- Reflection API Available for Playtesting: The Reflection API is now available for free playtesting on OpenRouter, with notable performance differences between hosted and internal versions.

- Matt Shumer expressed that the hosted API is currently not fully optimized and a fixed version is anticipated shortly.

- ISO20022 Gains Attention in Crypto: Members are urged to explore ISO20022 as it could significantly influence financial transactions amid crypto developments.

- The discussion highlighted the standard's implications, reflecting a growing interest in its relevance to the evolving financial landscape.

- DeepSeek Coder Faces API Malfunctions: Users reported that the DeepSeek Coder is providing zero responses and malfunctioning, indicating possible upstream issues despite the status page showing no reported problems.

- This complication adds to frustrations surrounding existing API limitations and inconsistencies in service availability.

- Base64 Encoding Workaround for Vertex AI: A workaround was devised for JSON upload issues with Vertex AI; users are now advised to convert the entire JSON into Base64 before submission.

- This technique, drawn from a GitHub PR discussion, streamlines the transfer process.

- Integration of Multi-Modal Models: Technicians inquired about methods for combining local images with multi-modal models, focusing on request formatting for proper integration.

- Guidance was provided on encoding images into base64 format to facilitate direct API interactions.

Stability.ai (Stable Diffusion) Discord

- LoRA vs Dreambooth Showdown: LoRAs are compact and easily shareable, allowing for runtime combinations, whereas Dreambooth generates much larger full checkpoints.

- Both training methods thrive on limited images, with Kohya and OneTrainer leading the way, and Kohya taking the crown for popularity.

- Budget GPU Guide Under $600: For local image generation, users suggest considering a used 3090 or 2080 within a $600 budget to boost VRAM-dependent performance.

- Increasing VRAM ensures better results, especially for local training tasks.

- The Backward Compatibility Hail Mary: There is a plea for new Stable Diffusion models to maintain backward compatibility with SD1.5 LoRAs, as SD1.5 is still favored among users.

- Conversations underline SD1.5's strengths in composition, with many asserting that newer models have yet to eclipse its effectiveness.

- Content Creation Critique: Influencers vs Creators: A critique surfaced regarding the influencer culture that pressures content creators into monetizing via platforms like Patreon and YouTube.

- Some community members yearn for a shift back to less commercialized content creation, while balancing the reality of influencer marketing.

- LoRAs Enhance Image Generation: Users highlighted that improving details in AI-generated images depends heavily on workflow enhancements rather than merely on prompting, with LoRAs proving essential.

- Many incorporate combinations like Detail Tweaker XL to maximize results in their image productions.

LM Studio Discord

- Users express concerns over LM Studio v0.3: Feedback on LM Studio v0.3 reveals disappointment over the removal of features from v0.2, sparking discussions about potential downgrades.

- Concerns about missing system prompts and adjusting settings led developers to assure users that updates are forthcoming.

- Model configuration bugs impact performance: Users face issues with model configurations, particularly regarding GPU offloading and context length settings, affecting the assistant's message continuity.

- Solutions suggested involve tweaking GPU layers and ensuring dedicated VRAM, as one user experienced context overflow errors.

- Interest in Training Small Language Models: Discussion focused on the viability of training smaller language models, weighing dataset quality and parameter counts against anticipated training loss.

- Challenges specific to supporting less common languages and obtaining high-quality datasets were highlighted by multiple members.

- Navigating LM Studio server interactions: Users clarified that sending API requests is essential for interacting with the LM Studio server rather than a web interface.

- One user found success after grasping the correct API request format, resolving their earlier issues.

- Excitement for Apple Hardware: Speculation surrounds Apple's upcoming hardware announcements, particularly regarding the 5090 GPU and its capabilities compared to previous models.

- Expectations suggest that Apple will maintain dominance with innovative memory architectures in the next wave of hardware.

Perplexity AI Discord

- Cancellation of Subscriptions Sparks Outrage: Users are frustrated with the cancellation of their subscriptions after using leaked promo codes, with reports of limited support responses from Perplexity's team.

- Many are seeking clarification on this issue, feeling left in the dark about their subscription status.

- Model Usage Limit Confusion Reigns: Clarification is needed regarding imposed limits on model usage, with pro users facing a cap of 450 queries and Claude Opus users only 50.

- Questions are arising about how to accurately specify the model in use during interactions, pointing to a lack of straightforward guidance.

- API Responses Lack Depth: Users noticed that API responses are short and lack the richness of web responses, raising concerns about the default response format.

- They are looking for suggestions on adjusting parameters to enhance the API output, indicating potential areas for improvement.

- Payment Method Errors Cause Frustration: Numerous users reported authentication issues with their payment methods when trying to set up API access, with various errors across multiple cards.

- This problem appears to be widespread, as others noted similar payment challenges, particularly with security code error messages.

- Web Scraping Alternatives Emerge: Discussions have shifted towards alternatives to Perplexity's functionality, citing other search engines like You.com and Kagi that utilize web scraping.

- These options are gaining attention for effectively addressing issues related to knowledge cutoffs and inaccuracies in generated responses.

Cohere Discord

- Cohere tech tackles moderation spam: Members highlighted how Cohere's classification tech effectively filters out crypto spam, maintaining the integrity of server discussions.

- One user remarked, 'It's a necessary tool for enjoyable conversations!', emphasizing the bot's importance.

- Wittgenstein launches LLM web app: A member shared the GitHub link to their newly coded LLM web app, expressing excitement for feedback.

- They confirmed that the app uses Langchain and is available on Streamlit, now deployed in the cloud.

- Concerns about crypto scammers: Members voiced frustrations over crypto scams infiltrating the AI space, impacting the reputation of legitimate advancements.

- It was noted by an enthusiast how such spam tarnishes AI's credibility in broader discussions.

- Exploring Cohere products and their applications: Members expressed interest in Cohere products, pointing to customer use cases available regularly on the Cohere blog.

- Usage insights and starter code can be found in the cookbooks, inspiring members' projects.

- Invalid raw prompt and API usage challenges: Members discussed a 400 Bad Request error associated with the

raw_promptingparameter while clarifying how to configure outputs.- A member noted, 'Understanding chat turns is critical', reinforcing the need for clarity in API documentation.

Nous Research AI Discord

- Reflection 70B's Underwhelming Benchmarks: Recent evaluations reveal that Reflection 70B scores 42% on the aider code editing benchmark, falling short of Llama 3.1 at 49%.

- This discrepancy has led to skepticism regarding its capabilities and the delayed release of some model weights, raising questions about transparency.

- Medical LLM Advancements in Oncology: Highlighted models like CancerLLM and MedUnA enhance applications in oncology and medical imagery, showing promise in clinical environments.

- Initiatives like OpenlifesciAI's thread detail their impact on improving patient care.

- AGI Through RL Training: Discussion emphasized that AGI may be achievable through intensive training combined with reinforcement learning (RL).

- However, doubts persist about the efficacy of transformers in achieving Supervised Semantic Intelligence (SSI).

- PlanSearch Introduces Diverse LLM Outputs: Scale SEAL released PlanSearch, a method improving LLM reasoning by promoting output diversity through natural language search.

- Hugh Zhang noted this enables deeper reasoning at inference time, representing a strategic shift in model capabilities.

- Scaling Models for Enhanced Reasoning: Scaling larger models may address reasoning challenges by training on diverse, clean datasets to improve performance.

- Concerns remain regarding resource demands and the current limitations of cognitive simulations in achieving human-like reasoning.

CUDA MODE Discord

- Together AI's MLP Kernels outperform cuBLAS: Members discussed how Together AI's MLP kernels achieve a 20% speed enhancement, with observations on SwiGLU driving performance. The conversation hinted at further insights from Tri Dao at the upcoming CUDA MODE IRL event.

- This sparked inquiries on efficiency metrics compared to cuBLAS and prompted exchanges on achieving competitive speedups in machine learning frameworks.

- ROCm/AMD Falling Behind NVIDIA: Discussions raised concerns about why ROCm/AMD struggles to capitalize on the AI boom compared to NVIDIA, with members questioning corporate trust issues. Despite PyTorch's compatibility with ROCm, community consensus suggests NVIDIA's hardware outperforms in real-world applications.

- Such insights have led to speculations about the strategic decisions AMD is making in the ever-evolving GPU marketplace.

- Triton Matmul Integration Shows Potential: The Thunder channel session highlighted the application of Triton Matmul, focusing on real-world integration with custom kernels. For those interested, a recap is available in a YouTube video.

- Members expressed enthusiasm for the deployment of fusing operations and teased future application to the Liger kernel.

- AMD's UDNA Architecture Announcement: At IFA 2024, AMD introduced UDNA, a unified architecture merging RDNA and CDNA, aiming to better compete against NVIDIA's CUDA ecosystem. This strategic pivot indicates a commitment to enhancing performance across gaming and compute sectors.

- Moreover, AMD's decision to deprioritize flagship gaming GPUs reflects a broader strategy to expand their influence in diverse GPU applications, moving away from a narrow focus on high-end gaming.

- Concerns with PyTorch's ignore_index: It was confirmed that the handling of

ignore_indexin Cross Entropy avoids invalid memory access, managing conditions effectively with early returns. Test cases demonstrating proper handling reassured concerned members.- This exchange pinpointed the essentiality of robust testing in kernel implementations, particularly as performance tuning discussions continued to evolve.

OpenAI Discord

- Reflection Llama-3.1 Claims Top Open Source Title: The newly released Reflection Llama-3.1 70B model is claimed to be the best open-source LLM currently available, utilizing Reflection-Tuning to enhance reasoning capabilities.

- Users reported earlier issues have been addressed, encouraging further testing for improved outcomes.

- Clarifications on OpenAI's Mysterious 'GPT Next': Members were skeptical about GPT Next being a new model, which OpenAI clarified was just figurative terminology with no real implications.

- Despite clarification, frustration remains regarding the lack of concrete updates amid rising expectations.

- Hardware Needs for Running Llama 3.1 70B: To successfully operate models like Llama 3.1 70B, users need a high-spec GPU PC or Apple Silicon Mac with at least 8GB of VRAM.

- Experiences on various setups highlighted that inadequate resources severely hamper performance.

- Enhancing AI Outputs with Prompt Engineering: Members recommended using styles like 'In the writing style of Terry Pratchett' to creatively boost AI responses, showcasing prompt adaptability.

- Structured output templates and defined chunking strategies were emphasized for effective API interactions.

- Debating AI for Stock Analysis: Caution arose over using OpenAI models for stock analysis, advocating against reliance solely on prompts without historical data.

- Discussions pointed towards the necessity of real-time updates and traditional models for comprehensive evaluations.

Modular (Mojo 🔥) Discord

- Integrating C with Mojo via DLHandle: Members discussed how to integrate C code with Mojo using

DLHandleto dynamically link to shared libraries, allowing for function calls between the two.- An example was provided where a function to check if a number is even was executed successfully after being loaded from a C library.

- LLVM Developer Meeting Nuggets: The upcoming Fall LLVM Developer Meeting in October will feature 5 talks by Modular on topics including Mojo and GPU programming.

- Attendees expressed excitement, with recorded sessions expected to be available on YouTube following the event.

- Subprocess Implementation Aspirations: A member expressed interest in implementing Subprocess capabilities in the Mojo stdlib, indicating a push to enhance the library.

- Concerns were raised about the challenges of setting up development on older hardware, emphasizing resource difficulties.

- DType's Role in Dict Keys: Discussion focused on why

DTypecannot serve as a key in a Dict, noting DType.uint8 as a value rather than a type.- Members mentioned that changing this implementation could be complex due to its ties with SIMD types having specific constraints.

- Exploration of Multiple-precision Arithmetic: Members discussed the potential for multiple-precision integer arithmetic packages in Mojo, referencing implementations akin to Rust.

- One participant shared a GitHub link showing progress on a

uintpackage for this capability.

- One participant shared a GitHub link showing progress on a

Eleuther Discord

- DeepMind's Resource Allocation Shift: A former DeepMind employee indicated that compute required for projects relies heavily on their product-focus, especially post-genai pivot.

- This insight stirred discussions on how foundational research might face reduced resources, as noted by prevalent community skepticism.

- Scraping Quora Data Issues: Members examined the potential use of Quora's data in AI training datasets, acknowledging its value but raising concerns over its TOS.

- The discussion highlighted the possible infeasibility of scraping due to stringent regulations.

- Releasing TurkishMMLU Dataset: TurkishMMLU was officially released with links to the dataset and a relevant GitHub issue.

- This addition aims to bolster language model evaluation for Turkish, as outlined in a related paper.

- Insights on Power Law Curves in ML: Members discussed that power law curves effectively model performance scaling in ML, referencing statistical models related to scaling laws in estimation tasks.

- One member noted similarities between scaling laws for LLM loss and those in statistical estimation, indicating that mean squared error scales as N^(-1/2).

- Exploring Adaptive Transformers: A discussion focused on 'Continual In-Context Learning with Adaptive Transformers,' which allows transformers to adapt to new tasks using prior knowledge without parameter changes.

- This technique aims for high adaptability while minimizing catastrophic failure risks, attracting attention across various domains.

Interconnects (Nathan Lambert) Discord

- Reflection API Performance Questioned: The Reflection 70B model faced scrutiny, suspected to have been simply a LoRA trained on benchmark sets atop Llama 3.0; claims of top-tier performance were misleading due to flawed evaluations.

- Initial private API tests yielded better results than public versions, raising concerns over inconsistencies across releases.

- AI Model Release Practices Critiqued: Debates emerged on the incompetence surrounding significant model announcements without robust validation, leading to community distrust regarding AI capabilities.

- Members urged the industry to enforce stricter evaluation standards before making claims public, noting a troubling trend in inflated expectations.

- OpenAI's Transition to Anthropic Stirs Talks: Discussion centered on OpenAI co-founder John Schulman’s move to Anthropic, described as surreal and highlighting transitions within leadership.

- The light-hearted remark about frequent mentions of 'from OpenAI (now at Anthropic)' captures the shift in community dynamics.

- Speculative Buzz Around GPT Next: Speculation arose from a KDDI Summit presentation regarding a model labeled GPT Next, which OpenAI clarified was just a figurative placeholder.

- A company spokesperson noted that the graphical representation was merely illustrative, not indicative of a timeline for future releases.

- Internal Bureaucracy Slowing Google Down: An ex-Googler voiced concerns over massive bureaucracy in Google, citing numerous internal stakeholders stymying effective project execution.

- This sentiment underscores challenges employees face in large organizations where internal politics often hinder productivity.

Latent Space Discord

- AI Codex Boosts Cursor: The new AI Codex for Cursor implements self-improvement features like auto-saving insights and smart categorization.

- Members suggested that a month of usage could unveil valuable learning outcomes about its efficiency.

- Reflection API Raises Eyebrows: The Reflection API appears to function as a Sonnet 3.5 wrapper, reportedly filtering out references to Claude to mask its identity.

- Various evaluations suggest its performance may not align with claims, igniting inquiry about the benchmarking methodology.

- Apple's Bold AI Advances: Apple's recent event teased substantial updates to Apple Intelligence, hinting at a potentially improved Siri and an upcoming AI phone.

- This generated excitement around competitive implications, as many members called for insights from Apple engineers.

- New Enum Mode Launches in Gemini: Logan K announced the advent of Enum Mode in the Gemini API, enhancing structured outputs by enabling selection from predefined options.

- This innovation looks to streamline decision-making for developers interacting with the Gemini framework.

- Interest in Photorealistic LoRA Model: A user showcased a photorealistic LoRA model that's captivating the Stable Diffusion community with its detailed capabilities.

- Discussions surrounding its performance, particularly unexpected anime images, have garnered significant attention.

OpenInterpreter Discord

- OpenInterpreter's resource management woes: While the 01 app allows quick access to audio files, users face performance variability on Mac, leading to inconsistent outcomes.

- One user indicated a preference for plain OpenInterpreter due to the 01 app's stability problems.

- Call for AI Skills in OpenInterpreter: Users are eager for the release of AI Skills for the standard OpenInterpreter rather than just the 01 app, showcasing a demand for enhanced functionality.

- Frustration echoed regarding the 01 app's performance relative to the base OpenInterpreter.

- Discontinuation and Refunds for 01 Light: The team announced the official end of the 01 Light, focusing on a free 01 app and processing refunds for all hardware orders.

- Disappointment was prevalent among users eagerly waiting for devices, but assurance was given regarding refund processing through help@openinterpreter.com.

- Scriptomatic’s triumph with Open Source Models: A member successfully integrated Scriptomatic with structured outputs from open source models and plans to submit a PR soon.

- They expressed appreciation for the support provided for Dspy, emphasizing their methodical approach involving grepping and printing.

- Instructor Library Enhances LLM Outputs: The Instructor library was shared, designed to simplify structured outputs from LLMs using a user-friendly API based on Pydantic.

- Instructor is poised to streamline validation, retries, and streaming, bolstering user workflows with LLMs.

LlamaIndex Discord

- Deploy Agentic System with llama-deploy: Explore this full-stack example of deploying an agentic system as microservices with LlamaIndex and getreflex.

- This setup streamlines chatbot systems, making it a go-to for developers wanting efficiency.

- Run Reflection 70B Effortlessly: You can now run Reflection 70B directly from LlamaIndex using Ollama, given your laptop supports it (details here).

- This capability allows hands-on experimentation without extensive infrastructure requirements.

- Build Advanced RAG Pipelines: Check out this guide for building advanced agentic RAG pipelines with dynamic query routing using Amazon Bedrock.

- The tutorial covers all necessary steps to optimize RAG implementations effectively.

- Automate Financial Analysis Workflows: A blog post discusses creating an agentic summarization system for automating quarterly and annual financial analysis (read more).

- This approach can significantly boost efficiency in financial reporting and insights.

- Dynamic ETL for RAG Environments: Learn how LLMs can automate ETL processes with data-specific decisions, as outlined in this tutorial.

- This method enhances data extraction and filtering by adapting to different dataset characteristics.

Torchtune Discord

- Gemma Model Configuration Updates: To configure a Gemma 9B model using Torchtune, users suggested modifying the

modelentry in the config with specific parameters found in config.json.- This approach leverages the component builder, aiming for flexibility across various model sizes.

- Gemma 2 Support Challenges in Torchtune: Discussion arose around difficulties in supporting Gemma 2 within Torchtune, mainly due to issues with logit-softcapping and bandwidth constraints.

- The burgeoning architecture improvements in Gemma 2 have generated a backlog of requested features waiting for implementation.

- Proposed Enhancements for Torchtune: A potential bug concerning padding sequence behavior in Torchtune was highlighted alongside a proposed PR to fix the issue by clarifying the flip method.

- The goal is to achieve feature parity with the torch pad_sequence, enhancing overall library functionality.

- Cache Handling During Generation Needs Refinement: Users discussed the need for modifications in cache behavior during generation, proposing the use of

torch.inference_modefor consecutive forward calls in attention modules.- Despite this, they acknowledged that an explicit flag for

.forward()might yield a more robust solution.

- Despite this, they acknowledged that an explicit flag for

- Chunked Linear Method Implementation Reference: A member shared interest in a clean implementation of chunked linear combined with cross-entropy from a GitHub gist as a potential enhancement for Torchtune.

- Integrating this method may pose challenges due to the library's current separation of the LM-head from loss calculations.

LangChain AI Discord

- Struggling with .astream_events() Decoding: Users reported challenges with decoding streams from .astream_events(), especially the tedious manual serialization through various branches and event types.

- Participants highlighted the lack of useful resources, calling for a reference implementation to ease the burdens of this process.

- Gradio Struggles with Concurrency: After launching Gradio with 10 tabs, only 6 requests generated despite higher concurrency limits, hinting at potential configuration issues.

- Users pointed out the hardware limitations, suggesting the need for further investigation into handling concurrent requests.

- Azure OpenAI Integration Facing 500 Errors: A user is dealing with 500 errors when interacting with Azure OpenAI, prompting queries about endpoint parameters.

- Advice included validating environment variables and naming conventions to potentially resolve these troubleshooting headaches.

- VAKX Offers No-Code AI Assistant Building: VAKX was introduced as a no-code platform enabling users to build AI assistants, with features like VAKChat integration.

- Members were encouraged to explore VAKX and the Start Building for Free link for quick setups.

- Selenium Integrated with GPT-4 Vision: An experimental project demonstrated the integration of Selenium with the GPT-4 vision model, with a detailed process available in this YouTube video.

- Interest sparked around leveraging this integration for more effective automated testing with vector databases.

OpenAccess AI Collective (axolotl) Discord

- Overfitting Concerns Take Center Stage: Members raised issues regarding overfitting, emphasizing that benchmarks can mislead expectations, suggesting that models inevitably experience overfitting regardless of size.

- “I don't believe benchmarks anymore” captured skepticism towards reliability in model evaluations based on inadequate data.

- Benchmark Limitations Under Scrutiny: Insights were shared on benchmark limitations, revealing that although flawed, they remain crucial for comparisons among models.

- A member expressed optimism for their article on benchmark issues to be reviewed at NeurIPS, highlighting current evaluation challenges.

- AI Tool Exposed as a Scam: A recently hyped AI tool turned out to be a scam, falsely claiming to compare with Claude 3.5 or GPT-4.

- Discussions stressed the time loss caused by such scams and their distracting nature across various channels.

- Urgent Inquiry on RAG APIs: A member urgently sought experiences with RAG APIs, needing immediate support for a project due to their model being unready.

- They highlighted the challenges of 24/7 hosting costs and sought alternatives to manage their AI projects effectively.

- H100's 8-Bit Loading Limitations Questioned: A member queried why the H100 does not support loading models in 8-bit format, seeking clarity on this limitation.

- They reiterated the urgency for insights into the H100's constraints regarding 8-bit model loading.

LAION Discord

- Berlin AI Hackathon Promises Innovation: The Factory Network x Tech: Berlin AI Hackathon is scheduled for September 28-29 at Factory Berlin Mitte, aiming to gather 50-100 builders motivated to drive AI-driven innovations.

- Participants can improve existing products or initiate new projects in a collaborative environment, fostering creative approaches.

- Finegrain's Open-Source Breakthrough: Finegrain released an open-source image segmentation model outperforming closed-source alternatives, available under the MIT License on Hugging Face.

- Future improvements include a subtler prompting method for enhanced disambiguation and usability beyond simple bounding boxes.

- Concrete ML Faces Scaling Issues: Discussions highlighted that Concrete ML demands Quantization Aware Training (QAT) for effective integration with homomorphic encryption, resulting in potential performance compromises.

- Concerns about limited documentation were raised, especially in its applicability to larger models in machine learning.

- Free Open Source AI Panel Event: GitHub will host an Open Source AI panel on September 19 in SF, featuring notable panelists from organizations like Ollama and Nous Research.

- While free to attend, registration is prerequisite due to limited seating, making early sign-up essential.

- Multimodality in AI Captivates Interest: The rise of multimodality in AI has been underscored with examples like Meta AI transfusion and DeepMind RT-2, showcasing significant advancements.

- Discussion suggested investigating tool augmented generation employing techniques like RAG, API interactions, web searches, and Python executions.

DSPy Discord

- LanceDB Integration PR Submitted: A member raised a PR for LanceDB Integration to add it as a retriever for handling large datasets in the project.

- They requested feedback and changes from a specific user for the review process, emphasizing collaboration in enhancements.

- Mixed feelings on GPT-3.5 deprecation: Members discussed varying user experiences with models following the deprecation of GPT-3.5, noting inconsistent performance, especially with open models like 4o-mini.

- One user suggested using top closed models as teachers for lower ones to improve performance consistency.

- AttributeError Plagues MIPROv2: A user reported encountering an

AttributeErrorin MIPROv2, indicating a potential issue in theGenerateModuleInstructionfunction.- Discussion circled around suggested fixes, with some members pointing to possible problems in the CookLangFormatter code.

- Finetuning small LLMs Generates Buzz: A member shared success in finetuning a small LLM using a unique reflection dataset, available for interaction on Hugging Face.

- They provided a link while encouraging others to explore their findings in this domain.

- CookLangFormatter Issues Under Scrutiny: Members debated potential issues with the CookLangFormatter class, identifying errors in method signatures.

- Post-modifications, one user reported positive outcomes and suggested logging the issue on GitHub for future reference.

tinygrad (George Hotz) Discord

- WebGPU PR #6304 makes waves: The WebGPU PR #6304 by geohot marks a significant effort aimed at reviving webgpu functionality on Asahi Linux, with a $300 bounty attached.

- 'It's a promising start for the initiative,' noted a member, emphasizing the community’s excitement over the proposal.

- Multi-GPU Tensor Issues complicate development: Developers are encountering AssertionError with multi-GPU operations, which requires all buffers to share the same device.

- A frustrated user remarked, 'I've spent enough time... convinced this goal is orthogonal to how tinygrad currently handles multi-gpu tensors.'

- GGUF PRs facing delays and confusion: Concerns are rising regarding the stalled status of various GGUF PRs, which are lacking merges and clear project direction.

- One user inquired about a roadmap for GGUF, highlighting a need for guidance moving forward.

- Challenges in Model Sharding: Discussions unveiled issues with model sharding, where certain setups function on a single GPU yet fail when expanded across multiple devices.

- One user observed that 'George gave pushback on my workaround...', indicating a complex dialogue around solutions.

Gorilla LLM (Berkeley Function Calling) Discord

- xLAM Prompts Deviation from Standard: Members discussed the unique system prompt used for xLAM, as detailed in the Hugging Face model card.

- This prompted an analysis of how personalized prompts can diverge from the BFCL default.

- LLaMA Lacks Function Calling Clarity: Participants noted that LLaMA offers no documentation on function calling, raising concerns regarding prompt formats.

- Although classified as a prompt model, LLaMA's handling of function calling remains ambiguous due to inadequate documentation.

- GitHub Conflicts Cause Integration Delays: A user reported facing merge conflicts with their pull request, #625, obstructing its merger.

- After resolving the conflicts, they resubmitted a new pull request, #627 to facilitate integration.

- Exploring Model Evaluation via VLLM: A query arose regarding the evaluation of models after setting up the VLLM service.

- The inquiry reflects a significant interest in model assessment methodologies and best practices within the community.

- Introducing the Hammer-7b Handler: The community discussed the new Hammer-7b handler, emphasizing its features as outlined in the associated pull request.

- Detailed documentation with a CSV table highlights model accuracy and performance metrics.

LLM Finetuning (Hamel + Dan) Discord

- 4090 GPU enables larger models: With a 4090 GPU, engineers can run larger embedding models concurrently, including Llama-8b, and should consider version 3.1 for enhanced performance.

- This setup boosts efficiency in processing tasks and allows more complex models to operate smoothly.

- Hybrid Search Magic with Milvus: Discussions highlighted using hybrid search with BGE and BM25 on Milvus, demonstrated with an example from the GitHub repository.

- This example effectively illustrates the incorporation of both sparse and dense hybrid search for improved data retrieval.

- Boost Results with Reranking: Implementing a reranker that utilizes metadata for each chunk helps prioritize and refine result sorting.

- This method aims to enhance data handling, making retrieved information more relevant and accurate.

Alignment Lab AI Discord

- Understanding RAG Based Retrieval Evaluation: A member inquired about necessary evaluation metrics for assessing a RAG based retrieval system within a domain-specific context.

- They were uncertain whether to compare their RAG approach to other LLMs or to evaluate against results without using RAG.

- Comparison Strategies for RAG: The same member pondered whether to conduct comparisons only with and without RAG or also against other large language models.

- This question sparked interest, prompting members to consider various approaches for evaluating the effectiveness of RAG in their projects.

MLOps @Chipro Discord

- GitHub Hosts Open Source AI Panel: GitHub is hosting a free Open Source AI panel next Thursday (9/19) at their San Francisco office, aimed at discussing access, democratization, and the impact of open source on AI.

- Panelists include representatives from Ollama, Nous Research, Black Forest Labs, and Unsloth AI, contributing to vital conversations in the AI community.

- Registration Approval Required for AI Panel: Attendees are required to register for the event, with registration subject to host approval to manage effective attendance.

- This process aims to ensure a controlled environment as interest in the event grows within the AI sector.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

> {% else %} > {% else %} >

OpenInterpreter ▷ #O1 (54 messages🔥):

Torch installation issues01 Light discontinuationRefund process for 0101 app launch detailsUsing OpenInterpreter

- Struggles with Torch Installation: Many users encountered issues installing Torch 2.3.1 using Poetry, leading to RuntimeError stating there are no installation candidates. A user shared that switching Python versions and even updating their VS Code seemed to resolve it for them.

- Ohhhhh boy have I had that same problem described their journey of repeatedly attempting to fix the issue.

- Discontinuation of 01 Light: The 01 Light has been officially discontinued, and the team announced they will be refunding all hardware orders while launching a free 01 app. This decision aimed to enable the software team to focus on advancing their platform without compromising software capabilities.

- Users expressed disappointment about the discontinuation, especially those who had been eagerly waiting for their devices.

- Refund Process for 01 Hardware: Users inquired about the refund policy for the 01 Light with assurances that refunds are being processed by emailing help@openinterpreter.com. Some users worried about receiving refunds if their purchases were made via gift cards.

- Mikebirdtech confirmed that refunds are available, stating, Now worries, you'll get your money back.

- Launch of 01 App: The team announced the launch of a free 01 app, stating it retains all functionalities of the 01 Light. They encouraged users to try the app despite the discontinuation of the hardware device.

- Creative responses acknowledged that smartphones can perform similar functions, making the discontinuation less critical.

- Running OpenInterpreter on Different Platforms: Some users asked about running the app on iOS and Windows, indicating interest in cross-platform compatibility. Concerns were raised about issues related to Poetry configurations, especially when missing a

pyproject.tomlfile.- Users offered tips while grappling with the intricacies of managing virtual environments and running commands.

- Tweet from killian (@hellokillian): Today we're discontinuing the 01 light, refunding everyone, and launching a free 01 app. We're also open-sourcing all our manufacturing materials + a major 01.1 update. Why? Focus. This soft...

- Open Interpreter - It should have been an app: Official changelog for the open-source Open Interpreter project.

OpenInterpreter ▷ #ai-content (5 messages):

Scriptomatic with open source modelsInstructor Python library

- Scriptomatic integrates with Open Source Models: A member reported successfully getting Scriptomatic to work with structured output from open source models, indicating that they will submit a PR soon.

- They expressed gratitude for the framework provided for Dspy and noted their process involved a lot of grepping and printing.

- Instructor Library Enhances LLM Outputs: A message shared a link to the Instructor library, which simplifies working with structured outputs from LLMs using a user-friendly API built on Pydantic.

- Instructor promises to streamline validation, retries, and streaming responses for users looking to improve their LLM workflows.

- YouTube Resource for Scriptomatic: A member posted a link to a YouTube video that worked for them in relation to the ongoing discussions about Scriptomatic.

- This resource seems to be aimed at helping others navigate the tools discussed in the channel.

Link mentioned: instructor: structured outputs for llm

LlamaIndex ▷ #blog (9 messages🔥):

Agentic System DeploymentRunning Reflection 70BAdvanced RAG PipelinesAutomating Financial AnalysisDynamic ETL for RAG

- Deploy Agentic System with llama-deploy: If you're looking for an end-to-end example of deploying an agentic system as microservices, check out this full-stack example using @getreflex and LlamaIndex.

- It simplifies the process significantly and is perfect for developers who want to streamline their chatbot systems.

- Run Reflection 70B Effortlessly: You can now run Reflection 70B using Ollama directly from LlamaIndex if your laptop supports it, as mentioned here.

- This allows developers to experiment with this advanced model without needing extensive infrastructure.

- Build Advanced RAG Pipelines: A guide is available for building advanced agentic RAG pipelines using Amazon Bedrock that includes dynamic query routing and top-k vector search.

- This comprehensive tutorial covers everything needed to optimize RAG implementations.

- Automate Financial Analysis with Agentic Workflows: This blog post discusses how to build an agentic summarization system to automate quarterly and annual financial analysis, which can aggregate results effectively (read more).

- Such automation can greatly enhance efficiency in financial reporting and decision-making.

- Dynamic ETL with LLMs: Instead of fixed chunking, LLMs can automate the ETL processes in RAG environments with data-specific decisions, as demonstrated in this tutorial (link).

- This approach streamlines data extraction and filtering, adapting to the characteristics of different datasets.

LlamaIndex ▷ #general (51 messages🔥):

Cohere RerankerLlamaIndex Node PostprocessorsLlama Parse Service StatusLlamaIndex Structured OutputsUsing Llama 3 with LlamaIndex

- Using Cohere Reranker with Azure: A user inquired about utilizing the Cohere reranker as a node postprocessor in Azure AI studio, referencing potential issues with existing imports.

- Another member confirmed that Azure doesn't currently have a dedicated rerank module, but mentioned that creating one is feasible as the base class is simple.

- Understanding LlamaIndex Workflows: A member asked about the differences between passing data through Context and setting instance properties in LlamaIndex workflows, seeking clarification on persistence across runs.

- It was explained that Context is not shared between nested workflows to promote modularity, whereas setting attributes on 'self' retains data across multiple runs.

- Llama Parse Service Status Update: Concerns were raised about the operational status of the Llama Parse service, prompting updates and current status indications from participants.

- As of the latest updates, the service appeared to be back online but still showed some degradation due to a backlog in processing.

- Structured Outputs Support in LlamaIndex: A user inquired if LlamaIndex supports structured outputs with OpenAI, which was confirmed to be supported with specific usage instructions provided.

- In addition, links to documentation were shared to illustrate how to implement structured prediction using LlamaIndex with OpenAI.

- Examples of Llama 3 Usage with LlamaIndex: A user sought examples of working with Llama 3 using LlamaIndex instead of OpenAI, reflecting a need for resources focused on this integration.

- One member directed them to relevant documentation that details how to set up and utilize Llama 3 with LlamaIndex effectively.

- Node Postprocessor - LlamaIndex: no description found

- Starter Tutorial (Local Models) - LlamaIndex: no description found

- Ollama - Llama 3.1 - LlamaIndex: no description found

- LlamaIndex Status: no description found

- Self-querying | 🦜️🔗 LangChain: Head to Integrations for documentation on vector stores with built-in support for self-querying.

- OpenAI - LlamaIndex: no description found

Torchtune ▷ #general (25 messages🔥):

Gemma model configurationSupport for gemma 2PR for torchtune adjustmentsTokenizer eos problem

- Gemma Model Configuration Updates: To configure a Gemma 9B model using Torchtune, a member suggested replacing the

modelentry in the config with specific parameters includingvocab_size,num_layers, and more.- This utilizes the underlying component builder for Gemma, aiming for versatility in model sizes based on values from the config.json.

- Support Challenges for Gemma 2: A discussion highlighted obstacles in supporting Gemma 2 in Torchtune primarily due to the logit-softcapping and bandwidth issues.

- It was noted that the enhancements in Gemma 2 architecture have not yet been requested, increasing the backlog of features to implement.

- PR Proposal for Torchtune Improvements: A member identified a potential bug in Torchtune regarding padding sequence behavior and proposed a PR to rectify it.

- They suggested modifying the flip method for clarity and aimed to ensure feature parity with torch pad_sequence.

- Clarification Needed on Dataset Return Types: Concerns were raised about the misleading return types in the ConcatDataset implementation in Torchtune, which might require defining a consistent type for all datasets.

- The discussion also mentioned that while Torchtune excludes support for negative indexing, the reasoning behind this decision was questioned.

- Tokenizer Eos Problem in Mistral & Gemma: A member offered to submit a PR to address the eos token issue but noted that the current Mistral and Gemma tokenizers lack the

add_eosoption.- This highlights a limitation in the tokenizer's capabilities that could affect implementations relying on end-of-sequence tokens.

- pytorch/test/nn/test_packed_sequence.py at main · pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration - pytorch/pytorch

- GitHub - pytorch/torchtune: A Native-PyTorch Library for LLM Fine-tuning: A Native-PyTorch Library for LLM Fine-tuning. Contribute to pytorch/torchtune development by creating an account on GitHub.

- torchtune/torchtune/data/_collate.py at main · pytorch/torchtune: A Native-PyTorch Library for LLM Fine-tuning. Contribute to pytorch/torchtune development by creating an account on GitHub.

- torchtune/torchtune/datasets/_concat.py at main · pytorch/torchtune: A Native-PyTorch Library for LLM Fine-tuning. Contribute to pytorch/torchtune development by creating an account on GitHub.

Torchtune ▷ #dev (32 messages🔥):

Compiling Generation MethodsCache Handling During GenerationHandling Non-Contiguous InputsTensor.is_inference() Method ProposalProposed Implementation of Chunked Linear + CE

- Compiling Generation Methods for Speed: The user aims to utilize

torch.compileforgenerate_next_tokento enhance generation speeds, akin to their previous successes with the PPO loss step.- However, they report no expected speedups, possibly due to issues like activation checkpointing and non-contiguous inputs.

- Cache Handling During Generation Discussion: Discussion revolves around the need for consecutive forward calls in attention modules to behave differently based on cache status during generation.

- They propose using

torch.inference_modebut acknowledge that passing an explicit flag to.forward()might be the better approach.

- They propose using

- Proposing Tensor.is_inference() Method: The user proposes implementing a

Tensor.is_inference()method to better manage caching behavior across multiple forward calls.- Despite interest, they are concerned about the challenges of integrating this change into existing maintainers' workflows.

- Implementation Concerns Regarding Attributes: There's a suggestion to add a toggle attribute to the model for checking caching behavior without modifying the

.forward()signature.- Concerns were raised about potential issues with

torch.compilewhen mutating non-Tensor module attributes.

- Concerns were raised about potential issues with

- Clean Implementation of Chunked Linear + CE: A member referenced a clean implementation of chunked linear combined with cross-entropy from a GitHub gist as a point of interest.

- They noted that integrating a similar approach into torchtune may prove difficult due to its separation of the LM-head and the loss calculation.

- chunked_lce.py: chunked_lce.py. GitHub Gist: instantly share code, notes, and snippets.

- [dynamo] Add support for tensor's is_complex method by YangQun1 · Pull Request #124927 · pytorch/pytorch: This PR is to add support for tensor's is_complex method in dynamo. Take the following code as an example: def test_tensor_is_complex(x): if x.is_complex(): return x + 1...

LangChain AI ▷ #general (41 messages🔥):

Decoding .astream_events()Gradio Upload LimitationsLangChain Azure IntegrationData Set Creation StrategiesAudio Transcription with Claude

- Frustrations with Decoding .astream_events(): Users are encountering challenges decoding streams from .astream_events(), with one mentioning that manual serialization through all branches and event types is tedious.

- A participant inquired about finding a reference implementation, highlighting the lack of good resources on the topic.

- Gradio Concurrency Issues: A user noted that after launching Gradio with 10 tabs open, only 6 requests began generating, indicating limits despite setting a higher concurrency limit.

- Despite high token rates, it seems the hardware is not handling more than 6 concurrent requests, signaling a potential configuration or limitation issue.

- Troubleshooting Azure OpenAI Integration: A user reported facing a 500 error when trying to interact with Azure OpenAI, seeking advice on parameters and possible endpoint issues.

- Another member pointed out that validating environment variables and naming conventions, especially around endpoints, could resolve the issues.

- Creating Datasets from Diverse Documents: A user asked whether to build individual datasets for different sets of documents or save input text alongside the corresponding documents in one dataset.

- This highlights a common dilemma in dataset creation regarding efficiency and organization.

- Exploring Audio Processing Capabilities of Claude: Discussion around whether it's possible to pass audio data to Claude's 3.5 LLM using Langchain for transcription purposes sparked some interest.

- Participants noted that while Claude supports image input, there was uncertainty about audio functionality.

LangChain AI ▷ #share-your-work (9 messages🔥):

VAKX platformSelenium and GPT-4 vision integrationAI Reddit Manager toolMocking LLM embedderRAG chatbot using OpenAI and LangChain

- VAKX: Your No-Code Assistant Builder: A member introduced VAKX, a no-code LLM orchestration platform that enables users to build AI assistants quickly. They invited feedback and provided links to explore the platform further: VAKX and Start Building for Free.

- They highlighted features like VAKChat integration for adding AI-powered chat to sites and outlined simple setup steps to engage users.

- Selenium Meets GPT-4 Vision: A member shared their experimental project integrating Selenium and the GPT-4 vision model, detailing the integration process in this YouTube video. They also provided a link to their GitHub repository containing the code: GitHub Repository.

- Discussion ensued about the purpose of this integration, focusing on benefits for integration testing with vector databases instead of using live embedding models.

- Create Posts with AI Reddit Manager: A member showcased their AI Reddit Manager that autonomously curates and posts content to subreddits using the Lyzr Agent API and Streamlit. They aimed to save time by generating posts based on specific topics, although their linked Medium article is currently a broken link.

- They provided a YouTube link to demonstrate their tool's functionality: YouTube Video.

- Guide on Mocking LLM Embedder: A member wrote a guide on how to mock an LLM embedder for integration testing with MongoDB Atlas, available here. They spoke about the challenges faced when using live embedding models during integration.

- Discussion included clarifying that the goal of this work is integration testing rather than focusing on the embedding model itself, facilitating integration with LangChainGo.

- RAG Chatbot Embracing OpenAI and LangChain: A member introduced their RAG chatbot utilizing OpenAI and LangChain, available for users at AdaletGPT. They encouraged members to reach out for assistance as needed.

- This chatbot represents an application of recent AI advancements for engaging conversation and interaction.

- no title found: no description found

- no title found: no description found

- Integrating Selenium and gpt-4 vision: In this recording I show a use case where I integrated GPT4 vision model with selenium.code:https://github.com/rajib76/browser_agent

- no title found: no description found

- VAKX | Empower Your Documentation with AI-Powered Assistance: no description found

OpenAccess AI Collective (axolotl) ▷ #general (33 messages🔥):

Overfitting in ModelsBenchmark LimitationsScam in AI ToolRAG APIs

- Overfitting Throughout Training: Concerns were raised about overfitting by a member, highlighting that benchmarks can be misleading and that models will get overfitted regardless of their size.

- “I don't believe benchmarks anymore” reflects skepticism about the reliability of models evaluated on insufficient data.