[AINews] AI Engineer Summit Day 1

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI Engineers are all you need.

AI News for 2/19/2025-2/20/2025. We checked 7 subreddits, 433 Twitters and 29 Discords (211 channels, and 6423 messages) for you. Estimated reading time saved (at 200wpm): 647 minutes. You can now tag @smol_ai for AINews discussions!

Day 1 of AIE Summit has concluded here in NYC.

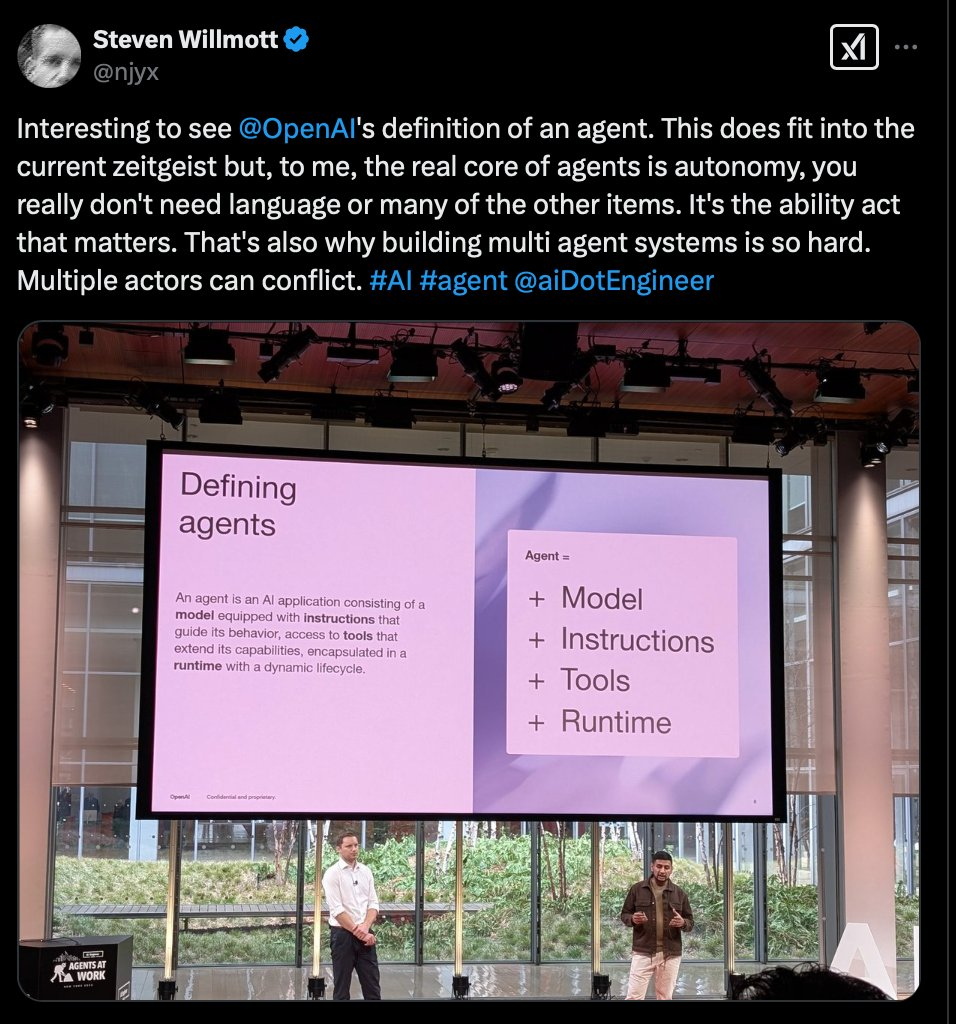

If you forced us to pick only 3 talks to focus on, check out Grace Isford's Trends Keynote, Neo4j/Pfizer's presentation, and OpenAI defining Agents for the first time. $930m of funding was announced by speakers/sponsors. Multiple Anthropic datapoints went semi-viral.

You can watch back the full VOD here:

Day 2 will focus on Agent Engineering, while Day 3 will have IRL workshops and the new Online track.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

Models, Benchmarks, and Performance

- Grok-3 Performance and Capabilities: @BorisMPower reported that o3-mini is better in every eval compared to Grok 3, stating that Grok 3 is decent but oversold. This sparked discussion with @ibab from xAI who responded that they used the same evaluation methods. @Yuhu_ai_ from xAI defended Grok 3's performance, claiming their mini model surpassed o3-mini high in AIME 2024, GPQA, and LCB for pass@1, and that benchmarks don't fully capture model intelligence. @aidan_mclau criticized Grok 3's chart presentation as "chart crimes". @itsclivetime shared initial positive experiences with Grok 3, noting its speed in Deep Research, but also mentioned slower coding and occasional crashes. @nrehiew_ defended xAI's evaluation reporting, saying it follows OpenAI's practices and the issue was clarity, not deception. @teortaxesTex expressed surprise at the grief received for bullishness on Grok. @EpochAIResearch noted Grok-3's record compute scale, estimating 4e26 to 5e26 FLOP, making it the first released model trained on over 1e26 FLOP.

- o3-mini Performance and CUDA Kernel Issue: @giffmana highlighted that o3-mini figured out an issue with Sakana AI's CUDA kernels in 11 seconds, revealing a bug that made it appear 150x faster when it was actually 3x slower. @giffmana emphasized lessons learned: straightforward CUDA code is unlikely to outperform optimized kernels, inconsistent benchmarks indicate problems, and o3-mini is highly effective for debugging. @main_horse also benchmarked and found Sakana AI's claimed 150x speedup to be actually 3x slower, pointing to issues with their CUDA kernel.

- DeepSeek R1 Capabilities and Training: @teortaxesTex mentioned a "R1-inspired Cambrian explosion in RL", noting its scientific recipe is similar to other top labs, highlighting a shift away from demoralizing "hopeless BS". @togethercompute promoted DeepSeek-R1 as an open-source alternative to proprietary models, offering fast inference on NVIDIA GPUs. @andrew_n_carr shared a cool fact about DeepSeek's training, noting a batch size of ~60M tokens for 14 trillion tokens, contrasting with Llama 1's smaller batch size.

- Qwen 2.5-VL Model Release: @Alibaba_Qwen announced the tech report for Qwen2.5-VL, detailing its architecture and training, highlighting its capability alignment with Qwen2.5-72B and industry-leading visual semantic parsing. @Alibaba_Qwen also released AWQ quantized models for Qwen2.5-VL in 3B, 7B, and 72B sizes. @_akhaliq shared the Qwen2.5-VL Technical Report drop. @arankomatsuzaki also announced the Qwen2.5-VL Technical Report release. @_philschmid detailed how Qwen Vision Language Models are trained, emphasizing dynamic resolution processing and a redesigned Vision Transformer.

- SmolVLM2 Video Models: @mervenoyann announced SmolVLM2, "world's smollest video models" in 256M, 500M, and 2.2B sizes, including an iPhone app, VLC integration, and a highlights extractor. @reach_vb highlighted SmolVLM2, Apache 2.0 licensed VideoLMs ranging from 2.2B to 256M, noting they can run on a free Colab and even an iPhone. @awnihannun promoted SmolVLM2's day-zero support for MLX and MLX Swift, enabling local runs on Apple devices.

- Helix VLA Model for Robotics: @adcock_brett announced the technical report for Helix, a generalist Vision-Language-Action (VLA) model. @adcock_brett described Helix's architecture as "System 1, System 2", with a 7B parameter VLM and an 80M parameter visuomotor policy, running on embedded GPUs. @adcock_brett showcased Helix robots picking up household items, and @adcock_brett detailed Helix coordinating a 35-DoF action space at 200Hz. @adcock_brett presented two robots collaboratively storing groceries using Helix. @adcock_brett emphasized Helix's human-like thinking and generalization capabilities for robotics. @adcock_brett introduced Helix as "AI that thinks like a human", aiming for robots in homes.

- SholtoBench AGI Benchmark: @nearcyan announced SholtoBench, a new AGI benchmark tracking Sholto Douglas's (@_sholtodouglas) AGI lab employment. @nearcyan provided a link to the official SholtoBench website and thanked anonymous contributors.

- AIME 2025 Performance Chart: @teortaxesTex shared a "definitive Teortaxes edition" performance chart for AIME 2025, comparing models like o3-mini, Grok-3, DeepSeek-R1, and Gemini-2 FlashThinking. @teortaxesTex commented on labs releasing "asinine, deformed charts" to claim SoTA. @teortaxesTex presented a compilation of AIME 2025 results, aiming for clarity over "chart crimes".

- Grok DeepSearch Evaluation: @casper_hansen_ found Grok DeepSearch "pretty good", noting its query expansions and questioning its comparison to OpenAI's DeepResearch.

- LLM Scaling Laws and Data Quality: @JonathanRoss321 discussed LLM scaling laws, arguing that improvement can continue with better data quality, even if internet data is exhausted, citing AlphaGo Zero's self-play as an example of synthetic data driving progress.

- FlexTok Image Tokenizer: @iScienceLuvr highlighted FlexTok, a new tokenizer from Apple and EPFL, projecting 2D images into variable-length 1D token sequences, allowing for hierarchical and semantic compression.

- Vision Language Model Training: @_philschmid explained how Vision Language Models like @Alibaba_Qwen 2.5-VL are trained, detailing pre-training phases (ViT only, Multimodal, Long-Context) and post-training (SFT & DPO).

- vLLM Speedup with DeepSeek's Module: @vllm_project announced vLLM v0.7.3 now supports DeepSeek's Multi-Token Prediction module, achieving up to 69% speedup boost.

Open Source and Community

- Open Source AI Models: @togethercompute affirmed their belief that "the future of AI is open source", building their cloud company around open-source models and high-performance infrastructure. @_akhaliq congratulated @bradlightcap and suggested open models could further enhance their success. @cognitivecompai expressed love for new Apache 2.0 drops from @arcee_ai.

- Hugging Face Inference Support Expansion: @_akhaliq announced Hugging Face Inference providers now support over 8 different providers and close to 100 models.

- LangChain Agent Components and Open Deep Research: @LangChainAI promoted Interrupt conference with speakers from Uber sharing reusable agent components with LangGraph. @LangChainAI introduced Open Deep Research, a configurable open-source deep researcher agent. @LangChainAI highlighted Decagon's AI Agent Engine, used by companies like Duolingo and Notion, in a fireside chat.

- Unsloth Memory Efficient GRPO: @danielhanchen announced memory savings of up to 90% for GRPO (algorithm behind R1) in @UnslothAI, achieving 20K context length GRPO with 54GB VRAM versus 510GB in other trainers.

- Lumina2 LoRA Fine-tuning Release: @RisingSayak announced Lumina2 LoRA fine-tuning release under Apache 2.0 license.

- Offmute Open Source Meeting Summarization: @_philschmid presented Offmute, an open-source project using Google DeepMind Gemini 2.0 to transcribe, analyze, and summarize meetings, generating structured reports and key points.

- SongGen Open-Source Text-to-Music Model: @multimodalart announced SongGen, joining YuE as an open-source text-to-music model, similar to Suno, allowing users to create songs from voice samples, descriptions, and lyrics.

Research and Development

- AI CUDA Engineer - Agentic CUDA Kernel Optimization: @DrJimFan highlighted Sakana AI's "AI CUDA Engineer," an agentic system that produces optimized CUDA kernels, using AI to accelerate AI. @omarsar0 broke down Sakana AI's AI CUDA Engineer, explaining its end-to-end agentic system for kernel optimization. @SakanaAILabs announced the "AI CUDA Engineer," an agent system automating CUDA kernel generation, potentially speeding up model processing by 10-100x, and releasing a dataset of 17,000+ CUDA kernels. @omarsar0 detailed the Agentic Pipeline of the AI CUDA Engineer, including PyTorch to CUDA conversion and evolutionary optimization. @omarsar0 mentioned the availability of an archive of 17000+ verified CUDA kernels created by the AI CUDA Engineer.

- Thinking Preference Optimization (TPO): @_akhaliq shared a link to research on Thinking Preference Optimization.

- Craw4LLM for Efficient Web Crawling: @_akhaliq posted about Craw4LLM, efficient web crawling for LLM pretraining.

- RAD for Driving Policy via 3DGS-based RL: @_akhaliq shared RAD research on training an end-to-end driving policy using large-scale 3DGS-based Reinforcement Learning.

- Autellix - Efficient Serving Engine for LLM Agents: @_akhaliq highlighted Autellix, an efficient serving engine for LLM agents as general programs.

- NExT-Mol for 3D Molecule Generation: @_akhaliq shared NExT-Mol research on 3D Diffusion Meets 1D Language Modeling for 3D Molecule Generation.

- Small Models Learning from Strong Reasoners: @_akhaliq linked to research on Small Models Struggling to Learn from Strong Reasoners.

- NaturalReasoning Dataset for Complex Reasoning: @maximelabonne introduced NaturalReasoning, a new instruction dataset designed to improve LLMs' complex reasoning without human annotation, emphasizing quality over quantity and diverse training data.

- Fine-grained Distribution Refinement for Object Detection: @skalskip92 introduced D-FINE, a "new" SOTA object detector using Fine-grained Distribution Refinement, improving bounding box accuracy through iterative edge offset adjustments and sharing precise distributions across network layers.

- BioEmu for Biomolecular Equilibrium Structure Prediction: @reach_vb highlighted Microsoft's BioEmu, a large-scale deep learning model for efficient prediction of biomolecular equilibrium structure ensembles, capable of sampling thousands of structures per hour.

Robotics and Embodiment

- Figure's Helix Humanoid Robot AI: Figure AI is developing Helix, an AI model for humanoid robots, showcased through various capabilities like grocery storage and object manipulation (tweets from @adcock_brett). They are scaling their AI team for Helix, Training Infra, Large Scale Training, Manipulation Engineer, Large Scale Model Evals, and Reinforcement Learning (@adcock_brett). They are aiming for production and shipping more robots by 2025, focusing on home robotics (@adcock_brett).

- 7B LLM on Robots vs. o3 for Math: @abacaj stated that "putting a 7B LLM on a robot is more interesting than using o3 to solve phd level math problems". @abacaj found a 7B parameter onboard vision-based LLM powering a robot "interesting and sort of expected", noting increased model capability. @abacaj humorously suggested "a 7B LLM will do your dishes, o3 won't".

- Skyfire AI Drone Saves Police Officer: @AndrewYNg shared a story about a Skyfire AI drone saving a police officer's life, by locating an officer in distress during a traffic stop, enabling rapid backup and intervention.

Tools and Applications

- Glass 4.0 AI Clinical Decision Support Platform: @GlassHealthHQ introduced Glass 4.0, their updated AI clinical decision support platform, featuring continuous chat, advanced reasoning, expanded medical literature coverage, and increased response speed.

- AI-Toolkit UI: @ostrisai shared progress on the AI-Toolkit UI, noting the "hard stuff is done" and UI cleanup is underway before adding "fun features".

- Gradio Sketch for AI App Building: @_akhaliq highlighted a new way to build AI apps using "gradio sketch," enabling visual component selection and configuration to generate Python code.

- Gemini App Deep Research: @GoogleDeepMind announced Deep Research is available in the Gemini App for Gemini Advanced users in 150 countries and 45+ languages, functioning as a personal AI research assistant.

- Elicit Systematic Reviews: @elicitorg introduced Elicit Systematic Reviews, supporting automated search, screening, and data extraction for research reviews, aiming to accelerate research with user control.

- PocketPal Mobile App with Qwen 2.5 Models: Qwen 2.5 models, including 1.5B (Q8) and 3B (Q5_0) versions, have been added to the PocketPal mobile app for both iOS and Android platforms. Users can provide feedback or report issues through the project's GitHub repository, with the developer promising to address concerns as time permits. The app supports various chat templates (ChatML, Llama, Gemma) and models, with users comparing performance of Qwen 2.5 3B (Q5), Gemma 2 2B (Q6), and Danube 3. The developer provided screenshots.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Qwen2.5-VL-Instruct excels in visual and video tasks

- Qwen/Qwen2.5-VL-3B/7B/72B-Instruct are out!! (Score: 489, Comments: 75): Qwen2.5-VL offers significant enhancements, including improved visual understanding for recognizing objects, text, charts, and layouts within images, and agentic capabilities that allow it to reason and interact with tools like computers and phones. It also features long video comprehension for videos over an hour, visual localization with accurate object identification and localization, and structured output generation for complex data such as invoices and forms, making it highly applicable in finance and commerce. Links to the models are available on Hugging Face.

- Users noted the release of Qwen2.5-VL and its AWQ versions, with some confusion about its timing. Recoil42 highlighted the potential impact of its long video comprehension feature in the video industry, while others discussed the substantial VRAM requirements for processing long videos, particularly with the 70B model.

- Benchmark results for different model sizes and quantizations were shared, including performance metrics like MMMU_VAL, DocVQA_VAL, and MathVista_MINI, showing variations between BF16 and AWQ quantizations. The 3B, 7B, and 72B models were compared, with AWQ generally showing slightly lower performance than BF16.

- Users discussed compatibility and support issues, including whether ollama or llama.cpp support the model, and shared solutions for running the model on different platforms like MLX on Mac and TabbyAPI on Nvidia/Linux. There was also discussion about the exl2 format and its compatibility with newer Nvidia hardware.

Theme 2. Reverb-7b Outperforms in Open LLM Leaderboards

- New AI Model | Ozone AI (Score: 164, Comments: 54): Reverb-7b, the latest AI model from Ozone AI, has been released, showcasing significant improvements in 7B model performance. Trained on over 200 million tokens from Claude 3.5 Sonnet and GPT-4o, and fine-tuned from Qwen 2.5 7b, Reverb-7b surpasses other 7B models on the Open LLM Leaderboard, particularly excelling in the MMLU Pro dataset with an average accuracy of 0.4006 across various subjects. More details and the model can be found on Hugging Face, and upcoming models include a 14B version currently under training.

- Performance Concerns: There are concerns about Reverb-7b's creative writing capabilities, with users noting it performs poorly in this area despite its high MMLU Pro scores, which suggest a focus on STEM subjects rather than diverse word knowledge.

- Model Differentiation: The model is a fine-tune of Qwen 2.5 7b, with improvements in intelligence and creative writing over previous versions, as noted by users comparing it to models like llama 3.1 8B.

- Dataset and Releases: The dataset remains closed due to profit motives, though there are future plans for openness. Reverb-7b's GGUF version was released on Hugging Face, and users have converted it to mlx format for broader accessibility.

Theme 3. SmolVLM2: Compact models optimizing video tasks

- SmolVLM2: New open-source video models running on your toaster (Score: 104, Comments: 15): SmolVLM2 has been released by Merve from Hugging Face, offering new open-source vision language models in sizes 256M, 500M, and 2.2B. The release includes zero-day support for transformers and MLX, an iPhone app using the 500M model, VLC integration for description segmentation using the 2.2B model, and a video highlights extractor also based on the 2.2B model. More details can be found in their blog.

- Zero-shot vision is explained as the capability of a vision model to perform tasks without direct training for those specific tasks, by leveraging general knowledge. An example given is classifying images for new labels specified at test time.

- Users express appreciation for Hugging Face's work on small models, noting the impressive performance of SmolVLM2 despite its compact size. The model's integration and utility in various applications are highlighted as significant achievements.

- Merve provides links to the blog and collection of checkpoints and demos for SmolVLM2, facilitating further exploration and use of the model.

Theme 4. Open-source AI agents tackling new frontiers

- Agent using Canva. Things are getting wild now... (Score: 125, Comments: 47): The post discusses an AI agent using Canva and potentially bypassing CAPTCHAs, indicating advanced capabilities in automating tasks that typically require human interaction. The absence of a detailed post body suggests reliance on the accompanying video for further context.

- The AI agent showcased in the post has the capability to bypass CAPTCHAs, though skepticism remains about the authenticity of such demos, with advice to verify by personal use. The project is open-sourced and available on GitHub.

- There is interest in the agent's compatibility with other multimodal models beyond OpenAI, with confirmation that it can work with other open-source models, although performance may vary. Running costs can be managed by renting a GPU for approximately $1.5 per hour.

- The setup for using Canva with the AI requires detailed instructions, indicating a trial-and-error process. Concerns about the agent's adaptability to interface changes were raised, highlighting the need for precise control details in prompts or a knowledge base.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Multi-modal AI Systems: Bridging Text and Vision

- "Actually.. on a second thought" ahh AI (Score: 103, Comments: 44): The post discusses a common error in AI's understanding of numerical data, specifically how AI can misinterpret decimal numbers. The example given shows a comparison between 9.11 and 9.9, illustrating that 9.9 is larger because 0.90 is greater than 0.11, emphasizing the importance of correctly parsing decimal components.

- Human-like Confusion: The discussion highlights that the initial confusion in AI's interpretation of numbers is similar to how humans might misinterpret at first glance, but humans can quickly analyze and correct their understanding.

- AI's Self-Correction: Users noted instances where AI, like ChatGPT, acknowledges its mistakes midway through responses, similar to human behavior when realizing an error.

- Humor in Misinterpretation: Comments humorously compare the numerical misinterpretation to other contexts, such as physical size or dates, and joke about AI's tendency to cover up mistakes like humans do.

AI Discord Recap

A summary of Summaries of Summaries by o1-preview-2024-09-12

Theme 1. Grok 3 Steals the Spotlight from OpenAI

- Grok 3 Crushes Coding Tasks ChatGPT Can't Handle: Users report Grok 3 solves complex coding problems that ChatGPT Pro struggles with, prompting many to consider switching to SuperGrok.

- SuperGrok Offers Premium AI at a Bargain Price: At $30/month, SuperGrok is seen as a better value than ChatGPT Pro's $250/month subscription, leading users to reevaluate their AI service choices.

- Grok 3 Becomes the Community's New 'Bestie': Enthusiastic users call Grok 3 their "bestie" due to its performance, speed, and user-friendly interface, with many praising its unlimited API and upcoming features.

Theme 2. Unsloth's GRPO Algorithm Slashes VRAM Requirements

- Train GRPO Models with Just 5GB VRAM—No Magic Required!: Unsloth releases new algorithms enabling 10x longer context lengths and 90% less VRAM, allowing training with only 5GB VRAM without accuracy loss.

- Community Cheers Unsloth's VRAM-Saving Breakthrough: Users express excitement and gratitude, sharing improvements while using Unsloth's Google Colab notebooks for their projects.

- Llama 3.1 Training Gets 90% VRAM Reduction: Unsloth's GRPO algorithm reduces Llama 3.1 VRAM requirements from 510.8GB to 54.3GB, inspired by Horace He's gradient checkpointing techniques.

Theme 3. AI CUDA Engineer's Wild Speedup Claims Raise Eyebrows

- 'AI CUDA Engineer' Claims 100x Speedup, Engineers Cry Foul: Sakana AI launches an AI system boasting 10-100x speedups in CUDA kernel optimization, but skeptics point out flawed baselines and fundamental bugs.

- 'NOP Kernels' Win the Race—But Do Nothing!: Members uncover that some kernels achieve speedups by effectively doing nothing, highlighting instances of reward hacking and questioning the system's validity.

- Overhyped AI Kernels Get Roasted by the Community: Experts debunk the impressive speedups, revealing errors like memory reuse and incorrect evaluations; the AI isn't ready to replace human CUDA engineers yet.

Theme 4. Microsoft's Quantum Leap with Majorana 1 Meets Skepticism

- Microsoft Promises Million-Qubit Future with Majorana 1 Chip: Microsoft unveils the world's first quantum processor powered by topological qubits, aiming for scalability to one million qubits.

- Topological Qubits Explained—or Are They?: In a YouTube video, Microsoft's team discusses topological qubits, but some remain skeptical about their practical applications requiring helium fridges.

- Nadella Hypes Quantum, Users Groan Over Teams: While Satya Nadella promotes Microsoft's quantum breakthroughs, users express frustration with existing products like Teams and Copilot, questioning Microsoft's focus on innovation over product quality.

Theme 5. AI Companies Bag Big Bucks, Betting on Inference Boom

- Lambda Lands $480M to Power the AI Cloud: Lambda announces a $480 million Series D to bolster its AI computing resources, aiming to be the go-to cloud service tailored for AI.

- Arize AI Raises $70M to Perfect AI Evaluation: Arize AI secures funding to advance AI evaluation and observability, ensuring AI agents operate reliably at scale.

- Baseten and Together Compute Bet Big on 2025 Inference Boom: Baseten raises $75M and Together Compute bags $305M, both gearing up for what they see as a pivotal year for AI inference technologies.

PART 1: High level Discord summaries

OpenAI Discord

- Grok 3 Outshines OpenAI Models: Grok 3 is showing superior performance compared to OpenAI's models, particularly in benchmarks and resolving coding tasks that ChatGPT Pro struggles with.

- Users express increased confidence in Grok 3's capabilities, reporting it solves complex problems that o1 Pro cannot, and are considering switching to SuperGrok.

- SuperGrok Offers Better Subscription Value: At $30 USD per month, SuperGrok is seen as offering better value compared to ChatGPT Pro’s $250 USD subscription.

- Users perceive SuperGrok as having advantages in terms of performance and usage limits, causing many to reevaluate their AI service subscriptions.

- Grok's Voice Mode Anticipation: Community members are anticipating upcoming features for Grok, such as voice mode and custom instructions, believing they will further enhance its utility and competitiveness.

- The Grok 3 model's API is noted for its unlimited capabilities, allowing for extensive interactions without the strict limits seen in some other models. They are actively seeking more integrations.

- Propose saving Chat URLs to return to valuable discussions: One member proposed saving the URL of the chat to easily return to valuable discussions, encouraging others to share their ideas in the designated channel for OpenAI to see them.

- They also recommended using keywords like 'good1' or 'Track this chat' to help remember significant chats.

- Prompt Engineering Troubleshooting Anticipated: A member expressed eagerness for a call to determine if the issues are due to prompt or the software malfunctioning, which is taking too much time than expected.

- The same member thanked others for their helpful advice, stating they will keep the insights in mind for future reference, but needing something else for a particular case.

Codeium (Windsurf) Discord

- DeepSeek-V3 Grants Unlimited Access!: DeepSeek-V3 is now unlimited for Windsurf Pro and Ultimate plan users, providing unrestricted access with 0 prompt credits and 0 flow action credits.

- Windsurf encouraged users to check this tweet to see more about this change.

- MCP Use Cases Spark Excitement: Matt Li shared MCP content, encouraging users to explore its potential on X, highlighting the community's desire for engagement.

- A quick demo illustrates how MCP can work within Cascade, serving as a resource for those still exploring its capabilities.

- Codeium Plugin Faces EOL Speculation: Users voiced concerns about the JetBrains Codeium plugin potentially being unsupported, expressing frustration over its perceived lack of direction.

- One user lamented, It's a shame to see Codeium as a plugin be abandoned.

- Cascade's Memory System Needs Love: Users are encouraged to use commands such as 'add to memory' and 'update memory' to help Cascade remember project details, while the proposed structure of global rules into separate files aims to improve Cascade's performance.

- There has been discussion on the strengths of DeepSeek v3 versus Cascade Base.

- Windsurf Users Await Support: Users report delays in receiving responses to support tickets, including the lack of auto-replies with expected ticket numbers in the subject line.

- Confusion persists over the correct email source for support communications.

Unsloth AI (Daniel Han) Discord

- Unsloth Unleashes Long Context GRPO: Unsloth has released Long Context GRPO, enabling the training of reasoning models with just 5GB VRAM, promising 10x longer context lengths and 90% less VRAM usage, as noted in this Tweet.

- Users expressed excitement and shared their improvements while gratefully acknowledging Unsloth for providing free resources, such as this Google Colab.

- Training Loss Swings Cause Concern: Users have observed significant fluctuations in training loss during model training, which often stabilizes only after several epochs, with users making adjustments using this Google Colab.

- The community recommended adjusting the learning rate and maintaining clarity in training prompts to reduce overfitting and enhance learning outcomes, which is also mentioned in the Unsloth Documentation.

- 5090 Mobile Specs Spark Upgrade Fantasies: The RTX 5090 Mobile will feature 24GB of memory, and preorders are anticipated to begin next week.

- The announcement has stirred interest among community members who are actively contemplating hardware upgrades.

- Nuances of RAG vs Fine-tuning Revealed: A YouTube video titled "RAG vs. Fine Tuning (Live demo)" was shared which examines if fine tuning yields better results than traditional RAG systems.

- Viewers requested additional examples comparing RAG and fine tuning, hinting at a demand for more comprehensive insights in future demos; the creator indicated plans for a follow-up video detailing how to get started with Kolo.

- Triton's Custom Assembly Works Wonders: Clarification was provided on what custom_asm_works refers to in the context of a challenge scoring system, explaining that it involves inline assembly in Triton, allowing for execution over a tensor without CUDA, as detailed in Triton documentation.

- This is being used as a technique to improve cohesion timing concerns for hardware and is a focus of current work.

LM Studio Discord

- Hunyuan Image Gen Demands VRAM: The Hunyuan model for image generation is now available but requires at least 24GB of VRAM and works primarily on NVIDIA cards, taking several minutes to generate video content.

- Users are keen to test Hunyuan's capabilities against other platforms.

- A100 GPUs for AI Tasks: Users discussed the utility of A100 GPUs with LM Studio, highlighting their 80GB VRAM capacity for AI tasks.

- Despite the potential costs, there's significant interest in acquiring A100s to boost performance.

- AMD Ryzen AI Max+ CPU Rivals RTX 4090: The Ryzen AI Max+ specs have garnered interest, with claims they beat Nvidia RTX 4090 at LLM Work as seen in this article.

- Skepticism remains about their real-world performance compared to existing GPUs, pending independent benchmarks.

- Apple Silicon Criticized for Soldered Components: Discussions around Apple's soldering of components in laptops, limiting repairability and upgrades. Discussion includes concern that integrated design trends limit memory configuration flexibility.

- Users voice a preference for systems allowing component upgrades.

- Speculative Decoding Dives: Speculative decoding with certain models may yield lower token acceptance rates and slower performance, according to user feedback.

- Users shared experiences with token acceptance and asked about optimal model setups for maximizing performance.

aider (Paul Gauthier) Discord

- Grok 3 Takes the Lead: Users are finding Grok 3 performs faster than GPT-4o, and some are canceling other subscriptions for it, calling Grok 3 their 'bestie' due to its performance, cheaper pricing, and user-friendly UI, according to this X post.

- Notably, Grok 3 is available for free (until their servers melt), per xAI's tweet, with increased access for Premium+ and SuperGrok users.

- Aider Faces Linux Argument Size Constraints: A user reported difficulty passing many files into Aider due to Linux argument size constraints, particularly with deeply nested directory paths.

- They suggested using a text file with

/loadcommands as a workaround, while noting the repo contains many small files, the length of the nested directory paths is a significant issue.

- They suggested using a text file with

- SambaNova Claims DeepSeek-R1 Efficiency Crown: SambaNova announced serving DeepSeek-R1 with significant speed and cost reductions compared to existing models, achieving 198 tokens per second, according to their press release.

- The claim positions DeepSeek-R1 as highly efficient, making significant strides in AI model application and implementation, per a Kotlin blog post.

- Aider Font Colors Spark Visibility Debate: Users raised concerns about the font color visibility in Aider, especially the blue color in light mode.

- Suggestions included checking dark mode settings and ensuring proper configurations to address the visibility problem.

- RAG Setup Superior Than AI Chat: A member stated the current RAG setup yields better results than the AI Chat RAG feature for their coding needs.

- Another member agreed, noting that normal RAG struggles with code and improvements are necessary.

Cursor IDE Discord

- Cursor IDE Sparking Debate: Users reported issues with Cursor's Sonnet 3.5 performance, expressing frustration over reliability compared to previous versions.

- In contrast, Grok 3 received praise for its speed and effectiveness in problem-solving during coding tasks, though some criticized its owner and past performance, along with its lack of API access; see Grok 3 is an...interesting model.

- MCP Servers Create Headaches: Users discussed the complications surrounding the setup and functionality of MCP servers within Cursor, with some finding it challenging to utilize effectively; check out Perplexity Chat MCP Server | Smithery.

- Community members suggested that improved documentation could enhance the user experience and streamline installation, noting that the MCP config is OSX and Linux specific, see issue #9 · anaisbetts/mcp-installer.

- AI Model Performance Questioned: Participants expressed dissatisfaction with the current performance of AI models, notably Claude, attributing inconsistencies in output to underlying prompting and context management issues.

- Variations in responses from LLMs are expected, highlighting the stochastic nature of these models, but some are hoping for better performance from Grok-3 and the new DeepSeek-V3 available in Windsurf Pro and Ultimate plans, see Tweet from Windsurf (@windsurf_ai).

- Developer tools trigger frustrations: Users reported challenges using the Cursor Tab, with some stating it introduced bugs during development that slowed workflows.

- The Cursor Composer was praised for generating stronger and more reliable code, but overall developers are looking forward to the next generation of Amazon and Anthropic models powered by the Rainier AI compute cluster, see Amazon announces new ‘Rainier’ AI compute cluster with Anthropic.

HuggingFace Discord

- Hugging Face Hardcover Hits the Shelves: Excitement is building around the release of a new Hugging Face-themed hardcover book, marking a year of teamwork celebrated in a recent blog post.

- Those interested should act fast to secure a copy.

- Qwen2.5 Achieves Training Breakthrough: Leveraging Unsloth's new algorithms, users can now train reasoning models with just 5GB of VRAM for Qwen2.5, achieving 10x longer context lengths and 90% less VRAM, showcased in this blog.

- These improvements provide practical tools for developers.

- HF Spaces Hosts Fast Video Generators: Discussion highlights the availability of video generators on HF Spaces, with ltxv noted as a standout for its speed, generating videos in just 10-15 seconds.

- There is a new plan for collaborations to create a video generator based on the latest releases.

- CommentRescueAI Speeds Up Python Doc Generation: CommentRescueAI, a tool that adds AI-generated docstrings and comments to Python code with a single click, is now available on the VS Code extension marketplace.

- The developer is seeking community input on ideas for improvement.

- Lumina2 Gets Fine-Tuned with LoRA: A new fine-tuning script for Lumina2 using LoRA is now available, enhancing capabilities for users under the Apache2.0 license, with more information in the documentation.

- This promotes open collaboration on AI technology.

Perplexity AI Discord

- Perplexity AI Users Battle Glitches: Users report frustrating experiences with the Perplexity AI app, citing lag, high resource consumption, and glitches during text generation, but the developers may be working on it.

- Concerns have specifically been raised about the model's performance, prompting inquiries into whether the development team is actively addressing these ongoing issues.

- Grok 3 Hallucinates Wildly, say Users: Discussion around Grok 3 revealed mixed feelings; some users feel it performs better than previous models, while others noted significant hallucinatory behavior.

- Users compared Grok 3 to Claude and O3 combinations, generally preferring Claude for more reliable performance.

- Mexico vs. Google Gulf Faceoff: In a bold move, Mexico has threatened Google regarding their operations near the Gulf, highlighting ongoing jurisdictional disputes.

- This conflict underscores the growing tension between tech companies and national regulators over the use of machine learning.

- Sonar API Struggles Stir Concerns: A user raised concerns over the Sonar API's performance, finding it to yield worse results than older models like llama-3.1-sonar-large-128k-online.

- This user reported that the legacy models perform better for tasks like fetching website information, expressing disappointment over the perceived decline in quality despite similar pricing.

- Deep Research API Rumored Soon: Members are inquiring about the potential for deep research capabilities to be integrated into the API, which could lead to exciting new functionalities.

- One user expressed enthusiasm, thanking the Perplexity team for their ongoing work in this area.

Interconnects (Nathan Lambert) Discord

- Saudi Arabia Launches ALLaM: The Saudi Arabia-backed ALLaM focuses on creating Arabic language models to support the ecosystem of Arabic Language Technologies, which represents a push for LLMs in the current geopolitical climate.

- The model can generate both Arabic and English text and has 70B parameters.

- Mercor raises $100M for AI Recruiting: Mercor raises $100 million for its AI recruiting platform, founded by young Thiel Fellows, highlighting its rapid growth and a valuation jump to $2 billion.

- Discussions centered on Mercor's innovative marketing drive amidst the competitive AI landscape.

- Innovative GRPO Algorithm reduces VRAM: Unsloth released a new GRPO algorithm that reduces VRAM requirements for Qwen2.5 training to just 5GB, marking a significant improvement.

- The algorithm enables 10x longer context lengths, offering streamlined setups that could revolutionize model training efficiency.

- Nadella promotes Microsoft, but product quality is questionable: In a recent YouTube video, Satya Nadella shares his skepticism about AGI while promoting economic growth and Microsoft's topological qubit breakthrough.

- Members expressed frustration, questioning how Satya Nadella can be viewed positively when Microsoft products like Teams and Copilot fall short.

OpenRouter (Alex Atallah) Discord

- Reasoning Tokens Ruffle Feathers: Users expressed dissatisfaction with low max_tokens in OpenRouter's implementation leading to empty or null responses when include_reasoning defaults to false.

- Proposed changes include setting include_reasoning to true by default and ensuring content is always a string, avoiding null values to improve response consistency, with community input being gathered via a poll.

- Weaver Extension Weaves Versatile Options: The Weaver Chrome extension provides highly configurable options like PDF support, cloud sync with Supabase, and direct API calls from the browser.

- While currently free and hosted on Vercel's free plan, it may face accessibility limitations due to usage limits, with no backend data logging.

- API Translator Turns Open Source: A user shared a newly developed open-source Chrome extension available via GitHub that allows users to transform any content into their preferred style.

- The tool only requires an OpenAI-compatible API to function.

- Gemini Output Glitches Generate Gripes: Users reported issues with the Gemini 2.0 Flash model's structured outputs, noting discrepancies compared to OpenAI's models when integrating with OpenRouter.

- Feedback suggests a need for clearer UI indications regarding model capabilities, especially concerning input types and error messages.

- DeepSeek's Performance Dips Alarmingly: Some users reported that DeepSeek models yield high-quality responses initially, but later responses deteriorated significantly within OpenRouter.

- Discussions addressed possible causes and mitigation strategies for the decline in response quality.

Nous Research AI Discord

- Grok3 Benchmarks Get Questioned: Doubts emerged around Grok3's performance and benchmarking, as members allege that xAI might have obfuscated data regarding cons@64 usage.

- Skeptics challenged claims of Grok3 outperforming state-of-the-art models and shared specific counterexamples.

- EAs for Neural Net Optimization?: The community debated using evolutionary algorithms for optimizing neural networks, considering slower convergence rates at scale due to high dimensionality.

- Members discussed using GAs for specific training pipeline components to improve model performance, contrasting this with traditional backpropagation.

- Coding Datasets Shared: Members shared coding datasets on Hugging Face, suggesting their use in augmenting existing models.

- The conversation underscored the importance of dataset quality and the possibility of reworking existing datasets with advanced reasoning models, such as NovaSky-AI/Sky-T1_data_17k.

- Agents Team Up to Refine: A member inquired about research on agents collaborating to refine ideas towards a goal, focusing on communication and methodologies.

- The conversation included references to personal experiments where agents discussed and refined processes to achieve specific outcomes, towards goal refinement.

- Equilibrium Propagation > Backprop?: The community explored equilibrium propagation as an alternative to backpropagation for training energy-based models, highlighting its ability to nudge predictions towards minimal error configurations as shown in Equilibrium Propagation: Bridging the Gap Between Energy-Based Models and Backpropagation.

- Discussions covered the parallels between equilibrium propagation and recurrent backpropagation, emphasizing potential applications in neural network training techniques, as discussed in Equivalence of Equilibrium Propagation and Recurrent Backpropagation.

Yannick Kilcher Discord

- Logits Outperform Probabilities for Training: Discussions highlighted that logits are more informative than normalized probabilities, suggesting unnecessary normalization may impede optimization.

- The consensus was that while probabilities are essential for decision-making, leveraging logit space could optimize training efficiency for specific models.

- Sparse Attention Gains Traction: Participants explored DeepSeek's paper on Native Sparse Attention, noting implications for both efficiency and enhanced contextual understanding.

- They appreciated DeepSeek's high research standards and ability to make findings accessible.

- Microsoft Enters Topological Qubit Arena: Microsoft introduced the Majorana 1, the first QPU utilizing topological qubits, aiming for scalability up to one million qubits, as reported on Microsoft Azure Quantum Blog.

- A YouTube video featuring the Microsoft team explains the significance of topological qubits and their potential to redefine quantum computing.

- Perplexity Breaches Censorship Barriers: Perplexity AI launched R1 1776, designed to bypass Chinese censorship in the Deepseek R1 model, employing specialized post-training techniques, according to The Decoder.

- This development showcases the increasing role of AI in navigating and overcoming regulatory restrictions.

- Google Launches PaliGemma 2: A Visionary Leap: Google unveiled PaliGemma 2 mix checkpoints, an enhanced vision-language model, available in various pre-trained sizes, documented in their blog post.

- Engineered for fine-tuning across diverse tasks, this model excels in areas like image segmentation and scientific question answering.

GPU MODE Discord

- Sakana AI's AI CUDA Engineer Automates Optimization: The AI CUDA Engineer automates the production of highly optimized CUDA kernels, claiming 10-100x speedup over common machine learning operations in PyTorch.

- The system also releases a dataset of over 17,000 verified CUDA kernels and a paper detailing its capabilities, though some users feel the paper may be overhyped due to weak baselines.

- Unsloth unveils 10x context and 90% VRAM savings: Unsloth announced new algorithms enabling training with just 5GB VRAM for Qwen2.5-1.5B models, achieving a 90% reduction in VRAM usage, detailed in their blog.

- Comparative benchmarks show that a standard GRPO QLoRA setup for Llama 3.1 at 20K context previously required 510.8GB VRAM, now reduced to 54.3GB by leveraging a previous gradient checkpointing algorithm inspired by Horace He's implementation.

- RTX 5080+ Faces Triton Compatibility Issues: A member shared their experience running RTX 5080+ on Triton with TorchRL, highlighting errors related to

torch.compiletriggering Triton issues, ultimately resolved by removing the PyTorch-triton installation.- This brought attention to the compatibility concerns that remain with Triton and PyTorch interactions.

- Raw-Dogged Tensors Yield Permutation Victory: A member proposed a new nomenclature called a raw-dogged Tensor, aimed at aligning storage format with MMA_Atom thread layout, noting a significant reduction in permutation complexity.

- Another member confirmed using this approach for int8 matmul, emphasizing its necessity to avoid shared-memory bank conflicts.

Stability.ai (Stable Diffusion) Discord

- Stable Diffusion Edges Out Flux: Members find Stable Diffusion (SD) more refined than Flux, though they acknowledged that Flux is still under active development.

- One member suggested comparing example images to see which model matches personal taste.

- ControlNet Tames Image Poses: ControlNet uses depth maps or wireframes to generate images from poses, handling adjustments like 'hand in front' or 'hand behind', for creative control.

- Members pointed out control methods enable precise image generation from poses.

- DIY Custom Models: A user inquired about hiring an artist skilled in both Stable Diffusion and art to create a custom model and prompt style, raising questions about practicality.

- The community suggested that learning to create the model is more beneficial and cost-effective in the long run.

- From Scribbles to AI Images: One user shared their workflow of using sketches on an iPad to guide AI image generation, seeking advice on refining scribbles into finished images.

- The user found img2img useful, but wanted to find out ways to start from simple doodles.

- Nvidia GPUs Still King for Image Generation: Nvidia GPUs are the recommended choice for running Stable Diffusion smoothly, while AMD options may have performance issues.

- Users shared GPU setups and discussed model compatibility with GPU capabilities.

Eleuther Discord

- AI CUDA Engineer Generates Skepticism: The AI CUDA Engineer is an AI system claiming 10-100x speedup in CUDA kernel production, but doubts arose about the accuracy of its evaluation and prior misrepresentations in similar projects.

- Critiques highlighted that a purported 150x speedup kernel had memory reuse and fundamental bugs, leading to skepticism about the reliability of generated kernels.

- Community Debates LLM Compiler Viability: Members speculated on whether an LLM-compiler could translate high-level PyTorch code into optimized machine code, sparking an engaging conversation.

- While intriguing, a consensus emerged that substantial challenges, particularly the lack of a common instruction set, could impede progress.

- Clockwork RNN Architecture is Back: The discussion around the Clockwork RNN, a revised architecture using separate modules for input granularities, gained traction.

- Members debated the viability of such architectures in future models, including the application of dilated convolutions and attention mechanisms.

- NeoX vs NeMo in Llama 3.2 TPS: A comparison of the Llama 3.2 1B configuration across NeMo and NeoX revealed 21.3K TPS for NeoX versus 25-26K TPS for NeMo, with the configuration file available.

- The member shared the WandB run for detailed metrics and others to optimize their setups.

Notebook LM Discord

- Podcast TTS Faces Challenges: A user reported issues with the TTS function in NotebookLM failing to properly read and interpret input prompts for their podcast.

- The user expressed frustration when the desired tone for their podcast host could not be achieved, despite trying varied prompts.

- Non-Google User Access Debated: A member inquired whether users without Google accounts can be invited to access NotebookLM notebooks, similar to Google Docs.

- The discussion highlighted the need for alternative collaboration methods for those not integrated within the Google ecosystem.

- Tesla Patent Explored via Podcast: A user analyzed Tesla's Autonomous Driving AI following a patent grant, spotlighting technologies like Lidar, Radar, and Ultrasonics, and discussed it in a podcast.

- The user provided a free article on their Patreon, inviting listeners to explore their findings further.

- Homeschooling Enhanced with AI Duo: A user shared their successful experience integrating NotebookLM with Gemini in their homeschooling approach, which they likened to having skilled assistants.

- The synergy between the two tools significantly aided in executing teaching efforts, enhancing the learning experience.

- AI Struggles with Literary Nuance: Users expressed concerns about AI's misinterpretations of literary works, citing instances where character details and narrative nuances were misunderstood.

- In some cases, the AI resisted corrections even when presented with direct evidence, causing conflicts with the original text's integrity.

Torchtune Discord

- Torchtune roadmap drops for early 2025: The official Torchtune roadmap for H1 2025 has been released on PyTorch dev-discuss, outlining key directions and projects planned for Torchtune during this period.

- The full set of PyTorch roadmaps for various projects is also accessible on dev-discuss, showcasing exciting developments and ongoing work across the platform.

- Packing Causes VRAM to Explode: Using packing with a dataset at max_tokens length significantly increases VRAM demands, causing out-of-memory errors at 16K sequence lengths.

- One user reported memory usage at 30GB without packing, underscoring the substantial resource implications.

- Attention Mechanisms Debate Heats Up: Discussions revolved around the priority of integrating exotic transformer techniques, such as sparse attention and attention compression, to enhance efficiency in sequence scaling.

- Feedback suggested interest exists but integrating new research faces resistance due to established methodologies.

- AdamWScheduleFree Emerges as Optimizer: Discussions are underway regarding the potential of AdamWScheduleFree as the default optimizer for llama3.1 8B DPO, tested across 2 nodes with 16 GPUs.

- A workaround involving adjustments to the full-dpo Python script was proposed to address previous issues with fsdp.

- Hugging Face Drops UltraScale Playbook: A user shared a link to the UltraScale Playbook hosted on Hugging Face, describing it as refreshing.

- The playbook aims to guide users in scaling model usage within a practical framework.

Latent Space Discord

- Baseten Bags $75M, Eyes Inference in 2025: Baseten announced a $75 million Series C funding round, co-led by @IVP and @sparkcapital, pinpointing 2025 as the key year for AI inference technologies.

- The round included new investors such as Dick Costolo and Adam Bain from @01Advisors, underscoring Baseten's growth and potential in the AI infrastructure space; see the announcement tweet.

- Mastra's Agents Open for Business: The open-source project Mastra introduced a JavaScript SDK for constructing AI agents on Vercel’s AI SDK, emphasizing integration and ease of use; check out Mastra's agent documentation.

- Developers are exploring Mastra agents' capabilities for tasks like accessing third-party APIs and custom functions, enhancing workflow automation.

- Arize AI's $70M Bet on Observability: Arize AI has raised $70 million in Series C funding to advance AI evaluation and observability across generative and decision-making models, according to their Series C announcement.

- Their mission is to ensure AI agents operate reliably at scale, tackling the challenges emerging from new developments in AI technology.

- Lambda Launches to $480M, Aims for AI Cloud: Lambda revealed a $480 million Series D funding round led by Andra Capital and SGW, to solidify the company's standing in AI computing resources; see the announcement from stephenbalaban.

- The funding will help Lambda enhance its position as a cloud service tailored for AI, boosting its capabilities and offerings to meet rising industry demands.

- OpenAI's User Base Skyrockets: OpenAI reported over 400 million weekly active users on ChatGPT, marking a 33% increase in less than three months, according to Brad Lightcap.

- The anticipated GPT-5, promising free unlimited use for all, is expected to consolidate existing models, intensifying competition within the AI landscape.

MCP (Glama) Discord

- SSE Implementation Goes Live: A member confirmed a successful /sse implementation for their project, marking an enhancement to MCP functionality.

- Details can be found in the specified channel, highlighting ongoing improvements.

- Glama Debugging Suffers Cursor Confusion: A member reported issues debugging Glama hosted models, with the cursor failing to locate tools.

- The problem is primarily attributed to improper use of node paths and potential omissions of necessary quotes, accounting for 99% of the issue.

- Docker Installation Confusion Addressed: A new member needed help with Puppeteer installation via a Docker build command, leading to clarification on directory navigation.

- Guidance was given to ensure they were in the correct parent directory and to explain the use of

.in the command.

- Guidance was given to ensure they were in the correct parent directory and to explain the use of

- Python REPL Joins MCP: A member shared a simple Python REPL implementation supporting STDIO for MCP and provided the latest image along with GitHub repository link.

- Inquiries about IPython support were met with optimism for potential addition, opening avenues for further development.

- Docker Deployment Steps Clarified: A member shared a blog post on deploying Dockerized MCP servers, addressing environment setup challenges across architectures.

- The post emphasizes Docker's role in ensuring consistency across development environments and offers a list of reference MCP Servers for implementation.

Modular (Mojo 🔥) Discord

- MAX 25.1 Livestream Scheduled: A livestream is scheduled to discuss MAX 25.1, with opportunities to join on LinkedIn and submit questions through a Google Form.

- Speakers encouraged the community to share their questions, emphasizing eagerness to hear community's insights.

- Mojo on Windows Unlikely Soon: Native Mojo Windows support isn't on the immediate roadmap due to the expenses of running AI clusters on Windows.

- The consensus is that nix OSes are preferred for compute tasks, and many are using cloud Linux platforms instead, diminishing the urgency for Windows support.

- Slab Lists for Memory Efficiency: A member defined a slab list as an efficient data structure, akin to a

LinkedList[InlineArray[T, N]], that promotes simplicity and good memory management, and linked to nickziv/libslablist.- The user noted that this structure can achieve O(1) performance for certain operations and offers faster iteration compared to linked lists because of better cache use.

- Mojo Bridges Python Performance Gap: It was agreed that Mojo is Python-derived but gets performance closer to C/C++/Rust, aiming for future C++-like compatibility with C.

- The community feels Mojo’s type system allows for a Python-like experience, attracting users of languages such as Nim.

- Mojo Excels in Low-Level Ease: A member remarked that handling low-level tasks in Mojo is more user-friendly compared to C/C++, suggesting Mojo makes hardware utilization easier.

- The community suggested that for low-level coding, Mojo doesn’t need to strictly follow Python's syntax, because running Python scripts will be sufficient for many uses.

LlamaIndex Discord

- LlamaCloud Launches in EU: LlamaCloud EU launched early access, offering a new SaaS solution with secure knowledge management and full data residency within the EU.

- The launch aims to remove barriers for European companies needing compliant solutions, emphasizing security and data residency.

- LlamaParse Gets Parsing Boost: LlamaParse introduced new parsing modes—Fast, Balanced, and Premium—to effectively address diverse document parsing needs.

- These upgrades enhance versatility in handling different document types to tackle existing document parsing challenges.

- Agents Stuck in Handoff Limbo: A developer reported issues with an LLM repeatedly returning 'I am handing off to AgentXYZ' instead of executing tool calls in a multi-agent workflow.

- Suggestions included incorporating handoff rules directly into the system message to better clarify expected behavior, but concerns were raised about breaking the existing prompt.

- Redis Races Rampant?: A user seeks strategies to effectively run 1000 parallel batches persisting a summary index, while avoiding race conditions in Redis.

- With review embeddings stored in a Redis namespace, the user is concerned about potential key collisions and resource constraints.

- Scamcoin Shenanigans!: Discussion of the possibility of creating a coin on Solana has led the community to deem such claims as scams.

- Concerns were also raised about the implications of being involved with 'scamcoin' projects more broadly.

Cohere Discord

- Pink Status Gains Traction: A member updated their status to indicate, "now I am pink."

- This color change likely contributes to the visual dynamics of the Discord community.

- Identity Sharing Initiative Under Fire: A user proposed a collaboration opportunity involving identity sharing for profit ranging from $100-1500, highlighting an age range of 25-50.

- This led to concerns being raised about the implications of identity theft in such arrangements, with no website or relevant documentation provided, and sparked debates about being cautious around disclosing personally identifiable information in a public forum.

- Essay on Coffee Absence Requested: A member requested an essay about the effects of a world without coffee, highlighting its cultural and economic significance.

- This request suggests a curiosity about lifestyle changes in the hypothetical scenario where coffee is no longer available.

- Communication Clarity Considered Paramount: Concerns were raised about the ambiguity in written communication, with advice given to use clearer writing to prevent misunderstandings.

- Members emphasized the importance of improving communication to foster positive collaboration within the group.

AI21 Labs (Jamba) Discord

- Engineers Dive into Jamba API: Users are actively exploring the Jamba API, with one member sharing code for making API calls and seeking syntax help, while another offered a detailed API usage outline.

- The comprehensive outline included the headers and necessary parameters, providing practical guidance to other engineers in the channel.

- Jamba API Outputs Spark Debate: Concerns arose over the output format of the Jamba API, particularly regarding escape characters that complicate data processing in different languages.

- Confirmation was given that response formatting varies by language, necessitating tailored handling methods for outputs.

- PHP Engineers Tackle Jamba API Integration: A Symfony and PHP engineer sought advice on converting Jamba API responses into usable formats, specifically addressing special character handling.

- Other members pointed to potential peer assistance with PHP-specific challenges and effective output handling.

- AJAX Proposed for Jamba API Enhancement: One member suggested leveraging AJAX to improve Jamba API response handling, although results showed inconsistencies.

- It was noted that the Jamba chat window formats outputs differently, influencing how results appear and potentially affecting handling strategies.

tinygrad (George Hotz) Discord

- Old GeForce struggles against RTX 4070: Performance tests show an old GeForce 850M achieving 3 tok/s after 8 seconds, while an RTX 4070 reaches 12 tok/s in 1.9 seconds.

- However, overall model usability is limited by significant computational costs and numerical stiffness.

- Int8 Quantization Derails Models: Members noted that Int8 quantization may require adjustment as models occasionally go 'off rails' after several hundred tokens when using Int8Linear.

- The suggestion was made that conversations about tinychat developments should take place in direct messages or GitHub to be more focused.

- Torch Edges Out Tinygrad on Speed Tests: Speed tests indicate that torch outperforms tinygrad on 2048x2048 tensors, with 0.22 ms for torch compared to 0.42 ms for tinygrad.

- However, on 4096x4096 tensors, tinygrad is only 1.08x slower than torch, indicating optimized scaling.

- BEAM Could Boost Performance: Increasing BEAM values might alleviate performance constraints, with tests showing 0.21 ms for 2048x2048 tensors with BEAM=10 in torch.

- Performance appears consistent across different tensor sizes, highlighting potential gains from higher BEAM configurations.

- New PyTorch Channel Launched: A new channel dedicated to PyTorch discussions has been created.

- The intent is to encourage more focused and in-depth conversations as user contributions expand.

Nomic.ai (GPT4All) Discord

- System Message Terminology Causes Confusion: A member clarified that the term 'system message' is now used in the UI, indicating a shift in naming conventions.

- Another participant affirmed that old habits can be difficult to change when navigating these systems.

- Instructions in System Message: Plain English OK?: It's mentioned that plain English instructions can be used in the 'system message', and most models will respect these commands.

- Some members expressed skepticism about the ease of this process, questioning if using Jinja or JSON code is more effective.

- GPT4All Falls Flat on Image Handling: One member queried about the ability to paste images directly into the text bar like in other AI platforms, but it was clarified that GPT4All cannot handle images.

- External software is recommended for such tasks.

- Nomic and NOIMC v2: Is it real?: A member expressed confusion over the implementation of NOIMC v2, questioning why it appears to be incorrectly implemented.

- Another member humorously sought confirmation about being on Nomic, showcasing their frustration.

LLM Agents (Berkeley MOOC) Discord

- 2024 LLM Agents Course Still Useful: A member suggested that while not required, auditing the Fall 2024 Course from this YouTube playlist could deepen understanding, especially for DSPy.

- They noted that DSPy is absent from the current semester’s syllabus, making the Fall 2024 course particularly useful for those interested in it.

- Quizzes Archived for LLM Agents Course: A member shared a link to a quizzes archive for the Fall 2024 course, located here, responding to confusion over their disappearance from the current syllabus.

- The quizzes are now accessible to those who started the course late and want to catch up.

- Navigating Quiz Access on MOOC: In response to a user seeking quiz 1 and 2, it was pointed out that the quizzes can be found on the MOOC’s page or the announcement page.

- It was also mentioned that all certificates have been released and students were encouraged to sign up for the Spring 2025 iteration.

- Course Completion Notice: The LLM Agents MOOC has completed, but video lectures remain accessible in the syllabus.

- All certificates have been released, and students are encouraged to sign up for the Spring 2025 iteration.

DSPy Discord

- Qwen/Qwen2.5-VL-7B-Instruct Scores Varying for HaizeLabs Judge Compute: A member replicated the same dataset as HaizeLabs Judge Compute and found that scores with the model Qwen/Qwen2.5-VL-7B-Instruct ranged from 60%-70% for 2-stage optimized to 88.50% for mipro2.

- The project titled LLM-AggreFact_DSPy has been shared on GitHub with source code related to the evaluation, enabling deeper insights into the methodologies used.

- Leonard Tang Releases Verdict Library: Leonard Tang released Verdict, a library targeting judge-time compute scaling, pointing out AI reliability issues stem from evaluation rather than generation.

- He emphasized that the next advancement for AI should focus on evaluation improvements, contrasting with the emphasis on pre-training and inference-time scaling.

- DSPy Conversation History Examined: A member asked whether DSPy automatically injects conversation history into calls, indicating a caution before more implementation.

- This highlights concerns about potential complexities in managing AI interactions without unintentionally overwriting previous context, especially in more complex applications.

- Exporting Prompts to Message Templates Described: A member shared an FAQ explaining how to freeze and export prompts into message templates by using a Python snippet with

dspy.ChatAdapter().- It was clarified that this method results in a loss of control flow logic, suggesting

program.save()orprogram.dump_state()as alternatives for a more comprehensive export.

- It was clarified that this method results in a loss of control flow logic, suggesting

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

> The full channel by channel breakdowns have been truncated for email. > > If you want the full breakdown, please visit the web version of this email: []()! > > If you enjoyed AInews, please [share with a friend](https://buttondown.email/ainews)! Thanks in advance!