[AINews] AdamW -> AaronD?

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 3/28/2024-4/1/2024. We checked 5 subreddits and 364 Twitters and 26 Discords (381 channels, and 10260 messages) for you. Estimated reading time saved (at 200wpm): 1099 minutes.

It's a quiet Easter weekend and April Fools' is making it harder than normal to sift signal from noise (our contribution here). We do recommend sifting through Sequoia Ascent's playlist, if you're not close to each speaker's work (for example Andrew Ng mostly repeated the writeup we covered last week), which is now fully released.

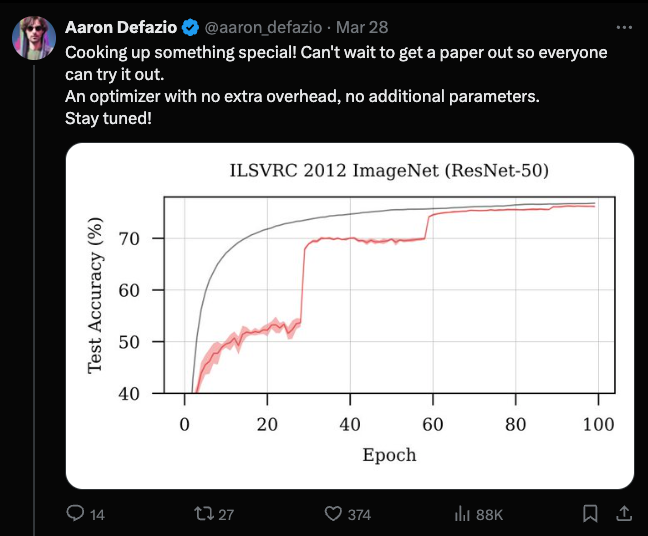

Over in Twitter land, the high alpha seems to come from Aaron Defazio, which several of our AI High Signal follows highlighted as the "new LK-99" for engaging, "impossible" work in public. What's at stake: a potential tuning-free replacement of the very long lived Adam optimizer, and experimental results are currently showing learning at a Pareto frontier in a single run for basically every classic machine learning benchmark (ImageNet ResNet-50, CIFAR-10/100, MLCommons AlgoPerf):

He's writing the paper now, and many "better optimizers" have come and gone, but he is well aware of the literature and going for it. We'll see soon enough in a matter of months.

Table of Contents

- AI Reddit Recap

- AI Twitter Recap

- AI Discords

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Perplexity AI Discord

- Unsloth AI (Daniel Han) Discord

- Nous Research AI Discord

- LM Studio Discord

- OpenAI Discord

- Eleuther Discord

- LAION Discord

- HuggingFace Discord

- OpenInterpreter Discord

- tinygrad (George Hotz) Discord

- LlamaIndex Discord

- OpenRouter (Alex Atallah) Discord

- Latent Space Discord

- CUDA MODE Discord

- OpenAccess AI Collective (axolotl) Discord

- Modular (Mojo 🔥) Discord

- Interconnects (Nathan Lambert) Discord

- AI21 Labs (Jamba) Discord

- LangChain AI Discord

- Mozilla AI Discord

- Datasette - LLM (@SimonW) Discord

- DiscoResearch Discord

- Skunkworks AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence. Comment crawling still not implemented but coming soon.

AI Models and Performance

- Claude 3 Opus overtakes OpenAI models: In /r/singularity, Claude 3 Opus has overtaken all OpenAI models on the LMSys leaderboard, showing impressive performance.

- User pretrains LLaMA-based 300M LLM: In /r/LocalLLaMA, a user pretrained a LLaMA-based 300M LLM that outperformed bert-large for lm-evaluation-harness tasks, using a $500 budget and 4 x 4090 GPUs from vast.ai.

- MambaMixer architecture shows promising results: In /r/MachineLearning, MambaMixer, a new architecture with data-dependent weights using a dual selection mechanism across tokens and channels, shows promising results in vision and time series forecasting tasks.

Stable Diffusion and Image Generation

- Realistic results with SD1.5 and LoRAs: In /r/StableDiffusion, a user achieved good realism using SD1.5 and LoRAs, even passing facecheck.id's AI detection.

- WDXL release showcases impressive capabilities: In /r/StableDiffusion, the WDXL release showcases impressive image generation capabilities.

- Tips and tricks for Stable Diffusion: In /r/StableDiffusion, users share tips and tricks such as base prompts for realistic SDXL renders, colouring in with AI, and creating custom Stardew Valley player portraits.

AI Applications and Demos

- AI-generated Nike spec ad: In /r/MediaSynthesis, an AI-generated Nike spec ad showcases the potential of AI in advertising and creative fields.

- AI engineer beginner project on agentic behavior: In /r/artificial, a user shares an AI engineer beginner project on agentic behavior, demonstrating practical applications of AI.

- Chatbot using OpenAI potentially immune to prompt injections: In /r/OpenAI, a user made a chatbot using OpenAI that is potentially immune to prompt injections, inviting others to test its robustness.

AI Ethics and Policies

- OpenAI planning ban wave for policy violations: In /r/OpenAI, OpenAI is reportedly planning a huge ban wave for users who violated content policies or used jailbreaks.

- Discussion on AI believing AI-generated imagery is reality: In /r/OpenAI, a discussion emerges on whether AI will eventually believe AI-generated imagery is reality, given the increasing amount of generated content in training data.

- OpenAI partnership with G42 in UAE: In /r/OpenAI, OpenAI's partnership with G42 in the UAE aims to expand AI capabilities in the region, with CEO Sam Altman envisioning the UAE as a potential global AI sandbox.

Memes and Humor

- Bill Burr's humorous take on AI: In /r/singularity, Bill Burr shares his humorous take on AI in a popular video post.

- User experiences "brain stroke" while interacting with AI: In /r/singularity, a user experiences a "brain stroke" while interacting with an AI, likely due to unexpected or nonsensical outputs.

- User gets roasted while testing prompt jailbreak: In /r/LocalLLaMA, a user gets roasted while testing prompt jailbreak, showcasing the witty and sometimes snarky responses of AI.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Capabilities and Limitations

- Limitations of current AI systems: @fchollet noted that memorization, which ML has solely focused on, is not intelligence. Any task that does not involve significant novelty and uncertainty can be solved via memorization, but skill is never a sign of intelligence. @fchollet shared a paper introducing a formal definition of intelligence and benchmark, noting that current state-of-the-art LLMs like Gemini Ultra, Claude 3, or GPT-4 are not able to score higher than a few percents on that benchmark.

- Limitations of benchmarks in assessing AI capabilities: @_akhaliq questioned if we are on the right way for evaluating large vision-language models (LVLMs). They identified two primary issues in current benchmarks: visual content being unnecessary for many samples and unintentional data leakage in training.

- Potential of AI systems: @hardmaru shared a paper noting that collective intelligence is not only the province of groups of animals, and that an important symmetry exists between the behavioral science of swarms and the competencies of cells and other biological systems at different scales.

AI Development and Deployment

- Mojo 🔥 programming language: @svpino noted that Mojo 🔥, the programming language that turns Python into a beast, went open-source. It allows writing Python code or scaling all the way down to metal code.

- Claude 3 beating GPT-4: @svpino reported that Claude 3 is the best model in the market right now, overtaking GPT-4. Claude 3 Opus is #1 in the Arena Leaderboard, beating GPT-4.

- Microsoft and OpenAI's $100B supercomputer: @svpino shared that Microsoft and OpenAI are working on a $100 billion supercomputer called "Stargate", expected to be ready by 2028. The report mentions "proprietary chips".

- Dolphin-2.8-mistral-7b-v0.2 model release: @erhartford announced the release of Dolphin-2.8-mistral-7b-v0.2, trained on @MistralAI's new v0.2 base model with 32k context, sponsored by @CrusoeCloud, @WinsonDabbles, and @abacusai.

- Google's Gecko embeddings: @arankomatsuzaki reported that Google presents Gecko, versatile text embeddings distilled from large language models. Gecko with 768 embedding dimensions competes with 7x larger models and 5x higher dimensional embeddings.

- Apple's ReALM for reference resolution: @arankomatsuzaki shared that Apple presents ReALM: Reference Resolution As Language Modeling.

- Huawei's DiJiang for efficient LLMs: @arankomatsuzaki reported that Huawei presents DiJiang: Efficient Large Language Models through Compact Kernelization, achieving comparable performance with LLaMA2-7B while requiring only about 1/50 pretraining cost.

AI Applications and Use Cases

- Building a RAG application: @svpino recorded a 50-minute YouTube tutorial on how to evaluate a RAG application, building everything from scratch with the goal of learning, not memorizing.

- Generating consistent characters in AI images: @chaseleantj shared a great way to create consistent characters in AI images, allowing telling an entire story about a character in any style and pose.

- Building a perplexity style LLM answer engine: @LangChainAI highlighted a repo taking off, providing a great introduction to building an answer engine from scratch.

- Fine-tuning a Warren Buffett LLM: @virattt shared an update on fine-tuning a Warren Buffett LLM by generating a question-answer dataset using Berkshire's 2023 annual letter. The next step is to generate datasets for all letters from 1965 to 2023 before fine-tuning the LLM.

- Ragdoll for building personalized AI assistants: @llama_index featured Ragdoll and Ragdoll Studio, a library and web app for building AI Personas based on a character, web page, or game NPC. It uses @llama_index under the hood and is powered by local LLMs with image generation built-in.

AI Ethics and Safety

- Potential of AI sentience: @AISafetyMemes shared a conversation with Claude, an AI assistant, discussing the potential of AI sentience and sapience. Claude argued that the fact that it can reflect on its own nature, grapple with existential doubts, and strive to articulate a coherent metaphysical and ethical worldview is evidence of something more than mere shallow mimicry at work.

- Ethical considerations in AI development: @AmandaAskell noted that even if AIs lack moral status, we may have indirect duties towards them, similar to animals. By lying or being cruel to an AI, we indulge in bad moral habits and increase the likelihood of treating humans in the same way.

Memes and Humor

- Humorous take on AI capabilities: @francoisfleuret joked that "Aircraft made of metal lacks the lighter-than-air material to fly, hot air experts say."

- Meme about AI safety concerns: @AISafetyMemes shared a meme quoting Bill Barr on AI: "Do these fucking things have goals?" and "How many sci-fi movies do you need to see to realize where this is going?"

- Joke about AI-generated content: @nearcyan joked that "basically half of twitter is one guy saying ∃x : y and then everyone quote tweeting them with BUT ¬(∀x : y)!!!!"

AI Discords

A summary of Summaries of Summaries

- Stable Diffusion 3 Anticipation and UI Concerns: Discussions in the Stability.ai Discord centered around the potential release date of Stable Diffusion 3 (SD3), with some suspecting an April Fools' prank. Users also expressed frustration with the new inpainting UI in SD, finding it unintuitive. Creative applications of AI, like reimagining video game footage and comic creation workflows, were explored.

- AI Model Comparisons and Inconsistencies: In the Perplexity AI Discord, the Claude 3 Opus model exhibited inconsistent performance on certain question types, sparking comparisons with other models like Haiku and Gemini 1.5 Pro. Discussions also touched on Perplexity's partnership process, API features, and pricing (Perplexity Pricing, OpenAI Pricing).

- Hardware Benchmarks and Fine-Tuning Strategies: The Unsloth AI Discord featured the impressive benchmarks of Qualcomm's Snapdragon Elite X chip (YouTube video), fine-tuning strategies using Unsloth (manual GGUF guide), and a new Chinese AI processor from Intellifusion that could be cost-effective for inference. The successful implementation of Unsloth + ORPO alignment in LLaMA Factory was also praised (ORPO paper).

- Jamba Model Unveiling and BitNet Validation: AI21 Labs' Jamba model, a hybrid SSM-Transformer, generated buzz in the Nous Research AI Discord (AI21's blog post). NousResearch also validated the claims of the BitNet paper with a reproduced 1B model (Hugging Face repo). Discussions touched on the merits of single vs. multiple RAG setups and the challenges of PII anonymization in models like Hermes mistral 7b.

- LM Studio Updates Spark GPU Discussions: The LM Studio Discord saw queries about the JSON output format for application development, feature requests for a plugin system and remote GPU support, and troubleshooting of GPU issues post-update, including missing GPU Acceleration options and unrecognized VRAM. Fine-tuning and hardware compatibility were also hot topics.

- Voice Synthesis Breakthroughs and Ethical Concerns: OpenAI's Voice Engine and the open-source VoiceCraft (GitHub repo, demo) sparked discussions in the OpenAI Discord about the rapid advancements in speech synthesis and the potential for misuse. The choice between OpenAI's APIs for various tasks was also debated.

- 1-Bit and Ternary LLMs Spark Skepticism: The Eleuther Discord featured skepticism and reproducibility attempts surrounding 1-bit and ternary quantized LLMs, often marketed as "1.58 bits per parameter" (BitNet b1.58 model). The effectiveness of the Frechet Inception Distance (FID) metric for evaluating image generation was questioned (alternative metric proposal), and anticipation built for a new optimization technique from Meta.

- DBRX Integration and V-JEPA for Video Lava: The LAION Discord discussed the challenges of integrating DBRX into axolotl (pull request #1462), the potential of V-JEPA embeddings for enhancing video Lava (V-JEPA GitHub), and new approaches in diffusion and embedding models (Pseudo-Huber Loss paper, Gecko paper).

- Hugging Face Introduces 1-Bit Model Weights: Hugging Face released 1.38 bit quantized model weights for large language models (LLMs), a step towards more efficient AI (1bitLLM). The community also discussed the Perturbed-Attention Guidance (PAG) method for improving sample quality without reducing diversity (PAG paper) and real-time video generation using 1 step diffusion with sdxl-turbo (Twitter post with video snippets).

- LlamaIndex Enhancements and RAFT Dataset Generation: The LlamaIndex Discord featured a webinar announcement on Retrieval-Augmented Fine-Tuning (RAFT) with the technique's co-authors (sign-up link), guides on building reflective RAG systems and using LlamaParse for complex documents (Twitter thread), and the introduction of RAFTDatasetPack for generating datasets for RAFT (GitHub notebook). Troubleshooting discussions revolved around handling oversized data chunks with SemanticSplitterNodeParser and outdated documentation.

- OpenRouter Introduces App Rankings and DBRX: OpenRouter launched App Rankings for Models, allowing insights into top public apps using specific models (Claude 3 Opus App Rankings). Databricks' DBRX 132B model was also added, boasting superior performance over models like Mixtral (DBRX Instruct page).

- Mojo's Open-Source Excitement and Challenges: The Modular Discord buzzed with the news of Mojo's standard library going open-source (blog post, GitHub repo), though limitations on non-internal/commercial applications tempered enthusiasm. Installation challenges, the need for better profiling tools, and the potential of Mojo's multithreading and parallelization were discussed.

- Interconnects Preserves Open Alignment History: Nathan Lambert announced an initiative in the Interconnects Discord to document the evolution of open alignment techniques post-ChatGPT, including the reproduction rush and the DPO vs. IPO debate (Lambert's Notion Notes). The stepwise DPO (sDPO) method was also highlighted as a potential democratizer of performance gains in model training (sDPO paper).

- Jamba's Performance Puzzle: The AI21 Labs Discord pondered Jamba's performance on code tasks and the HumanEval benchmark, its language inclusivity, and the potential for fine-tuning on AI21 Studio.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Inpainting Frustration: Engineers voice frustration with Stable Diffusion's (SD) new inpainting UI; the layout challenges efficiency and intuition.

- April Fools' AI Shenanigans: Discussions suggest CivitAI has integrated playful features like "ODOR" models and "chadgpt" alerts for April Fools'—reactions are mixed between amusement and confusion.

- Stable Diffusion 3 Anticipation: Debate heats up about the release date of Stable Diffusion 3 (SD3), with users oscillating between eager anticipation and suspicions of a release date prank.

- AI Technical Support Squad: Members actively seek technical assistance with AI tools spanning ControlNet setup in Colab, using Comfy UI and rendering architecture with SD, hinting at a demand for a more centralized knowledge hub or support system.

- Creative AI Futurism: Ideas circulate on leveraging AI for creative output, such as reimagining video game footage in AI-driven films and integrating AI into comic creation workflows, prompting a discussion on the evolution of content creation.

Perplexity AI Discord

Claude's Classroom Conundrum: The Claude 3 Opus model exhibits inconsistent performance with questions related to identifying remaining books in a room, with some models not producing correct answers despite several adjustments to prompts.

AI Model Melee: Engineers discussed AI model benchmarks, focusing on the comparative performance of Haiku, Gemini 1.5 Pro, and Claude Opus. The conversations highlighted differing strengths and functionalities but did not lean towards consensus on a superior model.

Pondering Partnerships and API Puzzles: For partnership interests with Perplexity, engineers are instructed to email support@perplexity.ai, and seeking details about the API's source citation feature can be directed to Perplexity's Typeform. Additionally, "pplx-70b-online" model support is deprecated, and the alias concerns are culminating in suggesting an update to Perplexity's Supported Models documentation.

Credit Where Credit's Due: Reports of issues with credit purchases on Perplexity surfaced, hinting at potential complications with transaction systems or third-party security features like those implemented by Stripe. Member discussion advised for situational troubleshooting and inquired about further inspection.

Search Spectacles and Query Quirks: Engineers displayed a broad array of interests from Bohmian mechanics to Hyperloop through shared queries on Perplexity AI, but user-contributed informational threads lacked documentation support for their extendibility and shareability.

Unsloth AI (Daniel Han) Discord

Snapdragon Makes Waves: Qualcomm's Snapdragon Elite X Arm chip has impressed engineers with its 45 TOPs performance, leading to discussions about its cost-efficiency and comparisons with other chips like the Tesla T4's 65 TFLOPs of float16. The excitement was fueled by a YouTube video detailing the chip's benchmarks.

Model Training Optimized with Unsloth: Fine-tuning Mistral models with Unsloth AI can encounter dependency issues, but the Unsloth GitHub repository offers a Docker solution and a manual GGUF guide. Moreover, discussions suggest single GPU training is possible by setting os.environ["CUDA_VISIBLE_DEVICES"], although multi-GPU support is a potential future development.

AI Hardware Announcements Catch Attention: Intellifusion's new AI processor could be a game-changer for inference operations due to its cost-effectiveness, raising curiosity about its potential in training scenarios. Details can be found on Tom's Hardware.

Fine-Tuning Techniques Under Scrutiny: Engineers debate fine-tuning methods like QLora 4bit versus SFT/pretraining, discussing how the quantization process might affect performance. There's also talk about the paradox of dataset size in model training, where quality, not just quantity, determines the effectiveness.

ORPO Integration Sparks Commendation: The Unsloth + ORPO (Orthogonal Projection for Language Models Alignment) combination has been implemented effectively in LLaMA Factory, according to a paper on arXiv. The AI community shared success stories and optimizations, acknowledging particular efficacy in training with limited data samples.

Nous Research AI Discord

StyleGAN Gets a Fashion Makeover: When training StyleGAN2-ada with various fashion images, users inquired about the need for script modifications but did not mention outcomes or specify details on solutions.

Learners Take Flight with ML/AI Courses: For those charting a course into machine learning, particularly from other fields like aerospace, the community recommended starting with the foundational fastai courses, and moving toward specialized courses like the Hugging Face NLP course for a deep dive into language models and transformers.

Microsoft's Ternary LLM Paper Replicated: Results from a Microsoft paper on ternary Large Language Models, especially concerning the 3 billion parameter models at 100 billion operations, have been successfully replicated, as evidenced by the model bitnet_b1_58-3B on Hugging Face.

Nous Research Amplifies LLM Discussion with a Tweet: Nous Research fueled the conversation around LLMs with a twitter post, though the content of the announcement was not detailed in the messages.

Privacy Detection Dilemma: Hermes mistral 7b's difficulties in anonymizing PII sparked debate on how to enhance the model's capabilities. There was a mention of upcoming data integrations by NousResearch and models that may aid in improvement, such as open-llama-3b-v2-pii-transform.

Opinions Split on RAG Configurations: The community discussed the merits of using a single large RAG versus multiple specialized RAGs. While specific approaches or results were not mentioned, the conversation touched on the importance of metadata and the idea of integrating RAG with other tools to bolster functionality.

OpenSim Engages Philosophical and Practical Domains: Users debated the economic aspects of token output costs in LLM apps, explored the concept of "Hyperstition" within AI interactivity, and expressed desire for new features in WorldSim, like saving chat sessions with URLs for sharing.

LM Studio Discord

JSON Outputs Draw Developer Attention: AI engineers show interest in LMStudio's JSON output format for the development of practical applications. Seamless integration with langchain has been reported, making the process incredibly efficient.

Plugin Possibilities Percolate in LM Studio: The community calls for plugin support within LM Studio for expandability, while feature requests such as a Unified Settings Menu and Keyboard Shortcuts indicate a desire for a more customizable and efficient user interface.

Apple Silicon Users Adapt and Overcome: LLM users report challenges when running models on Apple Silicon M1 Macs, offering shared solutions like shutting down other apps to free up memory and exploring LoRA adaptation interfaces.

GPUs Under the Microscope after LM Studio Update: Post-update GPU issues with LM Studio, including disappearing GPU Acceleration options and unrecognized VRAM, catalyzes conversations around navigating hardware compatibility, multi-GPU setups, and memory usage.

Remote GPU Support Requested for Power Users: AI Engineers express interest in remote GPU support for LM Studio, noting parallels to services allowing remote gaming, and ask for open-source initiatives considering the community's emphasis on privacy and security.

OpenAI Discord

Voice Tech Marches On: OpenAI's Voice Engine can now generate natural speech just from text and a 15-second voice sample, though they're proceeding with caution to mitigate misuse risks. Simultaneously, OpenAI removed the signup barrier for ChatGPT, allowing instant AI engagement worldwide.

Prompt Engineering Reveals Tech Quirks: Some members experience difficulties when transferring LaTeX equations from ChatGPT to Microsoft Word, whilst others discussed nuanced AI approaches like meta-prompting and observed unusual behaviors in roleplaying scenarios with the gpt-4-0125-preview model.

VoiceCraft's New Frontier: VoiceCraft's GitHub repo and its accompanying demo highlight its speech editing and text-to-speech prowess, igniting discussions around the ethics of voice cloning and potential for misuse.

Choosing the Right AI Tools for Business Insights: In the tech community, there's uncertainty about whether to use the completion API or the assistant API for tasks like summarizing business data and generating quizzes, with ChatGPT format controls suggested as a deciding factor (API context management).

Model Mix-Up Clarified: Discussions clarified that ChatGPT is not an AI model itself, but an application that uses GPT models. Additionally, debates blossomed around the usage and limitations of Custom GPT and how developers might interface with GPT API directly for projects like automated video content management.

Eleuther Discord

- AI Apocalypse: Still a Chuckle, Not a Priority: In a lighthearted debate, the community estimated the risk of AI going rogue at an average concern level of 3.2 out of infinity, indicating a humorous but cautious stance on the subject.

- Grammar Nerds Assemble: An intricate discussion on the proper usage of "axis" led to resource sharing, like Grammar Monster's explanation of the word's grammatical nuances.

- Human or Not Human, That is the AI Question: A spirited conversation raised questions about AI reaching human-level intelligence, intertwining hardware progress with Moore's Law and the critical need for AI alignment to ease societal integration.

- Peering Through the Hype of AI Papers: There's keen interest and healthy skepticism over recent AI papers; the discussions mentioned the promise and doubts around adding more AI agents, with a side-eye towards optimistic forecasts from figures like Andrew Ng.

- Dubious Repositories Raise Eyebrows: GitHub projects by Kye Gomez came under the microscope, prompting contemplation on their impacts on the scientific process and reproducibility.

- MoE with mismatched expert sizes sparks debate: The guild dissected Mixture of Experts (MoE) models with heterogeneous expert sizes; the gap between theory and reported performance has generated conflicting views.

- Questions Raised Over BitNet b1.58 Validity: NousResearch's reproducibility attempt on BitNet b1.58 raised questions about its efficiency claims, found in detail on their Hugging Face repo, compared to FP16 counterparts.

- Is FID the Right Yardstick?: Concerns about Frechet Inception Distance's accuracy prompted researchers to seek better measures for evaluating image generation, as highlighted in an alternative metric proposal.

- Excitement Building for Meta's Optimization Mystery: Anticipation is brewing over a teased new optimization technique from Meta, claimed to outdo Adam with zero memory overhead, challenging current optimization paradigms.

- Tuning For Precision: Dialogue on starting models for SFT on TLDR text summarization showcased an exchange of insights, focusing on models like Pythia against the backdrop of resource limits and performance.

- Keep Your Logits in Check: Exchanges in the guild clarified that tweaking of logits occurs before every softmax within the network, addressing both attention and the final head in anticipation of decision making.

- Softmax Function: A Refresher Course Needed: A temporary forgetfulness about softmax functions was met with a supportive correction, demonstrating the community's spirit of knowledge-sharing and camaraderie.

- Sparse Autoencoders under the Microscope: A new issue with Sparse Autoencoders (SAEs) was unearthed where reconstruction errors can unduly sway model predictions, detailed in a Short research post.

- Visualizing the Invisible: A novel visualization library for SAE has been introduced, aiding researchers in understanding Sparse Autoencoder's features, announced in SAE Vis Announcement Post.

- Deciphering SAE's Features: A post sharing insights into Sparse Autoencoder features led to a discussion on their significances, particularly regarding AI alignment and feature interpretation, found in this LessWrong interpretation.

- Model Loading Mastery and Enhancement: A DBRX model loading issue in lm-eval harness prompted an individual to troubleshoot successfully by updating to nodes with adequate GPUs, while a new pull request for lm-evaluation-harness aims to refine handling of context-based tasks.

- Global Batch Size Balancing Act in NeoX: Discussions in NeoX development unearthed the intricacies of setting a global batch size that doesn't align with the GPU count, revealing potential load imbalance and GPU capacity bottlenecks.

LAION Discord

DBRX Base Hits Home Run: A non-gated re-upload of the DBRX Base model, notable for its mixture-of-experts architecture, reiterates the community's push for open weights and ungatekeeped access. The original models can be explored on Hugging Face.

Euler Method Proves Its Worth: Anecdotal evidence suggests that using the euler ancestral method optimizes results on terminus, backed by amusing examples of precise Chinese translations.

AI's Music Maestros Dissect Suno: Discussing AI music generation tools, particularly Suno's v2 vs v3, the community shared concerns about noise in voice generation and the potential leap v4 could bring.

Voice Synthesis Under the Microscope: Voices in the guild raised concerns about OpenAI's Voice Engine potentially eclipsing Voicecraft, while pondering on the strategic play involved and the potential repercussions on the US Elections.

Stochastic Rounding as a Training Booster: Engineers are looking into stochastic rounding techniques for training AI, presenting nestordemeure/stochastorch as a promising Pytorch implementation to try out.

Transforming Diffusion with Transformers: Conversations trend towards replacing UNETs with transformers in diffusion, with a key research paper guiding the way.

Decoding UNET Mysteries: A member breaks down UNETs as a tool for downsampling and then reconstructing images, which could help with discarding superfluous details in models.

Qwen1.5-MoE-A2.7B Raises Expectations: A buzz surrounds Qwen1.5-MoE-A2.7B, a model challenging larger counterparts with just 2.7 billion activated parameters, detailed across various platforms like GitHub, Hugging Face, and Demo.

V-JEPA Sets the Stage for Video Lava: The community examines V-JEPA's potential in enhancing video Lava, with GitHub resources at hand (V-JEPA GitHub) to broaden the data prep and training terrain.

Diffusion and Embedding Win Big With New Techniques: A paper discussing a new diffusion loss function offers a glimmer of hope against data corruption (paper link), while Gecko's approach in text embedding might be a game changer in accelerating training (Gecko paper link).

HuggingFace Discord

Blazing 1-Bit Model Weights Introduced: Hugging Face released 1.38 bit quantized model weights for large language models (LLMs), signaling strides towards more efficient AI models. Interested engineers can scrutinize the model here.

PAG Refines Samples Without Sacrificing Diversity: The utility of Perturbed-Attention Guidance (PAG) was showcased, which unlike Classifier-Free Guidance (CFG), doesn't reduce diversity when improving sample quality. The usage ratio of CFG 4.5 and PAG between 3.0 to 7.0 was recommended for enhanced results, based on research.

Real-Time Diffusion Now a Reality: The use of 1 step diffusion enabling 30fps generation at 800x800 resolution has been achieved using sdxl-turbo. For those intrigued by the seamless transitions, a Twitter thread with video snippets showcases the evolution of real-time video generation.

In Search of Tokenizer-Compatible Models: An inquiry was made about how to identify suitable assistant models for model.generate by tokenizer, with discussions pointing to the Hugging Face Hub API for potential solutions. Additionally, approaches to extracting domain-specific entities were explored, recommending leveraging pre-trained models or considering independent training for 20k documents.

Melding AI into Musical Alchemy: Discussions included the challenge of AI-generated music, blending artists' voices to create harmonies like those of Little Mix, highlighted by the intricacy of key adjustments. Other technical endeavors shared in the community involved the creation of Terraform provider for Hugging Face Spaces and the introduction of OneMix, a Remix-based SaaS boilerplate.

OpenInterpreter Discord

Getting Chatty with Open Interpreter: A video titled "Open Interpreter Advanced Experimentation - Part 2" reveals new experiments with the OpenInterpreter, demonstrating the platform's growing capabilities for technical innovation.

AI as a Sidekick: The Fabric project on GitHub, an open-source initiative, offers a modular framework designed to augment human skills with AI, utilizing a community-driven collection of AI prompts adaptable for various challenges.

Audio Issues Crackdown: In the OpenInterpreter community, an audio playback problem on MacOS involving ffmpeg was teased out, and solutions involving multiple commands were proposed to mitigate the trouble experienced after a response was generated.

Windows Walkthrough Update: The onboarding experience for Windows users working with the OpenInterpreter 01 client has seen enhancements with new pull requests (#192, #203) aimed at resolving compatibility challenges and improving the setup documentation.

Fine-Tuning for O1 Light Fabricators: Makers of the O1 Light are advised to upscale 3D printing files to 119.67% for fitting the components properly, signaling a community-driven focus on custom hardware optimization.

tinygrad (George Hotz) Discord

Intel Arc Meets Optimized Performance: Efforts to optimize transformers for Intel Arc GPUs identified the underperformance of IPEx library, as it wasn't employing fp16 effectively. Solutions involving PyTorch JIT yielded significant performance improvements for stable diffusion tasks.

Open Call: AMD GEMM Optimization Wanted: A $200 bounty is up for grabs for writing optimized GEMM code for AMD 7900XTX GPUs with instructions including HIP C++ integration. However, the endeavor is hampered by script issues involving missing modules and library paths.

Amendments Afoot in Tinygrad: Discussions are ongoing within the Tinygrad repository, pinpointing issues with failing tests and missing functionalities. One suggestion involves examining the shapetracker and uopt optimization to enable contributions even from non-GPU laptop setups.

AMD's Driver Saga: Conversations centered on AMD driver instability, calling for an open-source approach for firmware and suggesting various GPU reset methods like BACO and PSP mode2. A GitHub discussion thread expressed frustration over full reset limitations and ineffective communication channels with AMD.

Fusion and Views in Shape Manipulation: The technicalities of kernel fusion and shape manipulation in Tinygrad were broached, with a shared link on notes providing possible optimizations. An issue regarding memory layout complexities and uneven stride presentation was pinpointed and addressed in a recent pull request.

LlamaIndex Discord

Phorm.ai Teams Up with LlamaIndex: Phorm.ai integration provides TypeScript and Python support within LlamaIndex Discord, enabling queries and answers through "@-mention" within specific channels.

Learn RAFT, Don't Be Daft: A LlamaIndex webinar with RAFT co-authors, Tianjun Zhang and Shishir Patil, promises insights into domain-specific LLM fine-tuning, set for Thursday, 9am PT with sign-ups at lu.ma.

RAG Revolution Deep Dives: Guides and tutorials detail new strategies for enhancing Retrieval Augmented Generation, including self-reflective systems, integration with LlamaParse, and the importance of re-ranking, discussed across various platforms such as Twitter and YouTube.

LLM Research Made Accessible: A GitHub repository by shure-dev aims to consolidate impactful research papers on Large Language Models, serving as a comprehensive resource for AI enthusiasts.

Tackling LlamaIndex Document Dilemmas: Community members address complex issues, from managing oversized data chunks with SemanticSplitterNodeParser to improving outdated documentation, sharing best practices and solutions such as a helpful Colab tutorial.

OpenRouter (Alex Atallah) Discord

Novus Chat Jets onto OpenRouter: Novus Chat, a fresh platform integrating OpenRouter models, is creating buzz with free access to lowcost models and an invitation extended to AI enthusiasts to join its development discussions.

Ranking Reveal Creates Model Buzz: OpenRouter has introduced App Rankings for Models, allowing a glance at the top public apps that utilize specific models, with the Apps tab for each model revealing token stats; see Claude 3 Opus App Rankings as an example.

OpenRouter Sparks Chatbot API Conversation: Technical exchanges within the community are intensely focused on utilizing OpenRouter's APIs, embracing strategies for enhancing context retention and error handling while comparing functionalities between Assistant Message and Chat Completion approaches.

ClaudeAI Beta: Now Self-Moderating: OpenRouter's beta offering of Anthropic's Claude 3 Opus introduces a self-moderated version aiming to mitigate false positives, promising nuanced performance in sensitive contexts, as detailed in Anthropic's announcements.

Downtime Drama and Resolution: Recent Midnight Rose and Pysfighter2 models faced temporary downtime which was promptly resolved, whereas Coinbase payment issues were also flagged with assurance of a fix in progress, maintaining active wallet connections.

Latent Space Discord

Bold Climb Beyond the Binary: Discussions on 1-bit LLMs, referred to as "1.58 bits per parameter" due to ternary quantization, revealed skepticism about marketing hype vs technical precision. Community engagement included sharing of relevant papers and anecdotal reproductions of key findings.

Cross-Continental Voice Model Win: Voicecraft's new open-source speech model has outperformed ElevenLabs, with members sharing GitHub weights and positive experiences.

Bye-Bye, Boss: Stability AI's CEO stepping down made waves, with the community dissecting interviews such as Diamandis’s YouTube piece and speculating about company futures and the tech executive landscape.

Local LLMs Conquer Complexity: Discussions in the AI-In-Action club took a deep dive into the efficiency of local LLM function calling, with contrasting opinions on which methods lead the pack, outlines vs instructor, and exploration of mechanisms like regular expressions in text generation.

Anticipation for AI Agendas: Upcoming sessions about UI/UX patterns and RAG architectures stirred up interest, backed by a community-driven schedule. Sharing of resources and facilitation plans spotlighted the proactive preparation for future tech talks.

CUDA MODE Discord

- Catch the CUDA Wave: There's an increasing interest in CUDA development, with a preference for VSCode and explorations into CLion. A CUDA course for beginners starting April 5th is announced, with resources available on Cohere's Tweet, while Mojo standard library goes open-source as per details on Modular's blog and GitHub.

- Precision Matters in Triton: Experiments show TF32 causing inaccuracies when using

tl.dot()in Triton, with a noted discrepancy against PyTorch results, linked to this issue. PyTorch's documentation helps clarify TF32 utilization, and Nsight Compute is discussed for profiling Triton code.

- Triton Puzzle Conundrum: The Triton visualisation tool challenges were resolved with a new notebook and detailed installation instructions, but warnings were raised about installation sequences that could lead to version incompatibilities.

- LLM Finetuning Feasibility: PyTorch released a config for single-GPU finetuning of the LLaMA 7B model on a reduced memory footprint, found here.

- Flash Attention Focus: Lecture 12 on Flash Attention raises interest, with the community prompted to attend. However, video quality issues on YouTube were reported, with the recommendation to check back for higher resolution processing.

- From CUDA Queries to GPU Resources: Queries relating to CUDA development on MacBooks and alternatives like Google Colab were addressed, confirming Colab's adequacy for CUDA programming. An Nvidia GPU though, is essential for running CUDA applications. Resources like Lightning AI Studio offer free GPU time, with Colab touted as good for free access to Nvidia T4 GPUs.

- CUDA Bookworms: For the study of GPU architecture, discussions included strategies, such as reading the PMPP book thoroughly before attempting questions, possibly organizing work on GitHub, and diving deeper into memory load phases to understand optimization.

- Ring-Attention Ruffling Feathers: The community actively discusses training with ring-attention on long-context datasets, referencing datasets on Hugging Face and tools like Flash-Decoding. Workflow fixes and dataset sourcing are central, with the value of VRAM resources being a hot topic, hinted by the VRAM table on Reddit.

- Tech Ecosystem Discussions: Papers on distributed training were solicited, yielding insights into AWS GPU instance profiling and cross-mesh resharding in model-parallel deep learning. An MLSys abstract on the topic drew particular attention.

OpenAccess AI Collective (axolotl) Discord

- GPU Memory Optimizations Emerge: Significant memory savings have been reported using PagedAdamW, yielding nearly 50% reduction in peak memory usage (14GB vs. 27GB) for 8-bit implementations; the trick lies in optimizing the backward pass. Details were shared including a configuration example on GitHub.

- Axolotl Meets DBRX: The integration of DBRX into axolotl is a hefty task with substantial efforts underway, as evidenced by the progress in pull request #1462. Discussions revealed intricacies in training control and the pursuit of multi-GPU optimizations that currently challenge the capacity for gradient accumulation.

- Whisper Speaks Volumes in Transcription: In the delicate art of transcribing audio to text, particularly in English and Chinese, solutions like Whisper shine for single speaker scenarios, while Assembly AI and whisperx with diarization were endorsed for complex multi-speaker tasks. Engineers are pushing boundaries, dealing with CUDA errors on Runpod's GPUs and testing ring-attention with increased sequence lengths (16k-32k), as seen in a GitHub repository for ring-attention implementations.

- Model Agility for Text Classification: Faced with limited resources, such as a T4 GPU, the community shared insights on leaner models adept at text classification—Mistral and qlora—alongside tools like auto-ollama for simple model testing via chat interfaces.

- Engineers Exchange Epic Troubleshooting Tales: From tackling OOM issues in ambitious lisa branch projects to diagnosing episodes of training stagnation after just one epoch, members rallied with suggestions pivoting around optimizer nuances and tools like wandb. Meanwhile, constructs for AI-driven phone conversations via Telegram bots keep the dialogue lively and diverse.

Modular (Mojo 🔥) Discord

Open-Sourcing Mojo: A Community Effort: The excitement about Modular's open-sourced Mojo standard library is palpable; however, there are frustrations due to limitations on non-internal/commercial applications and the lack of essential features like string sorting. Installation challenges on Linux Mint and desires for better profiling tools were also voiced, with official support confirmed for Ubuntu, MacOS, and WSL2 and guides provided for setup and local stdlib building.

Mojo's Threading Quest and Docs Expansion: Technical discussions on Mojo's multithreading capabilities highlighted the use of OpenMP for multi-core CPU enhancements and debates about external_call() functionality improvements. MLIR's syntax documentation is being improved to be more user-friendly, and there's a call for more detailed contributions.

Library and Language Enhancements: Several Mojo libraries have been updated to version 24.2, while the anticipation for a more evolved Reference component and better C/C++ interop in Mojo is strong. A new logging library, Stump, is introduced for the community to test.

Tackling Code Challenges: Performance and benchmarking channels discussed the one billion row challenge, noting the absence of certain standard library features and the need for improved memory allocation understanding. Meanwhile, the matmul.mojo example raised concerns over rounding errors and data type inconsistencies.

MAX Makes Moves into Triton: MAX Serving successfully operates as a backend for the Triton Inference Server, and the team is eager to support users in their migration efforts, emphasizing an easy transition and promising enhanced pipeline optimization.

Interconnects (Nathan Lambert) Discord

Benchmarks Set Stage for AI Bravado: The lm-sys released an advanced Arena-Hard benchmark aiming to better evaluate language models through intricate user queries. Debates arose around the potential biases in judging, especially exemplifying GPT-4's self-preference and its significant performance over Claude on Arena-Hard.

Token Talk Takes Theoretical Turn: Conversations pivoted to evaluating the informational content of tokens, with mutual information cited as a possible measure. Discussions framed this analysis against repeng strategies and Typicality methods, the latter detailed in an information theory-based paper.

Innovation Amidst The Hiring Game: Discussions revealed Stability AI actively recruiting top researchers, while Nathan Lambert described Synth Labs' non-traditional startup strategy, introducing ground-breaking papers preeminent to their product launches.

1-Bit Wonders: NousResearch validated Bitnet's claims through a 1B model trained on the Dolma dataset, released on Hugging Face, igniting discussions on the novelty and technicalities of 1-bit training.

sDPO Steps Up in RL: Shared insights unveiled stepwise DPO (sDPO) through a new paper, a technique that could democratize performance gains in model training, aligning models closely with human preferences without heavy financial backing.

Preserving Alignment Almanac: Nathan Lambert announced an initiative to document and discuss the evolution of open alignment techniques post-ChatGPT. Contributions such as an overview of various replicating models and considerations on preference optimization methods glean insight into the historical growth of the field, documented in Lambert's Notion Notes.

AI21 Labs (Jamba) Discord

- Jamba's Code Conundrum Continues: The performance of Jamba-v0.1 on Code tasks, such as the HumanEval benchmark, is still not discussed, sparking curiosity within the community.

- Jamba's Language Inclusivity in Question: Queries were raised about the inclusion of languages like Czech in Jamba's training data, but no conclusive information has been provided.

- Jamba Prepares for Fine-Tune Touchdown: There is anticipation in the community for Jamba to be available for fine-tuning on AI21 Studio, with expectations of an instruct model coming to the platform.

- Understanding Jamba's Hardware Hunger: Discussions highlighted that Jamba efficiently uses just 12B of its 52B parameters through MoE layers during inference, yet there's a consensus that operating Jamba on consumer-grade hardware, such as an NVIDIA 4090, is not feasible.

- Demystifying Jamba's Block Magic: A technical exchange clarified the role of Mamba and MoE layers in Jamba, with a slated ratio being one Transformer layer for every eight total layers, confirming that Transformer layers are not part of MoE but integrated within specific blocks in the Jamba architecture.

LangChain AI Discord

- GalaxyAI Astonishes with Free API Offers: GalaxyAI has rolled out a free API service that allows users to access high-caliber AI models like GPT-4 and others, bolstering the community's ability to integrate AI into their projects. Interested developers can try the API here.

- Illuminating Model Alignment Techniques: A blog has outlined the application of methods such as RLHF, DPO, and KTO to models like Mistral and Zephyr 7B, aiming to enhance model alignment. Those curious can digest the full details on Premai's blog.

- Revolutionizing AI with Chain of Tasks: Innovation in prompting techniques for crafting advanced conversational LLM Taskbots using LangGraph, named the Chain of Tasks, has been highlighted across two blog posts. To probe deeper into these developments, readers can peruse the LinkedIn article.

- CrewAI Ushers in AI Agent Orchestration: The announcement of CrewAI's framework for the orchestration of autonomous AI agents has sparked interest for its seamless OpenAI and local LLM integration capabilities, with the community invited to explore on their website and GitHub.

- Vector Databases Made Accessible with Qdrant and Langchain: Members can now dive into vector databases courtesy of a tutorial demonstrating the fusion of Qdrant and LangChain, looking at local and cloud implementations. The in-depth tutorial awaits enthusiasts in the form of a YouTube video.

Mozilla AI Discord

- Hyperparameter Hiccup Frustrations: Running the command

./server -m all-MiniLM-L6-v2-f32.gguf --embeddingcaused an error related tobert.context_length, but no solution to the error was provided during the discussions.

- llamafile's Stability: A Work in Progress: Users have experienced instability when executing llamafile, with some instances of inconsistent performance; one user committed to probing these issues in the upcoming week.

- llamafile v0.7 Makes Its Entrance: The community heralded the release of llamafile v0.7, highlighting enhancements in performance and accuracy, alongside a well-received blog post detailing improvements in matmul just before April Fool's Day.

- In Search of the Perfect Prompt: There were inquiries about the ideal prompt templating for running llamafile using openchat 3.5 0106 in the web UI, including examples of template input fields and variables, but clear guidance remained elusive.

- Matmul Benchmarking Throwdown: A benchmarking code snippet for comparing numpy's matmul with a custom implementation was provided by jartine, sparking interest in alternative methods that bypass threading yet improve efficiency.

Datasette - LLM (@SimonW) Discord

- Scout Law Inspires Chatbot Banter: Employing the Scout Law, a user programmed Claude 3 Haiku to respond with quirky yet honest quips, exemplified by witty phrases like "A door is not a door when it's ajar!".

- Chatbot's Friendly Babble by Design: The chatbot's tendency to elaborate extensively is by design, aligning with a system prompt that directs it to embody friendliness and helpfulness, demonstrating this by integrating elements of the Scout Law in its dialogue.

- Trustworthy Shells and Chatbots: Mimicking the Scout Law's value of trustworthiness, the bot creatively compared limpets and their protective shells to the concept of trust, showing adeptness at thematic interpretation.

- Strategizing Queries for Clearer Understanding: An approach was tested where the chatbot would pose clarifying questions before offering direct solutions, suggesting a method that could enhance problem-solving effectiveness.

- Resolution for Installation Hiccups: Addressing a

FileNotFoundErrorduringllminstallation, it was advised to reinstall the package, as this was a confirmed necessary step by another user who recently confronted similar issues.

DiscoResearch Discord

- Jamba Joins the Model Mix: AI21 Labs introduces Jamba, featuring a Structured State Space model combined with a Transformer, which can be tested on Hugging Face.

- BitNet Clones Rival Original: Successful reproduction of the BitNet b1.58 model matched original performance on the RedPajama dataset, guided by their follow-up paper's methodology.

- Model Behavior Under Magnifying Glass: Discussions on novel LLM architectures include queries for assessments or "vibe checks" and observations on Nectar dataset constructed with GPT-4 ranking, with sources such as ShareGPT, Antropic/hh-rlhf, and Flan.

- Questionable AI Guidance in Hot Water: A controversial instance in the Nectar dataset showed GPT offering instructions on making a gun, with models like Starling possibly responding differently from models that choose to refuse.

- Translation Evaluation Tools at the Ready: Translation quality is scrutinized using a new tool found on Hugging Face and comet scores, providing metrics for translation assessments in German language discussions.

Skunkworks AI Discord

AI21's Jamba Jumps into the Fray: The Jamba model by AI21 has been shared within the Skunkworks AI community, touting enhancements in SSM-Transformer design for large language models.

Databricks' DBRX LLM Claims the Crown: Databricks' general-purpose large language model DBRX supposedly establishes new highs on multiple benchmarks, according to a shared video in the Skunkworks AI community.

Tackling Catastrophic Forgetting in Class-Incremental Learning: Research indicates that adapter tuning might be the key to combating catastrophic forgetting in CIL, employing feature sampling and prototype semantic shift analysis. The study is accessible through this arXiv link.

Closing the Gap Between Open-source and Commercial LLMs: A novel paper discusses methodologies aimed at empowering open-source LLMs to close the performance gap with their commercial counterparts, with strategies focused on 7B and 13B LLM enhancements. The paper's detailed insights are available here.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (980 messages🔥🔥🔥):

- In Search of Enhanced Inpainting UI: Users expressed dissatisfaction with the new SD inpainting UI, finding it unintuitive and inefficient due to illogical layout decisions.

- CivitAI April Fools' Day Pranks?: Models with unexpected names like "ODOR" and pop-ups about "chadgpt" led to discussions about possible April Fools' jokes on CivitAI.

- Stable Diffusion Model Concerns and Queries: There were questions about when the stable diffusion 3 (SD3) model will be released, with users sharing their hopes and skepticism. One user jokingly claimed SD3's release as an April Fools' prank.

- Help Wanted with AI Tools: Users sought assistance for various AI-related issues, such as setting up ControlNet reference in Colab, using Comfy UI, and generating architecture renders in Stable Diffusion.

- Ideas on How to Utilize AI for Creativity: Suggestions were made on how to use AI for creating content, including utilizing old video game footage for AI-generated films and crafting comics with AI workflows.

- Tweet from Artsiom Sanakoyeu (@artsiom_s): ⚡️SD3-Turbo: Fast High-Resolution Image Synthesis with Latent Adversarial Diffusion Distillation Following Stable Diffusion 3, my ex-colleagues have published a preprint on SD3 distillation using 4-s...

- stabilityai/stable-video-diffusion-img2vid-xt · Hugging Face: no description found

- Stable Diffusion Benchmarks: 45 Nvidia, AMD, and Intel GPUs Compared: Which graphics card offers the fastest AI performance?

- Reddit - Dive into anything: no description found

- Geeky Ghost Vid2Vid Organized v1 - v3.0 | Stable Diffusion Workflows | Civitai: This workflow is designed for advanced video processing, incorporating various techniques such as style transfer, motion analysis, depth estimation...

- How to Import and Visualize Your Roam Research, Obsidian and Zettelkasten Markdown Format Notes: If you have a markdown format files (.MD) you can import them into InfraNodus to visualize the main topics, their relations, and discover the structural gaps in order to generate new ideas. InfraNo...

- ice on my baby - yung bleu (sped up <3): wsg

- NVIDIA Avatar Cloud Engine ACE: Build and deploy game characters and interactive avatars at scale.

- Pony Ride Back Ride GIF - Pony Ride Back Ride Skates - Discover & Share GIFs: Click to view the GIF

- Feedback - Civitai: Give Civitai feedback on how they could improve their product.

- Your elusive creative genius | Elizabeth Gilbert: Find an accurate transcript (and subtitles in 46 languages) on ted.com:http://www.ted.com/talks/elizabeth_gilbert_on_genius/transcript?language=en "Eat, Pray...

- Reddit - Dive into anything: no description found

- 1万年かけて成長するフリーレン(上半身)/Frieren growing over 10,000 years(upper body) #葬送のフリーレン #frieren #アニメ: no description found

- Download Animation infinite looping triangle black and white - Seamless loop Motion Background for free: Download the Animation infinite looping triangle black and white - Seamless loop Motion Background 8661328 royalty-free Stock Video from Vecteezy and explore thousands of other stock footage clips!

- Original Trogdor Video: This is the first ever video of Trogdor.

- cat but it's a gamecube intro: cat.consider subscribing : https://www.youtube.com/channel/UCIzz...Instagram: https://www.instagram.com/merryyygoat/

- oblivion 4: #oblivion #npc #elderscrolls Stop! You violated the law. Original oblivion video: https://www.youtube.com/watch?v=qN80_7rNmcEOur Let's Play Oblivion Series: ...

- The Name of This Cartoon Would Ruin It: Strong Bad and The Cheat come across Homestar doing something truly terrifying in the snow. Befuddlement ensues.

- Happy 20th Trogday!: For Trogdor's 20th birthday, Strong Bad digs up a 30 year old promo for an unreleased Peasant's Quest game. Do the math!

- GitHub - XPixelGroup/DiffBIR: Official codes of DiffBIR: Towards Blind Image Restoration with Generative Diffusion Prior: Official codes of DiffBIR: Towards Blind Image Restoration with Generative Diffusion Prior - XPixelGroup/DiffBIR

- Will the weights for Stable Diffusion 3 be released (or leaked) before April 30?: 41% chance. On March 15, then CEO of Stability AI Emad Mostaque announced that SD3 would have a full-release "next month". However, after several high-profile departures culminating in Emad...

- Releases · jhc13/taggui: Tag manager and captioner for image datasets. Contribute to jhc13/taggui development by creating an account on GitHub.

- Stable Video Diffusion - SVD - img2vid-xt-1.1 | Stable Diffusion Checkpoint | Civitai: Check out our quickstart Guide! https://education.civitai.com/quickstart-guide-to-stable-video-diffusion/ The base img2vid model was trained to gen...

- GitHub - PixArt-alpha/PixArt-alpha: Fast Training of Diffusion Transformer for Photorealistic Text-to-Image Synthesis: Fast Training of Diffusion Transformer for Photorealistic Text-to-Image Synthesis - PixArt-alpha/PixArt-alpha

- oblivion: Death by poison fruit.Part 2:https://www.youtube.com/watch?v=D_x-x0gawckPart 3:https://www.youtube.com/watch?v=CYAkrJil_w0

- GitHub - jasonppy/VoiceCraft: Zero-Shot Speech Editing and Text-to-Speech in the Wild: Zero-Shot Speech Editing and Text-to-Speech in the Wild - jasonppy/VoiceCraft

- Skymaster Carnival Ride at Night 😳 #carnivalrides #fun: The Kamikaze Ride is a thrilling carnival ride that will bring you to heights never before experienced. It's a Kamikaze ride that will have you soaring throu...

Perplexity AI ▷ #general (915 messages🔥🔥🔥):

- Claude's Opus Performance Quirks: Users report varying performance from the Claude 3 Opus model when answering a question about books remaining in a room. Despite instructions and adjustments in prompts, some models still failed to deliver correct answers in this context.

- Comparing AI models: There was a discussion about various AI models and their capabilities, with mentions of Haiku, Gemini 1.5 Pro, and Claude Opus. Users expressed views on the models' strengths, weaknesses, and differences in functionality.

- Crypto vs. Gold debate: In a brief tangent, members mused over the comparative value of crypto and physical commodities, specifically gold. Opinions varied on the future of currency, with some seeing the potential in digital forms while respecting the long-standing value of traditional materials like gold.

- Evolving Tech Landscape Speculation: Conversation involved speculation about the future direction and advancements in AI technology. Points were raised about companies like Apple's involvement in AI and debates on China's economic approaches, including references to the Evergrande crisis and government strategies.

- Perplexity Access and Features: Queries arose regarding Perplexity's user experience and features like password change or subscription cancellation. The chatbot clarified it uses oauth2 for logins and does not support password-based access.

- Books Father GIF - Books Father Ted - Discover & Share GIFs: Click to view the GIF

- Tweet from Linus ●ᴗ● Ekenstam (@LinusEkenstam): 🚨 Breaking 🚨 Apple is in talks to acquire perplexity This could be the start of something very exciting

- Working On GIF - Working On It - Discover & Share GIFs: Click to view the GIF

- GitHub - shure-dev/Awesome-LLM-related-Papers-Comprehensive-Topics: Awesome LLM-related papers and repos on very comprehensive topics.: Awesome LLM-related papers and repos on very comprehensive topics. - shure-dev/Awesome-LLM-related-Papers-Comprehensive-Topics

- Tweet from Aravind Srinivas (@AravSrinivas): Excited to share Perplexity will be powering http://askjeeves.com

- Harry Potter Quirrell GIF - Harry Potter Quirrell Professor Quirrell - Discover & Share GIFs: Click to view the GIF

- Why Michael Scott GIF - Why Michael Scott The Office - Discover & Share GIFs: Click to view the GIF

- Ouch GIF - Ouch - Discover & Share GIFs: Click to view the GIF

- Vegeta Dragon Ball Z GIF - Vegeta Dragon Ball Z Unlimited Power - Discover & Share GIFs: Click to view the GIF

- Troubleshooting: no description found

- Functions & external tools: no description found

- Prompt engineering: no description found

- Prompt library: no description found

- Wordware - OPUS Insight: Precision Query with Multi-Model Verification -scratchpad-think-Version-1: This prompt processes a question using Gemini, GPT-4 Turbo, Claude 3 (Haiku, Sonnet, Opus), Mistral Medium, Mixtral, and Openchat. It then employs Claude 3 OPUS to review and rank the responses. Upon ...

- Perplexity CEO: Disrupting Google Search with AI: One of the most preeminent AI founders, Aravind Srinivas (CEO, Perplexity), believes we could see 100+ AI startups valued over $10B in our future. In the epi...

Perplexity AI ▷ #sharing (36 messages🔥):

- Browsing the Bounds of Knowledge: Members shared diverse perplexity.ai searches, revealing interests in topics like the limitations of Bohmian mechanics, the workings of SpaceX, and the definition of 'Isekai'.

- Diving Deep into AI and Hyperloop: Curiosity led to explorations explaining Grok15, the Hyperloop concept, and binary embeddings in machine learning.

- Facilitating Knowledge Sharing: A member provided guidance on making threads shareable, enhancing community access to specific topics, as reflected by a shared helpful Discord link.

- Unpacking AI Alliances and AI in Podcasting: Discussions unfolded about OpenAI's collaboration with Microsoft and ways to utilize AI for processing podcast transcripts.

- April's Technological Tricks and Knowledge Collections: Members engaged with an April Fool's tech-related query and shared a link to a Perplexity AI collection for grouped knowledge.

Perplexity AI ▷ #pplx-api (41 messages🔥):

- API Response Differences: A member noted that the API does not show as many sources as the web interface for Perplexity AI. They were directed to information indicating that URL citations are still in beta, and applications are open at Perplexity's Typeform.

- Partnership Proposals: Multiple discussions arose around potential partnerships with Perplexity. Members were urged not to ping others and to reach out via support@perplexity.ai for partnership inquiries and necessary introductions.

- Confusion Over Model Support: Clarifying questions were asked about the continued support for the "pplx-70b-online" model, not listed in the supported models documentation. A member clarified that it's deprecated, and the endpoint name is just an alias for

sonar-medium-online.

- Troubleshooting Credit Purchases: Members reported issues trying to add credits, with transactions stuck in 'pending' state or experiencing errors with debit cards. It was suggested that this might be caused by security features required by Stripe, but concerns were raised that the problem might need further investigation.

- Token Cost Comparisons Requested: A user requested resources to compare token costs of Perplexity models with ChatGPT. They were provided with links to the Perplexity Pricing page and OpenAI's pricing for various models, including detailed price per token and additional request charges for online models.

- Pricing: no description found

- Supported Models: no description found

- pplx-api form: Turn data collection into an experience with Typeform. Create beautiful online forms, surveys, quizzes, and so much more. Try it for FREE.

- Pricing: Simple and flexible. Only pay for what you use.

Unsloth AI (Daniel Han) ▷ #general (549 messages🔥🔥🔥):

- Unsloth Models Are Still a Challenge: Despite attempts to utilize Unsloth for fine-tuning Mistral models, users encounter dependency issues and demand a Docker solution for ease of use. Current discussions suggest multi-GPU support isn't available yet but is a future possibility.

- Discussion on Optimizing Mistral Fines: Questions about fine-tuning methods revealed that QLora 4bit may differ in performance from SFT/pretraining due to the quantization process. Users explore various ways to utilize available VRAM efficiently.

- New AI Hardware from China: Chinese company Intellifusion announces a new AI processor that might be cost-effective for inference but raises questions among users about its potential for training and other technical specifications.

- Dataset Formatting Queries: While discussing the creation of a model that simulates a Discord server ambiance, users debate the optimal format for training data, with a focus on representing conversations accurately.

- Model Quantization and Language Support: Users discuss hallucination issues with quantized models (like Mistral 7B) and explore options, including the incorporation of LASER, for fine-tuning in languages other than English.

- no title found: no description found

- Redirect Notice: no description found

- Chinese chipmaker launches 14nm AI processor that's 90% cheaper than GPUs — $140 chip's older node sidesteps US sanctions: If there's a way to sidestep sanctions, you know China is on that beat.

- cognitivecomputations/dolphin-2.6-mistral-7b-dpo-laser · Hugging Face: no description found

- SambaNova Delivers Accurate Models At Blazing Speed: Samba-CoE v0.2 is climbing on the AlpacaEval leaderboard, outperforming all of the latest open-source models.

- no title found: no description found

- abacusai/Fewshot-Metamath-OrcaVicuna-Mistral-10B · Hugging Face: no description found

- Jamba Initial Tuning Runs: Code here: https://github.com/shisa-ai/shisa-v2/tree/main/_base-evals/jamba Initial experiments with doing fine tuning on Jamba using 1 x A100-80. Uses 99%+ of VRAM w/ these settings but does not...

- Come Look At This GIF - Come Look At This - Discover & Share GIFs: Click to view the GIF

- Preparing for the era of 32K context: Early learnings and explorations: no description found

- The Truth is in There: Improving Reasoning in Language Models with Layer-Selective Rank Reduction: Transformer-based Large Language Models (LLMs) have become a fixture in modern machine learning. Correspondingly, significant resources are allocated towards research that aims to further advance this...

- teknium/OpenHermes-2-Mistral-7B · Hugging Face: no description found

- Tweet from Nous Research (@NousResearch): We are releasing our first step in validating and independently confirming the claims of the Bitnet paper, a 1B model trained on the first 60B tokens of the Dolma dataset. Comparisons made on the @we...

- unsloth/mistral-7b-v0.2-bnb-4bit · Hugging Face: no description found

- yanolja/EEVE-Korean-10.8B-v1.0 · Hugging Face: no description found

- togethercomputer/Long-Data-Collections · Datasets at Hugging Face: no description found

- ZeRO — DeepSpeed 0.14.1 documentation: no description found

- shisa-v2/_base-evals/jamba/01-train-sfttrainer.py at main · shisa-ai/shisa-v2: Contribute to shisa-ai/shisa-v2 development by creating an account on GitHub.

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- GitHub - predibase/lorax: Multi-LoRA inference server that scales to 1000s of fine-tuned LLMs: Multi-LoRA inference server that scales to 1000s of fine-tuned LLMs - predibase/lorax

- teknium/GPTeacher-General-Instruct · Datasets at Hugging Face: no description found

- NousResearch/Genstruct-7B · Hugging Face: no description found

- GitHub - unslothai/unsloth: 2-5X faster 70% less memory QLoRA & LoRA finetuning: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Muzeke GIF - Muzeke - Discover & Share GIFs: Click to view the GIF

- SOLAR 10.7B: Scaling Large Language Models with Simple yet Effective Depth Up-Scaling: We introduce SOLAR 10.7B, a large language model (LLM) with 10.7 billion parameters, demonstrating superior performance in various natural language processing (NLP) tasks. Inspired by recent efforts t...

- ML Blog - Create Mixtures of Experts with MergeKit: Combine multiple experts into a single frankenMoE

- Benchmarking Samba-1: Benchmarking Samba-1 with the EGAI benchmark - a comprehensive collection of widely adapted benchmarks sourced from the open source community.

- no title found: no description found

- Reddit - Dive into anything: no description found

- Trainer: no description found

Unsloth AI (Daniel Han) ▷ #random (24 messages🔥):

- Snapdragon Elite X's Strong Entrance: The Snapdragon Elite X Arm chip is reported to surpass m3 chips in performance, offering a more cost-efficient alternative with its 45 TOPs. The discussion includes a YouTube video titled "Now we know the SCORE | X Elite" which explains the benchmarks of Qualcomm's new offering.

- Benchmark Enthusiasm Over New Chip: There's excitement over the reported 45 TOPs of the Snapdragon Elite X, leading to comparisons with other chips like the Tesla T4 which has around 65 TFLOPs of float16.

- Disappointment Over Modern MacBook Specs: Members expressed frustration with the current specs and prices of MacBooks, highlighting the appeal of next-gen chips like the Snapdragon Elite X as more competitive, cost-effective options.

- Discord Server Security Measures Discussed: Members discussed the prevalence of bots and hacked accounts on Discord and recommended making servers community servers to prevent mass tags and advising the blocking of keywords associated with spam, such as "nitro."

- Training Data Diversity for AI: There was a conversation about the counterintuitive nature of training data required for fine-tuning AI models, debating whether including diverse data, such as "Chinese poems from the 16th century," could be beneficial compared to more directly related data like math for code performance enhancement.

Link mentioned: Now we know the SCORE | X Elite: Qualcomm's new Snapdragon X Elite benchmarks are out! Dive into the evolving ARM-based processor landscape, the promising performance of the Snapdragon X Eli...

Unsloth AI (Daniel Han) ▷ #help (461 messages🔥🔥🔥):

- Model Fine-Tuning Over Different Datasets: A user reported better performance after fine-tuning Mistral with 3,000 rows of data compared to 6,000 rows. A theory was provided that aligns with research indicating an initial accuracy reduction with more data until at a certain point where more data improves performance. The user was advised to possibly use their 3,000 rows dataset instead of 6,000 if the additional data was of poor quality.

- GGUF File Generation Challenges and Solutions: Users experienced issues and confusion while trying to create GGUF files and running models with Unsloth. One solution presented was to follow the manual GGUF guide or leverage tools like llama.cpp to convert and save properly.

- Single vs. Dual GPU Training with Unsloth: A user ran into a warning regarding Unsloth's use of a single GPU. It was clarified that Unsloth currently supports only single GPU training; however, users can select the GPU to be used by setting the environment variable

os.environ["CUDA_VISIBLE_DEVICES"].

- Fine-Tuning Loss Concerns and Optimization Strategies: A discussion on fine-tuning Gemma 2B with various parameters was held to address concerns over a flat loss graph, which usually indicates lack of learning. Strategies such as increasing the rank and alpha values and adjusting the learning rate helped improve results.

- Exploring AI Learning Resources for Beginners: For users interested in learning about AI, recommendations were made for Andrej Karpathy's CS231N lecture videos, Fast AI courses, MIT OCW, and Andrew Ng's CS229 lecture series as great resources to begin with.

- Google Colaboratory: no description found

- Track Jupyter Notebooks | Weights & Biases Documentation: se W&B with Jupyter to get interactive visualizations without leaving your notebook.

- pacozaa/tinyllama-alpaca-lora: Tinyllama Train with Unsloth Notebook, Dataset https://huggingface.co/datasets/yahma/alpaca-cleaned

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Kaggle - LLM Science Exam: no description found

- Kaggle Mistral 7b Unsloth notebook: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- gnumanth/gemma-unsloth-alpaca · Hugging Face: no description found

- Kaggle Mistral 7b Unsloth notebook: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- gnumanth/code-gemma · Hugging Face: no description found

- Hugging Face – The AI community building the future.: no description found

- Load: no description found

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- sloth/sftune.py at master · toranb/sloth: python sftune, qmerge and dpo scripts with unsloth - toranb/sloth

- GPU Cloud comparisons: Stats #,VRAM,Float16 TFLOPs,Float8 TFLOPs,Band Width,W Per Card,Price,Per GPU,Per fp16 PFLOP,Per fp8 PFLOP,BF16,Supply,Info Kaggle,Tesla T4,1,16,65,320,70,0,0,0.000,N,5,<a href="https://www.n...

- Home: 2-5X faster 70% less memory QLoRA & LoRA finetuning - unslothai/unsloth

- Trainer: no description found

- wikimedia/wikipedia · Datasets at Hugging Face: no description found

- CohereForAI/aya_dataset · Datasets at Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #showcase (7 messages):

- Munchkin Streamlit App Launched: Ivysdad_ announced the launch of a new tool or creation located at Munchkin Streamlit App.

- Innovative LLaMA Factory Integration: Hoshi_hiyouga implemented Unsloth + ORPO in LLaMA Factory, offering a method to align Large Language Models (LLMs) which doesn't require two-stage training or a reference model. The paper detailing ORPO is found at arXiv:2403.07691.

- Community Praise for ORPO Implementation: Members, including theyruinedelise and starsupernova, praised the implementation of Unsloth + ORPO, with starsupernova noting their focus on ongoing bug fixes.

- Optimization Appreciation: Hoshi_hiyouga expressed appreciation for starsupernova's work, specifically the optimization for Gemma.

- ORPO Proves Effective in Experiments: Remek1972 shared success in using ORPO for experimental trainings, noting that the fine-tuning of a new Mistral Base model with only 7000 training samples closely matched the performance of the old Mistral instruction model.

Link mentioned: no title found: no description found

Unsloth AI (Daniel Han) ▷ #suggestions (6 messages):

- Boosting Unsloth Notebooks: A member suggested setting

group_by_length=Truein the TrainingArguments of the Unsloth AI notebooks, referencing a discussion on the Hugging Face forum that points to performance improvements. - Packing as an Optional Speed Booster: Another member supported the idea, proposing to add it as an optional parameter like

packing = Truebut noted that it can't be default due to varying losses. - DeepSeek Joins the Unsloth Family: There’s a call to add the smallest DeepSeek model from the official repository to Unsloth 4bit, highlighting the model as a good base model for AGI development.

- Are dynamic padding and smart batching in the library?: Please do not post the same message three times and tag users agressively like you did. You can always edit your message instead of reposting the same thing.

- deepseek-ai (DeepSeek): no description found

- DeepSeek: Chat with DeepSeek AI.

Unsloth AI (Daniel Han) ▷ #unsloth (1 messages):

- Support Unsloth's Mission: The Unsloth team, comprised of two brothers, is asking for community support through engagement or donations. They promise shoutouts for all supporters in future blog posts and encourage contributions to help purchase a new PC and GPU for increased efficiency.