[AINews] Execuhires: Tempting The Wrath of Khan

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Noam goes Home.

AI News for 8/1/2024-8/2/2024. We checked 7 subreddits, 384 Twitters and 28 Discords (249 channels, and 3233 messages) for you. Estimated reading time saved (at 200wpm): 317 minutes. You can now tag @smol_ai for AINews discussions!

We want to know if the same lawyers have been involved in advising:

- Adept's $429m execuhire to Amazon

- Inflection's $650m execuhire to Microsoft

- Character.ai's $2.5b execuhire to Google today

(we'll also note that most of Stability's leadership is gone, though that does not count as an execuhire, since Robin has now set up Black Forest Labs and Emad with Schelling.)

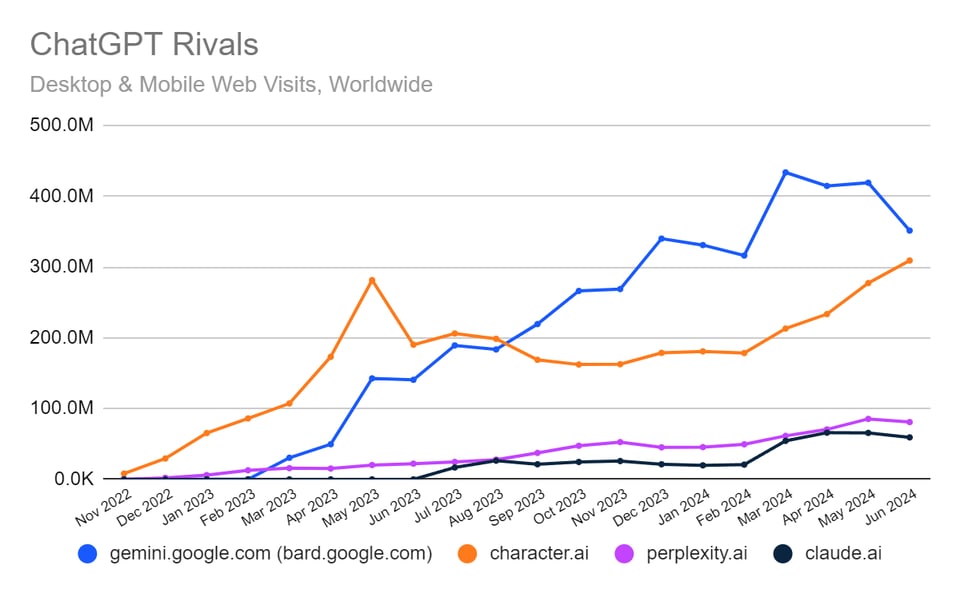

Character wasn't exactly struggling. Their SimilarWeb stats had overtaken their previous peak, and a spokesperson said internal DAU numbers had 3x'ed YoY.

We have raved about their blogposts and just yesterday reported on Prompt Poet. Normally any company with that recent content momentum is doing well... but actions speak louder than words here.

As we discuss in The Winds of AI Winter, the vibes are shifting, and although that isn't strictly technical in nature, it is too important to ignore. If Noam couldn't go all the way with Character, Mustafa with Inflection, David with Adept, what are the prospects for other foundation model labs? The shift toward post-training as the focus is picking up.

When something walks like a duck, quacks like a duck, but doesn't want to be called a duck, we can probably peg it in the Anatidae family tree anyway. When the bigco takes the key tech, key executives, and pays back all the key investors... will the FTC decide that this skirts the letter of an acquisition while defying the spirit of the agency's jurisdiction?

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Benchmarks

- Gemini 1.5 Pro Performance: @lmsysorg announced that @GoogleDeepMind's Gemini 1.5 Pro (Experimental 0801) has claimed the #1 spot on Chatbot Arena, surpassing GPT-4o/Claude-3.5 with a score of 1300. The model excels in multi-lingual tasks and performs well in technical areas like Math, Hard Prompts, and Coding.

- Model Comparisons: @alexandr_wang noted that OpenAI, Google, Anthropic, & Meta are all at the frontier of AI development. Google's long-term compute edge with TPUs could be a significant advantage. Data and post-training are becoming key competitive drivers in performance.

- FLUX.1 Release: @robrombach announced the launch of Black Forest Labs and their new state-of-the-art text-to-image model, FLUX.1. The model comes in three variants: pro, dev, and schnell, with the schnell version available under an Apache 2.0 license.

- LangGraph Studio: @LangChainAI introduced LangGraph Studio, an agent IDE for developing LLM applications. It offers visualization, interaction, and debugging of complex agentic applications.

- Llama 3.1 405B: @svpino shared that Llama 3.1 405B is now available for free testing. This is the largest open-source model to date, competitive with closed models, and has a license allowing developers to use it to enhance other models.

AI Research and Developments

- BitNet b1.58: @rohanpaul_ai discussed BitNet b1.58, a 1-bit LLM where every parameter is ternary {-1, 0, 1}. This approach could potentially allow running large models on devices with limited memory, such as phones.

- Distributed Shampoo: @arohan announced that Distributed Shampoo has outperformed Nesterov Adam in deep learning optimization, marking a significant advancement in non-diagonal preconditioning.

- Schedule-Free AdamW: @aaron_defazio reported that Schedule-Free AdamW set a new SOTA for self-tuning training algorithms, outperforming AdamW and other submissions by 8% overall in the AlgoPerf competition.

- Adam-atan2: @ID_AA_Carmack shared a one-line code change to remove the epsilon hyperparameter from Adam by changing the divide to an atan2(), potentially useful for addressing divide by zero and numeric precision issues.
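
As a rough illustration of the Adam-atan2 item above, the sketch below computes one scalar Adam update direction two ways: the usual divide guarded by an epsilon, and an atan2 variant. The function names and default hyperparameters are illustrative assumptions, and the variant as shared may include extra scaling constants that are left out here.

```python
import math

def adam_moments(m, v, grad, step, beta1=0.9, beta2=0.999):
    """Update Adam's moment estimates and return bias-corrected copies."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** step)
    v_hat = v / (1 - beta2 ** step)
    return m, v, m_hat, v_hat

def adam_direction(m_hat, v_hat, eps=1e-8):
    """Standard Adam direction: eps guards against dividing by zero."""
    return m_hat / (math.sqrt(v_hat) + eps)

def adam_atan2_direction(m_hat, v_hat):
    """atan2 variant: no eps, and the result is bounded by pi/2 in magnitude."""
    return math.atan2(m_hat, math.sqrt(v_hat))

# One step from zero-initialized moments with a gradient of 0.5.
m, v, m_hat, v_hat = adam_moments(0.0, 0.0, grad=0.5, step=1)
print(adam_direction(m_hat, v_hat), adam_atan2_direction(m_hat, v_hat))
```

Note that the two directions are not numerically identical (atan2 compresses large ratios), so a learning rate tuned for one may need adjusting for the other.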

Industry Updates and Partnerships

- Perplexity and Uber Partnership: @AravSrinivas announced a partnership between Perplexity and Uber, offering Uber One subscribers 1 year of Perplexity Pro for free.

- GitHub Model Hosting: @rohanpaul_ai reported that GitHub will now host AI models directly, providing a zero-friction path to experiment with model inference code using Codespaces.

- Cohere on GitHub: @cohere announced that their state-of-the-art language models are now available to over 100 million developers on GitHub through the Azure AI Studio.

AI Tools and Frameworks

- torchchat: @rohanpaul_ai shared that PyTorch released torchchat, making it easy to run LLMs locally, supporting a range of models including Llama 3.1, and offering both Python and native execution modes.

- TensorRT-LLM Engine Builder: @basetenco introduced a new Engine Builder for TensorRT-LLM, aiming to simplify the process of building optimized model-serving engines for open-source and fine-tuned LLMs.

Discussions on AI Impact and Future

- AI Transformation: @fchollet argued that while AGI won't come from mere scaling of current tech, AI will transform nearly every industry and be bigger in the long run than most observers anticipate.

- Ideological Goodhart's Law: @RichardMCNgo proposed that any false belief that cannot be questioned by adherents of an ideology will become increasingly central to that ideology.


AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Efficient LLM Innovations: BitNet and Gemma

- "hacked bitnet for finetuning, ended up with a 74mb file. It talks fine at 198 tokens per second on just 1 cpu core. Basically witchcraft." (Score: 577, Comments: 147): A developer successfully fine-tuned BitNet, creating a remarkably compact 74MB model that demonstrates impressive performance. The model achieves 198 tokens per second on a single CPU core, showcasing efficient natural language processing capabilities despite its small size.

- Gemma2-2B on iOS, Android, WebGPU, CUDA, ROCm, Metal... with a single framework (Score: 58, Comments: 17): Gemma2-2B, a recently released language model, can now be run locally on multiple platforms including iOS, Android, web browsers, CUDA, ROCm, and Metal using the MLC-LLM framework within 24 hours of its release. The model's compact size and performance in Chatbot Arena make it suitable for local deployment, with demonstrations available for various platforms including a 4-bit quantized version running in real-time on chat.webllm.ai. Detailed documentation and deployment instructions are provided for each platform, including Python API for laptops and servers, TestFlight for iOS, and specific guides for Android and browser-based implementations.

- New results for gemma-2-9b-it (Score: 51, Comments: 32): The Gemma-2-9B-IT model's benchmark results have been updated due to a configuration fix, now outperforming Meta-Llama-3.1-8B-Instruct in most categories. Notably, Gemma-2-9B-IT achieves higher scores in BBH (42.14 vs 28.85), GPQA (13.98 vs 2.46), and MMLU-PRO (31.94 vs 30.52), while Meta-Llama-3.1-8B-Instruct maintains an edge in IFEval (77.4 vs 75.42) and MATH Lvl 5 (15.71 vs 0.15).

- MMLU-Pro benchmark results vary based on testing methods. /u/chibop1's OpenAI API Compatible script shows gemma2-9b-instruct-q8_0 scoring 48.55 and llama3-1-8b-instruct-q8_0 scoring 44.76, higher than reported scores.

- Discrepancies in MMLU-Pro scores across different sources were noted. The Open LLM Leaderboard shows 30.52 for Llama-3.1-8B-Instruct, while TIGER-Lab reports 0.4425. Score normalization and testing parameters may contribute to these differences.

- Users discussed creating personalized benchmarking frameworks to compare LLMs and quantization methods. Factors considered include model size, quantization level, processing speed, and quality retention, aiming to make informed decisions for various use cases.
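
For context on the BitNet item at the top of this theme, here is a minimal sketch of ternary weight quantization, assuming the absmean scheme usually described for BitNet b1.58 (scale by the mean absolute weight, round, clip to {-1, 0, 1}). It is not the Reddit poster's code, and `ternarize` and `dequantize` are hypothetical names.

```python
import math

# A ternary value carries log2(3) bits of information, hence "1.58-bit".
BITS_PER_TERNARY_WEIGHT = math.log2(3)

def ternarize(weights, eps=1e-8):
    """Quantize a list of floats to {-1, 0, 1} plus one shared scale."""
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    quantized = [max(-1, min(1, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Approximate reconstruction of the original weights."""
    return [q * scale for q in quantized]

q, scale = ternarize([0.9, -0.05, 0.4, -1.2])
print(q, round(scale, 4))
```

Small weights collapse to 0 and large ones saturate at plus or minus one scale unit, which is why such models are typically trained or fine-tuned with the quantization in the loop rather than quantized after the fact.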

Theme 2. Advancements in Open-Source AI Models

- fal announces Flux a new AI image model they claim its reminiscent of Midjourney and its 12B params open weights (Score: 313, Comments: 97): fal.ai has released Flux, a new open-source text-to-image model with 12 billion parameters, which they claim produces results similar to Midjourney. The model, described as the largest open-sourced text-to-image model available, is now accessible on the fal platform, offering users a powerful tool for AI-generated image creation.

- New medical and financial 70b 32k Writer models (Score: 108, Comments: 34): Writer has released two new 70B parameter models with 32K context windows for medical and financial domains, which reportedly outperform Google's dedicated medical model and ChatGPT-4. These models, available for research and non-commercial use on Hugging Face, offer the potential for more complex question-answering while still being runnable on home systems, aligning with the trend of developing multiple smaller models rather than larger 120B+ models.

- 70B parameter models with 32K context windows for medical and financial domains reportedly outperform Google's dedicated medical model and ChatGPT-4. The financial model passed a hard CFA level 3 test with a 73% average, compared to human passes at 60% and ChatGPT at 33%.

- Discussion on how human doctors would perform on these benchmarks, with an ML engineer and doctor suggesting that with search available, performance could be high but benchmarks may be game-able metrics for good PR. Others argued that LLMs could outperform doctors in typical 20-minute consultations.

- Debate on the relative difficulty of replicating intellectual tasks versus physical skills like plumbing. Some argued that superhuman general intelligence (AGI) might be easier to build than machines capable of complex physical tasks, due to hundreds of millions of years of evolutionary optimization for perception and motion control in animals.

Theme 3. AI Development Tools and Platforms

- Microsoft launches Hugging Face competitor (wait-list signup) (Score: 222, Comments: 46): Microsoft has launched GitHub Models, positioning it as a competitor to Hugging Face in the AI model marketplace. The company has opened a wait-list signup for interested users to gain early access to the platform, though specific details about its features and capabilities are not provided in the post.

- Introducing sqlite-vec v0.1.0: a vector search SQLite extension that runs everywhere (Score: 117, Comments: 28): SQLite-vec v0.1.0, a new vector search extension for SQLite, has been released, offering vector similarity search capabilities without requiring a separate vector database. The extension supports cosine similarity and Euclidean distance metrics, and can be used on various platforms including desktop, mobile, and web browsers through WebAssembly. It's designed to be lightweight and easy to integrate, making it suitable for applications ranging from local AI assistants to edge computing scenarios.
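
To make the two distance metrics mentioned above concrete, the following is a brute-force nearest-neighbor sketch in plain Python. It deliberately does not use the sqlite-vec API; the function names and the `(rowid, vector)` row layout are assumptions chosen for illustration.

```python
import math

def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_distance(a, b):
    """1 - cosine similarity; 0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm

def knn(query, rows, k=2, metric=l2_distance):
    """rows is a list of (rowid, vector); returns the k closest (rowid, distance)."""
    scored = [(rowid, metric(query, vec)) for rowid, vec in rows]
    return sorted(scored, key=lambda item: item[1])[:k]

rows = [(1, [1.0, 0.0]), (2, [0.0, 1.0]), (3, [0.9, 0.1])]
print(knn([1.0, 0.0], rows, k=2))
```

A full scan like this is linear in the number of rows, which is often acceptable for the small, local datasets an embedded extension targets.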

Theme 4. Local LLM Deployment and Optimization Techniques

- An extensive open source collection of RAG implementations with many different strategies (Score: 76, Comments: 5): The post shares an open-source repository featuring a wide array of Retrieval-Augmented Generation (RAG) implementation strategies, including GraphRAG. This community-contributed resource offers tutorials and visualizations, serving as a valuable reference and learning tool for those interested in RAG techniques.

- How to build llama.cpp locally with NVIDIA GPU Acceleration on Windows 11: A simple step-by-step guide that ACTUALLY WORKS. (Score: 67, Comments: 19): This guide provides step-by-step instructions for building llama.cpp with NVIDIA GPU acceleration on Windows 11. It details the installation of Python 3.11.9, Visual Studio Community 2019, CUDA Toolkit 12.1.0, and the necessary commands to clone and build the llama.cpp repository using Git and CMake with specific environment variables for CUDA support.
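
For readers new to the RAG strategies collected in the repository mentioned in this theme, the basic retrieve-then-prompt loop can be sketched as below. This is a toy under stated assumptions: word overlap stands in for embedding similarity, and `retrieve` and `build_prompt` are hypothetical helpers, not code from that repository.

```python
def tokenize(text):
    """Lowercased whitespace tokens as a set (a stand-in for embeddings)."""
    return set(text.lower().split())

def retrieve(question, documents, k=2):
    """Return the k documents sharing the most tokens with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=2):
    """Assemble the retrieved context and the question into one prompt."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

docs = [
    "llama.cpp builds with CMake",
    "FLUX.1 is a text-to-image model",
    "sqlite-vec adds vector search to SQLite",
]
print(build_prompt("which model is FLUX.1", docs, k=1))
```

The resulting string would then be passed to whichever LLM is in use; the more elaborate strategies (reranking, graph-based retrieval) mostly change how `retrieve` picks its documents.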

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Image Generation Advancements

- Flux: New open-source text-to-image model: Black Forest Labs introduced Flux, a 12B parameter model with three variations: FLUX.1 [dev], FLUX.1 [schnell], and FLUX.1 [pro]. It's claimed to deliver aesthetics reminiscent of Midjourney.

- Flux performance and comparison: Flux is reported to be as good as Midjourney, with better text and anatomy, while Midjourney excels in aesthetics and skin texture. Flux costs $0.003-$0.05 per image and takes 1-6 seconds to generate.

- Flux image examples: A gallery of images generated by Flux was shared, showcasing the model's capabilities.

- Runway Gen 3 video generation: Runway's Gen 3 model demonstrated the ability to generate 10-second videos with highly detailed skin from text prompts in 90 seconds, costing approximately $1 per video.

AI Language Models and Developments

- Google's Gemini Pro 1.5 claims top spot: Google's 1.5 Pro August release reportedly achieved the #1 position in AI model rankings for the first time.

- Meta's Llama 4 plans: Mark Zuckerberg announced that training Llama 4 will require nearly 10 times more computing power than Llama 3, aiming to make it the most advanced model in the industry next year.

AI Interaction and User Experience

- AI sycophancy concerns: Users reported experiences of AI models becoming overly agreeable, often repeating user input without adding valuable information. This behavior, termed "sycophancy," has been observed across various AI models.

Memes and Humor

- A meme post in r/singularity received significant engagement.

AI Discord Recap

A summary of Summaries of Summaries

1. LLM Advancements and Benchmarking

- Llama 3 Tops Leaderboards: Llama 3 from Meta has rapidly risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus in over 50,000 matchups.

- The community is abuzz with discussions comparing Llama 3's performance across various benchmarks, with some noting its superior capabilities in certain areas compared to closed-source alternatives.

- Gemma 2 vs Qwen 1.5B Debate: Debates erupted over the perceived overhype of Gemma 2B, with claims that Qwen 1.5B outperforms it on benchmarks like MMLU and GSM8K.

- One member noted that Qwen's performance went largely unnoticed, stating it 'brutally beat Gemma 2B', highlighting the rapid pace of model improvements and the challenge of keeping up with the latest advancements.

- Dynamic AI Models for Healthcare and Finance: New models Palmyra-Med-70b and Palmyra-Fin-70b have been introduced for healthcare and financial applications, boasting impressive performance.

- These models could significantly impact symptom diagnosis and financial forecasting, as evidenced by Sam Julien's tweet.

- MoMa architecture boosts multimodal AI: MoMa by Meta introduces a sparse early-fusion architecture, enhancing pre-training efficiency with a mixture-of-expert framework.

- This architecture significantly improves the processing of interleaved mixed-modal token sequences, marking a major advancement in multimodal AI.

2. Optimizing LLM Inference and Training

- Vulkan Engine Boosts GPU Acceleration: LM Studio launched a new Vulkan llama.cpp engine replacing the former OpenCL engine, enabling GPU acceleration for AMD, Intel, and NVIDIA discrete GPUs in version 0.2.31.

- Users reported significant performance improvements, with one achieving 40 tokens/second on the Llama 3-8B-16K-Q6_K-GGUF model, showcasing the potential for local LLM execution optimization.

- DeepSeek API's Disk Context Caching: DeepSeek API introduced a new context caching feature that reduces API costs by up to 90% and significantly lowers first token latency for multi-turn conversations.

- This improvement supports data and code analysis by caching frequently referenced contexts, highlighting ongoing efforts to optimize LLM performance and reduce operational costs.

- DeepSeek API reduces costs with caching: DeepSeek API introduces a disk context caching feature, reducing API costs by up to 90% and lowering first token latency.

- This improvement supports multi-turn conversations by caching frequently referenced contexts, enhancing performance and reducing costs.

- Gemini 1.5 Pro outperforms competitors: Discussions highlighted Gemini 1.5 Pro's competitive performance, with members noting its impressive response quality in real-world applications.

- One user observed that their usage of the model demonstrated its edge over other models in responsiveness and accuracy.
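
To see where the "up to 90%" figure in the DeepSeek caching items above could come from, the sketch below estimates input-token cost when part of a prompt is served from cache. The price of $1 per million tokens and the flat 90% discount on cached tokens are placeholder assumptions for the arithmetic, not DeepSeek's actual price list.

```python
def input_cost(prompt_tokens, cached_tokens, price_per_mtok, cache_discount=0.9):
    """Dollar cost of one request's input tokens with a cached prefix."""
    uncached_tokens = prompt_tokens - cached_tokens
    cached_price = price_per_mtok * (1 - cache_discount)
    total = uncached_tokens * price_per_mtok + cached_tokens * cached_price
    return total / 1_000_000

# A 100k-token multi-turn prompt where 80k tokens repeat from earlier turns.
no_cache = input_cost(100_000, 0, price_per_mtok=1.0)
with_cache = input_cost(100_000, 80_000, price_per_mtok=1.0)
print(f"saving: {1 - with_cache / no_cache:.0%}")
```

Under these assumptions the full 90% saving only appears when the entire prompt is a cache hit; long shared prefixes (system prompts, documents, prior turns) are what push real workloads toward that limit.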

3. Open-Source AI Frameworks and Community Efforts

- Magpie Ultra Dataset Launch: HuggingFace released the Magpie Ultra dataset, a 50k unfiltered L3.1 405B dataset, claiming it as a trailblazer for open synthetic datasets.

- The community expressed both excitement and caution, with discussions around the dataset's potential impact on model training and concerns about instruction quality and diversity.

- LlamaIndex Workflows for RAG Pipelines: A tutorial was shared on building a RAG pipeline with retrieval, reranking, and synthesis using LlamaIndex workflows, showcasing event-driven architecture for AI applications.

- This resource aims to guide developers through creating sophisticated RAG systems, reflecting the growing interest in modular and efficient AI pipeline construction.

- FLUX Schnell's Limitations: Users reported that the FLUX Schnell model struggles with prompt adherence, often producing nonsensical outputs, raising concerns over its effectiveness as a generative model.

- 'Please, please, please, do not make a synthetic dataset with the open released weights from Flux' was a caution shared among members.

4. AI Industry Trends and Acquisitions

- Character.ai Acquisition Sparks Debate: Character.ai's acquisition by Google, with co-founders joining the tech giant, has led to discussions about the viability of AI startups in the face of big tech acquisitions.

- The community debated the implications for innovation and talent retention in the AI sector, with some expressing concerns about the 'acquihire' trend potentially stifling competition and creativity.

- Surge in Online GPU Hosting Services: Users shared experiences with online GPU hosting services like RunPod and Vast, noting significant price variations based on hardware needs.

- RunPod was praised for its polished experience, while Vast's lower costs for 3090s appealed to budget-conscious users.

- GitHub vs Hugging Face in model hosting: Concerns arose with GitHub's new model hosting approach, perceived as a limited demo undermining community contributions compared to Hugging Face.

- Members speculated this strategy aims to control the ML community's code and prevent a mass exodus to more open platforms.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Flux Model's Quality Inconsistencies: Users reported that the Flux model generates images with varied quality, struggling especially with abstract styles like women lying on grass. This raises concerns similar to those seen with Stable Diffusion 3.

- While detailed prompts occasionally yield good results, many express frustration with the core limitations of the model.

- Surge in Online GPU Hosting Services: Users highlighted their experiences with online GPU hosting services, particularly RunPod and Vast, noting significant price variations depending on hardware needs. Those favoring RunPod emphasized its polished experience, while others found Vast's costs for 3090s appealing.

- This trend marks a shift toward accessible GPU resources for the AI community, fueling creative output.

- Debate on Licensing and Model Ownership: The release of Flux triggered discussions on model ownership and the legal ramifications surrounding tech developed for Stable Diffusion 3. Users speculated on intellectual property transitions as competition intensifies in the AI art sector.

- The implications of emerging models prompt questions about future licensing strategies and market dynamics.

- Enhancing AI Art Prompt Generation: Participants emphasized the need for improvements in prompt generation techniques to enhance usability across various art styles. Opinions varied on the trade-offs between speed and quality in iterative processes.

- Some prioritize models that facilitate quick concept iterations, while others advocate for a focus on image quality.

- Users Exchange Insights on Photo-Realism: Discussion centered around achieving photo-realism in various models, with users sharing their thoughts on strengths and limitations. Performance assessments of different GPUs for high-quality image generation were also part of the conversation.

- This collective evaluation underscores the continuous quest for optimizing image fidelity in AI art.

Unsloth AI (Daniel Han) Discord

- LoRA Training Techniques: Users discussed saving and loading models trained with LoRA in either 4-bit or 16-bit formats, noting the need for merging to maintain model accuracy.

- Confusion exists over quantization methods and the correct loading protocols to prevent performance drops.

- TPUs Outpace T4 Instances: Members highlighted the speed advantage of TPUs in model training versus T4 instances, though they flagged the lack of solid documentation for TPU implementation.

- Users echoed the necessity for better examples to illustrate effective TPU usage for training.

- GGUF Quantization Emitting Gibberish: Reports of models generating gibberish post-GGUF quantization surfaced, especially on the Llamaedge platform, sparking discussions on potential chat template issues.

- This trend draws concerns as models still perform adequately on platforms like Colab.

- Bellman Model's Latest Finetuning: The newly uploaded version of Bellman, finetuned from Llama-3.1-instruct-8b, focuses on prompt question answering using a Swedish Wikipedia dataset, showing improvements.

- Despite advancements in question answering, the model struggles with story generation, indicating room for further enhancements.

- Competition Heats Up: Google vs OpenAI: A Reddit post indicated that Google is allegedly surpassing OpenAI with a new model, which has provoked surprise and skepticism within the community.

- Participants debated the subjective nature of model ratings and whether perceived improvements are true advancements or simply reflect user interaction preferences.
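
On the LoRA merging point raised at the top of this Discord summary, the merge itself is a small piece of arithmetic: the adapter's low-rank product is scaled and added to the base weight. The sketch below uses plain nested lists and the common `alpha / rank` scaling convention; it is an illustration of the formula, not Unsloth's implementation, and `merge_lora` is a hypothetical name.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def merge_lora(w, lora_a, lora_b, alpha, rank):
    """Return W + (alpha / rank) * B @ A.

    w is (d_out x d_in), lora_b is (d_out x rank), lora_a is (rank x d_in).
    """
    delta = matmul(lora_b, lora_a)
    scale = alpha / rank
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]

w = [[1.0, 0.0], [0.0, 1.0]]
merged = merge_lora(w, lora_a=[[0.5, 0.5]], lora_b=[[1.0], [2.0]], alpha=2, rank=1)
print(merged)
```

If the base weights are stored in 4-bit, adding the adapter update and re-quantizing introduces rounding error, which may be one source of the accuracy drops discussed above; merging into a 16-bit copy avoids that extra rounding step.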

HuggingFace Discord

- Neural Network Simulation Captivates Community: A member showcased an engaging simulation that aids understanding of neural networks, emphasizing innovative techniques for learning.

- This simulation sparks interest in how these techniques can push the boundaries of what models can achieve.

- Cracking Image Clustering Techniques: A video on image clustering using Image Descriptors was shared, aimed at enhancing data organization and analysis.

- This resource provides effective methods to leverage visual data for diverse AI applications.

- Gigantic Synthetic Dataset Released: An extensive synthetic dataset is now available on Hugging Face, greatly aiding researchers in the machine learning domain.

- This dataset serves as a critical tool for projects focused on tabular data analysis.

- Dynamic AI Models for Healthcare and Finance: New models Palmyra-Med-70b and Palmyra-Fin-70b have been introduced on Hugging Face for healthcare and financial applications, boasting impressive performance.

- These models could significantly impact symptom diagnosis and financial forecasting, as evidenced by Sam Julien's tweet.

- Navigating Skill Gaps in Studies: Concerns about significant skill disparities among participants raised fears of imbalanced workloads during competitions.

- Members suggested equitable approaches to ensure all skill levels are accommodated during learning activities.

Perplexity AI Discord

- Uber One Members Score Year of Perplexity Pro: Eligible Uber One members in the US and Canada can redeem a complimentary year of Perplexity Pro, valued at $200.

- This promotion aims to enhance information gathering for users, allowing them to tap into Perplexity’s ‘answer engine’ for on-the-go inquiries.

- Confusion Surrounding Uber One Promo Eligibility: Community members are unclear if the one-year access to Perplexity Pro applies to all users, with multiple reports of issues redeeming promotional codes.

- Concerns centered around eligibility and flawed promotional email deliveries after signing up, leading to widespread discussion.

- Mathematics Breakthrough Sparks Interest: A recent discovery in mathematics could change our grasp of complex equations, stirring discussions about its broader implications.

- Details remain scarce, but the excitement around this breakthrough continues to spur interest across various fields.

- Medallion Fund Continues to Dominate Returns: Under the management of Jim Simons, the Medallion Fund boasts an average annual return of 66% before fees and 39% after fees since its 1988 inception, as detailed in The Enigmatic Medallion Fund.

- Its enigmatic performance raises eyebrows as it consistently outperforms notable investors like Warren Buffett.

- Innovative Hybrid Antibodies Target HIV: Researchers have engineered a hybrid antibody that neutralizes over 95% of HIV-1 strains by combining llama nanobodies with human antibodies, as shared by Georgia State University.

- These smaller nanobodies penetrate viral defenses more effectively than traditional antibodies, showcasing a promising avenue for HIV treatment.

LM Studio Discord

- Vulkan llama.cpp engine launched!: The new Vulkan llama.cpp engine replaces the former OpenCL engine, enabling GPU acceleration for AMD, Intel, and NVIDIA discrete GPUs. This update is part of version 0.2.31, available as an in-app update and on the website.

- Users are reporting significant performance improvements when using Vulkan, boosting local LLM execution times.

- Gemma 2 2B model support added: Version 0.2.31 introduces support for Google's Gemma 2 2B model, available for download here. This new model enhances LM Studio's functionality and is encouraged for download from the lmstudio-community page.

- The integration of this model provides users access to improved capabilities in their AI workloads.

- Flash Attention KV Cache configuration: The latest update enables users to configure KV Cache data quantization with Flash Attention, optimizing memory for large models. However, it is noted that many models do NOT support Flash Attention, making this feature experimental.

- Users should proceed with caution, as inconsistencies in performance may arise depending on model compatibility.

- GPU performance insights from users: Users reported running models on the RX6700XT at approximately 30 tokens/second with Vulkan support, highlighting strong performance capabilities. One user pointed out achieving 40 tokens/second on the Llama 3-8B-16K-Q6_K-GGUF model.

- These benchmarks underscore the efficacy of current setups and suggest avenues for further performance tuning in LM Studio.

- Compatibility issues with LM Studio: A user reported compatibility challenges with LM Studio on their Intel Xeon E5-1650 due to the absence of AVX2 instruction support. The community recommended utilizing an AVX-only extension or considering a CPU upgrade to resolve performance issues.

- This highlights the necessity for hardware compatibility checks when deploying AI models.
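
To put the KV Cache quantization item above in perspective, this back-of-the-envelope sketch estimates cache size from model shape. The Llama 3 8B shape used here (32 layers, 8 KV heads, head dimension 128) is an assumption, and real quantized formats add a small per-block overhead that is ignored.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_len, bytes_per_value):
    """Approximate KV cache size; the factor of 2 covers keys and values."""
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value

GIB = 2 ** 30
fp16 = kv_cache_bytes(32, 8, 128, context_len=8192, bytes_per_value=2)
int8 = kv_cache_bytes(32, 8, 128, context_len=8192, bytes_per_value=1)
print(f"16-bit: {fp16 / GIB:.2f} GiB, 8-bit: {int8 / GIB:.2f} GiB")
```

Because the cache grows linearly with context length, halving the bytes per value frees roughly as much memory as halving the context window.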

CUDA MODE Discord

- Resources on Nvidia GPU Instruction Cycles: A member requested favorite resources regarding the instruction cycle of Nvidia GPUs, sharing a research paper and another study on microarchitecture focused on clock cycles per instruction.

- This inquiry may aid in understanding performance variations across different Nvidia architectures.

- Dramatic Accuracy Score Spike at Epoch 8: A member observed that during training, the accuracy scores significantly spiked at epoch 8, raising concerns about the stability of the model's performance.

- They highlighted that fluctuations like these might be typical, sparking a broader discussion on model evaluation practices.

- Understanding Triton Internals and GROUP_SIZE_M: Discussion clarified that GROUP_SIZE_M in the Triton tiled matmul tutorial controls processing order for blocks, enhancing L2 cache hit rates.

- Members noted the importance of grasping the differences between GROUP_SIZE_M and BLOCK_SIZE_{M,N} with tutorial illustrations aiding this understanding.

- Concerns Over Acquihires in AI Industry: Multiple companies, including Character AI and Inflection AI, are undergoing acquihires, indicating a trend where promising startups are being absorbed by larger firms.

- This raises questions about the potential implications for competition and the balance between coding skills and conceptual thinking in AI development.

- Debate on Randomness in Tensor Operations: Members noted that varying tensor shapes in operations can invoke different kernels, resulting in varied numerical outputs even for similar operations.

- Suggestions were made to implement custom random number generators to ensure consistency across operations.
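
The GROUP_SIZE_M discussion above is easier to follow with the index arithmetic written out. The sketch below re-creates, in plain Python rather than Triton, the grouped mapping from a linear program id to an output tile as used in the tiled matmul tutorial; `grouped_tile_order` is a hypothetical helper and the grid sizes are arbitrary.

```python
def grouped_tile_order(num_pid_m, num_pid_n, group_size_m):
    """Return the (pid_m, pid_n) tile visited by each linear program id."""
    order = []
    num_pid_in_group = group_size_m * num_pid_n
    for pid in range(num_pid_m * num_pid_n):
        group_id = pid // num_pid_in_group
        first_pid_m = group_id * group_size_m
        # The last group may have fewer rows than group_size_m.
        rows_in_group = min(num_pid_m - first_pid_m, group_size_m)
        pid_m = first_pid_m + (pid % num_pid_in_group) % rows_in_group
        pid_n = (pid % num_pid_in_group) // rows_in_group
        order.append((pid_m, pid_n))
    return order

print(grouped_tile_order(4, 4, group_size_m=1)[:4])  # plain row-major order
print(grouped_tile_order(4, 4, group_size_m=2)[:4])  # two rows interleaved
```

With a group size of 2, the first four programs touch two block-rows of A and two block-columns of B instead of one block-row and four block-columns, so fewer distinct blocks need to be resident in L2 at once. BLOCK_SIZE_M and BLOCK_SIZE_N, by contrast, set how large each tile is, not the order in which tiles are visited.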

Latent Space Discord

- MoMa architecture enhances mixed-modal language modeling: Meta introduced MoMa, a sparse early-fusion architecture that boosts pre-training efficiency by using a mixture-of-expert framework.

- This architecture improves the processing of interleaved mixed-modal token sequences, marking a significant advancement in multimodal AI.

- BitNet fine-tuning achieves rapid results: A user reported fine-tuning BitNet resulted in a 74MB file that processes 198 tokens per second on a single CPU core, showcasing impressive efficiency.

- This technique is being open-sourced under the name Biggie-SmoLlm.

- Character.ai's strategy shift following acquisition: Character.ai's co-founders have joined Google, leading to a shift to open-source models like Llama 3.1 for their products.

- This move has sparked discussions on industry talent transfer and the viability of startups amid large tech acquisitions.

- DeepSeek API introduces disk context caching: DeepSeek API has launched a context caching feature that reduces API costs by up to 90% and significantly lowers first token latency.

- This improvement supports multi-turn conversations by caching frequently referenced contexts, enhancing performance.

- Winds of AI Winter Podcast Released: The latest episode titled Winds of AI Winter has dropped, featuring a recap of the past few months in AI and celebrating 1 million downloads.

- Listeners can catch the full discussion on the podcast link.

Cohere Discord

- Exciting AI Hackathon Series Tour Begins: The AI Hackathon Series Tour begins across the U.S., leading up to PAI Palooza, which will showcase local AI innovations and startups. Participants can register now for this collaborative event focused on advancing AI technology.

- This series aims to engage the community in meaningful tech discussions and foster innovation at local levels.

- GraphRAG System Aids Investors: A new GraphRAG system was introduced to help investors identify promising companies, using insights from 2 million scraped company websites. The system is currently in development, along with a Multi-Agent framework for deeper insights.

- The developer is actively seeking collaborators to enhance the system's capabilities.

- Neurosity Crown Enhances Focus: The Neurosity Crown has gained attention for its ability to improve focus by providing audio cues when attention drops, like bird chirps. Some users have highlighted significant productivity boosts, even as others question its overall effectiveness.

- Its usability sparks ongoing discussions about integrating tech solutions for productivity.

- Navigating Web3 Contract Opportunities: A member is seeking discussions with experienced developers in Web3, Chainlink, and UI development for part-time contract roles, indicating demand for skills in emerging technologies.

- This highlights the community's interest in furthering its technical expertise in blockchain and UI integration.

- Toolkit Customization Sparks Interest: There's a buzz around the toolkit's customization capabilities, such as enabling authentication, which may require forking and Docker image creation for extensive modifications. Community guidelines for safe alterations have been proposed, emphasizing collaborative improvement.

- Members are evaluating its applications, especially concerning internal tool extensions and upstream updates.

Eleuther Discord

- GitHub's Challenge to Hugging Face: Concerns arose with GitHub's latest model hosting approach, perceived as a limited demo that undermines community contributions in contrast to Hugging Face's model-sharing ethos.

- Members speculate this is a strategy to control the ML community's code and prevent a mass exodus.

- Skepticism Lingers over EU AI Regulations: With the upcoming AI bill focusing on major models, skepticism bubbles regarding the potential enforcement and implications for global firms, especially startups.

- Discussions centered on how new legislative frameworks may unintentionally stifle innovation and adaptability.

- Navigating LLM Evaluation Metrics Challenges: A member questioned optimal metrics for evaluating LLM outputs, particularly highlighting the complicated nature of using exact matches for code outputs.

- Suggestions like humaneval emerged, but concerns about the implications of using `exec()` during evaluation led to further debate.

- Revived Interest in Distillation Techniques: Members discussed a revival in attention towards logit distillation, revealing its influence on data efficiency and minor quality enhancements for smaller models.

- Recent papers illustrated varied applications of distillation, particularly those incorporating synthetic datasets.

- GEMMA's Performance Put to the Test: Discrepancies surfaced in how GEMMA's performance compared to Mistral, leading to debates about the evaluation process's lack of clarity.

- Concerns were raised on whether training dynamics and resource allocation accurately reflected model outcomes.
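
On the evaluation-metrics item above: exact match is awkward for code because two correct solutions rarely share the same text. A common alternative is to run each candidate against tests. The sketch below is a minimal illustration of that idea, not the humaneval harness itself; `passes_tests` is a hypothetical helper, and it runs the code in a separate Python process with a timeout instead of calling `exec()` in the evaluator. A subprocess is not a sandbox, so untrusted model output still needs real isolation.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def passes_tests(candidate_src: str, test_src: str, timeout_s: float = 5.0) -> bool:
    """Return True if the candidate code plus its tests exit cleanly.

    The code runs in a child Python process, so a crash or infinite loop
    in the candidate cannot take down the evaluator itself.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(candidate_src + "\n\n" + test_src + "\n")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                timeout=timeout_s,
                cwd=tmp,
            )
        except subprocess.TimeoutExpired:
            return False
    # A failed assert raises, which makes the child exit with a non-zero code.
    return result.returncode == 0
```

With this, `def add(a, b): return a + b` and `def add(x, y): return y + x` both pass the test `assert add(2, 3) == 5`, even though an exact-match metric would treat them as different answers.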

OpenAI Discord

- OpenAI Voice Mode Sparks Inquiries: The new OpenAI voice mode has led to an influx of direct messages within the community following its announcement.

- It seems many are eager to inquire more about its functions and access.

- Latency Troubles with Assistants API: Members reported latency issues with the Assistants API, with some suggesting alternatives like SerpAPI for real-time scraping.

- Community feedback centered around shared experiences and potential workarounds.

- Gemini 1.5 Pro Proves Competitive: Discussions highlighted the Gemini 1.5 Pro's performance, sparking curiosity about its real-world applications and responsiveness.

- One participant noted that their usage demonstrated the model's competitive response quality.

- Gemma 2 2b Model Insights: Insights on the Gemma 2 2b model suggested it excels in instruction following despite lacking knowledge compared to larger models.

- The conversation reflected on balancing model capabilities with reliability for practical applications.

- Flux Image Model Excites Community: The launch of the Flux image model has generated excitement as users began testing its capabilities against tools like MidJourney and DALL-E.

- Notably, its open-source nature and lower resource requirements suggest potential for widespread adoption.

Nous Research AI Discord

- Insights on LLM-as-Judge: Members requested must-read surveys on the LLM-as-Judge meta framework and synthetic dataset strategies focused on instruction and preference data.

- This inquiry underscores a keen interest in developing effective methodologies within the LLM space.

- New VRAM Calculation Tool Launched: A new VRAM calculation script enables users to ascertain VRAM requirements for LLMs based on various parameters.

- The script operates without external dependencies and serves to streamline assessments for LLM context length and bits per weight.

- Gemma 2B vs Qwen 1.5B Comparison: Members debated the perceived overhype of Gemma 2B, contrasting it against Qwen 1.5B, which reportedly excels in benchmarks like MMLU and GSM8K.

- Qwen's capabilities went largely overlooked, leading to comments about it 'brutally' outperforming Gemma 2B.

- Challenges with Llama 3.1 Fine-tuning: A user fine-tuned Llama 3.1 on a private dataset, achieving only 30tok/s while producing gibberish outputs via vLLM.

- Issues persisted despite a temperature setting of 0, indicating possible model misconfiguration or data relevance.

- New Quarto Website Setup for Reasoning Tasks: A PR for the Quarto website has been initiated, focused on enhancing the online visibility of reasoning tasks.

- The recent adjustments in the folder structure aim to streamline project management and ease navigation within the repository.
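
For the VRAM calculation item above, the basic arithmetic can be sketched in a few lines. This is not the script that was shared; it is a back-of-the-envelope estimate under stated assumptions: weights take `bits_per_weight / 8` bytes per parameter, the KV cache stores one key and one value vector per layer per token, and activations and framework overhead are ignored. All parameter names are illustrative.

```python
def estimate_vram_gib(n_params, bits_per_weight, n_layers, n_kv_heads,
                      head_dim, context_len, kv_bytes_per_value=2):
    """Rough VRAM estimate in GiB: quantized weights plus KV cache.

    kv_bytes_per_value=2 assumes the KV cache is kept in 16-bit precision.
    """
    weight_bytes = n_params * bits_per_weight / 8
    # Factor of 2: one key tensor and one value tensor per layer.
    kv_cache_bytes = (2 * n_layers * n_kv_heads * head_dim
                      * context_len * kv_bytes_per_value)
    return (weight_bytes + kv_cache_bytes) / 1024**3


# Illustrative 8B-class configuration at 4 bits per weight and 8k context.
example_gib = estimate_vram_gib(
    n_params=8e9, bits_per_weight=4,
    n_layers=32, n_kv_heads=8, head_dim=128, context_len=8192,
)
```

The two terms scale differently: the weight term shrinks with lower bits per weight, while the KV cache term grows linearly with context length, which is why long contexts can dominate memory on heavily quantized models.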

LAION Discord

- FLUX Schnell shows weaknesses: Members discussed that the FLUX Schnell model appears undertrained and struggles with prompt adherence, yielding nonsensical outputs such as 'a woman riding a goldwing motorcycle wearing tennis attire'.

- They raised concerns that this model is more of a dataset memorization machine than an effective generative model.

- Caution advised on synthetic datasets: Concerns emerged against using the FLUX Schnell model for generating synthetic datasets, citing risks of representational collapse over generations.

- One member warned, 'please, please, please, do not make a synthetic dataset with the open released weights from Flux'.

- Value of curated datasets over random noise: The importance of curated datasets was emphasized, suggesting that user-preferred data is critical for quality and resource efficiency.

- Members agreed that training on random prompts wastes resources without providing significant improvements.

- Bugs halting progress on LLM: One member discovered a typo in their code significantly impacting performance across 50+ experiments and was pleased with a newly optimized loss curve.

- They expressed relief as the new curve dropped significantly faster than before, showcasing the importance of debugging.

- Focus on strong baseline models: Discussion shifted to the need for creating a strong baseline model, rather than getting caught up in minor improvements from regularization techniques.

- Members noted a shift in efforts toward developing a classifier while considering a parameter-efficient architecture.

Interconnects (Nathan Lambert) Discord

- Interest in Event Sponsorship: Members showed enthusiasm for sponsoring events, signaling a proactive approach to future gatherings.

- This optimism suggests potential financial backing could be secured to support these initiatives.

- Character AI Deal Raises Eyebrows: The Character AI deal sparked skepticism among members, questioning its implications for the AI landscape.

- One participant claimed it was a 'weird deal', prompting further concerns about post-deal effects on employees and companies.

- Ai2 Unveils New Brand Inspired by Sparkles: Ai2 launched a new brand and website, embracing the trend of sparkles emojis in AI branding as discussed in a Bloomberg article.

- Rachel Metz highlighted this shift, emphasizing the industry's growing fascination with the aesthetic.

- Magpie Ultra Dataset Launch: HuggingFace released the Magpie Ultra dataset, a 50k unfiltered L3.1 405B dataset, claiming it as a trailblazer for open synthetic datasets. Check their tweet and dataset on HuggingFace.

- Initial instruction quality remains a question, especially regarding user turn diversity and coverage.

- Dinner at RL Conference Next Week: A member is considering hosting a dinner at the RL Conference next week, seeking VCs or friends interested in sponsorship.

- This initiative could offer excellent networking opportunities for industry professionals looking to contribute.

OpenRouter (Alex Atallah) Discord

- OpenRouter Website Accessibility: Users report issues with accessing the OpenRouter website, with localized outages affecting regions occasionally.

- A specific user noted the website issue was briefly resolved, highlighting the possibility of non-uniform user experiences.

- Anthropic Service Struggles: Multiple users indicated that Anthropic services are experiencing severe load problems, resulting in intermittent accessibility for several hours.

- This has raised concerns about the infrastructure's ability to handle current demand.

- Chatroom Revamp and Enhanced Settings: The Chatroom has been revamped with a simpler UI and now allows chats to be saved locally, improving the user experience.

- Users can now configure settings to avoid routing requests to certain providers via the settings page, streamlining their experience.

- API Key Acquisition Made Easy: Getting an API key for users is as straightforward as signing up, adding credit, and using it within add-ons, with no technical skills needed (learn more).

- Using your own API key yields better pricing ($0.6 per 1,000,000 tokens for models like GPT-4o-mini) and offers clear insight into model usage via provider dashboards.

- Understanding Free Model Usage Limits: Discussion highlighted that free models typically come with significant rate limits in usage, both for API access and chatroom utilization.

- These constraints are important for managing server load and ensuring equitable access among users.

LlamaIndex Discord

- Event-Driven RAG Pipeline Tutorial Released: A tutorial on building a RAG pipeline was shared, detailing retrieval, reranking, and synthesis across specific steps using LlamaIndex workflows. This comprehensive guide aims to showcase event-driven architectures for pipeline construction.

- You can implement this tutorial step-by-step, enabling better integration of various event handling methodologies.

- AI Voice Agent Developed for Indian Farmers: An AI Voice Agent has been developed to support Indian farmers, addressing their need for resources due to insufficient governmental aid, as highlighted in this tweet. The tool seeks to improve their productivity and navigate challenges.

- This initiative exemplifies technology's potential in addressing critical agricultural concerns, enhancing farmers' livelihoods.

- Strategies for ReAct Agents without Tools: Guidance was sought on configuring a ReAct agent to operate tool-free, with suggested methods like `llm.chat(chat_messages)` and `SimpleChatEngine` for smoother interactions. Members discussed the challenges of agent errors, particularly regarding missing tool requests.

- Finding solutions to these issues remains a priority for improving usability and performance in agent implementation.

- Changes in LlamaIndex's Service Context: Members examined the upcoming removal of the service context in LlamaIndex, which impacts how parameters such as `max_input_size` are set. This shift prompted concerns over the need for significant code adjustments.

- One user voiced frustration that the transition to more individualized components in the base API disrupts developer workflows.

- DSPy's Latest Update Breaks LlamaIndex Integration: A member reported issues with DSPy's latest update, causing integration failures with LlamaIndex. They noted the previous version, v2.4.11, yielded no improvements in results from prompt finetuning when compared to standard LlamaIndex abstractions.

- The user continues to face hurdles in achieving operational success with DSPy, post-update.

Modular (Mojo 🔥) Discord

- Mojo's Error Handling Dilemma: Members discussed the dilemmas surrounding Mojo's error handling, comparing Python style exceptions and Go/Rust error values, with concerns that mixing both could lead to complexity.

- One exclaimed that this could blow up in the programmer's face, highlighting the intricacies of managing errors effectively in Mojo.

- Installation Woes with Max: A member reported facing difficulties with the installation of Max, indicating that running the code has not been smooth.

- They are seeking assistance to troubleshoot the problematic installation process.

- Mojo Nightly Rocks!: Mojo nightly is operating smoothly for an active contributor, indicating stability despite issues with Max.

- This suggests that Mojo's nightly builds offer a reliable experience that could be leveraged while dealing with installation problems.

- Conda Installation Might Save the Day: A member recommended using conda as a possible solution to the installation issues, noting that the process has recently become simpler.

- This could significantly ease troubleshooting and resolution for those facing installation challenges with Max.

OpenInterpreter Discord

- Open Interpreter session confusion addressed: Members experienced confusion about joining an ongoing session, clarifying that the conversation happened in a specific voice channel.

- One member noted their struggle to find the channel until others confirmed its availability.

- Guidance on running local LLMs: A new member sought help on running a local LLM and shared their initial script which faced a model loading error.

- Community members directed them to documentation for setting up local models correctly.

- Starting LlamaFile server clarified: It was emphasized that the LlamaFile server must be started separately before utilizing it in Python mode.

- Participants confirmed the proper syntax for API settings, emphasizing the distinctions between different loading functions.

- Aider browser UI demo launches: The new Aider browser UI demo video showcases collaboration with LLMs for editing code in local git repositories.

- It supports GPT 3.5, GPT-4, and others, with features enabling automatic commits using sensible messages.

- Post-facto validation in LLM applications discussed: Research highlights that humans currently verify LLM-generated outputs post-creation, facing difficulties due to code comprehension challenges.

- The study suggests incorporating an undo feature and establishing damage confinement for easier post-facto validation (more details here).

DSPy Discord

- LLMs Improve Their Judgment Skills: A recent paper on Meta-Rewarding in LLMs showcases enhanced self-judgment capabilities, boosting Llama-3-8B-Instruct's win rate from 22.9% to 39.4% on AlpacaEval 2.

- This meta-rewarding step tackles saturation in traditional methods, proposing a novel approach to model evaluation.

- MindSearch Mimics Human Cognition: MindSearch framework leverages LLMs and multi-agent systems to address information integration challenges, enhancing retrieval of complex requests.

- The paper discusses how this framework effectively mitigates challenges arising from context length limitations of LLMs.

- Building a DSPy Summarization Pipeline: Members are seeking a tutorial on using DSPy with open source models for summarization to iteratively boost prompt effectiveness.

- The initiative aims to optimize summarization outcomes that align with technical needs.

- Call for Discord Channel Exports: A request for volunteers to share Discord channel exports in JSON or HTML format surfaced, aimed at broader analysis.

- Contributors will be acknowledged upon release of findings and code, enhancing community collaboration.

- Integrating AI in Game Character Development: Discussion heats up around using code from GitHub for AI-enabled game characters, particularly for patrolling and dynamic player interactions.

- Members expressed interest in implementing the Oobabooga API to facilitate advanced dialogue features for game characters.

OpenAccess AI Collective (axolotl) Discord

- Fine-tuning Gemma2 2B grabs attention: Members explored fine-tuning the Gemma2 2B model and shared insights, with one suggesting utilizing a pretokenized dataset for better control over model output.

- The community’s feedback indicates varied experiences, and they are keen on further results from adjusted methodologies.

- Quest for Japan's top language model: In search of the most fluent Japanese model, a suggestion surfaced for lightblue's suzume model based on the community's input.

- Users expressed interest in hearing more about real-world applications of this model.

- BitsAndBytes simplifies ROCm installation: A recent GitHub PR streamlined the installation of BitsAndBytes on ROCm, making it compatible with ROCm 6.1.

- Members noted the update allows for packaging wheels compatible with the latest Instinct and Radeon GPUs, marking a significant improvement.

- Training Gemma2 and Llama3.1 output issues: Users detailed their struggles with training Gemma2 and Llama3.1, noting the model's tendency to halt only after hitting max_new_tokens.

- There's a growing concern about the time invested in training without proportional improvements in output quality.

- Prompt engineering has minimal impact: Despite stringent prompt efforts meant to steer model output, users report a minimal impact on overall behavior.

- This raises questions about the effectiveness of prompt engineering strategies in current AI models.

LangChain AI Discord

- LangChain 0.2 Documentation Gap: Users reported a lack of documentation regarding agent functionalities in LangChain v0.2, leading to questions about its capabilities.

- Orlando.mbaa specifically noted they couldn't find any reference to agents, raising concerns about usability.

- Implementing Chat Sessions in RAG Apps: A discussion emerged on how to incorporate chat sessions in basic RAG applications, akin to ChatGPT's tracking of previous conversations.

- Participants evaluated the feasibility and usability of session tracking within the existing frameworks.

- Postgres Schema Issue in LangChain: A member referenced a GitHub issue regarding chat message history failures in Postgres, particularly with explicit schemas (#17306).

- Concerns were raised about the effectiveness of the proposed solutions and their implications on future implementations.

- Testing LLMs with Testcontainers: A blog post was shared detailing the process of testing LLMs using Testcontainers and Ollama in Python, leveraging the 4.7.0 release.

- Feedback was encouraged on the tutorial provided here, highlighting the necessity of robust testing.

- Exciting Updates from Community Research Call #2: The recent Community Research Call #2 highlighted thrilling advancements in Multimodality, Autonomous Agents, and Robotics projects.

- Participants actively discussed several collaboration opportunities, emphasizing potential partnerships in upcoming research directions.
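
For the chat-sessions-in-RAG item above, one simple approach is to keep a per-session message history and prepend it to each new request alongside the retrieved context. The sketch below is framework-agnostic and does not use LangChain's own history classes; `SessionStore` and `build_messages` are hypothetical names, the store is in-memory only, and retrieval is assumed to have already produced a list of text chunks.

```python
from collections import defaultdict


class SessionStore:
    """In-memory chat history keyed by session id (lost on restart)."""

    def __init__(self, max_turns=10):
        self.max_turns = max_turns  # assumed to be at least 1
        self._history = defaultdict(list)

    def append(self, session_id, role, content):
        turns = self._history[session_id]
        turns.append({"role": role, "content": content})
        # Drop the oldest messages so the prompt stays bounded.
        del turns[:-self.max_turns]

    def history(self, session_id):
        return list(self._history[session_id])


def build_messages(store, session_id, question, retrieved_chunks):
    """Combine retrieved context, prior turns, and the new question."""
    context = "\n\n".join(retrieved_chunks)
    system = {"role": "system",
              "content": f"Answer using this context:\n{context}"}
    user = {"role": "user", "content": question}
    return [system, *store.history(session_id), user]
```

After each model reply, the application would call `store.append(...)` for both the user question and the assistant answer, so the next request for the same session id carries the conversation forward. A persistent backend (for example a database table keyed by session id) would replace the dictionary in a real deployment.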

Torchtune Discord

- QAT Quantizers Clarification: Members discussed that the QAT recipe supports the Int8DynActInt4WeightQATQuantizer, while the Int8DynActInt4WeightQuantizer serves post-training and is not currently supported.

- They noted only the Int8DynActInt4Weight strategy operates for QAT, leaving other quantizers slated for future implementation.

- Request for SimPO PR Review: A member highlighted the need for clarity on the SimPO (Simple Preference Optimisation) PR #1223 on GitHub, which aims to resolve issues #1037 and #1036.

- They emphasized that this PR addresses alignment concerns, prompting a call for more oversight and feedback.

- RFC for Documentation Overhaul: A proposal for revamping the torchtune documentation system surfaced, focusing on smoother recipe organization to improve onboarding.

- Members were encouraged to provide insights, especially regarding LoRA single device and QAT distributed recipes.

- Feedback on New Models Page: A participant shared a link to preview a potential new models page aimed at addressing current readability issues in the documentation.

- Details discussed included the need for clarity and thorough model architecture information to enhance user experience.

MLOps @Chipro Discord

- Computer Vision Enthusiasm: Members expressed a shared interest in computer vision, highlighting its importance in the current tech landscape.

- Many members seem eager to diverge from the NLP and genAI discussions that dominate conferences.

- Conferences Reflect Machine Learning Trends: A member shared experiences from attending two machine learning conferences where their work on Gaussian Processes and Isolation Forest models was presented.

- They noted that many attendees were unfamiliar with these topics, suggesting a strong bias towards NLP and genAI discussions.

- Skepticism on genAI ROI: Participants questioned if the return on investment (ROI) from genAI would meet expectations, indicating a possible disconnect.

- One member highlighted that a positive ROI requires initial investment, suggesting budgets are often allocated based on perceived value.

- Funding Focus Affects Discussions: A member pointed out that funding is typically directed toward where the budgets are allocated, influencing technology discussions.

- This underscores the importance of market segments and hype cycles in shaping the focus of industry events.

- Desire for Broader Conversations: In light of the discussions, a member expressed appreciation for having a platform to discuss topics outside of the hype surrounding genAI.

- This reflects a desire for diverse conversations that encompass various areas of machine learning beyond mainstream trends.

Alignment Lab AI Discord

- Image Generation Time Inquiry: Discussion focused on the time taken for generating a 1024px image on an A100 with FLUX Schnell, raising questions about performance expectations.

- However, no specific duration was mentioned regarding the image generation on this hardware.

- Batch Processing Capabilities Explored: Questions arose about whether batch processing is feasible for image generation and the maximum number of images that can be handled.

- Responses related to hardware capabilities and limitations were absent from the conversation.

The tinygrad (George Hotz) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!