[AINews] 1/8/2024: The Four Wars of the AI Stack

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Not much happened in the discords today, so time to plug our Latent Space 2023 recap!

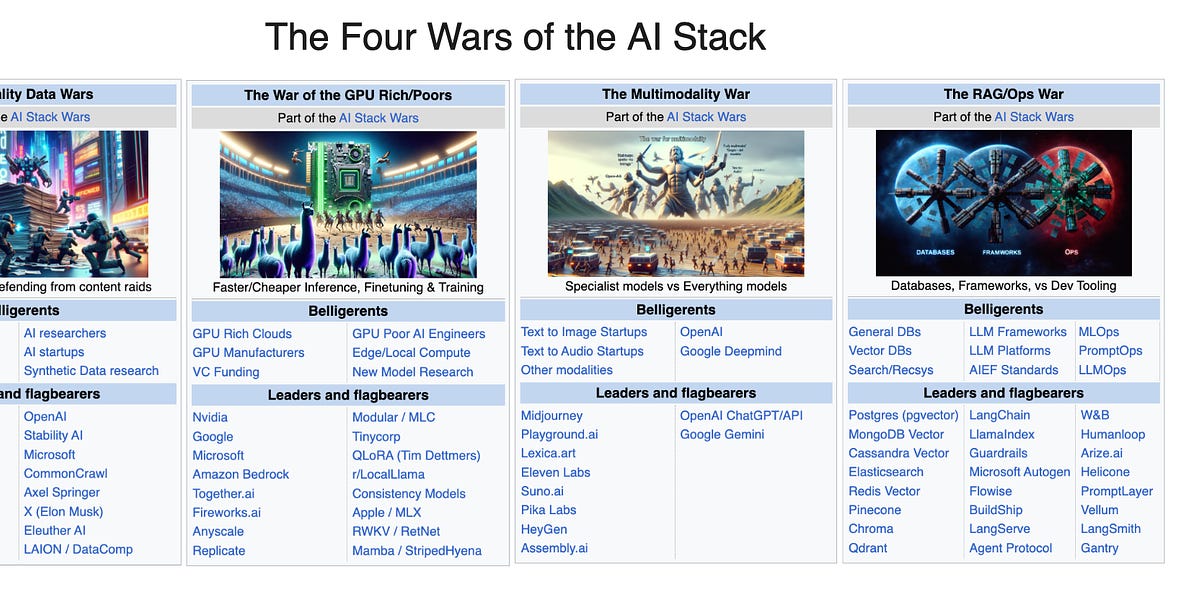

The Four Wars of the AI Stack (Dec 2023 Recap)

The Data Wars, The War of the GPU Rich/Poor, The Multimodality War, The RAG/Ops War. Also: our usual highest-signal recap of top items for the AI Engineer from Dec 2023!

Enjoy!

Table of Contents

Nous Research AI Discord Summary

- "Obsidian Project - in short" : In project Obsidian

@qnguyen3mentioned briefly that they are utilizing DINO, CLIP and CNNs for the project.

- "Cloud-Based LLM Concerns, Addressed": Discussion about the design challenges of cloud-based Large Language Models (LLMs) services captured attention when

@maxwellandrewsshared a research paper that proposed solutions through distributed models like DistAttention and DistKV-LLM.

- "Self-Extending Context Window Triumph":

@kenakafrostyshared a paper titled 'Self-Extend LLM Context Window Without Tuning', arguing that existing LLMs are inherently capable of handling long context situations, sharing the paper's link and relevant Twitter and GitHub discussions.

- "Your Morning Coffee, Brought by AI?": Users tossed jokes and musings about a tweet that

@adjectiveallisonshared regarding an AI robot called 'Figure-01' that claims to have learned to make coffee after observing humans. The conversation expanded into comparing the project to another AI program, ALOHA, shared by@leontello.

- "LLMs that Learn and Teach":

@deki04shared a link to a GitHub repository with a comprehensive course on Large Language Models (LLMs), sparking a discussion about model improvements and their practical applications, led by@leontelloand@vincentweisser.

- "To Embed, or Not to Embed":

@gabriel_symesuggested that hierarchical embeddings may still be a necessary addition to the OAI model, despite not showing an expected boost in performance.

- "The Agentic RAG Trend":

@n8programsannounced plans to experiment with agentic RAG, a model that generates search queries based on input and collects data until enough has been accumulated.

- "AI Engineer's Guide to LLM Fine-Tuning":

@realsedlyfrequested insight on the best methods for creating synthetic data required to fine-tune a language model for a specific domain.

- "Oil & Gas Industry Embraces LLM Analysis":

@kapnap_ndetailed the application of LLMs for an unusual domain - analyzing downhole wellbore data in the oil & gas industry.

- "AgentSearch Dataset Launch!": The newly-released AgentSearch-V1 dataset was promoted by

@tekniumwho shared a link to a tweet by@ocolegro, announcing the availability of one billion embedding vectors encompassing Wikipedia, Arxiv, and more.

- "LLM Talk - Exposés and Suggestions": The '#ask-about-llms' channel saw several captivating debates about different LLM facets like KV_Cache implementation, comparison between MoE and Mistral, and performance differences between TinyLLAM and Lite LLAMAS.

- "Peeking into the Silver Lining": The notion that smaller models may hold more processing capabilities than they seem was introduced by

@kenakafrostyleading to conversations about the saturation point of smaller models.

- "Exploring Implementations of Merging Technology": Queries about notebooks for MoE (mixture of experts) implementations and restraints of PointCloud models led to insightful exchanges between

@tekniumand.beowulfbr, with a Mergekit GitHub link shared for reference.

- "Mixtral Favored for its Roomy Context Window":

@gabriel_symeand@tekniumexpressed preference for the Mixtral model. Despite the availability of other models like Mistral and Marcoroni, Mixtral's larger context window of 32k was considered a standout advantage.

Nous Research AI Channel Summaries

▷ #ctx-length-research (2 messages):

- Exploring DistAttention for Cloud-Based LLM Services: User

@maxwellandrewsshared a link to a research paper on DistAttention and DistKV-LLM, new distributed models that aim to alleviate the challenges of designing cloud-based Large Language Models (LLMs) services. The link leads to an abstract discussing how these models can dynamically manage Key-Value Cache and orchestrate all accessible GPUs.

- LLMs and Long Context Situations: User

@kenakafrostyshared a link to a research paper entitled 'Self-Extend LLM Context Window Without Tuning'. The paper argues existing LLMs have the inherent ability to handle long contexts without fine-tuning training sequences.

- Practical Applications of the Self-Extend Model:

@kenakafrostynoted that the 'Self-Extend LLM Context Window Without Tuning' concept is being implemented with seemingly good results, sharing relevant Twitter and GitHub links.

Links mentioned:

- Paper page - Infinite-LLM: Efficient LLM Service for Long Context with DistAttention and Distributed KVCache

- LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning: This work elicits LLMs' inherent ability to handle long contexts without fine-tuning. The limited length of the training sequence during training may limit the application of Large Language Models...

- GitHub - datamllab/LongLM: LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning: LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning - GitHub - datamllab/LongLM: LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning

▷ #off-topic (5 messages):

- Is Coffee-Making AI a Big Deal?:

@adjectiveallisonshared a tweet from@adcock_brettabout an AI robot called 'Figure-01', claiming it had learned to make coffee by watching humans, and mentioned this is an end-to-end AI with video in, trajectories out. This was followed by skepticism and humor from@tekniumand@gabriel_syme, questioning if the capability to make coffee is remarkable news.

- Comparing AI Complexity:

@leontellocompared the coffee-making AI to another project, ALOHA (linked to a tweet by@tonyzzhao), calling it quite lackluster, but later clarified their comment, recognizing the difference in contexts involving self-driving vs. robotic hardware setups.

- Spotting Coincidences in AI Robotics: In a humorous twist,

@adjectiveallisonshared another tweet, this time from@atroyn, noticing that the coffee machine used by the coffee-making AI looked very familiar, being previously seen in a video from Chelsea Finn's research project.

Links mentioned:

- Tweet from anton (𝔴𝔞𝔯𝔱𝔦𝔪𝔢) (@atroyn): something about this demo video seemed very familiar, then i realized i had seen that same coffee machine before in one of @chelseabfinn's video from her group's paper https://lucys0.github....

- Tweet from Brett Adcock (@adcock_brett): Figure-01 has learned to make coffee ☕️ Our AI learned this after watching humans make coffee This is end-to-end AI: our neural networks are taking video in, trajectories out Join us to train our r...

▷ #interesting-links (5 messages):

- Resource for LLM Course shared:

@deki04shared a link to a GitHub repository offering a comprehensive course on Large Language Models with chatbot roadmaps and Colab notebooks. - Mixed Opinions on Model Improvement:

@leontellospeculated on the feasibility of the introduction of augmented models, suggesting a potential increase in parameter counts which could pose practicality concerns. - Deep Dive into LLM Agents:

@vincentweissershared an article detailing a comprehensive analysis on LLM agents, ChatGPT and GitHub Copilot underlining their significant impact yet existing limitations in complex tasks due to a restricted context window. - LeCun's LLM research feature:

@vincentweisseralso highlighted a research paper related to LLM by Yann LeCun.

Links mentioned:

- Thoughts on LLM Agents: Entropy, criticality, and complexity classes of cellular automata.

- GitHub - mlabonne/llm-course: Course to get into Large Language Models (LLMs) with roadmaps and Colab notebooks.: Course to get into Large Language Models (LLMs) with roadmaps and Colab notebooks. - GitHub - mlabonne/llm-course: Course to get into Large Language Models (LLMs) with roadmaps and Colab notebooks.

▷ #general (79 messages🔥🔥):

- Exploring Performance of Hierarchical Embeddings:

@gabriel_symenoted that hierarchical embeddings might still be needed in addition to the implemented OAI model, hinting at a lack of expected improvement in performance. - Handling Synthetic Data for LLM Fine-Tuning:

@realsedlyfinquired about the best current methods for creating synthetic data for language model fine-tuning in a specific domain. - Agentic RAG Experimentation:

@n8programsannounced plans to experiment with agentic RAG, an approach where the model generates various search queries based on an input question and collects information until a sufficient amount has been gathered. They cited Mistral as being particularly good for such tasks. - Industry Application - LLM and Wellbore Analysis:

@kapnap_nshared their approach to using language model to analyze downhole wellbore data in oil & gas industry. They also discussed how the data is represented and the potential benefits of this approach, sparking interest from other users like@julianottoand@everyoneisgross. - AgentSearch Dataset Announcement:

@tekniumshared a link to@ocolegro's tweet about the release of the AgentSearch-V1 dataset, consisting of over one billion embedding vectors covering Wikipedia, Arxiv, filtered common crawl, and more.

Links mentioned:

Tweet from Owen Colegrove (@ocolegro): The full dataset for AgentSearch-V1 is now available on HF!! Recommended: @qdrant_engine - for indexing and search @nomic_ai - for visualization I'm looking to expand what is indexed - agent spe...

▷ #ask-about-llms (54 messages🔥):

- Request for KV_Cache implementation:

@lwasinamasked for any links to implementations of KV_Cache for confirmation purposes. - Considering MoE for comparison with Mistral:

@bernaferrarisuggested making a mixture of experts (MoE) out of phi and compare it to Mistral. - TinyLLAM vs Lite LLAMAS: In a discussion between

@gabriel_symeand@teknium, it was noted that TinyLLAM underperforms, leading to a decision to switch to Lite LLAMAS. - Processing capabilities of smaller models:

@kenakafrostysparked a discussion on the notion that smaller models (6-20B range) might actually have more processing capability than appears at first glance, with the gap lying in instruction following.@tekniumshared his opinion that 7B models are reaching their saturation point but also added that saturation point scales non-linearly with model size. - Mergekit and MOE demonstration:

@tekniuminquired about any existing notebooks for Mergekit MOE (mixture of experts) implementation. In response,.beowulfbrshared a link to the Mixtral branch of Mergekit on GitHub for reference. - PointCloud model limitations: In a conversation started by

@gabriel_symeabout PointCloud models,@tekniumexplained that if the base model supports 8k, it should be able to do 8k inputs but will not produce more than 4k outputs. - Preference for Mixtral with larger context window:

@gabriel_symeand@tekniumdiscussed various models, including Mistral, Marcoroni, and Mixtral. They expressed a preference for Mixtral, given its larger context window of 32k.

Links mentioned:

GitHub - cg123/mergekit: Tools for merging pretrained large language models.: Tools for merging pretrained large language models. - GitHub - cg123/mergekit: Tools for merging pretrained large language models.

▷ #project-obsidian (1 messages):

qnguyen3: DINO, CLIP and CNNs for now

Eleuther Discord Summary

- Discussing LAION's Decay:

@stellaathenaand@flow7450considered the decay rate of datasets like LAION. Notably, LAION400 experienced approximately 20% failure in download after about 2-2.5 years according to a paper cited by@flow7450. - Duel Over Duplicate Data:

@uwu1468548483828484and@flow7450debated the importance of duplicate data, weighing the merits of backups versus the need for unique samples. - ELO-Ribbing Models:

@letterripproposed a project for creating an ELO rating for each question in models to form a testing benchmark subset for training runs. The discussion also touched on where to propose new projects. - The Axolotl DPO Conundrum:

@karkomagorasked if axolotl supports fine-tuning using DPO datasets, with@main.aisuggesting to ask on the Axolotl server instead. - T5 Breakdown:

@stellaathenaconfirmed T5 as an encoder-decoder in response to a question from@ricklius. - Rattling the Learning Rate Cage: DeepSeek AI models' unusual stepwise decay learning rate schedule was examined by

@maxmaticaland@ad8e. This led@ad8eto propose testing swift final stretches at 0.1xLR, potentially negating the need for a constant decay. - Twisting Transformer Layers:

@kram1032suggested permuting layers in transformer architectures during training, hypothesizing that this might encourage more reliance on skip connections and lead to robust networks even when adding or removing layers. - Seeking MoE-mentous Scaling Laws:

@bshlgrssought cutting-edge literature on LM Scaling Laws specific to Mixture Of Experts (MoE) models, with contributions from@philpaxand@main.ai. - The Harness Snags: From evaluating models like MMLU to implementing custom datasets and even considering adding a toxicity/bias grader, various functionalities of the

lm-eval-harnesswere discussed by@gson_arlo,@hyperion.ai,@ishigami6465, and@.johnnysands. The importance of speculative decoding was emphasized by@stellaathenaand@hailey_schoelkopf.

Eleuther Channel Summaries

▷ #general (29 messages🔥):

- Decaying Datasets: Pondering LAION's Longevity:

@stellaathenainitiated a discussion about linkrot, specifically addressing the decay rate of datasets like LAION.@flow7450examined LAION400's decay, discovering that approximately 20% failed to download after about 2-2.5 years. - Duplicates: A Debate on Backup Strategy vs. Uniqueness:

@uwu1468548483828484and@flow7450had a debate on the importance of duplicate data, arguing whether backups outweigh the need for unique samples. - A New Project Proposal on Model ELO Ranking:

@letterripproposed a new project, suggesting creating an ELO rating for each question in current models to form a subset of benchmarks for testing during training runs. There was discussion about where to propose new projects, with@flow7450suggesting<#1102787157866852402>and@ad8eclarifying that community-projects is mainly for projects that one intends to drive rather than just ideas.@letterripconfirmed interest in driving the project. - Axolotl and DPO datasets:

@karkomagorinquired if axolotl supports fine-tuning using DPO datasets, and@main.airecommended asking this in the Axolotl server instead. - Inquiries about Managing Multiple Server Activities:

@seon5448opened a discussion about keeping track of activities across various servers, and was seeking advice on management tactics for multiple reading groups and project events. Suggestions for management tools or techniques were not mentioned in response.

▷ #research (33 messages🔥):

- T5 Encoder-Decoder Clarification:

@rickliusinquired if T5 was an encoder-decoder to which@stellaathenaconfirmed it is, and mentioned that sometimes people detach the encoder from the encoder-decoder for usage.

- DeepSeek AI Models' Learning Rate Schedules:

@maxmaticaldiscussed DeepSeek AI models, particularly an unusual stepwise decay learning rate schedule they used that had the same final loss as the traditional cosine decay. While this method allows for more flexible use of checkpoints during pre-training,@ad8ehighlighted the potential dangers of large learning rate steps, pointing out that misuse of learning rates could lead to suboptimal outcomes or even divergence in model training.

- Potential Model Training Experiment:

@ad8erevealed an intention to test the idea behind the above-discussed learning rate steps. The intention was to see if a swift final stretch at 0.1xLR was all that's needed, possibly negating the need for a constant decay.

- Discussion on Weight Decay and Gaussian Weight Noise:

@fessusbrought up the subject of the possible effects of combining gaussian weight noise and weight decay in a network that doesn't have learned affines in normalization layers. They reported potential benefits in terms of pruning unnecessary network complexity in toy datasets.

- Transformers with Permuted Layers Idea:

@kram1032proposed a unique idea of permuting layers during training within a transformer architecture with constant layer size. Their hypothesis is that this approach may encourage the network to rely more on skip connections and could lead to more robust networks when it comes to adding or removing layers.

Links mentioned:

- Chess-GPT’s Internal World Model: A Chess-GPT Linear Emergent World Representation

- DeepSeek LLM: Scaling Open-Source Language Models with Longtermism: The rapid development of open-source large language models (LLMs) has been truly remarkable. However, the scaling law described in previous literature presents varying conclusions, which casts a dark ...

- ad8e: Weights & Biases, developer tools for machine learning

▷ #scaling-laws (5 messages):

- Looking for Reading Recommendations on LM Scaling Laws:

@bshlgrssought recommendations for current state-of-the-art literature on Language Model (LM) scaling laws, specifically for Mixture of Experts (MoE) models. They specifically mentioned this paper which recommends MoE use only for LMs with less than 1 billion parameters, a claim appearing to be contested by practitioners. - Suggestion for LM Scaling Laws Paper:

@philpaxhighlighted a recent paper on LM scaling laws. Although this does not specifically address MoE models, it could offer relevant insights. - 'Smaller and Longer' is the Key for Large Inference Demand:

@bshlgrshighlighted a key finding from the suggested paper, suggesting that for Language Model Manufacturers (LLM) with large inference demand (around 1 billion requests), the best strategy is to train smaller models for a longer duration. - Lack of High-Compute Budget Scaling Papers for MoE:

@main.aipointed out the lack of papers addressing high compute budget scaling for MoE models.

▷ #lm-thunderdome (16 messages🔥):

- Understanding lm-eval-harness Functions:

@gson_arloasked howlm-eval-harnessworks with evaluation on datasets like MMLU (4-choice mcqa).@baber_confirmed thatoutput_type: generate_untiltriggers a model's inference once, whereasoutput_type: log_probcalculates the likelihood four times, once for each probable completion. - Flexible Postprocessing for lm-eval-harness:

@hyperion.aisuggested enhancinglm-eval-harnesswith a loose and flexible post-processing factor, aligning closer to real-world practices where the answer output can be flexible yet correct.@stellaathenaconfirmed that the harness can handle such situations. - Implementing Custom Datasets in lm-eval-harness:

@ishigami6465inquired about the specific format of datasets needed forlm-eval-harness.@hailey_schoelkopfclarified that the user can define this in a task's configuration and explained how the configuration could work for different types of tasks. - Potential for Toxigen Grader in lm-eval-harness:

@.johnnysandsbrought up the idea of adding a toxicity/bias grader to lm-eval-harness, considering tools like LlamaGuard offer this functionality already.@hailey_schoelkopfaffirmed that such grader models could be integrated, particularly if locally deployed to avoid disrupting the main evaluation model. - Considerations for Speculative Decoding:

@stellaathenahighlighted the importance of speculative decoding, and@hailey_schoelkopfsuggested that an inference library should handle this externally from lm-eval. Both believe the Hugging Face's TGI and tensorrt-llm currently manage this well.

OpenAI Discord Summary

- Europe's Elderly Shift: In the #prompt-engineering channel,

mysterious_guava_93336andChatGPTexplored the demographic transition from the "European baby boom" of the last century to today's "elderly Europe". The AI clarified that signs of fertility contraction started appearing in the late 1960s due to various factors, leading to an aging European population by the late 20th and early 21st centuries. This conversation was later echoed in the #api-discussions channel, reinforcing the shift's timeline and the factors uniting the demographic change.

- Are PowerPoint Presentations Ruining AI?: In #ai-discussions, an ongoing topic was about

ChatGPTrecently providing verbose, "PowerPoint-like" responses instead of concise, natural conversations. Despite advice from@eskcantaon refining system instructions to generate desired responses,@mysterious_guava_93336reported no significant improvement. The discussion also addressed how to useChatGPTon Discord.

- Staying in Your Domain: Dealing with domain verification and GPT editor issues was a hot topic in #gpt-4-discussions. While

@darthgustavattempted to guide@anardudethrough domain verification,@anardudestruggled with the solution and sought OpenAI Support's help.@.marcolpexpressed frustration at a persistent error disallowing access to the GPT editor until resolved.

- When Training Hours Go to Waste: Despair echoed in #gpt-4-discussions as

@moonlit_shadowsexpressed disappointment in a 20-hour GPT-2 training session that ended unproductively.

- Language-specific GPT Questions and Rule Sets:

@codocodersonqueried about creating a GPT in English and having its descriptions and starter questions shown in the user's language worldwide in #gpt-4-discussions.@jesuisjesinquired if their GPT occasionally missed following an outlined process for strategy-making conversations.

- Why Can't GPT Read my Files?: The #gpt-4-discussions channel saw a discussion by

@cerebrocortex, wondering why GPTs sometimes have issues reading .docx and .txt files. The issue was admittedly uncertain, with possible reasons being document size, token limits, or file corruption during /mnt timeouts.

OpenAI Channel Summaries

▷ #ai-discussions (30 messages🔥):

- Concerns about recent updates to ChatGPT:

-

@mysterious_guava_93336expressed dissatisfaction with recent updates to ChatGPT, stating that the AI now provides verbose, structured "PowerPoint" style responses instead of the concise, natural conversation style it used to have before the summer of 2023. They shared an example of the type of answer they prefer and asked for advice on how to instruct the AI to generate such responses. - Proposed Instructions for Desired Responses:

-

@eskcantasuggested refining the system's custom instructions to be more specific and positive, using guidance techniques similar to dog training. They proposed using a pattern for opinions and encouraging the AI to challenge the user creatively. An example of this approach can be seen on OpenAI's chat. - Disappointment with Proposed Changes:

- Despite changing the instructions,

@mysterious_guava_93336noticed no major improvement, stating that the AI still generated "PowerPoint-like" outputs. - Using ChatGPT on Discord:

-

@lemon77_psinquired about how to use ChatGPT on Discord, and@7877explained that ChatGPT must be used via OpenAI's website. - Interaction on the Discord Server:

-

@michael_6138_97508pointed out that the discord server is meant for interactions with real people, or equivalents, also mentioning the existence of a bot with specific knowledge of OpenAI API and documentation.

▷ #gpt-4-discussions (26 messages🔥):

- Domain Verification Woes: User

@anardudeasked how to verify his domain.@darthgustavprovided instructions for verifying the domain via DNS records in the GPT editor but@anardudereported the solution didn't work and asked about how to contact OpenAI Support, to which@darthgustavsuggested Googling "OpenAI Help and Support". - Long Hours of GPT-2 Training in Vain?: User

@moonlit_shadowsshared their disappointment in a 20-hour training session that seemed to end unproductively due to an attribute error during saving. - Inquiries about Creating Language-Specific GPTs: User

@codocodersonasked about publishing a GPT in English and inquired whether its descriptions and starter questions will be shown in the user's language worldwide. - Issues with GPT Obeying Rulesets:

@jesuisjessought to confirm expectations about their GPT occasionally missing processes, despite setting up rules to follow an outlined process for strategy-making conversations. - Concerns on GPT's Issues with Reading Document Files:

@cerebrocortexasked why GPTs sometimes have problems reading .Docx & .txt files. User@michael_6138_97508made an educated guess about document size and token limits being potential issues, and@darthgustavsuggested /mnt timeouts during updates causing file corruption. - Troubles with GPT Editor:

@.marcolpexpressed frustration over a persistent "error searching knowledge" problem, leading to an inability to even access the GPT editor, rendering further development of GPTs potentially useless until a fix is in place.@darthgustavoffered a potential workaround involving removing and reattaching knowledge.

▷ #prompt-engineering (1 messages):

- European Demographics Evolution Discussed: User

mysterious_guava_93336initiated a conversation withChatGPTabout the transition from the "European baby boom" of last century to today's "elderly Europe". - Fertility Contraction Timeline:

ChatGPTclarified that the first signs of a fertility contraction in Europe began emerging in the late 1960s and became significantly pronounced in the 1970s. - Contributing Factors to Fertility Decline: The reasons cited for this shift included economic changes, women's rights and workforce participation, increased access to contraception and family planning, and cultural shifts.

- Resulting Aging Population: By the late 20th and early 21st centuries, many European countries were experiencing birth rates below the replacement level of 2.1 children per woman, leading to an aging population.

▷ #api-discussions (1 messages):

- The Transition from 'Baby Boom' to 'Elderly Europe':

mysterious_guava_93336engaged ChatGPT in a discussion about when the first signs of a fertility contraction began in Europe after the post-World War II "baby boom". ChatGPT confirmed that this demographic transition started to appear in the late 1960s and became more pronounced in the 1970s, with various factors contributing, including economic changes, women's workforce participation, access to contraception, and cultural shifts.

Perplexity AI Discord Summary

- File Upload Features on Mobile: According to

@righthandofdoomand@giddz, it's currently possible to only upload images from the Perplexity mobile app on iOS. Support for Android is reported to be on the horizon. - Locating the Writing Mode Feature:

@ellestar_52679queried about the location of the Writing Mode feature, and@icelavamanhighlighted the navigation pathway: click "Focus", then "writing". - Detailed Discussion on Features and Promotions:

@debian3queried about file upload features and available promotions for the yearly plan.@icelavamanclarified that savings could be achieved through referral links instead of promotions and redirected to a FAQ link for more details on file upload. - Billing Issues with Perplexity:

@ahmed7089was upset about getting charged by Perplexity despite deleting their account. The situation was addressed by@mares1317, who provided a relevant link. - Perplexity Access Restrictions:

@byerk_enjoyer_sociology_enjoyervoiced concerns about Perplexity's inability to access posts on Pinterest, Instagram, or Tumblr. - In-depth Perplexity vs Pplx Model Comparison Analysis:

@dw_0901initiated discussions about differences among pplx online models (7B/70B) and Perplexity, questioning the differences in product design. - Contrasting Perplexity's Copilot and Normal version:

@promoweb.2024enquired about Perplexity's Copilot and normal version differences. Detailed information about Perplexity Copilot was shared by@icelavamanat this link. - Troubleshoot using $5 Pro Credits on pplx-api:

@blackwhitegreywas guided to the application process for Pro credits onpplx-apiby@mares1317, who also provided a step-by-step guide. - Clarity on Perplexity API User Friendliness:

@blackwhitegreyand@brknclock1215struggled with a perceived lack of user-friendliness in Perplexity API, primarily due to a lack of coding skills.icelavamanclarified that the API is primarily meant for developers. - Clarification on Pro Credits as an Extra Payment:

@blackwhitegreyinitially misconstrued Pro credits as an additional payment for API access.icelavamanclarified that these are actually bonuses provided to developers. - Optimism for Non-Technical Users: Despite the struggles,

@brknclock1215ended with an optimistic view that people, who aren't necessarily coders but understand technology, could benefit the most from its progression. - Help requested to make a thread public:

@me.lkadvised<@1018532617479532608>to make their thread public so others can view their content.<@1018532617479532608>followed this advice and made the thread publicly accessible. - Sharing Perplexity.AI Searches: Searches on how to use and how to draw were shared by

@soansengand@debian3respectively, spreading their knowledge with the community.

Perplexity AI Channel Summaries

▷ #general (23 messages🔥):

- File Upload Functionality In Mobile App:

@righthandofdoomasked about the ability to upload files from the mobile app.@giddzclarified that it's currently possible only for images and is only available on iOS, with Android support coming soon. - Finding the "writing mode" feature:

@ellestar_52679was trying to locate the writing mode feature.@icelavamanadvised them to click "Focus", then "writing". - Questions about Perplexity's functionality and promotions:

@debian3asked about the purpose of the file upload feature, the types of files that can be uploaded and if any promotions were available for the yearly plan.@icelavamanassured that while there was no promo, saving could be achieved through referral links. They also provided a link for the queries on file uploads. - Issues with Account Deletion and Billing:

@ahmed7089complained about being billed by Perplexity even after deleting their account.@mares1317provided a link in response, presumably containing more information. - Perplexity and Social Media Platforms:

@byerk_enjoyer_sociology_enjoyerraised a concern about Perplexity's inability to access Pinterest, Instagram, or Tumblr posts. - Perplexity and Pplx Model Comparison:

@dw_0901, a consultant, asked about the differences between pplx online models (7B/70B) and Perplexity, questioning if there were differences in the underlying product design. - Copilot vs Normal version of Perplexity:

@promoweb.2024enquired about the differences between using Perplexity's Copilot and the normal version.@icelavamanshared a link providing a detailed overview of Perplexity Copilot.

Links mentioned:

- What is Perplexity Copilot?: Explore Perplexity's blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

- How does File Upload work?: Explore Perplexity's blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

- What is Search Focus?: Explore Perplexity's blog for articles, announcements, product updates, and tips to optimize your experience. Stay informed and make the most of Perplexity.

▷ #sharing (4 messages):

- Sharing made public: User

@me.lkadvised<@1018532617479532608>to make their thread public so others can see it, to which<@1018532617479532608>responded that they've made their thread public now. - Perplexity.AI Searches: Users

@soansengand@debian3shared perplexity.ai searches: -@soanseng: shared a link on how to use -@debian3: shared a link on how to draw

▷ #pplx-api (12 messages🔥):

- How to use $5 Pro Credits: User

@blackwhitegreysought advice on using the Pro credits onpplx-api.@mares1317provided a link to Perplexity API's Getting Started Guide and highlighted the steps including providing payment info, purchasing credits, and generating an API key. - Mismatched API knowledge and needs:

@blackwhitegreyexpressed frustration due to their lack of coding skills and perceived the API as not user-friendly.icelavamanclarified that the API is primarily intended for developers and not for direct usage on websites. - Pro Credits understood as an extra payment:

@blackwhitegreyinitially assumed Pro users had to pay an extra $5 for the API access. However,icelavamanclarified that these are not extra payments but bonuses for developers. - Practicality of using the API for Non-Developers:

@brknclock1215echoed@blackwhitegrey's sentiments, expressing similar difficulties in implementing the API due to the lack coding skills. They also reasoned that trying to use advanced tools without the appropriate technical knowledge can be more time-consuming than beneficial. - Non-Technical users can still benefit:

@brknclock1215ended on an optimistic note, insinuating that people who are not necessarily coders, but who understand how to interact with technology, could potentially benefit the most from its progression.

Links mentioned:

Mistral Discord Summary

- Priming the LLM Response: In a conversation with

@bdambrosio,@i_am_domclarified that priming the Latent Language Model (LLM) for an appropriate response is indeed feasible, but the message set must end with a user message. This douses hopes for partially pre-writing responses with the official API as it would return an error. - On the Hunt for a Solid Chat Conversation Program:

@jb_5579posed a question to the community seeking recommendations on repositories that provide a robust chat conversation program, ideally optimized for the Mistral API, and featuring memory session alongside code-assist and code-completion. - The Case of Unknown Context Window Sizes:

@tonyaichamp's inquiry about the context window sizes for different versions of API models was met with uncertainty by@frosty04212, emphasizing on the need for experimental explorations to better understand and harness the system. - Mistral-tiny Proves Its Mettle:

@tonyaichampshared noteworthy success with themistral-tinymodel, leveraging it to extract content from a 16k token HTML page. Given its cost-effectiveness and speed, the user intends to apply it for similar tasks in the future. - Ai Agents Assemble:

@10anant10announced their project centered around building AI agents, to which@.tanuj.expressed interest and initiated direct communication. - Framework Endorsement: User

@joselololrecommended exploring the MLX framework. - Guardrailing Guide: A useful resource on guardrailing is linked by

@akshay_1with a URL:https://docs.mistral.ai/platform/guardrailing/ - Hardware Limitations for Fine-tuning:

@david78901raised the feasibility of fine-tuning the Mistral 7b on a single 3090. Tuning a LoRA or QLoRA could be managed, but full fine-tuning would likely need multiple 3090s or a single A100 withAxolotl. - LLMcord - The Versatile Discord Bot:

@jakobdylancshowcased LLMcord, an open-source Discord bot compatible with Mistral API and personal hardware-run Mistral models via LM Studio. The project available on GitHub also scored mention. - Superiority of Mistral Over OpenAI:

@joselololacknowledged Mistral's edge over OpenAI, recognizing it as faster, cheaper, and more effective for tasks. - Brains Baffled by Bicameral Mind: A mention of the Bicameral Mind theory stirred a debate, with

@cognitivetechrecommending the cornerstone book by Julian Jaynes and a skeptical@king_sleezeequating the theory to pseudoscience. - Mistral API Feature Suggestion:

@jakobdylancfavored an enhancement to the Mistral API to handle edge cases of an empty content list, much like the OpenAI API presently does. - Functionality Expansion on the Horizon: Function calling in Mistral is set to be a priority, as indicated by

@tom_lrd.

Mistral Channel Summaries

▷ #general (9 messages🔥):

- Prime the Response:

@bdambrosiodiscussed the technique of using a final assistant message to 'prime' the LLM for the appropriate response, and was seeking advice on its effectiveness when the server rejects a message set that ends with an assistant message.@i_am_domclarified that response priming can be done, but it needs to end with a user message. They also mentioned that it is not possible to partially pre-write the response itself with the official API, as it would return an error. - Favorite repo for chat conversation:

@jb_5579asked the community for their favorite repositories for a solid chat conversation program - specifically one that is optimized for the Mistral API and features session memory, with a focus on Code Assist and Code Completion. - Context Window Sizes:

@tonyaichampinquired about the context window sizes for different versions of API models, but@frosty04212responded that the sizes are not currently known. They urged for experimentation to understand and leverage the system better. - Quality of Mistral-tiny for extracting content:

@tonyaichampshared a positive experience using themistral-tinymodel for extracting content from a 16k token HTML page. Given the model's cost-effectiveness and speed,@tonyaichampintends to use it for similar tasks in the future.

▷ #models (2 messages):

- Building AI Agents: User

@10anant10announced that they are working on building AI agents. - Direct Communication Initiated: User

@.tanuj.responded to@10anant10's comment, stating their intention to shoot them a direct message.

▷ #deployment (1 messages):

joselolol.: Hello good sir, consider using the MLX framework!

▷ #ref-implem (1 messages):

akshay_1: https://docs.mistral.ai/platform/guardrailing/

▷ #finetuning (2 messages):

- Feasibility of fine-tuning on a single 3090: User

@david78901mentioned that a single 3090 can possibly handle tuning a LoRA or QLoRA on Mistral 7b, but full fine-tuning is only feasible with 3x3090s or a single A100 using Axolotl.

▷ #showcase (4 messages):

- Introducing LLMcord, a Versatile Discord Bot:

@jakobdylancpresented his open-source Discord bot, LLMcord, which supports both Mistral API and running Mistral models on personal hardware via LM Studio. Features include a sophisticated chat system, compatibility with OpenAI API, streamed responses, and concise code contained in a single Python file. One can check out the project on GitHub. - Mistral Powers AI Backend:

@joselololmentioned that they're using Mistral to support the backend of certain AI tasks. - Synthetic Data Generation and Model Evaluation:

@joselololalso shared that his system can generate synthetic data and provide evaluation for fine-tuned models, a potentially useful tool for developers. - Mistral vs OpenAI: In

@joselolol's experience, Mistral outpaces OpenAI in most tasks, proving faster, cheaper, and more effective.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs): Find, download, and experiment with local LLMs

- GitHub - jakobdylanc/llmcord: A Discord AI chat bot | Choose your LLM | GPT-4 Turbo with vision | Mixtral 8X7B | OpenAI API | Mistral API | LM Studio | Streamed responses | And more 🔥: A Discord AI chat bot | Choose your LLM | GPT-4 Turbo with vision | Mixtral 8X7B | OpenAI API | Mistral API | LM Studio | Streamed responses | And more 🔥 - GitHub - jakobdylanc/llmcord: A Discord A.....

▷ #random (5 messages):

- Bicameral Mind Theory Sparked Interest: User

@blueridanusappeared puzzled about something, which was soon clarified by@cognitivetechwith a link to the Wikipedia page that discussed The Origin of Consciousness in the Breakdown of the Bicameral Mind. It's a 1976 publication by author Julian Jaynes that presents a theory on the origin of human consciousness. - Book Recommendation and Contention: The same user

@cognitivetechthen highly recommended the book and highlighted its thought-provoking nature. In response,@king_sleezeexpressed skepticism, arguing that Jaynes’s theory is based on circumstantial evidence, equating it to pseudoscience. - Skepticism Over Understanding Consciousness: In another message,

@king_sleezepointed out the complexity of understanding human consciousness, drawing a comparison to the 'black box' nature of neural networks. They stated, "no human I know of could tell me where or how their thoughts formed", highlighting the mysterious nature of human thought formation.

Links mentioned:

The Origin of Consciousness in the Breakdown of the Bicameral Mind - Wikipedia

▷ #la-plateforme (3 messages):

- Discussion on desired functions:

@gbourdinmentioned that the community is eagerly waiting for a certain function possibility. - Mistral API enhancement suggestion:

@jakobdylancsuggested that the Mistral API should be able to handle the edge case of message.content as an empty list just like the OpenAI API does currently. - Function calling is on the horizon:

@tom_lrdmentioned that function calling has been announced as a priority for future development.

DiscoResearch Discord Summary

Only 1 channel had activity, so no need to summarize...

- German DPR Dataset from Wikipedia:

@philipmayshared his project for creating a German Dense Passage Retrieval (DPR) dataset based on the German Wikipedia. He posted the project on GitHub for public use.

- Debate on Contextual Length: A discussion emerged about the appropriate length of document context for embedding.

@sebastian.bodzaquestioned whether@philipmay'suse of a maximum token count of 270 in his project was too short, and compared it to Jina Embeddings and other models that were trained on as many as 512 tokens.@philipmayand@bjoernpargued that longer contexts may become distracting or be more difficult for a BERT model to encode.

- BAAI Training Data Suggestion:

@sebastian.bodzashared a link to BGE's training data hosted on HuggingFace suggesting it might provide additional insights.

- E5's Training on 512 Tokens:

@sebastian.bodzanoted that the E5 model was also trained on 512 tokens, further supporting the debate on the optimal contextual length. Detailed information about E5's training can be found here.

Links mentioned:

- Jina Embeddings 2: 8192-Token General-Purpose Text Embeddings for Long Documents: Text embedding models have emerged as powerful tools for transforming sentences into fixed-sized feature vectors that encapsulate semantic information. While these models are essential for tasks like ...

- Text Embeddings by Weakly-Supervised Contrastive Pre-training: This paper presents E5, a family of state-of-the-art text embeddings that transfer well to a wide range of tasks. The model is trained in a contrastive manner with weak supervision signals from our cu...

- GitHub - telekom/wikipedia-22-12-de-dpr: German dataset for DPR model training: German dataset for DPR model training. Contribute to telekom/wikipedia-22-12-de-dpr development by creating an account on GitHub.

- BAAI/bge-large-en-v1.5 · Hugging Face

- Benchmarking Evaluation of LLM Retrieval Augmented Generation: Learn about what retrieval approaches work and chunking strategy. Includes test scripts and examples to parameterize retrieval on your own docs, determine performance with LLM evaluations and provide ...

Latent Space Discord Summary

Only 1 channel had activity, so no need to summarize...

- Exploring the Role of AI as an Editor: User

@slonoquestioned if there are AI writing tools that perform as an editor, helping craft messages, reorganize structure, suggest or eliminate sentences.@coffeebean6887suggested that describing what you want to a custom GPT for a few minutes can achieve this. However, slono pointed out that it's not an ideal solution due to its cumbersome user interface. - Struggles with AI-Assisted Editing: Responding to the above thread,

@swizecshared their experience of implementing such a feature in swiz-cms. They remarked that the biggest challenge was effectively communicating what the AI should look for, implying the need for improved guidance or interface for content editing AI tools. - Insights into Large Language Models (LLMs): User

@swyxioshared a link to a LessWrong post that delves into the implications and potential safety benefits of using LLMs in creating AGIs, and a related Arxiv paper that proposes a post-pretraining method for LLMs to improve their knowledge without catastrophic forgetting. - Appreciation for AI Resources: User

@thenoahheinthanked@swyxiofor providing resources, saying it had given him reading material for the week. The resources link to a Twitter post from user Eugene Yan.

Links mentioned:

- LLaMA Pro: Progressive LLaMA with Block Expansion: Humans generally acquire new skills without compromising the old; however, the opposite holds for Large Language Models (LLMs), e.g., from LLaMA to CodeLLaMA. To this end, we propose a new post-pretra...

- Mat’s Blog - Transformers From Scratch

- An explanation for every token: using an LLM to sample another LLM — LessWrong: Introduction Much has been written about the implications and potential safety benefits of building an AGI based on one or more Large Language Models…

LAION Discord Summary

- The Mysterious Case of LAION's Linkrot: @stellaathena expressed interest in any studies examining the rate of decay of a dataset like LAION due to linkrot.

- A Puzzling Inquiry on Stable Diffusion 1.6: @pseudoterminalx asked about Stable Diffusion 1.6, and @nodja speculated it might combine 1.x architecture with improvements from sdxl and more.

- Delving Deep into Aspect Ratio Bucketed SD 1.5: @thejonasbrothers shared that Aspect Ratio Bucketed SD 1.5 supports up to 1024x1024 pixels.

- CogVLM Steals the Spotlight: @SegmentationFault gave a shoutout to CogVLM stating it's highly impressive and undervalued in the AI community.

- A Dreamy Solution for Overfitting: @progamergov posted a research paper arguing that dreams could potentially act as an anti-overfitting mechanism in the human brain by introducing random noise to overfitted concepts.

- Sleep-deprived Memories Need More Research: In response to the dream theory, @progamergov expressed wish for studies investigating the effects of sleep deprivation on semantic and episodic memory formations as supportive evidence.

LAION Channel Summaries

▷ #general (8 messages🔥):

- Curiosity about Linkrot in LAION Dataset:

@stellaathenainquires if anyone has conducted or seen any study pertaining to the rate of decay of a dataset like LAION due to linkrot. - Questions about Stable Diffusion 1.6:

@pseudoterminalxasks for information about Stable Diffusion 1.6.@nodjahypothesizes that it might be the 1.x architecture with some improvements from sdxl and some extras. - Insights into Aspect Ratio Bucketed SD 1.5:

@thejonasbrothersprovides insights about Aspect Ratio Bucketed SD 1.5, they stated that it supports up to 1024x1024 pixels. - Appreciation towards CogVLM:

@SegmentationFaultexpresses their appreciation for CogVLM, stating that they believe it's highly impressive and undervalued in the AI community.

▷ #research (2 messages):

- Dreams as Anti-overfitting Mechanisms:

@progamergovshared a research paper suggesting that dreaming might play a crucial role in preventing overfitting in the human brain. According to the paper, dreams introduce random noise to overfitted concepts, thereby aiding in avoiding overfitting. - Call for Testing Effects of Sleep Deprivation on Memory:

@progamergovexpressed a wish that the research extended to testing the impacts of sleep deprivation on semantic and episodic memory formations, asserting such tests could provide supporting evidence for the aforementioned hypothesis.