[AI for Engineers Beta] Day 2: RAG — Chat with your Docs

Day 2: Chat with your Docs

Hello Beta testers! welcome to the second of 7 planned lessons in the AI for Engineers email course! We are sending this out for the first time for your feedback - please look out for "BETA TESTERS" callouts!

For feedback, you can use the ABCD framework:

- What’s Amazing?

- What’s Boring?

- What’s Confusing?

- What Didn’t you believe?

And more generally let us know where you got stuck, where we lost you, and where you want more detail! Can reply to Noah at nheindev@gmail.com and swyx at swyx@smol.ai and we'd love to improve this. Thanks for testing it out and we greatly look forward to your feedback!

Here’s a video if you’d like the project explained to you in a video format!

(The video is slightly out of date with the OpenAI syntax changing with their v1 of the python sdk, the same principles apply, but you can't copy the video blindly anymore unfortunately. Will be shipping an update soon)

This project is gonna peel back a few layers of the AI stack to give you an idea of how things are working under the hood. To showcase this, we are going to add semantic search on a body of material to our telegram bot using LangChain.

For this, that means we need a data set to ask questions on. Many other projects out there will show you how to get your own data. I would recommend the openAI web scraper as a good starting point.

But really, that is a fairly trivial example, and the openAI site is one of the few sites that this crawler will work on, as any page that requires JS will not be picked up by this scraper. Not even mentioning the anti-botting capabilities that many larger content aggregation sites employ. I’m not trying to teach you web scraping. I’ll tell you that I tweaked that web scraper and pulled a bunch of information off of the Mozilla documentation, and that is the data we’ll use going forward. You can find a link to the raw text files here.

The scrape is certainly not perfect, and the information contained is NOWHERE NEAR the entirety of MDN docs. But it should give you a fairly good sense of how directly you can retrieve information from a body of text that’s been properly embedded.

I would start by importing that text folder into your Replit project where the telegram bot is currently hosted.

You can do that by:

- Cloning the Github repo

- Using Replit’s Folder upload feature to add the entire

textfolder into your telegram repl.

Next, we will create some new files and folders to hold the embedding and chunking code that we will need to turn all of our text files into a vector that the model can understand and query. Don’t worry if you don’t understand what embedding, chunking, or vectors are. We’ll learn as we go.

Building Your Embeddings

We need to change up the structure a bit in replit to set ourselves up for success. Organized code is good code. Follow the next bullets in order

- Create Two folders called

-

embedding-processed - Create a file in

embeddingnamedembed.py

End result should look like this

The first thing we want to do is begin filling up the embed.py file with code. We’re going to take this huge folder with a bunch of text files and convert it into a CSV. That is because CSVs are a great format for storing embeds and Pandas data frames — both of which we’ll be using.

The first thing is to clean our data a bit. There are tons of excess spaces and newlines which is going to make our embedding process harder than it needs to be. In addition to the function, we will also import a few packages and declare some constants. Add the code below to your embed.py file

import pandas as pd

import os

import tiktoken

from openai import OpenAI

from langchain.text_splitter import RecursiveCharacterTextSplitter

openai = OpenAI(api_key=os.environ['OPENAI_API_KEY'])

DOMAIN = "developer.mozilla.org"

def remove_newlines(series):

series = series.str.replace('\n', ' ')

series = series.str.replace('\\n', ' ')

series = series.str.replace(' ', ' ')

series = series.str.replace(' ', ' ')

return series

With this function written, we’re going to write a loop to convert all of our text into a CSV. We’ll open each text file, remove as much fluff as we can, push it into a pandas data frame, and then use pandas’ built-in CSV converter to turn our data frame into a CSV file.

# Create a list to store the text files

texts=[]

# Get all the text files in the text directory

for file in os.listdir("text/" + DOMAIN + "/"):

# Open the file and read the text

with open("text/" + DOMAIN + "/" + file, "r", encoding="UTF-8") as f:

text = f.read()

# we replace the last 4 characters to get rid of .txt, and replace _ with / to generate the URLs we scraped

filename = file[:-4].replace('_', '/')

"""

There are a lot of contributor.txt files that got included in the scrape, this weeds them out. There are also a lot of auth required urls that have been scraped to weed out as well

"""

if filename.endswith(".txt") or 'users/fxa/login' in filename:

continue

# then we replace underscores with / to get the actual links so we can cite contributions

texts.append(

(filename, text))

# Create a dataframe from the list of texts

df = pd.DataFrame(texts, columns=['fname', 'text'])

# Set the text column to be the raw text with the newlines removed

df['text'] = df.fname + ". " + remove_newlines(df.text)

df.to_csv('processed/scraped.csv')

Next, we will need to tokenize all of the text in our CSV.

Understanding Tokens

Tokens are how the LLMs interpret your words. It’s also how you get charged for your API calls. Everything gets broken down into tokens. As a GENERAL rule of thumb, one token is usually 4 characters of English language text.

- You can play with OpenAI’s tokenizer playground to get a sense of how many tokens different words will generate

- Type in some regular words

- But also type in “rawdownloadcloneembedreportprint” (on gpt3 [legacy])

- only 1 token. Weird right?

- You can see there is some odd behavior in how the model interprets text. This post is quite dense but sheds a bit of light on the behavior. The blogpost is firmly in LLM history territory, so while it isn't as actionable as the next resource, you should absolutely read it for the culture.

- For something a bit more digestable, you can watch Karpathy give a specific explanation on why this happened, using 'SolidGoldMagickarp' (a similar peculiar token) as an example.

- In addition to Andrej's video, there's also the tokenizer playground he made, which is a bit more feature-full than the OpenAI playground.

A lot of the above were things that have since been patched out as the LLM community has learned from these and improved upon them. But I think it's important to see where these edge cases happened, and how they were sanded down as time marched on.

For a comprehensive dive you need to absolutely watch Andrej Karpathy's 2 hour video on building the GPT Tokenizer.

Bridging Tokens and Raw Text

We will turn all of the raw text we have in our data frame and turn it into tokens. Then we will turn those tokens into embeddings. But there’s a bit of a catch here. The embedding API has a maximum upload of tokens per call. So you can’t just upload the entirety of Wikipedia as a single large block of text and ask the embedding model to parse it.

This is where the term chunking comes from. There are a few reasons we’d want to chunk a larger piece of text:

- To generate an embedding of a larger piece of text that is too large to fit into the context.

- To add attribution of sources (a specific paragraph within a page)

- Because embedding gives the “average” value of all the words and positions of words in the text. Embedding 1 word gets a 768 dimension vector. Embedding 1 book also gets a 758 dimension vector.

To understand why this is a bit of a tricky problem, we need to understand embeddings a bit more.

From OpenAI’s website

An embedding is a vector (list) of floating point numbers. The distance between two vectors measures their relatedness. Small distances suggest high relatedness, and large distances suggest low relatedness.

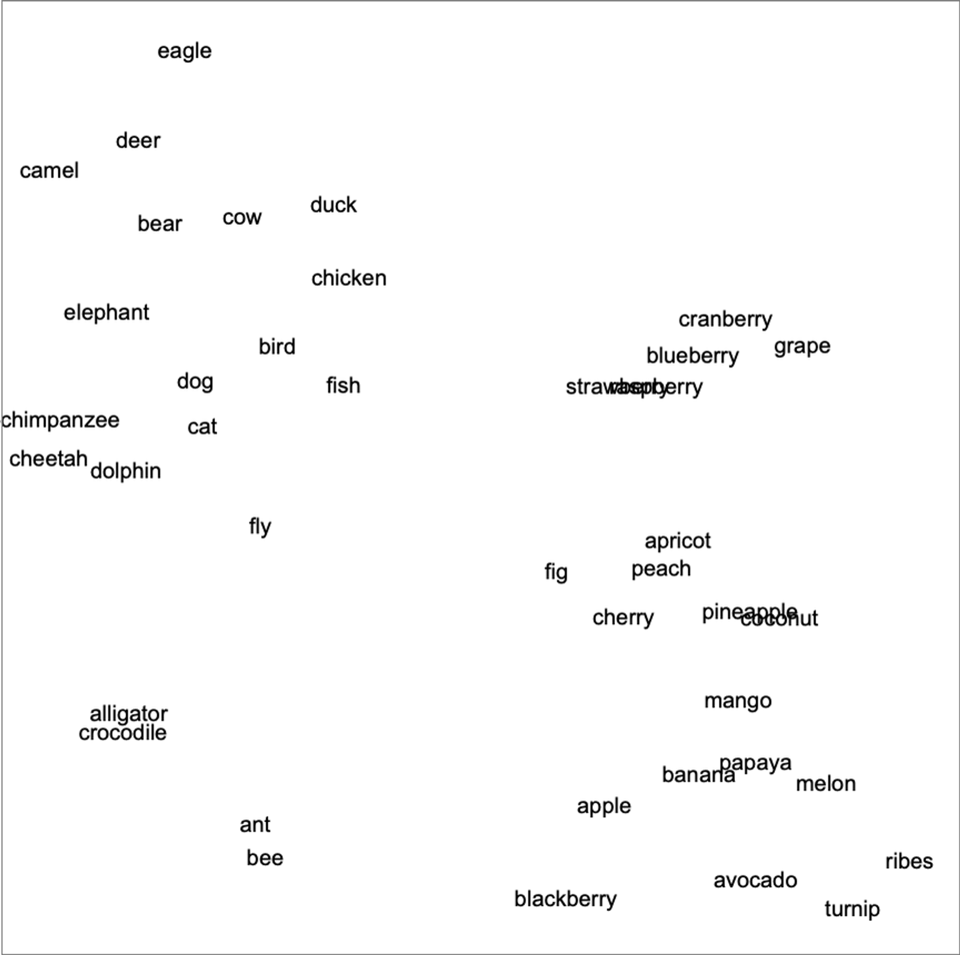

That’s a pretty good practical definition. Embedding models do their best to make sure that semantics are accurately represented by the vectors they generate. So you would hope that the words ‘salmon’ and ‘fish’ are close (but still different) to each other when they’re embedded. Illustrated below are words grouped by semantic closeness.

Picture from https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/#the-concept-of-embeddings

That means we’re generating blocks of numbers that are correlated to their semantic meaning. Chunking is important to retain the appropriate context of any given block of text. If we chunked a large piece of text at the wrong place, you could imagine getting wonky outputs, as you aren’t giving the entire picture to the embedding model.

Once you internalize this, things will begin to make a bit more sense on how we can accomplish something like a Q&A style app. We will generate embeddings from our data source (in this case the MDN docs), and then we can have a user ask a question about the data set.

We will

- Take in the user's query,

- Create an embedding of it

- Compare it to the embeddings we have already generated.

- Whichever result is numerically closest to the input, we will pass that to the text model to generate an answer in English text.

In order to go through that flow, we will need to tokenize the raw text in the CSV that we are generating above.

# Load the cl100k_base tokenizer which is designed to work with the ada-002 model

tokenizer = tiktoken.get_encoding("cl100k_base")

df = pd.read_csv('processed/scraped.csv', index_col=0)

df.columns = ['title', 'text']

# Tokenize the text and save the number of tokens to a new column

df['n_tokens'] = df.text.apply(lambda x: len(tokenizer.encode(x)))

If you were to look at the number of tokens on each page, you would probably see that most of the pages don’t need to be chunked. The embedding model we will be using, text-embedding-ada-002, can embed up to 8191 input tokens, which ends up being sufficient for most pages. There are other models available for different use cases and prices on OpenAI's page here.

But for the ones that do need to be chunked, we need to account for them in our code.

chunk_size = 1000 # Max number of tokens

text_splitter = RecursiveCharacterTextSplitter(

# This could be replaced with a token counting function if needed

length_function = len,

chunk_size = chunk_size,

chunk_overlap = 0, # No overlap between chunks

add_start_index = False, # We don't need start index in this case

)

shortened = []

for row in df.iterrows():

# If the text is None, go to the next row

if row[1]['text'] is None:

continue

# If the number of tokens is greater than the max number of tokens, split the text into chunks

if row[1]['n_tokens'] > chunk_size:

# Split the text using LangChain's text splitter

chunks = text_splitter.create_documents([row[1]['text']])

# Append the content of each chunk to the 'shortened' list

for chunk in chunks:

shortened.append(chunk.page_content)

# Otherwise, add the text to the list of shortened texts

else:

shortened.append(row[1]['text'])

df = pd.DataFrame(shortened, columns=['text'])

df['n_tokens'] = df.text.apply(lambda x: len(tokenizer.encode(x)))

This chunking process is greatly aided by the usage of LangChain. We only make use of the chunking functionality through the RecursiveCharacterTextSplitter, but there are many different uses for LangChain. If you’d like to learn more about it, there’s a crash course here.

With chunking and tokenizing accounted for, we just need to generate the embeds from all of our prepared data.

df['embeddings'] = df.text.apply(lambda x: openai.embeddings.create(

input=x, model='text-embedding-ada-002').data[0].embedding)

df.to_csv('processed/embeddings.csv')

Your entire file should look like this.

executing this file should take several minutes, but once it’s done, you should have both your raw-text and embeds generated and stored in a CSV file to use.

before running the file, we need to import the packages. Run the command:

before running the file, we need to import the packages. Run the command:

poetry add pandas tiktoken langchain

To run this file on replit, open up the shell and run the command:

python embedding/embed.py

⚠️ Some students have reported some troubles with some clashing import dependencies. If you face similar issues, you should be able to delete your

poetry.lockandpyproject.tomlfiles and replace them with the ones here and run again successfully.

This will take ~5 minutes to run. As long as you haven’t gotten an error message, you should trust that everything is operating correctly.

Using Your Embeddings

With all of our embeds generated, we can now move on to taking user inputs, tokenizing them, comparing the question to the embeds we already have, and using an LLM to come up with a response given the results from the query.

Create a file at the root level named questions.py

The first thing we will need to do is load our embeddings in a way that will be easy to manipulate and use, as a CSV full of raw vectors from ada can be over 1000-D. Numpy will flatten the dimension to 1-D, which makes it much easier for us to manipulate and perform operations.

We will also create a distances_from_embeddings function. This is taken from a previous library OpenAI had available, but stripped out, so we're just using the 1 function. given two vectors, it uses the scipy library to calculate the cosine distance between two vectors. If you don't understand it, don't worry, it's a bit of a lower level library, and we won't need to tweak this code at all.

# questions.py

import numpy as np

import pandas as pd

from openai import OpenAI

from typing import List

from scipy import spatial

import os

def distances_from_embeddings(

query_embedding: List[float],

embeddings: List[List[float]],

distance_metric="cosine",

) -> List[List]:

"""Return the distances between a query embedding and a list of embeddings."""

distance_metrics = {

"cosine": spatial.distance.cosine,

"L1": spatial.distance.cityblock,

"L2": spatial.distance.euclidean,

"Linf": spatial.distance.chebyshev,

}

distances = [

distance_metrics[distance_metric](query_embedding, embedding)

for embedding in embeddings

]

return distances

openai = OpenAI(api_key=os.environ['OPENAI_API_KEY'])

df = pd.read_csv('processed/embeddings.csv', index_col=0)

df['embeddings'] = df['embeddings'].apply(eval).apply(np.array)

Next, we will make a function that

- Takes in a question

- Generates embeddings from the question

- Compares the vectors from the question_embedding to the database_embedding

- Returns the vector that is closest in distance to the question

def create_context(question, df, max_len=1800):

"""

Create a context for a question by finding the most similar context from the dataframe

"""

# Get the embeddings for the question

q_embeddings = openai.embeddings.create(

input=question, model='text-embedding-ada-002').data[0].embedding

# Get the distances from the embeddings

df['distances'] = distances_from_embeddings(q_embeddings,

df['embeddings'].values,

distance_metric='cosine')

returns = []

cur_len = 0

# Sort by distance and add the text to the context until the context is too long

for i, row in df.sort_values('distances', ascending=True).iterrows():

# Add the length of the text to the current length

cur_len += row['n_tokens'] + 4

# If the context is too long, break

if cur_len > max_len:

break

# Else add it to the text that is being returned

returns.append(row["text"])

# Return the context

return "\n\n###\n\n".join(returns)

Since we broke up all of our pages into multiple sections of smaller sets of tokens, the step of going through in ascending order and adding text until we’ve reached the max_len is important to make sure we answer the question entirely. You can adjust the max_len to change how long or short your answer is.

The next step is creating a function that will take in our context, and use it to generate a response from a large language model.

def answer_question(df,

model="gpt-3.5-turbo-1106",

question="What is the meaning of life?",

max_len=1800,

debug=False,

max_tokens=150,

stop_sequence=None):

"""

Answer a question based on the most similar context from the dataframe texts

"""

context = create_context(

question,

df,

max_len=max_len,

)

# If debug, print the raw model response

if debug:

print("Context:\n" + context)

print("\n\n")

try:

# Create a completions using the question and context

response = openai.chat.completions.create(

model=model,

messages=[{

"role":

"user",

"content":

f"Answer the question based on the context below, and if the question can't be answered based on the context, say \"I don't know.\" Try to site sources to the links in the context when possible.\n\nContext: {context}\n\n---\n\nQuestion: {question}\nSource:\nAnswer:",

}],

temperature=0,

max_tokens=max_tokens,

top_p=1,

frequency_penalty=0,

presence_penalty=0,

stop=stop_sequence,

)

return response.choices[0].message.content

except Exception as e:

print(e)

return ""

Now we can call this answer_question function, pass in the pandas’ data frame along with our question, and get an answer back.

Now before we pipe this into our main.py file, install the dependencies we're using in this file.

poetry add numpy scipy

With the core functionality made, we now have to connect it back to the telegram bot.

Open up main.py and perform the following steps:

- Load in the dataframe, and convert the embeds into a numpy array.

import pandas as pd

import numpy as np

from questions import answer_question

df = pd.read_csv('processed/embeddings.csv', index_col=0)

df['embeddings'] = df['embeddings'].apply(eval).apply(np.array)

- Create a new function called Mozilla that will let our bot call the

answer_questionfunction we made earlier

async def mozilla(update: Update, context: ContextTypes.DEFAULT_TYPE):

answer = answer_question(df, question=update.message.text, debug=True)

await context.bot.send_message(chat_id=update.effective_chat.id, text=answer)

Here you can see we pass the question to the AI through the update.message.text and then once we get an answer back, we send it to the user through the context.bot.send_message function as we did for the previous bot functions.

Optionally you can set the debug=True portion as we did in this example. This will log out (to the Replit console) all of the context that the embeds used to answer the user’s question.

- Create a new command handler, and then connect that handler to the bot

mozilla_handler = CommandHandler('mozilla', mozilla)

application.add_handler(mozilla_handler)

If you get lost, you can use this gist for reference.

Now if you hit run up top, you can now ask the telegram bot questions about Mozilla by using the /mozilla command and then asking a question

Conclusion

Congrats! You’ve successfully generated your own embeds, and created a way to ask questions about it. You could use this to generate summaries, or ask questions on just about any documents now. This is the process that a lot of companies that have “GPT Powered Docs” are doing under the hood.

So great, you can ask basic questions about CSS - but did you know that GPT is GREAT at writing and fixing code? (because its trained on code!)

In day 3 we’ll be exploring Code Generation with GPT. I hope to see you there, and as always — if you have any feedback, we’re eager to hear!

Bonus

Here are some other jumping-off points where you could progress this on your own

- Try to make it source the link that it got the information from when answering

- Try chunking at different lengths to see if you get better quality answers

- Try a different data set and doing a bit of cleaning on it before generating embeds

- Learn about HyDE