I (Will Have) Told You So.

Every so often, I get into a conversation with someone about A.I.

This is a terrible idea, and I need to stop doing it.

Unfortunately, the subject is in the news a lot and I feel like I’m going to have to keep talking to people about it, unless I can fulfil my lifelong ambition of not talking to anyone, ever. In an attempt to get ahead of this conversation, I’ve decided to set down all my thoughts about Artificial Intelligence and where we’re going and why it’s bad.

By “it”, I mean both that A.I. is bad and that where we’re going is also bad. That’s in ascending order, for what it’s worth. A.I. is shitty, but where it’s leading us is downright catastrophic.

I’m going to attempt to walk people through some stuff and hopefully then, whenever anyone asks my thoughts, I can point to this as a primer and as a full explanation. Strap in.

There Is No “I” in “A.I.”

The first thing to be aware of with the current crop of Artificial Intelligence is that it’s not intelligent in any meaningful way. It’s actually just a predictive text engine, like the one that suggests the next word when you type on your phone. Obviously, it’s far more complex than that, but it’s the same basic principle. ChatGPT, the flagship A.I. with the highest brand recognition, is what’s called a Large Language Model (LLM), which means that the people at OpenAI (the company behind ChatGPT) have fed it as much human writing as they could get their hands on until it started to sound human.

This is, it should be pointed out, a VAST amount of data. There are already multiple lawsuits underway because it’s become very clear that in order to train their A.I., companies employing Large Language Models have stolen copyrighted material from basically everywhere. When Facebook’s parent company Meta decided to build their LLM, they pirated every book they could get their hands on, and you can actually check here to see if anything you’ve ever written was stolen and used to train an A.I. Remember: Downloading things for free is bad and morally wrong, unless you’re a multi-billion-dollar company who can actually afford to buy books in the first place. Think of it like telling the poor and hungry that it’s wrong to steal a loaf of bread unless you’re OmniBake, the world’s largest bread manufacturer.

You don’t have to have written a book to have been ripped off by billion-dollar A.I. companies, by the way. Everything you’ve ever posted to social media, every status update and comment, has been mined in order to teach LLMs what human beings sound like, or more accurately how they write. This is one of those situations where you start to realise why data has become such a hot-button issue, and why it’s such a valuable commodity.

Once the Large Language Model A.I.s have absorbed these staggering volumes of data, they start getting good at imitating human communication. This is what I mean when I say “it’s just predictive text.” The model can create a convincing-sounding reply to a prompt because it’s essentially read every reply to every prompt ever written, over and over, and as such it has learned to respond with statistically likely output.

Crucially, this doesn’t mean that the A.I. is actually smart, or that it is thinking. If you type “the quick brown fox jumps over the lazy ———”, an A.I. should predict that the next word is “dog”, but at no point does it actually understand what a dog is. Or a fox. Or quickness, or jumping. It’s at this point that we enter what might be called Blade Runner: Stupid Edition.
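For anyone who wants to see the “predictive text” idea in miniature, here is a rough sketch. It is not how ChatGPT works internally: a real LLM is a neural network trained on a staggering amount of text, whereas this is a simple word-pair counter over a made-up three-sentence corpus. The corpus and the function names (`train_bigrams`, `predict_next`) are invented for illustration. The basic principle is the same, though: pick the statistically likely next word, with no idea what any of the words mean.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for this sketch.
# A real LLM trains on vastly more text than this.
CORPUS = (
    "the quick brown fox jumps over the lazy dog . "
    "the lazy dog sleeps . "
    "the quick brown fox runs ."
)


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequently seen next word, or None if the word is unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


model = train_bigrams(CORPUS)
print(predict_next(model, "lazy"))  # prints "dog"
```

The counter gets “dog” right purely because “dog” followed “lazy” more often than anything else did. Nothing in it knows what a dog is.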

Do Androids Hallucinate Electric Sheep?

Alan Turing, one of the biggest names in early computing, famously devised the Turing test. In essence, a computer can pass the Turing test if you have a conversation with it in writing and you can’t tell whether or not it’s a real person.

Computers actually hit this point a while back. If you interact with a customer service bot on a website and you don’t know better, there’s a decent chance you could be fooled for a while into thinking that there’s a real person there. That’s how common technology that beats the Turing test has become.

The next step beyond the Turing test is referred to as the Chinese Room problem. Imagine that you’re in a room with nothing but a Chinese-English dictionary. Also, imagine that Chinese is one language and not several, because this is a thought experiment and stop being a pedant. Someone puts a message in Chinese under the door, and you use the translation book to decipher it, and then write a response, which you post back under the door.

The person outside the room will now assume that you can understand and write in Chinese, but in fact you can’t. This is where A.I. currently sits. It can perform tasks in a way that makes it look like there’s an intelligence and a reasoning process at work, but there actually isn’t. It’s just performing a rote task, albeit a complex one, without ever actually understanding what it’s doing. And this causes two major problems…

Spanish Thai.

The first problem is related to the Chinese Room, and has been called the Thai Library problem.

Imagine that, instead of a room with a lone book, you’re now locked inside the central library in Bangkok. You have access to vast numbers of books, but someone has removed every book that has any English writing in it, or any writing containing the Latin alphabet, which is the one you’re looking at now. All pictures have also been removed from the books. All you have is an essentially limitless amount of writing, in Thai script. Here are the 44 consonants to get you started:

Less popular than the 47 Ronin, 500 Days of Summer or 99 Red Balloons.

The question is: Can you ever learn Thai?

There really doesn’t seem to be any way in which you could. Without any sort of reference point, either visual or linguistic, you’ll never be able to get a foothold. I thought about this a lot when it was first presented to me, and while I had some ideas, I never figured out a solution. The best I can do is that, based on a quick Google, Thai script commonly uses Arabic numerals. So maybe you could look inside the start of various books, and teach yourself the Thai word for “Copyright.”

But that’s still not going to get you anywhere.

If, however, you lived forever - and you’re already teleporting around the globe into a series of unlikely scenarios, so why not? - you might eventually start to recognise patterns in Thai script. You might start to learn that X squiggle follows Y squiggle. Over enough time, you might even develop the ability to write entire sentences in Thai script. You just won’t ever know what they mean.

This is what Large Language Models do. Again: It doesn’t know what a fox or a dog is, it just knows that the quick brown fox jumps over the lazy dog. An LLM is carrying out an enormously complex feat of probability, not thought. It’s why A.I. doesn’t know when it makes a mistake; because it doesn’t actually “know” anything. It only knows the probabilistically correct order of meaningless squiggles.

This means that LLMs aren’t ever really going to be able to think, because they can never establish a start point. Anyone saying that an LLM is going to become sentient and intelligent is like a man who breeds horses promising to make a car - you can’t get one from the other. You can breed all the horses you want, but you’re just going to get more horses, and none of them is going to spontaneously grow an engine and wheels.

To stretch this analogy beyond its limits, you can’t even breed that many horses. Existing programs like ChatGPT have essentially used up all of the available data. Again: They’ve been trained on everything that companies could get their hands on, including by theft. It’s already been speculated that to train current A.I. requires essentially everything that human beings have ever written. The proposed solution is dumb enough that it should be grounds for abandoning the entire idea of A.I. forever.

Without any more data to draw from, A.I. companies are beginning to have LLMs write more data for other LLMs to train on. We’re out of books to train our A.I. with, so we’re having A.I. write books in order to train itself.

This has led to the phrase “Habsburg A.I.”, after the Habsburg dynasty - a Spanish royal family that famously practised inbreeding for multiple generations. After decades of intermarrying amongst family members, the final result was Charles (Carlos) II, who was reported to have numerous very obvious physical and mental disabilities as a result of his DNA presumably being wreath-shaped at this point.

As a fun fact, geneticists rate inbreeding on what’s called an “inbreeding coefficient” - basically a score of how inbred you are - and whilst Charles II was the most famous and visible result, he wasn’t the Habsburg with the highest inbreeding coefficient. That horrifying title belonged to his niece (technically his niece in two different directions, but explaining this requires a chart), Maria Antonia, who apparently showed no outward signs of genetic problems but who actually would have been less inbred if her parents had simply been brother and sister, OR if they’d been parent-and-child. Yikes.

Here’s a puppy so you can think about something else.

Training A.I. off of A.I.-generated data is a terrible idea for the same reason that recycling the same set of genes over and over is a terrible idea: There will always be minor initial mistakes, and if repeated, these mistakes compound over time. As evidence, an A.I. image generator was asked to copy a picture of Dwayne “The Rock” Johnson a hundred times. There’s a full clipshow available here, in which Dwayne goes from his normal face, through some fairly racist territory, until he enters surrealism. After a hundred iterations, this is what A.I. was painting:

This is the future A.I. wants.

Hopefully all of this is evidence that A.I. is not going to become “smart” any time soon. When people say it’s going to gain sentience and kill us all, they’re either naive or else - and bear in mind that tech CEOs have talked about this a lot - they’re just trying to generate headlines in order to stay in the spotlight. And why do they need to do that? Well…

They’re Forever Blowing Bubbles.

OpenAI, the company behind ChatGPT, isn’t profitable.

At all. Not in any way. They are pissing money up the wall at a rate that would be horrifying if the human brain were able to comprehend the numbers involved. They are so far keeping their ship afloat with promises of what A.I. might one day be able to do, but as outlined above: It’s never going to be able to do those things. It’s never going to become smart, it’s never going to think. It’s a mechanical Turk for the 21st century.

A.I. would already be seen as an obvious failure, were it not for the fact that people are desperate to see another invention on the scale of the internet. Over the last twenty years or so, the internet has revolutionised every corner of life, and some people wish more than anything that they could get in on the ground floor when something like that happens again. A while back everything had to be on a blockchain. Then there was a fad for NFTs. Both things were meant to be the future, just like how ten years ago, the Financial Times said that 3D printing was going to be “bigger than the internet.”

A.I. is just like all of these examples, in that we’re promised it’s going to change everything!

It won’t. The internet was the big epochal shift for the modern period. There won’t be another one along for a while, so get used to it.

Unfortunately, people who don’t really understand how A.I. works seem to be thinking no further than “new technology bring money soon!” and so they’re betting the house on all of the spurious promises of A.I. coming true. In fact, worse than that, they’re probably betting YOUR house.

By some estimates, the current A.I. bubble is four times bigger than the mortgage bubble that burst in 2008 and caused a global recession. If that isn’t scary enough, A.I. companies are basically the only thing still propping up the U.S. economy.

How are they worth so much? It’s simple! Companies like Nvidia (a microchip company) and Microsoft (who, amongst other things, host cloud storage) are investing huge sums of money into companies like OpenAI. This means OpenAI is making a ton of money! But because it’s still growing, OpenAI uses that money to re-invest in things like microchips, and cloud storage. Which they buy from Nvidia and Microsoft! Which means those companies are making a ton of money! Which they then invest in OpenAI…

You might recognise this better as the old “Naked Gun” gag where two people try to bribe information out of each other by passing the same $20 back and forth. Nobody is actually generating anything, but everyone is declaring the same money as profit and pushing their stocks higher. This can’t last. Not only is it unsustainable, but when the whole process collapses, it’s going to take an enormous amount of the global economy down with it.

It’s The Economy (AND) Stupid.

Maybe you’re not worried about a global recession. Maybe, like me, you’re a millennial on your third “once in a lifetime” recession and as poor as ever. Maybe you feel like economic collapse wouldn’t be any different from your humdrum, everyday levels of poverty.

You’d be wrong, but even if you weren’t, don’t worry. A.I. is still wrecking a lot of other things on the road to financial disaster.

First there’s the environment. A.I. is sucking up an ungodly amount of power. As mentioned, ChatGPT isn’t profitable - it loses money every single time someone asks it a question - but it also requires enormous data centres in order to run, which means more burning of fossil fuels to generate electricity, and also a lot of heat generated. This isn’t good for the computers that make up ChatGPT, so cooling them uses huge volumes of water. About half a litre for every hundred words generated, according to one estimate.

Half a litre per hundred words doesn’t seem that bad until you realise people are currently using it to print entire nonsensical books and sell them for a pittance to gullible readers, day in and day out. The glut of fake books has become such a problem that Amazon has limited people to self-publishing three books PER DAY.

This is obviously tanking the market for paid human writers and (again) uses half a litre of water that could irrigate crops or hydrate drought-struck human beings, every hundred words. A short book is maybe fifty thousand words, for reference, so every talentless con artist posing as an author can use up 750 litres a day, every day, minimum. And that’s just taking fake books as one example. A.I. images take more - at least five litres per image. If trends continue, by 2027 A.I. water usage is predicted to be greater than the water usage of Denmark.
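If you want to check my maths on the 750 litres, here is the sum written out as a quick sketch. The numbers are just the estimates quoted above (half a litre per hundred words, a fifty-thousand-word book, three books a day), not measurements, and the names are made up for the example.

```python
# Figures quoted in the text. These are estimates, not measurements.
LITRES_PER_100_WORDS = 0.5     # cooling water per hundred generated words
WORDS_PER_SHORT_BOOK = 50_000  # rough length of a short book
BOOKS_PER_DAY_LIMIT = 3        # the self-publishing cap mentioned above


def litres_per_book(words=WORDS_PER_SHORT_BOOK):
    """Water used to generate one book of the given length."""
    return words / 100 * LITRES_PER_100_WORDS


def litres_per_day(books=BOOKS_PER_DAY_LIMIT):
    """Water used by one person publishing the daily maximum."""
    return books * litres_per_book()


print(litres_per_book())  # 250.0
print(litres_per_day())   # 750.0
```

So that’s 250 litres per fake book, three times a day.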

At a point in time where global temperatures are soaring and water is correspondingly becoming more scarce, A.I. is contributing heavily to both problems. Its advocates point out that as soon as A.I. gets smart enough, it could figure out new solutions to the climate crisis, but again, the load-bearing word there is “could.” It won’t. It can’t. It’s never going to become smart and therefore it’s never going to solve existing problems, much less the problems that it is creating.

Not that that’s stopping tech bros from attempting to A.I. their way out of the A.I. hole they’ve dug themselves. There are now countless instances of people who talk to ChatGPT or other LLMs as therapists or friends, and this is leading to increasingly common incidents of psychosis. It turns out - shockingly - that people who spend all their time talking to a robot that always agrees with them begin to come unglued. Undeterred by the fact that worrying numbers of people now think that they are divine messengers of some kind, Mark Zuckerberg thinks that in the future, 80% of your friends will be A.I.

Never mind that it’s demonstrably a path to madness. The man behind Facebook - a company that has exacerbated and been used as an organising platform for multiple genocides and radicalised a generation of old people into Nazi-adjacent rage junkies - thinks he knows what’s best for humanity’s future, and that future is everyone sitting alone in their tiny rooms, talking to A.I. friends, watching A.I. generated movies and T.V. shows that won’t make any sense from one scene to the next because all human writers and actors and directors and artists were fired. These nonsensical entertainment feeds might star that dayglo blob that we’re told represents The Rock. Who knows? Everyone’s reality will be different and everyone will be kept distracted by eternal content that is made specifically for them, but not actually by anyone.

If that sounds horrifying - and it should - then you might think you’ll never fall victim to that kind of obvious mental slavery. You would only ever use ChatGPT to look something up, or in some other brief, harmless manner. Well, bad news: Studies have already shown that using ChatGPT to answer a question makes you measurably dumber. Teachers are already fighting a losing battle against students turning in A.I. essays, if they aren’t the teachers who are also using A.I. to plan lessons in the first place. The whole industry is wrecking people’s ability to think critically, engage with material, or even interact with other human beings. Factor in those three elements and that awful, Zuckerberg future doesn’t seem too far-fetched.

A.I. is collapsing the economy, it’s intensifying ecological disasters, it’s taking jobs from artists and writers and designers (and doing them badly, but by then the humans are already fired) and it’s rotting your brain. And if all of that weren’t enough, it’s also flat-out wrong a lot of the time you ask it questions, because it doesn’t know anything in the first place.

The China Problem.

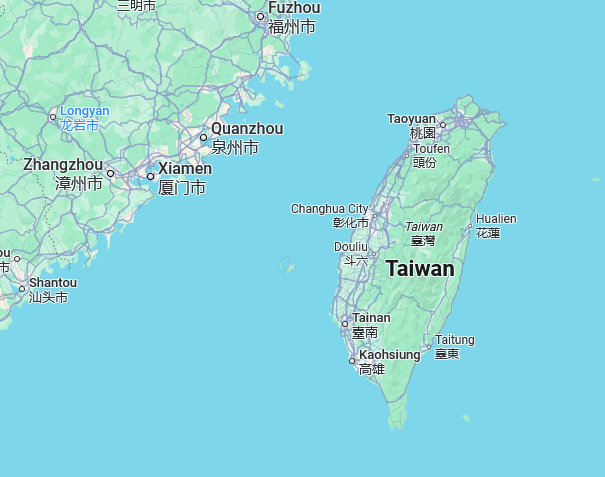

Computers, like the British diet, are built around chips. Currently, the most high-end chips are produced in Taiwan.

In 1949, the Chinese civil war was settled, and if you don’t want to know the results, look away now.

If I lock you in a room with a translation book you can figure this out…

With the Communist Party now in charge, opposition leader Chiang Kai-shek fled to Taiwan, where for several decades, the West pretended he was still the REAL Chinese leader. This led to an odd situation where some countries recognise Taiwan, while China (along with anyone who wants to stay on China’s good side) doesn’t. “Invade Taiwan” has been on the to-do list for China for a long time, now.

For the past few years, the Chinese military have been working on a couple of pet projects. They have, for example, been spending a lot of time training for littoral operations - teaching troops to fight their way ashore and establish a beachhead. They’ve also been building a lot of amphibious vehicles. And technology specifically designed to build enormous bridges, of the kind you could use to quickly close the gap with an island off the Chinese coast.

This could be replaced with that gif of the nervous monkey…

They’re also building an awful lot of ferries, of the size that would be useful for transporting troops and tanks and the like.

Having eyed up the possibilities for a long time, China now has the added bonus that most of the world’s high-end microchip facilities would fall under its control if it were to capture Taiwan. These factories are absolute science-fiction level facilities, by the way. The transistors that they make are measured in single-digit nanometres, i.e. millionths of a millimetre. For perspective, a human hair is about seventy thousand nanometres thick. So these microchip factories can’t just be rebuilt elsewhere. If the Chinese take Taiwan, then China owns high-end microchip production globally.

Even if the current A.I. bubble persists - if the U.S. government realises that their entire economic model demands that ChatGPT and its ilk continue to be successful, even in the face of all evidence - then in the next eighteen months it’s probably not going to matter. OpenAI, Meta, Google, Microsoft… they’re all building huge data centres, and they’re all going to need chips.

The current betting is that China is planning an invasion of Taiwan for next year, or some time in 2027 at the latest. They seem poised to seize the infrastructure that supports the entire western economy.

If that happens, I can at least console myself that people will probably stop asking me about A.I., because we’ll all have bigger problems. I honestly don’t think, however, that the A.I. bubble can last that long. In many ways I hope it doesn’t, because the only thing worse than an economic bubble this size bursting is going to be a nuclear power aggressively bursting it on purpose.

So there you have it. Or them. All of my opinions on A.I.: I don’t like it, I don’t use it, it’s wrong a lot of the time because it doesn’t know anything, it’s not going to become smart - although it IS making everyone else stupider - it’s killing the planet, and I generally wish the whole thing would go away and every tech CEO that champions it would be kicked in the nuts on a globally broadcast platform.

Now I’m going to go and dig a bunker, and buy canned goods and maybe some shares in a Chinese ferry company. If World War 3 starts while I’m down there, at least I have actual books on real paper to keep me occupied.

Sources for all of the above:

Ed Zitron is a writer and podcaster whose newsletter (“Where’s Your Ed At?”) and podcast (“Better Offline”) have been attempting to warn people about the dangers of A.I. misinformation and stock pumping for a long time.

The useful phrase “Habsburg A.I.” was coined by researcher Jathan Sadowski, who has written several books and can be investigated further HERE.

The Thai Library Problem is the brainchild of Emily M. Bender, who first came up with it in this Medium post.

There’s a full breakdown of why current tech profits are a shell game in this video essay by Vanessa Wingard, whose name has a little Scandinavian accent over the letter “a” that I don’t know how to type.