We made it through January!

January has been ten years long and other stories

I hope your month is going well and that you aren’t struggling too much with all the horrific “circling back” that this month entails.

At least, within 30 days, the evenings will be lighter and we will be clear of the horrors of January.

In “AI dystopia” news, Google is apparently scraping terrible AI-generated content for its news. So here is your eternal warning to check sources, and not to share anything until you know whether it was simply LLM-generated from some LinkedIn collaborative article.

The British Library is slowly getting back online after a ransomware incident. I have seen some discussion about this that shows how little we listen or reflect as cybersecurity professionals. It is very easy to sit in a bubble, in a nice organisation with budget and staffing, and give advice. Or to scream fearmongering rubbish about Russia. None of this does anything but help people into consulting roles or get them keynotes. Perhaps I say this as someone who has worked in public sector rooms with missing ceiling tiles, no soap in the restrooms and no snacks or benefits.

Real helpers understand the real world. Public sector cybersecurity and privacy work is often done on a low budget by teams of one or two, who are also paid salaries that some tech rockstars wouldn’t pick up off the sidewalk if they found them.

This is also often the case in many small and medium-sized organisations, and many of us know the realities of it. There is no one solution, but it starts with grasping that government or regulator advice is just that: advice. Organisations need budget, help and support from a wide variety of people who can advise with compassion and insight. (Not just those who can afford £600 to become accredited by the UK Cyber Security Council, for example.) The Tech Policy Press podcast has a great episode on balancing interests like these.

I wish we could be more honest about how the internet is held up with sticky tape and hope, and that even in big organisations, with budget and staff, things can be challenging. So trying to do things right, especially in the public sector, can be enormously complicated. We would help each other out significantly if we could be honest, open and humble. The Ethical Voices podcast has a wonderful episode on this: building trust.

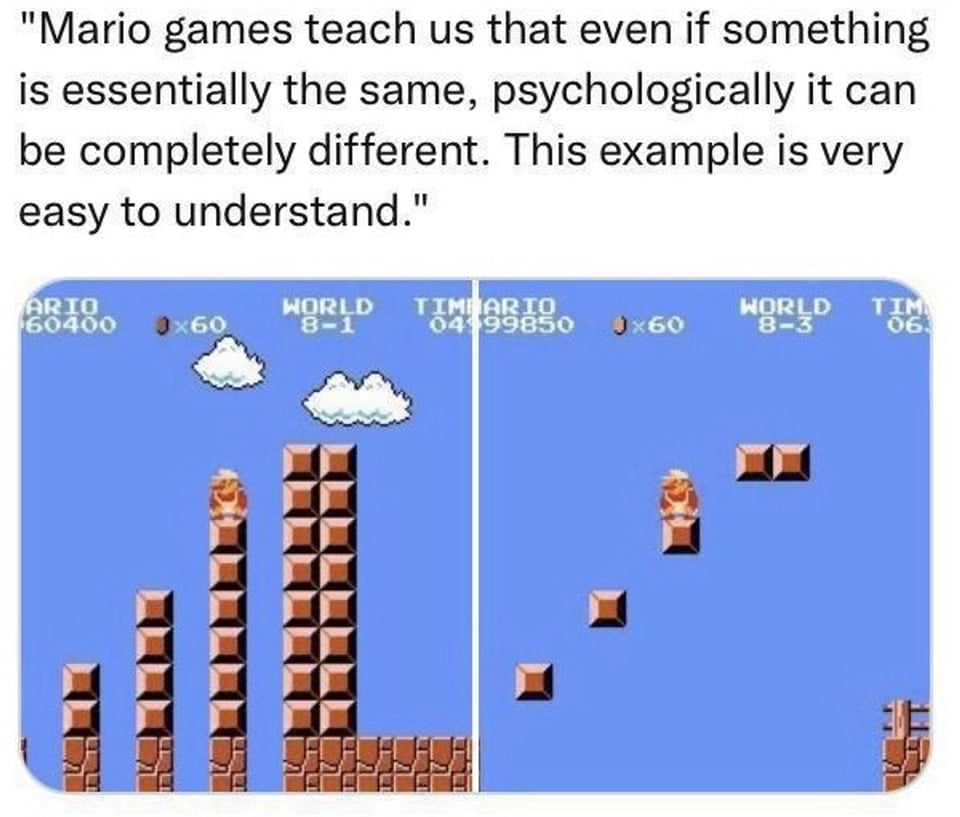

I’ll leave you with this image of how it can seem to individuals when we ask them to do a task. If we want to think further about making our advice accessible, we can turn to Vygotsky’s zone of proximal development and its modern applications.

User experience

There used to be a joke about the late Queen Elizabeth: that she must think the whole world smells of fresh paint. While this is unlikely to be true, it shows us that we need to consider who we are designing for, or working for and with. Everyone has very different experiences, expectations and opportunities. The best security education work understands this and meets people where they are. How much do you consider the lived experiences and needs of the people you advise?

I say this to lead us to the next point:

Deepfakes and deep needs

Even the White House got involved in denouncing the sexualised deepfake images of Taylor Swift that circulated this week. The risks of deepfake video and audio are increasing. And as we have noted in previous newsletters, there are huge risks in 2024, with so many elections taking place globally. We simply have to do more to combat disinformation and misinformation, and to ensure that our advice goes beyond “check Have I Been Pwned” to realistic, practical advice.

My most common advice is:

Consider what you share of yourself and others.

Evaluate whether your organisation should be publicly sharing audio or video of staff. I am thinking particularly of brand TikTok accounts, where real staff speak to camera almost daily to promote the brand.

Spotify Wrapped and the normalisation of live-streaming members of the public are both what we call surveillance capitalism. We need to discuss whether this is acceptable when we are feeding strangers’ images into a machine.

If you or your co-workers are required or choose to make videos or give talks where your voice, face and cadence are public, do this advisedly and with consideration of the potential risks. Check that you have the right to delete anything should you change your mind or need more privacy.

Advocate, hard, for accountability and clear reporting structures. Taylor Swift has significant resources, but we know that reporting is harder for ordinary folk. Reporting can also be abused to take down perfectly acceptable content, simply because some apps react to mass reporting without analysing the content. Always consider who watches the watchers. I say this as the UK Online Harms Bill has criteria under discussion whereby adult content containing handcuffs would be considered extreme… Context is important, as is not imposing my values on you, or vice versa.

Uplift the voices of the marginalised researchers who have been publishing work on deepfake risks since at least 2018. Taylor Swift is a great example of how, in tech, we tend to listen only when a white man, or a woman he cares about, is affected. I know this from advocacy work in Silicon Valley: I had to frame the dangers in terms of those faced by white tech bros and their wives in Los Gatos before anyone would listen to me.

None of this is to suggest that progress is impossible. We just need to listen to the right people, often the ones who are not already in our rooms. And possibly, very likely in fact, be a little uncomfortable about what needs to be done. We also need to be comfortable challenging well-intentioned work. KOSA and the Online Harms Bill could lose us more rights than they claim to protect. We need to be in balanced rooms, prepared to listen carefully, to make sure we bring about the right kind of tech change.

Have a great week, and here’s to a positive February. We sure could do with some light, joy and hope.