Just Culture and why words matter

In another week where the firehose of news remains overwhelming, I hope that you are taking care of yourself. Sometimes it can seem impossible to make change, but what we do in our little circles of influence does matter. So if you can do something nice for someone, please do, and I hope someone does a good thing for you in return. I have included this dog from the NYC Halloween dog parade in the hope that it makes you smile. It sums up my week, anyway.

Just Culture

I have been interested in just culture for a while. It is part of the discipline of human factors, which is little used in cybersecurity. I recommend reading this article on just culture in railway operations. Just culture is about understanding the reasons behind human error instead of immediately seeking to blame. It is about resolving the underlying issues behind a behaviour or a mistake and creating systems that prevent it from happening again. It also encourages people to report concerns or mistakes. I really enjoy reading work on just or safety cultures in other sectors because of the systems thinking involved.

I’m not a human factors expert, so my understanding of it all is basic. I would love to see us working more closely with human factors principles to improve cybersecurity. Much of our work on human risk or behaviour tends to blame. I would say roughly 80% of the security conversations I have heard over the years have focussed on supercilious blame and demeaning comments about co-workers or the public. This already toxic mix is made worse by our reluctance to sit and listen in order to solve problems. I am not alone in finding that once channels are established for people to raise concerns or questions, you find issues, and then solutions, that you didn’t know existed. But if you don’t ask the right questions, you won’t know. Just culture does not seem simple to implement, but the results are worth the effort.

Words have weight

Many of us who write or contribute to security communications will understand the challenge of gently changing traditional security language. We have happily changed a lot of terms to more inclusive language, blocklist and allowlist for example. Yet we still seem conditioned to use military or war language. I want us to ask whether this is helpful.

There have been studies on war metaphors in Covid-19 messaging, and articles written about the impact of this language.

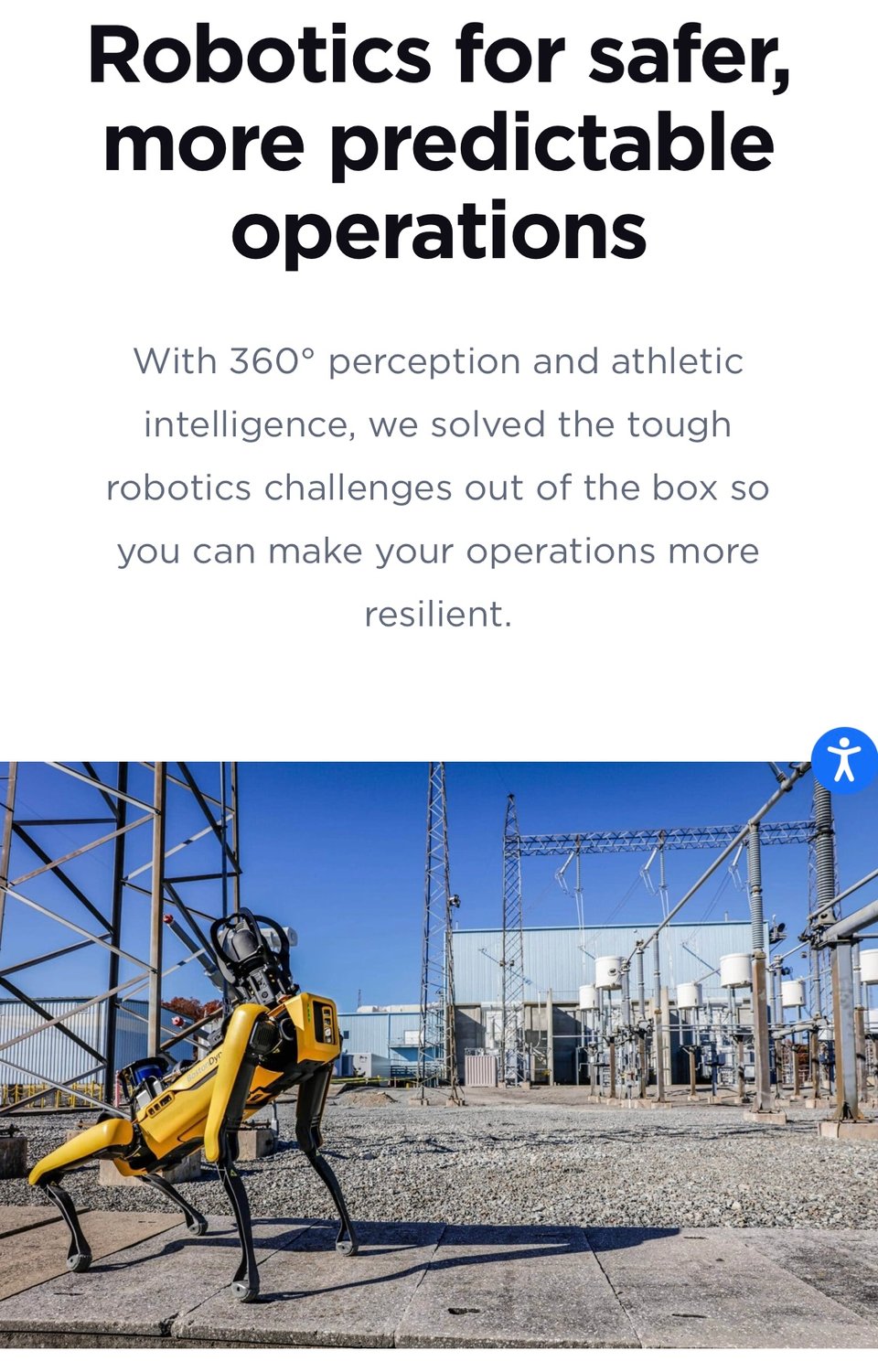

Below is a screenshot from the Boston Dynamics website. The prose used is almost poetic and starkly different from most cybersecurity vendor messaging. Our vendor halls are covered with slogans about war, attack, threat and so on, and this then spills over into our consumer messaging.

With the world in the state it is in, perhaps now is a good time to reflect on our use of war and gun metaphors and language. How helpful is it? Is it helping us to be heard by our co-workers, the public or the board? We should consider why military contractors use such prosaic language. I venture to suggest that it is because they understand how to speak to corporate interests. Perhaps cybersecurity could move beyond vanity dashboards and soldier cosplay and do the same. I think it would make us more credible and get us what we want more often. I just don’t know who looks at the world right now and thinks “people need more war/gun metaphors”.

I suggest that, as the research on Covid-19 messaging found, our militaristic communications are increasingly uncomfortable and unheard. Fear is not a great motivator, because after a while people can’t cope. Counter-terror messaging doesn’t show us the daily risks averted, because that would terrify everyone. Instead, it focuses on proactive steps such as “see something, say something”, and we see stories of good practice making a difference. I don’t mean to suggest that counter-terror messaging is always fantastic; government ministers and law enforcement often get it badly wrong. But the basic principle is not to scare or overload the public with threat messaging. Then, when you do need to communicate an issue, people are more likely to listen.

I say this a lot, but too much of our cybersecurity language builds fear instead of collaborative energy and a sense of individual agency. We tell people to be afraid of predators online, or to check whether they have been “pwned”. Instead, we should talk about how to keep ourselves and others safe, and give practical, positive advice. We need to feel less alone and at risk online, and more part of a collaborative, shared space. After all, what got us through 2020 was collective action, real kindness and the understanding that an individual can make a difference.

I could go further and say that, as we move into 2024, the last four years have shown many of us who is NOT kind. They have shown many of us, depending on region, that we are disposable or unimportant to employers and politicians. We would be well advised to be mindful of this when creating security messaging, because your co-workers and the public are in a different mindset now than they were in 2019.

If you are interested in this topic, I can recommend the audiobook version of “The Body Keeps the Score” by Bessel van der Kolk. It describes how the body can store traumatic memories and how these experiences can manifest in unexpected ways. I am not a psychologist or doctor, so my opinion is just that. But I think it is worth taking a moment to consider how many of the people we work with may be living with some kind of trauma, and how we can create safe environments for them. Our sector, with its metrics, lists of risky humans, mandatory training and more, can add to stress.

New topics for education

This TED talk on “what tech companies know about your kids” covers one of the topics that I wish we saw more of in security education. Not because I want people to be afraid, but so that they can see their digital footprint as something they can manage. It would also be wonderful to see more collective awareness of how we as individuals may contribute to the digital footprint of strangers or people we know. Oversharing about children online, or filming strangers on a livestream, leaves a digital footprint that is challenging to manage for those who did not upload it or consent to it.

I say this in the week that the UK Online Safety Bill was passed into law, and I am pessimistic about how much it will protect children. However well-intentioned it is, we need people to be much more aware of what they share about children, or what is shared about them without consent. I would love to see security education encourage people to go beyond phishing or passwords. It needs to meet people where they are, where they need information, because far too much is shared about children by people they trust. You only have to look at the comments on, or saves of, videos of young children on social media: posted by parents, liked and saved by millions of strangers. The surveillance economy.

It would also be wonderful to see more work on helping people to recognise misinformation. Tactical Tech has great resources for this. As we get more AI generated fake videos, voice recordings, images and text, it will be even more urgent to educate the public.

I say this with some irony, as the cybersecurity sector often fails to identify fake information from vendors… but I hope we can improve.

Simply teaching people how to reverse image search can be really helpful. But we need education at school level and beyond that teaches people to verify before they trust, and not to assume that a video or “news” is what it seems. This is delicate work, as we don’t want to present the world as some Truman Show dystopia. Yet we fail young people and the public at large if we continue to focus on phishing and passwords instead of helping them build critical thinking skills.

And I don’t pretend that doing this in schools will be easy. I have seen my own children sent to sources of misinformation by well-intentioned educators. Let’s be honest: the top five results of a basic Google search often include fake sites, so it is easy to be mistaken. It has happened to me and will probably happen again. We also regularly see inaccurate cybersecurity stories shared by individuals on social media. It is very easy to see a “trusted” source saying something and assume they did their due diligence. I am thinking here in particular of the “USB jacking” story that went wild this year, which was entirely exaggerated.

Gamification and meeting people where they are

As security education, awareness and culture work focusses a lot on creating engaging and tailored learning, it is worth remembering that “learning styles” are a myth. Most of us benefit from learning material that offers a mix of formats. We tend to remember better when content is visually appealing and involves us.

I keep seeing this article from Business Insider discussed, and I think it has real use for cybersecurity education. My take on it is that we cannot assume that co-workers are engaged with us, or even “care”. This is due to layoffs, economic pressures and general unease in the workplace. People now understand that they are disposable. I have worked with some organisations that acknowledge this, as even some senior leaders feel the anxiety. I think our cybersecurity education work needs to accommodate the new workplace “culture” before it can create a “security culture”. I don’t call this insider threat or human risk, as people are not acting maliciously. I want us to approach it with real compassion, if we can. Receiving a phishing sim, mandatory training for your team and then messaging that uses war jargon is a LOT when you are already at your desk, stressed about paying your rent or buying your children food.

I would also suggest that we work on more communications to managers so that everyone understands our intended outcomes. People are very stressed and worried about their productivity and job security. If the CISO distributes a list to leadership where certain teams are labelled “bad clickers” or “risky”, the instinct is defensiveness, or to pass the stress on to their direct reports. No one needs that added stress. Perhaps we could change our language so that it carries less blame and stress. Maybe we don’t need to loudly target teams or regions, but can instead work with them quietly and on their terms. We should be able to explain why we are doing something, but we also need to listen. In my experience, 8 out of 10 teams where there is “risk” are working under pressure, or in situations where they have repeatedly asked for help and been ignored. One example is a team I saw being screamed at, and I mean yelled at, by an IT manager for using WeTransfer to receive artwork from a known supplier, because that was the only way the team could receive the material. Screaming and banning a perfectly acceptable platform is not how you create working relationships. Sadly, we see a lot of that, when we should be creating a more compassionate security culture.

That is all for this week. If you are dressing up for Halloween, I hope you have a great time. I will be following three inflatable dinosaurs around my street; if you are lucky, I will share a photo!

Have a great week, take care of yourself and others, and dare to be the change.

M