BeyondThePhish: we all think we are good

We all think we are good

(I have switched to ButtonDown as it is an ethical choice, so your emails will change!)

This week I wanted to talk about the idea of being good. We all think that we are good, kind people and mostly we are. But there are times when our impact does not match our intention. There is a lot to say on that, but my aim here is to ask us to consider our roles in possible harm. I think we should also celebrate the good work that we do.

Being the “helpers”

The Mr Rogers quote “look for the helpers” is a popular way to reassure children when disasters happen. The intent is that even in terrible circumstances, humans will help each other. I believe this to be true, but then I am a Pisces. I believe in the innate humanity of people. But I also know that fear, pressure or even love can make us put that to one side at times. I see this a lot in tech, when people are part of surveillance or other harms because they get swept along, or fear speaking out.

It is also important to understand that when we say that people are fallible or take risks, we often exclude ourselves from that equation. I know many security leaders and teams who fail to consider their own fallibility and risk type. We hear a lot about empathy, but it rarely goes beyond accepting that empathy is important. Sadly, empathy isn’t common to us all, and even the most empathetic person will have bias or a bad day. I think this is why we don’t always meet customers, coworkers or the public where they are: because as a security sector, we fail to truly see ourselves as being like the people who need advice. It is the problem I see with “human risk”, in that everyone has risky behaviour, except, of course, the security and engineering teams. We should start with ourselves and work outward. And that will take a lot of humility and compassion, which is where your security education and comms people add so much value. Please use them.

On Risk

I get asked a lot about my thoughts on human risk, the latest popular idea since “security culture”. My response is that I believe in using human risk only within frameworks informed by Human Factors experts. Otherwise it is just sparkling surveillance. I also think this forms part of a wider issue that we have within security education: the dashboard obsession.

Let me explain further. Cars and planes are designed using human-factors-informed practice. They are safe because they have airbags or improved brakes or warnings or special controls that counter human behaviour. It works. There is a consumer expectation that the car will be safe and will have features for comfort and safety. And in the eventuality that it does not work, getting it fixed should be simple. Accidents still happen, mostly with cars, because there are so many environmental and human factors to contend with. But we are in safer cars now than we were 40 years ago. Because we design AROUND the human, even if you drive too fast, you are likely to be safer than you would have been years ago.

The 737 MAX tragedies showed us what happens when engineers warning of issues are not listened to. Those dreadful accidents went against all good practice. And there are significant parallels with how we work in the currently lightly regulated security space.

Yet you don’t see Boeing or Ford producing dashboards showing how many people were saved by airbags or good engineering or seatbelts every day. They don’t need to. No one internally is asking engineers at BMW to show them how many people used the seatbelt alert today or the reverse parking camera.

So we don’t need metrics and dashboards for everything. We have just become obsessed with them. We need to make organisations focus on the human needs that impact security. Not every team across a company is producing data on “how this has worked” every five minutes. It should be enough to say: “trust us, this may take time but it will have an impact”. Or even “we need to do attitude surveys and listening sessions for six months and then we will develop strategy”. There is more value in saying “our risks are xyz and the business has told us that xxxx is needed to help / xxxxx is the reason this is an issue” than “we have rolled out phishing sims to sixty thousand people and seven thousand have done training”. One is action and outcomes, the other is box-ticking compliance outputs. And where are the humans in all of that?

So give yourself some grace, and stop forcing metrics into your work. Force humans into it instead, and make leadership understand.

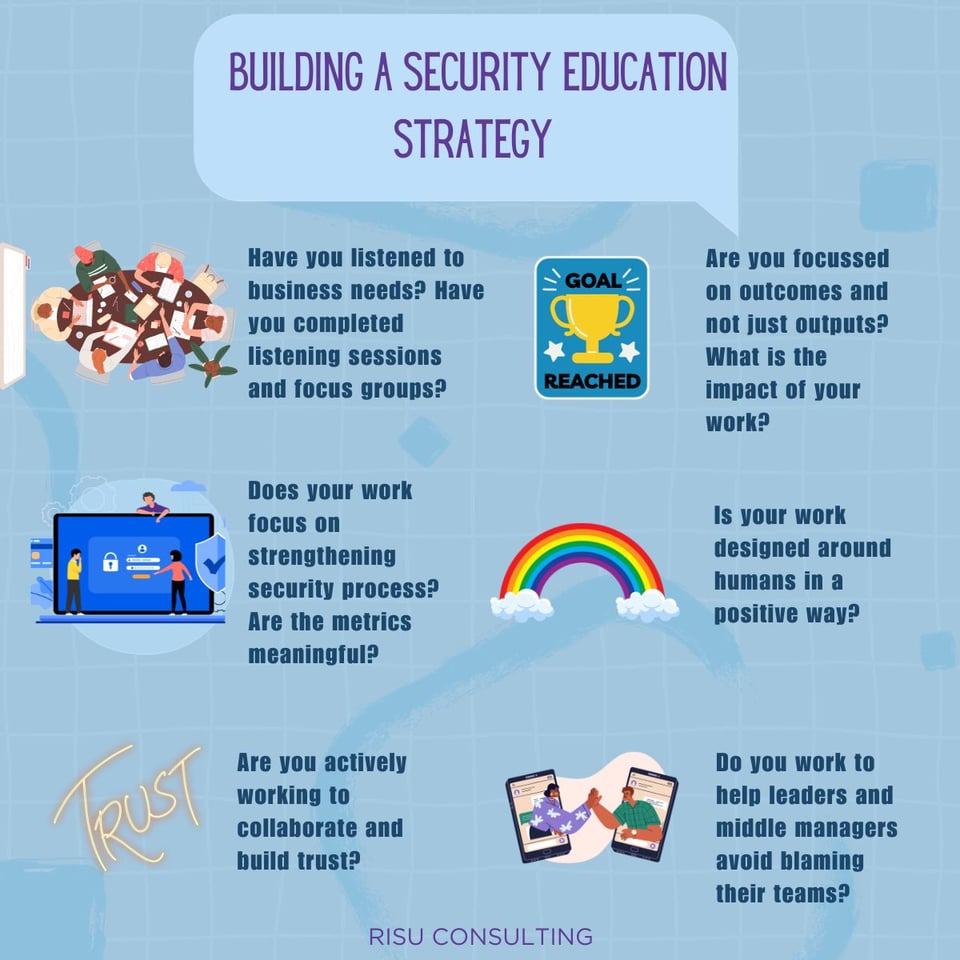

Below is a version of a basic checklist that I use in workshops, just to help people focus on THE HUMANS, and how to help them.

Build trust, by being human

I still think that the most critical part of our work in security education and change is building trust. A large part of that is our role in helping to represent various interests and needs. A point I repeatedly make is that the world is tough right now. People are stressed over layoffs, rising costs, the climate crisis, political instability and more. The amount of time and energy anyone can devote to cybersecurity is limited. Not only this, but if you have laid off lots of members of a team, forced a return to office and frozen wages, are you seriously expecting your staff to be fully engaged with securing the company? Add to this the delicious-looking recruitment scams on LinkedIn and other sites and you have a very different environment to 2019. We need to explain that to leadership, instead of parroting HR lines about “culture” and “human risk”, all of which make us more compliance workers than communications and change agents. What is your security culture in today’s landscape? I am not pouring cold water on culture work, even though I don’t believe in it. I know lots of people do good work under that banner. I am simply asking us to consider how we navigate this space and represent the people we work with. How do we meet them where they are and build that trust we need? Or do we see ourselves as HR?

Language also matters. I was talking to someone this week who works in defence contracting. They used some beautiful language around the work that they do for various governments. Now I am not saying that war is beautiful! I do want us to consider that cybersecurity using military terminology is off-putting to the people that we need to reach. It also makes us look ridiculous when people in military circles use much more poetic language, because they speak the language of business and persuasion.

Podcast corner

404 security not found has another great episode

404 Media has a podcast and a website you can subscribe to. It has some of the best reporting I have seen, so I highly recommend it.

Speaking of Psychology has a lovely episode on guilt and shame, very relevant to cybersecurity. I hope one day we move beyond that.

Tarot

It is October, so the tarot is back. Here is the Knight of needles, reminding us all to be consistent with our work and energy. That sure can be challenging in this sector.

I don’t like Cybersecurity Awareness Month, so October is all about Halloween for me. I think if, after 20 years, we have still seen no real forward momentum in public understanding or security regulation, it is time for something new. If the only beneficiaries are sales teams, not the consumers, then I think we need to reset. We already have a sector that relies too heavily on vendor messaging. If I could cast one spell it would be for you to trust your instinct and skills more. Not just think whatever some vendor says is gospel.

The month also overlaps with Black History Month in the UK, which I hope has meant cybersecurity teams collaborating with DEI groups to do meaningful online safety or allyship workshops. That would be a great trust-building exercise.

Have a wonderful week!