Faster Pandas, Dask, compilation and dataset annotation improvement

Faster Pandas, Dask, compilation and dataset annotation improvement

Further below are 7 job roles including Senior roles in DS and DEng and Research at organisations like Ocado and the Met Police along with a tender offer for a consultancy collaboration with Global Canopy.

Apologies for the gap since the last newsletter. Christmas, illness and an infant makes for a potent combination. I’m now catching up on a host of backlogged reading which I look forward to sharing. I’ll keep this issue a bit lighter.

Below I’ll talk on open source tool improvements to Dask, Pandas with Copy-on-Write, a label-diagnostic tool called CleanLab and an update to the ShedSkin compiler for faster custom libraries.

Open Source updates

Dask speed tweaks

Last week I taught another iteration of my Higher Performance Python course, this time to an insurance data supply firm (but often to quant organisations). I love teaching this course as I get challenged by tricky issues faced in a team.

One nice reveal is that Dask has a scheduler improvements which can lead to an 80% memory reduction - great for large work loads on big machines - the old scheduler is in orange, the new one in blue:

The underlying issue is described as “tasks early in my graph generate data faster than it can be consumed downstream, causing data to pile up, eventually overwhelming my workers” and this is described in detail in the blog post.

I was intrigued to discover that the Ray accelerator, which has largely not been on my radar, now has some integration with Dask so the Ray scheduler can be used as an alternative to the Dask one. Presumably this may get you around certain “don’t make large task graph” issues with Dask, where the default scheduler gets clogged up. I need to read more on this.

Dask’s configuration came up for discussion. One painful issue I’ve hit before is having the temporary files stored on a default disk with a small partition when a larger partition is available. This is easily fixed with the working_directory configuration option. There’s also a nice write-up of techniques to aggressively manage Dask memory, if you’re running short.

CleanLab - find bad annotations and fix them

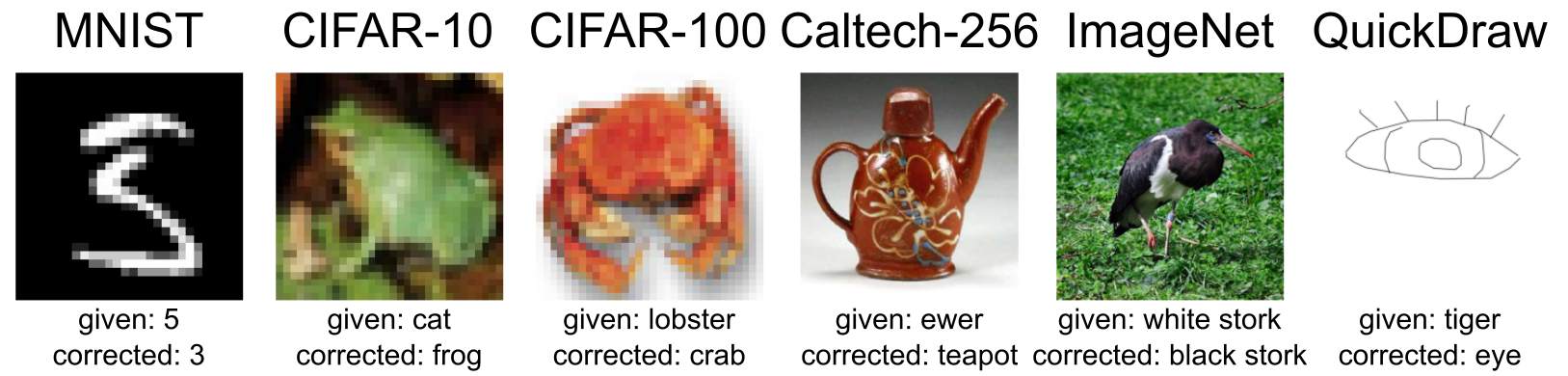

I spotted an interesting tweet introducing CleanLab, a tool that is ML-agnostic that spots your worst labels so you can evaluate and fix them. By fixing the labels you can further tune your ML without getting confused by noisy benchmarks due to erroneous labels. I’ve not tried it but it does look rather good.

The github page has demonstrations, the image below shows common benchmarks with bad labels (e.g. a 5 corrected to a 3 for the first MNIST example):

Pandas Arrow integration and Copy on Write

Itamar has a nice write-up on using the increasingly-resilient Apache Arrow string datatype in a Pandas dataframe in place of the standard Python string. If you do string processing in Pandas, definitely take a look - you’ll save RAM and often you’ll get a nice speed-up.

A video for PyDataGlobal (which hopefully is or will be public soon) describes how the new Copy on Write optimisations are being added to Pandas. This gets us away from the Setting with Copy warning and apparently may offer significant speed-ups. The changelog notes the improved methods with the flag to enable pd.set_option("mode.copy_on_write", True) so you could try it with further details here. Apparently an older high-memory usage bug of mine will be fixed by this!

ShedSkin - Python to C compiler updated for Python 3.11

Mark Dufour, author of the Python to C compiler ShedSkin, sent me an email to let me know that he’s updated his compiler for strong Python 3 support. A small benchmark against Python 3.11 (with the 40% speed-ups I discussed recently ) and Nuitka and PyPy, but sadly missing Numba, suggests that ShedSkin is the best in this bunch.

The announcement notes:

Three painful months and a total diff of 50k lines later, everything now works with Python3 (Shed Skin itself, and all tests and examples..)

I’ve used ShedSkin in the past to generate a C file which could be inspected, then compiled into an importable library. If you want a type-introspecting C code generator (sadly without numpy support), take a look. It is implicitly statically typed so your variables can only have 1 type, but if that’s true, and particularly if your code is pure-Python math-heavy, you’ll get a really nice speed boost. Discussion on reddit.

Do you have any libraries or processes to share that make this kind of data clean-up easier? I’d happily take a look if you’d reply to this email with some links.

Footnotes

See recent issues of this newsletter for a dive back in time. Subscribe via the NotANumber site.

About Ian Ozsvald - author of High Performance Python (2nd edition), trainer for Higher Performance Python, Successful Data Science Projects and Software Engineering for Data Scientists, team coach and strategic advisor. I’m also on twitter, LinkedIn and GitHub.

Now some jobs…

Jobs are provided by readers, if you’re growing your team then reply to this and we can add a relevant job here. This list has 1,500+ subscribers. Your first job listing is free and it’ll go to all 1,500 subscribers 3 times over 6 weeks, subsequent posts are charged.

(Senior) Data Scientist, Ocado Technology, Permanent, Hatfield UK

Ocado technology has developed an end-to-end retail solution, the Ocado Smart Platform (OSP) which it serves a growing list of major partner organisations across the globe. We currently have three open roles in the ecommerce stream.

Our team focuses on machine learning and optimisation problems for the web shop - from recommending products to customers, to ranking optimisation and intelligent substitutions. Our data is stored in Google BigQuery, we work primarily in Python for machine learning and use frameworks such as Apache Beam and TensorFlow. We are looking for someone with experience in developing and optimising data science products as we seek to improve the personalisation capabilities and their performance for OSP.

- Rate:

- Location: Hatfield, UK

- Contact: edward.leming@ocado.com (please mention this list when you get in touch)

- Side reading: link, link, link

Request for proposals – Database Integration Project

Global Canopy is looking for a consultancy that will work with us on the second phase of development of our Forest IQ database. This groundbreaking project brings together a number of leading environmental organisations, and the best available data on corporate performance on deforestation, to help financial institutions understand their exposure and move rapidly towards deforestation-free portfolios.

- Rate: The maximum budget available for this work is NOK 1,000,000 (including VAT)., approximately £80,000 GBP.

- Location: Oxford/Remote

- Contact: tenders@globalcanopy.org (please mention this list when you get in touch)

- Side reading: link

Machine Learning Researcher - Ocado Technology, Permanent

We are looking for a Machine Learning Scientist specialised in Reinforcement Learning who can help us improve our autonomous bot control systems through the development of novel algorithms. The ideal candidate will draw on previous expertise from academia or equivalent practical experience. The role will report to the Head of Data in Fulfilment but will be given the appropriate latitude and autonomy to focus purely on this outcome. Roles and responsibilities will include:

- Rate:

- Location: London | Hybrid (2 days office)

- Contact: jonathan.trillwood@ocado.com (please mention this list when you get in touch)

- Side reading: link, link

Analyst and Lead Analyst at the Metropolitan Police Strategic Insight Unit

The Met is recruiting a data analyst and a lead analyst to join its Strategic Insight Unit (SIU). We are looking for people who are keen on working with large datasets using Python, R, SQL or similar software. The SIU is a small, multi-disciplinary team that combines advanced data analytics and social research skills with expertise in operational policing to empirically answer questions linked with public safety, good governance, and the effectiveness of policing methods.

- Rate: £32,194 to £34,452 (Analyst) / £39,469 to £47,089 (Lead Analyst), plus location allowance

- Location: Hybrid, at least one day per week at New Scotland Yard, London

- Contact: tobias.katus@met.police.uk (please mention this list when you get in touch)

- Side reading: link, link

Web Scraper/Data Engineer

Looking to a hire a full time Web Scraper to join our Data Engineering team, scrapy and SQL are desired skills, as a bonus you’ll have an interest in art.

- Rate:

- Location: London, Remote

- Contact: s.mohamoud@heni.com (please mention this list when you get in touch)

- Side reading: link

Data Scientist at Kantar - Media Division

Our data science team (~15 people in London ~5 in Brazil) is hiring a Data Scientist to work on data science and machine learning projects in the media industry, working with broadcasters and advertisers, media agencies and industry committees. A major area of focus for this role involves the integration of multiple data sources, with a view of creating new Hybrid Datasets that leverage and extract the best out of the original ones.

The role requires advance programming skills with Python and related data science libraries (pandas, numpy, scipy, sklearn, etc) to develop new and enhance existing mathematical, statistical and machine learning algorithms; assist our integration to cloud-based computing and storage solutions, through Databricks and Azure; take the lead in data science projects, run independent project management; interact with colleagues, clients, and partners as appropriate to identify product requirements.

- Rate: £40k - £50K plus benefits

- Location: Hybrid remote and in Farringdon/Clerkenwell offices

- Contact: emiliano.cancellieri@kantar.com 07856089264 (please mention this list when you get in touch)

- Side reading: link

Data Scientist at Kantar - Media Division in London

Our data science team (~15 people in London ~5 in Brazil) is hiring a Data Scientist to work on cross-media audience measurement, consumer targeting, market research and in-depth intelligence into paid, owned and earned media. Redefining client requests into concrete items and best modelling choices is a crucial part of this job. Attention to details, critical thinking, and clear communication are vital skills.

The role requires advance programming skills with Python and related data science libraries (pandas, numpy, scipy, etc) to develop new and enhance existing mathematical, statistical and machine learning algorithms; assist our integration to cloud-based computing and storage solutions, through Databricks and Azure; take the lead in data science projects, run independent project management; interact with colleagues, clients, and partners as appropriate to identify product requirements.

- Rate: £40K-£50K + benefits

- Location: Hybrid remote and in Clerkenwell/Farringdon offices

- Contact: emiliano.cancellieri@kantar.com 07856089264 (please mention this list when you get in touch)

- Side reading: link