Boosting LLM correctness from 12% to 54% by working on data representation (ARC AGI)

Further below are 3 jobs including:

- Python AI Engineer at Qualis Flow Ltd

- Data Scientist at ANNA

- Machine Learning Engineer - Lantern AI (Private Equity tech)

I've had my head down working on updates for the 3rd edition of our High Performance Python book. It's coming along nicely and I ought to take some time to explain some of our updates, but that'll be for a future issue.

Outside of an interesting NLP engagement I'm still pushing on with the Kaggle ARC AGI challenge. Below I outline a new learning: the choice of representation can have a significant impact on accuracy, boosting correctness from 12% to 54%. It is just like building a DataFrame of features to expose the signal sufficiently, but this time in "LLM space". I'll also note a couple of interesting papers.

Hawaii Five-LOWer sets off tomorrow

As noted before, last year we raised £4,000 for Parkinson's; this year we're driving 5,000km for the Alzheimer's Society. We set off tomorrow and will be back in a week, hopefully still with all 4 wheels. See the JustGiving page for more photos; we'll update it as we drive.

Many thanks to those of you who have donated so far. If you like the things I talk about and they've helped you along on your journey, we'd certainly appreciate a donation. All funds raised go to the charity; all costs come out of our own pockets.

This was us working on the car (through to 11pm by torchlight the night before), then the morning after still with a pile to do:

and all going well this car will turn up in London tonight, ready to set off in the morning:

Better LLM results by using richer representations

I've been working on the Kaggle ARC AGI challenge using my home RTX 3090 (24GB VRAM) setup with a selection of quantised Llama 3 models, mainly the 8B and 70B. The challenge involves a geometric puzzle: a grid of numbers (using the digits 0-9) is transformed from an initial state to a final state by a rule, and you have to figure out the rule. Each challenge typically provides 3 example pairs for the rule, then you get a 4th initial grid and have to predict its final grid.

The challenge calls for open-weights models: submissions to the private leaderboard on Kaggle can't make API calls (so there's no data leakage from the hidden test set). I've found this an interesting way to expand my knowledge of "what works reliably, and what might work at all, with LLMs", at least via Llama models.

One recent finding rather blew my mind. Suppose I write a "hmm, looks reasonable to me" prompt that describes the challenge, presents each pair of initial and final grids and asks Llama to write some Python code to solve the challenge. I can radically change the likelihood of success just by choosing different representations of the grid. Without changing anything else in the prompt I can swing from 12% to 54% correctness. Maybe this is an observation you can use when thinking about your own LLM representations?

As an example, let's say we take the provided JSON grid for challenge 9565186b.json where the rule we need is "find the most frequent number in initial, then leave this number alone but change all the other numbers to 5". As a pair of grids with just numbers it'd look like:

initial:
222
218
288

final:
222
255
255
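To make the target concrete, here is a minimal hand-written sketch of the rule for 9565186b (my own illustration of what we hope the LLM produces, not model output). It assumes grids are lists of lists of ints, as in the JSON, and that the most frequent value is unique; `Counter.most_common` breaks ties by first occurrence, which may not match the intended rule.

```python
from collections import Counter


def transform(initial):
    """Keep the most frequent value in the grid; replace every other value with 5."""
    # Count every cell across all rows
    counts = Counter(value for row in initial for value in row)
    # most_common(1) returns [(value, count)]; ties go to the value seen first
    keep, _ = counts.most_common(1)[0]
    return [[value if value == keep else 5 for value in row] for row in initial]


initial = [[2, 2, 2], [2, 1, 8], [2, 8, 8]]
print(transform(initial))  # [[2, 2, 2], [2, 5, 5], [2, 5, 5]]
```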

In the following I'll just talk about initial but I transform both grids the same way. These grids come in JSON, so the obvious first step is to ask the LLM to write a program to transform initial = [[2, 2, 2], [2, 1, 8], [2, 8, 8]] to final = [[2, 2, 2], [2, 5, 5], [2, 5, 5]]. There's a bunch more to the prompt but essentially I'm telling it:

- You're a great problem solver

- Look at the following pairs of initial and final, figure out the pattern

- Here's a hint - count the frequency of numbers (NOTE1 below)

- Write some Python code in a `def transform(initial):` function that performs the transform
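The bullet points above could be assembled into a prompt along these lines. This is a hypothetical sketch: `build_prompt` and its wording are my paraphrase, not the exact prompt used, and the test grid in the usage line is invented. The grid representation is passed in as a `render` function so it can be swapped without touching the rest of the prompt, which is exactly the kind of experiment described below.

```python
import json


def build_prompt(train_pairs, test_initial, render):
    """Assemble a prompt from (initial, final) training pairs plus the test input.

    `render` turns a grid (list of lists of ints) into text; swapping it changes
    only the representation, leaving the instructions identical.
    """
    lines = [
        "You are a great problem solver.",
        "Look at the following pairs of initial and final grids and figure out the pattern.",
        # Challenge-specific hint (NOTE1): heavily biases the model towards this one task
        "Hint: count the frequency of the numbers.",
    ]
    for number, (initial, final) in enumerate(train_pairs, start=1):
        lines += [f"Example {number} initial:", render(initial),
                  f"Example {number} final:", render(final)]
    lines += ["Test initial:", render(test_initial),
              "Write some Python code in a `def transform(initial):` "
              "function that performs the transform."]
    return "\n".join(lines)


pairs = [([[2, 2, 2], [2, 1, 8], [2, 8, 8]], [[2, 2, 2], [2, 5, 5], [2, 5, 5]])]
print(build_prompt(pairs, [[3, 3], [3, 4]], render=json.dumps))
```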

I then run this many times to estimate the likelihood of getting a program that solves all 3 training problems and the 1 test problem. NOTE1: by giving it a hint I'm massively biasing it towards solving this challenge and this challenge only, so this wouldn't be generally useful, but it is very helpful for answering "have I represented my data correctly?".
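Checking each generated program could look something like this minimal sketch (the function names are hypothetical). It separates "ran without error" from "solved every pair", mirroring the execution and correctness rates quoted below. Note that `exec` runs untrusted model output in-process with no timeout; in practice you'd want a subprocess with a timeout, or a proper sandbox.

```python
def run_candidate(program_source, pairs):
    """Classify one generated program as "error", "wrong" or "solved".

    `pairs` is a list of (initial, final) grids, e.g. the 3 training pairs plus the test pair.
    """
    namespace = {}
    try:
        # WARNING: executes model-written code with no sandboxing or timeout
        exec(program_source, namespace)
        transform = namespace["transform"]
        outputs = [transform(initial) for initial, _ in pairs]
    except Exception:
        return "error"
    if all(output == final for output, (_, final) in zip(outputs, pairs)):
        return "solved"
    return "wrong"


def summarise(programs, pairs):
    """Return (fraction that executed, fraction that solved every pair)."""
    results = [run_candidate(source, pairs) for source in programs]
    executed = sum(result != "error" for result in results) / len(results)
    solved = sum(result == "solved" for result in results) / len(results)
    return executed, solved


# Usage with three made-up "generated" programs: correct, wrong, and a syntax error
pairs = [([[2, 2, 2], [2, 1, 8], [2, 8, 8]], [[2, 2, 2], [2, 5, 5], [2, 5, 5]])]
good = """
from collections import Counter

def transform(initial):
    keep = Counter(v for row in initial for v in row).most_common(1)[0][0]
    return [[v if v == keep else 5 for v in row] for row in initial]
"""
identity = "def transform(initial):\n    return initial\n"
broken = "def transform(initial):\n    return initial[\n"
print(summarise([good, identity, broken], pairs))  # executed 2 of 3, solved 1 of 3
```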

Using just the JSON (and no grids), Meta-Llama-3-70B-Instruct-IQ4_XS.gguf (a pretty powerful, somewhat quantised/compressed, 42GB model) writes functions which execute 100% of the time but which successfully solve the challenge only 12% of the time.

In the following I'm using n=50 generations per run, which takes 2.5 hours. Sidenote: I've also used the 8B Q6 model ("fairly stupid" but very fast, 5 minutes for n=50) and the 70B Q6 model ("better than IQ4 but so much slower again", 4 hours). I report 70B IQ4 results for consistency; the pattern persists across the models.

If I add the block of numbers as shown above in addition to the JSON (so now I have 2 ways of representing the challenge) my success rate jumps to 42% - that's a huge jump just by adding a second representation. Brilliant, I'd figured my work was done.
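A sketch of this two-representation variant, assuming the grid is a list of lists of single digits (true for ARC's 0-9 values); the function names are my own.

```python
import json


def as_digit_block(grid):
    """One row per line, digits run together with no separators (e.g. "218")."""
    return "\n".join("".join(str(value) for value in row) for row in grid)


def json_plus_block(grid):
    """Two representations of the same grid: the JSON first, then the digit block."""
    return json.dumps(grid) + "\n" + as_digit_block(grid)


print(json_plus_block([[2, 2, 2], [2, 1, 8], [2, 8, 8]]))
# [[2, 2, 2], [2, 1, 8], [2, 8, 8]]
# 222
# 218
# 288
```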

What if we use a comma separated block (plus JSON), a bit like a CSV file? Now with

2, 2, 2
2, 1, 8
2, 8, 8

instead of a block of numbers my success drops to 30%. Blast.
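Only the row renderer changes for the comma-separated variant. A hypothetical helper (the name is mine), still paired with the JSON:

```python
import json


def as_csv_block(grid):
    """Rows of values separated by a comma and a space, e.g. "2, 1, 8"."""
    return "\n".join(", ".join(str(value) for value in row) for row in grid)


grid = [[2, 2, 2], [2, 1, 8], [2, 8, 8]]
print(json.dumps(grid) + "\n" + as_csv_block(grid))
```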

How about an Excel-like representation (plus JSON)?

A1=2, B1=2, C1=2, A2=2, B2=1, C2=8, A3=2, B3=8, C3=8

This gives only 14%, barely above the JSON-only baseline of 12%.
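A sketch of the spreadsheet-style representation. ARC grids can be up to 30 columns wide, so the column-letter helper handles "AA", "AB" and so on rather than assuming at most 26 columns; both function names are my own.

```python
def column_name(index):
    """Convert a 0-based column index to spreadsheet letters: 0 -> "A", 25 -> "Z", 26 -> "AA"."""
    index += 1
    name = ""
    while index > 0:
        index, remainder = divmod(index - 1, 26)
        name = chr(ord("A") + remainder) + name
    return name


def as_excel_cells(grid):
    """Row-major list of spreadsheet-style cell assignments, e.g. "A1=2, B1=2, ..."."""
    return ", ".join(
        f"{column_name(col)}{row_number}={value}"
        for row_number, row in enumerate(grid, start=1)
        for col, value in enumerate(row)
    )


print(as_excel_cells([[2, 2, 2], [2, 1, 8], [2, 8, 8]]))
# A1=2, B1=2, C1=2, A2=2, B2=1, C2=8, A3=2, B3=8, C3=8
```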

What about double-quoted numbers, as we might also see in a CSV file (again along with the JSON)?

"2", "2", "2"
"2", "1", "8"
"2", "8", "8"

Now we get 54% - brilliant! That's a massive improvement.
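The quoted variants can share one small, hypothetical helper with the quote character and separator as parameters, which also covers the single-quoted and no-space variants tried next. The JSON would still be prepended as in the earlier sketches.

```python
def as_quoted_csv(grid, quote='"', separator=", "):
    """Wrap every value in `quote` and join with `separator`, one row per line."""
    return "\n".join(
        separator.join(f"{quote}{value}{quote}" for value in row) for row in grid
    )


grid = [[2, 2, 2], [2, 1, 8], [2, 8, 8]]
print(as_quoted_csv(grid))                 # double quotes plus spaces
print(as_quoted_csv(grid, quote="'"))      # single-quoted variant
print(as_quoted_csv(grid, separator=","))  # double quotes, no spaces
```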

How about combining double-quoted CSVs and the Excel style (and JSON - so 3 representations)? Nope, that drops to 46%.

Maybe single-quoted CSVs are better than double-quoted ones (again with JSON)? Nope, 42%.

What about double-quoted CSVs with no spaces (and JSON)? Awful - 22%.
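One practical aside: if you generate the CSV text with Python's standard `csv` module rather than by hand, `csv.QUOTE_ALL` quotes every field but writes no space after each comma, which is exactly the variant that scored worst here. A sketch (the helper name is my own):

```python
import csv
import io


def csv_module_block(grid):
    """Render with the standard library writer, quoting every field."""
    buffer = io.StringIO()
    # lineterminator="\n" avoids the writer's default "\r\n" line endings
    writer = csv.writer(buffer, quoting=csv.QUOTE_ALL, lineterminator="\n")
    writer.writerows(grid)
    return buffer.getvalue().rstrip("\n")


print(csv_module_block([[2, 2, 2], [2, 1, 8], [2, 8, 8]]))
# "2","2","2"
# "2","1","8"
# "2","8","8"
```

The writer's `delimiter` must be a single character, so the spaced `"2", "1", "8"` form has to be built by hand, as in the earlier string-joining sketches.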

So what's going on? I think it comes down to the token patterns the model saw during training: a CSV-like representation (with double quotes and spaces) presumably occurs a lot in training data, so it "fits" this problem better. The solid block of numbers (with JSON) was a pretty good start and gave a solid boost over the JSON-only representation, but adding quotes, spaces and commas gave me another very nice bump.

What's the ramification? I suspect that if you're using the "wrong" brackets, quotes, spacing and symbols in your prompts, you're probably hurting either performance or the LLM's ability to interpret your request, and hence the reliability of your output.

Have you had similar experiences with how you represent your prompts? I'd love to hear!

You may wonder why I generate 50 programs per test. Whilst all I need per challenge is 1 that works, I have to generate several to get to that 1, and what I want to know is how reliably I can get there. That requires repeated big runs, just like the many training, test and validation cycles in traditional ML. I'd love to hear how you're approaching this sort of challenge with LLMs.
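Because each configuration is only n=50 samples, it can help to put an interval around each success rate before reading too much into small differences. Here is a minimal sketch using the Wilson score interval, a standard choice for binomial proportions (my addition, not part of the runs above). With n=50, 12% (6/50) and 54% (27/50) have clearly separated intervals, whereas neighbouring results such as 42% (21/50) and 46% (23/50) overlap substantially.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for a success rate of successes/n."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denominator = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denominator
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denominator
    return centre - half_width, centre + half_width


for label, successes in [("JSON only", 6), ("JSON + double-quoted CSV", 27)]:
    low, high = wilson_interval(successes, 50)
    print(f"{label}: {successes / 50:.0%} (95% CI {low:.0%} to {high:.0%})")
```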

"Program of Thoughts Prompting"

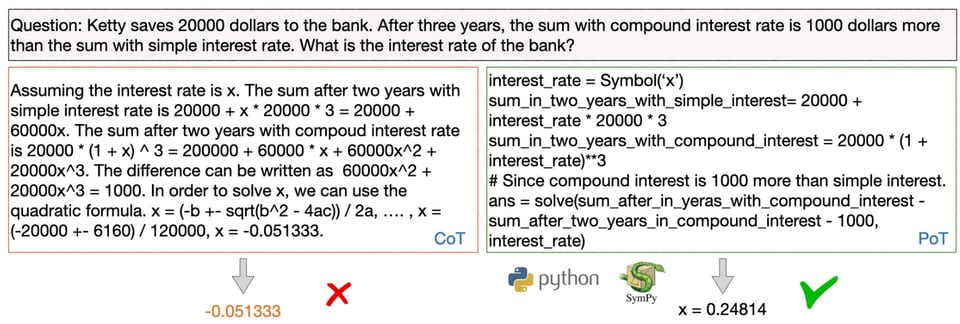

In "Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks", Chen, Ma, Wang, Cohen, 2023 the authors propose an interesting idea which I'd realised I was already using (and quite possibly I'd seen before of course). They'd observed that asking an LLM to reason about a numeric challenge was possible but very unreliable. Instead asking it to write the code to solve the math was far more reliable. Here's an example:

For the ARC challenge I'd already tried using the big (70B) models to reason about the initial-to-final transformation and explain it, without asking for a transform function. Whilst it did work, it was very unreliable, managing it maybe 2-5% of the time. As soon as I asked it to write Python code to solve the same task, my success jumped to the 30+% rate (and that was before I realised I needed to work on my representations as described above).

One of the interesting observations in this paper is that semantically meaningful variable names mattered: when variables carried a semantic interpretation, success rates improved.
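To illustrate the Program of Thoughts idea, here is a minimal sketch: the model's (here, invented) response is a small program with semantically named variables, and the Python interpreter, not the model, does the arithmetic. Reading the answer from a variable called `ans` is an assumption of this sketch.

```python
def execute_program_of_thought(program_source):
    """Run model-written code and return the value it assigned to `ans`."""
    namespace = {}
    # WARNING: untrusted model output; sandbox this in real use
    exec(program_source, namespace)
    return namespace["ans"]


# Hypothetical model response to: "Pens are sold in packs of 3 for £2. How much do 12 pens cost?"
generated_program = """
pens_per_pack = 3
price_per_pack = 2
pens_wanted = 12
packs_needed = pens_wanted / pens_per_pack
ans = packs_needed * price_per_pack
"""
print(execute_program_of_thought(generated_program))  # 8.0
```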

"PAL: Program aided Language Models"

Building on the above, in "PAL: Program-aided Language Models", Gao, Madaan, Zhou, Alon, Liu, Yang, Callan, Neubig, 2023, the authors note that a Chain of Thought approach to complex reasoning tasks became increasingly unreliable as the number of objects grew, whilst having the model write Python as it went kept the quality of the results high.

This second paper goes beyond the math problems of Program of Thoughts Prompting and looks at mathematical, algorithmic and symbolic challenges. The authors also echo the need to give variable names a semantic interpretation.
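A PAL-flavoured sketch of an object-counting case: the (invented) model output lists every object with its count as code, so adding more objects adds more lines of data rather than more mental bookkeeping. Wrapping the answer in a `solution()` function is an assumption of this sketch, as is the question itself.

```python
def run_pal_program(program_source):
    """Execute model-written code that defines solution() and return its result."""
    namespace = {}
    # WARNING: untrusted model output; sandbox this in real use
    exec(program_source, namespace)
    return namespace["solution"]()


# Hypothetical model response to: "I have a chair, two potatoes, a cauliflower,
# a lettuce head, two tables and a trombone. How many vegetables do I have?"
generated_program = """
def solution():
    objects = {"chair": 1, "potato": 2, "cauliflower": 1,
               "lettuce head": 1, "table": 2, "trombone": 1}
    vegetables = {"potato", "cauliflower", "lettuce head"}
    num_vegetables = sum(count for name, count in objects.items() if name in vegetables)
    return num_vegetables
"""
print(run_pal_program(generated_program))  # 4
```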

Both papers are worth a read if you're interested in using LLMs to write code to solve reasoning challenges.

Footnotes

See recent issues of this newsletter for a dive back in time. Subscribe via the NotANumber site.

About Ian Ozsvald - author of High Performance Python (2nd edition), trainer for Higher Performance Python, Successful Data Science Projects and Software Engineering for Data Scientists, team coach and strategic advisor. I'm also on twitter, LinkedIn and GitHub.

Now some jobs…

Jobs are provided by readers; if you're growing your team, reply to this and we can add a relevant job here. This list has 1,700+ subscribers. Your first job listing is free and it'll go to all 1,700 subscribers 3 times over 6 weeks; subsequent posts are charged.

Python AI Engineer at Qualis Flow Ltd

We are seeking a talented Python Engineer who is eager to contribute to building a sustainable future. If you are passionate about sustainability, believe that with cutting-edge technology we can address tangible issues, you value radical transparency, unstoppable tenacity and encourage collaboration and curiosity within your team, this opportunity is tailor-made for you.

- Rate: up to £60k

- Location: Remote with London Office once every 2 weeks

- Contact: sam.joseph@qualisflow.com (please mention this list when you get in touch)

- Side reading: link

Data Scientist at ANNA

The role is focused on helping the product team protect customers from financial crime. The first focus will be APP (Authorised Push Payment) fraud prevention.

You will assess the system in place, evaluate the performance of models offered by vendors, and create a roadmap for system improvements to:

- uncover patterns and irregularities in data through statistical tools that could indicate fraud;

- use predictive modelling to spot possible fraudulent transactions and behaviours;

- develop concise reports explaining findings, risks, and recommended actions;

- team up with other departments to improve overall security and fraud detection.

Responsibilities

- Defining and evaluating key metrics for the AI part of our product and identifying levers to improve them

- Developing pipelines for data annotation using internal assessors as well as crowdsourcing platforms

- Implementing appropriate Machine Learning algorithms and deployable models

- Using BI tools to monitor key product metrics and performance of ML models

- Rate:

- Location: London, Cardiff or Remote

- Contact: Andrei Smirnov, Head of Data Science, asmirnov@anna.money (please mention this list when you get in touch)

- Side reading: link

Machine Learning Engineer - Lantern AI (Private Equity tech)

We are seeking a machine learning engineer who will work on projects to reconcile data between dissimilar sources, build copilots for accountants, and prepare industry benchmarks. This role requires both data science expertise and the ability to produce production-quality Python code.

- Rate: Up to £120k

- Location: London Liverpool Street - hybrid 1 day per week

- Contact: https://lantern.bamboohr.com/careers/56?source=aWQ9MTY%3D (please mention this list when you get in touch)

- Side reading: link