Attend PyDataGlobal (virtually) for a feast of informative sessions

Further below are 3 job roles including Senior roles in Data Science and Data Engineering at organisations like Causaly and MDSol.

Tomorrow at 4-5pm (Wednesday, UK hours) I give an Expert Briefing for PyDataGlobal on The State of Higher Performance Python. Please join if you’d like an open q&a around higher performance topics - you’ll need a ticket to attend. Next week I talk on Data Science Patterns that Work a couple of hours before I run another Executives at PyData discussion session for leaders.

Below I talk some more on a worrying trend with deep language models and share some nice visualisations for correlation that’ll help your team understand the pitfalls of “just using one number to explain a rich space”.

PyDataGlobal’s just starting

PyDataGlobal 2022 starts officially next week, with Expert Briefings running this week for early ticket purchasers. I’m really proud of how the Global fully-virtual conference series has built up as a response to the pandemic, and how it has grown. The schedule runs over most of several days deliberately to give fairer access around the globe.

Tickets are pay-what-you-want with guide prices. All the money raised goes back to NumFOCUS who support the projects that we all depend on. On top of a packed training & talks schedule there’s the Discord chat which will live beyond the conference (so great for networking and q&a) and other events. If you’ve not attended before, the talks will be high quality and cover many interesting subjects.

Expert Briefings

The expert briefings are running this week and next, starting tomorrow with mine. These are ahead of the conference, only for ticket holders. You get a 15 minute briefing and then 45 minutes q&a. The goal is to let you talk to experts which is something that we normally lose out on with virtual-only conferences. If you’d like to attend these - get a ticket quickly!

- Higher Performance Python tomorrow (Wednesday 23rd) 4-5pm UK hours by me (requesting a RT please!)

- Feature engineering for machine learning with open-source by Sole Galli of feature engine, Thursday

- Natural Language Processing: Trends, Challenges and Opportunities by Marco Bonzanini of PyDataLondon, Thursday

- Understanding problems in a highly connected world via graph analytics by Huda Nassar of the Julia community, Monday

- The State of the Art for Probabilistic Programming by Thomas Wiecki of pymc3+4

Executives at PyData

I’ll run another of my highly-participatory leaders-focused discussion sessions next Thursday 1st at 3-5pm. Over these two hours we’ll run through some pressing questions, gather answers, get our special guest Douglas Squirrel (who helps CTOs and CEOs dig their tech out of trouble) and after I’ll write-up a summary of what’s been learned to share to the attendees.

I joined Douglas for one of his calls to CTOs earlier in the year, he wrote up a nice summary on LI with the following graphic. We talked about Accountability, Business Value and Cadence along with the “right” team model. Squirrel will be happy to answer questions on this topic and more, this is a brilliant opportunity to get a CTO-side view of the issues that a data science can present to an organisation (plus the fixes that’ll help you!).

Reply if you’d like to be added to the GCal, note that you’ll need a ticket to the conference (remember - pay-what-you-want, all proceeds to NumFOCUS).

Data Science Project Patterns that Work

Prior to the Executives session I’ll also give a summary of 5 key points I use when I do strategic consulting with teams to help data science projects turn into profitable outcomes. Please join for my talk and come join the Executives discussion after! This is the positive counterpoint to my PyDataLondon talk (youtube ) earlier this year on “things that went really wrong in 20 years of my career”.

NLP and really huge models

Recently Meta launched the high-profile Galactica language model, aimed at scientists and fed research papers. It very confidently helped you to understand science and it very confidently made up rubbish. There’s the rub - these models often appear to be very confident but they can be “hallucinating” (AKA “making stuff up”). We don’t think they’re good because of a “confidence score” but by the language they use in their explanation.

This model lasted several days and then Meta took it down.

Galactica is a large language model for science, trained on 48 million examples of scientific articles, websites, textbooks, lecture notes, and encyclopedias. Meta promoted its model as a shortcut for researchers and students. In the company’s words, Galactica “can summarize academic papers, solve math problems, generate Wiki articles, write scientific code, annotate molecules and proteins, and more.”

Here’s a nice example.

A couple of weeks before GTP-3 was used to build ExplainPaper (via hn). You upload a PDF, highlight a block of text and it summarises a clear answer. You can also ask it questions and it gives sensible answers. Taken from the hn comments - you might ask of a paper “input: Does it say why it is superior?” and it might say “Yes, the paper says that the Transformer model is superior because it is more parallelizable and requires less time to train. “.

Also from the comments “Can you comment… . There were cases (~15%) where it summarized [legal docs] is exactly on the wrong end of the spectrum (disclose within 3 days for example, came out as disclose post-3-days).”. Another example of confidently-wrong explanations which require a domain expert’s eye.

I’m not sure where this all goes. It is great that we can generate such powerful models but, heck, how do we become confident that they’re right? The fact that Galactica made up papers (and you had to verify that they didn’t exist) is awkward, but catchable. What happens when it mis-represents a complex paper? What happens with the legal documents’ requirements being inverted (as seen above)? If a non-expert can’t trust it, why would you make valuable decisions based on it?

I’m genuinely curious about how this evolves. I’ve got a clients who are interested in GPT-3 to explore possible ideas, but it feels so ripe for subtle errors that I’m pretty cautious. How do we get a LIME or a SHAP to explain why an explanation makes sense for these models?

Visualisation - help your managers with a correlation picture

You’ve no doubt seen Anscombe’s quartet (via hn) where 4 different 2D plots share the same summary statistics and correlation and yet look very different:

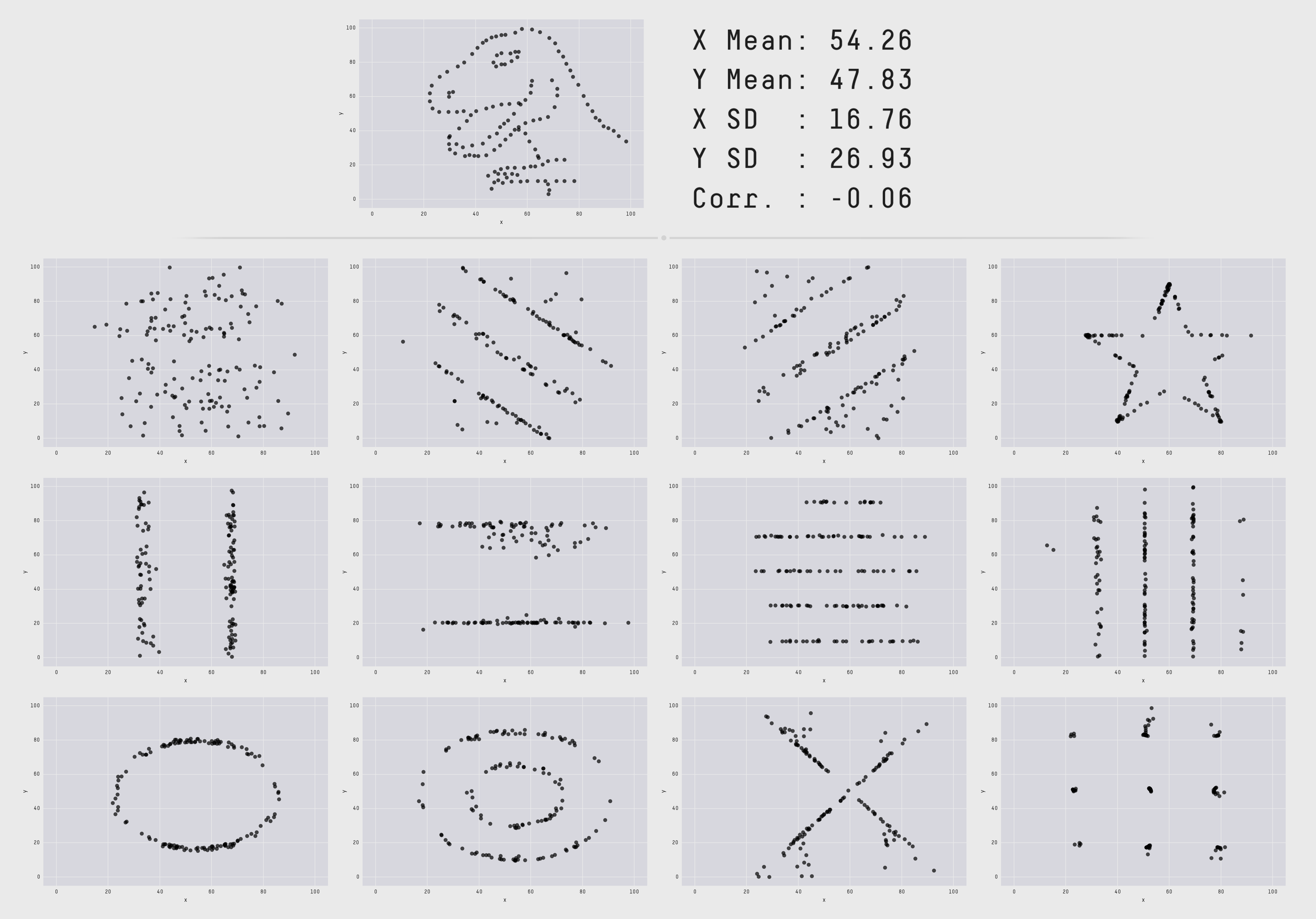

Have you come across the Datasaurus Dozen? There are 13 plots which all have the same means, standard deviation and correlation. The fact that you get a dinosaur as one of the plots should hit home to your less-technical colleagues and might make for a great discussion point (or just something fun to post into the work slack?).

You really do need to visualise your data to check that the relationships you expect to see make sense. Noise and outliers can really mess up single statistics, or single statistics can easily let you use your mental bias to hook on what “your data must mean” when reality (the dinosaur or that star!) is really very different.

The link above adds more notes and links back to the creator of the Datasaurus, props to autodesk for the nice write-up.

The key insight behind our approach is that while it is relatively difficult to generate a dataset from scratch with particular statistical properties, it is relatively easy to take an existing dataset, modify it slightly, and maintain those statistical properties

They use a simulated annealing technique to evolve a starting random dataset towards a set of chosen shapes. Maybe, just maybe, that’s not a million miles away from an eager DS given a crappy dataset massaging it by dropping data and transforming it, into the shape they hope they need?

Footnotes

See recent issues of this newsletter for a dive back in time. Subscribe via the NotANumber site.

About Ian Ozsvald - author of High Performance Python (2nd edition), trainer for Higher Performance Python, Successful Data Science Projects and Software Engineering for Data Scientists, team coach and strategic advisor. I’m also on twitter, LinkedIn and GitHub.

Now some jobs…

Jobs are provided by readers, if you’re growing your team then reply to this and we can add a relevant job here. This list has 1,500+ subscribers. Your first job listing is free and it’ll go to all 1,500 subscribers 3 times over 6 weeks, subsequent posts are charged.

Senior Cloud Platform Applications Engineer, Medidata

our team at Medidata is hiring a Senior Cloud Platform Applications Engineer in the London office. Medidata is a massive software company for clinical trials and our team focus on developing the Sensor Cloud, a technology with capabilities in ingesting, normalizing, and analyzing physiological data collected from wearable sensors and remote devices. We offer a good salary and great benefits !!

- Rate:

- Location: Hammersmith, London

- Contact: kmachadogamboa@mdsol.com (please mention this list when you get in touch)

- Side reading: link

Natural Language Processing Engineer

In this role, NLP engineers will:

Collaborate with a multicultural team of engineers whose focus is in building information extraction pipelines operating on various biomedical texts Leverage a wide variety of techniques ranging from linguistic rules to transformers and deep neural networks in their day to day work Research, experiment with and implement state of the art approaches to named entity recognition, relationship extraction entity linking and document classification Work with professionally curated biomedical text data to both evaluate and continuously iterate on NLP solutions Produce performant and production quality code following best practices adopted by the team Improve (in performance, accuracy, scalability, security etc…) existing solutions to NLP problems

Successful candidates will have:

Master’s degree in Computer Science, Mathematics or a related technical field 2+ years experience working as an NLP or ML Engineer solving problems related to text processing Excellent knowledge of Python and related libraries for working with data and training models (e.g. pandas, PyTorch) Solid understanding of modern software development practices (testing, version control, documentation, etc…) Excellent knowledge of modern natural language processing tools and techniques Excellent understanding of the fundamentals of machine learning A product and user-centric mindset

- Rate:

- Location: London/Hybrid

- Contact: david.sparks@causaly.com 07730 893 999 (please mention this list when you get in touch)

- Side reading: link, link

Senior Data Engineer at Causaly

We are looking for a Senior Data Engineer to join our Applied AI team.

Gather and understand data based on business requirements. Import big data (millions of records) from various formats (e.g. CSV, XML, SQL, JSON) to BigQuery. Process data on BigQuery using SQL, i.e. sanitize fields, aggregate records, combine with external data sources. Implement and maintain highly performant data pipelines with the industry’s best practices and technologies for scalability, fault tolerance and reliability. Build the necessary tools for monitoring, auditing, exporting and gleaning insights from our data pipelines Work with multiple stakeholders including software, machine learning, NLP and knowledge engineers, data curation specialists, and product owners to ensure all teams have a good understanding of the data and are using them in the right way.

Successful candidates will have:

Master’s degree in Computer Science, Mathematics or a related technical field 5+ years experience in backend data processing and data pipelines Excellent knowledge of Python and related libraries for working with data (e.g. pandas, Airflow) Solid understanding of modern software development practices (testing, version control, documentation, etc…) Excellent knowledge of data processing principles A product and user-centric mindset Proficiency in Git version control