Thursday, November 2, 2023. Annette’s News Roundup.

I think the Roundup makes people feel not so alone.

To read an article excerpted in this Roundup, click on its blue title. Each “blue” article is hyperlinked so you can read the whole article.

Please feel free to share.

Invite at least one other person to subscribe today! https://buttondown.email/AnnettesNewsRoundup

—————————————————————-

Joe is always busy.

Yesterday President Biden traveled to Minnesota to participate in a tour of a family farm in Northfield as part of his Administration’s Investing in Rural America Event Series.

The President delivered remarks highlighting how Bidenomics and his Investing in America agenda are ensuring rural Americans do not have to leave their hometowns to find opportunity.

Today, thanks to American leadership, we secured safe passage for wounded Palestinians and for foreign nationals to exit Gaza.

We expect American citizens to exit today, and we expect to see more depart over the coming days.

We won't let up working to get Americans out of Gaza.

— President Biden (@POTUS) November 1, 2023

—————————————————————-

Kamala is always busy.

As #BreastCancerAwarenessMonth comes to a close, I am reminded of my mother’s work as a breast cancer researcher and that awareness must continue beyond October. Get screened and spread the word. Early detection can save lives.

— Vice President Kamala Harris (@VP) October 31, 2023

Harris to Announce Steps to Curb Risks of A.I.

Vice President Kamala Harris plans to announce on Wednesday a slew of additional measures to curb the risks of artificial intelligence as she prepares to take part in a global summit in Britain where world and tech leaders will discuss the future of the technology.

On her visit, which will kick off Wednesday with a policy address at the U.S. Embassy in London, Ms. Harris plans to outline guardrails that the American government will seek to put in place to manage the risks of A.I. as it asserts itself as a global leader in the arena.

Taken together, the steps Ms. Harris plans to announce seek to both flesh out a sweeping executive order President Biden signed this week and make its ideals part of broader global standards for a technology that holds great promise and peril.

They include a new draft policy from the Office of Management and Budget that would guide how federal agencies use artificial intelligence, which would be overseen by new chief A.I. officers. She is also set to announce that 30 other nations have joined a “political declaration” created by the United States that seeks to establish a “set of norms for responsible development, deployment and use of military A.I. capabilities,” as well as $200 million in philanthropic funding to help support the administration’s goals.

“The urgency of this moment must compel us to create a collective vision of what this future must be,” Ms. Harris plans to say on Wednesday, according to prepared remarks released by her office.

The executive order Mr. Biden signed on Monday marked the United States’ most concrete regulatory effort in the A.I. arena to date. Among other things, it requires that companies report to the federal government about the risks that their systems could help countries or terrorists make weapons of mass destruction. It also seeks to lessen the dangers of “deep fakes” — A.I.-generated audio and video that can be difficult to distinguish from authentic footage — that could swing elections or swindle consumers. (New York Times).

The Vice President at 10 Downing Street.

U.S. Embassy

London, United Kingdom

1:43 P.M. GMT

Remarks by Vice President Harris on the Future of Artificial Intelligence | London, United Kingdom.

THE VICE PRESIDENT: Hello, everyone. Good afternoon. Good afternoon, everyone. (Applause.) Please have a seat. Good afternoon. It’s good to see everyone.

Ambassador Hartley, thank you for the warm welcome that you gave us last night and today, and for inviting us to be here with you. And thank you for your extraordinary leadership, on behalf of the President and me and our country.

And it is, of course, my honor to be with everyone here at the United States Embassy in London, as well as to be with former Prime Minister Theresa May and all of the leaders from the private sector, civil society, academia, and our many international partners.

So, tomorrow, I will participate in Prime Minister Rishi Sunak’s Global Summit on AI Safety to continue to advance global collaboration on the safe and responsible use of AI.

Today, I will speak more broadly about the vision and the principles that guide America’s work on AI.

President Biden and I believe that all leaders from government, civil society, and the private sector have a moral, ethical, and societal duty to make sure that AI is adopted and advanced in a way that protects the public from potential harm and that ensures that everyone is able to enjoy its benefits.

AI has the potential to do profound good: to develop powerful new medicines to treat and even cure the diseases that have for generations plagued humanity, to dramatically improve agricultural production to help address global food insecurity, and to save countless lives in the fight against the climate crisis.

But just as AI has the potential to do profound good, it also has the potential to cause profound harm. From AI-enabled cyberattacks at a scale beyond anything we have seen before to AI-formulated bio-weapons that could endanger the lives of millions, these threats are often referred to as the “existential threats of AI” because, of course, they could endanger the very existence of humanity. (Pause)

These threats, without question, are profound, and they demand global action.

But let us be clear. There are additional threats that also demand our action — threats that are currently causing harm and which, to many people, also feel existential.

Consider, for example: When a senior is kicked off his healthcare plan because of a faulty AI algorithm, is that not existential for him?

When a woman is threatened by an abusive partner with explicit, deep-fake photographs, is that not existential for her?

When a young father is wrongfully imprisoned because of biased AI facial recognition, is that not existential for his family?

And when people around the world cannot discern fact from fiction because of a flood of AI-enabled mis- and disinformation, I ask, is that not existential for democracy?

Accordingly, to define AI safety, I offer that we must consider and address the full spectrum of AI risk — threats to humanity as a whole, as well as threats to individuals, communities, to our institutions, and to our most vulnerable populations.

We must manage all these dangers to make sure that AI is truly safe.

So, many of you here know, my mother was a scientist. And she worked at one of our nation’s many publicly funded research universities, which have long served as laboratories of invention, creativity, and progress.

My mother had two goals in her life: to raise her two daughters and end breast cancer. At a ver- — very early age then, I learned from her about the power of innovation to save lives, to uplift communities, and move humanity forward.

I believe history will show that this was the moment when we had the opportunity to lay the groundwork for the future of AI. And the urgency of this moment must then compel us to create a collective vision of what this future must be.

A future where AI is used to advance human rights and human dignity, where privacy is protected and people have equal access to opportunity, where we make our democracies stronger and our world safer. A future where AI is used to advance the public interest.

And that is the future President Joe Biden and I are building.

Before generative AI captured global attention, President Biden and I convened leaders from across our country — from computer scientists, to civil rights activists, to business leaders, and legal scholars — all to help make sure that the benefits of AI are shared equitably and to address predictable threats, including deep fakes, data privacy violations, and algorithmic discrimination.

And then, we created the AI Bill of Rights. Building on that, earlier this week, President Biden directed the United States government to promote safe, secure, and trustworthy AI — a directive that will have wide-ranging impact.

For example, our administration will establish a national safety reporting program on the unsafe use of AI in hospitals and medical facilities. Tech companies will create new tools to help consumers discern if audio and visual content is AI-generated.

And AI developers will be required to submit the results of AI safety testing to the United States government for review.

In addition, I am proud to announce that President Biden and I have established the United States AI Safety Institute, which will create rigorous standards to test the safety of AI models for public use.

Today, we are also taking steps to establish requirements that when the United States government uses AI, it advances the public interest. And we intend that these domestic AI policies will serve as a model for global policy, understanding that AI developed in one nation can impact the lives and livelihoods of billions of people around the world.

Fundamentally, it is our belief that technology with global impact deserves global action.

And so, to provide order and stability in the midst of global technological change, I firmly believe that we must be guided by a common set of understandings among nations. And that is why the United States will continue to work with our allies and partners to apply existing international rules and norms to AI and work to create new rules and norms.

To that end, earlier this year, the United States announced a set of principles for responsible development, deployment, and use of military AI and autonomous capabilities. It includes a rigorous legal review process for AI decision-making and a commitment that AI systems always operate with international — and within international humanitarian law.

Today, I am also announcing that 30 countries have joined our commitment to the responsible use of military AI. And I call on more nations to join.

In addition to all of this, the United States will continue to work with the G7; the United Nations; and a diverse range of governments, from the Global North to the Global South, to promote AI safety and equity around the world.

But let us agree, governments alone cannot address these challenges. Civil society groups and the private sector also have an important role to play.

Civil society groups advocate for the public interest. They hold the public and private sectors to account and are essential to the health and stability of our democracies.

As with many other important issues, AI policy requires the leadership and partnership of civil society. And today, in response to my call, I am proud to announce that 10 top philanthropies have committed to join us to protect workers’ rights, advance transparency, prevent discrimination, drive innovation in the public interest, and help build international rules and norms for the responsible use of AI.

These organizations have already made an initial commitment of $200 million in furtherance of these principles.

And so, today, I call on more civil society organizations to join us in this effort.

In addition to our work with civil society, President Biden and I will continue to engage with the private companies who are building this technology.

Today, commercial interests are leading the way in the development and application of large language models and making decisions about how these models are built, trained, tested, and secured.

These decisions have the potential to impact all of society.

As such, President Biden and I have had extensive engagement with the leading AI companies to establish a minimum — minimum — baseline of responsible AI practices.

The result is a set of voluntary company commitments, which range from commitments to report vulnerabilities discovered in AI models to keeping those models secure from bad actors.

Let me be clear, these voluntary commitments are an initial step toward a safer AI future with more to come, because, as history has shown, in the absence of regulation and strong government oversight, some technology companies choose to prioritize profit over the wellbeing of their customers, the safety of our communities, and the stability of our democracies.

An important way to address these challenges, in addition to the work we have already done, is through legislation — legislation that strengthens AI safety without stifling innovation.

In a constitutional government like the United States, the executive branch and the legislative branch should work together to pass laws that advance the public interest. And we must do so swiftly, as this technology rapidly advances.

President Biden and I are committed to working with our partners in Congress to codify future meaningful AI and privacy protections.

And I will also note, even now, ahead of congressional action, there are many existing laws and regulations that reflect our nation’s longstanding commitment to the principles of privacy, transparency, accountability, and consumer protection.

These laws and regulations are enforceable and currently apply to AI companies.

President Biden and I reject the false choice that suggests we can either protect the public or advance innovation. We can and we must do both.

The actions we take today will lay the groundwork for how AI will be used in the years to come.

So, I will end with this: This is a moment of profound opportunity. The benefits of AI are immense. It could give us the power to fight the climate crisis, make medical and scientific breakthroughs, explore our universe, and improve everyday life for people around the world.

So, let us seize this moment. Let us recognize this moment we are in.

As leaders from government, civil society, and the private sector, let us work together to build a future where AI creates opportunity, advances equity, fundamental freedoms and rights being protected.

Let us work together to fulfill our duty to make sure artificial intelligence is in the service of the public interest.

I thank you all. (Applause.)

END

1:59 P.M. GMT

Touch 👇 to phone bank with the Vice President and Representative Maxwell Frost.

This November, voters will make decisions on critical issues facing communities in races across the country.

Join me for a phone bank this Friday at 6:15 p.m. ET to call voters and get the vote out for Democrats. https://t.co/yoXVjNOXxZ

— Kamala Harris (@KamalaHarris) November 1, 2023

—————————————————————-

GOP Representative Ken Buck won’t seek re-election.

His party has left him.

GOP Rep. Ken Buck announced he won’t seek re-election:

“I always have been disappointed with our inability in Congress to deal with major issues, and I’m also disappointed that the Republican Party continues to rely on this lie that the 2020 election was stolen, & rely on the January 6th narrative…”

—————————————————————-

The House too was busy.

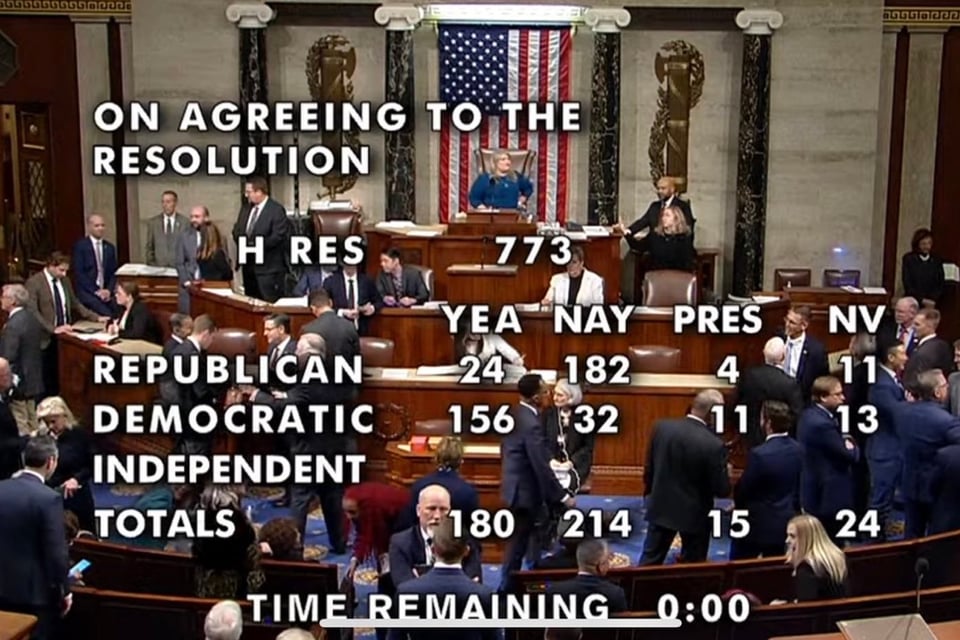

House votes not to censure Rashida Tlaib over Israel comments.

The House on Wednesday defeated a resolution censuring Rep. Rashida Tlaib (D-Mich.) for her criticism of Israel and her speech to a protest calling for a ceasefire in the Israel-Hamas war.

Why it matters: All Democrats and 23 Republicans rejected the measure, which was forced to a vote by right-wing Rep. Marjorie Taylor Greene (R-Ga.).

Driving the news: A motion brought by House Democratic leadership to table – defeat – the censure measure passed 222-186.

The two dozen Republicans who voted against the measure included lawmakers from Michigan and neighboring Midwestern states, as well as moderates and members of the right-wing Freedom Caucus.

The backdrop: Pro-Israel lawmakers on both sides of the aisle have publicly and privately cast Tlaib's comments about Israel as repugnant.

Tlaib, the only Palestinian American in Congress, responded to Hamas' Oct. 7 attack on Israeli civilians with a statement lamenting the "Palestinian and Israeli lives lost yesterday, today, and every day" and criticizing U.S. military assistance to Israel.

She also gave a speech to a large protest outside a House office building calling for a ceasefire.

More than 300 demonstrators were arrested for going into the building, including three arrests for assaulting a police officer.

The intrigue: After defeating the Tlaib resolution, Democrats pulled a vote on Rep. Becca Balint's (D-Vt.) retaliatory measure censuring Greene over inflammatory remarks dating back to 2018, according to two senior House Democrats.

It "was only going to be offered if the Tlaib [resolution] was passed," said one. (Axios).

The House also rejected an expulsion vote against Representative George Santos.

Yes, 32 Democrats voted to allow Santos to stay in office, 11 others voted “present,” and 13 didn’t vote.

—————————————————————-

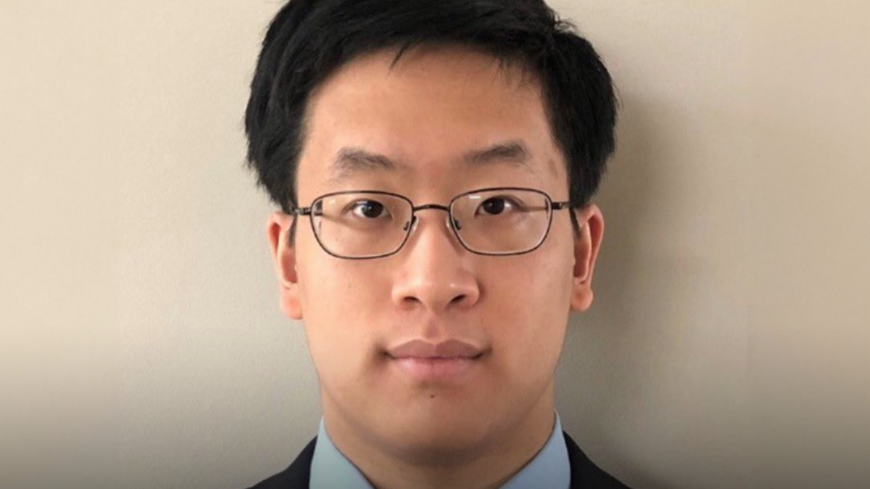

A junior who threatened Jewish students at Cornell is in custody, charged with a felony.

The U.S. Attorney's Office said Patrick Dai threatened to " 'stab' and 'slit the throat' of any Jewish males he sees on campus, to rape and throw off a cliff any Jewish females, and to behead any Jewish babies." He is expected to appear in federal court on Wednesday in Syracuse, New York, charged with a felony for posting threats to kill or injure another using interstate communications.

—————————————————————-

Trumps begin to testify in New York fraud case.

Donald Trump Jr., executive vice president of the Trump Organization and a son of former President Donald Trump, took the witness stand Wednesday to begin testifying in a civil trial accusing him, his brother and his father of knowingly committing fraud.

Colleen Faherty, the lawyer for the attorney general, kicked off questions by asking Trump Jr. about his prior roles in the Trump Organization and the Trump Revocable Trust. She also asked about the hierarchy in the business and where it placed him, his brother, his father and Allen Weisselberg, the former Trump Organization chief financial officer, who are all defendants in the trial.

Trump Jr. testified that he didn't recall being involved in the compilation of the statements of financial condition for Donald Trump in any year, but he had the responsibility to sign off on the documents. He said he relied on others, like Weisselberg, to vet the documents.

His testimony is expected to continue on Thursday and be followed by that of his younger brother Eric, another vice president of the family's landmark business.

The former president is expected to take the witness stand next Monday, marking the first time he is formally called up to publicly testify in any of his pending trials.

Ivanka Trump, the former president's daughter, is also scheduled to testify next week. She is not a defendant. (NPR).

One more thing.

Touch 👇 to watch the police officer trying to bond with Donald Jr. over white supremacy.

This police officer flashed the white power sign to Don Jr. Should that officer be fired?

pic.twitter.com/VMc4MI2Mju

— David Leaning Left (@LeaningLeftShow) November 1, 2023

—————————————————————-

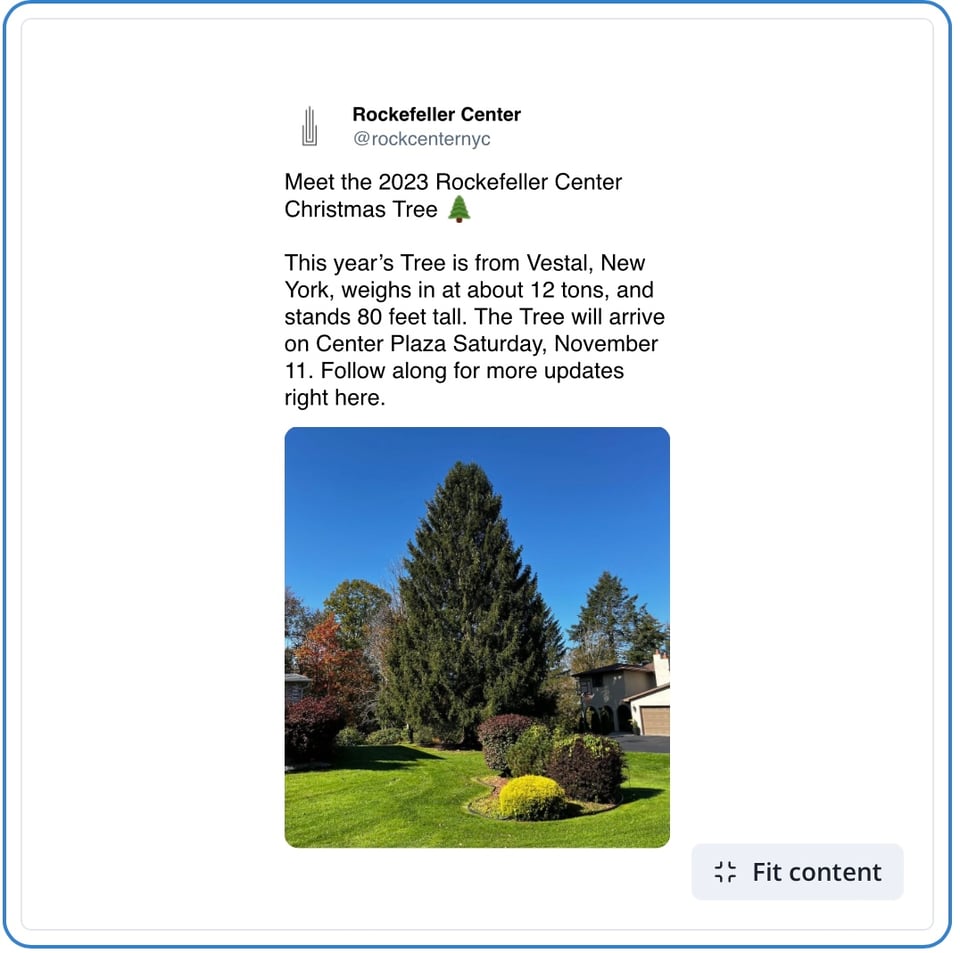

#PumpkinDay is over. First comes 🦃, and then 🎄 and 🕎.

Rockefeller Center selects its 2023 Christmas tree, an 80-footer.

The Rockefeller Center Christmas tree stands illuminated following the 90th annual lighting ceremony, on Nov. 30, 2022, in New York.

The Rockefeller Center Christmas tree is coming to town. The Center has picked the huge tree that, per tradition, it will display in its plaza this year in New York City.

The tree is coming from Vestal, N.Y., is 80 to 85 years old, weighs 12 tons and is about 80 feet tall and 43 feet wide. It will land at Rockefeller Center Plaza on Nov. 11, accompanied by a ceremony with book readings, letters to Santa and ornament making.

Erik Pauze has chosen the tree for the past 30 years. As the head gardener at the Rockefeller Center, he is responsible for finding the tree, feeding and watering it, trimming it, measuring it and transporting it, a sometimes monthslong process.

Pauze started as a "summer helper" at the Rockefeller Center in 1988 and now manages all of the Center's gardens. But he thinks about the annual Christmas trees almost every day, he said in an interview with the Center's magazine.

"What I look for is a tree you would want in your living room, but on a grander scale. It's got that nice, perfect shape all around," he said. "And most of all, it's gotta look good for those kids who turn the corner at 30 Rock; it needs to instantly put a huge smile on their faces. It needs to evoke that feeling of happiness."

The Rockefeller Center Christmas trees are Norway spruces, which are good because of their size and sturdiness, Pauze said. The largest tree so far has been the 1999 tree, which was 100 feet tall and came from Killingworth, Conn.

This year's tree will be adorned with over 50,000 lights, covering about five miles of wire.

It is topped by a star that has about 70 spikes and 3 million Swarovski crystals and weighs about 900 pounds. The Swarovski Star was first introduced in 2004.

The lighting ceremony will air on NBC at 8 p.m. ET Nov. 29. The tree will be taken down on Jan. 13, 2024.

The tradition of the Rockefeller Center tree began in 1931, when employees pitched in to buy a 20-foot balsam fir and decorated it with handmade garlands. The Rockefeller Center turned it into an annual tradition two years later and had its first official lighting ceremony.

The notable ice skating rink that sits below the tree was introduced in 1936. (NPR).

—————————————————————-